UAV Tracking via Saliency-Aware and Spatial–Temporal Regularization Correlation Filter Learning

Abstract

1. Introduction

- We integrate a spatial–temporal regularization term into the formulation with multiple training samples so that DCF learning and model updating are performed jointly. This improves tracking accuracy and robustness and lets the tracker adapt to appearance changes of different objects over time.

- A saliency detection method is used to obtain a saliency-aware weight, so that the tracker can adapt to appearance changes and suppress background interference.

- Unlike SRDCF, whose processing of multiple training images leads to high computational complexity, the SSTCF model can be optimized efficiently with the ADMM algorithm, because each of its three subproblems has an analytic (closed-form) solution.

- We evaluated the SSTCF tracker on two classic tracking benchmarks and a recent long-term UAV tracking dataset: the OTB2015 dataset [27], the VOT2018 dataset [28], and the LaSOT dataset [29]. The experimental results show that the SSTCF tracker achieves higher accuracy and better real-time performance than many existing methods.
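To make the ADMM contribution above more concrete, the following is a minimal single-channel sketch of how a solver with three closed-form steps can look for a spatial–temporal regularized correlation filter. It is not the authors' implementation: the function name `admm_strcf`, the fixed penalty `gamma`, the scaled multiplier `h`, and the use of circular convolution on one feature channel are all assumptions made for illustration. A saliency-aware weight would enter through the spatial weight map `w`.

```python
import numpy as np

def admm_strcf(x, y, w, f_prev, mu=1.0, gamma=1.0, n_iter=50):
    """Hypothetical single-channel ADMM sketch (not the paper's code).

    Minimizes
        0.5*||x (*) f - y||^2 + 0.5*||w . g||^2 + 0.5*mu*||f - f_prev||^2
    subject to f = g, where (*) is circular convolution and . is the
    element-wise product.
    """
    X = np.fft.fft2(x)
    Y = np.fft.fft2(y)
    F_prev = np.fft.fft2(f_prev)
    f = np.zeros_like(x, dtype=float)
    g = np.zeros_like(x, dtype=float)
    h = np.zeros_like(x, dtype=float)  # scaled Lagrange multiplier
    denom = np.abs(X) ** 2 + mu + gamma
    for _ in range(n_iter):
        # Subproblem f: closed form per frequency in the Fourier domain.
        GH = np.fft.fft2(g - h)
        F = (np.conj(X) * Y + mu * F_prev + gamma * GH) / denom
        f = np.fft.ifft2(F).real
        # Subproblem g: closed form per pixel in the spatial domain.
        g = gamma * (f + h) / (w ** 2 + gamma)
        # Lagrange multiplier update (scaled form).
        h = h + f - g
    return f, g
```

With a constant weight map the problem reduces to a ridge regression that has a direct solution in the Fourier domain, which gives a simple sanity check for the sketch. A non-constant `w` (for example, small inside the object region and large near the borders) is where the spatial regularization takes effect.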

2. Related CF-Based Models

2.1. Standard DCF

2.2. Spatially Regularized Discriminative Correlation Filters (SRDCF)

2.3. Background-Aware Correlation Filters (BACF)

3. Proposed Method

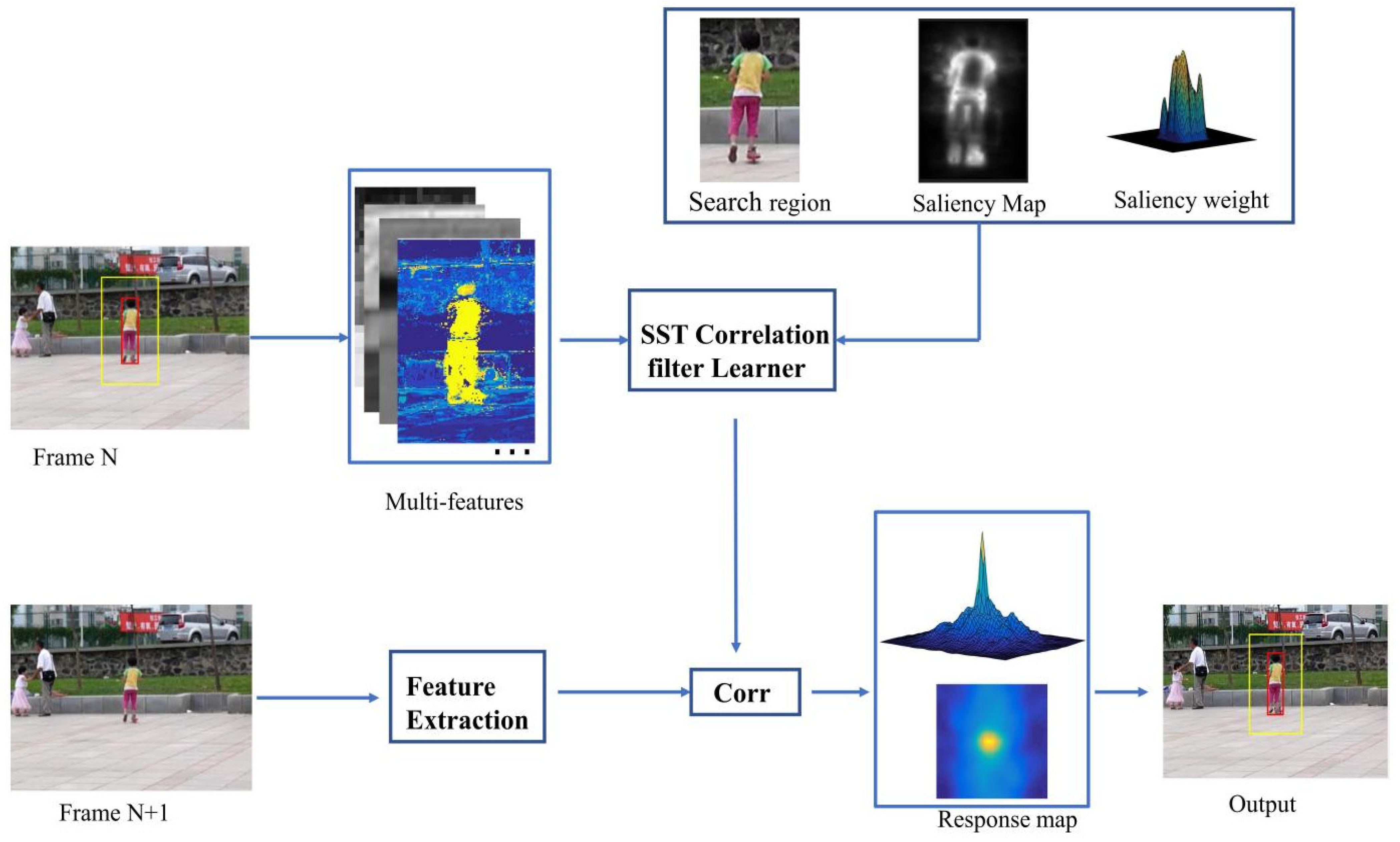

3.1. The Overall Framework

3.2. Saliency-Aware and Spatial–Temporal Regularized Model

3.2.1. Objective Function of Spatial–Temporal Regularized Model

3.2.2. Algorithm Optimization

- Subproblem

- Subproblem

- Lagrange multiplier

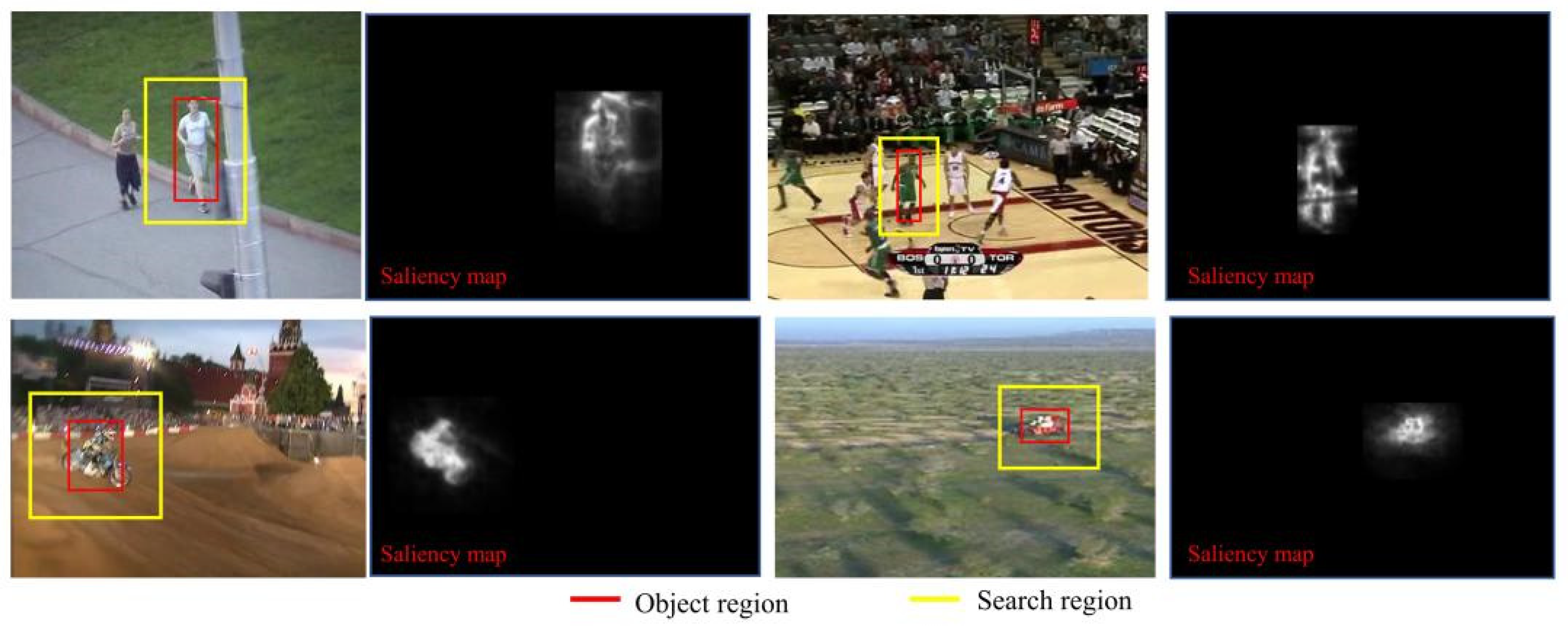

3.2.3. Saliency Detection for Updating Spatial Weights

3.2.4. Object Localization and Scale Estimation

| Algorithm 1: The proposed tracking algorithm |
|---|
| Input: The initial position and scale size of the object in the initial frame. |
| Output: Estimated object position and scale size in the th frame, tracking model, and CF template. |
| When frame , repeat: |
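As a rough illustration of the localization and scale-selection output of Algorithm 1, the sketch below shows one common DCF practice: take the peak of the response map as the displacement, and pick the candidate scale whose response has the strongest peak. Whether SSTCF uses exactly this rule is an assumption; the function names `peak_displacement` and `pick_scale` and the scale factors in the usage note are hypothetical.

```python
import numpy as np

def peak_displacement(response):
    """Return (dy, dx) of the response peak, treating the map as circular."""
    height, width = response.shape
    row, col = np.unravel_index(np.argmax(response), response.shape)
    # Peaks past the midpoint wrap around to negative shifts.
    dy = row - height if row > height // 2 else row
    dx = col - width if col > width // 2 else col
    return int(dy), int(dx)

def pick_scale(responses, scale_factors):
    """Choose the candidate scale whose response map has the highest peak."""
    peaks = [float(r.max()) for r in responses]
    best = int(np.argmax(peaks))
    return scale_factors[best], peak_displacement(responses[best])
```

In use, one response map would be computed per candidate scale (for example, factors such as 0.98, 1.0, and 1.02), and the returned displacement would be added to the previous object position.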

4. Experimental Results

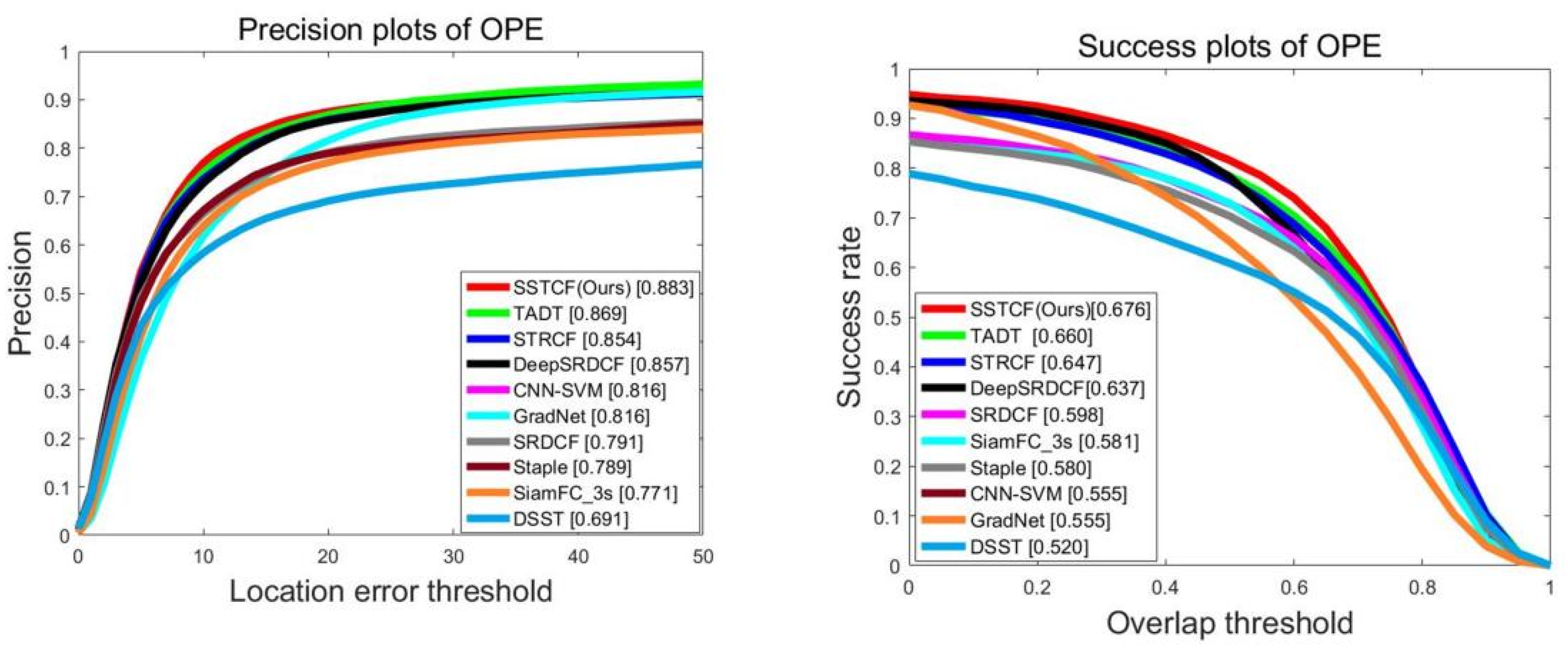

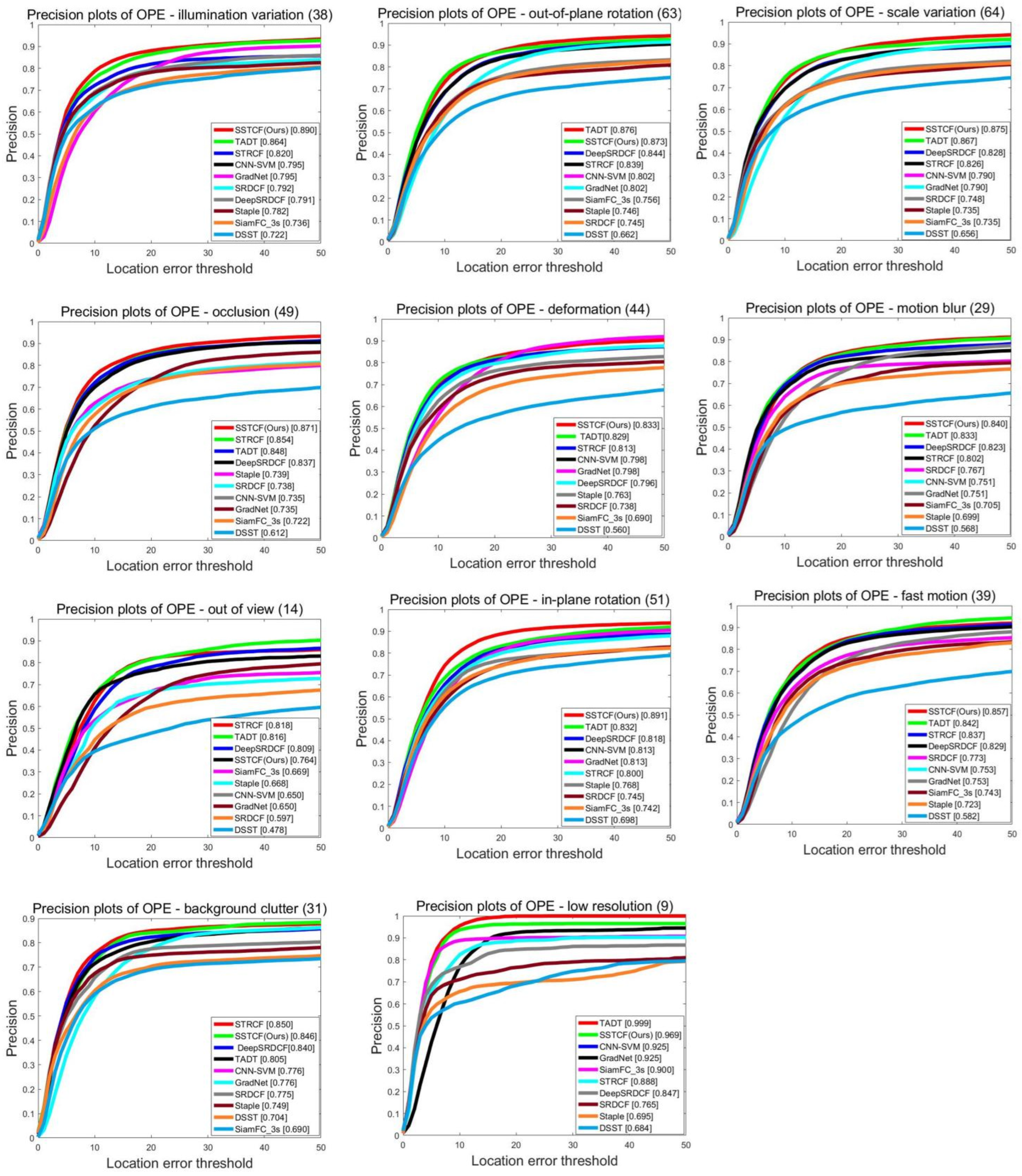

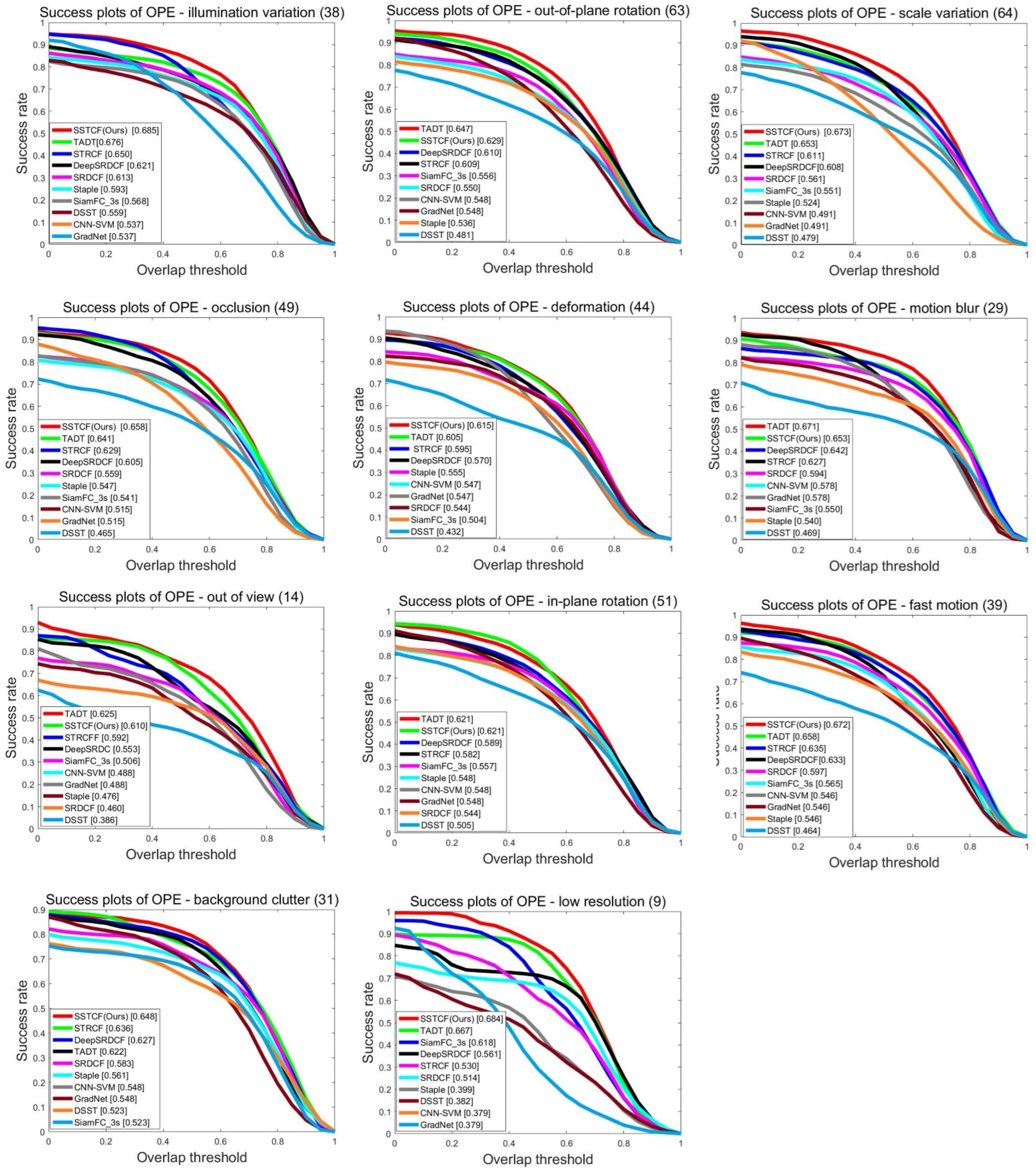

4.1. Evaluation of OTB2015 Dataset

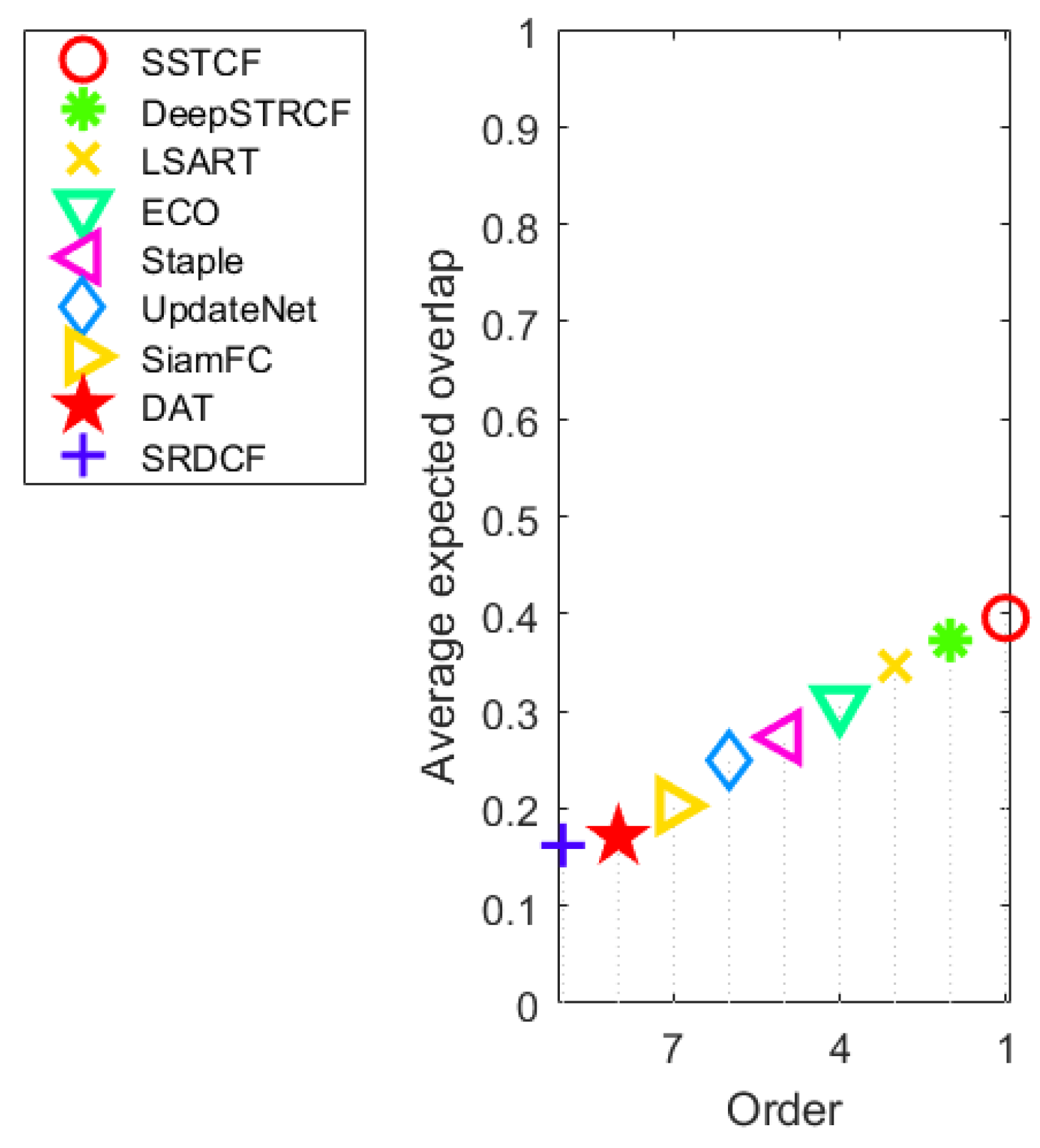

4.2. Evaluation of VOT2018 Dataset

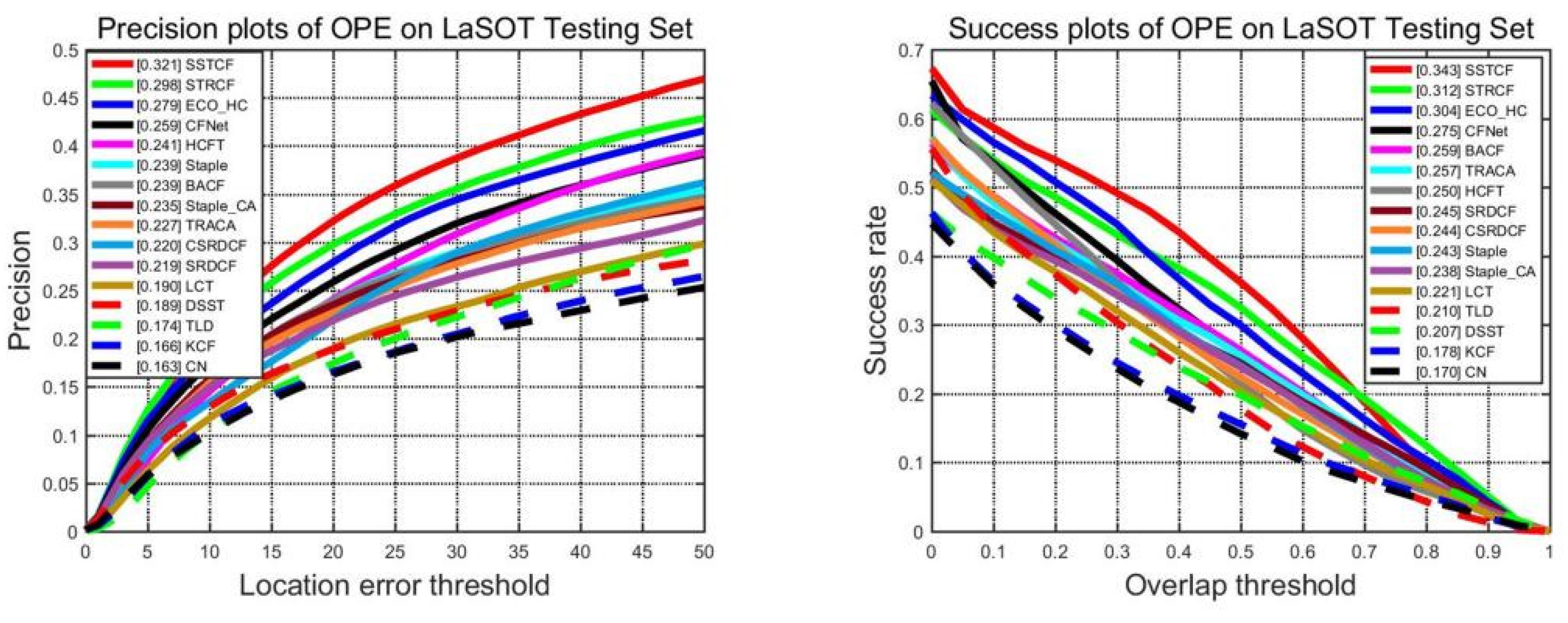

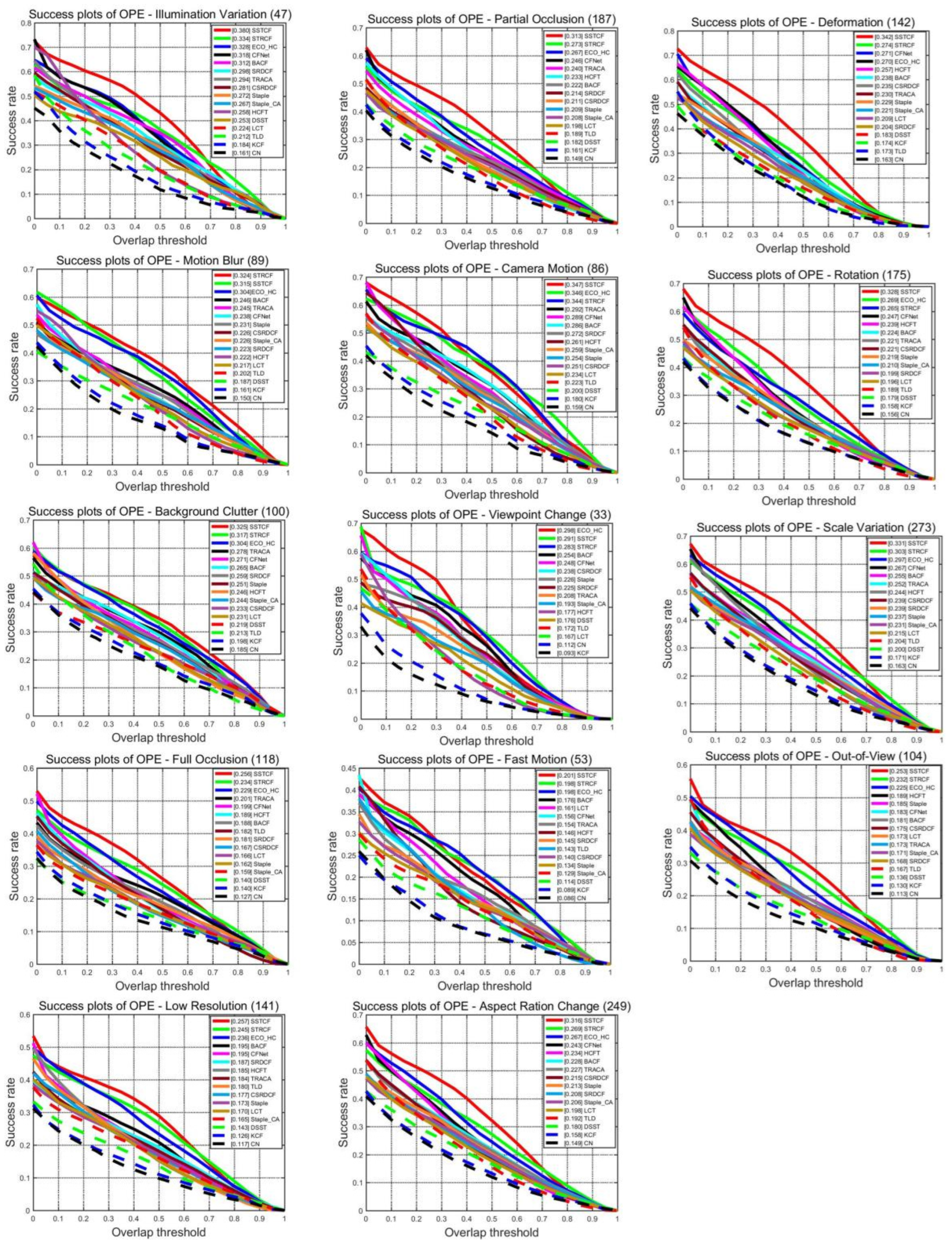

4.3. Evaluation of LaSOT Dataset

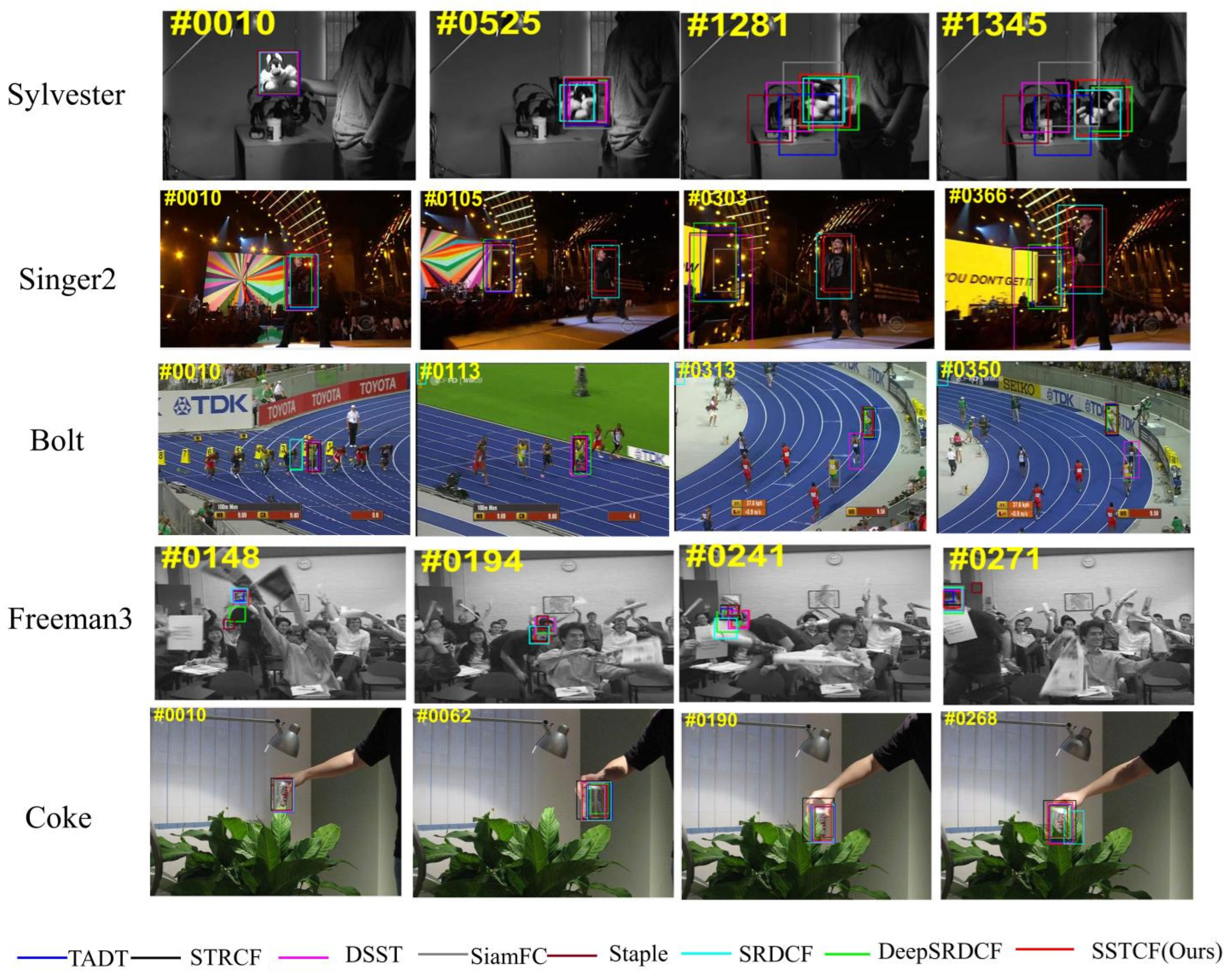

4.4. Qualitative Evaluation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Yilmaz, A.; Javed, O.; Shah, M. Object tracking: A survey. ACM Comput. Surv. 2006, 38, 13.

2. Li, P.X.; Wang, D.; Wang, L.J.; Lu, H.C. Deep visual tracking: Review and experimental comparison. Pattern Recognit. 2018, 76, 323–338.

3. Wang, N.Y.; Shi, J.; Yeung, D.Y.; Jia, J. Understanding and diagnosing visual tracking systems. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3101–3109.

4. Smeulders, A.W.; Chu, D.M.; Cucchiara, R.; Calderara, S.; Dehghan, A.; Shah, M. Visual tracking: An experimental survey. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1442–1468.

5. Jang, J.; Jiang, H. MeanShift++: Extremely Fast Mode-Seeking With Applications to Segmentation and Object Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 4100–4111.

6. Sundararaman, R.; De Almeida Braga, C.; Marchand, E.; Pettré, J. Tracking Pedestrian Heads in Dense Crowd. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 3864–3874.

7. Liu, L.; Cao, J. End-to-end learning interpolation for object tracking in low frame-rate video. IET Image Process. 2020, 14, 1066–1072.

8. Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the Twenty-Third IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2010, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

9. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the Circulant Structure of Tracking-by-Detection with Kernels. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 702–715.

10. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

11. Danelljan, M.; Hager, G.; Khan, F.S.; Felsberg, M. Discriminative Scale Space Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1561–1575.

12. Galoogahi, H.K.; Sim, T.; Lucey, S. Correlation Filters with Limited Boundaries. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4630–4638.

13. Danelljan, M.; Hager, G.; Khan, F.S.; Felsberg, M. Learning Spatially Regularized Correlation Filters for Visual Tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 4310–4318.

14. Galoogahi, H.K.; Fagg, A.; Lucey, S. Learning Background-Aware Correlation Filters for Visual Tracking. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1144–1152.

15. Dai, K.; Wang, D.; Lu, H.; Sun, C.; Li, J. Visual Tracking via Adaptive Spatially-Regularized Correlation Filters. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 4665–4674.

16. Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers. Found. Trends Mach. Learn. 2010, 3, 1–122.

17. Li, F.; Tian, C.; Zuo, W.; Zhang, L.; Yang, M. Learning Spatial-Temporal Regularized Correlation Filters for Visual Tracking. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4904–4913.

18. Li, Y.; Fu, C.H.; Ding, F.Q.; Huang, Z.Y.; Lu, G. AutoTrack: Towards High-Performance Visual Tracking for UAV With Automatic Spatio-Temporal Regularization. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11920–11929.

19. Han, R.; Feng, W.; Wang, S. Fast Learning of Spatially Regularized and Content Aware Correlation Filter for Visual Tracking. IEEE Trans. Image Process. 2020, 29, 7128–7140.

20. Liu, L.; Feng, T.; Fu, Y. Learning Multifeature Correlation Filter and Saliency Redetection for Long-Term Object Tracking. Symmetry 2022, 14, 911.

21. Zhang, X.; Wang, Z.; Xia, G.; Zhang, L. Accurate object tracking by combining correlation filters and keypoints. In Proceedings of the International Joint Conference on Neural Networks, Vancouver, BC, Canada, 24–29 July 2016.

22. Xie, F.; Wang, C.; Wang, G.; Cao, Y.; Yang, W.; Zeng, W. Correlation-Aware Deep Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

23. Danelljan, M.; Hager, G.; Khan, F.S.; Felsberg, M. Convolutional Features for Correlation Filter Based Visual Tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision Workshop (ICCVW), Santiago, Chile, 7–13 December 2015; pp. 621–629.

24. Danelljan, M.; Robinson, A.; Khan, F.S.; Felsberg, M. Beyond Correlation Filters: Learning Continuous Convolution Operators for Visual Tracking. In Computer Vision – ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9909.

25. Sun, Y.; Sun, C.; Wang, D.; He, Y.; Lu, H. ROI Pooled Correlation Filters for Visual Tracking. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 5776–5784.

26. Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H.S. End-to-End Representation Learning for Correlation Filter Based Tracking. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5000–5008.

27. Wu, Y.; Lim, J.; Yang, M. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

28. Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M.; Pflugfelder, R.; Zajc, L.C.; Vojír, T.; Bhat, G.; Lukežič, A.; Eldesokey, A.; et al. The Sixth Visual Object Tracking VOT2018 Challenge Results. In Computer Vision – ECCV 2018 Workshops; Leal-Taixé, L., Roth, S., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 11129.

29. Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; Ling, H. LaSOT: A High-Quality Benchmark for Large-Scale Single Object Tracking. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 5369–5378.

30. Eckstein, J.; Bertsekas, D.P. On the Douglas–Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 1992, 55, 293–318.

31. Feng, W.; Han, R.; Guo, Q.; Zhu, J.; Wang, S. Dynamic saliency-aware regularization for correlation filter-based object tracking. IEEE Trans. Image Process. 2019, 28, 3232–3245.

32. Liu, L.; Cao, J.; Niu, Y. Visual Saliency Detection Based on Region Contrast and Guided Filter. In Proceedings of the 2nd IEEE International Conference on Computational Intelligence and Applications (ICCIA), Beijing, China, 8–11 September 2017; pp. 327–330.

33. Yang, X.; Li, S.Y.; Ma, J.; Yang, J.Y.; Yan, J. Co-saliency-regularized correlation filter for object tracking. Signal Process. Image Commun. 2022, 103, 116655.

34. Goferman, S.; Zelnik-Manor, L.; Tal, A. Context-Aware Saliency Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1915–1926.

35. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

36. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

37. Wu, Y.; Lim, J.; Yang, M. Online Object Tracking: A Benchmark. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2411–2418.

38. Hong, S.; You, T.; Kwak, S.; Han, B. Online tracking by learning discriminative saliency map with convolutional neural network. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; Volume 37, pp. 597–606.

39. Li, P.; Chen, B.; Ouyang, W.; Wang, D.; Yang, X.; Lu, H. GradNet: Gradient-Guided Network for Visual Object Tracking. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6161–6170.

40. Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H.S. Staple: Complementary Learners for Real-Time Tracking. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1401–1409.

41. Li, X.; Ma, C.; Wu, B.; He, Z.; Yang, M. Target-Aware Deep Tracking. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 1369–1378.

42. Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. Fully-Convolutional Siamese Networks for Object Tracking. In Computer Vision – ECCV 2016 Workshops; Hua, G., Jégou, H., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9914.

43. Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ECO: Efficient Convolution Operators for Tracking. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6931–6939.

44. Zhang, L.; Gonzalez-Garcia, A.; Weijer, J.V.D.; Danelljan, M.; Khan, F.S. Learning the Model Update for Siamese Trackers. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4009–4018.

45. Possegger, H.; Mauthner, T.; Bischof, H. In defense of color-based model-free tracking. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 2113–2120.

46. Sun, C.; Wang, D.; Lu, H.; Yang, M. Learning Spatial-Aware Regressions for Visual Tracking. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8962–8970.

47. Kristan, M.; Matas, J.; Leonardis, A.; Vojír, T.; Pflugfelder, R.; Fernandez, G.; Nebehay, G.; Porikli, F.; Cehovin, L. A Novel Performance Evaluation Methodology for Single-Target Trackers. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2137–2155.

48. Lukežič, A.; Vojír, T.; Zajc, L.C.; Matas, J.; Kristan, M. Discriminative Correlation Filter with Channel and Spatial Reliability. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4847–4856.

49. Ma, C.; Huang, J.-B.; Yang, X.; Yang, M.H. Hierarchical Convolutional Features for Visual Tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3074–3082.

50. Mueller, M.; Smith, N.; Ghanem, B. Context-aware correlation filter tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1396–1404.

51. Choi, J.; Chang, H.J.; Fischer, T.; Yun, S.; Lee, K.; Jeong, J.; Demiris, Y.; Choi, J.Y. Context-Aware Deep Feature Compression for High-Speed Visual Tracking. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 479–488.

52. Ma, C.; Yang, X.; Zhang, C.Y.; Yang, M. Long-term correlation tracking. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 5388–5396.

53. Kalal, Z.; Mikolajczyk, K.; Matas, J. Tracking-Learning-Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1409–1422.

54. Danelljan, M.; Khan, F.S.; Felsberg, M.; Van De Weijer, J. Adaptive Color Attributes for Real-Time Visual Tracking. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1090–1097.

55. Montoya-Morales, J.R.; Guerrero-Sánchez, M.E.; Valencia-Palomo, G.; Hernández-González, O.; López-Estrada, F.R.; Hoyo-Montaño, J.A. Real-time robust tracking control for a quadrotor using monocular vision. Proc. Inst. Mech. Eng. Part G J. Aerosp. Eng. 2023, 237, 2729–2741.

| Tracker | Camera Motion | Empty | Illum Change | Motion Change | Occlusion | Size Change | Mean | Weighted Mean | Pooled |
|---|---|---|---|---|---|---|---|---|---|
| SSTCF | 0.5910 | 0.5940 | 0.6010 | 0.5548 | 0.4985 | 0.5018 | 0.5589 | 0.5601 | 0.5763 |
| LSART | 0.5470 | 0.5729 | 0.5032 | 0.5071 | 0.4746 | 0.4565 | 0.5102 | 0.5234 | 0.5377 |
| DSTRCF | 0.4855 | 0.5499 | 0.5912 | 0.4493 | 0.4322 | 0.4398 | 0.4913 | 0.4866 | 0.5009 |
| ECO | 0.5221 | 0.5598 | 0.5253 | 0.4775 | 0.3714 | 0.4436 | 0.4833 | 0.4978 | 0.5130 |
| UpdateNet | 0.5226 | 0.5713 | 0.5179 | 0.4936 | 0.4805 | 0.4842 | 0.5117 | 0.5194 | 0.5324 |
| SRDCF | 0.4855 | 0.5499 | 0.5912 | 0.4493 | 0.4322 | 0.4398 | 0.4913 | 0.4866 | 0.5009 |
| Staple | 0.5580 | 0.5958 | 0.5634 | 0.5187 | 0.4764 | 0.4799 | 0.5320 | 0.5405 | 0.5518 |
| DAT | 0.4660 | 0.4812 | 0.3388 | 0.4234 | 0.3216 | 0.4382 | 0.4116 | 0.4412 | 0.4492 |
| SiamFC | 0.5144 | 0.5597 | 0.5683 | 0.5058 | 0.4361 | 0.4675 | 0.5086 | 0.5114 | 0.5165 |

| Tracker | Camera Motion | Empty | Illum Change | Motion Change | Occlusion | Size Change | Mean | Weighted Mean | Pooled |
|---|---|---|---|---|---|---|---|---|---|
| SSTCF | 11.0000 | 5.0000 | 1.0000 | 7.0000 | 9.0000 | 6.0000 | 8.0000 | 10.0835 | 33.0000 |
| LSART | 16.9333 | 3.7333 | 0.5333 | 8.6667 | 18.5333 | 7.7333 | 9.3556 | 10.2036 | 37.0667 |
| DSTRCF | 11.0000 | 11.0000 | 2.0000 | 13.0000 | 10.0000 | 7.0000 | 9.0000 | 10.2551 | 36.0000 |
| ECO | 19.000 | 7.000 | 4.000 | 18.000 | 18.000 | 9.000 | 12.500 | 13.511 | 44.000 |
| UpdateNet | 29.0000 | 11.0000 | 3.0000 | 33.0000 | 21.0000 | 13.0000 | 18.3333 | 20.8763 | 75.0000 |
| SRDCF | 52.0000 | 20.0000 | 8.0000 | 47.0000 | 27.0000 | 28.0000 | 30.3333 | 35.4262 | 116.0000 |
| Staple | 37.0000 | 24.0000 | 5.0000 | 27.0000 | 36.0000 | 25.0000 | 25.6667 | 28.7784 | 102.0000 |
| DAT | 37.0000 | 24.0000 | 5.0000 | 27.0000 | 36.0000 | 25.0000 | 25.6667 | 28.7784 | 102.0000 |
| SiamFC | 28.0000 | 14.0000 | 5.0000 | 41.0000 | 25.0000 | 22.0000 | 22.5000 | 24.6681 | 90.0000 |

| Method | All |
|---|---|
| SSTCF | 0.3935 |
| DeepSTRCF | 0.3727 |
| LSART | 0.3464 |
| ECO | 0.3077 |
| Staple | 0.2733 |
| UpdateNet | 0.2499 |
| SiamFC | 0.2029 |
| DAT | 0.1709 |
| SRDCF | 0.1621 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, L.; Feng, T.; Fu, Y.; Yang, L.; Cai, D.; Cao, Z. UAV Tracking via Saliency-Aware and Spatial–Temporal Regularization Correlation Filter Learning. Symmetry 2024, 16, 1076. https://doi.org/10.3390/sym16081076