1. Introduction

Single-Hidden Layer Feedforward Neural Networks (SLFNs) [1] are a popular class of neural networks that consist of one hidden layer and one output layer, with adjustable weights between the input layer and the hidden layer. When the activation function of the hidden nodes is chosen appropriately, SLFNs can form decision regions of arbitrary shape. SLFNs have many applications in pattern recognition [2], where the hidden layer extracts features of the input data and the network classifies and recognizes different patterns, as in speech recognition [3] and image classification [4]. They are also widely used for nonlinear problems and time series analysis, for instance, stock price forecasting [5] and weather forecasting [6]. Although SLFNs have many advantages and applications, they also have significant limitations. Because SLFNs rely heavily on the training samples, the networks may not generalize well to new data, which makes them prone to overfitting. Moreover, when processing large-scale datasets, the training of SLFNs is relatively slow, and the accuracy is correspondingly reduced.

To overcome these bottlenecks of SLFNs, the Extreme Learning Machine (ELM) was proposed by Professor Huang [1,7] in 2004. ELM is a training algorithm for single-hidden layer feedforward networks. Its advantage is that the input weights and the biases of the hidden nodes are randomly generated, so that, of all the parameters, only the output weights need to be computed analytically. Compared with traditional neural networks, ELM has the advantages of simple structure, good versatility, and low computational cost [8]. In recent years, owing to its fast learning, outstanding generalization, and universal approximation capability [9,10,11,12,13,14,15], ELM has been used in biology [9,10], pattern classification [11], big data [12], robotics [13], and other fields. However, ELM learns only one hyperplane, which makes it difficult for ELM to handle large-scale datasets as well as imbalanced data. Therefore, methods that learn two non-parallel hyperplanes have been developed [16,17,18,19]. One of the most widely known is the Twin Support Vector Machine (TSVM), which was presented by Jayadeva et al. [16]. Influenced by TSVM, the Twin Extreme Learning Machine (TELM) was introduced by Wan et al. [20]. TELM trains two ELM models together and therefore learns two hyperplanes. Both models take the same dataset as input and learn different feature representations under different objective functions. Finally, the results of the two models are combined to obtain a richer feature representation and the classification result. In 2019, Rastogi et al. [21] proposed the Least Squares Twin Extreme Learning Machine (LS-TELM). LS-TELM applies the least squares method to TELM to solve for the weight matrix between the hidden layer and the output layer. While maintaining the advantages of TELM, LS-TELM transforms the inequality constraints into equality constraints, so that the problem reduces to solving two systems of linear equations, which greatly reduces the computational cost.
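The basic ELM idea described above (random, fixed input weights and hidden biases, with only the output weights solved for) can be illustrated with a minimal sketch. It is not the implementation used in the cited works: the sigmoid activation, the small ridge term, and the helper names `solve_linear_system`, `hidden_output`, `train_elm`, and `predict_elm` are assumptions made for illustration, and only the Python standard library is used.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    # Work on an augmented copy so the inputs are not modified.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def hidden_output(x, weights, biases):
    """Sigmoid activations of the hidden layer for one input vector."""
    out = []
    for w, b in zip(weights, biases):
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        out.append(1.0 / (1.0 + math.exp(-z)))
    return out


def train_elm(inputs, targets, n_hidden=10, ridge=1e-3, seed=0):
    """Train a binary ELM with targets in {-1, +1} (illustrative settings)."""
    rng = random.Random(seed)
    n_features = len(inputs[0])
    # Input weights and hidden biases are drawn at random and never updated.
    weights = [[rng.uniform(-1, 1) for _ in range(n_features)]
               for _ in range(n_hidden)]
    biases = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    h = [hidden_output(x, weights, biases) for x in inputs]
    # Only the output weights beta are learned: (H^T H + ridge I) beta = H^T t.
    gram = [[sum(row[i] * row[j] for row in h) + (ridge if i == j else 0.0)
             for j in range(n_hidden)] for i in range(n_hidden)]
    rhs = [sum(row[i] * t for row, t in zip(h, targets))
           for i in range(n_hidden)]
    beta = solve_linear_system(gram, rhs)
    return weights, biases, beta


def predict_elm(model, x):
    """Return the predicted label (+1 or -1) for one input vector."""
    weights, biases, beta = model
    h = hidden_output(x, weights, biases)
    score = sum(hi * bi for hi, bi in zip(h, beta))
    return 1 if score >= 0 else -1
```

Training involves no iterative weight updates: a single linear solve yields the output weights, which is the source of the low computational cost mentioned above. TELM and LS-TELM build on the same hidden-layer mapping but fit two hyperplanes instead of one.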

TELM and its variants are widely used in many areas, but they encounter bottlenecks when the data contain outliers. To address this problem, many researchers have proposed robust algorithms based on TELM (see [22,23,24,25,26]). For example, Yuan et al. [22] proposed Robust Twin Extreme Learning Machines with a correntropy-based metric (LCFTELM), which enhance the robustness and classification performance of TELM by employing a non-convex fractional loss function. A Robust Supervised Twin Extreme Learning Machine (RTELM) was put forward by Ma and Li [23]. This framework employs a non-convex squared loss function, which greatly suppresses the negative effects of outliers. The presence of outliers is an important factor affecting robustness. To reduce their effect and improve the robustness of a model, a non-convex loss function can be used so that outliers are penalized consistently. The experimental results reported in the above works show that this is an effective approach. Therefore, to suppress the negative effects of outliers, we introduce a non-convex, bounded, and smooth loss function, the Welsch loss [27,28,29,30]. The Welsch loss is a loss function based on the Welsch estimation method, which is a robust estimation method. Its expression involves a tuning parameter that controls the degree of penalty for outliers. When the data error is normally distributed, the Welsch loss is comparable to the mean squared error loss; when the error is not normally distributed, in particular when it is caused by outliers, the Welsch loss is more robust than the mean squared error loss.
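To make the behavior of the Welsch loss concrete, the sketch below compares it with the squared loss. The form used, $\frac{\sigma^2}{2}\left[1-\exp\left(-e^2/\sigma^2\right)\right]$ for an error $e$ and tuning parameter $\sigma$, is the classical Welsch M-estimator form and is an assumption here; scaling conventions differ between papers, so it may not match the expression used in this article. The function names are likewise illustrative.

```python
import math


def welsch_loss(error, sigma=1.0):
    """Assumed Welsch form: (sigma^2 / 2) * (1 - exp(-(error / sigma)^2))."""
    return 0.5 * sigma ** 2 * (1.0 - math.exp(-(error / sigma) ** 2))


def squared_loss(error):
    """Half squared error, which the assumed Welsch form matches near zero."""
    return 0.5 * error ** 2


if __name__ == "__main__":
    # Small errors are treated alike; large errors saturate at sigma^2 / 2.
    for e in (0.1, 1.0, 10.0, 100.0):
        print(e, squared_loss(e), welsch_loss(e, sigma=1.0))
```

Under this assumed form, the loss of a single sample can never exceed $\sigma^2/2$, so one gross outlier cannot dominate the objective, whereas its squared loss grows without bound. A smaller $\sigma$ caps the penalty earlier, and a larger $\sigma$ makes the loss behave like the squared loss over a wider range of errors.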

It is worth mentioning that TELM has good classification performance, but it uses the squared $L_2$-norm distance, which increases the influence of outliers on the model and changes the construction of the hyperplanes. In recent years, many researchers have also turned their attention to the $L_1$-norm measure and proposed a series of robust algorithms, such as $L_1$-norm and non-squared $L_2$-norm [31], the Non-parallel Proximal Extreme Learning Machine ($L_1$-NPELM) [32] based on the $L_1$-norm distance measure, and the robust $L_1$-norm Twin Extreme Learning Machine ($L_1$-TELM) [33]. Overall, the $L_1$-norm alleviates the effects of outliers and improves robustness, but it still performs poorly when dealing with large numbers of outliers because the $L_1$-norm is unbounded. For this reason, the work in [33] presented Capped $L_1$-norm Support Vector Classification (SVC). The Capped $L_{2,p}$-norm Least Squares Twin Extreme Learning Machine (C$L_{2,p}$-LSTELM) was proposed in [34]. The convergence of the above methods was proven in theory, and the capped distance metric significantly improves robustness when dealing with outliers.
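The effect of capping a distance can be seen in a small numerical sketch. It assumes the commonly used capped form $\min(\|u\|_2^p, \varepsilon)$ for a residual vector $u$; the exponent `p`, the threshold `epsilon`, and the function names are illustrative choices, not values or definitions taken from the cited works.

```python
import math


def l2_norm(residual):
    """Euclidean norm of a residual vector."""
    return math.sqrt(sum(r * r for r in residual))


def squared_l2_distance(residual):
    """Squared L2 distance, which grows quadratically with the residual."""
    return l2_norm(residual) ** 2


def capped_distance(residual, p=1.0, epsilon=2.0):
    """Assumed capped form: min(||residual||_2^p, epsilon)."""
    return min(l2_norm(residual) ** p, epsilon)


if __name__ == "__main__":
    inlier = [0.3, 0.4]     # Euclidean norm 0.5
    outlier = [30.0, 40.0]  # Euclidean norm 50
    for name, u in (("inlier", inlier), ("outlier", outlier)):
        print(name, squared_l2_distance(u), l2_norm(u), capped_distance(u))
```

With these illustrative settings, the outlier contributes 2500 under the squared $L_2$ distance and 50 under the uncapped norm, but only the cap value 2 under the capped distance, while the inlier is left unchanged. This boundedness is what limits the pull of large numbers of outliers on the hyperplanes.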

Inspired by the above works, we propose two novel distance metric optimization-driven robust twin extreme learning machine frameworks for pattern classification, namely, CWTELM and FCWTELM. CWTELM is based on optimization theory and introduces the capped $L_{2,p}$-norm measure and the Welsch loss into the model, which greatly improves robustness and classification ability. In addition, to keep the classification performance of CWTELM relatively stable while accelerating its computation, we present a least squares version of CWTELM (FCWTELM). Experimental results with different noise rates and different datasets show that CWTELM and FCWTELM have significant advantages in terms of classification performance and robustness.

The main contributions of this paper are summarized as follows:

- (1) By embedding the capped $L_{2,p}$-norm distance metric and the Welsch loss into TELM, a novel robust learning algorithm called the Capped $L_{2,p}$-norm Welsch Robust Twin Extreme Learning Machine (CWTELM) is proposed. CWTELM enhances robustness while maintaining the strengths of TELM, so that classification performance is also improved;

- (2) To speed up the computation of CWTELM while retaining its advantages, we present a least squares version of CWTELM, namely, Fast CWTELM (FCWTELM). While inheriting the strengths of CWTELM, FCWTELM transforms the inequality constraints into equality constraints, so that the problem reduces to solving two systems of linear equations, which greatly reduces the computational cost;

- (3) Two efficient iterative algorithms are designed to solve CWTELM and FCWTELM. They are easy to implement, and their soundness is supported theoretically by a rigorous analysis and proof of convergence;

- (4) Extensive experiments conducted on various datasets and at different noise proportions demonstrate that CWTELM and FCWTELM are competitive with five other traditional classification methods in terms of robustness and practicability;

- (5) A statistical analysis is performed for our algorithms, which further verifies that CWTELM and FCWTELM outperform the five other classifiers in robustness and classification performance.

The remainder of the article is organized as follows. In Section 2, we briefly review TELM, LS-TELM, RTELM, the Welsch loss, and the capped $L_{2,p}$-norm. In Section 3, we describe the proposed CWTELM and FCWTELM in detail and give a theoretical analysis. In Section 4, we introduce our experimental setup, compare the proposed algorithms with five other classical algorithms at different noise levels and on different datasets, and carry out a statistical test analysis. The article is concluded in Section 5. First, we present the abbreviations and main notations in Table 1 and Table 2.

5. Conclusions

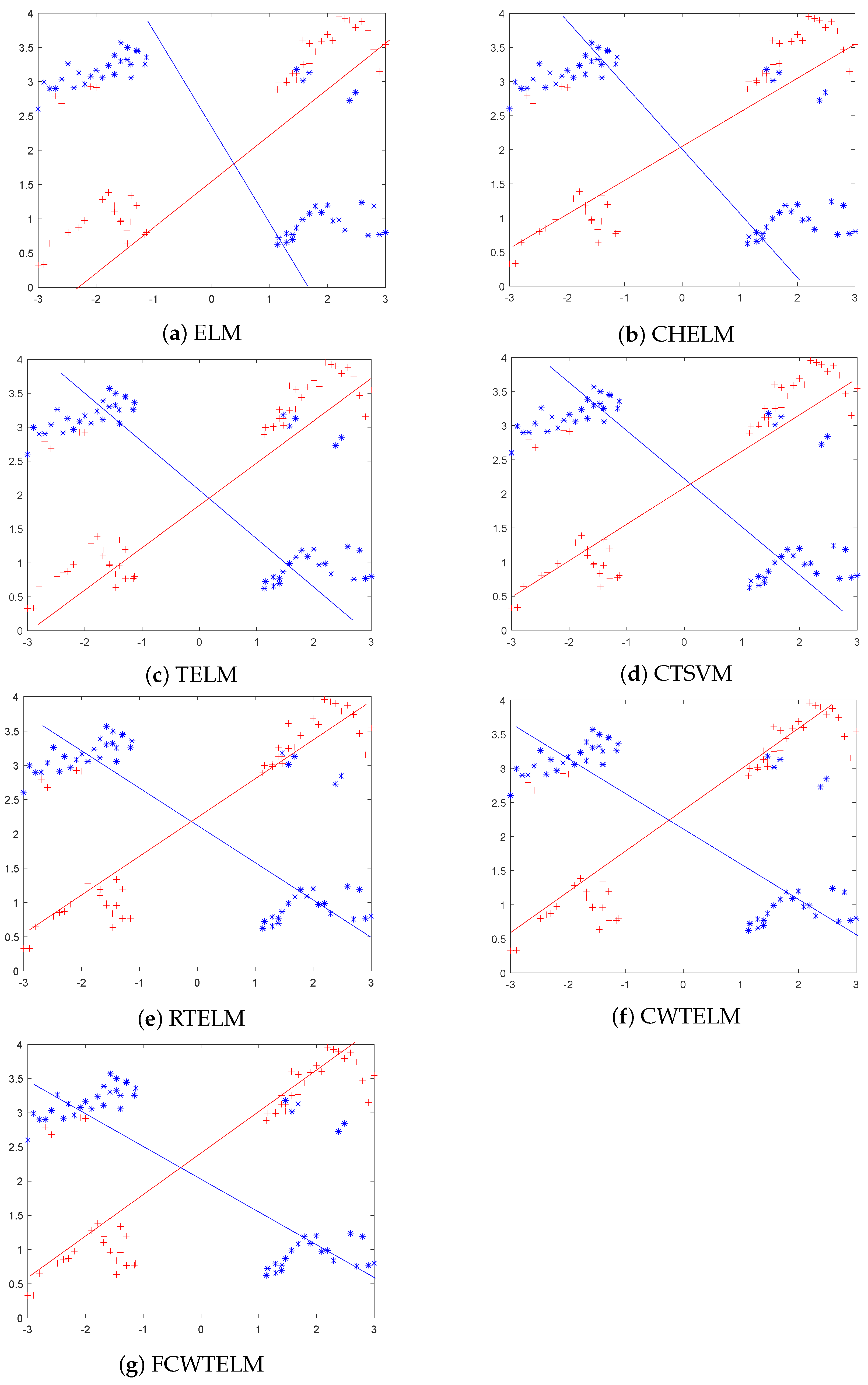

The Welsch loss function has good properties such as smoothness, non-convexity, and boundedness; therefore, it is more robust than the commonly used $L_1$ and $L_2$ losses. The capped $L_{2,p}$-norm is a distance metric that can reduce the negative effects of outliers and thus improve the robustness of the model. In this paper, we proposed a distance metric optimization-driven robust twin extreme learning machine framework, namely CWTELM, which introduces the Welsch loss and the capped $L_{2,p}$-norm distance into TELM in order to enhance robustness. Then, to speed up the computation of CWTELM while maintaining its advantages, we presented a least squares version of CWTELM, namely Fast CWTELM (FCWTELM). We also designed two efficient iterative algorithms to solve CWTELM and FCWTELM, respectively, and analyzed their convergence and computational complexity in theory.

To evaluate the performance of CWTELM and FCWTELM, we compared them with five classical algorithms on different datasets and at different noise rates. In the absence of noise, CWTELM achieved the best results on seven datasets. The results of FCWTELM on the eight datasets are slightly lower than those of CWTELM, but the gap is small, and its running time is the shortest among the seven algorithms. With noise, taking 10% noise as an example, CWTELM achieved the best results on Australian, Balance, Cancer, Wholesale, QSAR, and WDBC, and FCWTELM performed best on Pima. In terms of running time, FCWTELM was the fastest on six datasets, in all cases within 1 s. In addition, we found that the results of CWTELM and FCWTELM differ little between the noise-free and 10% noise conditions on the same dataset. The experimental data with 20% and 25% noise lead to the same conclusions. To this end, this paper takes Australian, Vote, WDBC, and Cancer as examples to show more clearly the accuracy of the seven algorithms at different noise proportions. Similarly, we conducted comparative experiments with the seven algorithms on an artificial dataset and showed their classification results more intuitively in the form of scatter plots. The performance of CWTELM and FCWTELM remains excellent. Finally, we carried out statistical tests on the seven algorithms and verified that CWTELM and FCWTELM outperform the other five models and that the two models show no significant difference in performance.

From the above, we conclude that CWTELM and FCWTELM alleviate the negative effects of outliers to some extent and therefore have good robustness. Moreover, they differ little in classification performance and run efficiently while maintaining the advantages of TELM. The CWTELM and FCWTELM algorithms proposed in this paper can be applied to pattern classification. On the one hand, our algorithms have good classification ability and robustness and can learn the nonlinear relationships in the input data, so that a high-precision classification model can be obtained and more accurate results are achieved in pattern classification. On the other hand, our algorithms can improve the robustness of pattern classification: they can handle case-specific characteristics that arise in the classification process and deal with noise between different categories, so they are more suitable for different pattern classification tasks in practical application scenarios. In addition to pattern classification, CWTELM and FCWTELM can also be applied in many other fields, such as data mining, pattern recognition, action recognition in robot control, path planning, and image classification.

In the future, further in-depth study is needed to improve the proposed algorithms, such as exploring better loss functions for the TELM framework to improve the robustness and performance of the model. In addition, we can deepen the theoretical research, for example, by deriving upper bounds on their generalization ability.