Abstract

We propose a new tool for estimating the complexity of a time series: the entropy of difference (ED). The method is based solely on the sign of the difference between neighboring values in a time series. This makes it possible to describe the signal as efficiently as prior proposed parameters, such as permutation entropy (PE) or modified permutation entropy (mPE). Firstly, this method reduces the size of the sample that is necessary to estimate the parameter value, and secondly it enables the use of the Kullback–Leibler divergence to estimate the “distance” between the time series data and random signals.

PACS:

05.45.-a; 05.45.Tp; 05.45.Pq; 89.75.-k; 87.85.Ng

MSC:

65P20; 65Z05; 91B70

1. Introduction

Permutation entropy (PE), introduced by Bandt and Pompe [1], as well as its modified version [2], are both efficient tools for measuring the complexity of chaotic time series. Both methods propose to analyze time series by first choosing an embedding dimension m to split the original data in a subset of m-tuples, , and to then substitute the m-tuples values by the rank of the values, resulting in a new symbolic representation of the time series. For example, consider the time series . Choosing, for example, an embedding dimension , will split the data in a set of 4-tuples: . The Bandt–Pompe method will associate the rank of the value with each 4-tuple. Thus, in , the lowest element is in Position 2, the second element is in Position 1, is in Position 4, and finally is in Position 3. Thus, the 4-tuple is rewritten as . This procedure thus results in each being rewritten as a symbolic list: . Each element is then a permutation of the set . Next, the probability of each permutation in is then computed, , and finally, the PE for the embedding dimension m is defined as . The modified permutation entropy (mPE) just deals with those cases in which equal quantities may appear in the m-tuples. For example, for the m-tuple , computing PE will produce , while computing mPE will associate . Both methods are widely used due to their conceptual and computational simplicity [3,4,5,6,7,8]. For random signals, PE leads to a constant probability (for white Gaussian noise), which does not make it possible to evaluate the “distance” between the probability found in the signal, , and the probability produced by a random signal, , with the Kullback–Leibler (KL) divergence [9,10]: . Furthermore, the number of m-tuples is for PE and even greater for mPE [2], thus requiring then a large data sample to perform a significant statistical estimation of .

2. The Entropy of Difference Method

The entropy of difference (ED) method proposes to substitute the m-tuples with strings s containing the sign (“+” or “−”), representing the difference between subsequent elements in the m-tuples. For the same , this leads to the representation (“− + −”, “+ − −”, “− − +”, ⋯). For an m value, we have strings s from “+ + + ⋯ +” to “− − − ⋯ −”. Again, we compute, in the time series, the probability distribution of these strings s and define the entropy of difference of order m as . The number of elements, , to be treated, for an embedding m, is smaller for ED compared with the number of permutations in PE or to the elements in mPE (see Table 1).

Table 1.

K values, for different m-embeddings.

Furthermore, the probability distribution for a string s, in a random signal, , is not constant and could be computed through the recursive equation. Indeed, let be the probability density for the signal variable at time t, and let be the corresponding cumulative distribution function (). Consider the hypothesis that the signal is not correlated in time, which means that the join probability is only the product of the probability . Under these conditions, we can easily evaluate the . For example, . We therefore have three data , and we can have 4 possibilities. is the probability of having , is that of having and , is that of having and , and finally is that of having . Using the Heaviside step function , if , and if , and noting the cumulative distribution function , we can evaluate :

For , we need to integrate . Using the obvious , we have

This result is totally independent of the probability density provided that the signal is not correlated in time. We can proceed in the same way for any and thus obtain a recurrence on (see Appendix A) (in the following equations, x and y are strings made of “+” and “−”):

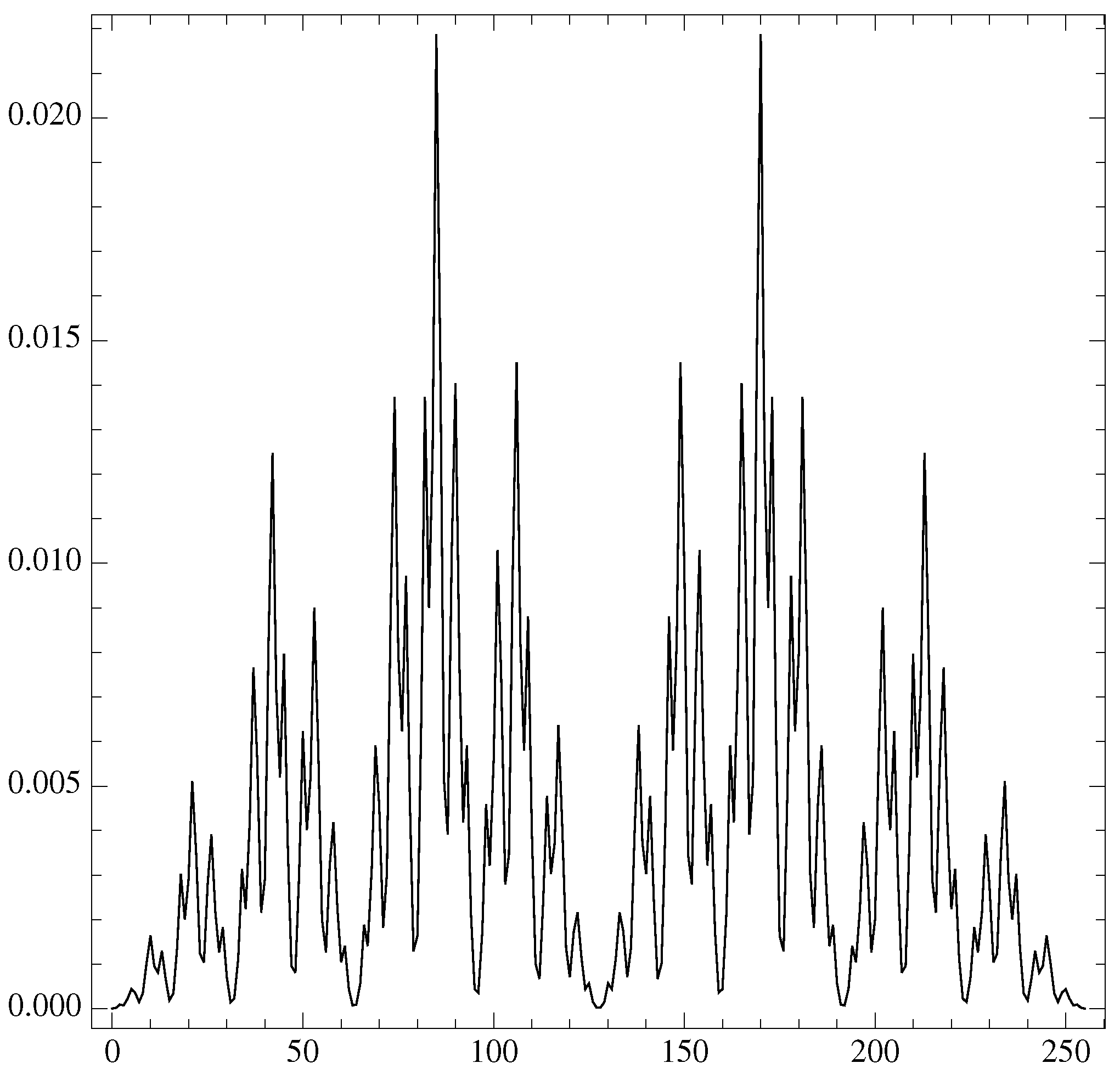

leading to a complex probability distribution for . For example, for , we have strings with the highest probability for the “+ − + − + − + −” string (and its symmetric “− + − + − + − +”): (see Figure 1). These probabilities could then be used to determine the KL-divergence between the time series probability and the random uncorrelated signal.

Figure 1.

The values for the probability of , from to .

To each string s, we can associate an integer number, its binary representation, through the substitutions and . Therefore, for , we have “− − −” = 0, “− − +” = 1, “− + −” = 2, “− + +” = 3, and so on, up to “+ + +” = 7 (see Table 2 and Table 3).

Table 2.

values, for different m-embeddings, ordered by the binary representation of the string.

Table 3.

values, for different m-embeddings.

The recurrence gives some specific . To simplify the notations, we can write , a set of a successive “+”. For example, the second and third rules gives

then

We can also write

This equation is also valid when and thus for (with ) or for . We can continue in this way and determine the general values of and so on.

In the case, where the data are integers, we can avoid the situation where two successive data are equal () by adding a small amount of random noise. For example, we take the first decimal of (and we add a small amount of noise ), and we have the following (See Table 4 and Table 5):

Table 4.

values for , for different m-embeddings.

Table 5.

values for , for different m-embeddings.

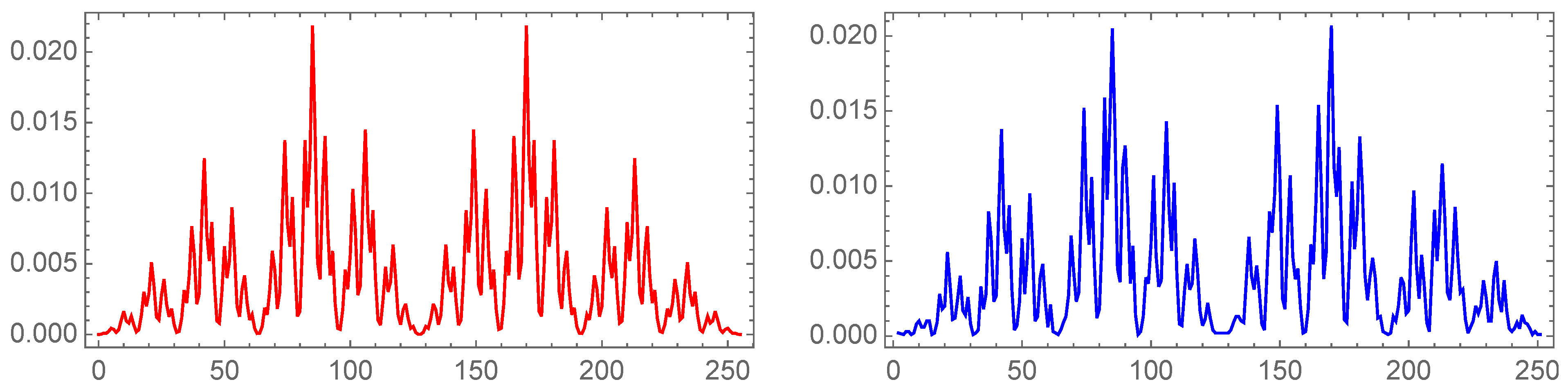

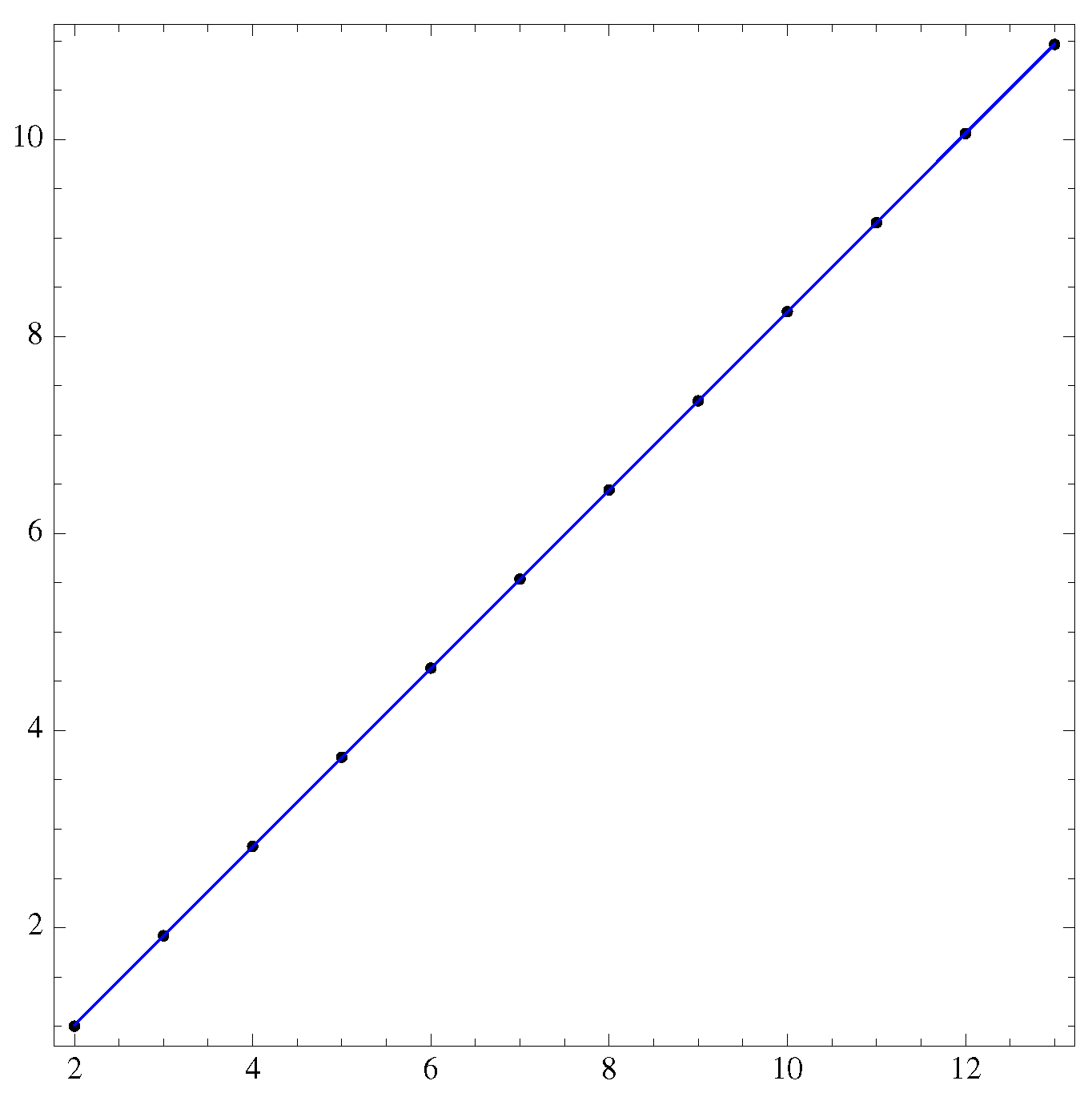

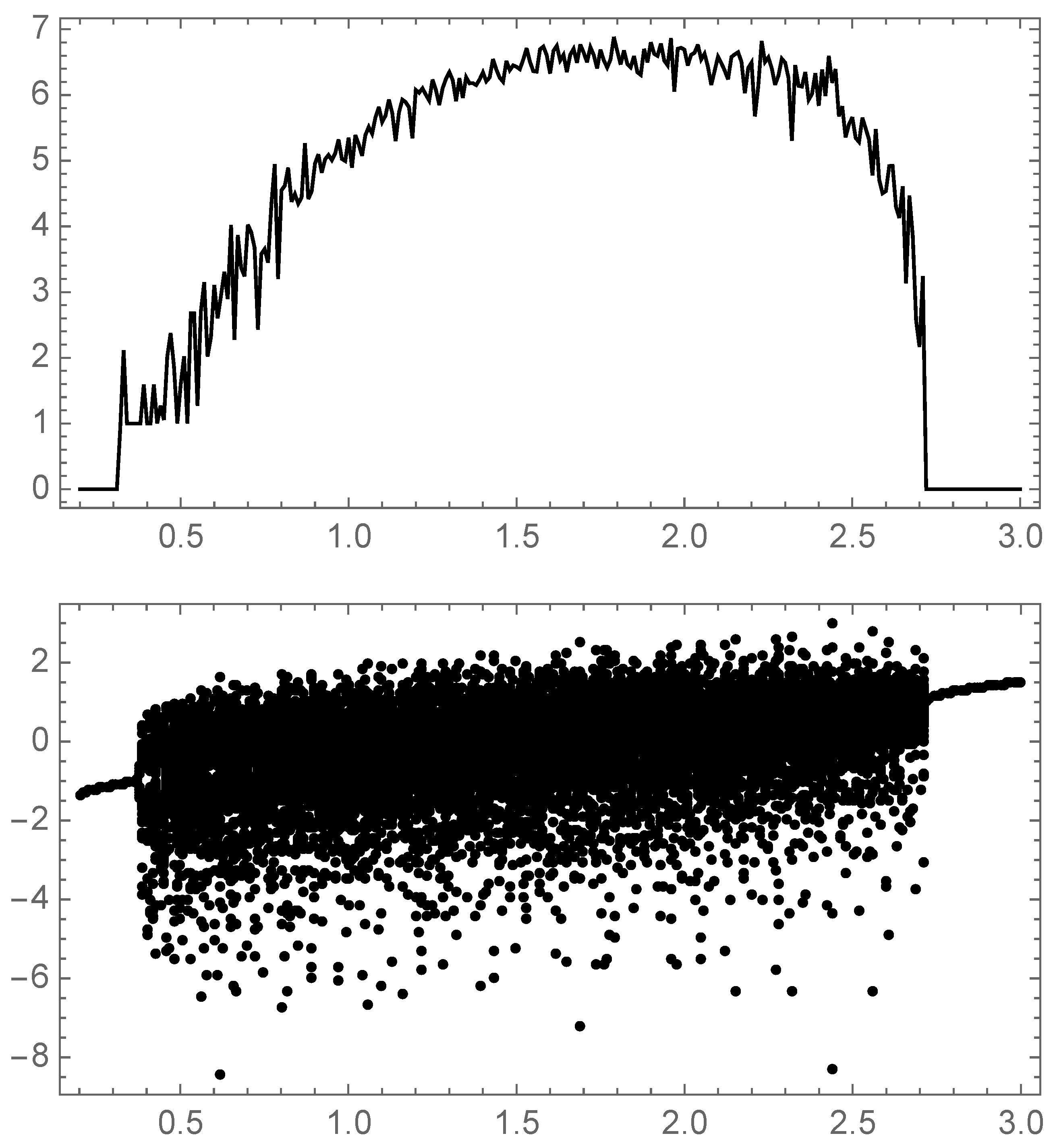

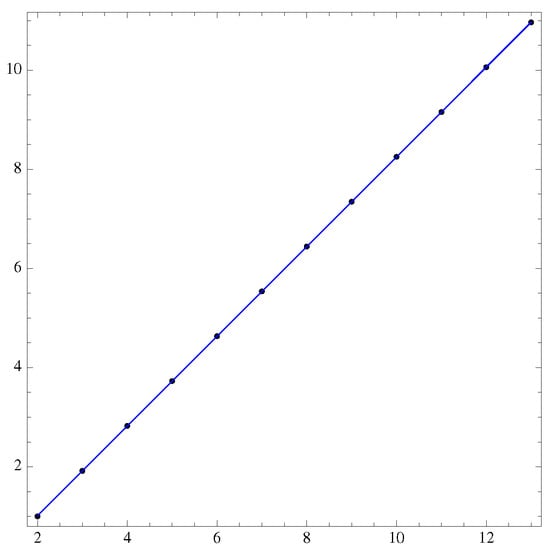

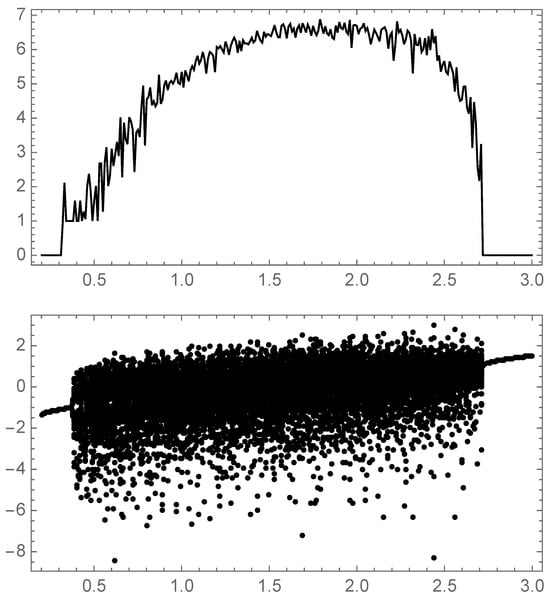

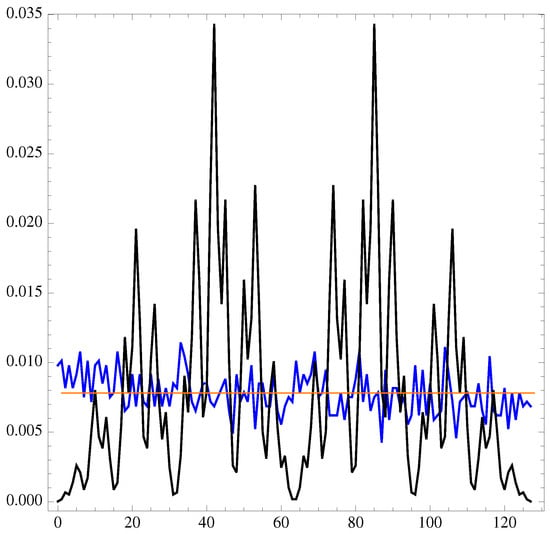

Despite the complexity of , the Shannon entropy for a random signal, , increases linearly with m (see Figure 2): . If the m-tuples are equiprobable, it will lead to .

Figure 2.

The values for the probability of , for the decimal (blue), and for a random distribution (red).

3. Periodic Signal

We will now see what happens with a period 3 data . To evaluate , we only have 3 types of 2-tuples. For example, for , we have , , and . We have only two possible strings, “+” or “−”, so the probabilities must be or . For , again we have only 3 types of 3-tuples: , , and . We have possible strings , , , and . The consistency of the inequalities between , , and reduces the number of possible strings to 3. For example, if gives , then must be , and must be . Due to Period 3, these values will appear times. To evaluate , we have again only 3 types of 4-tuples: , , and , and again these will appear times in the data. This reasoning can be generalized to a signal of period p, ; consequently, , and this remains constant for . Obviously, since we are only using the differences between the ’s, the periodicity in terms of signs may be smaller than the periodicity p of the data, so .

4. Chaotic Logistic Map Example

Let us illustrate the use of ED on the well known logistic map [11] driven by the parameter .

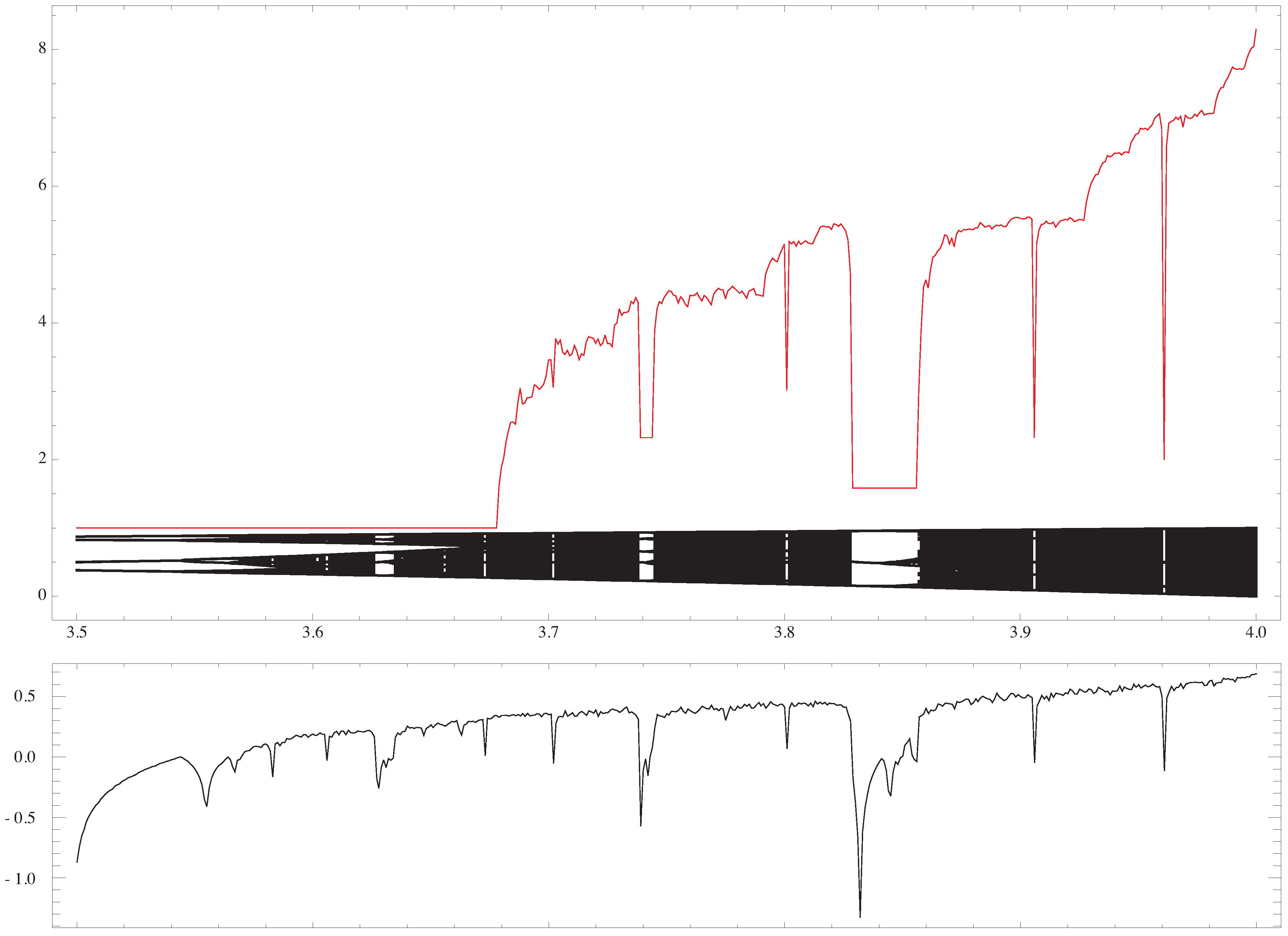

It is obvious that, for a range of values of , where the time series reaches a periodic behavior (any cyclic oscillation between n different values), the ED will remain constant. The evaluation of the ED could thus be used as a new complexity parameter to determine the behavior of the time series (see Figure 3).

Figure 3.

The Shannon entropy of : increases linearly with m, and the fit gives a sum of squared residuals of and a p-value = and on the fit parameter respectively.

For , we know that the data are randomly distributed with a probability density given by [12]

However, the logistic map produces correlations in the data, so we expect a deviation from the uncorrelated random .

We can then compute exactly the ED for an m-embedding as well as the KL-divergence from a random signal. For example, for , we can determine and by solving the inequality and , respectively, which implies that and . Then,

In this case, the logistic map produces a signal that contains twice as many increasing pairs “+” than decreasing pairs “−”. Thus,

For , we can perform the same calculation. We have, respectively,

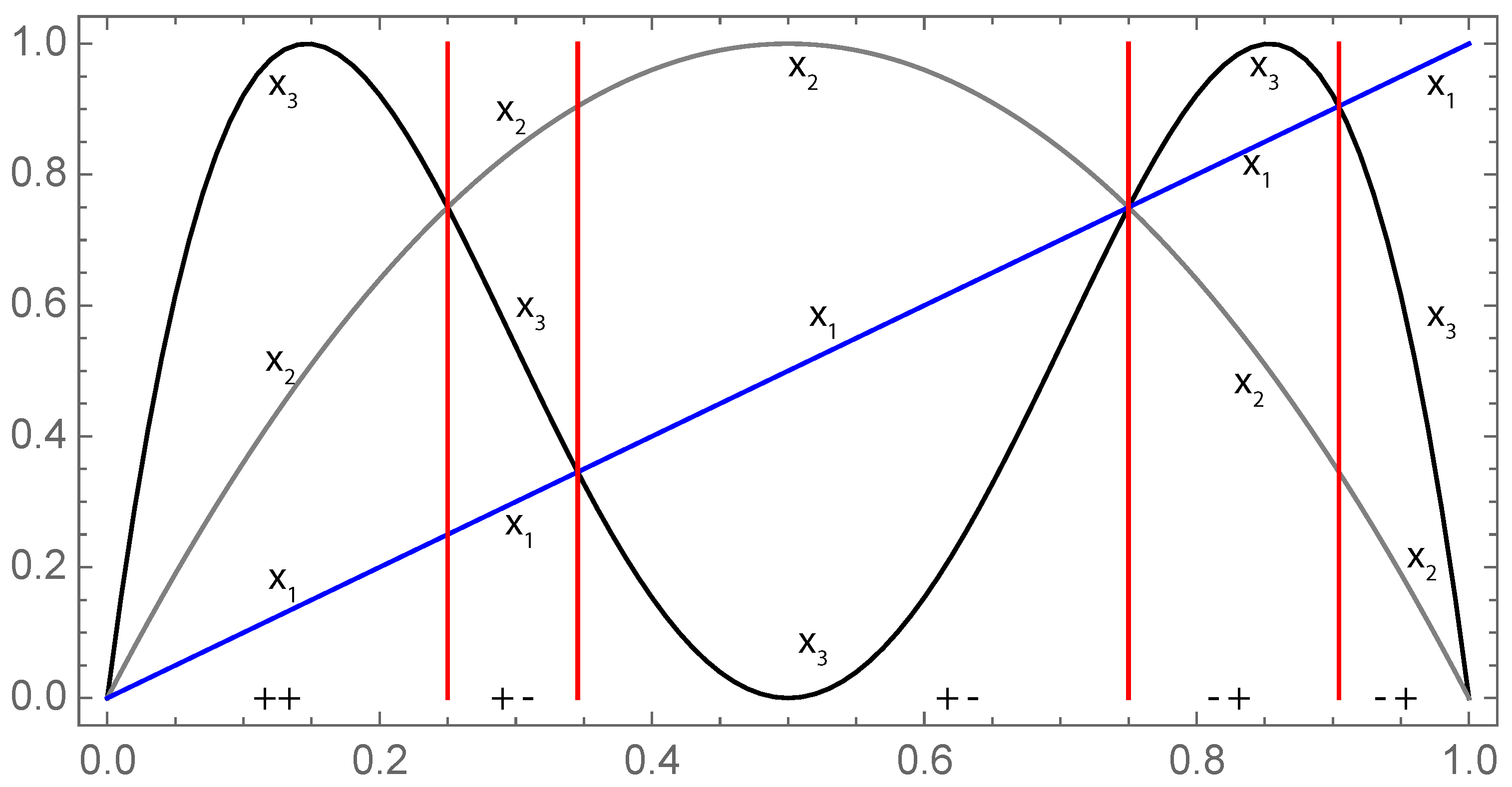

Graphically we have:

Effectively, the logistic map with forbids the string “” where . For strings of length 3, we have

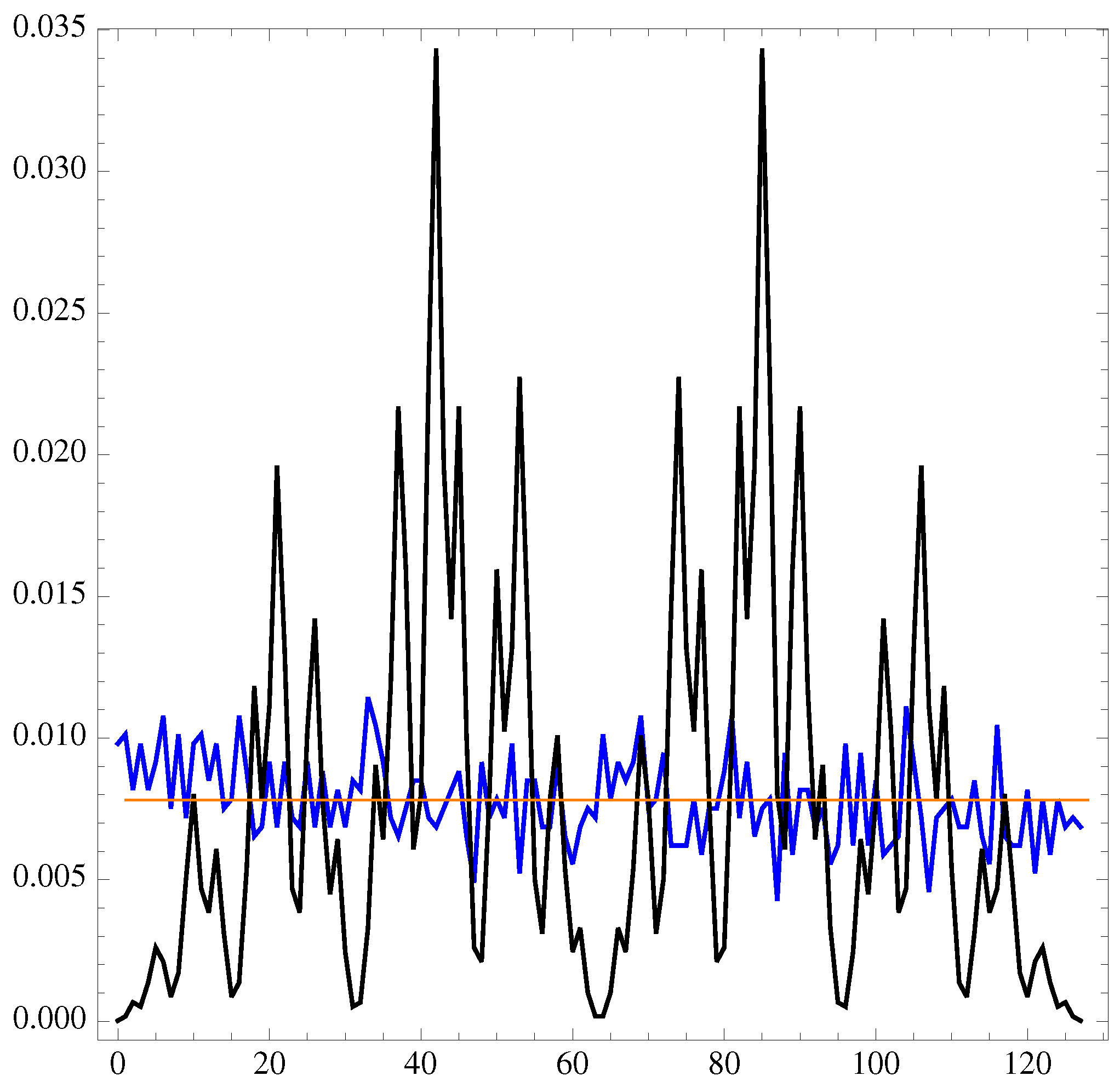

The probability of difference for some string length m versus s, the string binary value, where “+” and “−”, gives us the “spectrum of difference” for the distribution q (see Figure 4 and Figure 5).

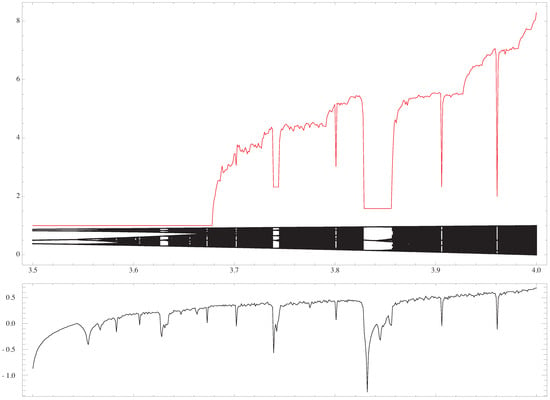

Figure 4.

The (strings of length 12) is plotted versus , with the bifurcation diagram, and the value of the Lyapunov exponent, respectively [13]. The constant value appears when the logistic map enters into a periodic regime.

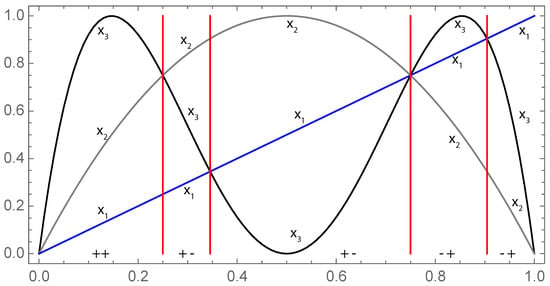

Figure 5.

From (blue), the first iteration of the logistic map (gray) gives , and the second iteration (black) gives . The respective positions of allow us to determine .

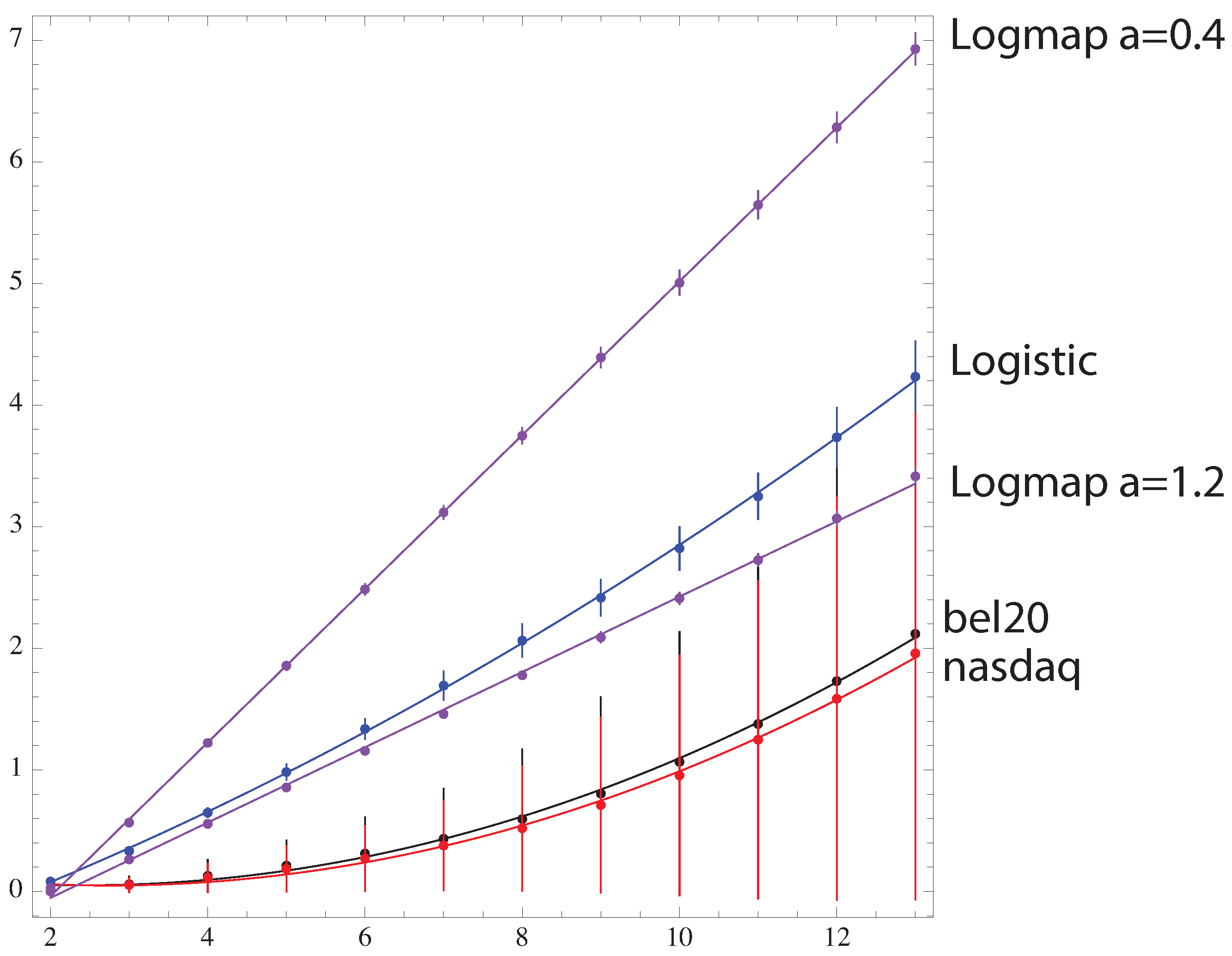

5. KLm(p|q) Divergences Versus m on Real Data and on Maps

The manner in which the evolves with m is another parameter of the complexity measure. measures the loss of information when the random distribution is used to predict the distribution . Increasing m introduces more bits of information in the signal, and the behavior versus m shows how the data diverge from a random distribution.

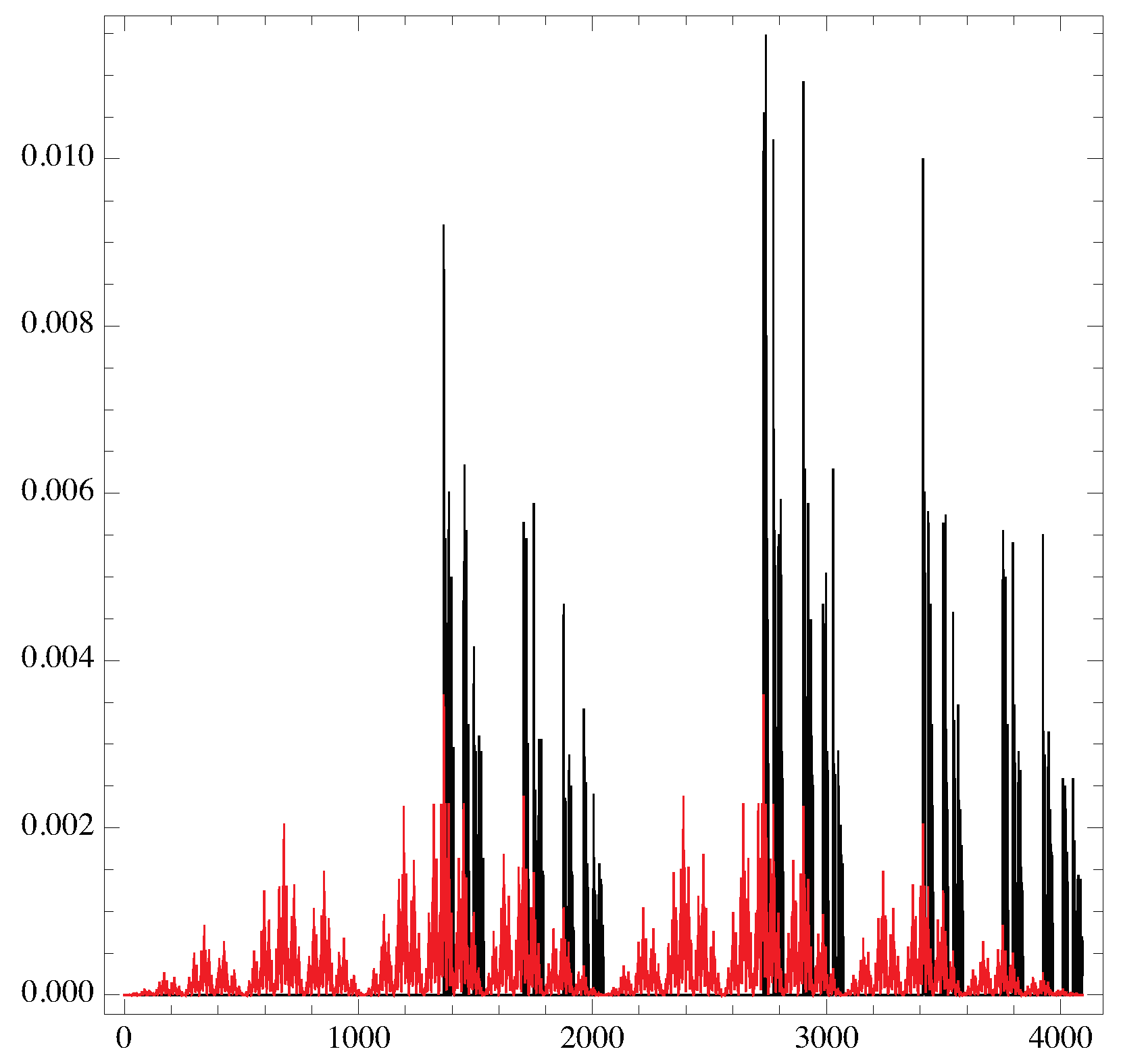

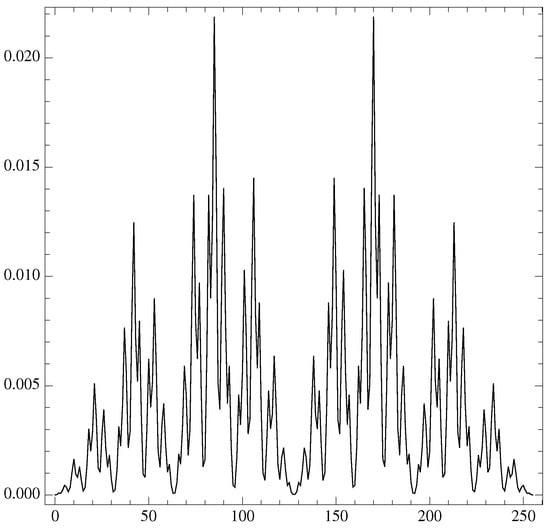

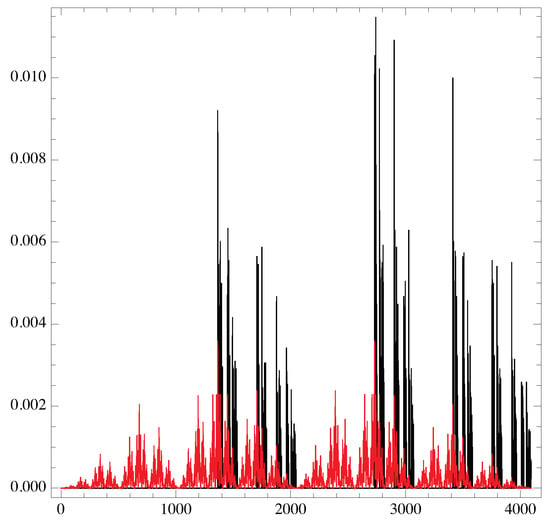

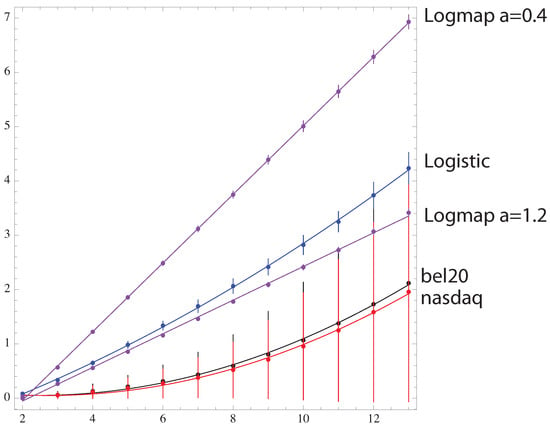

The graphics (see Figure 6) show the behavior of versus m for two different chaotic maps and for real financial data [14]: the opening value of the nasdaq100, bel20 every day from 2000 to 2013. For maps, the logarithmic map and the logistic map are shown (see Figure 6 for the logarithmic map (Figure 7)).

Figure 6.

The spectrum of (black) versus the string binary value (from 0 to ) for the logistic map at and the one from a random distribution (red).

Figure 7.

The versus a for the logarithm map .

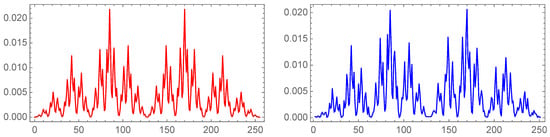

For maps, the simulation starts with a random number between 0 and 1 and first iterates 500 times to avoid transients. Starting with these seeds, 720 iterates were kept, and was computed. It can be seen that the Kullback–Leibler divergence from the logistic map at to the random signal is fitted by a quadratic function of m: (p-value for all parameters), while the logarithmic map behavior is linear in the range . Financial data are also quadratic , with a higher curvature than the logistic map due to the fact that the spectrum of the probability is compatible with a constant distribution (see Figure 6), rendering the prediction of an increase or decrease signal completely random, which is not the case in any true random signal (See Figure 8 and Figure 9).

Figure 8.

The KL-divergence for the data.

Figure 9.

The spectrum of versus the string binary value (from 0 to ) for the bel20 financial data.

6. Conclusions

The simple property of increases or decreases in a signal makes it possible to introduce the entropy of difference as a new efficient complexity measure for chaotic time series. This new technique is numerically fast and easy to implement. It does not require complex signal processing and could replace the evaluation of the Lyapunov exponent (which is far more time-consuming). For a random signal, we have determined the value of , which is independent of the probability of distribution of this signal. This makes it possible to calculate the “distance” between the analyzed signal and a random signal (independent of its distribution probability). As “distance”, we evaluate the Kullback–Leibler divergence versus the number of data m used to build the difference string. This shows different behavior for different types of signal and can also be used also to characterize the complexity of a time series. Since the only assumption for a random signal is that it is uncorrelated, this method makes it possible to determine the correlated nature of signals, even in chaotic regimes.

Author Contributions

Conceptualization and methodology, formal analysis, P.N.; writing, review and editing, P.N. and G.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

The Mathematica program for the probability :

P["+"]= P["-"] = 1/2; P["-", x__] := P[x] - P["+", x]; P[x__, "-"] := P[x] - P[x, "+"]; P[x__, "-", y__] := P[x] P[y] - P[x, "+", y]; P[x__] :=1/(StringLength[StringJoin[x]] + 1)!

References

- Bandt, C.; Pompe, B. Permutation Entropy: A Natural Complexity Measure for Time Series. Phys. Rev. Lett. 2002, 88, 174102. [Google Scholar] [CrossRef]

- Bian, C.; Qin, C.; Ma, Q.D.Y.; Shen, Q. Modified permutation-entropy analysis of heartbeat dynamics. Phys. Rev. E 2012, 85, 021906. [Google Scholar] [CrossRef]

- Zunino, L.; Pérez, D.G.; Martín, M.T.; Garavaglia, M.; Plastino, A.; Rosso, O.A. Permutation entropy of fractional Brownian motion and fractional Gaussian noise. Phys. Lett. A 2008, 372, 4768. [Google Scholar] [CrossRef]

- Li, X.; Ouyang, G.; Richard, D.A. Predictability analysis of absence seizures with permutation entropy. Epilepsy Res. 2007, 77, 70. [Google Scholar] [CrossRef]

- Li, X.; Cui, S.; Voss, L.J. Using permutation entropy to measure the electroencephalographic effects of sevoflurane. Anesthesiology 2008, 109, 448. [Google Scholar] [CrossRef] [PubMed]

- Frank, B.; Pompe, B.; Schneider, U.; Hoyer, D. Permutation entropy improves fetal behavioural state classification based on heart rate analysis from biomagnetic recordings in near term fetuses. Med. Biol. Eng. Comput. 2006, 44, 179. [Google Scholar] [CrossRef] [PubMed]

- Olofsen, E.; Sleigh, J.W.; Dahan, A. Permutation entropy of the electroencephalogram: A measure of anaesthetic drug effect. Br. J. Anaesth. 2008, 101, 810. [Google Scholar] [CrossRef] [PubMed]

- Rosso, O.A.; Zunino, L.; Perez, D.G.; Figliola, A.; Larrondo, H.A.; Garavaglia, M.; Martin, M.T.; Plastino, A. Extracting features of Gaussian self-similar stochastic processes via the Bandt–Pompe approach. Phys. Rev. E 2007, 76, 061114. [Google Scholar] [CrossRef] [PubMed]

- Kullback, S.; Leibler, R.A. On Information and Sufficiency. Ann. Math. Statist. 1951, 22, 79. [Google Scholar] [CrossRef]

- Roldán, E.; Parrondo, J.M.R. Entropy production and Kullback–Leibler divergence between stationary trajectories of discrete systems. Phys. Rev. E 2012, 85, 031129. [Google Scholar] [CrossRef] [PubMed]

- May, R.M. Simple mathematical models with very complicated dynamics. Nature 1976, 261, 459. [Google Scholar] [CrossRef] [PubMed]

- Jakobson, M. Absolutely continuous invariant measures for one-parameter families of one-dimensional maps. Commun. Math. Phys. 1981, 81, 39–88. [Google Scholar] [CrossRef]

- Ginelli, F.; Poggi, P.; Turchi, A.; Chate, H.; Livi, R.; Politi, A. Characterizing Dynamics with Covariant Lyapunov Vectors. Phys. Rev. Lett. 2007, 99, 130601. [Google Scholar] [CrossRef] [PubMed]

- Available online: http://www.wessa.net/ (accessed on 1 February 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).