Initial Boundary Value Problem for the Coupled Kundu Equations on the Half-Line

Abstract

1. Introduction

2. Basic Riemann–Hilbert Problem

2.1. Formulas and Symbols

- The Pauli matrix can be written as , , , and .

- The matrices S and T are matrices, where S represents the inner product of T; that is, .

- The matrix commutator is , and is calculated in this study as follows: .

- The complex conjugation of a function is represented by an overline, for example, .
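The notation listed above can be checked numerically. The following is a minimal sketch, assuming the textbook definitions of the Pauli matrices and of the commutator, [A, B] = AB − BA. The names `sigma1`, `sigma2`, `sigma3`, and `commutator` are illustrative choices and are not taken from the paper.

```python
import numpy as np

# Textbook Pauli matrices (assumed standard definitions, not copied from the paper).
sigma1 = np.array([[0, 1], [1, 0]], dtype=complex)
sigma2 = np.array([[0, -1j], [1j, 0]], dtype=complex)
sigma3 = np.array([[1, 0], [0, -1]], dtype=complex)


def commutator(a, b):
    """Return the matrix commutator [a, b] = a b - b a."""
    return a @ b - b @ a


# Check the familiar relation [sigma1, sigma2] = 2i * sigma3.
comm12 = commutator(sigma1, sigma2)
relation_holds = np.allclose(comm12, 2j * sigma3)

# Complex conjugation (the overline in the text) acts entrywise on a matrix.
sigma2_conj = np.conj(sigma2)
```

Here `relation_holds` evaluates to `True`, and `sigma2_conj` equals `-sigma2` because every nonzero entry of `sigma2` is purely imaginary.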

2.2. Lax Pair

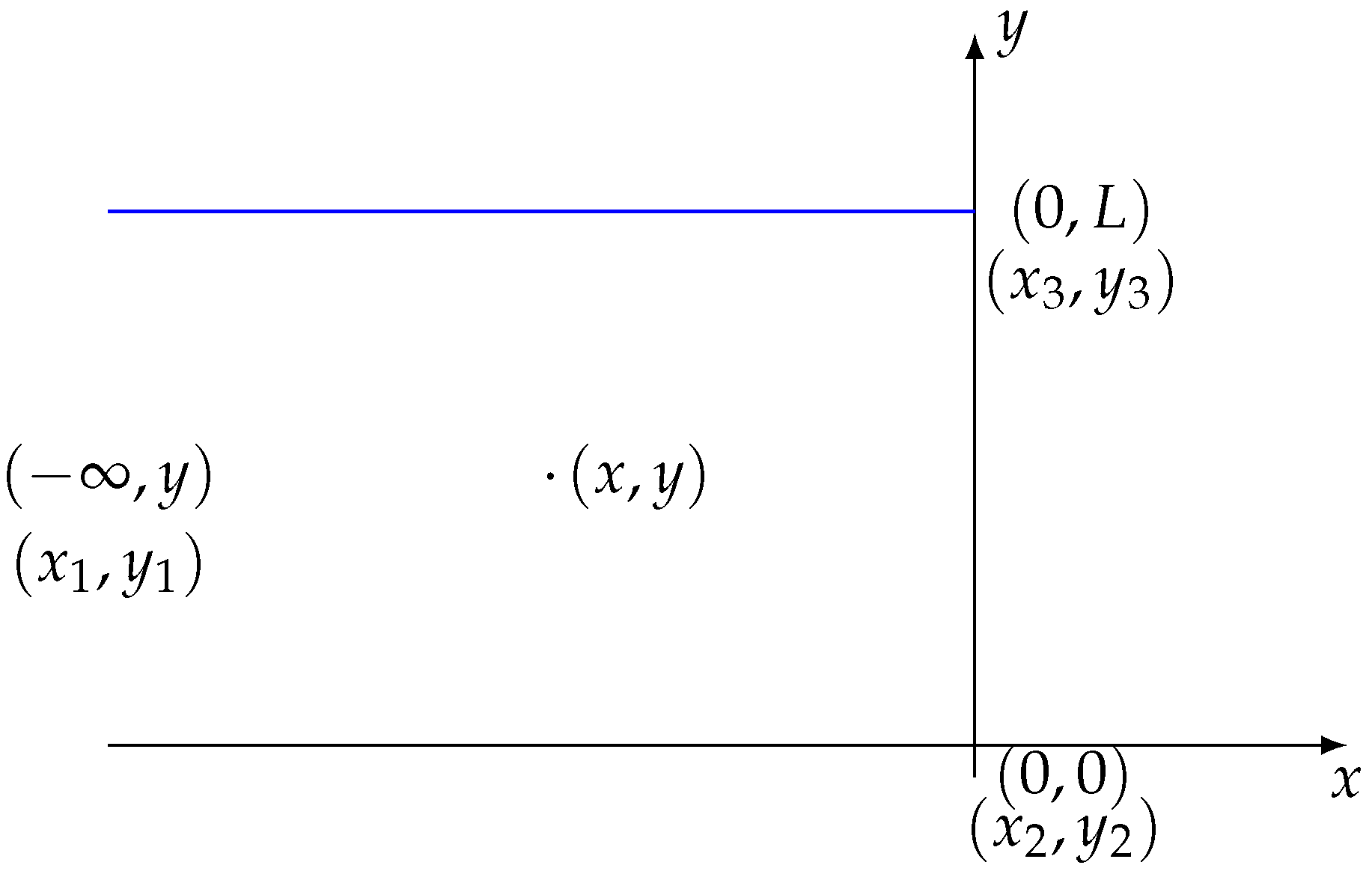

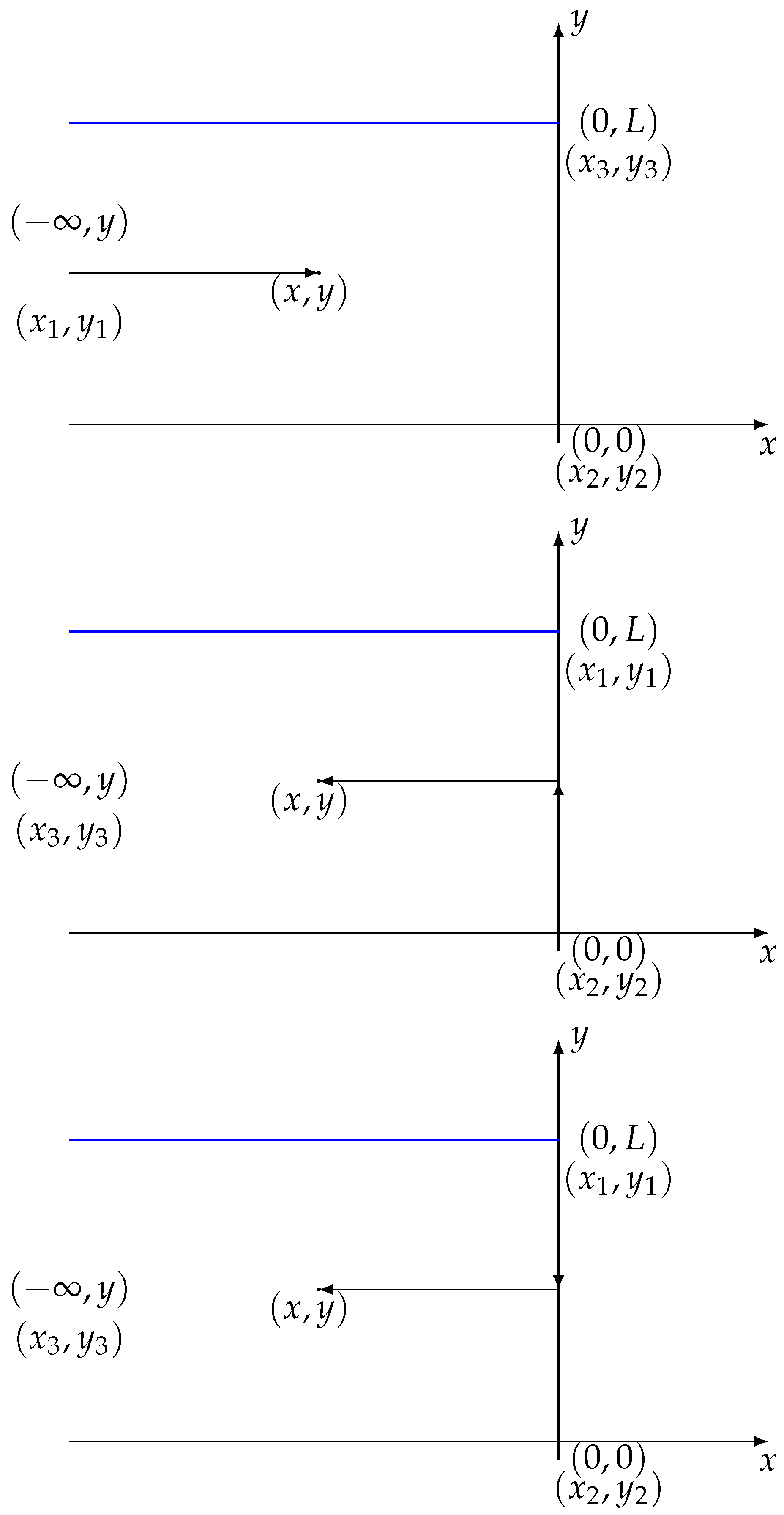

2.3. Spectral Analysis and Asymptotic Analysis

2.4. Eigenfunctions and Their Relations

2.5. The Spectral Functions and Their Propositions

- (1) .

- (2) The analytic properties of the function are apparent, and .

- (3) The analytic properties of the function are apparent, and .

- (4) The analytic properties of the function are apparent, and .

- (5) The analytic properties of the function are apparent, and .

- (6) The analytic properties of the function are apparent, and .

- (7) The analytic properties of the function are apparent, and .

- (1)

- (2) where .

- (3)

2.6. Jump Matrix

- (1) has simple zeros (). We assume that () belong to , and () belong to .

- (2) has simple zeros (). We assume that () belong to , and () belong to .

- (3) The simple zeros of and do not coincide.

- (1) Res =, .

- (2) Res =, .

- (3) Res =, .

- (4) Res =, .

2.7. The Inverse Problem

3. Definition and Properties of Spectral Functions and Riemann–Hilbert Problem

3.1. Characteristics of Spectral Functions

- (1) For , and are analytic.

- (2) as , .

- (3) As and , the inverse map for is defined as follows: where satisfies the following Riemann–Hilbert problem (see Theorem 2).

- (1) is a piecewise analytic function.

- (2) , , and

- (3)

- (4) has simple zeros , (); we assume that () belong to , and () belong to .

- (5) There exist simple poles in at , where . The locations of the simple poles in can be identified as , where .

- ,

- ,

- ,

- ,

- (1) For , and are analytic.

- (2) as , .

- (3) As and , the inverse map for is defined as follows:

- (1) is a piecewise analytic function.

- (2) , , and

- (3)

- (4) has simple zeros , (); we assume that () belong to , and () belong to .

- (5) There exist simple poles in at , where . The locations of the simple poles in can be identified as , where .

- (1) For , and are analytic;

- (2) as , ;

- (3) , ;

- (4) , ;

- (5) As and , the inverse map for is defined as follows: where satisfies the following Riemann–Hilbert problem.

- (1) is a piecewise analytic function.

- (2) , , and

- (3)

- (4) has simple zeros (); we assume that () belong to , and () belong to .

- (5) There exist simple poles in at , where . The locations of the simple poles in can be identified as , where .

3.2. Riemann–Hilbert Problem

- The function is sectionally analytic and has unit determinant.

- satisfies the jump condition

- Simple poles are located at , , and , in . Additionally, simple poles exist at , , and , in .

- .

- satisfies the residue conditions stated in Hypothesis 1.

- (1)

- (2)

4. Conclusions and Remarks

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Gardner, C.; Greene, J.; Kruskal, M.; Miura, R. Korteweg-de Vries Equation and Generalizations. VI. Methods for Exact Solution. Commun. Pure Appl. Math. 1974, 27, 97–133.

- Fokas, A.; Zakharov, V. Important Developments in Soliton Theory; Springer: Berlin, Germany, 1993.

- Fokas, A. On a Class of Physically Important Integrable Equations. Physica D 1995, 87, 145–150.

- Dong, H.; Zhang, Y.; Zhang, X. The New Integrable Symplectic Map and the Symmetry of Integrable Nonlinear Lattice Equation. Commun. Nonlinear Sci. 2016, 36, 354–365.

- Fang, Y.; Dong, H.; Hou, Y.; Kong, Y. Frobenius Integrable Decompositions of Nonlinear Evolution Equations with Modified Term. Appl. Math. Comput. 2014, 226, 435–440.

- Fokas, A. A Unified Transform Method for Solving Linear and Certain Nonlinear PDEs. Proc. R. Soc. Lond. Ser. A-Math. Phys. Eng. Sci. 1997, 453, 1411–1443.

- Fokas, A.; Its, A.; Sung, L.Y. The Nonlinear Schrödinger Equation on the Half-Line. Nonlinearity 2005, 18, 1771–1822.

- Fokas, A.; Its, A. The Nonlinear Schrödinger Equation on the Interval. J. Phys. A 2004, 37, 6091–6114.

- Boutet de Monvel, A.; Fokas, A.; Shepelsky, D. The mKdV Equation on the Half-Line. J. Inst. Math. Jussieu 2004, 3, 139–164.

- Boutet de Monvel, A.; Shepelsky, D. Initial Boundary Value Problem for the mKdV Equation on a Finite Interval. Ann. Inst. Fourier 2004, 54, 1477–1495.

- Boutet de Monvel, A.; Shepelsky, D. Long Time Asymptotics of the Camassa-Holm Equation on the Half-Line. Ann. Inst. Fourier 2009, 7, 59.

- Lenells, J. The Derivative Nonlinear Schrödinger Equation on the Half-Line. Physica D 2008, 237, 3008–3019.

- Lenells, J. An Integrable Generalization of the Sine-Gordon Equation on the Half-Line. IMA J. Appl. Math. 2011, 76, 554–572.

- Lenells, J.; Fokas, A. On a Novel Integrable Generalization of the Nonlinear Schrödinger Equation. Nonlinearity 2009, 22, 709–722.

- Lenells, J.; Fokas, A. An Integrable Generalization of the Nonlinear Schrödinger Equation on the Half-Line and Solitons. Inverse Probl. 2009, 25, 1–32.

- Fan, E. A Family of Completely Integrable Multi-Hamiltonian Systems Explicitly Related to Some Celebrated Equations. J. Math. Phys. 2001, 42, 95–99.

- Xu, J.; Fan, E. A Riemann-Hilbert Approach to the Initial-Boundary Problem for Derivative Nonlinear Schrödinger Equation. Acta Math. Sci. 2014, 34, 973–994.

- Xu, J.; Fan, E. Initial-Boundary Value Problem for Integrable Nonlinear Evolution Equation with 3 × 3 Lax Pairs on the Interval. Stud. Appl. Math. 2016, 136, 321–354.

- Chen, M.; Chen, Y.; Fan, E. The Riemann-Hilbert Analysis to the Pollaczek-Jacobi Type Orthogonal Polynomials. Stud. Appl. Math. 2019, 143, 42–80.

- Chen, M.; Fan, E.; He, J. Riemann-Hilbert Approach and the Soliton Solutions of the Discrete MKdV Equations. Chaos Soliton Fract. 2023, 168, 113209.

- Zhao, P.; Fan, E. A Riemann-Hilbert Method to Algebro-Geometric Solutions of the Korteweg-de Vries Equation. Physica D 2023, 454, 133879.

- Zhang, N.; Xia, T.; Hu, B. A Riemann-Hilbert Approach to the Complex Sharma-Tasso-Olver Equation on the Half Line. Commun. Theor. Phys. 2017, 68, 580.

- Zhang, N.; Xia, T.; Fan, E. A Riemann-Hilbert Approach to the Chen-Lee-Liu Equation on the Half Line. Acta Math. Sci. 2018, 34, 493–515.

- Li, J.; Xia, T. Application of the Riemann-Hilbert Approach to the Derivative Nonlinear Schrödinger Hierarchy. Int. J. Mod. Phys. B 2023, 37, 2350115.

- Wen, L.; Zhang, N.; Fan, E. N-Soliton Solution of the Kundu-Type Equation via Riemann-Hilbert Approach. Acta Math. Sci. 2020, 40, 113–126.

- Hu, B.; Lin, J.; Zhang, L. On the Riemann-Hilbert Problem for the Integrable Three-Coupled Hirota System with a 4 × 4 Matrix Lax Pair. Appl. Math. Comput. 2022, 428, 127202.

- Hu, B.; Zhang, L.; Xia, T. On the Riemann-Hilbert Problem of a Generalized Derivative Nonlinear Schrödinger Equation. Commun. Theor. Phys. 2021, 73, 015002.

- Hu, B.; Zhang, L.; Xia, T.; Zhang, N. On the Riemann-Hilbert Problem of the Kundu Equation. Appl. Math. Comput. 2020, 381, 125262.

- Tao, M.; Dong, H. N-Soliton Solutions of the Coupled Kundu Equations Based on the Riemann-Hilbert Method. Math. Probl. Eng. 2019, 3, 1–10.

- Kundu, A.; Strampp, W.; Oevel, W. Gauge Transformations of Constrained KP Flows: New Integrable Hierarchies. J. Math. Phys. 1995, 36, 2972–2984.

- Luo, J.; Fan, E. Dbar Dressing Method for the Coupled Gerdjikov-Ivanov Equation. Appl. Math. Lett. 2020, 110, 106589.

- Kodama, Y. Optical Solitons in a Monomode Fiber. J. Stat. Phys. 1985, 35, 597–614.

- Pinar, A.; Muslum, O.; Aydin, S.; Mustafa, B.; Sebahat, E. Optical Solitons of Stochastic Perturbed Radhakrishnan-Kundu-Lakshmanan Model with Kerr Law of Self-Phase-Modulation. Mod. Phys. Lett. B 2024, 38, 2450122.

- Monika, N.; Shubham, K.; Sachin, K. Dynamical Forms of Various Optical Soliton Solutions and Other Solitons for the New Schrödinger Equation in Optical Fibers Using Two Distinct Efficient Approaches. Mod. Phys. Lett. B 2024, 38, 2450087.

- Mjolhus, E. On the Modulational Instability of Hydromagnetic Waves Parallel to the Magnetic Field. J. Plasma Phys. 1976, 16, 321–334.

- Han, M.; Garlow, J.; Du, K.; Cheong, S.; Zhu, Y. Chirality Reversal of Magnetic Solitons in Chiral TaS2. Appl. Phys. Lett. 2023, 123, 022405.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hu, J.; Zhang, N. Initial Boundary Value Problem for the Coupled Kundu Equations on the Half-Line. Axioms 2024, 13, 579. https://doi.org/10.3390/axioms13090579