A New Methodology for the Development of Efficient Multistep Methods for First–Order IVPs with Oscillating Solutions IV: The Case of the Backward Differentiation Formulae

Abstract

1. Introduction

- Section 2 establishes the theory for determining the phase–lag and the amplification–factor of backward differentiation formulae (BDF). Since these formulae belong to a specific class of implicit multistep methods, the theory presented in [28] serves as the foundation for this general theory. In that section we also derive the direct formulae for computing the phase–lag and the amplification–factor.

- Section 3 presents the methodologies which will be used for the development of the new backward differentiation formulae (BDF).

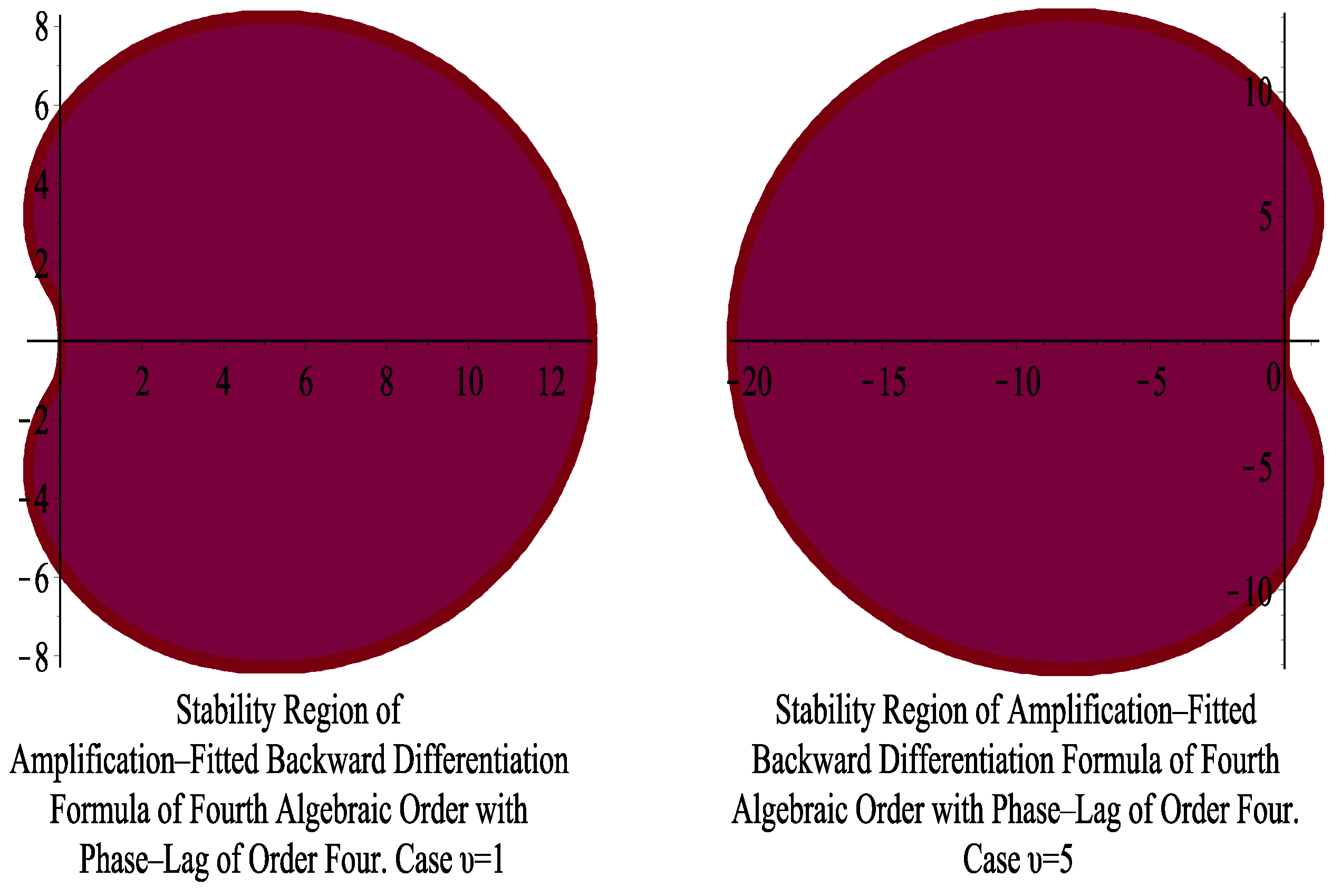

- Section 4 presents the backward differentiation formula that is examined in this paper.

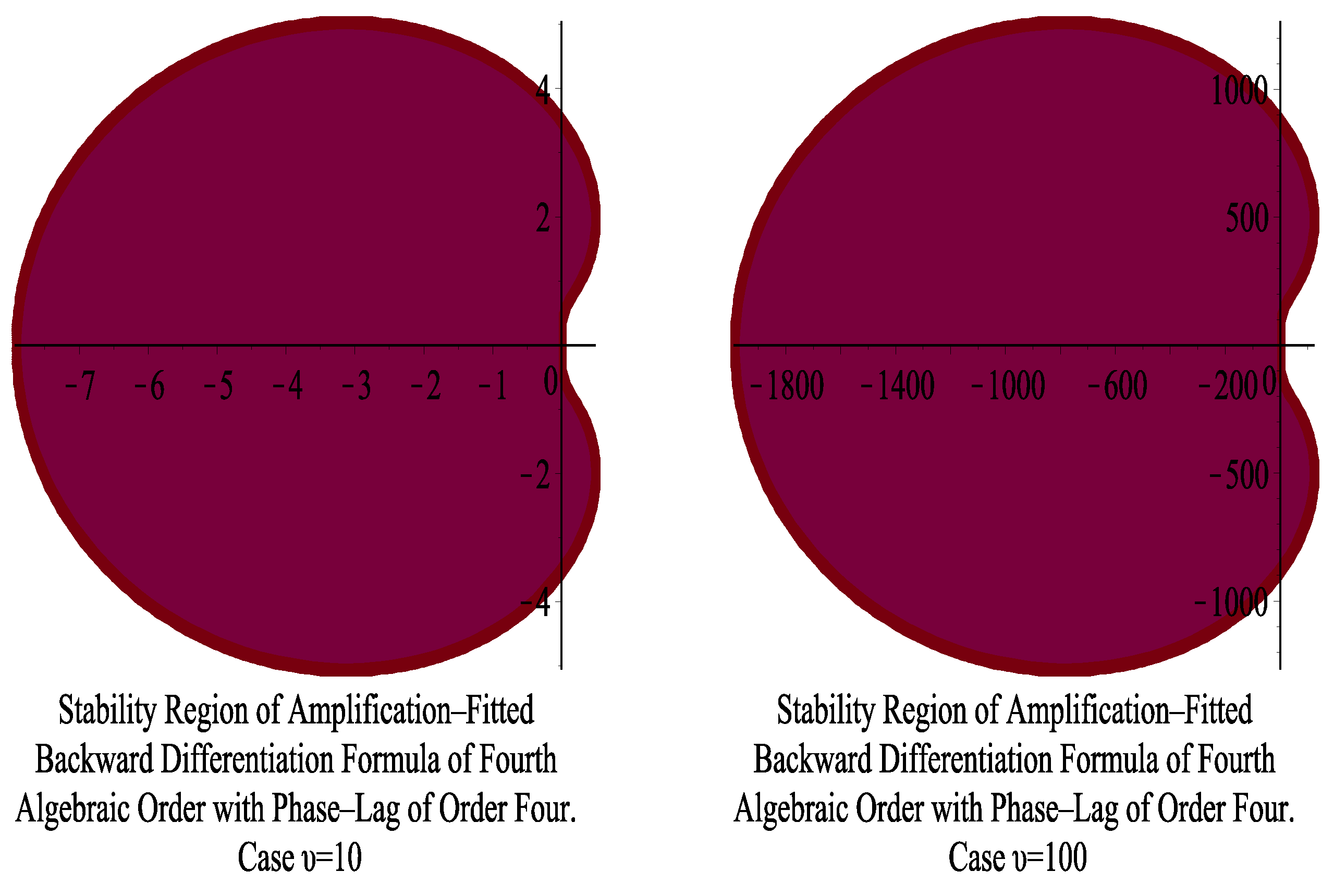

- Section 5 studies the amplification–fitted backward differentiation formula of fourth algebraic order with phase–lag of order four.

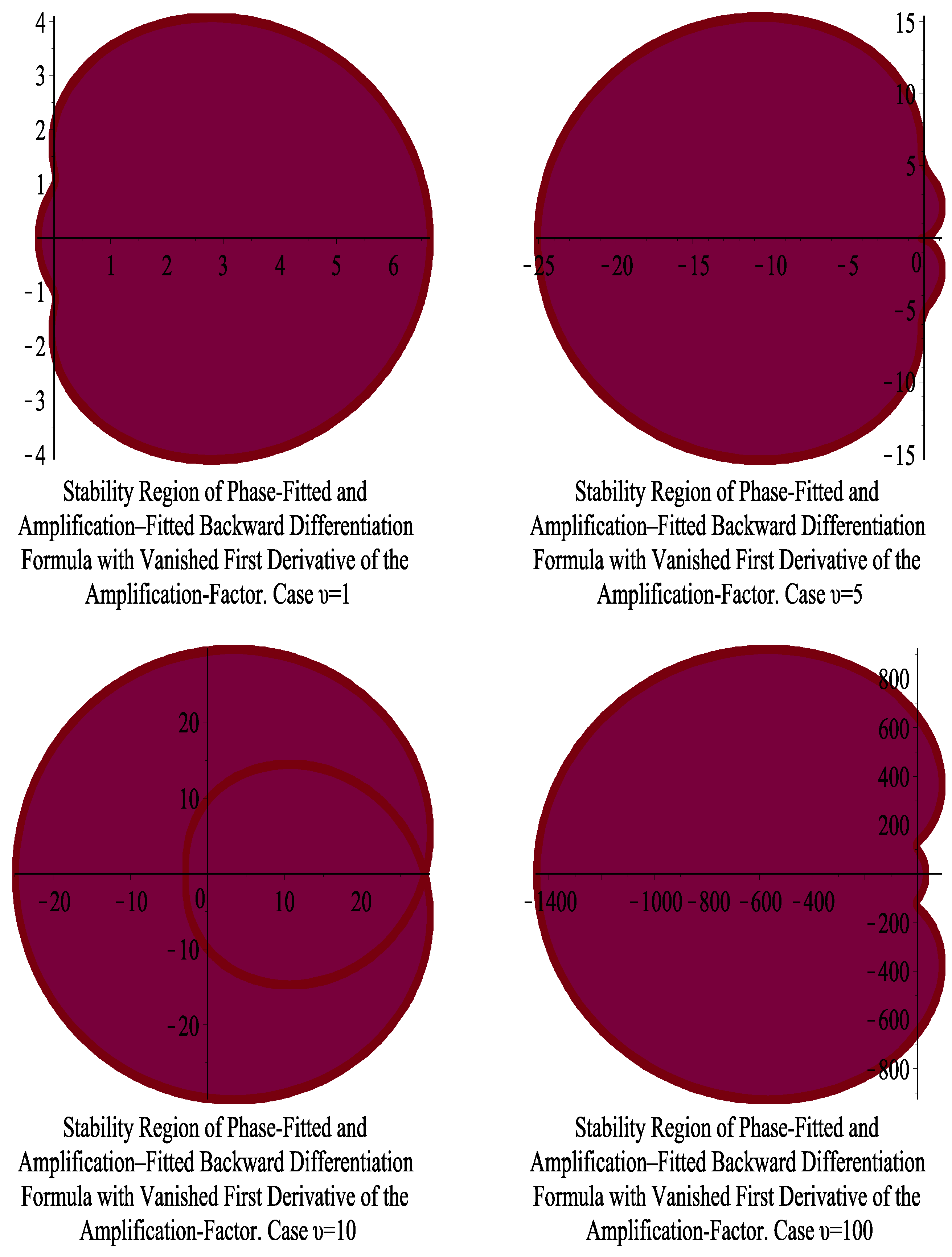

- Section 6 studies the phase–fitted and amplification–fitted backward differentiation formula of fourth algebraic order with elimination of the first derivative of the amplification–factor.

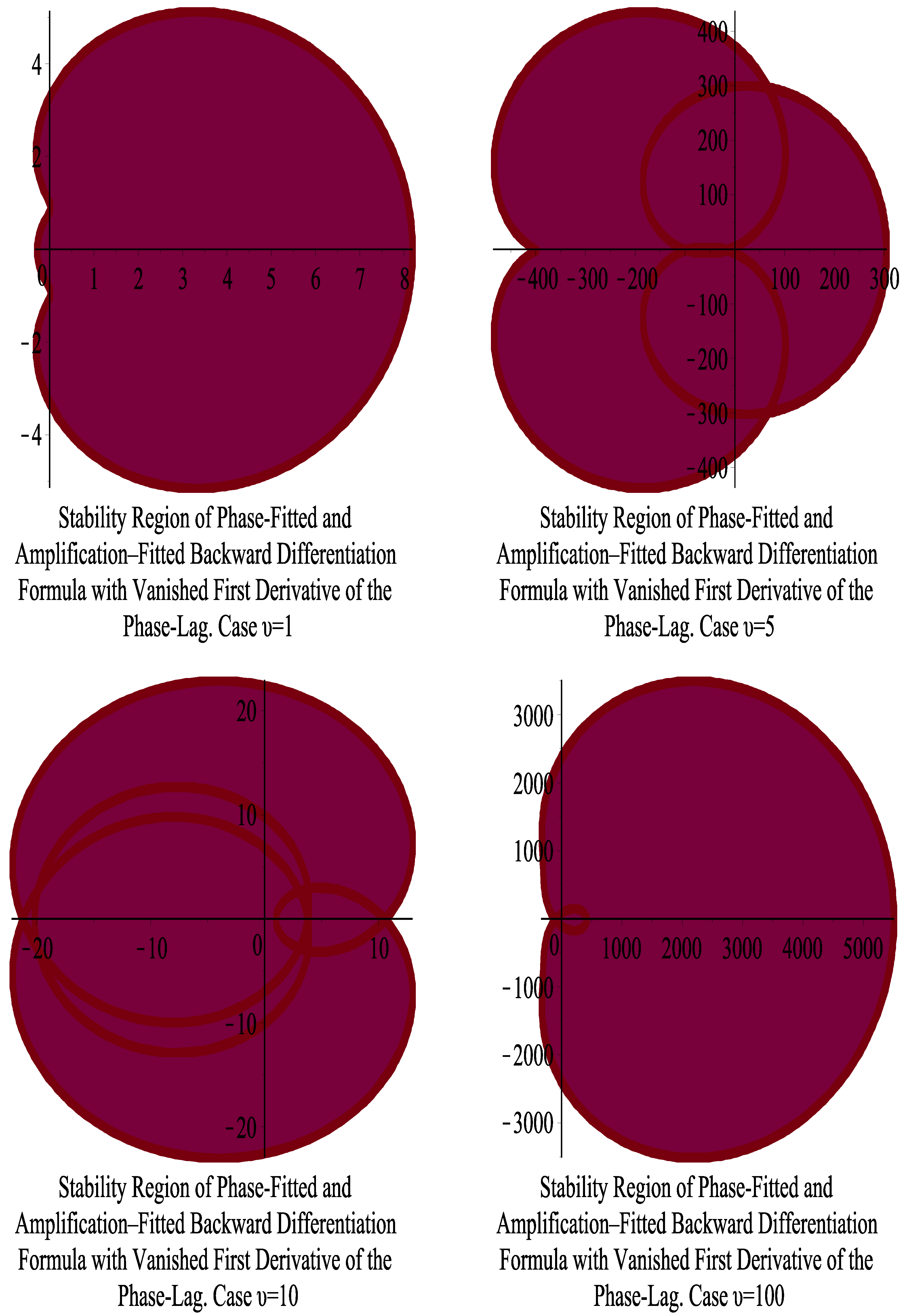

- Section 7 studies the phase–fitted and amplification–fitted backward differentiation formula of fourth algebraic order with the elimination of the first derivative of the phase–lag.

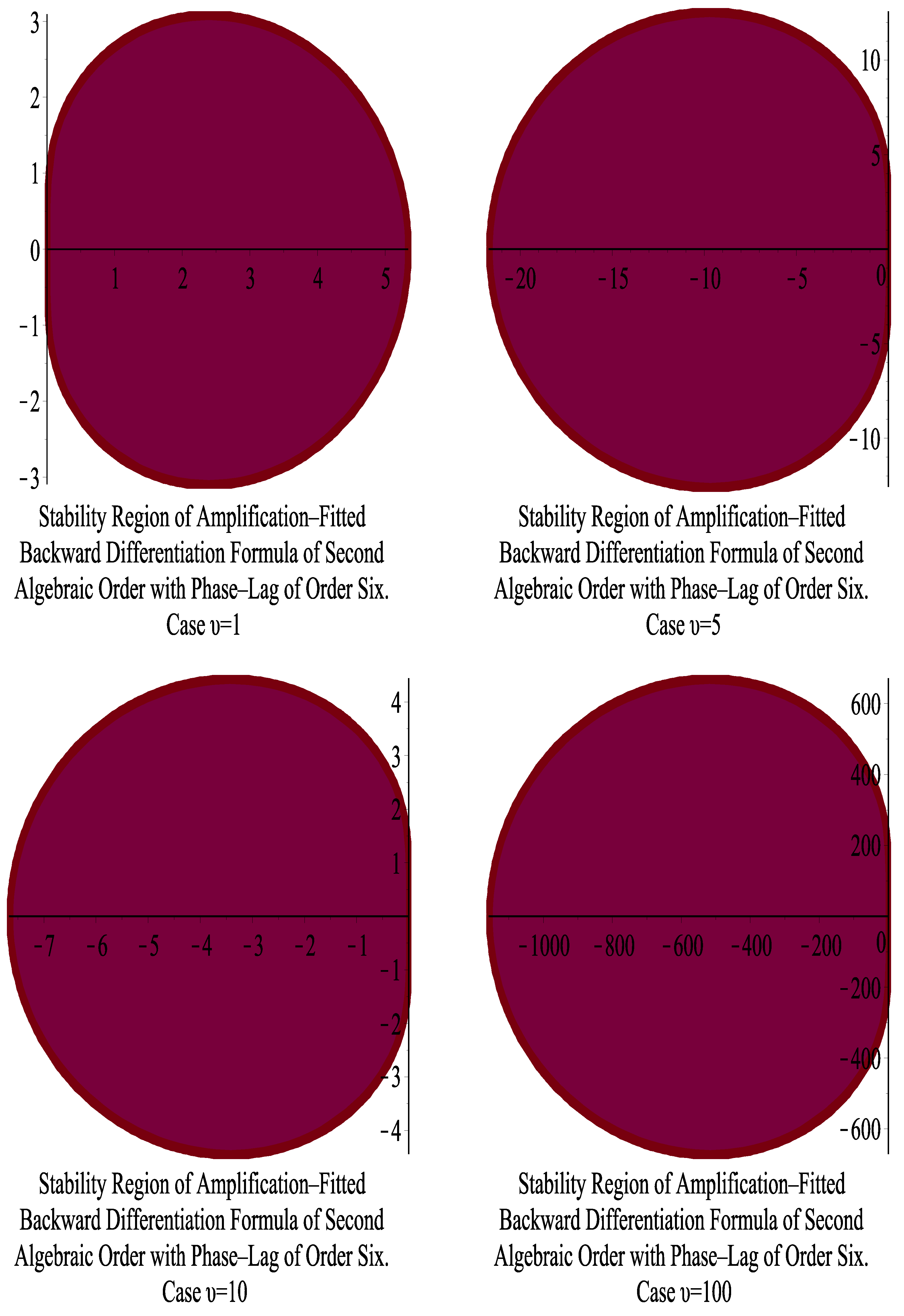

- Section 8 studies the amplification–fitted backward differentiation formula of second algebraic order with phase–lag of order six.

- Section 9 studies the phase–fitted and amplification–fitted backward differentiation formula of fourth algebraic order.

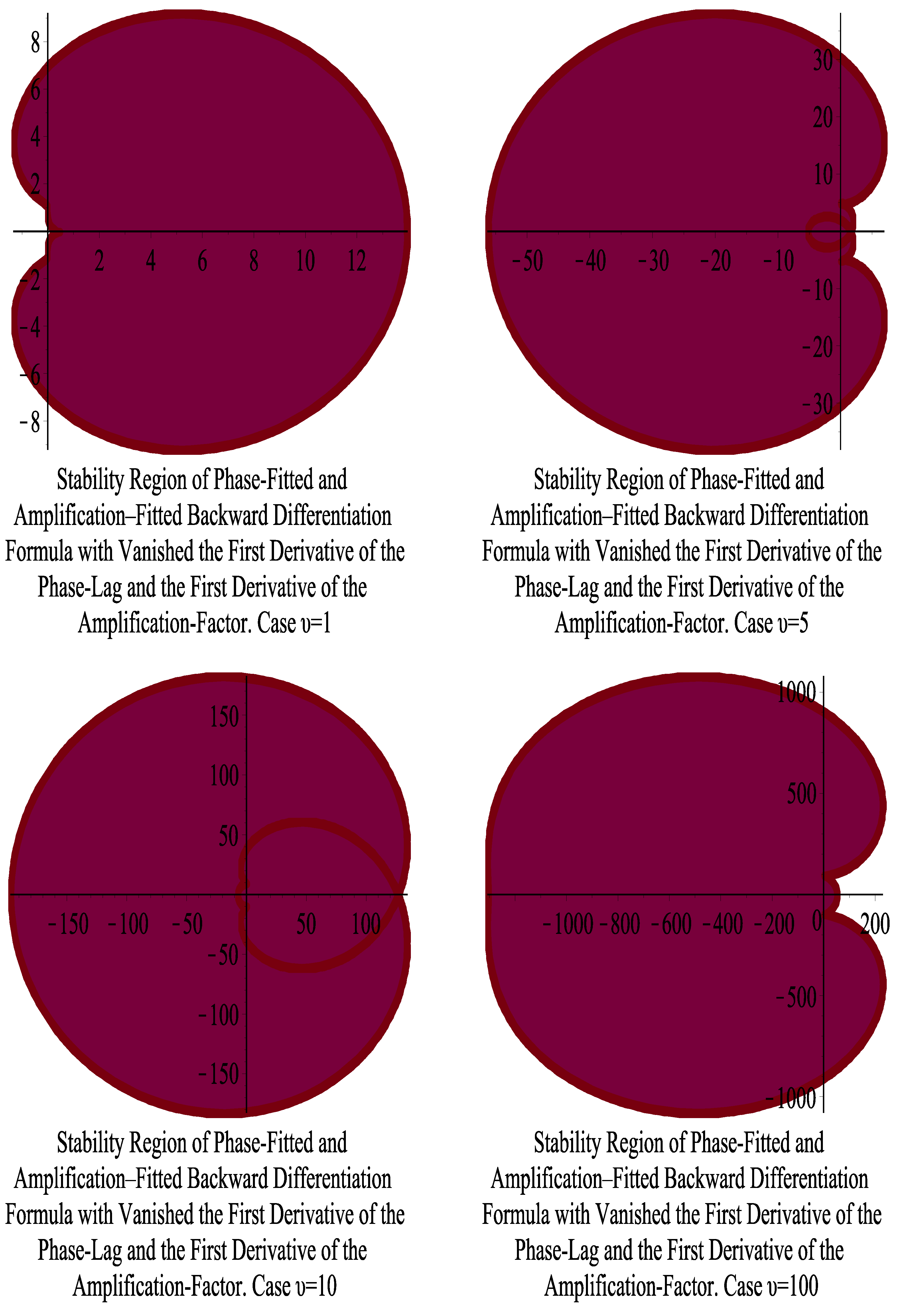

- Section 10 studies the phase–fitted and amplification–fitted backward differentiation formula of fourth algebraic order with the elimination of the first derivative of the phase–lag and the first derivative of the amplification–factor.

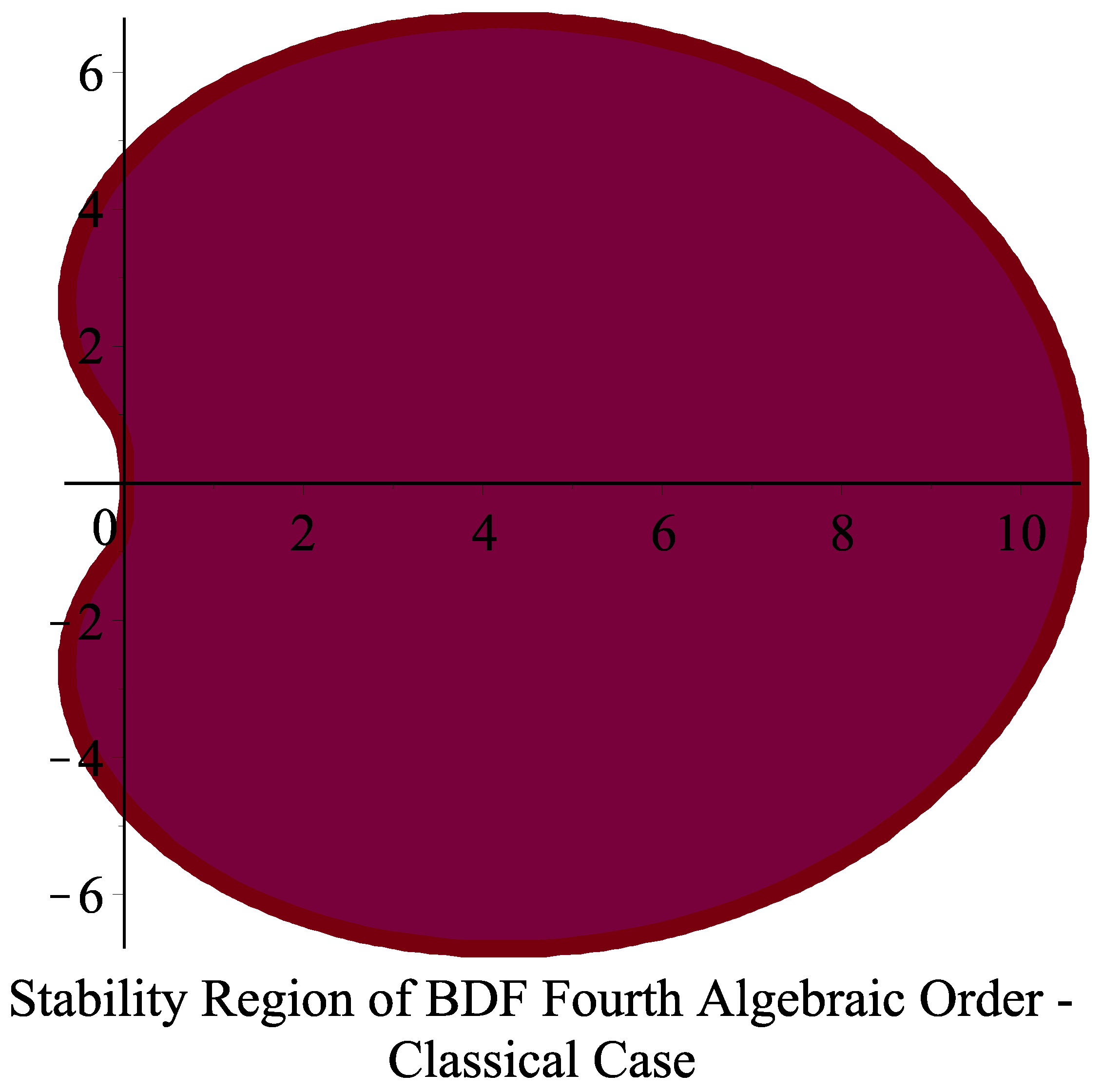

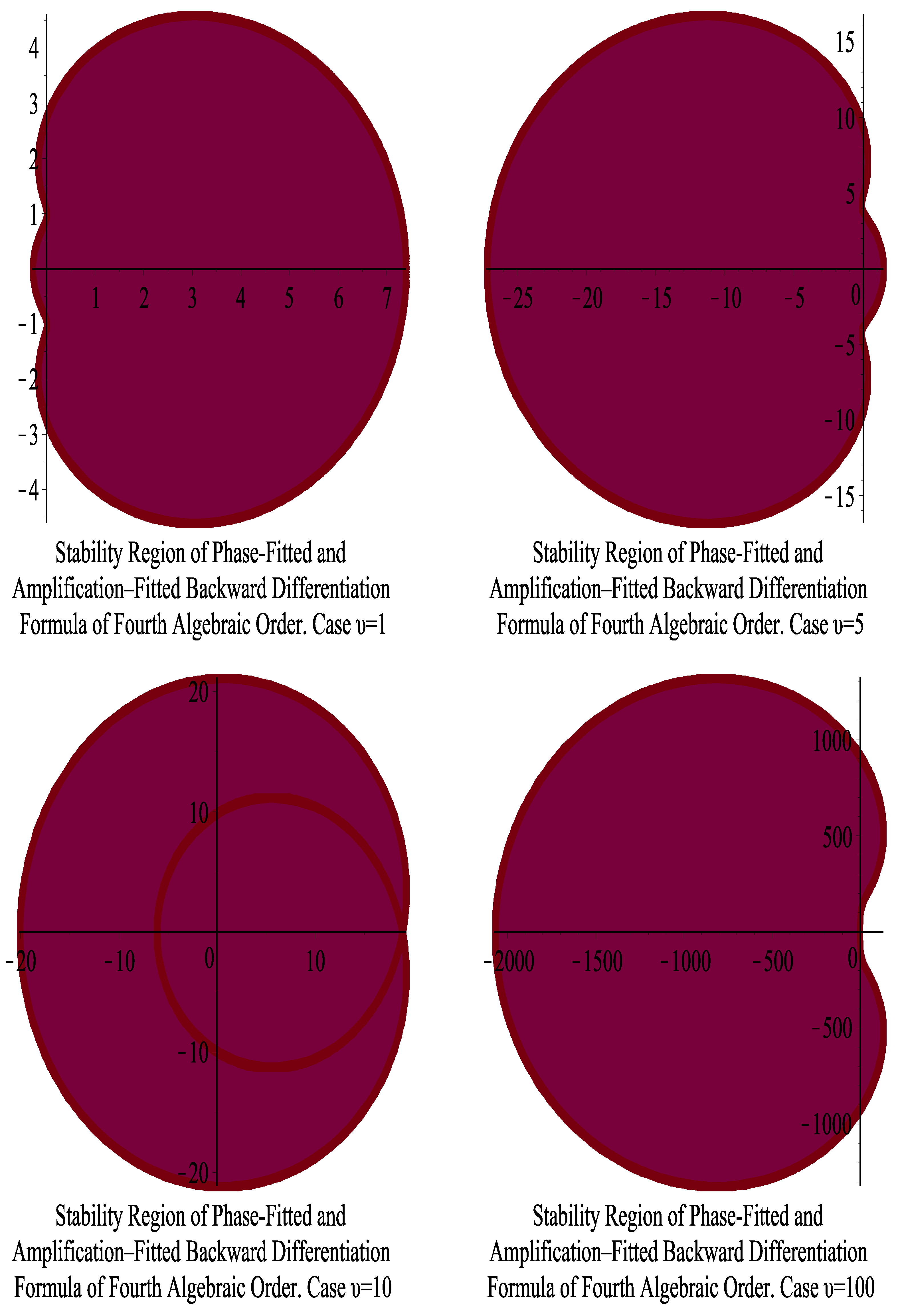

- In Section 11, we examine the stability of the newly developed algorithms.

- In Section 12, we present several numerical examples and comment on the numerical results.

- Section 13 presents the conclusions.

2. The Theory

2.1. Direct Formulae for Computation of the Phase–Lag and Amplification–Factor of Backward Differentiation Formulae

2.1.1. The Real Part

2.1.2. The Imaginary Part
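The direct formulae of Sections 2.1.1 and 2.1.2 are not reproduced in this text. The sketch below offers a hedged numerical cross-check. It assumes the usual conventions for first-order methods:

- The formula is applied to the test equation y′ = iωy with v = ωh.
- ζ is the principal root of ρ(ζ) − iv σ(ζ) = 0.
- The phase–lag is taken as v − arg ζ, computed from the real and imaginary parts of ζ.
- The amplification error is taken as 1 − |ζ|.

The coefficient lists, these sign conventions and the function names are assumptions for illustration. They are not the paper's Formulae.

```python
import cmath
import math

# Classical BDF coefficients a_0..a_k (a_j multiplies y_{n+j});
# the right-hand side is h * f_{n+k}, i.e. beta_k = 1 (assumed normalisation).
BDF2 = [1 / 2, -2.0, 3 / 2]
BDF4 = [1 / 4, -4 / 3, 3.0, -4.0, 25 / 12]


def principal_root(a, v, max_iter=50):
    """Root of rho(z) - i*v*z**k = 0 that tends to exp(i*v) as v -> 0.

    Newton's method is started from exp(i*v), the exact one-step growth
    factor of y' = i*omega*y, so for small v it converges to the principal root."""
    k = len(a) - 1
    z = cmath.exp(1j * v)
    for _ in range(max_iter):
        p = sum(aj * z**j for j, aj in enumerate(a)) - 1j * v * z**k
        dp = sum(j * aj * z**(j - 1) for j, aj in enumerate(a) if j > 0)
        dp -= 1j * v * k * z**(k - 1)
        step = p / dp
        z -= step
        if abs(step) < 1e-16:
            break
    return z


def phase_lag_and_amplification(a, v):
    """Assumed definitions: phase-lag = v - arg(zeta), amplification error = 1 - |zeta|."""
    z = principal_root(a, v)
    theta = math.atan2(z.imag, z.real)  # argument from the imaginary and real parts
    return v - theta, 1.0 - abs(z)


for v in (0.1, 0.2):
    phi, amp = phase_lag_and_amplification(BDF4, v)
    print(f"v = {v}: phase-lag = {phi:.3e}, amplification error = {amp:.3e}")
```

Under these assumptions, the phase–lag of the classical four-step BDF is proportional to v^5 for small v. A Taylor expansion of the direct formulae should reproduce this behaviour.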

2.2. The Role of the Derivatives of the Phase–Lag and Derivatives of the Amplification–Factor on the Efficiency of BDF Algorithms

- The formulae obtained in the previous step are differentiated with respect to .

- The derivatives of the phase–lag and of the amplification–factor obtained in this way are then set equal to zero.
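The two steps listed above (differentiate, then require the derivative to vanish) can be illustrated numerically. The sketch below makes the following assumptions:

- The phase–lag is v − arg ζ and the amplification error is 1 − |ζ|.
- ζ is the principal root of ρ(ζ) − iv σ(ζ) = 0 for the test equation y′ = iωy, with v = ωh.
- The first derivatives with respect to v are approximated by a central difference.

For the classical four-step BDF (coefficients assumed below), the derivative of the phase–lag does not vanish. A method with vanishing first derivative would need an additional free coefficient, chosen so that this quantity is zero at the fitted frequency. The function names are hypothetical.

```python
import cmath

# Classical four-step BDF: a_0..a_4 multiply y_n..y_{n+4}; right-hand side h*f_{n+4}.
BDF4 = [1 / 4, -4 / 3, 3.0, -4.0, 25 / 12]


def principal_root(a, v, max_iter=50):
    """Newton iteration on rho(z) - i*v*z**k = 0, started at exp(i*v)."""
    k = len(a) - 1
    z = cmath.exp(1j * v)
    for _ in range(max_iter):
        p = sum(aj * z**j for j, aj in enumerate(a)) - 1j * v * z**k
        dp = sum(j * aj * z**(j - 1) for j, aj in enumerate(a) if j > 0)
        dp -= 1j * v * k * z**(k - 1)
        step = p / dp
        z -= step
        if abs(step) < 1e-16:
            break
    return z


def phase_lag(a, v):
    """Assumed definition: phase-lag = v - arg(zeta)."""
    return v - cmath.phase(principal_root(a, v))


def amplification_error(a, v):
    """Assumed definition: amplification error = 1 - |zeta|."""
    return 1.0 - abs(principal_root(a, v))


def d_dv(f, v, dv=1e-4):
    """Second-order central difference approximation of df/dv."""
    return (f(v + dv) - f(v - dv)) / (2.0 * dv)


for v in (0.02, 0.04):
    dphi = d_dv(lambda x: phase_lag(BDF4, x), v)
    damp = d_dv(lambda x: amplification_error(BDF4, x), v)
    print(f"v = {v}: d(phase-lag)/dv = {dphi:.3e}, d(amplification)/dv = {damp:.3e}")
```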

3. Minimizing or Eliminating the Phase–Lag and the Amplification–Factor and the Derivatives of the Phase–Lag and the Amplification–Factor

- Minimization of the phase–lag

- Eliminating the phase–lag

- Eliminating the amplification–factor

- Eliminating the derivative of the phase–lag

- Eliminating the derivative of the amplification–factor

- Eliminating the derivatives of the phase–lag and the amplification–factor

4. Backward Differentiation Formulae (BDF)
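For reference, the classical k-step backward differentiation formula Σ_{j=0}^{k} a_j y_{n+j} = h f_{n+k} is obtained by differentiating, at t_{n+k}, the polynomial that interpolates y_n, …, y_{n+k}. The sketch below generates its coefficients exactly with rational arithmetic. Both the normalisation (coefficient of h f_{n+k} equal to 1) and the function name are assumptions, and they need not match the normalisation used in this section.

```python
from fractions import Fraction


def bdf_coefficients(k):
    """Coefficients a_0..a_k of the k-step BDF  sum_j a_j y_{n+j} = h f_{n+k}.

    a_j is the derivative, at node k, of the j-th Lagrange basis polynomial
    through the equally spaced nodes 0, 1, ..., k (unit step)."""
    coeffs = []
    for j in range(k + 1):
        if j == k:
            # l_k'(k) = sum over the other nodes m of 1 / (k - m)
            a = sum(Fraction(1, k - m) for m in range(k))
        else:
            # l_j'(k) = 1/(j - k) * prod_{m != j, k} (k - m) / (j - m)
            a = Fraction(1, j - k)
            for m in range(k + 1):
                if m not in (j, k):
                    a *= Fraction(k - m, j - m)
        coeffs.append(a)
    return coeffs


print(bdf_coefficients(4))
# [Fraction(1, 4), Fraction(-4, 3), Fraction(3, 1), Fraction(-4, 1), Fraction(25, 12)]
```

With k = 2, the same routine returns 1/2, −2, 3/2, which are the coefficients of the two-step BDF.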

5. Amplification–Fitted Backward Differentiation Formula of Fourth Algebraic Order with Phase–Lag of Order Four

5.1. An Approach to Reducing Phase–Lag

- Eliminate the amplification–factor.

- Using the coefficient obtained in the previous step, compute the phase–lag.

- Expand the phase–lag obtained in the previous step in a Taylor series.

- Determine the system of equations that minimizes the phase–lag.

- Solve this system to obtain the new coefficients.
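The Taylor-series step can be cross-checked numerically. Assume the convention that a phase–lag φ(v) = c v^{q+1} + O(v^{q+2}) is of order q. Then the ratio log2(φ(v)/φ(v/2)) approaches q + 1 as v → 0.

The sketch below applies this check to the classical two-step and four-step BDF, under the assumed definition φ(v) = v − arg ζ. Here ζ is the principal root of ρ(ζ) − iv σ(ζ) = 0 for y′ = iωy, with v = ωh. It is an illustration only, not the paper's minimisation procedure, and the function names are hypothetical.

```python
import cmath
import math

# Classical BDF coefficients a_0..a_k (a_j multiplies y_{n+j}); right-hand side h*f_{n+k}.
BDF2 = [1 / 2, -2.0, 3 / 2]
BDF4 = [1 / 4, -4 / 3, 3.0, -4.0, 25 / 12]


def principal_root(a, v, max_iter=50):
    """Newton iteration on rho(z) - i*v*z**k = 0, started at exp(i*v)."""
    k = len(a) - 1
    z = cmath.exp(1j * v)
    for _ in range(max_iter):
        p = sum(aj * z**j for j, aj in enumerate(a)) - 1j * v * z**k
        dp = sum(j * aj * z**(j - 1) for j, aj in enumerate(a) if j > 0)
        dp -= 1j * v * k * z**(k - 1)
        step = p / dp
        z -= step
        if abs(step) < 1e-16:
            break
    return z


def phase_lag(a, v):
    """Assumed definition: phase-lag = v - arg(zeta)."""
    return v - cmath.phase(principal_root(a, v))


def observed_exponent(a, v):
    """Estimate the exponent q + 1 in phase_lag ~ c * v**(q + 1) from values at v and v/2."""
    return math.log2(phase_lag(a, v) / phase_lag(a, v / 2))


for name, a in (("BDF2", BDF2), ("BDF4", BDF4)):
    p = observed_exponent(a, 0.02)
    print(f"{name}: exponent {p:.3f}, i.e. phase-lag order about {p - 1:.3f}")
```

Under the assumed convention, the classical four-step BDF has phase–lag of order four and the classical two-step BDF has phase–lag of order two.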

5.2. Eliminating the Amplification Factor
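The coefficient determination of this section is not reproduced here. To illustrate the idea of eliminating the amplification–factor, the sketch below uses a hypothetical one-parameter family of two-step formulae:

c y_{n+2} + (1 − 2c) y_{n+1} + (c − 1) y_n = h f_{n+2}

This family is first order for every c and reduces to the classical BDF2 at c = 3/2. The sketch chooses c by a secant iteration so that the principal root satisfies |ζ| = 1 at a prescribed v. It assumes the amplification error 1 − |ζ| for the test equation y′ = iωy, with v = ωh.

The family, the definitions and the function names are assumptions for illustration. The fitted formula of Section 5 is of fourth algebraic order and is not reproduced here.

```python
import cmath


def family(c):
    """Hypothetical family: a_0, a_1, a_2 of a_0 y_n + a_1 y_{n+1} + a_2 y_{n+2} = h f_{n+2}.
    Consistent (first order) for every c; the classical BDF2 at c = 3/2."""
    return [c - 1.0, 1.0 - 2.0 * c, c]


def principal_root(a, v, max_iter=50):
    """Newton iteration on rho(z) - i*v*z**k = 0, started at exp(i*v)."""
    k = len(a) - 1
    z = cmath.exp(1j * v)
    for _ in range(max_iter):
        p = sum(aj * z**j for j, aj in enumerate(a)) - 1j * v * z**k
        dp = sum(j * aj * z**(j - 1) for j, aj in enumerate(a) if j > 0)
        dp -= 1j * v * k * z**(k - 1)
        step = p / dp
        z -= step
        if abs(step) < 1e-16:
            break
    return z


def amplification_error(c, v):
    """Assumed definition: 1 - |zeta| for the principal root of the family member c."""
    return 1.0 - abs(principal_root(family(c), v))


def amplification_fitted_c(v, c0=1.5, c1=1.51, max_iter=50):
    """Secant iteration for the c that makes the amplification error vanish at v."""
    f0, f1 = amplification_error(c0, v), amplification_error(c1, v)
    for _ in range(max_iter):
        if f1 == f0 or abs(f1) < 1e-15:
            break
        c0, c1 = c1, c1 - f1 * (c1 - c0) / (f1 - f0)
        f0, f1 = f1, amplification_error(c1, v)
    return c1


v = 0.1
c = amplification_fitted_c(v)
print(f"fitted c = {c:.8f}, amplification error = {amplification_error(c, v):.1e}")
print(f"BDF2 (c = 1.5) amplification error = {amplification_error(1.5, v):.3e}")
```

For small v, the fitted value stays close to the BDF2 value 3/2; a short expansion gives c ≈ 3/2 + v²/4. The fitted formula therefore reduces to the classical one as v → 0.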

6. Phase–Fitted and Amplification–Fitted Backward Differentiation Formula of Algebraic Order Four with the Elimination of the First Derivative of the Amplification–Factor

Methodology for the Vanishing of the First Derivative of the Amplification–Factor

- We calculate the first derivative of the above formula, i.e., .

- We require the derivative obtained in the previous step to be equal to zero, i.e., .

7. Phase–Fitted and Amplification–Fitted Backward Differentiation Formula of Algebraic Order Four with the Elimination of the First Derivative of the Phase–Lag

Methodology for the Vanishing of the First Derivative of the Phase–Lag

- We calculate the first derivative of the above formula, i.e., .

- We require the derivative obtained in the previous step to be equal to zero, i.e., .

8. Amplification–Fitted Backward Differentiation Formula of Second Algebraic Order with Phase–Lag of Order Six

Eliminating the Amplification Factor

9. Phase–Fitted and Amplification–Fitted Backward Differentiation Formula of Algebraic Order Four

10. Phase–Fitted and Amplification–Fitted Backward Differentiation Formula of Algebraic Order Four with the Elimination of the First Derivative of the Phase–Lag and the First Derivative of the Amplification–Factor

Methodology for the Vanishing of the First Derivative of the Phase–Lag and the First Derivative of the Amplification–Factor

- We apply Formula (18) to method (78) and thus obtain the formula of the phase–lag, say .

- We calculate the first derivative of the above formula, i.e., .

- We apply Formula (20) to method (78) and thus obtain the formula of the amplification–factor, say .

- We calculate the first derivative of the above formula, i.e., .

- We require the derivatives obtained in the previous steps to be equal to zero, i.e., , and .

11. Stability Analysis
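The stability analysis itself is not reproduced in this text. One common numerical check applies a formula to the scalar test equation y′ = λy and tests whether every root of ρ(ζ) − z σ(ζ) = 0, with z = hλ, lies in the closed unit disc. The sketch below implements this check under the following assumptions:

- Only the root moduli are checked. A rigorous test also requires roots of modulus one to be simple.
- The classical two-step and four-step BDF are used as inputs.
- The roots are found with the Durand–Kerner iteration so that the sketch needs no dependencies.

The function names are hypothetical. The paper's own stability analysis of the frequency-dependent formulae may use a different criterion.

```python
# Classical BDF coefficients a_0..a_k (a_j multiplies y_{n+j}); sigma(zeta) = zeta**k.
BDF2 = [1 / 2, -2.0, 3 / 2]
BDF4 = [1 / 4, -4 / 3, 3.0, -4.0, 25 / 12]


def poly_roots(coeffs, max_iter=500, tol=1e-14):
    """All roots of c_0 + c_1 z + ... + c_n z^n by the Durand-Kerner iteration."""
    n = len(coeffs) - 1
    monic = [c / coeffs[-1] for c in coeffs]

    def p(z):
        return sum(c * z**j for j, c in enumerate(monic))

    zs = [(0.4 + 0.9j) ** j for j in range(n)]  # standard distinct starting values
    for _ in range(max_iter):
        new = []
        for i, zi in enumerate(zs):
            denom = 1.0 + 0j
            for j, zj in enumerate(zs):
                if j != i:
                    denom *= zi - zj
            new.append(zi - p(zi) / denom)
        converged = max(abs(x - y) for x, y in zip(new, zs)) < tol
        zs = new
        if converged:
            break
    return zs


def is_absolutely_stable(a, z, margin=1e-9):
    """True if every root of rho(zeta) - z*zeta**k satisfies |zeta| <= 1 + margin."""
    coeffs = [complex(c) for c in a]
    coeffs[-1] -= z  # sigma(zeta) = zeta**k for a BDF normalised with beta_k = 1
    return max(abs(r) for r in poly_roots(coeffs)) <= 1.0 + margin


for z in (-1.0, 0.1j, 0.5, 10.0):
    print(z, is_absolutely_stable(BDF2, z), is_absolutely_stable(BDF4, z))
```

NumPy's `numpy.roots` could replace `poly_roots`; the hand-written iteration only keeps the example self-contained.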

12. Numerical Results

12.1. Problem of Stiefel and Bettis

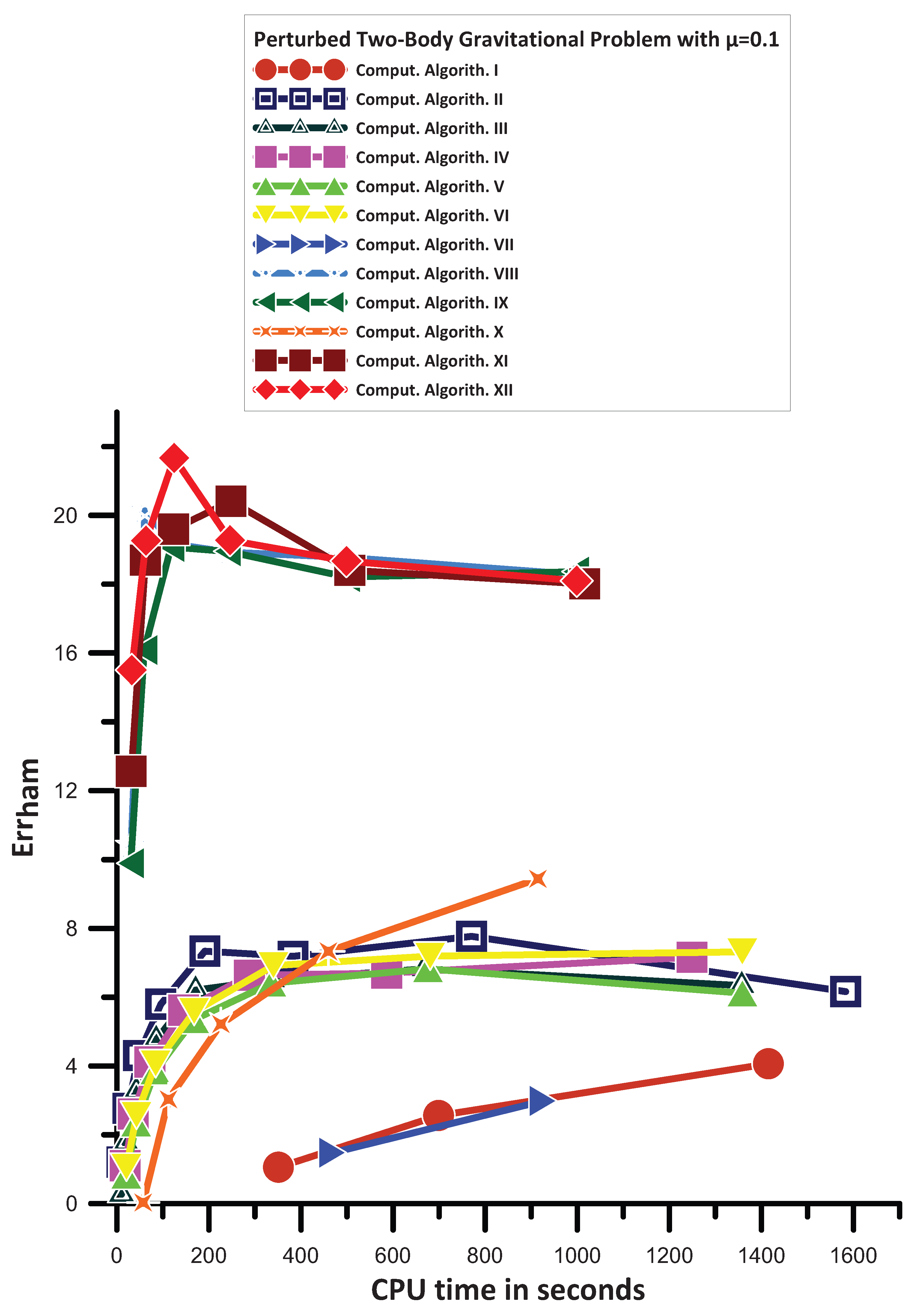

- The Backward Differentiation Formula presented in Section 4 (Classical Case), referred to as Comput. Algorith. I

- The Runge–Kutta Dormand and Prince fourth-order method [14], referred to as Comput. Algorith. II

- The Runge–Kutta Dormand and Prince fifth-order method [14], referred to as Comput. Algorith. III

- The Runge–Kutta Fehlberg fourth-order method [34], referred to as Comput. Algorith. IV

- The Runge–Kutta Fehlberg fifth-order method [34], referred to as Comput. Algorith. V

- The Runge–Kutta Cash and Karp fifth-order method [35], referred to as Comput. Algorith. VI

- The Amplification–Fitted Backward Differentiation Formula with Phase–Lag of order 4, developed in Section 5, referred to as Comput. Algorith. VII

- The Phase–Fitted and Amplification–Fitted Backward Differentiation Formula with vanishing first derivative of the amplification–factor, developed in Section 6, referred to as Comput. Algorith. VIII

- The Phase–Fitted and Amplification–Fitted Backward Differentiation Formula with vanishing first derivative of the phase–lag, developed in Section 7, referred to as Comput. Algorith. IX

- The Amplification–Fitted Backward Differentiation Formula with Phase–Lag of order 6, developed in Section 8, referred to as Comput. Algorith. X

- The Phase–Fitted and Amplification–Fitted Backward Differentiation Formula developed in Section 9, referred to as Comput. Algorith. XI

- The Phase–Fitted and Amplification–Fitted Backward Differentiation Formula with vanishing first derivatives of the phase–lag and of the amplification–factor, developed in Section 10, referred to as Comput. Algorith. XII

- Comput. Algorith. X is more efficient than Comput. Algorith. III

- Comput. Algorith. I is more efficient than Comput. Algorith. V

- Comput. Algorith. VI generally has the same behavior as Comput. Algorith. I

- Comput. Algorith. V is more efficient than Comput. Algorith. I

- Comput. Algorith. IV generally has the same behavior as Comput. Algorith. V

- Comput. Algorith. II is more efficient than Comput. Algorith. V

- Comput. Algorith. VII is more efficient than Comput. Algorith. II

- Comput. Algorith. VIII gives mixed results. For large step sizes it is more efficient than Comput. Algorith. VII. For small step sizes it is less efficient than Comput. Algorith. VII.

- Comput. Algorith. XI generally has the same behavior as Comput. Algorith. IX

- Comput. Algorith. XI is more efficient than Comput. Algorith. VIII

- Finally, Comput. Algorith. XII is the most efficient one.

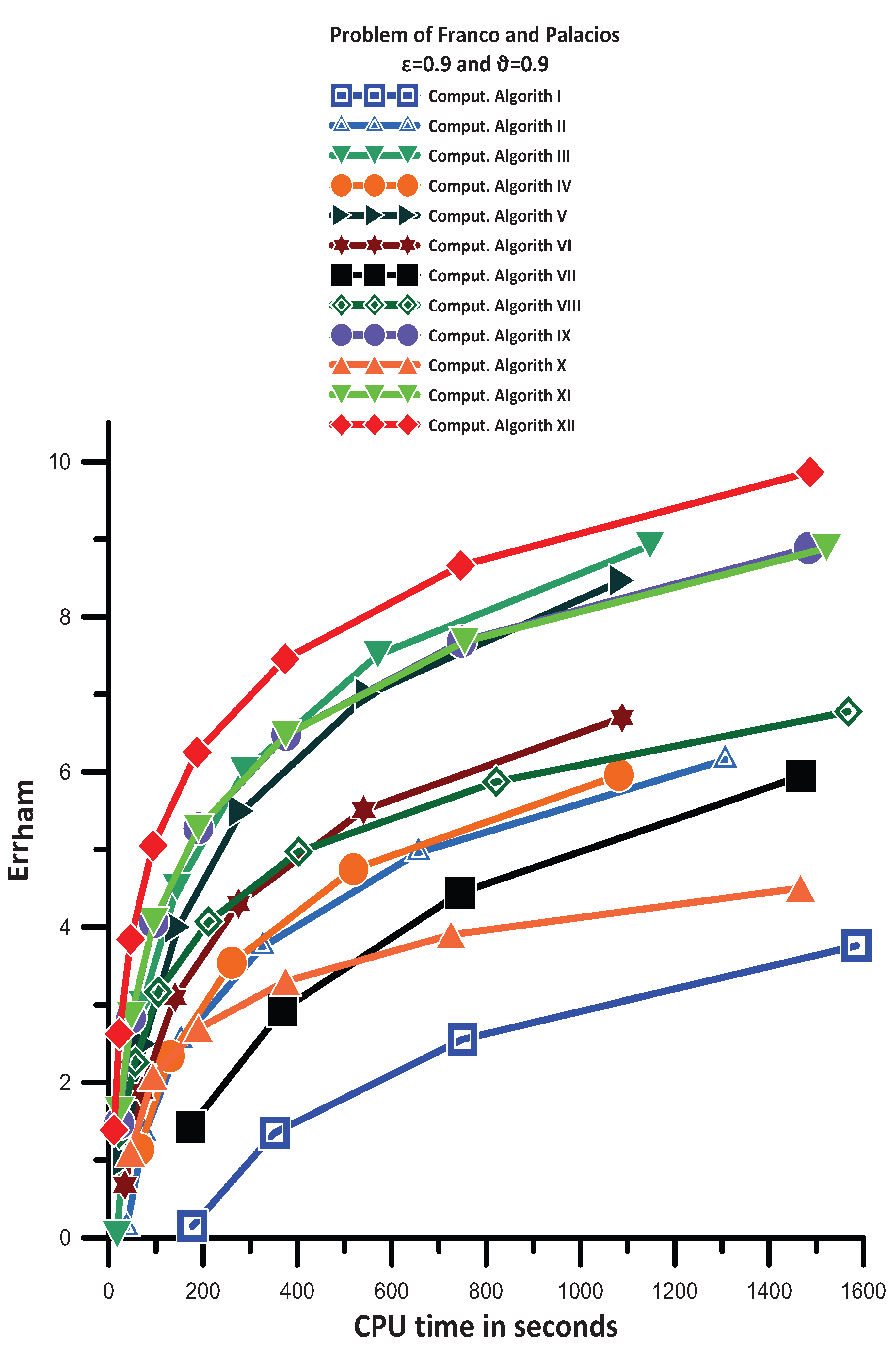

12.2. Problem of Franco and Palacios [32]

- Comput. Algorith. X is more efficient than Comput. Algorith. I.

- Comput. Algorith. VII gives mixed results. For small step sizes it is more efficient than Comput. Algorith. X. For large step sizes it is less efficient than Comput. Algorith. X.

- Comput. Algorith. II is more efficient than Comput. Algorith. VII.

- Comput. Algorith. IV generally has the same behavior as Comput. Algorith. II.

- Comput. Algorith. VIII is more efficient than Comput. Algorith. IV.

- Comput. Algorith. VI gives mixed results. For small step sizes it is more efficient than Comput. Algorith. VIII. For large step sizes it is less efficient than Comput. Algorith. VIII.

- Comput. Algorith. V is more efficient than Comput. Algorith. VI and Comput. Algorith. VIII.

- Comput. Algorith. XI is more efficient than Comput. Algorith. V, except for very small step sizes, where it is less efficient than Comput. Algorith. V.

- Comput. Algorith. IX generally has the same behavior as Comput. Algorith. XI.

- Comput. Algorith. III generally has the same behavior as Comput. Algorith. XI, although for small step sizes Comput. Algorith. XI is less efficient than Comput. Algorith. III.

- Finally, Comput. Algorith. XII is the most efficient one.

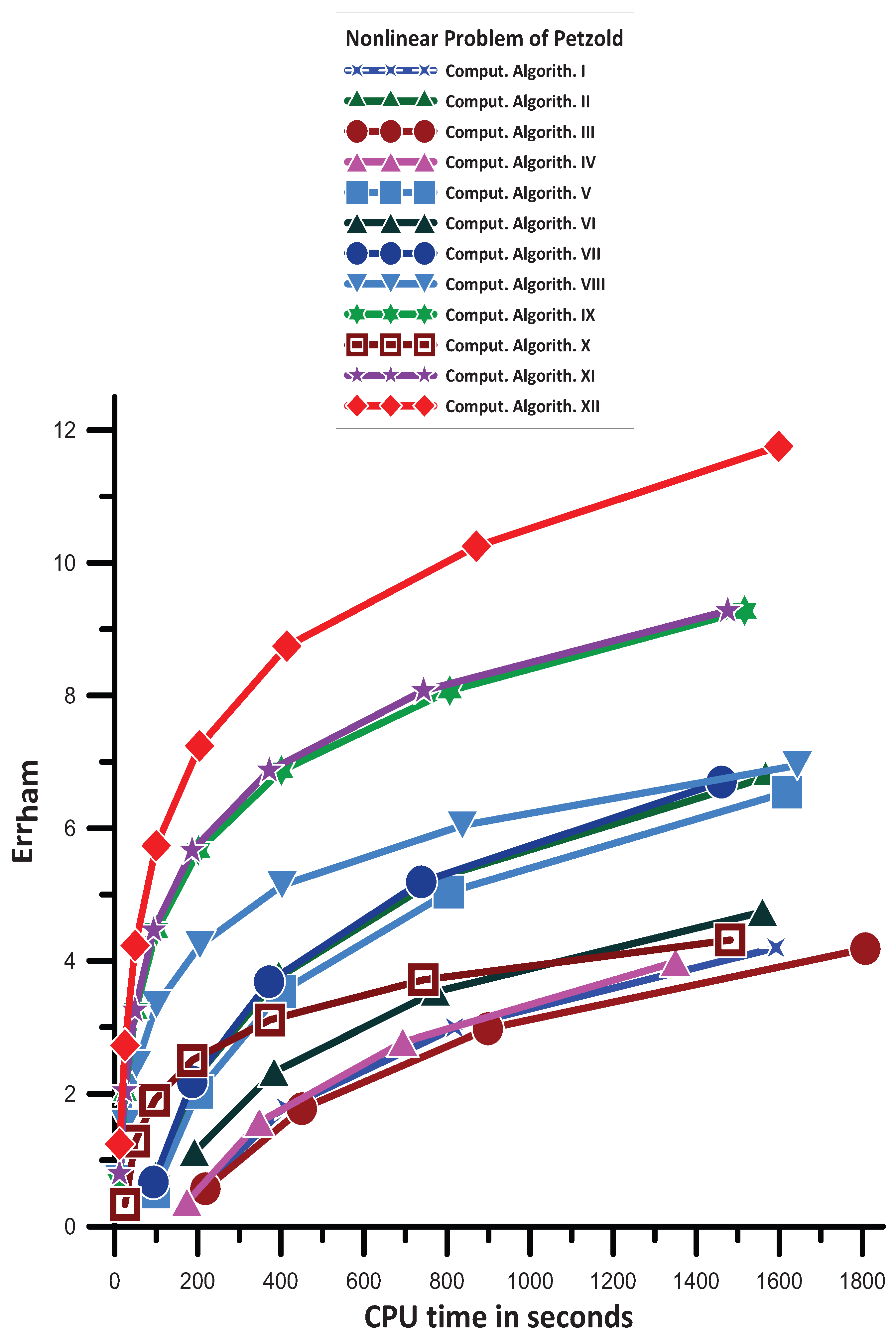

12.3. Nonlinear Problem of Petzold [36]

- Comput. Algorith. I and Comput. Algorith. IV are more efficient than Comput. Algorith. III.

- Comput. Algorith. IV generally has the same behavior as Comput. Algorith. I.

- Comput. Algorith. VI is more efficient than Comput. Algorith. IV.

- Comput. Algorith. X gives mixed results. For large step sizes it is more efficient than Comput. Algorith. V, Comput. Algorith. VI, and Comput. Algorith. VII. For medium step sizes it is more efficient than Comput. Algorith. VI. For very small step sizes it is less efficient than Comput. Algorith. VI.

- Comput. Algorith. V gives mixed results. For very large step sizes it is less efficient than Comput. Algorith. X. For small step sizes it is more efficient than Comput. Algorith. X.

- Comput. Algorith. II generally has the same behavior as Comput. Algorith. VII.

- Comput. Algorith. VII is more efficient than Comput. Algorith. V.

- Comput. Algorith. VIII is more efficient than Comput. Algorith. VII.

- Comput. Algorith. IX is more efficient than Comput. Algorith. VIII.

- Comput. Algorith. XI generally has the same behavior as Comput. Algorith. IX.

- Finally, Comput. Algorith. XII is the most efficient one.

12.4. A Nonlinear Orbital Problem [37]

- Comput. Algorith. I, Comput. Algorith. II, Comput. Algorith. III, Comput. Algorith. IV, Comput. Algorith. V, Comput. Algorith. VI, Comput. Algorith. VII, and Comput. Algorith. X are not convergent.

- Comput. Algorith. VIII, Comput. Algorith. IX, Comput. Algorith. XI, and Comput. Algorith. XII have approximately the same behavior and are very efficient.

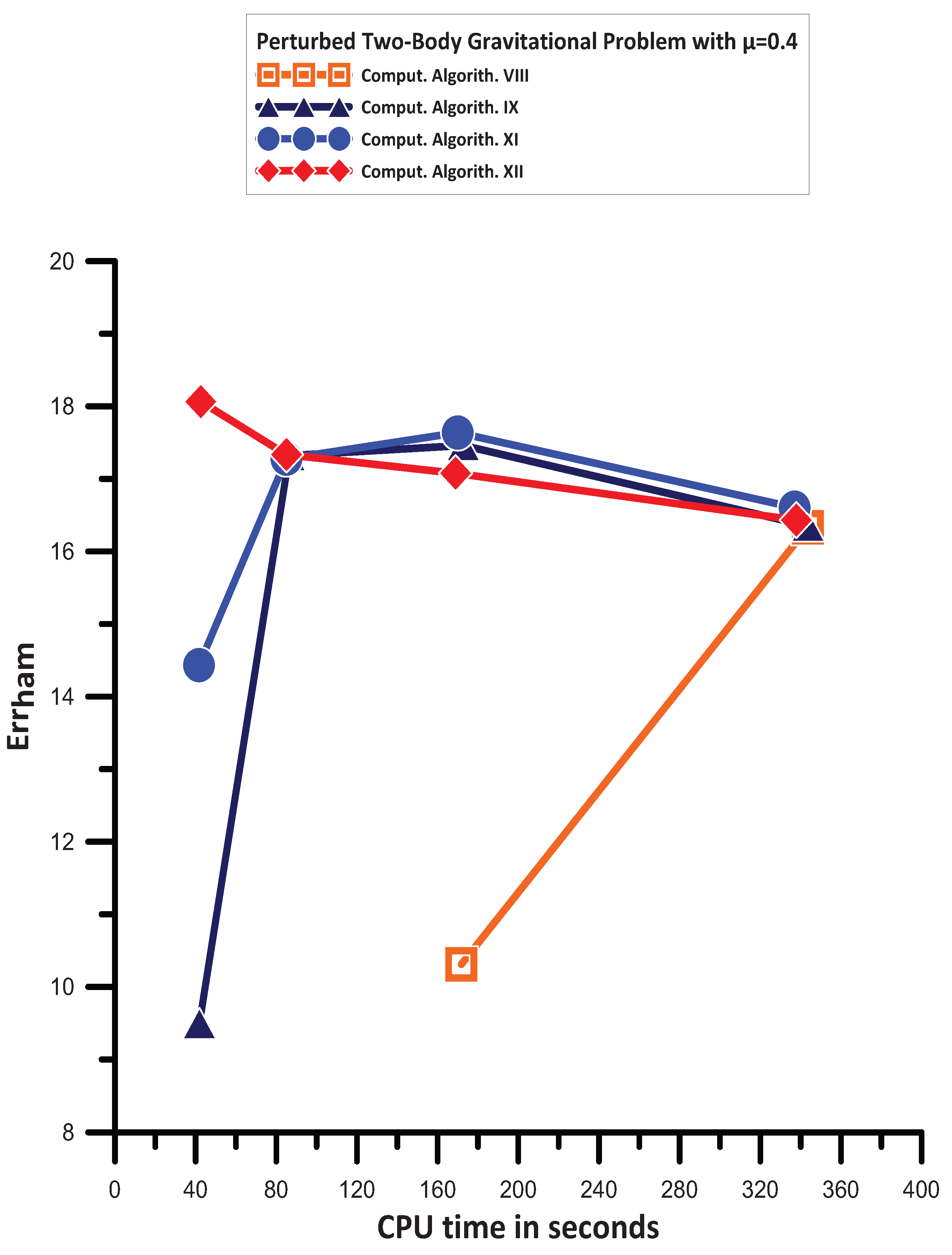

12.5. Perturbed Two–Body Gravitational Problem

12.5.1. The Case of

- Comput. Algorith. I generally has the same behavior as Comput. Algorith. VII. We note, however, that Comput. Algorith. I converges to the solution faster than Comput. Algorith. VII.

- Comput. Algorith. X gives mixed results. For large step sizes it is less efficient than Comput. Algorith. II, Comput. Algorith. III, Comput. Algorith. IV, Comput. Algorith. V, Comput. Algorith. VI, Comput. Algorith. VIII, Comput. Algorith. IX, Comput. Algorith. XI, and Comput. Algorith. XII, and more efficient than Comput. Algorith. I and Comput. Algorith. VII. For medium step sizes it has approximately the same accuracy as Comput. Algorith. II, Comput. Algorith. III, Comput. Algorith. IV, Comput. Algorith. V, and Comput. Algorith. VI. For small step sizes it is more efficient than all the other algorithms except Comput. Algorith. VIII, Comput. Algorith. IX, Comput. Algorith. XI, and Comput. Algorith. XII.

- Comput. Algorith. V is more efficient than Comput. Algorith. I, and Comput. Algorith. VII.

- Comput. Algorith. IV is more efficient than Comput. Algorith. V

- Comput. Algorith. III gives mixed results. For large step sizes it is more efficient than Comput. Algorith. V. For small step sizes it generally has the same behavior as Comput. Algorith. V.

- Comput. Algorith. II is more efficient than Comput. Algorith. III.

- Comput. Algorith. IX gives mixed results. For very large step sizes it generally has the same behavior as Comput. Algorith. XI. For medium step sizes it is less efficient than Comput. Algorith. XI. For small step sizes it generally has the same behavior as Comput. Algorith. XI.

- Comput. Algorith. VIII generally has the same behavior as Comput. Algorith. IX.

- Comput. Algorith. XII is the most efficient one for large step sizes. For medium step sizes it is less efficient than Comput. Algorith. XI. For small step sizes it generally has the same behavior as Comput. Algorith. VIII, Comput. Algorith. IX, and Comput. Algorith. XI.

12.5.2. The Case of

- Comput. Algorith. I, Comput. Algorith. II, Comput. Algorith. III, Comput. Algorith. IV, Comput. Algorith. V, Comput. Algorith. VI, Comput. Algorith. VII, and Comput. Algorith. X are not convergent.

- Comput. Algorith. IX is more efficient than Comput. Algorith. VIII.

- Comput. Algorith. XI is more efficient than Comput. Algorith. IX.

- Comput. Algorith. XII is the most efficient one for large step sizes. For medium step sizes it is less efficient than Comput. Algorith. IX and Comput. Algorith. XI. For small step sizes it generally has the same behavior as Comput. Algorith. VIII, Comput. Algorith. IX, and Comput. Algorith. XI.

- The algorithm described in Section 10 is based on the elimination of the phase–lag and the amplification–factor (phase–fitted and amplification–fitted method) together with the vanishing of their first derivatives.

- The algorithm described in Section 9 is based on the elimination of the phase–lag and the amplification–factor (phase–fitted and amplification–fitted method).

- The algorithm described in Section 7 is based on the simultaneous elimination of the phase–lag, the amplification–factor (phase–fitted and amplification–fitted method), and the first derivative of the phase–lag.

- The algorithm described in Section 6 is based on the simultaneous elimination of the phase–lag, the amplification–factor (phase–fitted and amplification–fitted method), and the first derivative of the amplification–factor.

12.6. High–Order Ordinary Differential Equations and Partial Differential Equations

13. Conclusions

- Methodology for the elimination of the amplification–factor.

- Methodology for the elimination of the amplification–factor and minimization of the phase–lag.

- Methodology for the elimination of the phase–lag and elimination of the amplification–factor.

- Methodology for the elimination of the phase–lag and elimination of the amplification–factor together with elimination of the derivatives of the phase–lag.

- Methodology for the elimination of the phase–lag and elimination of the amplification–factor together with elimination of the derivatives of the amplification–factor.

- Methodology for the elimination of the phase–lag and elimination of the amplification–factor together with the elimination of the derivatives of the phase–lag and the derivatives of the amplification–factor.

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Direct Formulae for the Calculation of the Derivatives of the Phase–Lag and the Amplification–Factor

Appendix A.1. Direct Formula for the Derivative of the Phase–Lag

Appendix A.2. Direct Formula for the Derivative of the Amplification–Factor

Appendix B. Formulae , i = 8–12

Appendix C. Formulae , , , and

Appendix D. Formula Par22

Appendix E. Formulae Par23, Par24, Par25, and Par26

Appendix F. Formulae Parq, q = 27–31

Appendix G. Formulae Pari, i = 32–34

References

- Landau, L.D.; Lifshitz, E.M. Quantum Mechanics; Pergamon: New York, NY, USA, 1965.

- Prigogine, I.; Rice, S. (Eds.) Advances in Chemical Physics; New Methods in Computational Quantum Mechanics; John Wiley & Sons: Berlin/Heidelberg, Germany, 1997; Volume 93.

- Simos, T.E. Numerical Solution of Ordinary Differential Equations with Periodical Solution. Ph.D. Thesis, National Technical University of Athens, Athens, Greece, 1990. (In Greek).

- Ixaru, L.G. Numerical Methods for Differential Equations and Applications; Reidel: Dordrecht, The Netherlands; Boston, MA, USA; Lancaster, UK, 1984.

- Quinlan, G.D.; Tremaine, S. Symmetric multistep methods for the numerical integration of planetary orbits. Astron. J. 1990, 100, 1694–1700.

- Lyche, T. Chebyshevian multistep methods for ordinary differential equations. Numer. Math. 1972, 19, 65–75.

- Konguetsof, A.; Simos, T.E. On the construction of Exponentially-Fitted Methods for the Numerical Solution of the Schrödinger Equation. J. Comput. Meth. Sci. Eng. 2001, 1, 143–165.

- Dormand, J.R.; El-Mikkawy, M.E.A.; Prince, P.J. Families of Runge-Kutta-Nyström formulae. IMA J. Numer. Anal. 1987, 7, 235–250.

- Franco, J.M.; Gomez, I. Some procedures for the construction of high-order exponentially fitted Runge-Kutta-Nyström Methods of explicit type. Comput. Phys. Commun. 2013, 184, 1310–1321.

- Franco, J.M.; Gomez, I. Accuracy and linear Stability of RKN Methods for solving second-order stiff problems. Appl. Numer. Math. 2009, 59, 959–975.

- Chien, L.K.; Senu, N.; Ahmadian, A.; Ibrahim, S.N.I. Efficient Frequency-Dependent Coefficients of Explicit Improved Two-Derivative Runge-Kutta Type Methods for Solving Third-Order IVPs. Pertanika J. Sci. Technol. 2023, 31, 843–873.

- Zhai, W.J.; Fu, S.H.; Zhou, T.C.; Xiu, C. Exponentially-fitted and trigonometrically-fitted implicit RKN methods for solving y′′ = f (t, y). J. Appl. Math. Comput. 2022, 68, 1449–1466.

- Fang, Y.L.; Yang, Y.P.; You, X. An explicit trigonometrically fitted Runge-Kutta method for stiff and oscillatory problems with two frequencies. Int. J. Comput. Math. 2020, 97, 85–94.

- Dormand, J.R.; Prince, P.J. A family of embedded Runge-Kutta formulae. J. Comput. Appl. Math. 1980, 6, 19–26.

- Kalogiratou, Z.; Monovasilis, T.; Psihoyios, G.; Simos, T.E. Runge–Kutta type methods with special properties for the numerical integration of ordinary differential equations. Phys. Rep. 2014, 536, 75–146.

- Anastassi, Z.A.; Simos, T.E. Numerical multistep methods for the efficient solution of quantum mechanics and related problems. Phys. Rep. 2009, 482–483, 1–240.

- Chawla, M.M.; Rao, P.S. A Noumerov-Type Method with Minimal Phase-Lag for the Integration of 2nd Order Periodic Initial-Value Problems. J. Comput. Appl. Math. 1984, 11, 277–281.

- Ixaru, L.G.; Rizea, M. A Numerov-like scheme for the numerical solution of the Schrödinger equation in the deep continuum spectrum of energies. Comput. Phys. Commun. 1980, 19, 23–27.

- Raptis, A.D.; Allison, A.C. Exponential-fitting Methods for the numerical solution of the Schrödinger equation. Comput. Phys. Commun. 1978, 14, 1–5.

- Wang, Z.; Zhao, D.; Dai, Y.; Wu, D. An improved trigonometrically fitted P-stable Obrechkoff Method for periodic initial-value problems. Proc. R. Soc. A-Math. Phys. Eng. Sci. 2005, 461, 1639–1658.

- Wang, C.; Wang, Z. A P-stable eighteenth-order six-Step Method for periodic initial value problems. Int. J. Mod. Phys. C 2007, 18, 419–431.

- Shokri, A.; Khalsaraei, M.M. A new family of explicit linear two-step singularly P-stable Obrechkoff methods for the numerical solution of second-order IVPs. Appl. Math. Comput. 2020, 376, 125116.

- Abdulganiy, R.I.; Ramos, H.; Okunuga, S.A.; Majid, Z.A. A trigonometrically fitted intra-step block Falkner method for the direct integration of second-order delay differential equations with oscillatory solutions. Afr. Mat. 2023, 34, 36.

- Lee, K.C.; Senu, N.; Ahmadian, A.; Ibrahim, S.N.I. High-order exponentially fitted and trigonometrically fitted explicit two-derivative Runge-Kutta-type methods for solving third-order oscillatory problems. Math. Sci. 2022, 16, 281–297.

- Fang, Y.L.; Huang, T.; You, X.; Zheng, J.; Wang, B. Two-frequency trigonometrically-fitted and symmetric linear multi-step methods for second-order oscillators. J. Comput. Appl. Math. 2021, 392, 113312.

- Chun, C.; Neta, B. Trigonometrically-Fitted Methods: A Review. Mathematics 2019, 7, 1197.

- Simos, T.E. A New Methodology for the Development of Efficient Multistep Methods for First–Order IVPs with Oscillating Solutions. Mathematics 2024, 12, 504.

- Simos, T.E. Efficient Multistep Algorithms for First–Order IVPs with Oscillating Solutions: II Implicit and Predictor–Corrector Algorithms. Symmetry 2024, 16, 508.

- Simos, T.E. A new methodology for the development of efficient multistep methods for first–order IVPs with oscillating solutions: III The Role of the Derivative of the Phase–Lag and the Derivative of the Amplification–Factor. Axioms 2024, 13, 514.

- Saadat, H.; Kiyadeh, S.H.H.; Karim, R.G.; Safaie, A. Family of phase fitted 3-step second-order BDF methods for solving periodic and orbital quantum chemistry problems. J. Math. Chem. 2024, 62, 1223–1250.

- Stiefel, E.; Bettis, D.G. Stabilization of Cowell’s method. Numer. Math. 1969, 13, 154–175.

- Franco, J.M.; Palacios, M. High-order P-stable multistep methods. J. Comput. Appl. Math. 1990, 30, 1–10.

- Boyce, W.E.; DiPrima, R.C.; Meade, D.B. Elementary Differential Equations and Boundary Value Problems, 11th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2017.

- Fehlberg, E. Classical Fifth-, Sixth-, Seventh-, and Eighth-order Runge-Kutta Formulas with Stepsize Control. NASA Technical Report 287. 1968. Available online: https://ntrs.nasa.gov/api/citations/19680027281/downloads/19680027281.pdf (accessed on 15 June 2024).

- Cash, J.R.; Karp, A.H. A variable order Runge–Kutta method for initial value problems with rapidly varying right-hand sides. ACM Trans. Math. Softw. 1990, 16, 201–222.

- Petzold, L.R. An efficient numerical method for highly oscillatory ordinary differential equations. SIAM J. Numer. Anal. 1981, 18, 455–479.

- Simos, T.E. New Open Modified Newton Cotes Type Formulae as Multilayer Symplectic Integrators. Appl. Math. Model. 2013, 37, 1983–1991.

- Ramos, H.; Vigo-Aguiar, J. On the frequency choice in trigonometrically fitted methods. Appl. Math. Lett. 2010, 23, 1378–1381.

- Ixaru, L.G.; Vanden Berghe, G.; De Meyer, H. Frequency evaluation in exponential fitting multistep algorithms for ODEs. J. Comput. Appl. Math. 2002, 140, 423–434.

- Evans, L.C. Partial Differential Equations, 2nd ed.; American Mathematical Society: Providence, RI, USA, 2010; Chapter 3; pp. 91–135.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Simos, T.E. A New Methodology for the Development of Efficient Multistep Methods for First–Order IVPs with Oscillating Solutions IV: The Case of the Backward Differentiation Formulae. Axioms 2024, 13, 649. https://doi.org/10.3390/axioms13090649
