Reconstruction of Piecewise Smooth Multivariate Functions from Fourier Data

Abstract

1. Introduction

2. The 1D Case

2.1. Reconstructing Smooth Non-Periodic Functions

- The locality of the B-spline basis functions.

- A closed form formula for their Fourier coefficients.

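- Their approximation power, i.e., if $f\in C^m[a,b]$, there exists a spline $s$ of order $m$ with knot spacing $h$ such that $\|f-s\|_{\infty,[a,b]}\le C h^m$, where $C$ depends on $f$ but not on $h$.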
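To illustrate the second property, the sketch below evaluates a standard closed form for the Fourier coefficients of a uniform-knot B-spline and checks it against numerical quadrature. It is a minimal sketch under stated assumptions, not the paper's code. It assumes the convention $c_k=\frac{1}{2\pi}\int_{-\pi}^{\pi}f(x)e^{-ikx}\,dx$, a B-spline of order $m$ with knots $a, a+h, \dots, a+mh$ lying inside $[-\pi,\pi]$, and the partition-of-unity normalization. With these assumptions $c_k=\frac{h}{2\pi}e^{-ika}\left(\frac{1-e^{-ikh}}{ikh}\right)^m$ for $k\neq 0$ and $c_0=\frac{h}{2\pi}$. The function names are hypothetical, and the paper's own formula may use a different interval or normalization.

```python
import cmath
import math


def bspline(x, knots):
    """Evaluate the order-m B-spline with knots t_0 < ... < t_m at x using the
    Cox-de Boor recursion (normalized so that its translates sum to one)."""
    m = len(knots) - 1
    # Order 1: indicator functions of the half-open intervals [t_j, t_{j+1}).
    b = [1.0 if knots[j] <= x < knots[j + 1] else 0.0 for j in range(m)]
    for r in range(2, m + 1):  # raise the order from r - 1 to r
        b = [
            (x - knots[j]) / (knots[j + r - 1] - knots[j]) * b[j]
            + (knots[j + r] - x) / (knots[j + r] - knots[j + 1]) * b[j + 1]
            for j in range(m - r + 1)
        ]
    return b[0]


def bspline_fourier_coeff(k, a, h, m):
    """Closed form of c_k = (1/2pi) * int_{-pi}^{pi} N(x) exp(-ikx) dx for the
    order-m B-spline N with knots a, a + h, ..., a + m*h inside [-pi, pi]."""
    if k == 0:
        return complex(h / (2 * math.pi))
    z = 1j * k * h
    return h / (2 * math.pi) * cmath.exp(-1j * k * a) * ((1 - cmath.exp(-z)) / z) ** m


def fourier_coeff_by_quadrature(k, a, h, m, n=64):
    """Reference value: composite Simpson rule on each knot interval, where N is
    a single polynomial piece (n must be even; assumes m >= 2 so N is continuous)."""
    knots = [a + j * h for j in range(m + 1)]
    step = h / n
    total = 0j
    for j in range(m):
        s = 0j
        for i in range(n + 1):
            x = knots[j] + i * step
            w = 1 if i in (0, n) else (4 if i % 2 else 2)  # Simpson weights
            s += w * bspline(x, knots) * cmath.exp(-1j * k * x)
        total += s * step / 3
    return total / (2 * math.pi)
```

For example, with a cubic B-spline ($m=4$, $a=-1$, $h=0.5$), `bspline_fourier_coeff(k, -1.0, 0.5, 4)` and `fourier_coeff_by_quadrature(k, -1.0, 0.5, 4)` agree to quadrature accuracy for small $|k|$. Each closed-form coefficient costs only a few complex operations, which is what makes the B-spline basis convenient when matching Fourier data.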

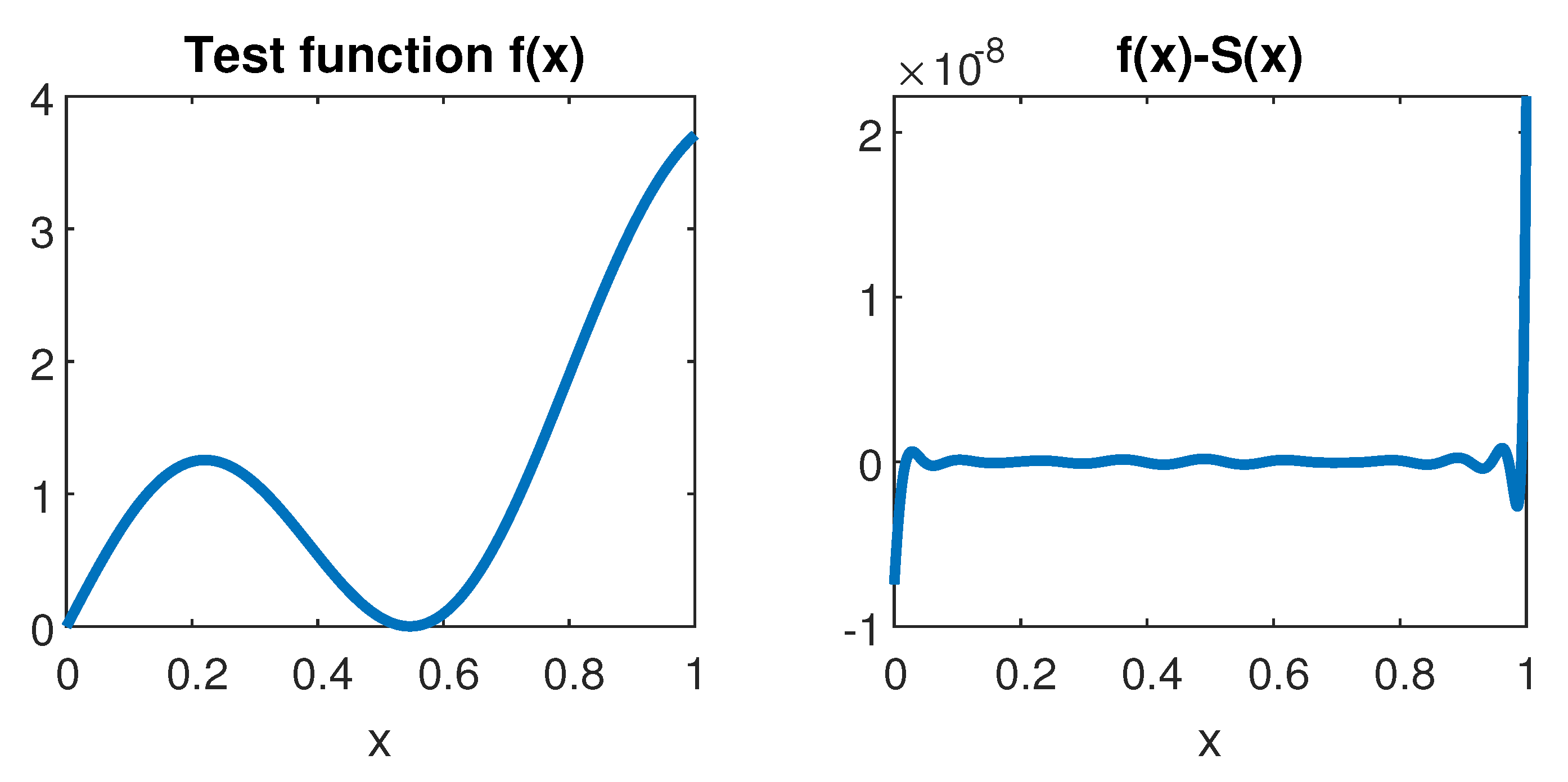

Numerical Example—The Smooth 1D Case

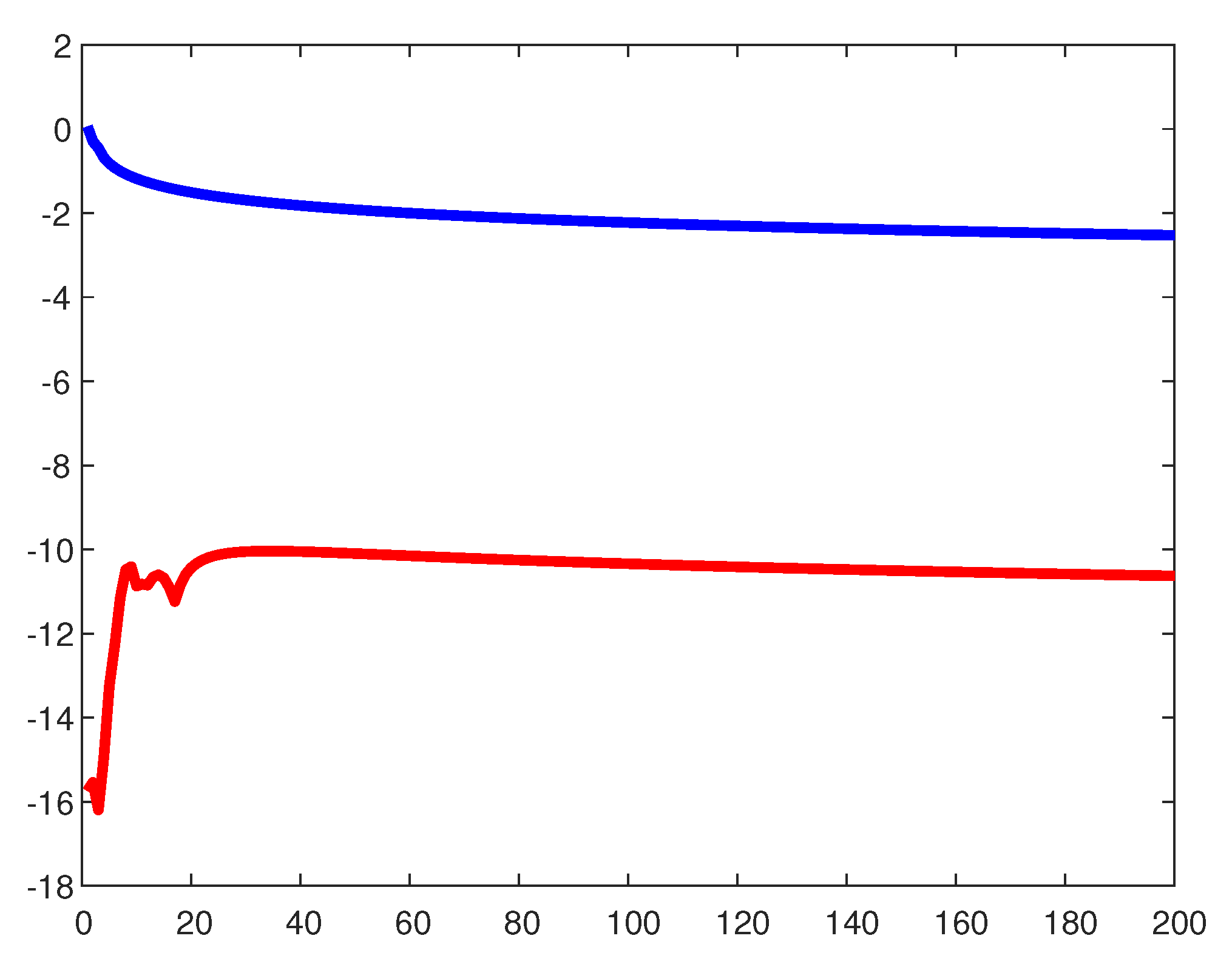

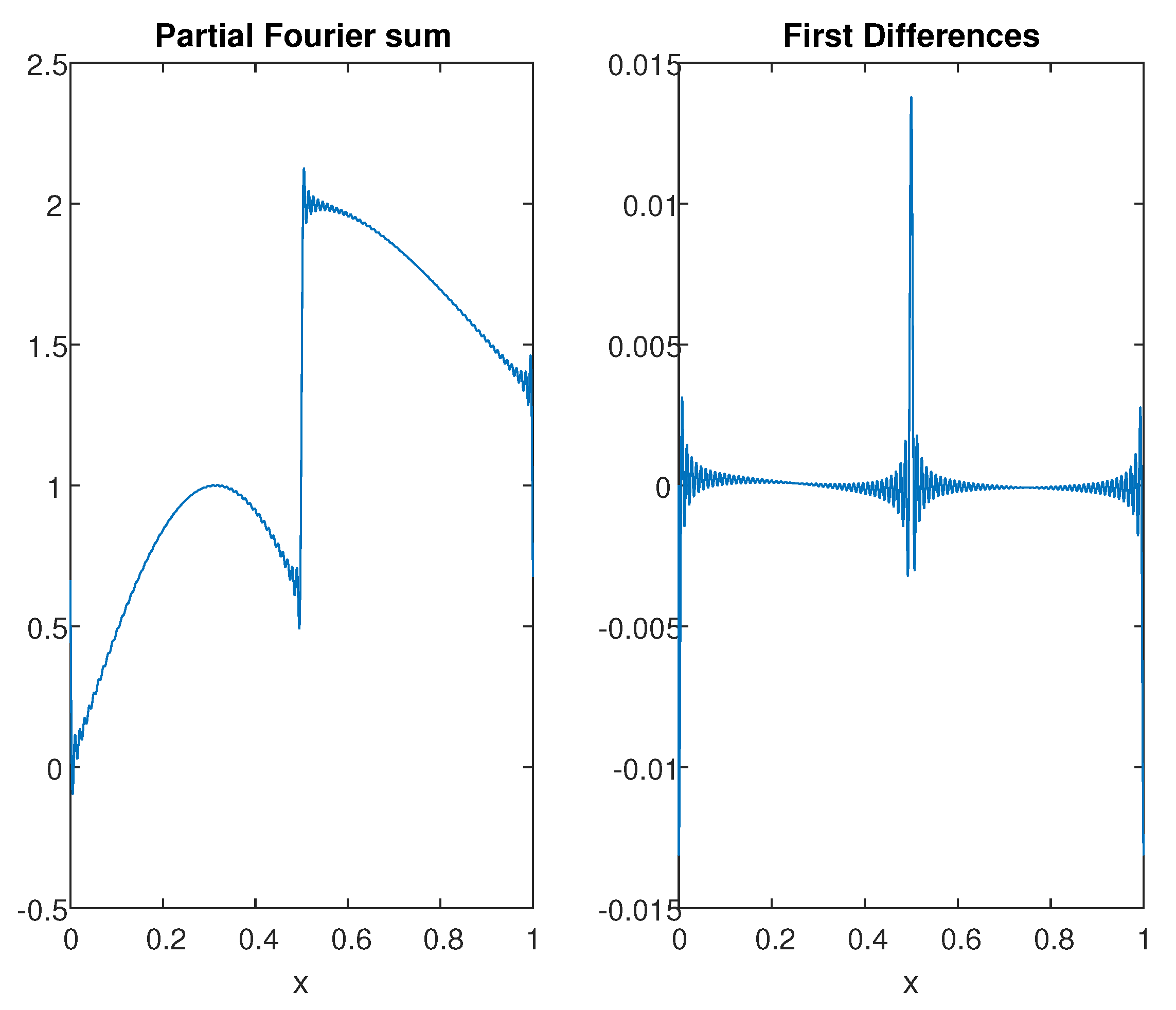

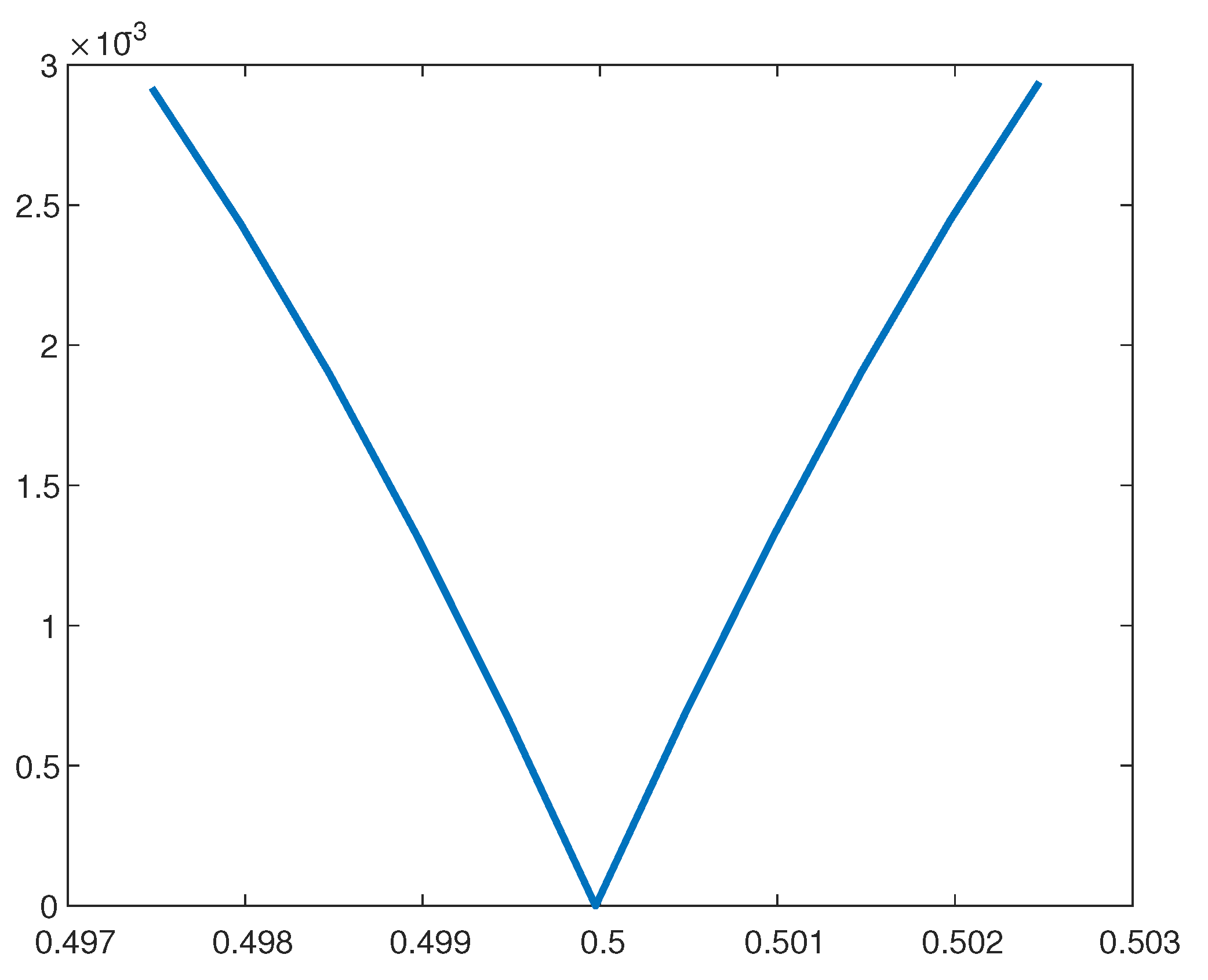

2.2. Reconstructing Non-Smooth Univariate Functions

2.2.1. Finding

2.2.2. The 1D Approximation Procedure

- (1) Choose the approximation space for approximating and .

- (2) Define the number of Fourier coefficients to be used for building the approximation such that

- (3) Find a first approximation to : compute a partial Fourier sum and locate the maximal first-order difference.

- (4) Calculate the first Fourier coefficients of the basis functions of , truncated at .

- (5)

- (6) Update the approximation to by performing quasi-Newton iterations that reduce the objective function in (9).

- (7) Go back to (4) to update the approximation.
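Step (3) of the procedure above can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation. The Fourier coefficients are assumed to be given as a dictionary mapping $k$ to $c_k$ under the convention $c_k=\frac{1}{2\pi}\int_{-\pi}^{\pi}f(x)e^{-ikx}\,dx$. The partial sum is evaluated on a uniform grid whose size (`num_points`) is a hypothetical parameter. The midpoint of the cell with the largest absolute first-order difference is returned as the initial jump estimate. The optional `margin` (also hypothetical) excludes cells near $\pm\pi$, where a non-periodic $f$ produces an extra jump of its periodic extension. The test function $f(x)=H(x-d)-\frac{x+\pi}{2\pi}$, with $H$ the Heaviside step, is $2\pi$-periodic with a single jump of size 1 at $x=d$. Its coefficients are $c_0=-\frac{d}{2\pi}$ and $c_k=\frac{e^{-ikd}}{2\pi i k}$.

```python
import cmath
import math


def partial_fourier_sum(coeffs, x):
    """Evaluate S_N(x) = sum_k c_k exp(ikx) for a real-valued f; coeffs maps k -> c_k."""
    return sum(c * cmath.exp(1j * k * x) for k, c in coeffs.items()).real


def locate_jump(coeffs, num_points=2000, margin=0.0):
    """Initial guess for a jump location: the midpoint of the grid cell in
    [-pi, pi] where |S_N(x_{i+1}) - S_N(x_i)| is largest."""
    h = 2 * math.pi / num_points
    xs = [-math.pi + i * h for i in range(num_points + 1)]
    vals = [partial_fourier_sum(coeffs, x) for x in xs]
    best_mid, best_diff = None, -1.0
    for i in range(num_points):
        mid = xs[i] + h / 2
        if abs(mid) > math.pi - margin:
            # Optionally skip cells near +-pi, where a non-periodic f has the
            # wrap-around jump of its periodic extension.
            continue
        diff = abs(vals[i + 1] - vals[i])
        if diff > best_diff:
            best_mid, best_diff = mid, diff
    return best_mid


def sawtooth_coeffs(d, N):
    """Fourier coefficients of f(x) = H(x - d) - (x + pi) / (2 pi) on [-pi, pi],
    a 2pi-periodic function whose only jump (of size 1) is at x = d."""
    coeffs = {0: complex(-d / (2 * math.pi))}
    for k in range(1, N + 1):
        coeffs[k] = cmath.exp(-1j * k * d) / (2j * math.pi * k)
        coeffs[-k] = coeffs[k].conjugate()  # f is real, so c_{-k} = conj(c_k)
    return coeffs
```

For instance, `locate_jump(sawtooth_coeffs(0.7, 32))` returns a point within one grid cell of $0.7$. In the procedure, such an estimate only seeds the later quasi-Newton refinement, so grid-level accuracy is all this step needs to provide.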

3. The 2D Case—Non-Periodic and Non-Smooth

3.1. The Smooth 2D Case

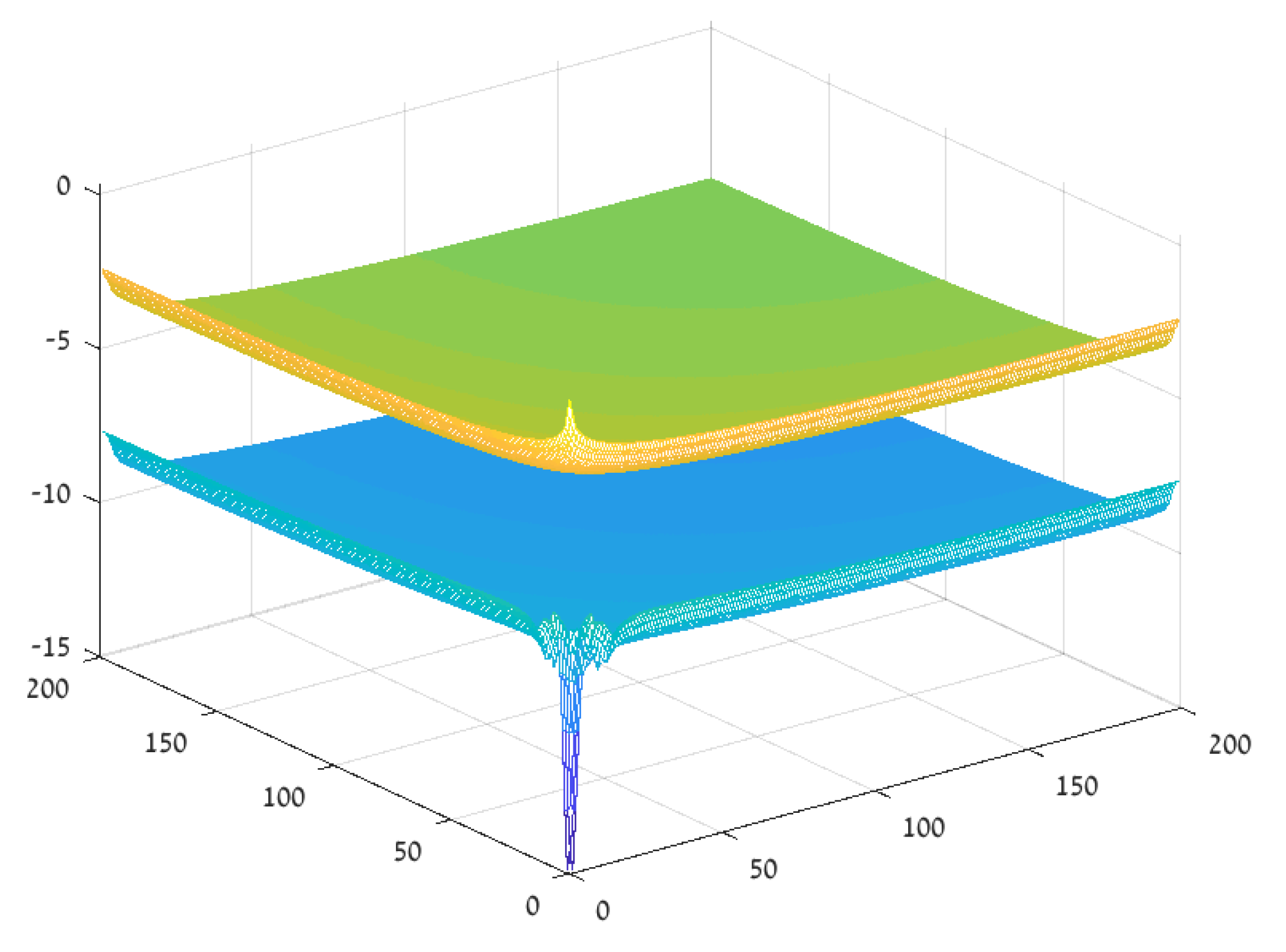

Numerical Example—The Smooth 2D Case

3.2. The Non-Smooth 2D Case

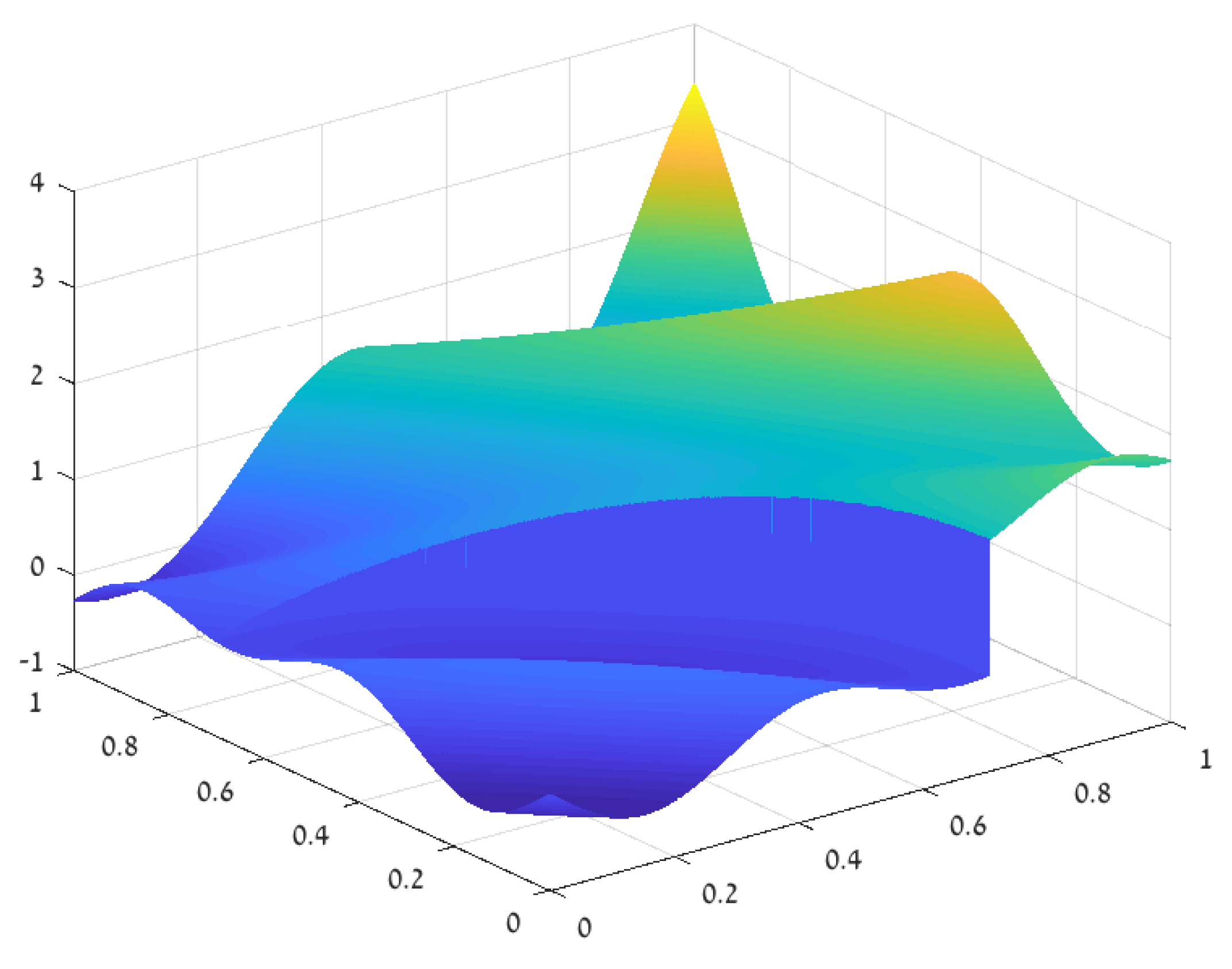

3.2.1. The Approximation Procedure—A Numerical Example

3.2.2. The 2D Approximation Procedure

- (1) Choose the approximation space for approximating and , and the approximation space for approximating .

- (2) Define the number of Fourier coefficients to be used for building the approximation such that

- (3) Find a first approximation to :

    - (a) Compute a partial Fourier sum and locate the maximal first-order differences along horizontal and vertical lines to find points near , and assign them the value 0.

    - (b) Overlay a net of points as in Figure 14, and assign them signed-distance values.

    - (c) Compute the least-squares approximation from to the values at , and denote it .

- (4) Calculate the first Fourier coefficients of the basis functions of , truncated with respect to the zero level curve of .

- (5)

- (6) Update to improve the approximation to by performing quasi-Newton iterations that reduce the objective function in (21).

- (7) Go back to (4) to update the approximation.
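Step (3)(a) above can be illustrated with the following sketch, which scans every horizontal and vertical line of a sampled grid and keeps local maxima of the absolute first-order difference. It is a minimal sketch under stated assumptions, not the paper's selection rule. The samples are assumed to be a list of rows `values[j][i]` taken at `(xs[i], ys[j])`; in the procedure these would be values of the 2D partial Fourier sum. The hypothetical `rel_threshold` keeps only maxima at least that fraction of the largest difference found. Steps (b) and (c) are not shown.

```python
import math


def _line_maxima(line):
    """Return (i, |line[i+1] - line[i]|) for each positive local maximum of the
    absolute first-order differences along one line of samples."""
    d = [abs(b - a) for a, b in zip(line, line[1:])]
    out = []
    for i, di in enumerate(d):
        left = d[i - 1] if i > 0 else 0.0
        right = d[i + 1] if i + 1 < len(d) else 0.0
        if di > 0 and di >= left and di > right:  # one point per plateau
            out.append((i, di))
    return out


def jump_points_along_lines(values, xs, ys, rel_threshold=0.5):
    """Points near a jump curve, placed at the midpoint of each grid cell
    (along rows and columns) where the difference is a strong local maximum."""
    cand = []  # entries (difference, x, y)
    for j, row in enumerate(values):  # horizontal lines y = ys[j]
        for i, di in _line_maxima(row):
            cand.append((di, 0.5 * (xs[i] + xs[i + 1]), ys[j]))
    for i in range(len(xs)):  # vertical lines x = xs[i]
        column = [row[i] for row in values]
        for j, dj in _line_maxima(column):
            cand.append((dj, xs[i], 0.5 * (ys[j] + ys[j + 1])))
    if not cand:
        return []
    dmax = max(c[0] for c in cand)
    return [(x, y) for (v, x, y) in cand if v >= rel_threshold * dmax]
```

Applied to samples of the indicator function of a disk, every returned point lies within half a grid spacing of the circle. These are the points to which step (a) assigns the value 0 before the signed-distance net of step (b) is added.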

3.2.3. Lower Order Singularities

3.3. Error Analysis

Validity of the Approximation Assumptions

4. The 3D Case

Numerical Example—The Smooth 3D Case

5. Concluding Remarks

Funding

Conflicts of Interest


© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Levin, D. Reconstruction of Piecewise Smooth Multivariate Functions from Fourier Data. Axioms 2020, 9, 88. https://doi.org/10.3390/axioms9030088
