Abstract

Variational inequality theory is an effective tool in engineering, economics, transportation and mathematical optimization. Most approaches to solving variational inequalities rely on iterative techniques. In this article, we introduce a new modified viscosity-type extragradient method for solving monotone variational inequality problems in a real Hilbert space. Strong convergence of the method is established without knowledge of the operator's Lipschitz constant. Numerical studies compare the newly designed method with the current state of the art on several test problems.

1. Introduction

Assume that $\mathcal{C}$ is a nonempty, closed and convex subset of a real Hilbert space $\mathcal{H}$, and that $\mathbb{R}$ and $\mathbb{N}$ are the sets of real numbers and natural numbers, respectively. In this paper, we consider the classical variational inequality problem [1,2] (in short, VI), whose solution set is denoted by $\Omega$. Let $F : \mathcal{H} \to \mathcal{H}$ be an operator. The variational inequality problem for $F$ on $\mathcal{C}$ is defined in the following way:

$$\text{find } x^* \in \mathcal{C} \text{ such that } \langle F(x^*), y - x^* \rangle \ge 0, \quad \forall\, y \in \mathcal{C}. \tag{1}$$

The problem (1) is well defined and is equivalent to solving the following fixed point problem:

$$x^* = P_{\mathcal{C}}\big[x^* - \chi F(x^*)\big]$$

for some $0 < \chi < 1/L$, where L is the Lipschitz constant of the operator F. We assume that the following conditions are satisfied:

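- (b1) The solution set of problem (1), denoted by $\Omega$, is nonempty;

- (b2) The operator $F : \mathcal{H} \to \mathcal{H}$ is monotone, i.e., $\langle F(x) - F(y), x - y \rangle \ge 0$ for all $x, y \in \mathcal{H}$;

- (b3) F is Lipschitz continuous, i.e., there exists $L > 0$ such that $\|F(x) - F(y)\| \le L \|x - y\|$ for all $x, y \in \mathcal{H}$.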
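As an illustration of the monotonicity and Lipschitz conditions, the following minimal sketch samples random pairs of points and checks both inequalities for an affine operator. The operator $F(x) = Mx$, the matrix $M$ (a positive semidefinite part plus a skew-symmetric part) and the helper name `holds_on_samples` are assumptions chosen for this example only; they are not taken from the article.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical test operator F(x) = M x (illustration only).
# N N^T is positive semidefinite and S - S^T is skew-symmetric,
# so <F(x) - F(y), x - y> = ||N^T (x - y)||^2 >= 0.
m = 5
N = rng.standard_normal((m, m))
S = rng.standard_normal((m, m))
M = N @ N.T + (S - S.T)


def F(x):
    return M @ x


# For a linear operator the spectral norm is a Lipschitz constant.
L = np.linalg.norm(M, 2)


def holds_on_samples(trials=1000, tol=1e-9):
    """Check monotonicity and the Lipschitz bound on random pairs."""
    for _ in range(trials):
        x = rng.standard_normal(m)
        y = rng.standard_normal(m)
        d = x - y
        g = F(x) - F(y)
        monotone = g @ d >= -tol
        lipschitz = np.linalg.norm(g) <= L * np.linalg.norm(d) + tol
        if not (monotone and lipschitz):
            return False
    return True


print(holds_on_samples())
```

A sampling check of this kind can only refute the conditions, not prove them; for the affine operator above they follow directly from the structure of $M$.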

Variational inequality theory is a useful technique for investigating a large number of problems in physics, economics, engineering and optimization theory. It was first introduced by Stampacchia [1] in 1964, and it is well established that problem (1) is an important problem in nonlinear analysis. It is an advantageous mathematical model that unifies several topics of applied mathematics, such as network equilibrium problems, necessary optimality conditions, systems of nonlinear equations and complementarity problems [3,4,5,6,7].

Projection methods and their modifications are crucial for finding numerical solutions of variational inequality problems. Many authors have proposed and studied different types of projection methods for solving variational inequality problems (see [8,9,10,11,12,13,14,15,16,17,18] for more details), as well as the related methods in [19,20,21,22,23,24,25,26,27,28]. The simplest approach is the gradient method, which requires only one projection onto the feasible set per iteration. Its convergence, however, requires strong monotonicity of F. To avoid the strong monotonicity hypothesis, Korpelevich [8] and Antipin [29] introduced the following extragradient method:

$$y_n = P_{\mathcal{C}}\big[x_n - \chi F(x_n)\big], \qquad x_{n+1} = P_{\mathcal{C}}\big[x_n - \chi F(y_n)\big]$$

for some $0 < \chi < 1/L$. The subgradient extragradient algorithm was developed by Censor et al. [10] to solve problem (1) in a real Hilbert space. Their method has the form

$$y_n = P_{\mathcal{C}}\big[x_n - \chi F(x_n)\big], \qquad x_{n+1} = P_{T_n}\big[x_n - \chi F(y_n)\big],$$

where $0 < \chi < 1/L$ and

$$T_n = \big\{ z \in \mathcal{H} : \langle x_n - \chi F(x_n) - y_n,\; z - y_n \rangle \le 0 \big\}.$$
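The two classical schemes mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in the article: the feasible set is taken to be the closed unit ball, the test operator $F(x) = Mx$ and the fixed step $0.5/L$ are chosen for the example, and the function names are hypothetical. The extragradient method projects twice onto the feasible set, whereas the subgradient extragradient method replaces the second projection by a closed-form projection onto a half-space.

```python
import numpy as np


def project_ball(x, radius=1.0):
    """Metric projection onto the closed ball of the given radius."""
    nrm = np.linalg.norm(x)
    return x if nrm <= radius else (radius / nrm) * x


def project_halfspace(x, a, y):
    """Metric projection onto the half-space {z : <a, z - y> <= 0}."""
    viol = a @ (x - y)
    if viol <= 0:  # x already lies in the half-space (covers a = 0)
        return x
    return x - (viol / (a @ a)) * a


def extragradient(F, proj, x0, step, iters=2000):
    """Two projections onto the feasible set per iteration."""
    x = np.asarray(x0, dtype=float)
    for _ in range(iters):
        y = proj(x - step * F(x))
        x = proj(x - step * F(y))
    return x


def subgradient_extragradient(F, proj, x0, step, iters=2000):
    """Second projection is onto a half-space containing the feasible set."""
    x = np.asarray(x0, dtype=float)
    for _ in range(iters):
        v = x - step * F(x)
        y = proj(v)
        x = project_halfspace(x - step * F(y), v - y, y)
    return x


# Hypothetical monotone test operator; its only zero is the origin,
# which lies in the unit ball and therefore solves the problem.
M = np.array([[1.0, 2.0], [-2.0, 1.0]])
F = lambda x: M @ x
L = np.linalg.norm(M, 2)

x_eg = extragradient(F, project_ball, [1.0, 1.0], 0.5 / L)
x_seg = subgradient_extragradient(F, project_ball, [1.0, 1.0], 0.5 / L)
print(np.linalg.norm(x_eg), np.linalg.norm(x_seg))
```

Both schemes need the step to be smaller than $1/L$, so a bound on the Lipschitz constant must be known in advance.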

In this article, motivated by the methods in [10,30,31] and the viscosity method [14], we introduce a new viscosity subgradient extragradient algorithm for solving variational inequality problems involving monotone operators in a Hilbert space. The proposed algorithm compares favourably with existing ones; in particular, it performs better than the algorithms of Yang et al. [30] in most of our test situations. As in the results of Yang et al. [30], the convergence proof of Algorithm 1 does not require knowledge of the Lipschitz constant of the operator F. The proposed algorithm can be seen as a modification of the methods in [8,10,30,31]. Under mild conditions, a strong convergence theorem is proven for the proposed method. Numerical experiments indicate that the new method is more effective than those in [30].

The rest of the article is arranged in the following way: Section 2 provides a few definitions and basic results that are used throughout the paper. Section 3 contains the main algorithm and convergence theorem. Section 4 includes the numerical results that illustrate the algorithmic efficacy of the introduced method.

Algorithm 1. An Explicit Method for Monotone Variational Inequality Problems

2. Background

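The metric projection $P_{\mathcal{C}}(x)$ of $x \in \mathcal{H}$ onto a closed and convex subset $\mathcal{C}$ of $\mathcal{H}$ is defined by

$$P_{\mathcal{C}}(x) = \arg\min_{y \in \mathcal{C}} \|y - x\|.$$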

Lemma 1

([32]; Page 31). For and then the following relationship holds.

- (i).

- (ii).

- .

Lemma 2

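([32,33]). Let $\mathcal{C}$ be a nonempty, closed and convex subset of a real Hilbert space $\mathcal{H}$ and let $P_{\mathcal{C}} : \mathcal{H} \to \mathcal{C}$ be the metric projection from $\mathcal{H}$ onto $\mathcal{C}$. Then:

- (i). For $u \in \mathcal{C}$ and $v \in \mathcal{H}$, $\|u - P_{\mathcal{C}}(v)\|^2 + \|P_{\mathcal{C}}(v) - v\|^2 \le \|u - v\|^2$;

- (ii). $w = P_{\mathcal{C}}(v)$ if and only if $\langle v - w, u - w \rangle \le 0$ for all $u \in \mathcal{C}$;

- (iii). For $u \in \mathcal{C}$ and $v \in \mathcal{H}$, $\|v - P_{\mathcal{C}}(v)\| \le \|v - u\|$.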
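The standard properties of the metric projection can be checked numerically on a set whose projection has a closed form. The sketch below assumes the closed unit ball as the convex set and tests three well-known facts on random points: the inequality $\|u - Pv\|^2 + \|Pv - v\|^2 \le \|u - v\|^2$, the variational characterization $\langle v - Pv, u - Pv \rangle \le 0$, and the best-approximation property $\|v - Pv\| \le \|v - u\|$, for $u$ in the set. The function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)


def project_ball(v, radius=1.0):
    """Metric projection onto the closed ball of the given radius."""
    nrm = np.linalg.norm(v)
    return v if nrm <= radius else (radius / nrm) * v


def check_projection_properties(trials=500, dim=4, tol=1e-10):
    """Test three standard projection inequalities on random data."""
    for _ in range(trials):
        v = 3.0 * rng.standard_normal(dim)                # arbitrary point
        u = project_ball(3.0 * rng.standard_normal(dim))  # point of the set
        p = project_ball(v)
        ok1 = (np.linalg.norm(u - p) ** 2 + np.linalg.norm(p - v) ** 2
               <= np.linalg.norm(u - v) ** 2 + tol)
        ok2 = (v - p) @ (u - p) <= tol
        ok3 = np.linalg.norm(v - p) <= np.linalg.norm(v - u) + tol
        if not (ok1 and ok2 and ok3):
            return False
    return True


print(check_projection_properties())
```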

Lemma 3

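([34]). Assume that $\{a_n\} \subset [0, +\infty)$ is a sequence of non-negative real numbers such that

$$a_{n+1} \le (1 - b_n)\, a_n + b_n c_n, \quad \forall\, n \in \mathbb{N},$$

where $\{b_n\} \subset (0, 1)$ and $\{c_n\} \subset \mathbb{R}$ meet the following criteria:

$$\lim_{n \to \infty} b_n = 0, \qquad \sum_{n=1}^{\infty} b_n = +\infty \qquad \text{and} \qquad \limsup_{n \to \infty} c_n \le 0.$$

Then, $\lim_{n \to \infty} a_n = 0$.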
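A small numerical experiment can make this type of recursion lemma concrete. The sketch below iterates $a_{n+1} = (1 - b_n) a_n + b_n c_n$ with the hypothetical choices $b_n = 1/(n+1)$ and $c_n = n^{-1/2}$, so that $b_n \to 0$, $\sum b_n = \infty$ and $c_n \to 0$; these sequences are assumptions picked for illustration and do not come from the article.

```python
def simulate(n_steps, a0=1.0):
    """Iterate a_{n+1} = (1 - b_n) a_n + b_n c_n for n = 1..n_steps."""
    a = a0
    for n in range(1, n_steps + 1):
        b = 1.0 / (n + 1)   # b_n in (0, 1), b_n -> 0, sum of b_n diverges
        c = n ** -0.5       # c_n -> 0, hence limsup c_n <= 0
        a = (1.0 - b) * a + b * c
    return a


for steps in (10, 1000, 100000):
    print(steps, simulate(steps))
```

The printed values shrink as the number of steps grows, in line with the conclusion that the sequence tends to zero, although the decay is slow for this choice of $c_n$.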

Lemma 4

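([35]). Assume that $\{a_n\}$ is a sequence of real numbers such that there is a subsequence $\{n_i\}$ of $\{n\}$ such that $a_{n_i} < a_{n_i + 1}$ for all $i \in \mathbb{N}$. Then, there is a nondecreasing sequence $\{m_k\} \subset \mathbb{N}$ such that $m_k \to \infty$ as $k \to \infty$, and the following conditions are fulfilled by all (sufficiently large) numbers $k \in \mathbb{N}$:

$$a_{m_k} \le a_{m_k + 1} \qquad \text{and} \qquad a_k \le a_{m_k + 1}.$$

In fact, $m_k = \max\{ j \le k : a_j \le a_{j+1} \}$.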

Lemma 5

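([36]). Assume that $\mathcal{C}$ is a nonempty closed convex set in $\mathcal{H}$ and that the operator $F : \mathcal{C} \to \mathcal{H}$ is monotone and continuous. Then, $x^*$ is a solution of the problem (1) if and only if $x^*$ is a solution of the following problem:

$$\text{find } x^* \in \mathcal{C} \text{ such that } \langle F(y), y - x^* \rangle \ge 0, \quad \forall\, y \in \mathcal{C}.$$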

3. Algorithm and Corresponding Strong Convergence Theorem

We provide a method that consists of two convex minimization problems combined with a viscosity term and an explicit stepsize formula, which are used to improve the rate of convergence of the iterative sequence and to make the method independent of the Lipschitz constant L. The detailed method is given in Algorithm 1.
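To make the ingredients named above concrete, the following sketch combines a subgradient extragradient step, a viscosity term and a self-adaptive stepsize. It is only a sketch under explicit assumptions and should not be read as the article's Algorithm 1, whose exact steps are not reproduced in this text. The assumptions are: the update $x_{n+1} = \alpha_n f(x_n) + (1 - \alpha_n) z_n$ with $\alpha_n = 1/(n+2)$, the contraction $f(x) = x/2$, the stepsize rule $\chi_{n+1} = \min\{\chi_n, \mu \|x_n - y_n\| / \|F(x_n) - F(y_n)\|\}$ in the style of [30], the unit ball as feasible set, and all function and parameter names.

```python
import numpy as np


def project_ball(x, radius=1.0):
    """Metric projection onto the closed ball of the given radius."""
    nrm = np.linalg.norm(x)
    return x if nrm <= radius else (radius / nrm) * x


def project_halfspace(x, a, y):
    """Metric projection onto the half-space {z : <a, z - y> <= 0}."""
    viol = a @ (x - y)
    if viol <= 0:
        return x
    return x - (viol / (a @ a)) * a


def viscosity_seg(F, proj, f, x0, chi0=1.0, mu=0.5, iters=5000, tol=1e-10):
    """Assumed viscosity subgradient extragradient scheme (illustration)."""
    x = np.asarray(x0, dtype=float)
    chi = chi0
    for n in range(iters):
        Fx = F(x)
        v = x - chi * Fx
        y = proj(v)
        if np.linalg.norm(x - y) < tol:   # residual-based stopping rule
            break
        Fy = F(y)
        z = project_halfspace(x - chi * Fy, v - y, y)
        alpha = 1.0 / (n + 2)             # assumed control sequence
        x_next = alpha * f(x) + (1.0 - alpha) * z
        # Self-adaptive stepsize: no Lipschitz constant is supplied.
        dF = np.linalg.norm(Fx - Fy)
        if dF > 0:
            chi = min(chi, mu * np.linalg.norm(x - y) / dF)
        x = x_next
    return x, chi


# Hypothetical test problem: F(x) = M x on the unit ball, solution x* = 0.
M = np.array([[1.0, 2.0], [-2.0, 1.0]])
F = lambda x: M @ x
f = lambda x: 0.5 * x                     # contraction with constant 1/2
x_out, chi_out = viscosity_seg(F, project_ball, f, [1.0, 1.0])
print(np.linalg.norm(x_out), chi_out)
```

Under this assumed rule the stepsize sequence is nonincreasing and stays above $\min\{\mu/L, \chi_0\}$, so the scheme never needs the value of $L$; the constant is used below only to check the outcome.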

Remark 1.

is a half-space and so is a closed and convex set in

Lemma 6.

The sequence is decreasing monotonically with a lower bound and converges to

Proof.

From the sequence we see that this sequence is monotone and nonincreasing. It is given that F is Lipschitz-continuous with . Let such that

The above discussion implies that the sequence has a lower bound Moreover, there exists number such that □

Lemma 7.

Assume that an operator satisfies the conditions (b1)–(b3). For each we have

Proof.

Let us consider the following:

From the assumption that we have

implies that

Now, using the Equation (4) implies that

Given that is a solution of , we get

Due to the monotonicity of F on , we can obtain

Since it follows that

Thus, we have

Note that and by the definition of , we have

Theorem 1.

Assume that an operator satisfies the conditions (b1)–(b3) and belongs to solution set Then, the sequences and generated by Algorithm 1 strongly converge to .

Proof. Claim 1:

The sequence is bounded in

From Lemma 7, we have

Since , there exists a fixed number such that

Thus, there is a finite number such that

Thus, from (15), we obtain

Let By definition of the sequence and due to contraction f with constant and we obtain

Finally, we deduce that the sequence is bounded.

Claim 2: If then, as a subsequence, of such that as

The reflexivity of and the boundedness of imply that there exists a subsequence such that as It is sufficient to prove that Due to we also have as In addition, the fact that

that is equivalent to

That is, we have

From the monotonicity condition on F, we have

that is

Combining expressions (20) and (21), we obtain

for all since (see Lemma 6) and the sequence is bounded in As and pass the limit in (22) as we obtain

Applying the well-known Minty lemma (Lemma 5), we infer:

Claim 3: The sequence is strongly convergent in

The strong convergence of the sequence is established as follows. The continuity and monotonicity of the operator F, together with the Minty lemma, imply that is a closed and convex set (see [37,38] for more details). Since the mapping f is a contraction, so is . The Banach contraction principle guarantees that there exists a unique element such that

Hence, we have

Now, considering and using Lemma 1 (i) and Lemma 7, we have

The remainder of the proof can be divided into two cases:

Case 1: Assume that there is a fixed number such that

Thus, exists and let By using expression (25), we have

Due to the existence of and , we obtain

It follows that

Hence, we obtain

The sequence is bounded and implies that the sequences and are also bounded. Thus, we can take a subsequence of such that converges weakly to some and

We have It means that

From Lemma 7 and Lemma 1 (ii) (∀), we obtain

It follows (32) that

Choose () large enough such that Now, by using expressions (33) and (34) and applying Lemma 3, we conclude that as

Case 2: Assume that there is a subsequence of such that

Thus, by Lemma 4 there is a sequence as such that

Similar to case 1 and from (25), we obtain

Due to , and we deduce the following:

It follows that

Similar to case 1, we can easily obtain that

It follows that

Due to as , and we obtain

Finally, the inequality

Consequently, This completes the proof of the theorem. □

4. Numerical Illustrations

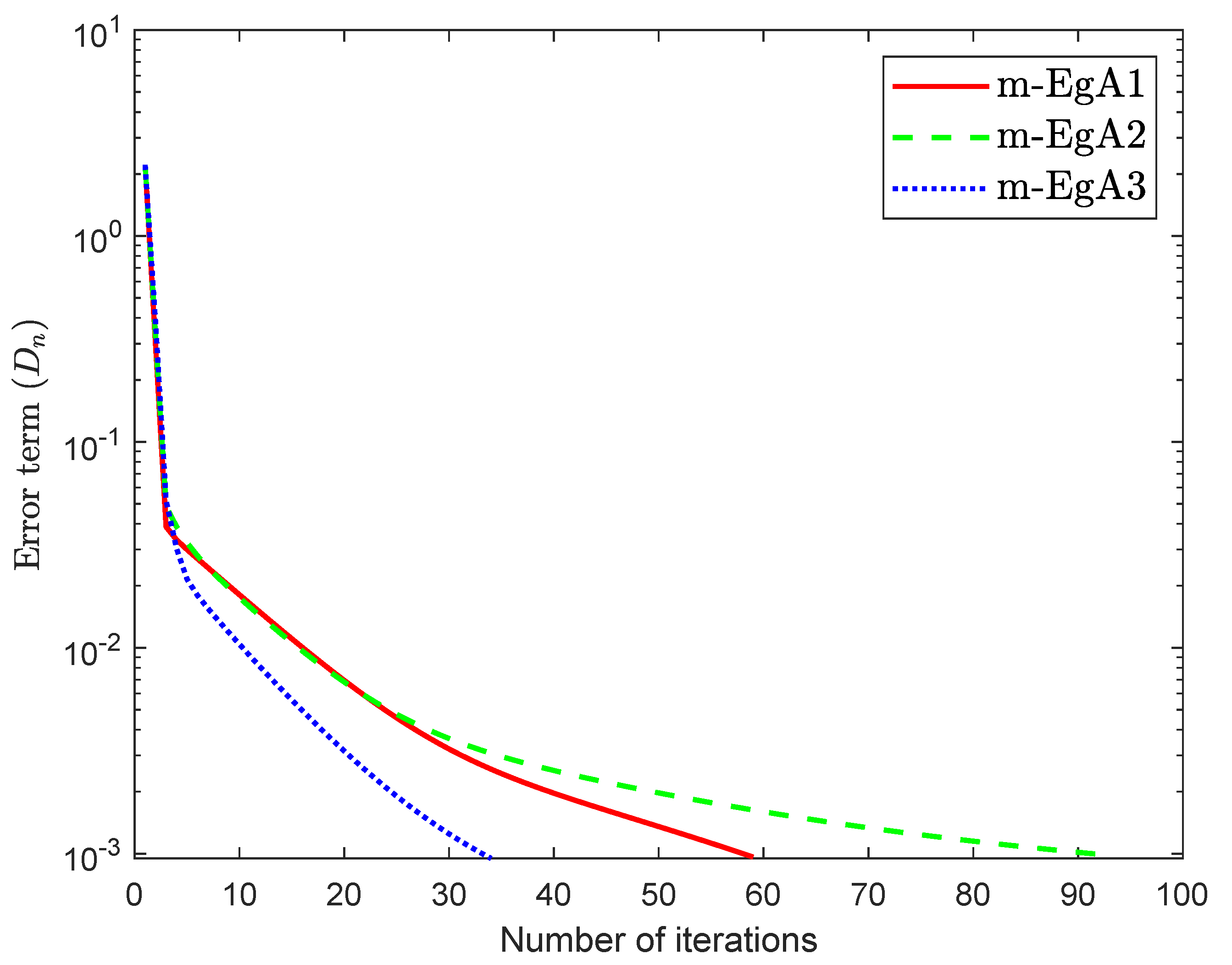

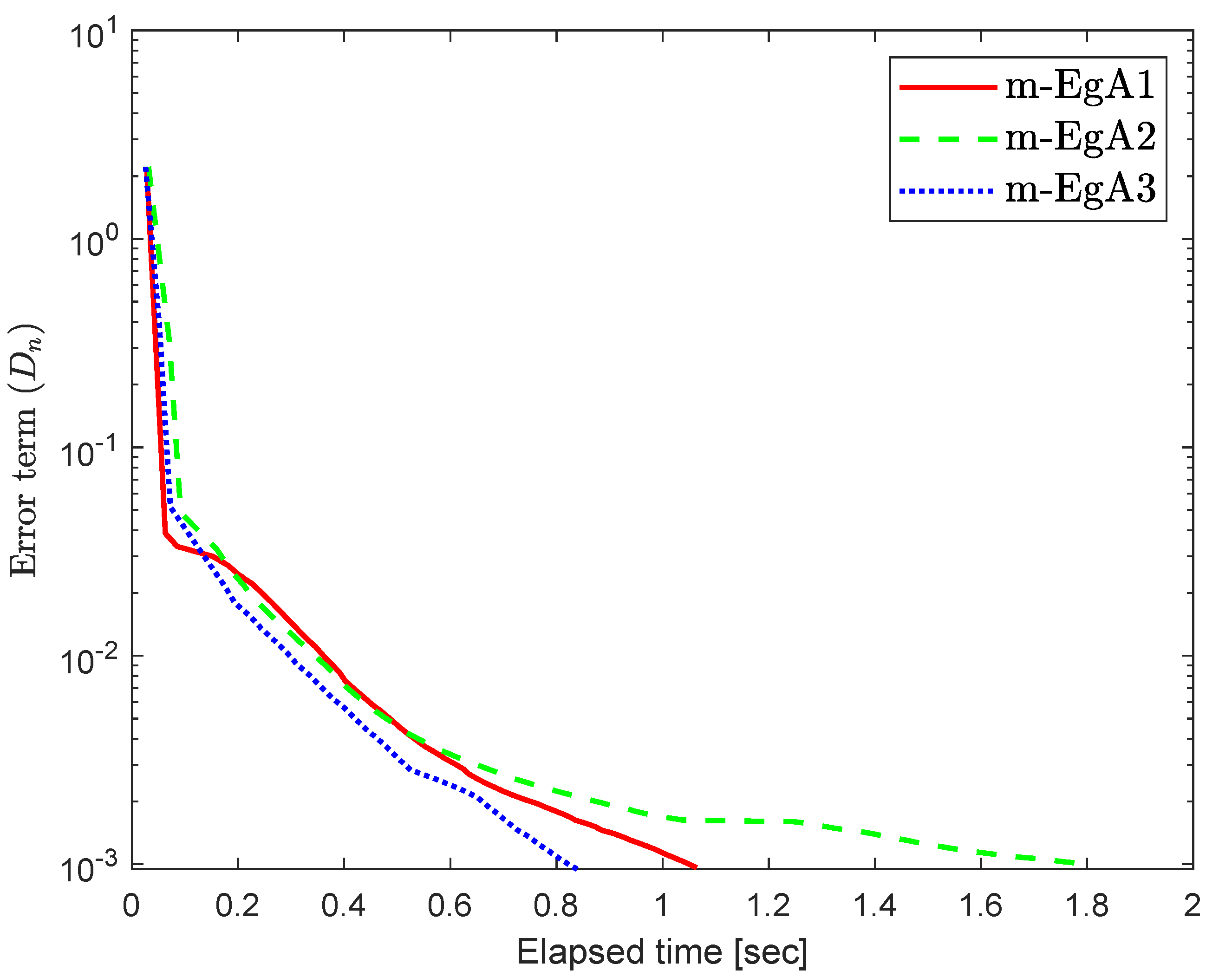

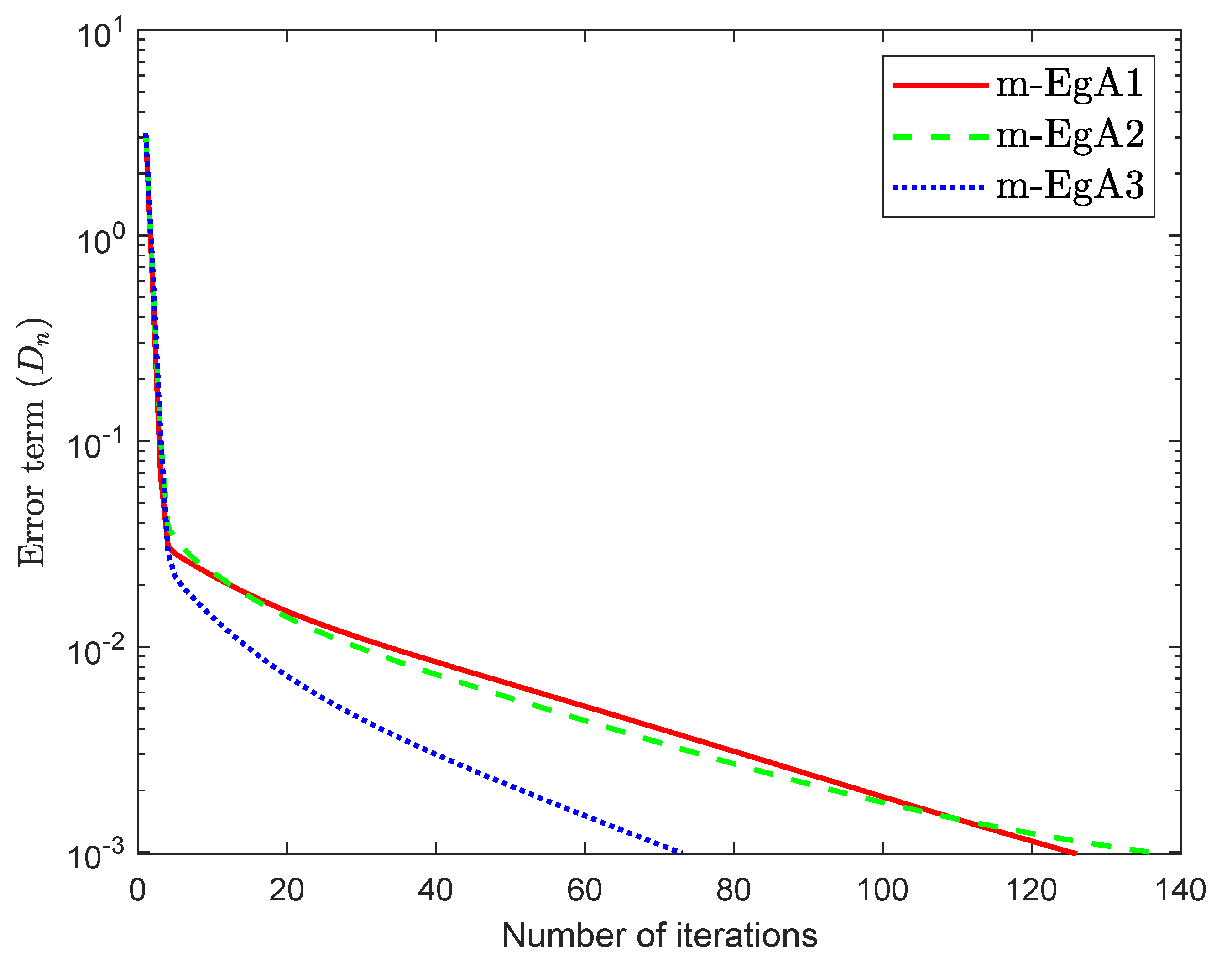

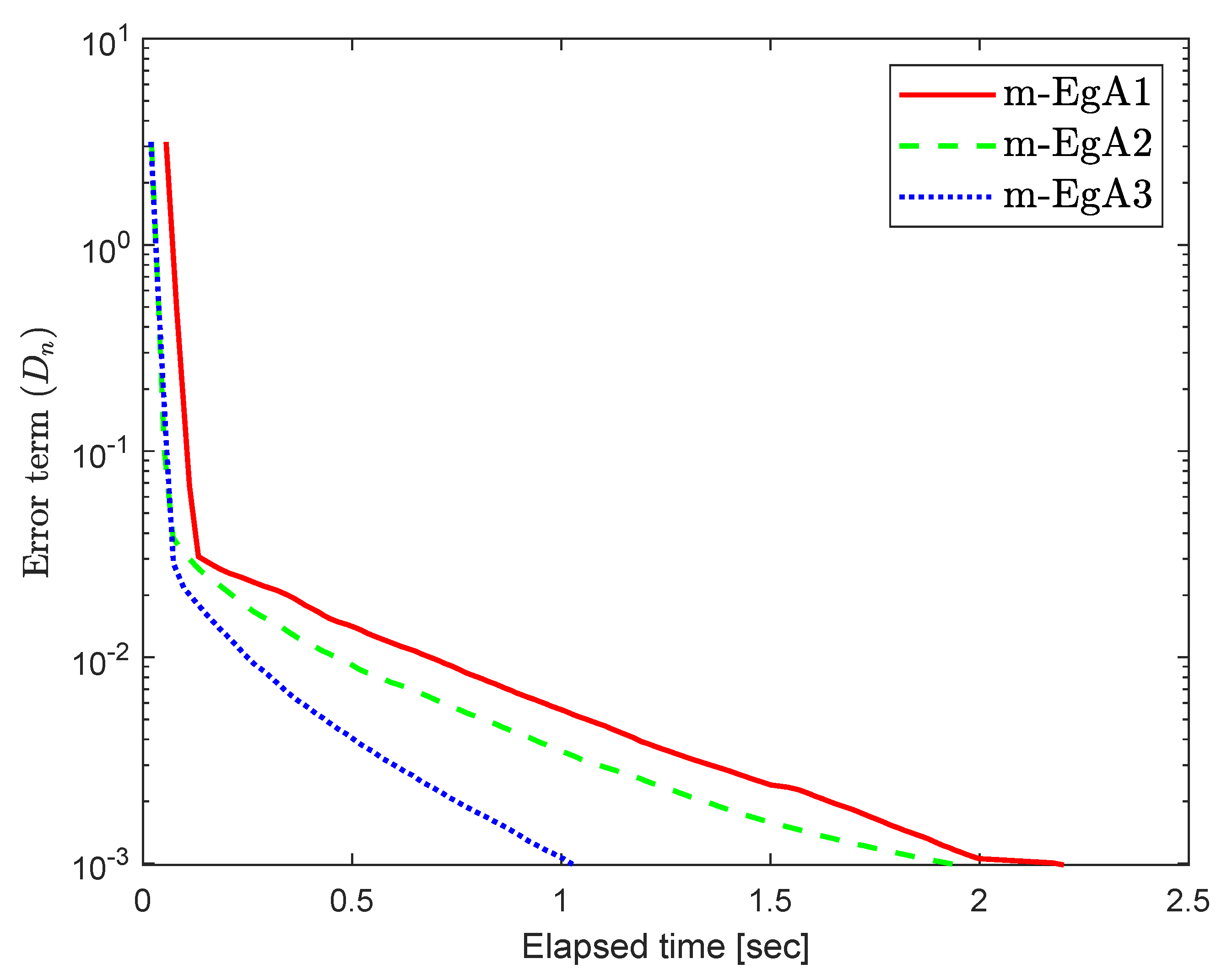

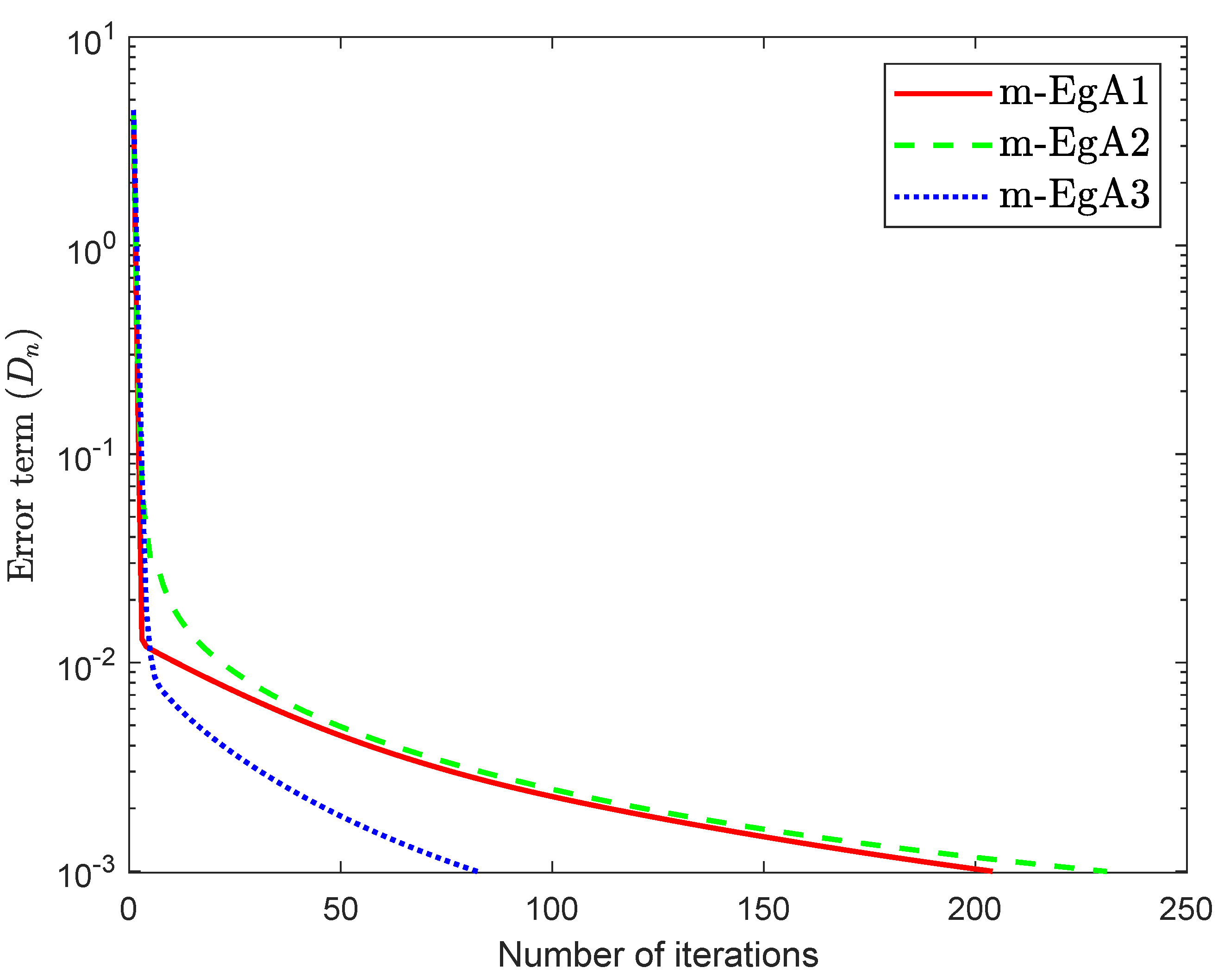

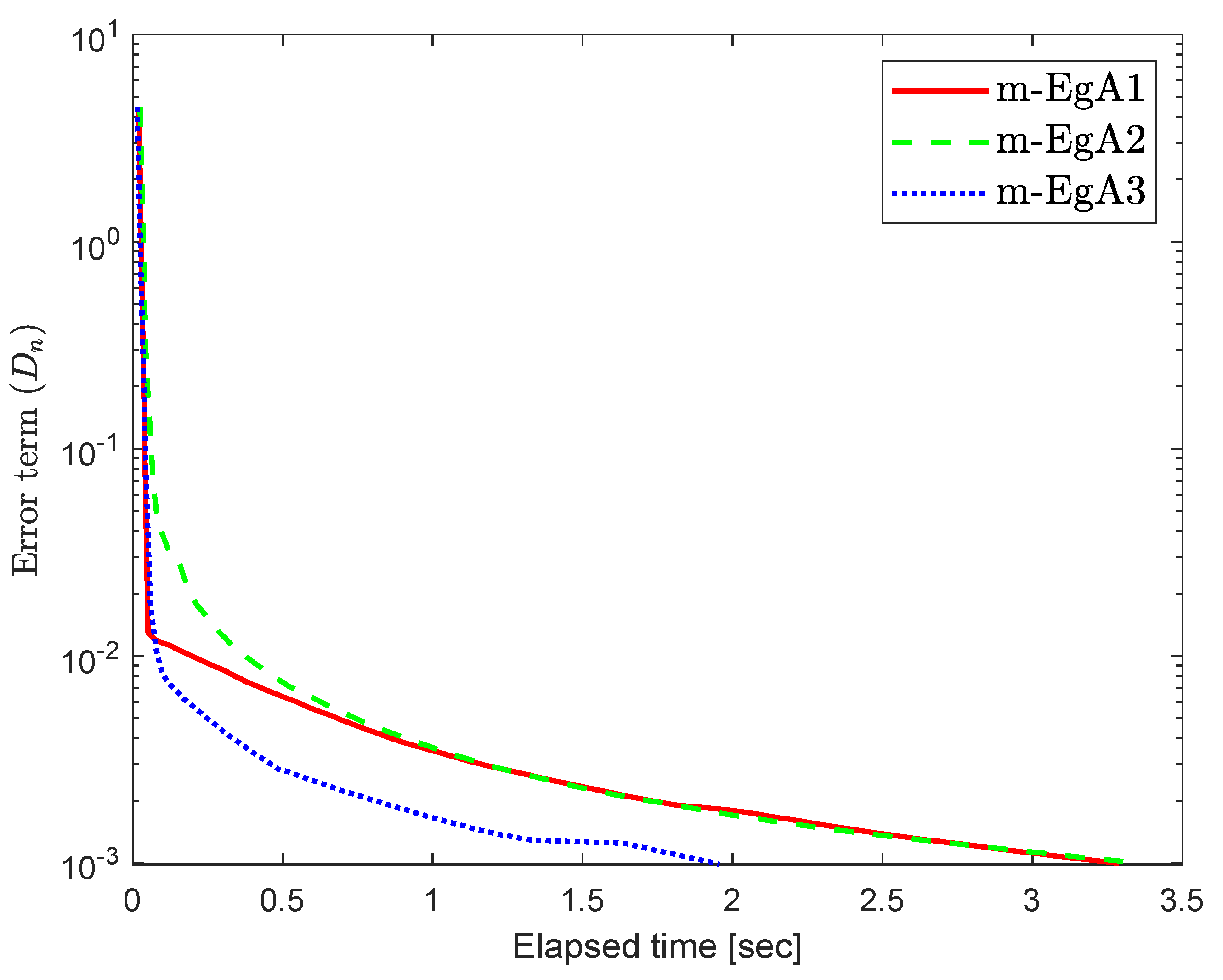

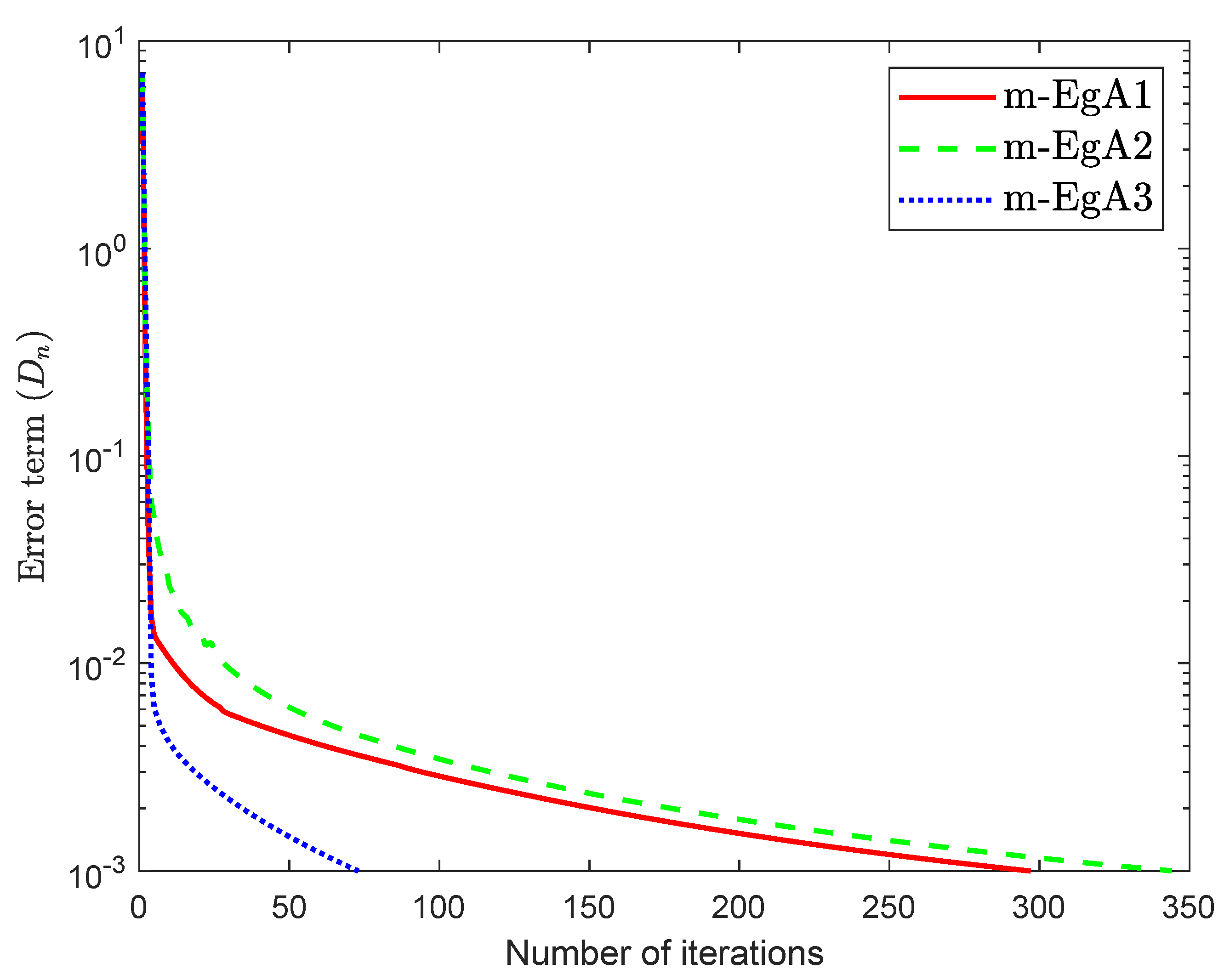

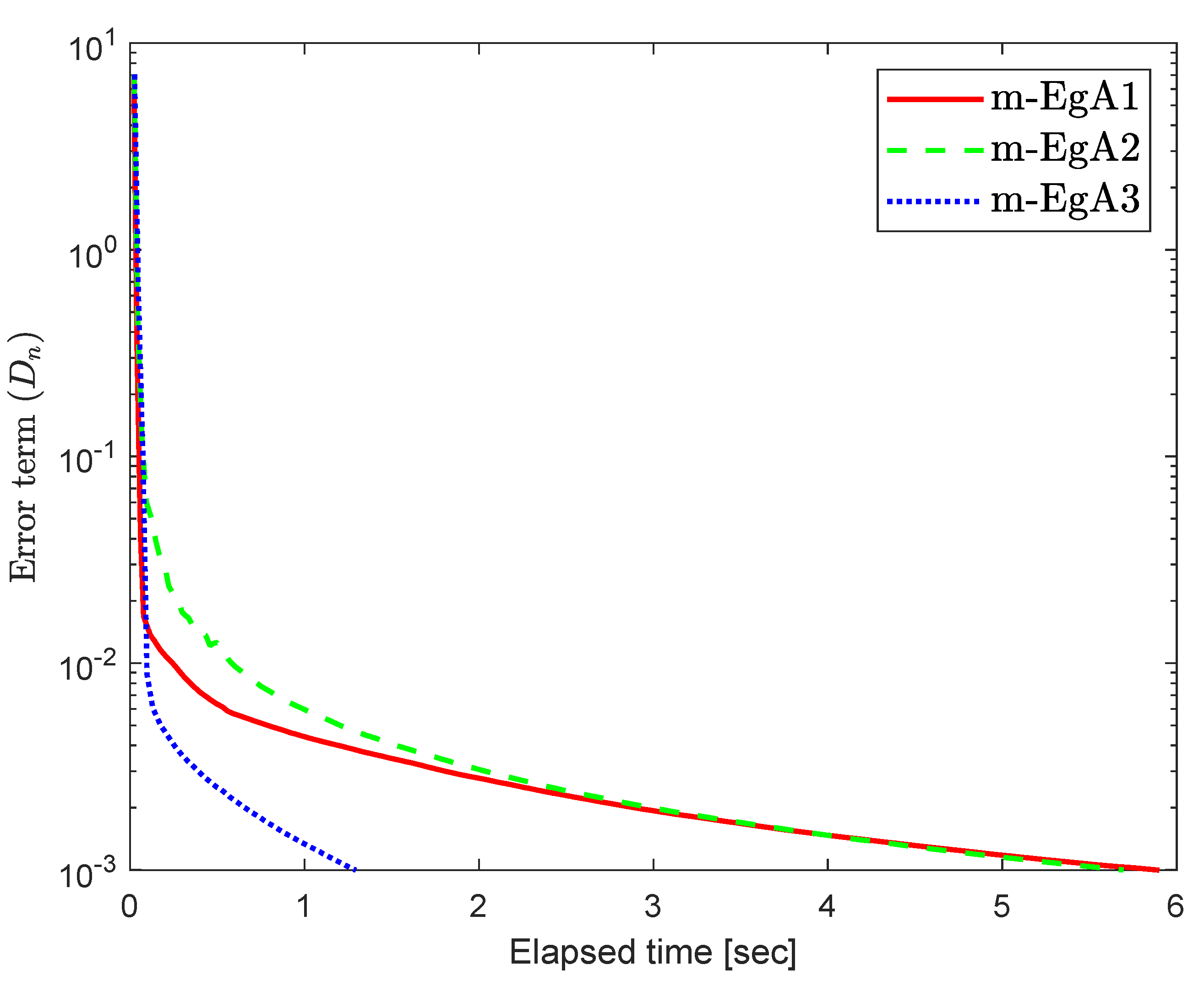

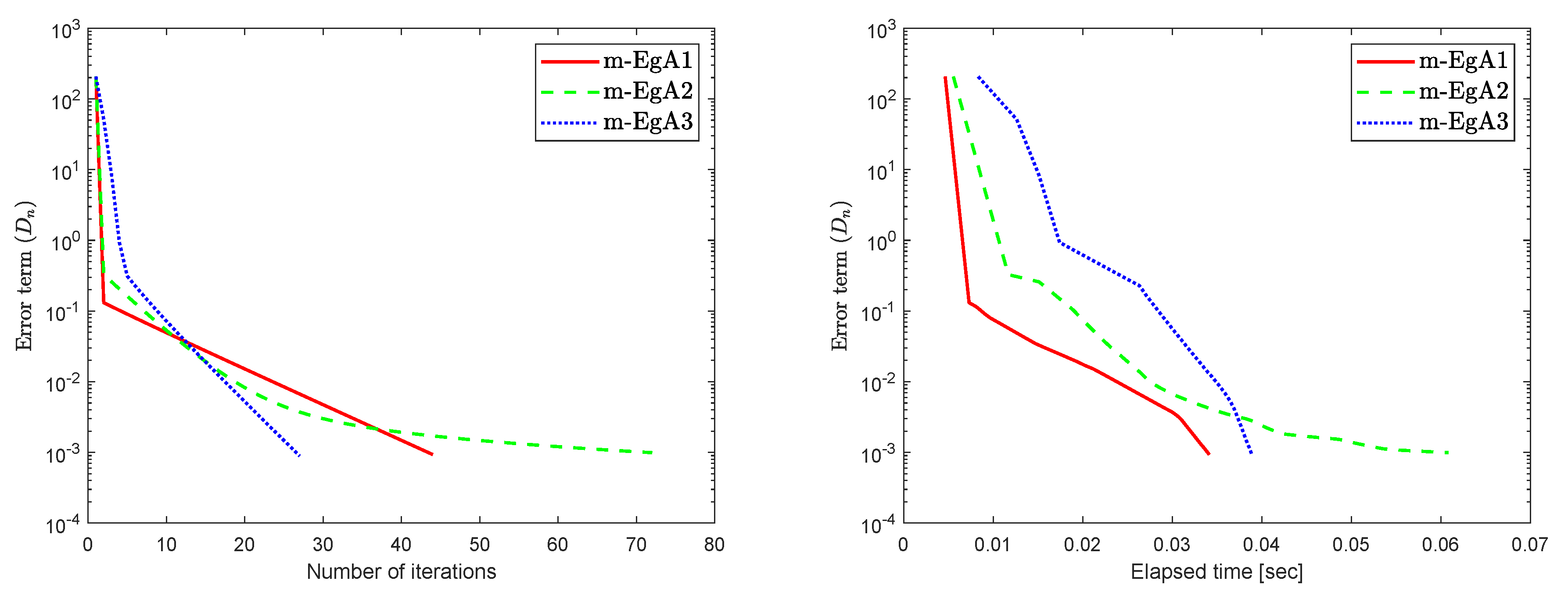

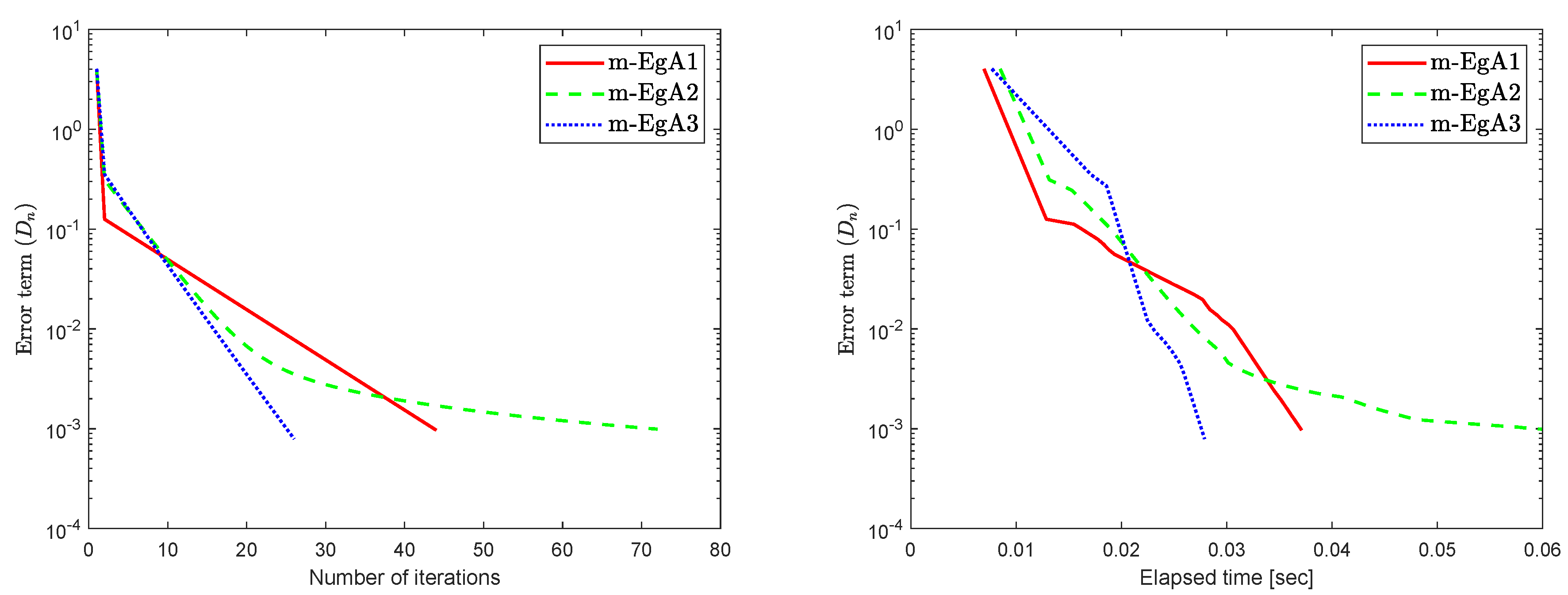

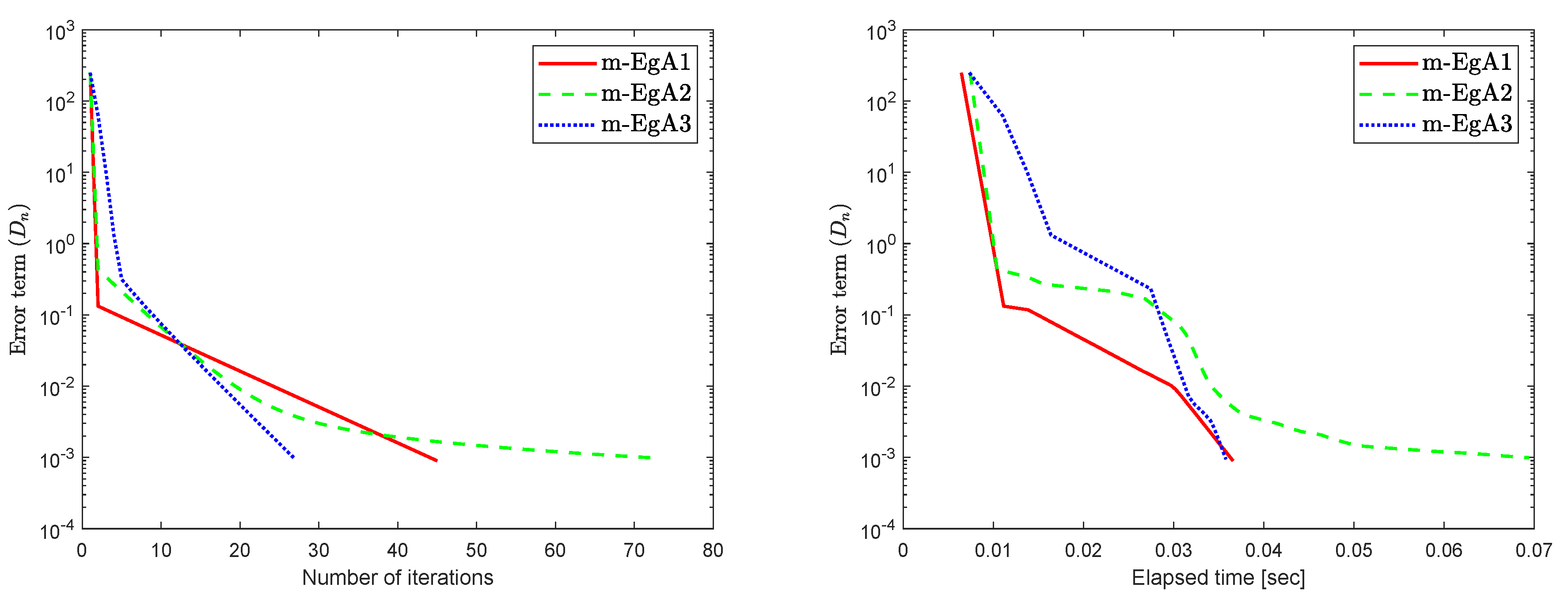

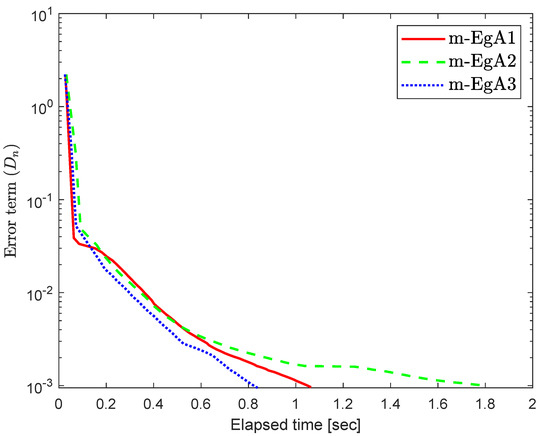

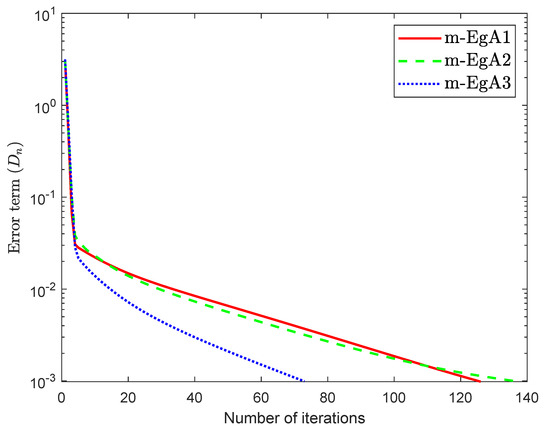

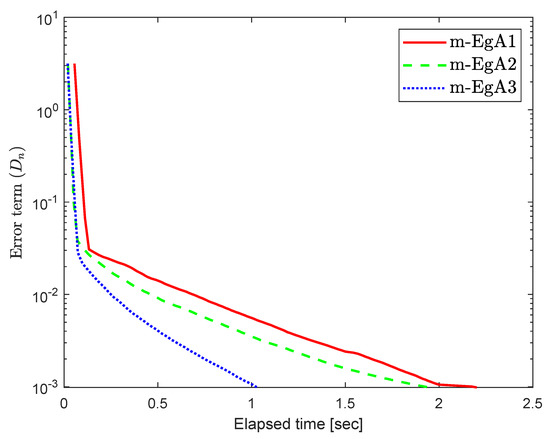

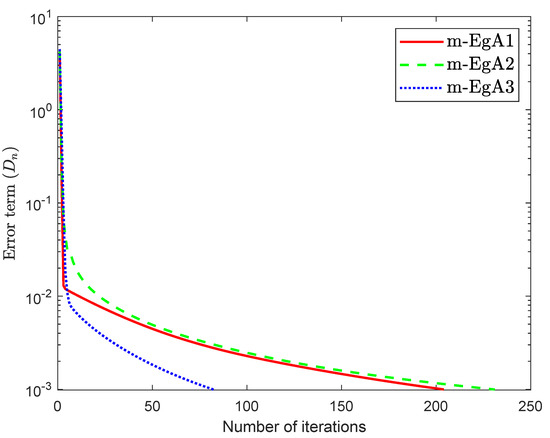

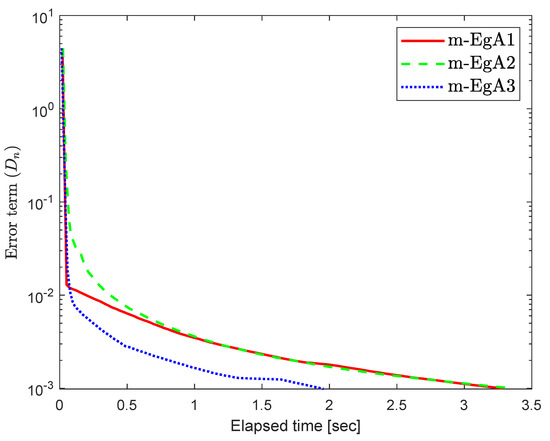

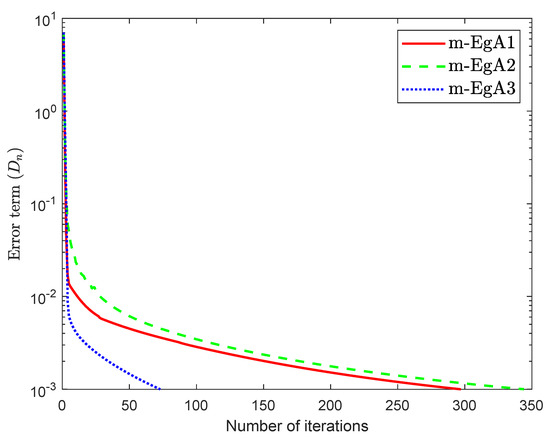

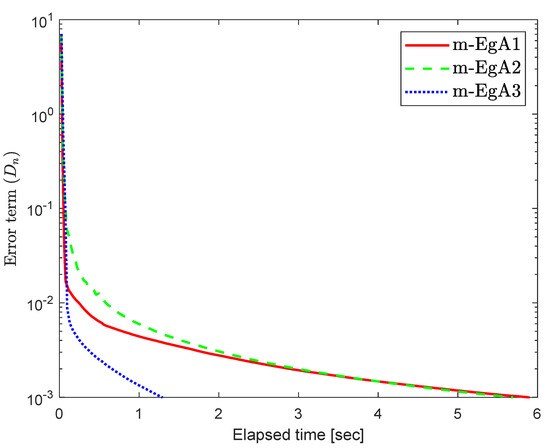

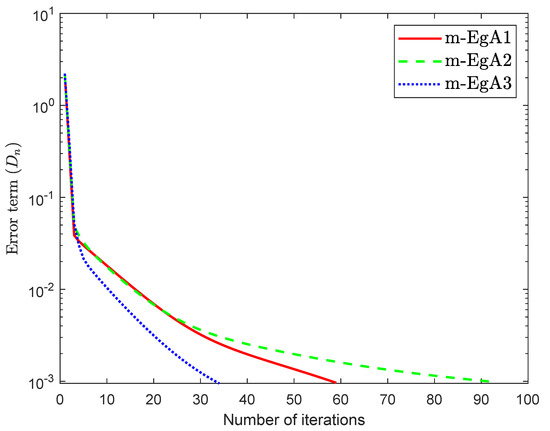

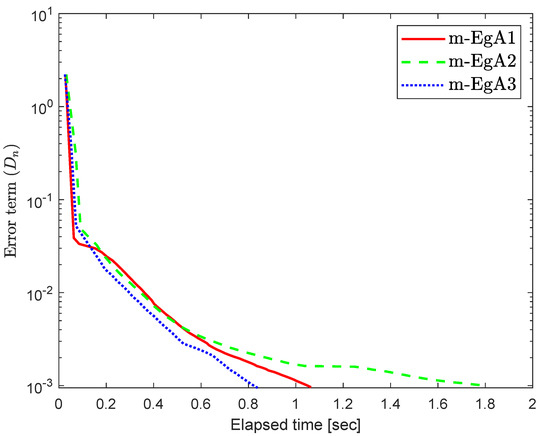

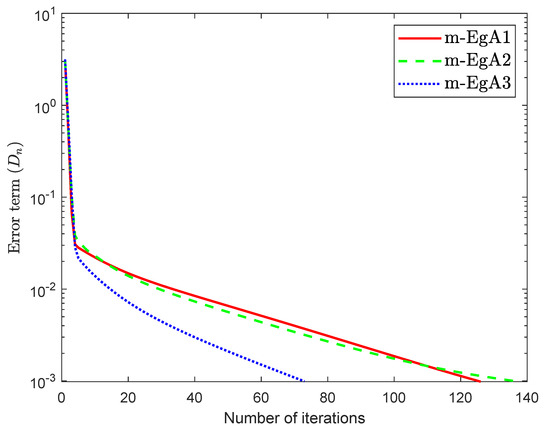

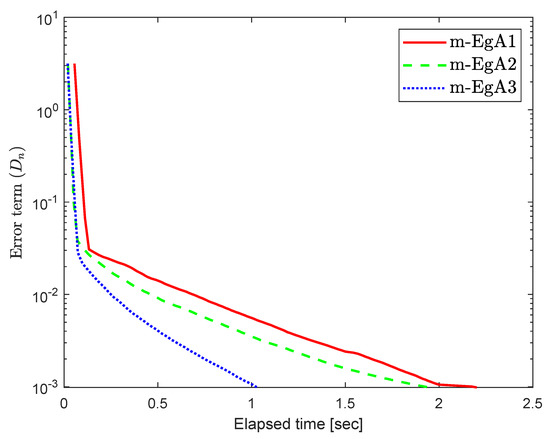

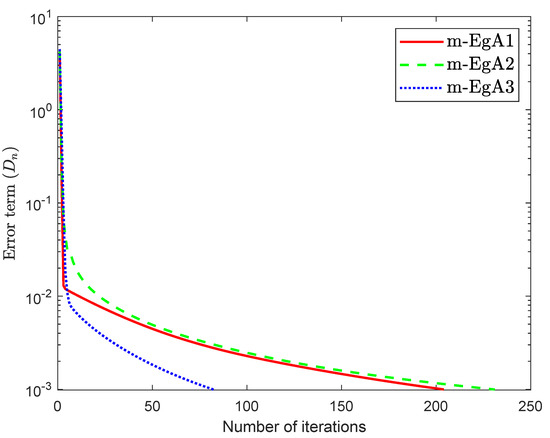

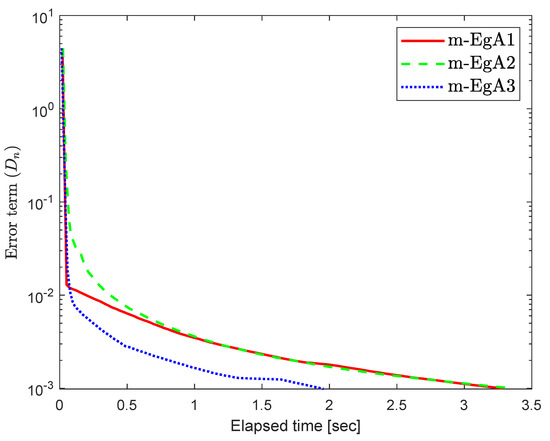

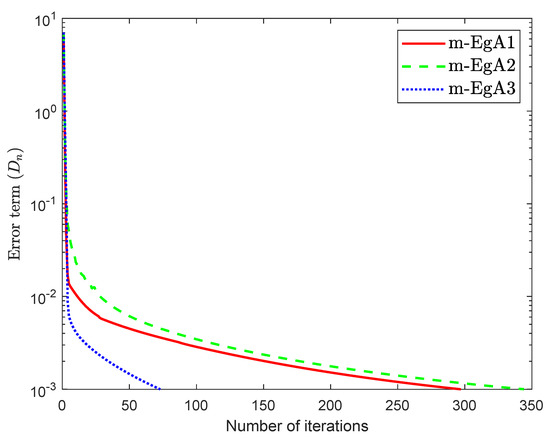

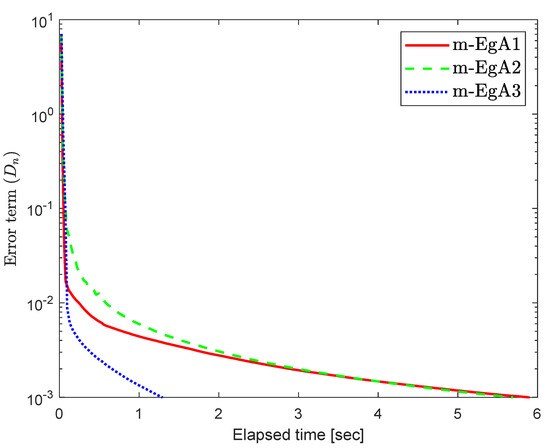

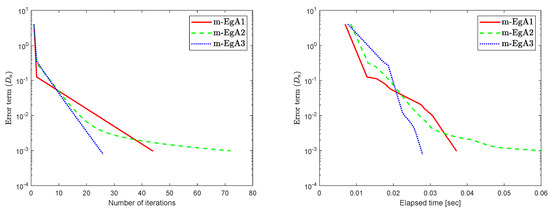

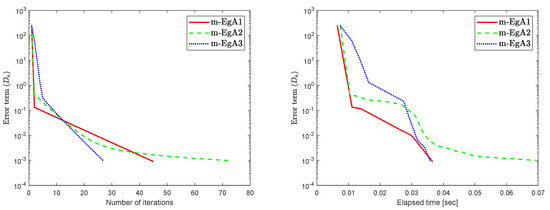

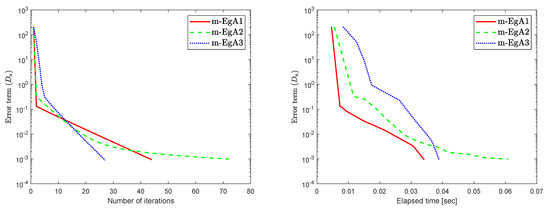

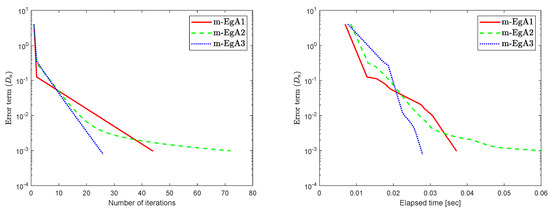

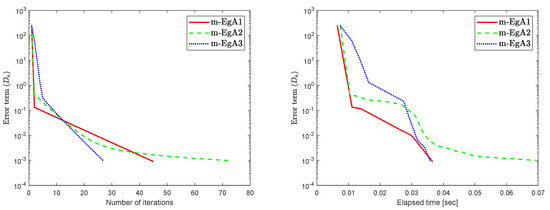

The experimental results are discussed in this section to illustrate the efficacy of our proposed Algorithm 1 (m-EgA3) compared to Algorithm 1 (m-EgA1) in [30] and Algorithm 2 (m-EgA2) in [30].

Example 1.

Consider the HpHard problem, which is taken from [39] and has been considered by many authors for numerical tests (see [40,41,42]), where $F : \mathbb{R}^m \to \mathbb{R}^m$ is an operator defined by $F(x) = Mx + q$ with $q \in \mathbb{R}^m$ and

$$M = N N^{T} + B + D,$$

where N is an $m \times m$ matrix, B is an $m \times m$ skew-symmetric matrix and D is an $m \times m$ positive definite diagonal matrix. The feasible set is defined by

$$\mathcal{C} = \{ x \in \mathbb{R}^m : Qx \le b \},$$

where Q is a matrix with $m$ columns and b is a nonnegative vector. It is clear that F is monotone and Lipschitz continuous with $L = \|M\|$. For $q = 0$, the solution set of the corresponding variational inequality is $\{0\}$. In this experiment, we take the initial point and Moreover, the control parameters and for Algorithm 1 (m-EgA1) in [30]; , and for Algorithm 2 (m-EgA2) in [30]; and for Algorithm 1 (m-EgA3). The numerical results of all methods are reported in Figures 1–8 and Table 1.
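A test instance of this kind can be generated along the following lines. This is a hedged sketch: it assumes the commonly used form $F(x) = Mx$ with $M = NN^{T} + B + D$, and the entry ranges (uniform in $(-2, 2)$ for N and for the matrix generating B, uniform in $(0.1, 2)$ for the diagonal of D), the dimension, the seed and the function name `make_hphard` are assumptions for illustration rather than the settings used in the experiments.

```python
import numpy as np


def make_hphard(m, seed=0):
    """Build M = N N^T + B + D with B skew-symmetric, D positive diagonal."""
    rng = np.random.default_rng(seed)
    N = rng.uniform(-2.0, 2.0, size=(m, m))
    A = rng.uniform(-2.0, 2.0, size=(m, m))
    B = A - A.T                                  # skew-symmetric part
    D = np.diag(rng.uniform(0.1, 2.0, size=m))   # positive definite diagonal
    return N @ N.T + B + D


m = 20
M = make_hphard(m)
F = lambda x: M @ x                              # case q = 0

# Monotonicity: the symmetric part N N^T + D is positive definite.
sym = 0.5 * (M + M.T)
min_eig = np.linalg.eigvalsh(sym).min()

# Lipschitz constant of the linear operator: spectral norm of M.
L = np.linalg.norm(M, 2)

print(min_eig, L)
```

Since $F(0) = 0$ and the origin satisfies $Qx \le b$ whenever b is nonnegative, the zero vector solves the variational inequality for $q = 0$, which gives a known reference solution for measuring the error of an iterative method.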

Figure 1.

Numerical behaviour of Algorithm 1 compared to Algorithm 1 in [30] and Algorithm 2 in [30] for Example 1, when .

Figure 2.

Numerical behaviour of Algorithm 1 compared to Algorithm 1 in [30] and Algorithm 2 in [30] for Example 1, when .

Figure 3.

Numerical behaviour of Algorithm 1 compared to Algorithm 1 in [30] and Algorithm 2 in [30] for Example 1, when .

Figure 4.

Numerical behaviour of Algorithm 1 compared to Algorithm 1 in [30] and Algorithm 2 in [30] for Example 1, when .

Figure 5.

Numerical behaviour of Algorithm 1 compared to Algorithm 1 in [30] and Algorithm 2 in [30] for Example 1, when .

Figure 6.

Numerical behaviour of Algorithm 1 compared to Algorithm 1 in [30] and Algorithm 2 in [30] for Example 1, when .

Figure 7.

Numerical behaviour of Algorithm 1 compared to Algorithm 1 in [30] and Algorithm 2 in [30] for Example 1, when .

Figure 8.

Numerical behaviour of Algorithm 1 compared to Algorithm 1 in [30] and Algorithm 2 in [30] for Example 1, when .

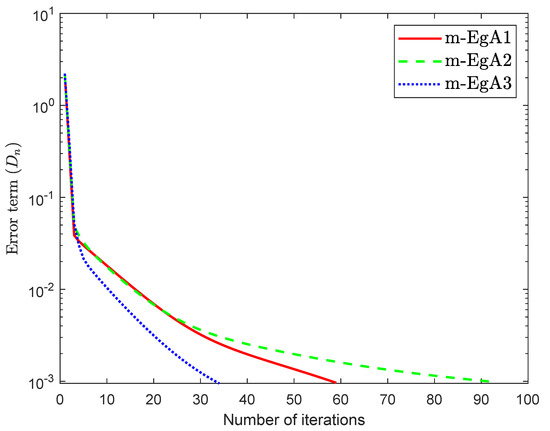

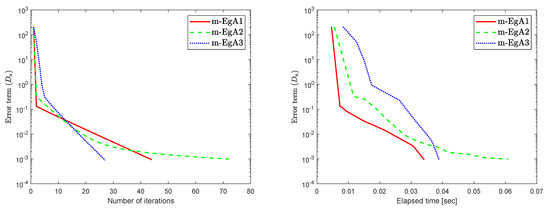

Example 2.

Assume that is a Hilbert space with an inner product

and the induced norm is

Let be the unit ball and is defined by

where

We can see in [41] that F is Lipschitz-continuous with Lipschitz constant and monotone. Figures 9–11 and Table 2 show the numerical results for different initial values and In this experiment, we take the different initial points and Moreover, the control parameters and for Algorithm 1 (m-EgA1) in [30]; , and for Algorithm 2 (m-EgA2) in [30]; and for Algorithm 1 (m-EgA3).

Figure 9.

Numerical behaviour of Algorithm 1 compared to Algorithm 1 in [30] and Algorithm 2 in [30] for Example 2, when .

Figure 10.

Numerical behaviour of Algorithm 1 compared to Algorithm 1 in [30] and Algorithm 2 in [30] for Example 2, when .

Figure 11.

Numerical behaviour of Algorithm 1 compared to Algorithm 1 in [30] and Algorithm 2 in [30] for Example 2, when .

Example 3.

Let be defined by

and is taken as

This problem was proposed in [43], where F is L-Lipschitz continuous with Lipschitz constant and monotone. In this experiment, we take the different initial points and Moreover, the control parameters and for Algorithm 1 (m-EgA1) in [30]; , and for Algorithm 2 (m-EgA2) in [30]; and for Algorithm 1 (m-EgA3). Table 3 reports the numerical results by using different tolerance and initial points.

Table 3.

Numerical behaviour of Algorithm 1 compared to Algorithm 1 in [30] and Algorithm 2 in [30] for Example 3 by using different initial points .

Author Contributions

Data curation, N.W.; formal analysis, M.Y.; funding acquisition, N.P. (Nuttapol Pakkaranang) and N.P. (Nattawut Pholasa); investigation, N.W., N.P. (Nuttapol Pakkaranang) and H.u.R.; methodology, H.u.R.; project administration, H.u.R., N.P. (Nattawut Pholasa) and M.Y.; resources, N.P. (Nattawut Pholasa); software, H.u.R.; supervision, H.u.R. and N.P. (Nuttapol Pakkaranang); Writing—original draft, N.W. and H.u.R.; Writing—review and editing, N.P. (Nuttapol Pakkaranang). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by School of Science, University of Phayao, Phayao, Thailand (Grant No. UoE 63002).

Acknowledgments

We are very grateful to the editor and the anonymous referees for their valuable and useful comments, which helped to improve the quality of this work. N. Wairojjana would like to thank Valaya Alongkorn Rajabhat University under the Royal Patronage (VRU). N. Pholasa was partially supported by the University of Phayao.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Stampacchia, G. Formes bilinéaires coercitives sur les ensembles convexes. Comptes Rendus Hebd. Des Seances De L Acad. Des Sci. 1964, 258, 4413.

2. Konnov, I.V. On systems of variational inequalities. Russ. Math. C/C-Izv.-Vyss. Uchebnye Zaved. Mat. 1997, 41, 77–86.

3. Kassay, G.; Kolumbán, J.; Páles, Z. On Nash stationary points. Publ. Math. 1999, 54, 267–279.

4. Kassay, G.; Kolumbán, J.; Páles, Z. Factorization of Minty and Stampacchia variational inequality systems. Eur. J. Oper. Res. 2002, 143, 377–389.

5. Kinderlehrer, D.; Stampacchia, G. An Introduction to Variational Inequalities and Their Applications; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2000.

6. Konnov, I. Equilibrium Models and Variational Inequalities; Elsevier: Amsterdam, The Netherlands, 2007; Volume 210.

7. Takahashi, W. Introduction to Nonlinear and Convex Analysis; Yokohama Publishers: Yokohama, Japan, 2009.

8. Korpelevich, G. The extragradient method for finding saddle points and other problems. Matecon 1976, 12, 747–756.

9. Noor, M.A. Some iterative methods for nonconvex variational inequalities. Comput. Math. Model. 2010, 21, 97–108.

10. Censor, Y.; Gibali, A.; Reich, S. The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 2010, 148, 318–335.

11. Censor, Y.; Gibali, A.; Reich, S. Extensions of Korpelevich's extragradient method for the variational inequality problem in Euclidean space. Optimization 2012, 61, 1119–1132.

12. Malitsky, Y.V.; Semenov, V.V. An Extragradient Algorithm for Monotone Variational Inequalities. Cybern. Syst. Anal. 2014, 50, 271–277.

13. Tseng, P. A Modified Forward-Backward Splitting Method for Maximal Monotone Mappings. SIAM J. Control Optim. 2000, 38, 431–446.

14. Moudafi, A. Viscosity Approximation Methods for Fixed-Points Problems. J. Math. Anal. Appl. 2000, 241, 46–55.

15. Zhang, L.; Fang, C.; Chen, S. An inertial subgradient-type method for solving single-valued variational inequalities and fixed point problems. Numer. Algorithms 2018, 79, 941–956.

16. Iusem, A.N.; Svaiter, B.F. A variant of Korpelevich's method for variational inequalities with a new search strategy. Optimization 1997, 42, 309–321.

17. Thong, D.V.; Hieu, D.V. Modified subgradient extragradient method for variational inequality problems. Numer. Algorithms 2017, 79, 597–610.

18. Thong, D.V.; Hieu, D.V. Weak and strong convergence theorems for variational inequality problems. Numer. Algorithms 2017, 78, 1045–1060.

19. Marino, G.; Scardamaglia, B.; Karapinar, E. Strong convergence theorem for strict pseudo-contractions in Hilbert spaces. J. Inequal. Appl. 2016, 2016.

20. Ur Rehman, H.; Kumam, P.; Cho, Y.J.; Yordsorn, P. Weak convergence of explicit extragradient algorithms for solving equilibirum problems. J. Inequal. Appl. 2019, 2019.

21. Ur Rehman, H.; Kumam, P.; Je Cho, Y.; Suleiman, Y.I.; Kumam, W. Modified Popov's explicit iterative algorithms for solving pseudomonotone equilibrium problems. Optim. Methods Softw. 2020, 1–32.

22. Ur Rehman, H.; Kumam, P.; Abubakar, A.B.; Cho, Y.J. The extragradient algorithm with inertial effects extended to equilibrium problems. Comput. Appl. Math. 2020, 39.

23. Ur Rehman, H.; Kumam, P.; Kumam, W.; Shutaywi, M.; Jirakitpuwapat, W. The inertial sub-gradient extra-gradient method for a class of pseudo-monotone equilibrium problems. Symmetry 2020, 12, 463.

24. Ur Rehman, H.; Kumam, P.; Argyros, I.K.; Deebani, W.; Kumam, W. Inertial extra-gradient method for solving a family of strongly pseudomonotone equilibrium problems in real Hilbert spaces with application in variational inequality problem. Symmetry 2020, 12, 503.

25. Ur Rehman, H.; Kumam, P.; Argyros, I.K.; Alreshidi, N.A.; Kumam, W.; Jirakitpuwapat, W. A self-adaptive extra-gradient methods for a family of pseudomonotone equilibrium programming with application in different classes of variational inequality problems. Symmetry 2020, 12, 523.

26. Ur Rehman, H.; Kumam, P.; Argyros, I.K.; Shutaywi, M.; Shah, Z. Optimization based methods for solving the equilibrium problems with applications in variational inequality problems and solution of Nash equilibrium models. Mathematics 2020, 8, 822.

27. Ur Rehman, H.; Kumam, P.; Shutaywi, M.; Alreshidi, N.A.; Kumam, W. Inertial optimization based two-step methods for solving equilibrium problems with applications in variational inequality problems and growth control equilibrium models. Energies 2020, 13, 3292.

28. Rehman, H.U.; Kumam, P.; Dong, Q.L.; Peng, Y.; Deebani, W. A new Popov's subgradient extragradient method for two classes of equilibrium programming in a real Hilbert space. Optimization 2020, 1–36.

29. Antipin, A.S. On a method for convex programs using a symmetrical modification of the Lagrange function. Ekon. I Mat. Metod. 1976, 12, 1164–1173.

30. Yang, J.; Liu, H.; Liu, Z. Modified subgradient extragradient algorithms for solving monotone variational inequalities. Optimization 2018, 67, 2247–2258.

31. Kraikaew, R.; Saejung, S. Strong Convergence of the Halpern Subgradient Extragradient Method for Solving Variational Inequalities in Hilbert Spaces. J. Optim. Theory Appl. 2013, 163, 399–412.

32. Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces, 2nd ed.; CMS Books in Mathematics; Springer International Publishing: New York, NY, USA, 2017.

33. Kreyszig, E. Introductory Functional Analysis with Applications, 1st ed.; Wiley Classics Library: Hoboken, NJ, USA, 1989.

34. Xu, H.K. Another control condition in an iterative method for nonexpansive mappings. Bull. Aust. Math. Soc. 2002, 65, 109–113.

35. Maingé, P.E. Strong Convergence of Projected Subgradient Methods for Nonsmooth and Nonstrictly Convex Minimization. Set-Valued Anal. 2008, 16, 899–912.

36. Takahashi, W. Nonlinear Functional Analysis; Yokohama Publisher: Yokohama, Japan, 2000.

37. Liu, Z.; Zeng, S.; Motreanu, D. Evolutionary problems driven by variational inequalities. J. Differ. Equ. 2016, 260, 6787–6799.

38. Liu, Z.; Migórski, S.; Zeng, S. Partial differential variational inequalities involving nonlocal boundary conditions in Banach spaces. J. Differ. Equ. 2017, 263, 3989–4006.

39. Harker, P.T.; Pang, J.S. for the Linear Complementarity Problem. Comput. Solut. Nonlinear Syst. Equ. 1990, 26, 265.

40. Solodov, M.V.; Svaiter, B.F. A New Projection Method for Variational Inequality Problems. SIAM J. Control Optim. 1999, 37, 765–776.

41. Van Hieu, D.; Anh, P.K.; Muu, L.D. Modified hybrid projection methods for finding common solutions to variational inequality problems. Comput. Optim. Appl. 2016, 66, 75–96.

42. Dong, Q.L.; Cho, Y.J.; Zhong, L.L.; Rassias, T.M. Inertial projection and contraction algorithms for variational inequalities. J. Glob. Optim. 2017, 70, 687–704.

43. Dong, Q.L.; Lu, Y.Y.; Yang, J. The extragradient algorithm with inertial effects for solving the variational inequality. Optimization 2016, 65, 2217–2226.

Publisher's Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).