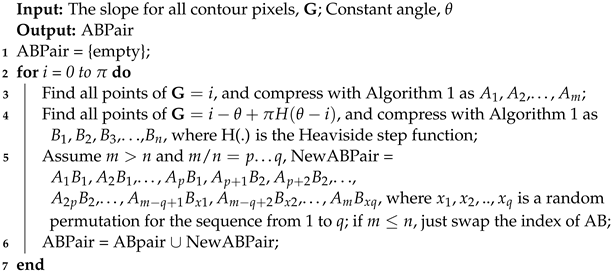

1. Introduction

Object positioning is crucial to many visual robotic systems, such as sorting robot arms, transfer robot arms, assembly robots, etc. These applications require not only the information of an object’s location, but also its orientation, scale, and the number of instances for operation. Although deep learning has shown impressive accuracy in shape recognition and localisation, in dealing with precise orientation and scale information, it suffers from poor performance without a comprehensive training dataset [

1]. On the other hand, many applications are frequently transferred to a totally new operation target. Deep learning methods require days or even months of training data collection to guarantee good performance in comprehensive situations, which is a serious interruption to normal production and cannot meet the online efficiency requirement. An alternative deterministic method is the Generalised Hough Transform (GHT), a shape detection method [

2,

3,

4,

5], which uses the contour of a template shape to build a codebook, known as the R-Table. In the detection step, votes are cast for the presence of the shape in the Hough space by looking up the R-Table. Since the R-Table is a one-to-many mapping between indices and votes, the shape can be identified through majority voting. Local information stored to generate the R-Table can be expanded into interest points [

6], image patches [

7], or regions [

8]. The GHT extends the Hough transform, which can only be used to detect shapes that have analytical models such as line segments, circles, ellipses, etc. [

9,

10,

11], to arbitrary shapes.

The conventional GHT is known [

3,

12] to suffer from two fatal limitations: (a) There is a high computational cost when the input shape is rotated or scaled. If the rotation or scaling of the target shape is different from the template, common practice is to transform the R-Table template to all its potential rotations and scaled sizes, with a certain step. Not only does this approach come at a high cost, but the detection resolution is limited to the step size. Some authors find their own rotation/scale invariant features [

13,

14,

15,

16,

17], but these methods are either too sensitive to noise or limited to shape constraints. Another category of approaches uses supervised training and matching for task-adapted R-Tables called Hough forests [

18]. The primary Hough forest cannot give the rotation information; some extended works [

19,

20] augmented the training dataset with rotated copies of the template or trained a classifier common to “all” orientations. (b) There is poor performance when the target shape is similar, but not identical to the input template; this is a common case in automatic assembly and packaging lines. For example, in the demo images shown in

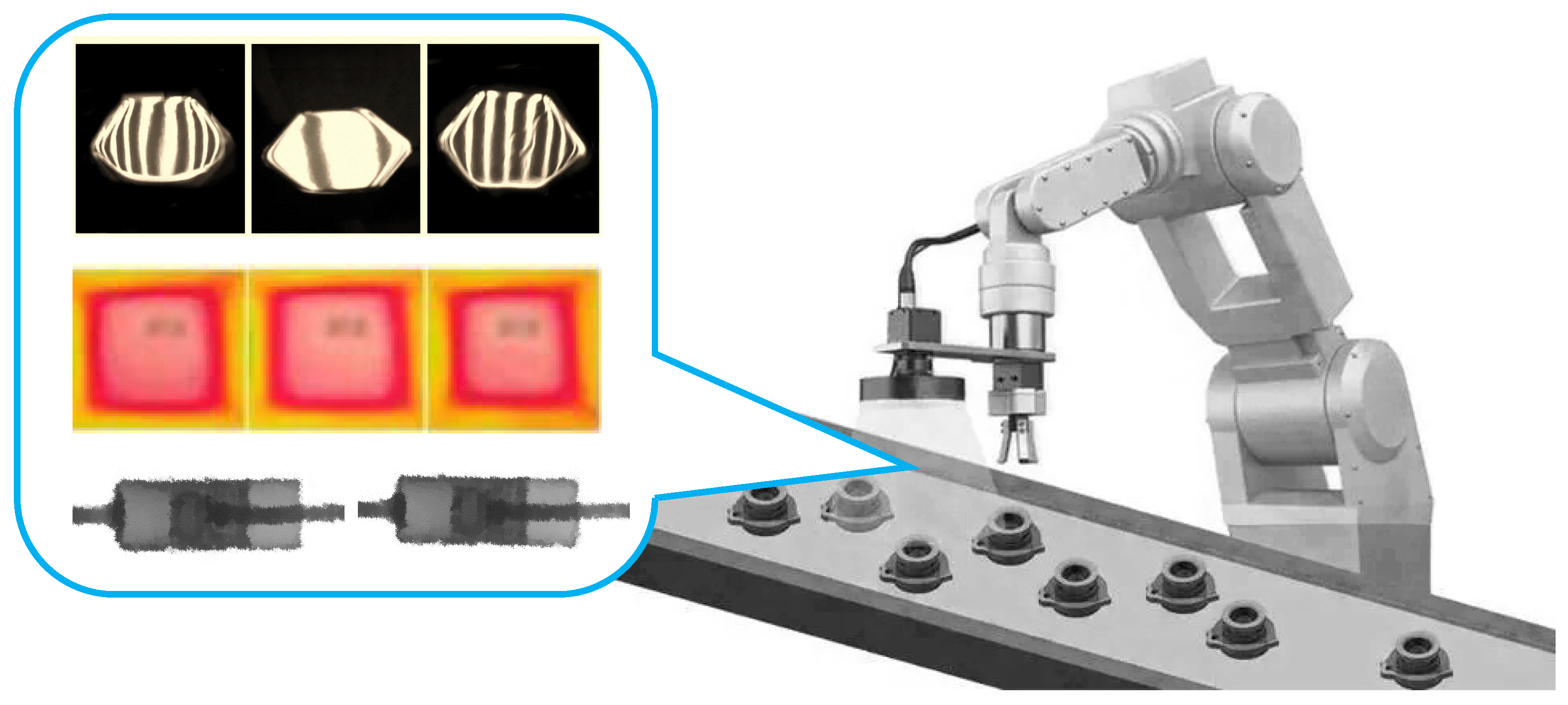

Figure 1, the contour of the target shapes is polluted when there is light interference from the environment or the components themselves have a high reflective rate or shape fluctuation. For precise manufacturing applications, other visual modalities such as infrared and X-ray are also common. These visual images are much more fuzzy than visible light images, which easily leads to shape distortion. The R-Table template in Hough methods is made up of standard shapes, and any distortion in the target will result in votes, which contaminate the ground truth in conventional methods. This phenomenon is exacerbated with the increase in the number of the one-to-many mappings in the R-Table.

In order to address both issues described above, we introduce a novel computer vision algorithm called the Random Verify GHT (RV-GHT). It has low complexity, yet achieves state-of-the-art accuracy. Our main contributions are as follows:

This paper provides one efficient and robust algorithm for arbitrary shape detection under data-scarce cases for visual robotics in automatic assembly lines. The proposed method can be trained with even just a single image to build the R-Table, while deep learning methods require a large dataset to cover different rotations/scalings, and such a dataset usually takes a long time to collect for new detection targets.

To handle the bottleneck of poor performance under shape distortion for the traditional GHT methods, a series of anti-distortion procedures was designed; the major one is the random verify process. The difficulty of the one-to-many mapping problem for traditional GHT is greatly reduced, and this leads to a great accuracy improvement.

To avoid the time-expensive iterative multi-dimensional voting process for traditional GHT methods, this paper designs one single-shot voting scheme to obtain the scaling factor, shape centre, and target rotation from 0 to simultaneously.

To effectively lower the search overhead in detection, a new R-Table is designed, which has the most concise form compared to the existing GHT methods. Besides, a new minimum point set selection algorithm that reduces the number of indices both in R-Table construction and in the detection process is designed.

The validation results illustrate a much better accuracy than other algorithms under shape distortion cases. The overall efficiency is similar to the state-of-the art method.

2. Related Work

Efficient GHT algorithms: The concept of the GHT was introduced in the 1980s [

2], but has been subject to a number of further refinements in the presence of rotation and scaling. The R-table typically records the position of all (or most) boundary points of a template shape relating to a fixed reference point [

12].

Several authors have put effort into improving the overall efficiency in the presence of scaling and rotation. One category is putting the scaling and rotation into the R-Table [

21], but this method costs too much storage space for the R-Table compared to methods that use invariant features. Commonly considered invariant features are invariant interest points [

22,

23], local curvature [

15] and pole–polar triangles [

24,

25,

26,

27,

28]. Methods based on interest points find invariant interest points on both the target and the template image [

29] using detectors such as SIFT, SURF, HoG, etc. The results of such algorithms are sensitive to background noise. Additionally, any outlying interest points or a general insufficiency of interest points decreases the overall performance. Another category of the GHT is based on curvature. It performs poorly for shapes that consist of line segments rather than curves.

A third category of the GHT is based on pole–polar triangles. These algorithms are more robust to noise and have been more popular in practical applications. Pole–polar-triangle-based methods can be categorized into pixel-based and non-pixel-based methods. Pixel-based methods calculate an index for every edge pixel. The advantage is that they are rich in index information, but are time-consuming. Ser et al. [

24] proposed a dual-point GHT (DP-GHT), which uses pixel pairs with the same slope to form the triangle. This method does not work for shapes that lack pixels with the same slope. Chau and Siu [

25] proposed an improved version of the DP-GHT called the GDP-GHT (also called the RG-GHT), which uses index pairs with a constant angle difference. The DP-GHT is a special case of the GDP-GHT. They further extended the algorithm to accommodate shapes with multiple constant angle differences [

28].

By contrast, non-pixel-based methods approximate the target shape using blocks [

30,

31,

32], lines or circles [

26], or polygons [

16]. This approach involves a fast index calculation at the cost of representation errors of the template shape. If the target is identical to the approximation, the algorithm cannot distinguish between the two. This approach may also lack sufficient indices to vote for the true configuration. Jeng and Tsai [

26] used half lines and circles in the R-Table. The detection process involves separate scaling-invariant and orientation-invariant cell incrementing strategies. This works well only when the rotational angle and scaling are in a fairly restricted range. Yang et al. [

16] proposed a polygon-invariant GHT (PI-GHT), which employs the local pole–polar triangle features based on polygonal approximation, using dominant points from the edges as the index. Due to limited invariant feature quantity, the results are too sensitive to the quality of the polygonal approximation, which means the algorithm cannot identify shapes that are the same as their approximation. Ulrich et al. [

27] introduced a hierarchical strategy that splits the image into tiles; each tile has a separate R-table. However, in the absence of invariant features, this is just an improved, but inefficient brute force search. Kimura et al. [

30] proposed a fast GHT (FGHT), which splits the image into small sub-blocks and approximates the slope of each block. In order to reduce the influence of noise on estimating block slope, Reference [

31] proposed a generalized fuzzy GHT (GFGHT). The overall process is much the same as the FGHT, with the exception of a fuzzy voting process, which gives a block pair’s centre point more weighting in the voting if it is closer to the centre of the shape. The serious limitation of the latter two methods is the increased complexity: if

N blocks satisfy the distance threshold, the index pair is

. To reduce the complexity of FGHT methods, Chiu et al. [

32] proposed a fast random GHT (FRGHT), which uses a random pair selection procedure in index searching and weighting. This method greatly reduces the index number, at the cost of reduced robustness.

In summary, the pixel-based subcategory of using the pole–polar triangle as an invariant feature has the richest index and, hence, is the most robust to noise. The state-of-the-art GDP-GHT methods and PI-GHT methods are not limited to shape constraints; however, the high number of one-to-many mappings from the index of the R-Table to a configuration increases the time complexity and the presence of incorrect votes. In the case of both pixel-based and block-based methods, the number of index pairs can be unacceptably high. The FRGHT reduces the number of indices required by the GFGHT at the cost of a sacrifice in robustness.

GHT algorithms that are robust to shape distortion: Few approaches deal with the challenge of shape distortion directly. The most popular methods rely on the fuzzy voting concept, by weighting each vote instead of simply accumulating every single one. For example, Xu et al. [

33] weighted the voting in the GHT by how important the corresponding ship part was. They gave the fore and the poop deck a higher weight because they are most different from the rest of the contour. The Generalised Fuzzy GHT [

31] (GFGHT) gives more weight to index pairs whose middle pixel is closer to the shape centre. The reference point concept in the GFGHT is a good effort to remove incorrect votes. However, these methods still only have a limited ability to deal with shape distortion.

4. Experiments and Discussion

The RV-GHT algorithm resolves the two fatal issues for the traditional GHT, i.e., the high computational cost in the presence of rotation or scaling and the failure to find target shapes that are similar, but not identical to the template shape. The experiments in this section validate our efficiency and accuracy claims.

Comparisons were made against the most efficient of the GHT algorithms in the literature, namely the PI-GHT, GDP-GHT, GFGHT, and FRGHT. The GDP-GHT uses edge pixel pairs with a constant slope difference to calculate the R-Table index and, for each pair, stores the displacement vector in the centre. To improve the overall performance, our algorithm uses the scaling factor obtained from , , and in order to remove some wrong projections for the GDP-GHT. The GFGHT, PI-GHT, and FRGHT divide the images into small blocks and store a reference point in the R-Table. The FRGHT randomly chooses a block as a seed and iteratively looks for a subsequent point that is further than a distance threshold and that has never been chosen before; the found point B in the last iteration is used as Point A in the next iteration; this index chain is also linked to the vote weighting.

4.1. Dataset

Existing publicly available datasets are too simple for efficiency and robustness testing because: (1) there is no real case shape distortion; (2) there is no interference from ambient light or reflective surfaces; (3) existing shapes are relatively regular, which goes against real applications. Our dataset was built to feature ten radically different shapes, shown in

Figure 6. Theses shapes were chosen to be relevant to a variety of potential real applications. For example,

S1 refers to a challenging, highly reflective object positioning;

S2 is a challenging case for accurate rotation and scaling detection due to the rich one-to-many mapping in the R-Table.

S8,

S9, and

S10 represents infrared, ultrasound, and X-ray inspection applications in manufacturing, respectively. Other source shapes are some common components’ positioning in automatic assembly and packaging line.

4.2. Accuracy Analysis

Even in the absence of shape distortion, the one-to-many mapping pollutes the voting, especially when rotation and scaling are also present. The corresponding template for source images in the test dataset are shown in

Figure 7 by Canny edge detection. Compared to deep learning methods, which require a huge dataset for pre-training, this method only requires a single typical image to build a template contour shape. Building a simple, yet unique template for each source shape is preferred, which helps to improve efficiency. Every shape is assigned a centre of coordinates; the position and dimension illustrated in

Figure 6 were set as templates. The R-Tables for each algorithm and every shape are generated before the detection process.

4.2.1. Qualitative Accuracy Performance

To have a first impression of the accuracy of the RV-GHT in dealing with one-to-many mapping, the first experiment involved the most ambiguity-prone shape,

S8 for shape centre and shape and

S2 for rotation and scaling. Both shapes were transformed with an anti-clockwise rotation of

and a scaling factor of

. The constant angle difference for our RV-GHT and the GDP-GHT were set as

; the block size of the GFGHT and FRGHT was set to 10 pixels. The distance threshold for the found pair was 10 and 60 pixels, respectively, for our RV-GHT and the GFGHT/FRGHT. Accumulation with one pixel for each vote is too sensitive to noise or distortion: any noise or distortion will cause position misalignment to affect the voting. A more robust scheme is voting with a Gaussian kernel, as shown in Equations (

9) and (

10). To make the vote fair, in our experiments, we allowed the use of the Gaussian kernel voting scheme, even though the various algorithms did not originally make use of such a scheme.

The voting results for the centre, rotation, and scaling for these two challenging shapes are shown in

Figure 8,

Figure 9 and

Figure 10, respectively. The proposed RV-GHT had the best peak voting. Due to shape

S8 having a serious one-to-many mapping, the GDP-GHT method found many centres that were nowhere near the ground truth, as shown in

Figure 8. The GFGHT and FRGHT had a cleaner vote space because the check point removed some wrong votes, but the resulting centres still did not intersect with the ground truth due to the representation error using blocks. Smaller blocks can improve the accuracy, but will greatly increase the index number.

Figure 9 and

Figure 10 show that the sidelobe for the RV-GHT was much narrower than its counterparts, although all methods detected the configuration correctly; this also means a better confidence level for our method. In the presence of background noise, the peak for the rotation and scaling votes of other methods can easily be defeated.

Figure 11 shows the final detection results of some typical shapes. The proposed method had the cleanest voting space (shown as the white cloud in

Figure 11), and the positioning results were accurate. Other methods were influenced by the one-to-many mapping seriously.

4.2.2. Qualitative Multi-Instance Detection Performance

Figure 12 shows our algorithm’s multi-instance detection ability. Multi-instance detection is not a key argument for this paper, so a sample image containing multiple instances of three shapes was made just to show that our proposed method can deal with it. The detection targets were

T1 and

T4, and

T7 was the interference. Only the results of the RV-GHT are given because other GHT methods do not contain a procedure to deal with multiple instances for different rotations or scalings. The outlier shapes generated almost no votes in the voting space, which shows that our method is robust to background noise. All targets were successfully detected even if they had different rotations and scaling factors.

4.2.3. Quantitative Analysis of Key Parameters for Dealing with Shape Distortion

This experiment analysed key parameters in the proposed RV-GHT for dealing with shape distortion. Shape distortion is one of the major challenges of GHT methods, i.e., when the target shape is similar, but not identical to the template, the performance becomes unacceptably poor. Our proposed method uses a series of procedures to deal with shape distortion including line intersection to find the centre, the random verify, and Gaussian kernel voting. The two key parameters that influence the performance are the back projection point number

and the expansion radius

R in

Figure 5.

Based on the same dataset shown in

Figure 6, different levels of salt and pepper noise were added manually to the template shapes.

Figure 13a illustrates some distorted versions for

S2. Under different salt and pepper noise densities (from 0 to 0.35 with a stepsize of 0.05), there were partial missing, shape distortion, and noise incurred. The

curves under such settings are given in

Figure 14 using Monte Carlo simulation. Every template shape in

Figure 6 was tested and transformed to a random rotation and scale, and every noise density was repeated 50 times to obtain the average performance.

is defined as the rate of total true positives (

s) and true negatives (

s) for all test samples (

N), i.e.,

. The true positives for centres, rotation, and scaling are defined, respectively, when the detected results are within 5 pixels, within

, and within a factor of

of the ground truth. These thresholds do not need to be strictly so; other values that are not too relaxed will not influence the conclusion. Users should set them according to the error tolerance of their task.

For centre, rotation, and scale accuracy, increasing improved the ability of compressing the one-to-many mapping, so . had slightly better accuracy than pixels, but will increase the computation time. As for R, showed the best performance. The principle behind this is that, when R is close to 0, the algorithm will give more weight to the identical part and use it to detect the shape. When R increases from 1, the shape distortion tolerance improved, but it decreased the ability of compressing the one-to-many mapping. Increasing R will not keep improving the tolerance for shape distortion. If or R approaches or becomes greater than half of the image size, the algorithm loses the ability of compressing the one-to-many mapping totally. However, if there is not an absolutely identical part in a real application, setting R as 5 to 10 is a better choice than 1 to allow shape distortion.

4.2.4. Quantitative Accuracy Performance under Shape Distortion

This section compares our proposed method with other counterparts under various levels of distortion. We set

and

according to the analysis in

Section 4.2.3. Some of the detection results are shown in

Figure 13b. Salt and pepper noise brought partial shape loss, shape distortion, and interference. Only the proposed RV-GHT handled all these cases successfully.

For a quantitative study, the more comprehensive evaluation metrics of

and

were used because

ignores the false negatives (

s) and false positives (

s), where

,

.

describes how much ground truth there is within the predicted truth, and

describes how much ground truth has been extracted. The

vs.

curves for centre, rotation, and scaling are shown in

Figure 15. The same Monte Carlo method and setting as in

Figure 14 were used.

and

R were set as 15 and 5, respectively.

Figure 15 shows that the proposed method outperformed all other state-of-the art methods greatly in the accuracy for centre, rotation, and scaling detection.

4.3. Efficiency Analysis

The time complexity of the traditional GHT method is , where the respective numbers refer to all possible rotations, scalings, and indices computed for the target image. Efficient GHT algorithms eliminate the iteration for rotations or scaling, preferably both, leading to a time complexity of around . To further analyse the complexity of efficient GHT methods, this paper recalls the processes that are common to these algorithms. During the detection process, after obtaining the index for the target image, the algorithm will look up this index in the R-Table. When one-to-many mappings are present in the R-Table, an index may correspond to multiple rows, each of which will correspond to a projection and will yield the calculation of a centre, rotation, and scaling triplet, followed by the accumulation for each parameter.

The calculation of these parameters can vary greatly with the particularities of each shape. This makes it nearly impossible to find a closed-form equation for the time complexity. However, from the description of our method, it should be clear that introducing the random verify will greatly reduce the accumulation number by downsampling and by removing some of the wrong voting. Other GHT methods count towards the accumulation of all the relevant rows in the R-Table. In our method, whilst the number of random verify points also increases the overall time, those extra back projections improve the accuracy of the results at the expense of a small amount of extra computation.

Our time efficiency tests were carried out on the dataset from

Section 4.1, on a laptop with a Core i7-7500u CPU. Each shape was considered in a series of rotational positions so as to obtain the average runtime. No scaling or shape distortion were applied. The overall runtimes are given in

Table 1. As expected, the random verify point number (

) caused the overall time to increase. The normalised runtime efficiency shared the same trend for all shapes, which had a gain factor of only around

. Although the proposed method was not the most time-efficient one, it still outperformed the others in most cases when

; this setting already showed a great improvement in accuracy, and

did not improve the overall efficiency much.

5. Conclusions

Efficient and robust component positioning is a basic need for visual robotic systems in automatic assembly and packaging lines. The proposed RV-GHT method addresses the challenges present in current GHT methods of low overall efficiency and poor accuracy when dealing with shape distortion.

Pixel pairs with a constant slope difference are used to index the R-Table. A new, reduced-size R-Table was proposed, which has only five columns. This is an improvement on the data storage, for example nine columns in the GFGHT or eight columns in the GDP-GHT. Its rows are further compressed by a minimum point set selection algorithm.

During the detection process, an index pair search algorithm obtains a minimum index without losing information. These schemes significantly reduce the search overhead without losing integrity. Subsequently, a line intersection method is used to find from the R-Table the primary centre and the corresponding rotation and scaling, which is more robust to shape distortion than traditional methods that simply use the displacement vectors. Then, a random back projection scheme assigns a weighting to each configuration. The random back projection significantly reduces the number of wrong votes, thereby enabling the overall algorithm to downsample the one-to-many mappings, obtaining a linear gain in efficiency.

The runtime was very low compared to the other GHT algorithms, placing our method in the state-of-the-art, simultaneously achieving a ground-breaking improvement in accuracy in distortion cases.

This paper targeted positioning tasks in visual robotic systems in automatic assembly and packaging lines, where the view angle is usually set at a constant or similar point. To benefit wider industrial applications, we will push this method to deal with 3D shape detection where the view angle becomes a key issue, but this is beyond the scope of this article.