1. Introduction

Until the introduction of Industry 4.0 principles, robotics in factories was mostly about machines replacing laborers who were tasked with non-ergonomic duties. The use of robots was almost entirely limited to the manipulation of heavy loads or of loads in uncomfortable positions, the execution of tasks made dangerous by toxic payloads or environments, and the execution of monotonous repetitive operations. Nowadays, thanks to the widespread adoption of collaborative robots (or cobots), the trend is shifting, especially in small and medium enterprises (SMEs) [1]. In fact, small batch production and a high level of product customization mean that these industrial entities still rely on the versatility of human labor. Cobots, for their part, could easily fit into this production paradigm because they have been specifically developed for coexistence with people.

In this scenario, the collaboration between the researchers of Università Politecnica delle Marche and five companies operating in the technology sector resulted in the creation of a laboratory which aims to disseminate the principles of Industry 4.0 in the local industrial fabric. Among them, collaborative robotics plays a crucial role, alongside the development of tools and strategies for Human Robot Collaboration (HRC) [2,3]. The aim is to achieve the seamless team dynamics of an all-human team; to this end, the last decade of research has focused on several aspects [4,5], ranging from the machines themselves (exteroception [6,7], collision avoidance [8,9], intrinsic safety of the mechanics [10]) to their ability to relate to people (interaction modalities [11,12], control-oriented perception such as gesture recognition [13] and gaze tracking [14,15]).

Many relevant recent studies have tried to assess some HRC-related aspects. Rusch et al. [16] quantified the beneficial impact, from both the ergonomic and the economic point of view, of a preliminary simulated design of HRC scenarios. Papetti et al. [17] proposed a quantitative approach to evaluate a simulated HRC application. Similar topics were analysed in [18], where the authors considered the opposing effects of cycle time and ergonomic risk on collaborative production lines. However, besides the economic impact, ergonomic analyses play a fundamental role in injury hazard management [19,20]. This becomes even more important considering the conclusions drawn by Dafflon et al. [21]: according to them, completely unmanned factories are not reasonably achievable, mainly because the required control systems would be infeasibly complex. Therefore, processes involving humans (Human In The Loop, HITL) will be implemented more and more frequently, justifying the effort of developing dedicated simulation environments and evaluation metrics for anthropocentric approaches [22,23,24,25].

However, the perception of operator commands in HITL tasks remains a widely investigated topic [26,27,28], since it still represents a critical point of the HRC process. Robustness, effectiveness, and above all safety are the keywords to be kept in mind. Thus, the interest aroused by strategies that do not involve contact between machines and workers is well understandable. Dinges et al. [29] considered the use of facial expressions to assess aggravated HRC scenarios; Yu et al. [30] used a multi-sensor approach to sense both postures and gestures to be interpreted as commands. In all of these examples, the key role played by artificial vision is evident, as also highlighted by the authors of [31].

In the following, some of these topics are exemplified by case studies carried out by the researchers at i-Labs. The aim is to give an impression of the ongoing research pursuing the principles of Industry 4.0 as regards the cooperation between humans and machines. In particular, in the first example a simulated environment is used to evaluate the ergonomics of an industrial work-cell. The second case study shows how a simple yet effective gesture recognition strategy can be developed to control tasks executed by a dual arm cobot. For the third case study, an obstacle avoidance control algorithm has been implemented on a collaborative robot to validate the effectiveness of the proposed law. The last case considers the use of virtual reality for testing obstacle avoidance control strategies applied to a redundant collaborative industrial manipulator.

2. Simulation Methods in HRC Design

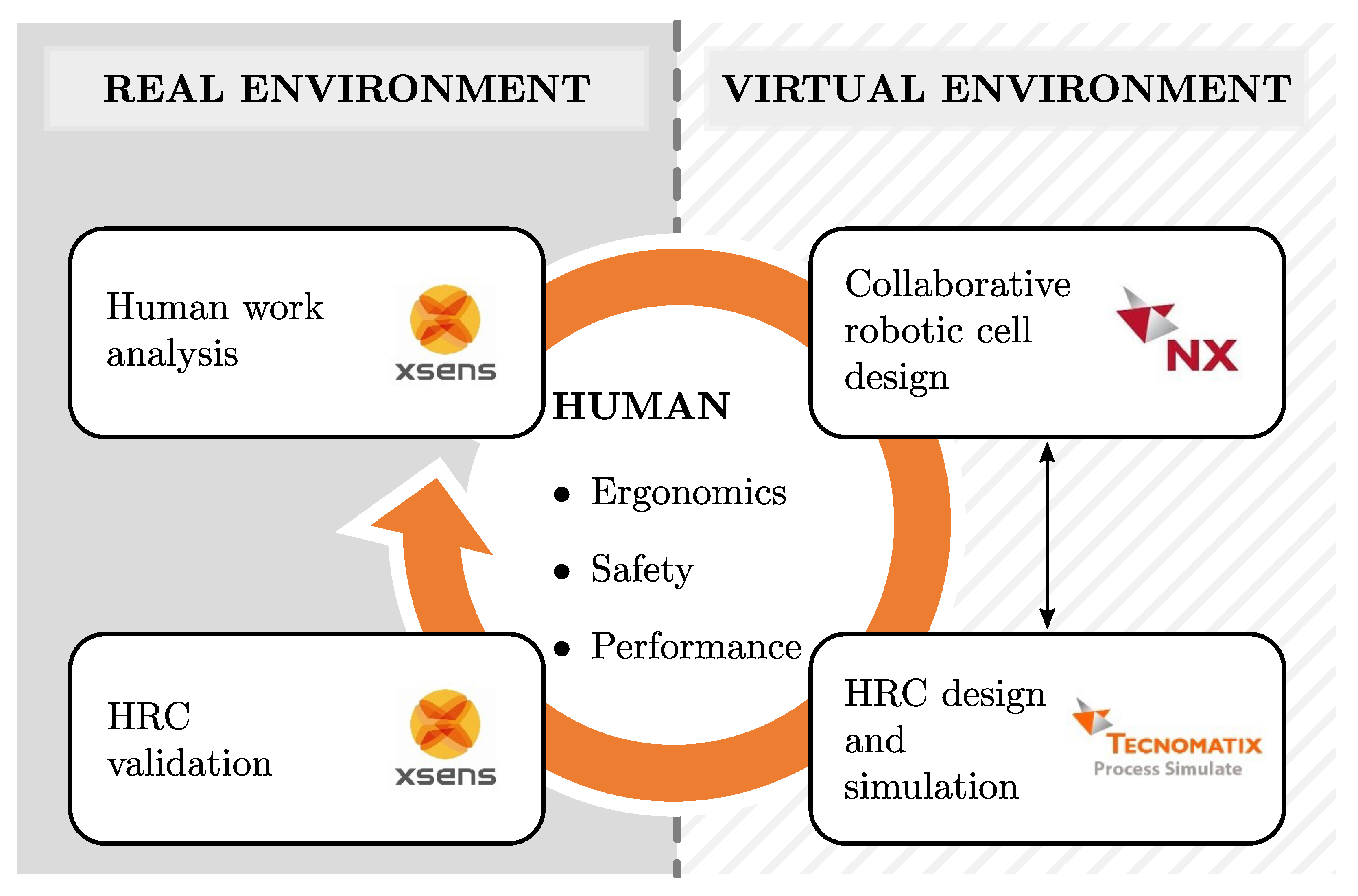

The method proposed in this section aims to support human-oriented HRC design by merging the enabling technologies of Industry 4.0 with the concepts of human ergonomics, safety, and performance. As shown in Figure 1, it starts with the human work analysis according to objective ergonomic assessments. The Xsens™ MVN inertial motion capture system is used to measure joint angles and detect awkward postures. The analysis results drive the collaborative robotic cell design, for example by allocating the non-ergonomic tasks to the robot. The preliminary concept is then virtually simulated to identify critical issues from different perspectives (technical constraints, ergonomics, safety, etc.) and improve the interaction modalities. In these two phases, NX (https://www.plm.automation.siemens.com/global/uk/products/nx/ (accessed on 1 January 2022)) and Tecnomatix Process Simulate (https://www.plm.automation.siemens.com/global/en/products/tecnomatix/ (accessed on 1 January 2022)) by Siemens are respectively used. The simulation contributes to the design optimization so that all the requirements are satisfied. The realization of the physical prototype allows the HRC experimentation, optimization, and validation before being implemented in the real production line. The experimentation phase includes a new ergonomic assessment to quantitatively estimate the potential benefits for the operator.

The industrial case study refers to the drawers’ assembly line of LUBE Industries, the major kitchen manufacturer in Italy. Currently, a traditional robot and a CNC machine make the first part of the production line automated. Then, three manual stations complete the drawer assembly.

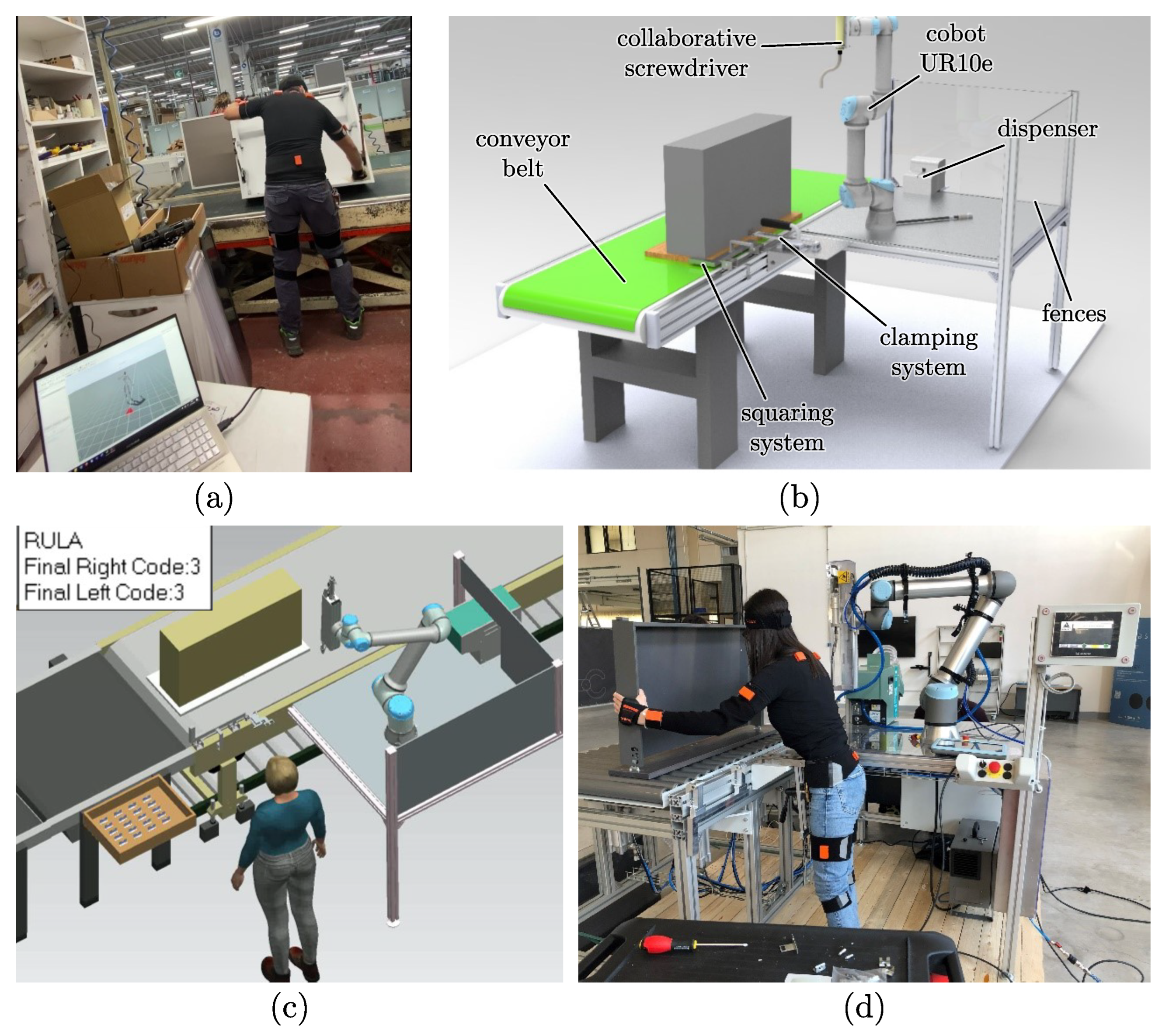

Figure 2a shows one of the involved operators, equipped with 18 Xsens MTw (Wireless Motion Tracker) sensors, performing the tasks. The analysis involved all the labourers qualified for that specific task, for a total of 6 operators (3 males, 3 females), all having average anthropometric characteristics. All the participants were informed about the goal of the study and the procedure, and they were asked to read and sign the consent form.

The human work analysis highlighted a medium-high ergonomic risk (asymmetrical posture and stereotypy) for the operator dedicated to screwing. Before the objective evaluation, this workstation was mistakenly considered the most ergonomic of the three. Accordingly, the HRC design was based on the need to assign the screwing task to the robot to preserve the operator’s health.

Figure 2b shows the preliminary concept of the workstation, which includes the following elements: a conveyor belt; a Universal Robots UR10e; a collaborative screwdriver with a flexible extension; an aluminum structure to which the robot is fixed by four M9 holes; an L-shaped squaring system; a clamping system; a dispenser for feeding the screwdriver, easily and safely accessible by the operator to reload it; and two plexiglass fences to reduce the risk of collisions.

The simulation highlighted several critical issues to be solved. To overcome some safety and technical problems, a new type of screwdriver was designed and implemented, which provides a maximum torque of 12 Nm and weighs 2.3 kg. To improve the line balancing, the first two manual stations were aggregated in the cooperative robotic cell. However, the ergonomics simulation (Figure 2c) highlighted potential ergonomic risks for the operator during the drawer rotation.

Then, an idle rotating roller was inserted so that the operator can manually turn the conveyor belt comfortably and safely. The simulation also showed that the new cooperative cell increased the current takt time. Consequently, an autoloader (FM-503H) was introduced to avoid unnecessary cobot movements. It consists of a tilting blade (suction) that loads the screws from a collection basket. Finally, the human-robot interaction has been improved by introducing the Smart Robots (http://smartrobots.it/product/ (accessed on 1 January 2022)) vision system, which monitors the area of cooperation and enables the robot program according to human gestural commands. It allows the management of a high number of product variants.

In the new HRC workstation (Figure 2d), installed at i-Labs, negligible ergonomic risks were observed according to the RULA (Rapid Upper Limb Assessment) and OCRA methods. As summarized in Table 1, the Pilz Hazard Rating (PHR), which is calculated by (1), was also reduced to acceptable values.

$$PHR = DPH \times PO \times PA \times FE \qquad (1)$$

where:

$DPH$ is the Degree of Possible Harm

$PO$ is the Probability of Occurrence

$PA$ is the Possibility of Avoidance

$FE$ is the Frequency and/or Duration of Exposure
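As a small illustration of how the rating combines its factors, the sketch below assumes that the PHR is simply the product of the four scores listed above. The function name and all numeric scores are hypothetical and serve only to show the arithmetic; they are not the values reported in Table 1.

```python
def pilz_hazard_rating(dph: float, po: float, pa: float, fe: float) -> float:
    """Return the hazard rating as the product of the four factor scores.

    dph: Degree of Possible Harm
    po:  Probability of Occurrence
    pa:  Possibility of Avoidance
    fe:  Frequency and/or Duration of Exposure
    """
    return dph * po * pa * fe


# Hypothetical scores for one hazard before and after a redesign
# (illustrative numbers only, not taken from the study).
before = pilz_hazard_rating(dph=2.0, po=5.0, pa=2.5, fe=4.0)
after = pilz_hazard_rating(dph=2.0, po=1.0, pa=1.0, fe=4.0)
print(f"PHR before: {before}, PHR after: {after}")
```

Because the factors multiply, lowering the probability of occurrence or improving the possibility of avoidance reduces the rating even when the potential harm itself is unchanged.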

As shown, the new production line respects the cycle time (57 s) imposed by the CNC machine and the introduction of a collaborative robot led to better line balancing (). The lab test was aimed at recreating the actual working conditions, therefore both a male and a female volunteer (actually two researchers of the i-Labs group) have been asked to take part in the investigation. Also, in this case, their anthropometric characteristics can be considered average.

3. HITL: Gesture Recognition Examples

In the search for effective ways to increase collaboration between workers and robots, new types of communication can be considered. Voice commands, for example, are typical in many systems today, such as vehicles, home automation systems, assistive robotics, etc., but they are not suitable for the industrial environment, where noise and the coexistence of multiple workers in a shared space make their implementation impossible. On the other hand, image sensors, such as standard or RGBD cameras, can be exploited to capture workers’ gestures, which can then be interpreted by artificial intelligence algorithms to generate a command to be sent to the robot.

A distinction should be made between applications that require the use of wearable devices and those that rely on direct hand and body recognition. Regarding the former type, the most common technology for hand gesture recognition is the glove device. As part of their work at i-Labs, the authors developed the Robotely software suite for the specific purpose of overcoming the need to wear gloves by adopting a hand recognition system based on a machine learning approach [32]. As shown in Figure 3, the software exploits the MediaPipe libraries to recognize 20 landmarks of the hand and identify up to three different hand configurations, which can be converted into commands and sent to any robot or system. A specific test case was carried out at i-Labs: a PC with a standard webcam was used to run Robotely and was connected to a Universal Robots UR10e cobot in order to sequence some operations between the operator and the robot.
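To give an idea of how hand landmarks can be turned into discrete commands, the following sketch uses a simple geometric rule (a finger counts as extended when its tip is farther from the wrist than its middle joint). This is a stand-in heuristic for illustration, not the machine learning approach used in Robotely. The landmark indices follow the numbering of MediaPipe's hand model (0 for the wrist, up to 20 for the little finger tip); the command names and the synthetic hand generator are hypothetical.

```python
import math

WRIST = 0
# (PIP joint index, fingertip index) for each finger, MediaPipe hand numbering.
FINGERS = {"index": (6, 8), "middle": (10, 12), "ring": (14, 16), "pinky": (18, 20)}


def extended_fingers(landmarks):
    """Return the fingers whose tip lies farther from the wrist than its PIP joint."""
    wrist = landmarks[WRIST]
    return [name for name, (pip, tip) in FINGERS.items()
            if math.dist(landmarks[tip], wrist) > math.dist(landmarks[pip], wrist)]


def classify(landmarks):
    """Map a landmark list to one of three hypothetical commands, or None."""
    count = len(extended_fingers(landmarks))
    if count == 4:
        return "STOP"        # open hand
    if count == 0:
        return "RESUME"      # closed fist
    if count == 1:
        return "NEXT_STEP"   # single raised finger
    return None              # any other configuration is ignored


def synthetic_hand(open_fingers):
    """Build 21 (x, y) points for testing; not real detector output."""
    points = [(0.0, 0.0)] * 21
    for column, (name, (pip, tip)) in enumerate(FINGERS.items()):
        x = 0.1 * column
        points[pip] = (x, 0.6)
        points[tip] = (x, 1.0 if name in open_fingers else 0.3)
    return points


print(classify(synthetic_hand({"index", "middle", "ring", "pinky"})))
print(classify(synthetic_hand(set())))
print(classify(synthetic_hand({"index"})))
```

Returning `None` for unrecognized configurations keeps ambiguous poses from being sent to the robot as commands.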

A second example is shown in Figure 4, where the commercial Smart Robots system is integrated with the ABB YuMi cobot. Smart Robots is a programmable system based on RGBD sensors directly integrated into the robot control software. Again, three different hand gestures can be recognized and converted into commands, such as stopping the robot (Figure 4a), calling a program variation such as a quality inspection (Figure 4b), and opening the gripper for part rejection (Figure 4c). In addition, it is possible to define areas of the workspace corresponding to the activation of different program sequences: when the operator’s hand enters a predefined area of the scene (once, or typically twice to avoid inadvertent activation) a program state is changed. In Figure 4d, for example, the operator covers a letter drawn on the table with his hand to order the robot to compose that letter with LEGO bricks.
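The area-based activation described above, with a double entry used to avoid inadvertent triggering, can be sketched as a small state machine. The class below is a hypothetical illustration and does not reproduce the Smart Robots software: the rectangular zone, the time window, and all names are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class ZoneTrigger:
    """Fire once the hand has entered a rectangular zone `required_entries`
    times within `window_s` seconds (assumed activation rule)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    required_entries: int = 2
    window_s: float = 3.0
    _inside: bool = False
    _entry_times: list = field(default_factory=list)

    def update(self, x: float, y: float, t: float) -> bool:
        """Feed one hand position sample at time t; return True on activation."""
        inside = self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max
        entered = inside and not self._inside  # rising edge: outside -> inside
        self._inside = inside
        if not entered:
            return False
        # Forget entries that are too old, then record the new one.
        self._entry_times = [s for s in self._entry_times if t - s <= self.window_s]
        self._entry_times.append(t)
        if len(self._entry_times) >= self.required_entries:
            self._entry_times.clear()
            return True
        return False


zone = ZoneTrigger(x_min=0.0, x_max=1.0, y_min=0.0, y_max=1.0)
samples = [(0.5, 0.5, 0.0), (2.0, 2.0, 0.5), (0.5, 0.5, 1.0)]
print([zone.update(x, y, t) for x, y, t in samples])
```

Counting entries rather than samples means that a hand resting inside the area is registered only once, so a single accidental pass does not change the program state.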

4. Safe Autonomous Motions: Collision Avoidance Strategies

This section recalls the obstacle avoidance algorithm introduced by the authors in [8,9] and presents some experimental results. The research in this field is presented here as an advancement in the direction of safe human-robot coexistence. Also, the resulting control algorithm is the basis of the test case presented in the subsequent section, which makes use of virtual reality tools to test and calibrate the empirical parameters introduced by the algorithm.

To start with, consider the velocity kinematics of a generic manipulator with a number of actuators greater than or equal to 6 (depending on its degree of redundancy). In matrix form, the velocity kinematics of such a system can be written as $\dot{x} = J\dot{q}$, where $J$ is the $6 \times n$ arm Jacobian (with $n$ the number of joints composing the kinematic chain of the manipulator, $n \geq 6$) and $\dot{q}$ is the vector of joint rates. In such terms, the vector of variables is $q = [q_1, \ldots, q_n]^T$, where $q_i$ are the $n$ joint variables of the arm kinematic chain. With such notation, the Jacobian $J$ depends on the structure of the manipulator. The inverse of the Jacobian $J^{*}$ of the redundant system can be obtained as a damped inverse:

$$J^{*} = J^{T}\left(J J^{T} + \lambda^{2} I\right)^{-1} \qquad (2)$$

where $\lambda$ is the damping factor, modulated as a function of the smallest singular value of the Jacobian matrix (the interested reader is referred to [8,9] for further details). The inverse $J^{*}$ can be used to compute the joint velocities needed to perform a given trajectory with a Closed-Loop Inverse Kinematic (CLIK) approach:

$$\dot{q} = J^{*}\left(\dot{x} + K e\right) \qquad (3)$$

where $\dot{x}$ is the vector of planned velocities, $K$ is a gain matrix (usually diagonal) to be tuned on the application, and $e$ is a vector of orientation and position errors ($e_o$ and $e_p$), defined as:

$$e = \begin{bmatrix} e_p \\ e_o \end{bmatrix} = \begin{bmatrix} p_d - p \\ \frac{1}{2}\left(\hat{i} \times \hat{i}_d + \hat{j} \times \hat{j}_d + \hat{k} \times \hat{k}_d\right) \end{bmatrix} \qquad (4)$$

In (4) the subscript $d$ stands for desired planned variable, $p$ is the position of the end-effector, while $\hat{i}$, $\hat{j}$ and $\hat{k}$ are the unit vectors of the end-effector reference frame.
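A minimal numerical sketch of a damped-inverse CLIK loop is given below. It is deliberately simplified with respect to the scheme described above: the arm is a hypothetical planar manipulator with three unit-length links (one degree of redundancy for a 2-D positioning task), only the position error is controlled, the planned velocity is zero, and the damping factor is kept constant instead of being modulated on the smallest singular value. All names and numeric values are assumptions made for illustration.

```python
import math

LINKS = (1.0, 1.0, 1.0)  # link lengths of a hypothetical planar arm


def forward(q):
    """End-effector position (x, y) of the planar arm."""
    x = y = angle = 0.0
    for length, qi in zip(LINKS, q):
        angle += qi
        x += length * math.cos(angle)
        y += length * math.sin(angle)
    return x, y


def jacobian(q):
    """2 x 3 positional Jacobian, returned as its two rows."""
    row_x, row_y = [0.0] * 3, [0.0] * 3
    for j in range(3):
        angle = sum(q[:j])
        for length, qi in zip(LINKS[j:], q[j:]):
            angle += qi
            row_x[j] -= length * math.sin(angle)
            row_y[j] += length * math.cos(angle)
    return row_x, row_y


def damped_joint_rates(q, v, lam=0.05):
    """Joint rates J^T (J J^T + lam^2 I)^-1 v for a 2-D task velocity v."""
    jx, jy = jacobian(q)
    a = sum(c * c for c in jx) + lam ** 2      # entries of J J^T + lam^2 I
    b = sum(cx * cy for cx, cy in zip(jx, jy))
    d = sum(c * c for c in jy) + lam ** 2
    det = a * d - b * b
    wx = (d * v[0] - b * v[1]) / det           # w = (J J^T + lam^2 I)^-1 v
    wy = (a * v[1] - b * v[0]) / det
    return [cx * wx + cy * wy for cx, cy in zip(jx, jy)]


def clik(q, target, gain=5.0, dt=0.01, steps=1000):
    """Drive the end-effector to a fixed target with the closed-loop law."""
    q = list(q)
    for _ in range(steps):
        x, y = forward(q)
        e = (target[0] - x, target[1] - y)     # position error only
        rates = damped_joint_rates(q, (gain * e[0], gain * e[1]))
        q = [qi + dt * r for qi, r in zip(q, rates)]
    return q


q_final = clik([0.3, 0.4, 0.5], target=(1.5, 1.5))
error = math.dist(forward(q_final), (1.5, 1.5))
print(f"final position error: {error:.2e}")
```

The damping term keeps the 2 x 2 matrix invertible even close to singular configurations, at the price of a slower error decay in the poorly conditioned directions.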

The collision avoidance strategy is then implemented as a further velocity component (to be added to the trajectory joint velocities) capable of moving the end-effector and the other parts of the robot away from a given obstacle. Such a contribution is a function of the distance between the obstacle and each body of the robotic system. To this purpose, each body is represented by means of two control points, $A$ and $B$ (see Figure 5). Calling $C$ the centre of a generic obstacle, the distance between it and the segment $AB$ is computed differently in three scenarios:

- $\vec{AC} \cdot \vec{AB} \leq 0$, Figure 5a: in this case the obstacle is closer to the tip $A$ than to any other point of $AB$; the distance $d$ between the obstacle centre and the line $AB$ coincides with the length of $AC$, i.e., $d = \|\vec{AC}\|$.
- $0 < \vec{AC} \cdot \vec{AB} < \|\vec{AB}\|^{2}$, Figure 5b: the minimum distance $d$ is reached between points $A$ and $B$. In this case, it is $d = \|\vec{AC} \times \vec{AB}\| / \|\vec{AB}\|$.
- $\vec{AC} \cdot \vec{AB} \geq \|\vec{AB}\|^{2}$, Figure 5c: point $B$ is closer to the obstacle than any other point, therefore $d = \|\vec{BC}\|$.

It is worth remarking that for this paper only spherical obstacles were considered, although similar approaches can be developed for objects of any shape starting from the distance primitives here defined.
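The three distance scenarios can be handled by a single routine that projects the obstacle centre onto the segment and clamps the projection to its end points. The sketch below assumes a non-degenerate segment; the function name and the argument names `a`, `b` (control points) and `c` (obstacle centre) are chosen here for illustration. Besides the distance, it returns the normalised position of the closest point along the segment, which is 0 at the first control point and 1 at the second.

```python
import math


def segment_distance(a, b, c):
    """Return (d, k): distance from point c to segment ab, and the normalised
    position k in [0, 1] of the closest point along ab (0 at a, 1 at b)."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ac = [ci - ai for ai, ci in zip(a, c)]
    # Projection of ac on ab, as a fraction of the segment length.
    t = sum(u * v for u, v in zip(ac, ab)) / sum(u * u for u in ab)
    k = min(1.0, max(0.0, t))  # clamp: k = 0 -> a is closest, k = 1 -> b is closest
    closest = [ai + k * u for ai, u in zip(a, ab)]
    return math.dist(c, closest), k


a, b = (0.0, 0.0, 0.0), (2.0, 0.0, 0.0)
print(segment_distance(a, b, (-1.0, 1.0, 0.0)))  # obstacle beyond the first control point
print(segment_distance(a, b, (1.0, 3.0, 0.0)))   # closest point inside the segment
print(segment_distance(a, b, (4.0, 0.0, 0.0)))   # obstacle beyond the second control point
```

For a spherical obstacle, the clearance between its surface and the segment is obtained by subtracting the sphere radius from the returned distance.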

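Now the set of repulsive velocities $\dot{q}_{r,i}$ for the $i$-th body of the system, described by the control points $A$ and $B$, can be introduced as:

$$\dot{q}_{r,i} = a_v\, v_{rep} \left[(1-k)\, J_A^{*} + k\, J_B^{*}\right] \hat{u} \qquad (5)$$

where:

- $a_v$ is an activation parameter, function of $d$, of the obstacle dimension $r_o$ and of the length $r$ which characterizes the body $i$ (as represented in Figure 5, the dimension $r$ defines a region around the line $AB$ given by the intersection of two spheres centred in $A$ and $B$, and a cylinder aligned with $AB$, of radius $r$). The activation parameter can be any function such that $a_v = 0$ if $d > r + r_o$, and $a_v = 1$ if $d \leq r + r_o$. Actually, such a transition can be made smoother by the adoption of suitable functions (polynomials, logarithmic, etc.).
- $v_{rep}$ is a customized scalar representing the module of the repulsive velocity provided by the obstacle to the body, applied along the unit vector $\hat{u}$ directed from the obstacle towards the body.
- $k$ is a parameter which depends on the three cases of Figure 5: in the first case $k = 0$ so that only the point $A$ influences $\dot{q}_{r,i}$; in the second case both $A$ and $B$ are considered proportionally to their distance from $P$, the point of $AB$ closest to the obstacle, thus $k = \|\vec{AP}\| / \|\vec{AB}\|$; at last, in the third case $k = 1$ so that only the point $B$ is relevant to $\dot{q}_{r,i}$.
- $J_A^{*}$ and $J_B^{*}$ are the damped inverses of the Jacobian matrices of points $A$ and $B$.

At this point, the CLIK control law (3) can be completed as:

$$\dot{q} = J^{*}\left(\dot{x} + K e\right) + \sum_{i=1}^{m} \dot{q}_{r,i} \qquad (6)$$

$m$ being the number of segments used to describe the manipulator.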
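The smoother activation mentioned above can be sketched, for example, with a cubic polynomial blend between an inner and an outer radius. The two radii, the function names, and the convention that a weight of 0 selects the first control point are assumptions of this example, not parameters taken from the algorithm in [8,9].

```python
def activation(d: float, r_inner: float, r_outer: float) -> float:
    """Go smoothly from 1 (d <= r_inner) to 0 (d >= r_outer) with a cubic blend."""
    if d <= r_inner:
        return 1.0
    if d >= r_outer:
        return 0.0
    s = (r_outer - d) / (r_outer - r_inner)  # 1 at the inner radius, 0 at the outer one
    return s * s * (3.0 - 2.0 * s)           # cubic "smoothstep" polynomial


def blend_joint_rates(rates_a, rates_b, k):
    """Weight the joint-rate contributions of the two control points:
    k = 0 keeps only the first one, k = 1 keeps only the second one."""
    return [(1.0 - k) * ra + k * rb for ra, rb in zip(rates_a, rates_b)]


# The repulsive contribution fades in as the obstacle approaches the body.
for distance in (0.50, 0.35, 0.30, 0.25, 0.10):
    print(distance, round(activation(distance, r_inner=0.2, r_outer=0.4), 3))

print(blend_joint_rates([1.0, 0.0], [0.0, 1.0], k=0.25))
```

A continuous activation avoids the step in commanded joint rates that a hard threshold would produce when the obstacle crosses the boundary of the safety region.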

At last, some experimental results are shown to demonstrate the consistency of the obstacle avoidance strategy. In more detail, the CLIK control law of the KUKA KMR iiwa (a 7-axis redundant collaborative manipulator) was built using 6 different segments, while the two obstacles were modelled as spheres. As shown by the test rig image (Figure 6), the robot carries a collaborative gripper by Schunk, which is also considered in the definition of the robot segments (cf. Figure 7). The results are presented in terms of joint positions and rates, and pose and velocity of the robot end-effector (EE). Each variable is compared with the values collected during the execution of the same trajectory without obstacles in the robot workspace. The robot was controlled via Matlab with a cycle frequency of

Hz. It is worth remarking that the visualization provided in Figure 7 is a plot built on experimental data. The trajectory under investigation is a simple linear motion with constant orientation of the EE. Two obstacles were placed in the robot workspace, one directly on the planned trajectory and the other in the space occupied by the robot non-terminal bodies (both are shown in Figure 7). The results show that the control law is able to follow the given trajectory even in the presence of the two obstacles. As expected, the obstacle on the planned trajectory (met approximately in the time span 2–4.5 s, gray area in the graphs of Figure 7) prevents the robot EE from maintaining the desired pose: the control law dodges it while deviating the bare minimum from the planned motion. Regarding the obstacle close to the non-terminal bodies (met in the span

–

s, purple areas), the control law exploits the robot redundancy to avoid the contact among the robot elbow and the obstacle while maintaining the EE on the right trajectory and orientation.

5. Virtual Reality Based Design Methods

In this section a virtual implementation of the obstacle avoidance control strategy is presented. Besides the experimentation previously shown, the algorithm was tested in advance in a simulated interactive scenario to demonstrate the feasibility of the control law and to tune the parameters used to optimize the robot response to a dynamic obstacle. Such an approach, schematically shown in Figure 8, represents an efficient paradigm for the design and virtual testing of HITL applications. Starting in a simulated environment, the interaction workflow can be designed considering also the presence of labourers and their impact on production. A further step can be added to optimize the interaction between humans and robots by exploiting virtual reality. The VR tools make it unnecessary to model the labourers’ behaviour, since their role is played by actual humans, who bring into the design process not only the expertise of the final users of the production line, but also the randomness of human actions and movements, with further advantages for the design of safety features.

For this purpose, the idea was to create a virtual reality (VR) application in which a real obstacle is inserted in the simulation loop, while the robot is still completely virtual. The advantages of this approach are many: firstly, the algorithms can be modified and fine-tuned while ensuring the safety of the operators; secondly, these changes can be made easily and quickly, reducing the development time of the real application.

The control architecture used for the VR application is shown in Figure 9, while Figure 10 shows the experimental rig. The core of the system is the VR engine, running on a standard PC and developed with the SteamVR development suite, with Unreal Engine 4 used to model the kinematics of the KUKA robot. A classic HTC VR set is used to equip the operator: the HMD provides an immersive three-dimensional representation of the workspace, which in this case is simply the robot mounted on a stand; the controller, held by the operator, is rigidly connected to a virtual obstacle, shaped like a sphere, which can be moved in the virtual space to interfere with the robot’s motion. The control of the robot is executed by the same PC in a parallel thread developed in Matlab, with a frame rate set to a typical value for communication protocols with robots (e.g.,

or

, depending on the manufacturer). The frame rate of the VR engine is set to

, which is the standard display refresh rate in VR applications. The communication between the two threads is realized via the TCP/IP protocol. A classic pick-and-place task is simulated; the robot controller sends joint rotations to the VR engine at each time step, similarly to what is done in real robotic systems. The trajectory of the robot is updated in real time by the collision avoidance algorithm if the obstacle enters a safety region of the manipulator. The position of the obstacle is known once the VR engine reads the coordinates of the controller held by the operator and sends this information to the robot controller.
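The data exchanged at each time step can be pictured as two small fixed-size messages: the joint rotations sent by the robot controller and the obstacle coordinates returned by the VR engine. The sketch below shows one possible binary encoding for such messages before they are written to a TCP socket. The message layout (little-endian doubles, seven joints, three coordinates) and all function names are assumptions made for illustration; the actual format used between Matlab and the VR engine is not specified here.

```python
import struct

JOINT_FORMAT = "<7d"     # 7 joint rotations as little-endian doubles (assumed layout)
OBSTACLE_FORMAT = "<3d"  # obstacle centre x, y, z as little-endian doubles (assumed layout)


def encode_joints(joints):
    """Controller -> VR engine: pack the joint rotations into bytes."""
    return struct.pack(JOINT_FORMAT, *joints)


def decode_joints(payload):
    """VR engine side: recover the joint rotations from the received bytes."""
    return list(struct.unpack(JOINT_FORMAT, payload))


def encode_obstacle(position):
    """VR engine -> controller: pack the tracked obstacle position."""
    return struct.pack(OBSTACLE_FORMAT, *position)


def decode_obstacle(payload):
    """Controller side: recover the obstacle position."""
    return struct.unpack(OBSTACLE_FORMAT, payload)


message = encode_joints([0.0, 0.5, 0.0, -1.2, 0.0, 0.8, 0.0])
print(len(message), decode_joints(message))
print(decode_obstacle(encode_obstacle((0.4, -0.1, 0.9))))
```

Fixed-size messages let each side read exactly the expected number of bytes per cycle, which keeps the two loops in step even though they run at different rates.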

6. Concluding Remarks

The paper showed the results obtained by the i-Labs laboratory in the wide field of research on human-robot interaction. Many technologies have been investigated and experimented to horizontally approach the issue of close cooperation among labourers and machines, going from off-line design, to on-line safety oriented control strategies.

The first case study showed a possible approach to the design and optimization of an assembly work-cell. The optimization, oriented at enhancing the labourer ergonomics and safety, allowed the realization of the prototype work-cell for the cobot assisted assembly of furniture pieces. The outcome quality has been quantitatively estimated.

Similar aspects of Human In The Loop operations were also investigated in the second case study, which concerned the realization of a vision-based interaction paradigm between humans and robots. In this case, a depth camera was used to recognize the labourer’s commands, which triggered the execution of simple tasks by the robot. This kind of contactless interaction goes a step further in the direction of safety, which was the core focus of the third case study.

The third case introduced an innovative control algorithm for active obstacle avoidance, applicable to any cobot. The strategy, based on the method of repulsive fields of velocity, was tested on a redundant industrial cobot to evaluate the possibilities offered in terms of both safety and task execution ability. At last, a Virtual Reality approach for the detailed design and exploitation of the obstacle avoidance strategy was described in the fourth case study.

The technologies which have been separately presented in this manuscript represent some of the enabling technologies in the field of Industry 4.0 and 5.0, which place the human operator at the very center of the production process. Such a knowledge base can be exploited for the enhancement of industrial processes, especially in SMEs, which make up most of the local industrial fabric.

Author Contributions

Conceptualization, G.P. and A.P.; methodology, G.P. and A.P.; software, L.C.; validation, D.C. and C.S.; formal analysis, L.C.; investigation, M.-C.P.; resources, M.C.; data curation, C.S.; writing—original draft preparation, L.C.; writing—review and editing, M.C.; visualization, C.S.; supervision, G.P. and A.P.; project administration, M.-C.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partly funded by the project URRA, “Usability of robots and reconfigurability of processes: enabling technologies and use cases”, on the topics of User-Centered Manufacturing and Industry 4.0, which is part of the project EU ERDF, POR MARCHE Region FESR 2014/2020–AXIS 1–Specific Objective 2–ACTION 2.1, “HD3Flab-Human Digital Flexible Factory of the Future Laboratory”, coordinated by the Polytechnic University of Marche.

Data Availability Statement

No publicly archived dataset has been used or generated during the research.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Galin, R.; Meshcheryakov, R. Automation and robotics in the context of Industry 4.0: The shift to collaborative robots. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2019; Volume 537, p. 032073.
2. Vicentini, F. Terminology in safety of collaborative robotics. Robot. Comput. Integr. Manuf. 2020, 63, 101921.
3. Costa, G.d.M.; Petry, M.R.; Moreira, A.P. Augmented reality for human–robot collaboration and cooperation in industrial applications: A systematic literature review. Sensors 2022, 22, 2725.
4. Ajoudani, A.; Zanchettin, A.M.; Ivaldi, S.; Albu-Schäffer, A.; Kosuge, K.; Khatib, O. Progress and prospects of the human–robot collaboration. Auton. Robot. 2018, 42, 957–975.
5. Mukherjee, D.; Gupta, K.; Chang, L.H.; Najjaran, H. A survey of robot learning strategies for human-robot collaboration in industrial settings. Robot. Comput. Integr. Manuf. 2022, 73, 102231.
6. Morato, C.; Kaipa, K.; Zhao, B.; Gupta, S.K. Safe human robot interaction by using exteroceptive sensing based human modeling. In International Design Engineering Technical Conferences and Computers and Information in Engineering Conference; American Society of Mechanical Engineers: New York, NY, USA, 2013; Volume 55850, p. V02AT02A073.
7. Dawood, A.B.; Godaba, H.; Ataka, A.; Althoefer, K. Silicone-based capacitive e-skin for exteroception and proprioception. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 8951–8956.
8. Chiriatti, G.; Palmieri, G.; Scoccia, C.; Palpacelli, M.C.; Callegari, M. Adaptive obstacle avoidance for a class of collaborative robots. Machines 2021, 9, 113.
9. Palmieri, G.; Scoccia, C. Motion planning and control of redundant manipulators for dynamical obstacle avoidance. Machines 2021, 9, 121.
10. Lee, W.B.; Lee, S.D.; Song, J.B. Design of a 6-DOF collaborative robot arm with counterbalance mechanisms. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Marina Bay Sands, Singapore, 29 May–3 June 2017; pp. 3696–3701.
11. Katyara, S.; Ficuciello, F.; Teng, T.; Chen, F.; Siciliano, B.; Caldwell, D.G. Intuitive tasks planning using visuo-tactile perception for human robot cooperation. arXiv 2021, arXiv:2104.00342.
12. Saunderson, S.P.; Nejat, G. Persuasive robots should avoid authority: The effects of formal and real authority on persuasion in human-robot interaction. Sci. Robot. 2021, 6, eabd5186.
13. Kollakidou, A.; Haarslev, F.; Odabasi, C.; Bodenhagen, L.; Krüger, N. HRI-Gestures: Gesture Recognition for Human-Robot Interaction. In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications–Volume 5 VISAPP, online, 5–7 February 2022; pp. 559–566.
14. Sharma, V.K.; Biswas, P. Gaze Controlled Safe HRI for Users with SSMI. In Proceedings of the 20th International Conference on Advanced Robotics (ICAR), Ljubljana, Slovenia, 6–10 December 2021; pp. 913–918.
15. Li, W.; Yi, P.; Zhou, D.; Zhang, Q.; Wei, X.; Liu, R.; Dong, J. A novel gaze-point-driven HRI framework for single-person. In International Conference on Collaborative Computing: Networking, Applications and Worksharing; Springer: Berlin/Heidelberg, Germany, 2021; pp. 661–677.
16. Rusch, T.; Spitzhirn, M.; Sen, S.; Komenda, T. Quantifying the economic and ergonomic potential of simulated HRC systems in the focus of demographic change and skilled labor shortage. In Proceedings of the 2021 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM), Marina Bay Sands, Singapore, 13–16 December 2021; pp. 1377–1381.
17. Papetti, A.; Ciccarelli, M.; Scoccia, C.; Germani, M. A multi-criteria method to design the collaboration between humans and robots. Procedia CIRP 2021, 104, 939–944.
18. Stecke, K.E.; Mokhtarzadeh, M. Balancing collaborative human–robot assembly lines to optimise cycle time and ergonomic risk. Int. J. Prod. Res. 2021, 60, 25–47.
19. Ranavolo, A.; Chini, G.; Draicchio, F.; Silvetti, A.; Varrecchia, T.; Fiori, L.; Tatarelli, A.; Rosen, P.H.; Wischniewski, S.; Albrecht, P.; et al. Human-Robot Collaboration (HRC) Technologies for reducing work-related musculoskeletal diseases in Industry 4.0. In Congress of the International Ergonomics Association; Springer: Berlin/Heidelberg, Germany, 2021; pp. 335–342.
20. Advincula, B. User experience survey of innovative softwares in evaluation of industrial-related ergonomic hazards: A focus on 3D motion capture assessment. In Proceedings of the SPE Annual Technical Conference and Exhibition, Dubai, UAE, 23 September 2021.
21. Dafflon, B.; Moalla, N.; Ouzrout, Y. The challenges, approaches, and used techniques of CPS for manufacturing in Industry 4.0: A literature review. Int. J. Adv. Manuf. Technol. 2021, 113, 2395–2412.
22. Maurice, P.; Schlehuber, P.; Padois, V.; Measson, Y.; Bidaud, P. Automatic selection of ergonomic indicators for the design of collaborative robots: A virtual-human in the loop approach. In Proceedings of the 2014 IEEE-RAS International Conference on Humanoid Robots, Madrid, Spain, 18–20 November 2014; pp. 801–808.
23. Garcia, M.A.R.; Rojas, R.; Gualtieri, L.; Rauch, E.; Matt, D. A human-in-the-loop cyber-physical system for collaborative assembly in smart manufacturing. Procedia CIRP 2019, 81, 600–605.
24. Brunzini, A.; Papetti, A.; Messi, D.; Germani, M. A comprehensive method to design and assess mixed reality simulations. Virtual Real. 2022, 1–19.
25. Ciccarelli, M.; Papetti, A.; Cappelletti, F.; Brunzini, A.; Germani, M. Combining World Class Manufacturing system and Industry 4.0 technologies to design ergonomic manufacturing equipment. Int. J. Interact. Des. Manuf. 2022, 16, 63–279.
26. Liu, H.; Wang, L. Latest developments of gesture recognition for human–robot collaboration. In Advanced Human-Robot Collaboration in Manufacturing; Springer: Berlin/Heidelberg, Germany, 2021; pp. 43–68.
27. Askarpour, M.; Lestingi, L.; Longoni, S.; Iannacci, N.; Rossi, M.; Vicentini, F. Formally-based model-driven development of collaborative robotic applications. J. Intell. Robot. Syst. 2021, 102, 1–26.
28. Scoccia, C.; Ciccarelli, M.; Palmieri, G.; Callegari, M. Design of a Human-Robot Collaborative System: Methodology and Case Study. International Design Engineering Technical Conferences and Computers and Information in Engineering Conference. Am. Soc. Mech. Eng. 2021, 85437, V007T07A045.
29. Dinges, L.; Al-Hamadi, A.; Hempel, T.; Al Aghbari, Z. Using facial action recognition to evaluate user perception in aggravated HRC scenarios. In Proceedings of the 12th International Symposium on Image and Signal Processing and Analysis (ISPA), Zagreb, Croatia, 13–15 September 2021; pp. 195–199.
30. Yu, J.; Li, M.; Zhang, X.; Zhang, T.; Zhou, X. A multi-sensor gesture interaction system for human-robot cooperation. In Proceedings of the 2021 IEEE International Conference on Networking, Sensing and Control (ICNSC), Nanjing, China, 30 October–2 November 2021; Volume 1, pp. 1–6.
31. Pandya, J.G.; Maniar, N.P. Computer Vision-Guided Human–Robot Collaboration for Industry 4.0: A Review. Recent Adv. Mech. Infrastruct. 2022, 147–155.
32. Scoccia, C.; Menchi, G.; Ciccarelli, M.; Forlini, M.; Papetti, A. Adaptive real-time gesture recognition in a dynamic scenario for human-robot collaborative applications. In Proceedings of the 4th IFToMM ITALY Conference, Napoli, Italy, 7–9 September 2022; pp. 637–644.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).