Design and Control of a Lower Limb Rehabilitation Robot Based on Human Motion Intention Recognition with Multi-Source Sensor Information

Abstract

1. Introduction

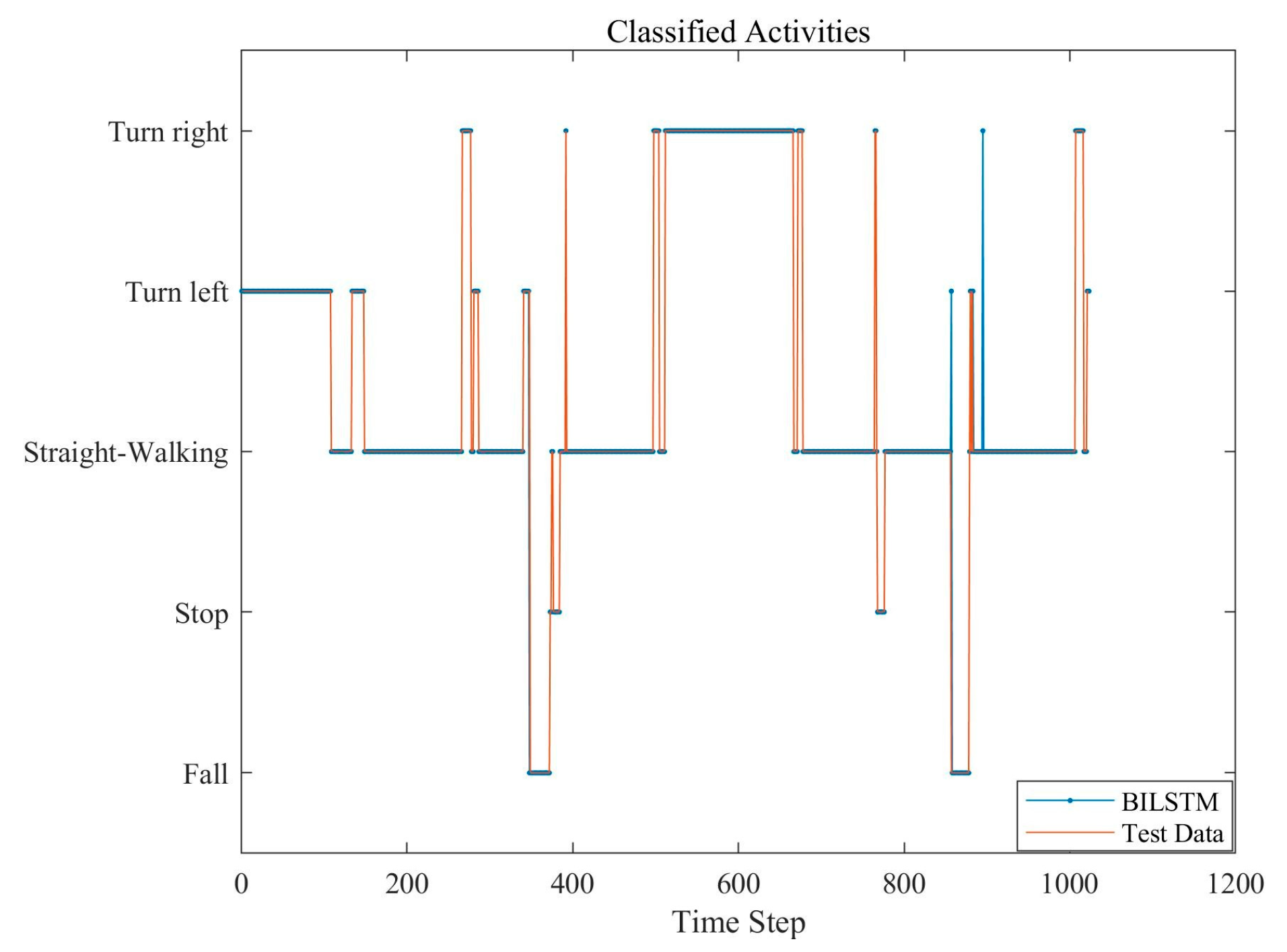

2. Robot System Design

2.1. Hardware Control Platform

2.2. Data Acquisition

3. Motion Intent Recognition Model

3.1. Feature Extraction

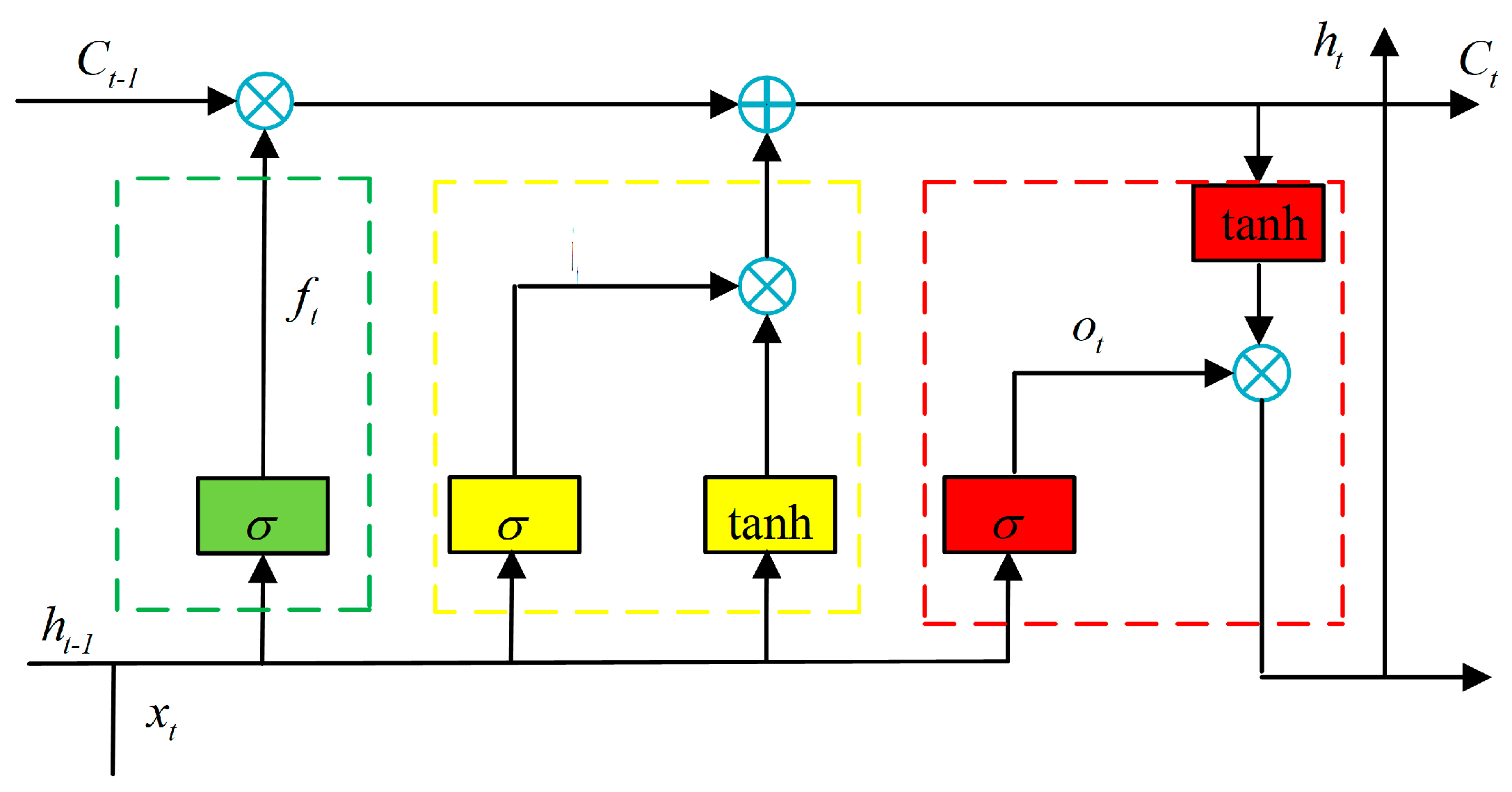

3.2. LSTM Intent Classification Model

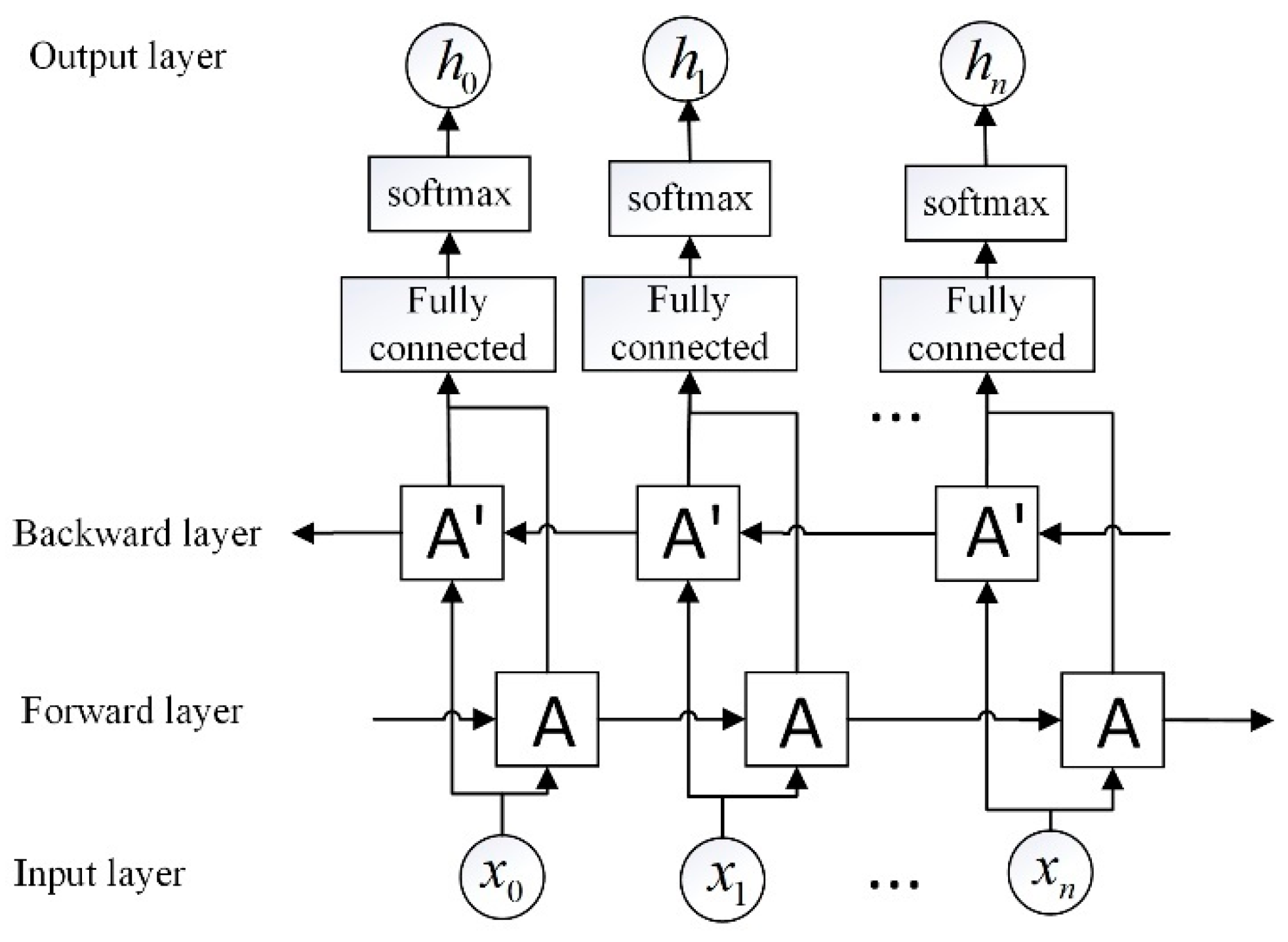

3.3. BILSTM Intent Classification Model

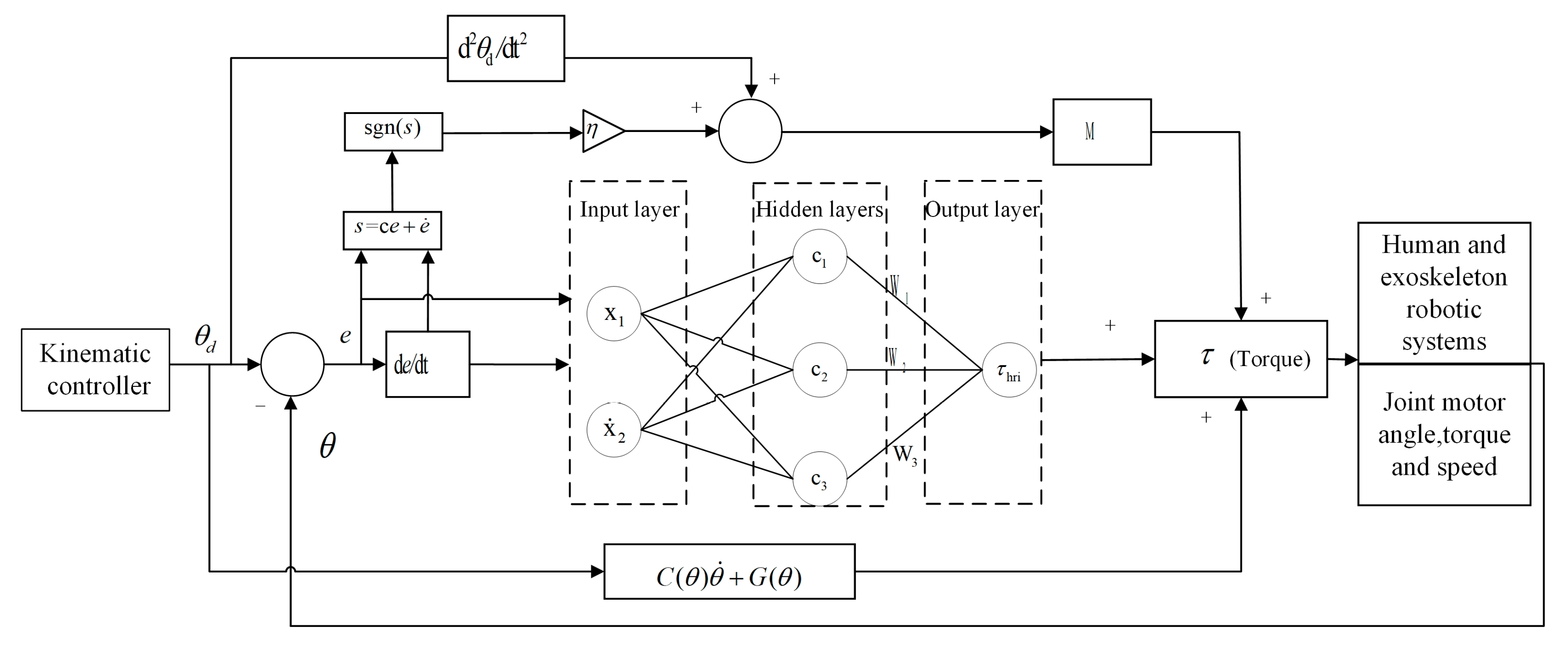

4. Robot Control System

4.1. Control Architecture

4.2. Dynamical Model

4.3. Controller Design

4.3.1. PID Control

4.3.2. RBF Adaptive Sliding Mode Control

5. Experiments and Results

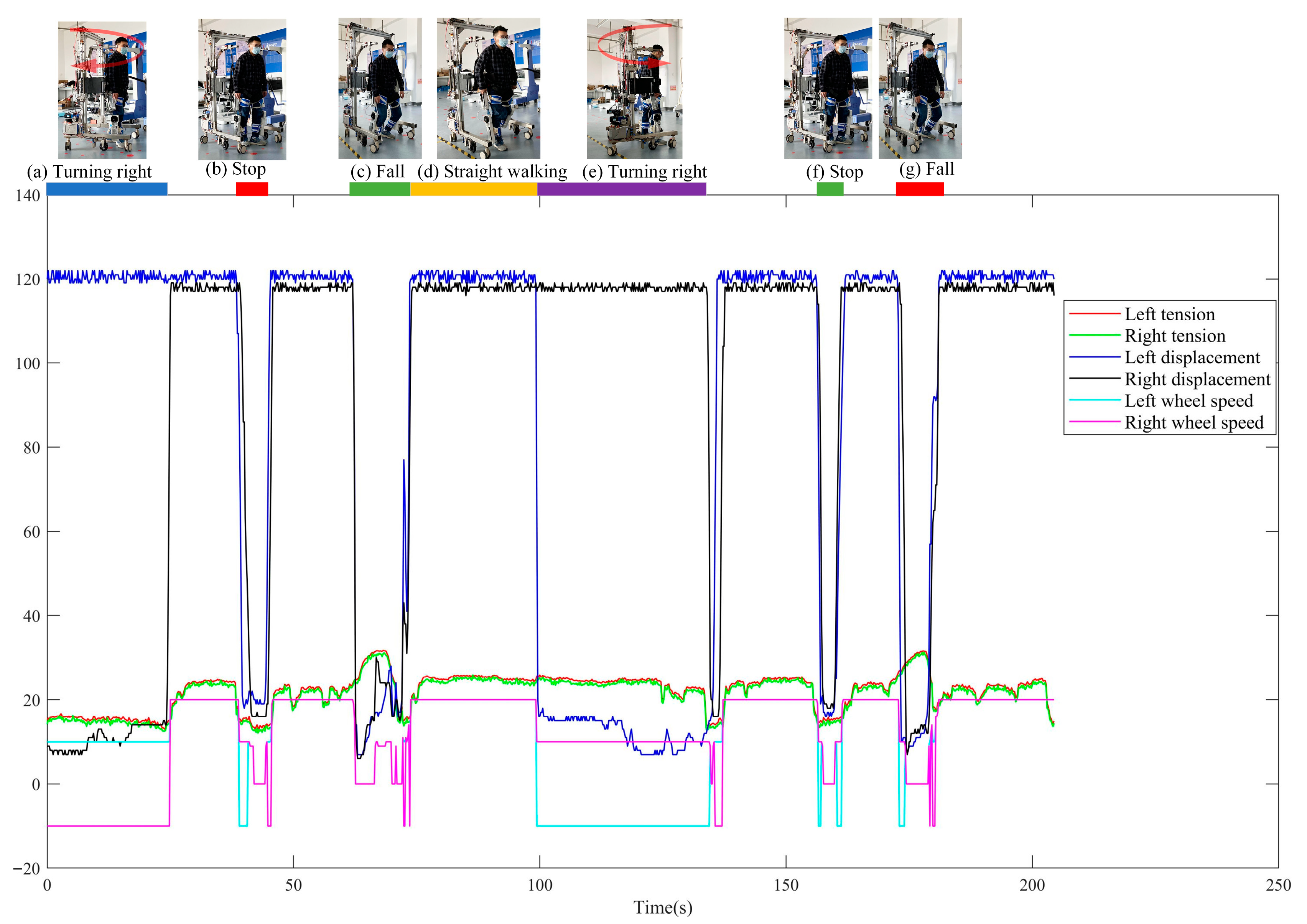

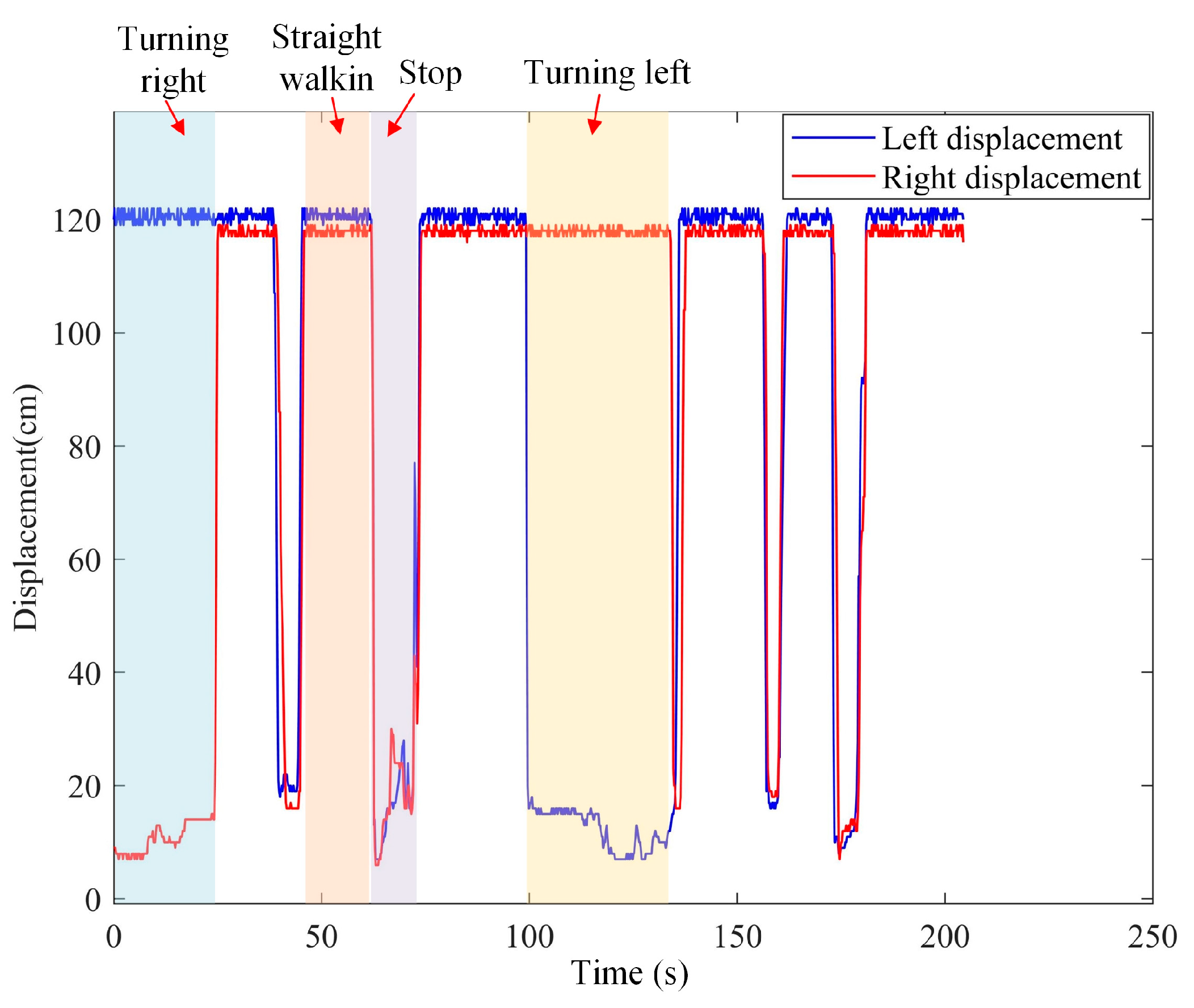

5.1. Motion Intention Recognition Experiment

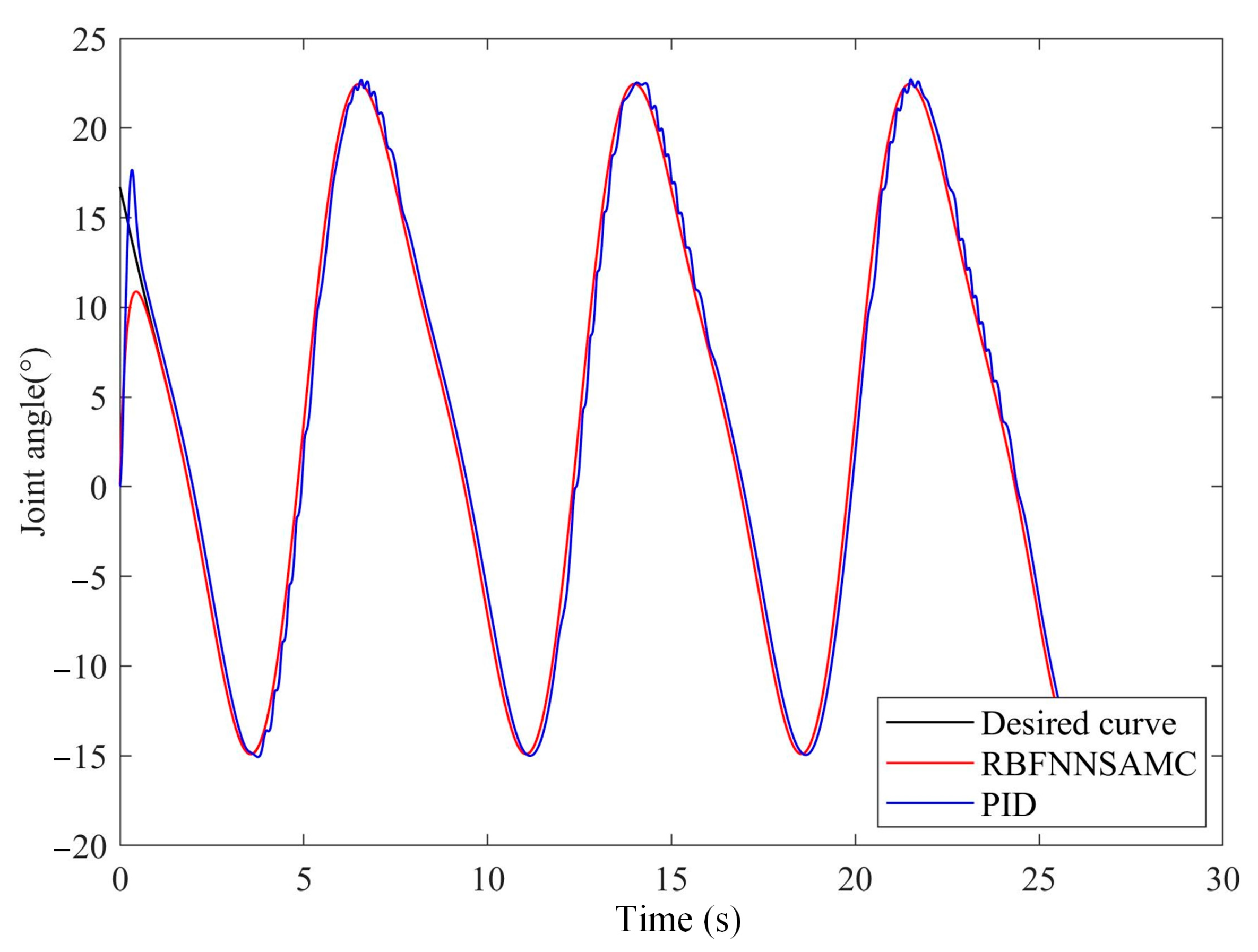

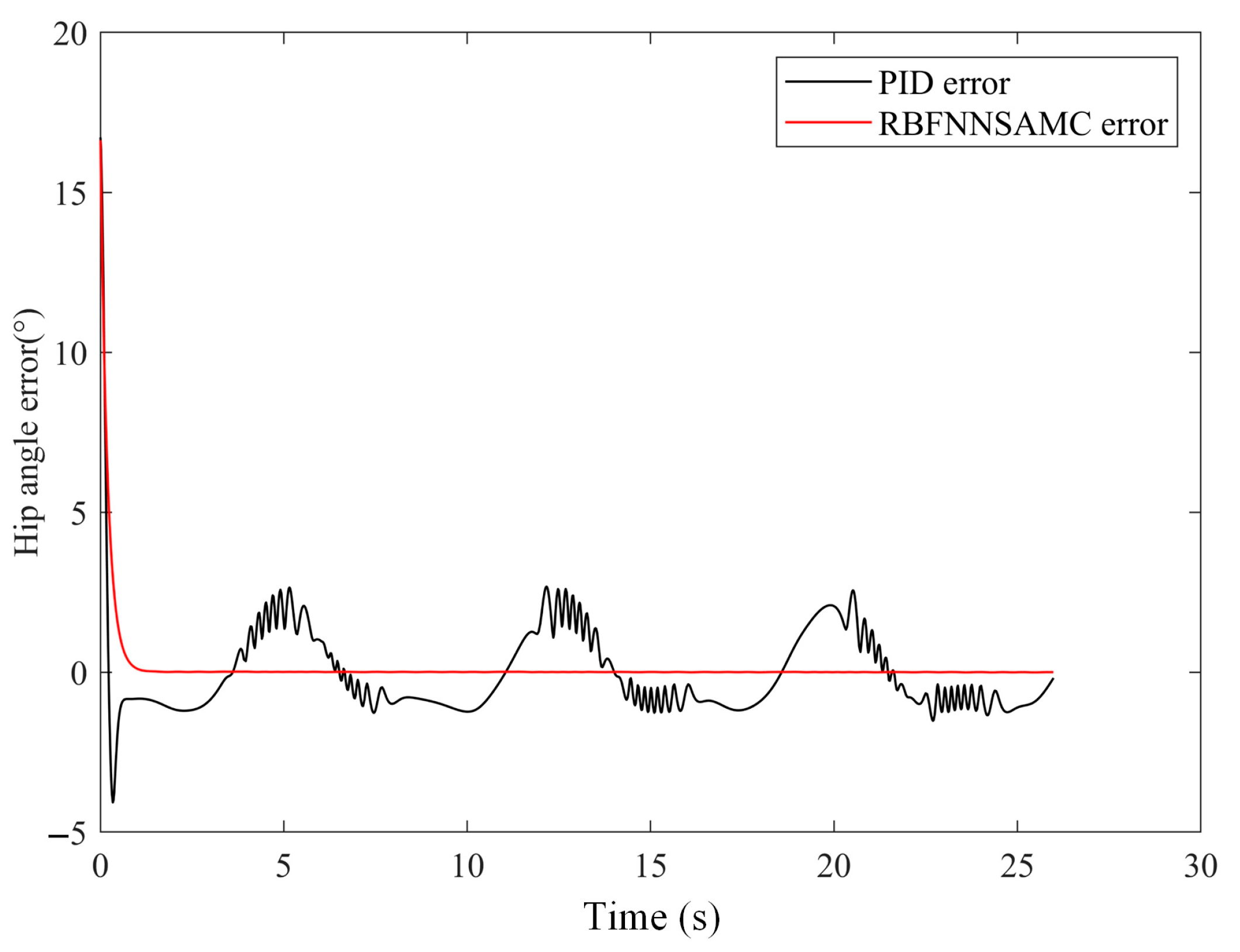

5.2. Tracking Control Experiments

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| | Hip Joint | Knee Joint |
|---|---|---|
| Rated torque | 65 Nm | 45 Nm |
| Rotational speed | 20 rpm | 25 rpm |
| Range of motion | PE 1: −10–25° | PE: 0–65° |

| | GRU | LSTM | BILSTM |
|---|---|---|---|
| Accuracy | 95.90% | 96.77% | 99.61% |
| Elapsed time | 0.053 s | 0.059 s | 0.066 s |

| | GRU | LSTM | BILSTM |
|---|---|---|---|
| Turn left | 99.30% | 100.00% | 99.30% |
| Straight walking | 99.36% | 99.52% | 99.68% |
| Turn right | 83.33% | 86.46% | 100.00% |
| Fall | 93.48% | 93.48% | 97.83% |
| Stop | 84.21% | 94.74% | 100.00% |

| | Maximum Error (Hip) | Maximum Error (Knee) | Average Error (Hip) | Average Error (Knee) | Standard Deviation (Hip) | Standard Deviation (Knee) |
|---|---|---|---|---|---|---|
| PID | 16.718° | 4.556° | 1.405° | 1.822° | 2.235° | 1.497° |
| RBFNNSAMC | 16.628° | 2.996° | 0.197° | 0.037° | 1.486° | 0.269° |
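To make the comparison in the tracking-error table above easier to read, the following minimal sketch computes how much lower each error figure is for the RBF-based controller than for PID, as a percentage of the PID value. The numbers are copied from the table. The function name `reduction_pct` and the dictionary layout are illustrative assumptions, not part of the original work.

```python
# Tracking errors in degrees, copied from the table above.
# Keys and structure are illustrative (hypothetical), not from the paper.
errors = {
    "PID": {
        "max": {"hip": 16.718, "knee": 4.556},
        "avg": {"hip": 1.405, "knee": 1.822},
        "std": {"hip": 2.235, "knee": 1.497},
    },
    "RBFNNSAMC": {
        "max": {"hip": 16.628, "knee": 2.996},
        "avg": {"hip": 0.197, "knee": 0.037},
        "std": {"hip": 1.486, "knee": 0.269},
    },
}


def reduction_pct(baseline: float, improved: float) -> float:
    """Return how much lower `improved` is than `baseline`, in percent."""
    return 100.0 * (baseline - improved) / baseline


# Relative reduction for every (metric, joint) pair.
reductions = {
    (metric, joint): reduction_pct(
        errors["PID"][metric][joint], errors["RBFNNSAMC"][metric][joint]
    )
    for metric in ("max", "avg", "std")
    for joint in ("hip", "knee")
}

for (metric, joint), value in reductions.items():
    print(f"{metric:>3} error, {joint:<4}: {value:6.2f}% lower than PID")
```

With the tabulated values, the average tracking error comes out roughly 86% lower at the hip and roughly 98% lower at the knee, while the maximum hip error is nearly unchanged (under 1% lower).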

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, P.; Gao, X.; Miao, M.; Zhao, P. Design and Control of a Lower Limb Rehabilitation Robot Based on Human Motion Intention Recognition with Multi-Source Sensor Information. Machines 2022, 10, 1125. https://doi.org/10.3390/machines10121125