6-Dimensional Virtual Human-Machine Interaction Force Estimation Algorithm in Astronaut Virtual Training

Abstract

1. Introduction

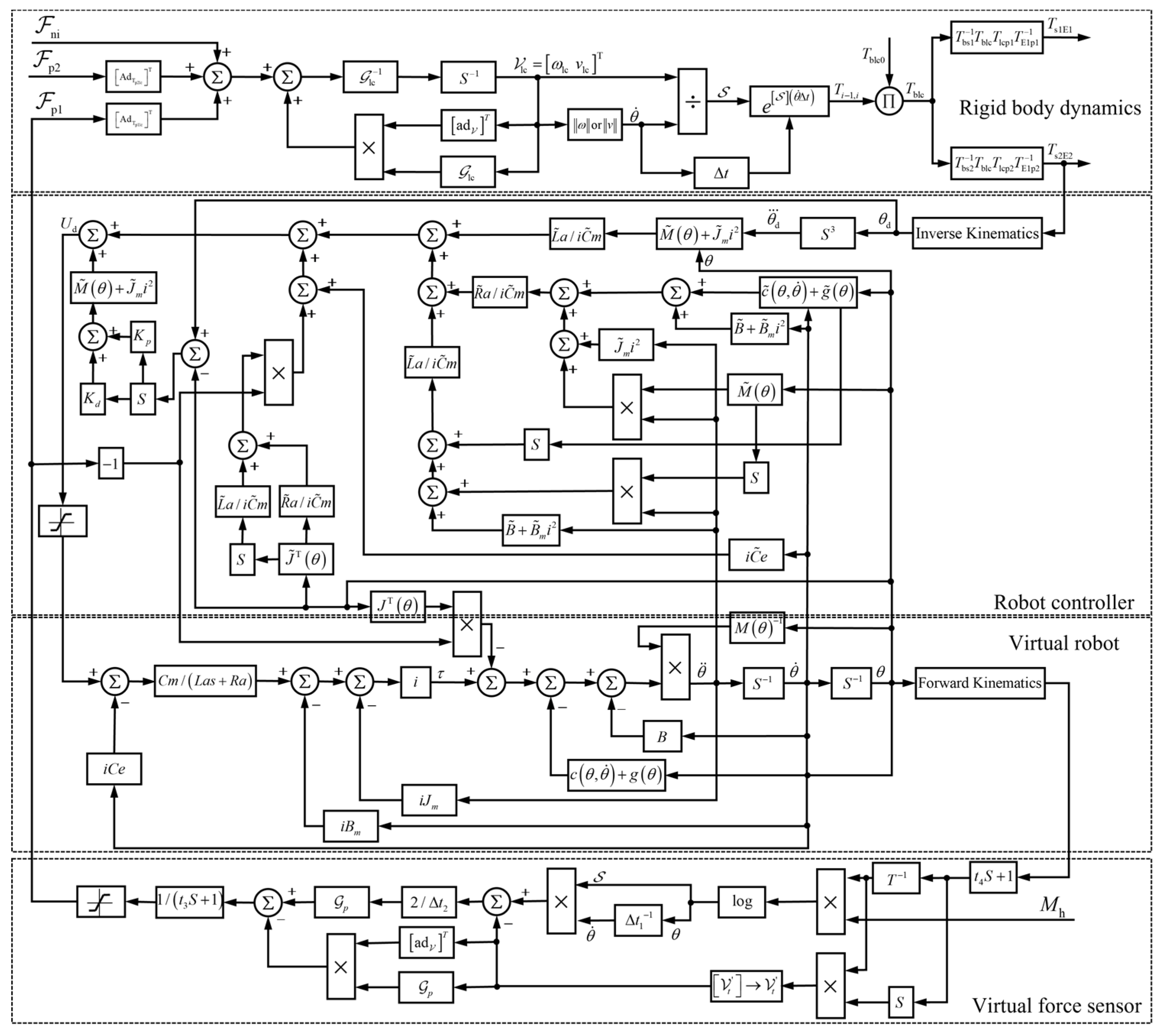

2. 6-Dimensional VHMIF Estimation Algorithm

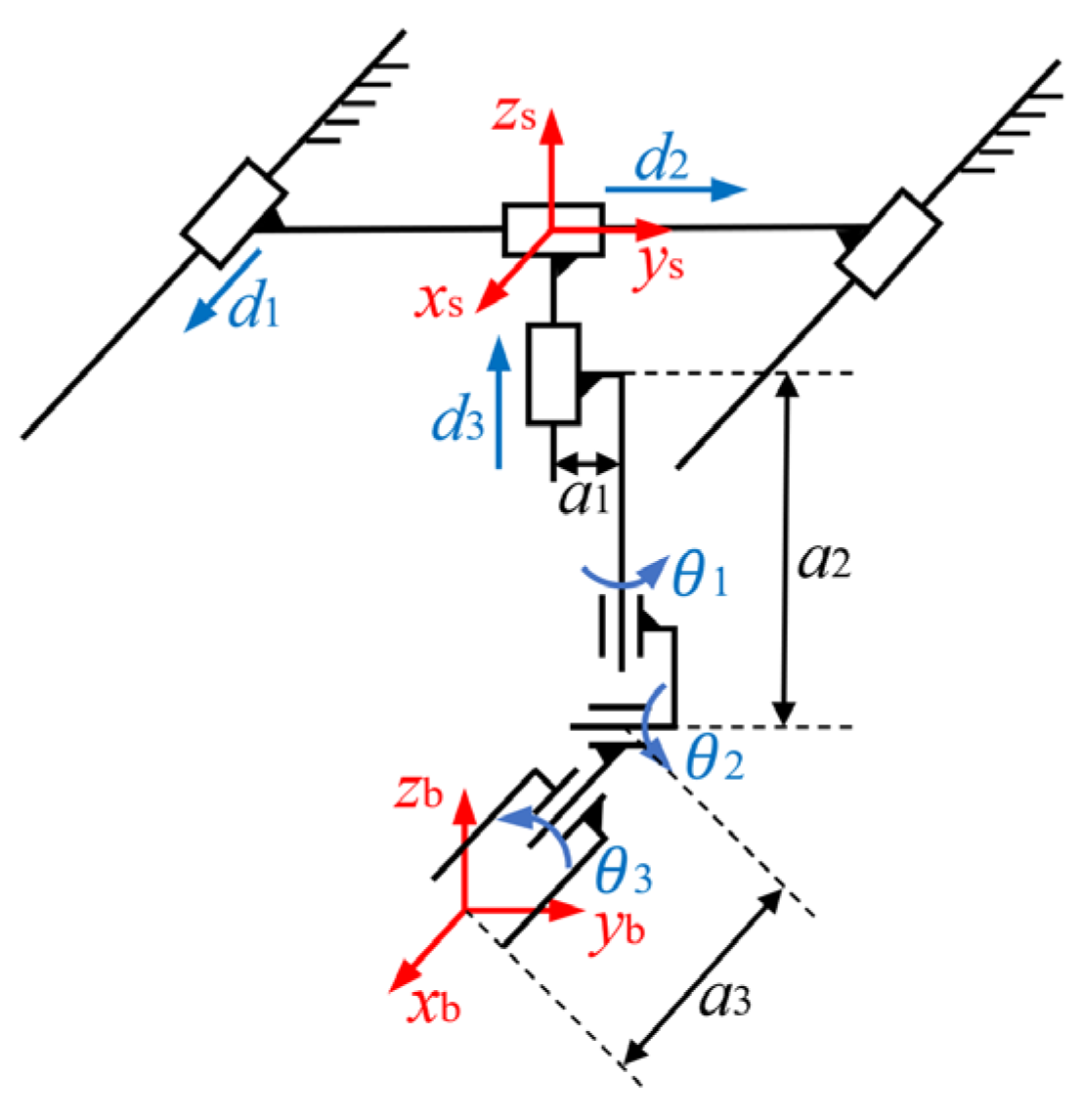

2.1. Virtual Robot Kinematics and Dynamics Model

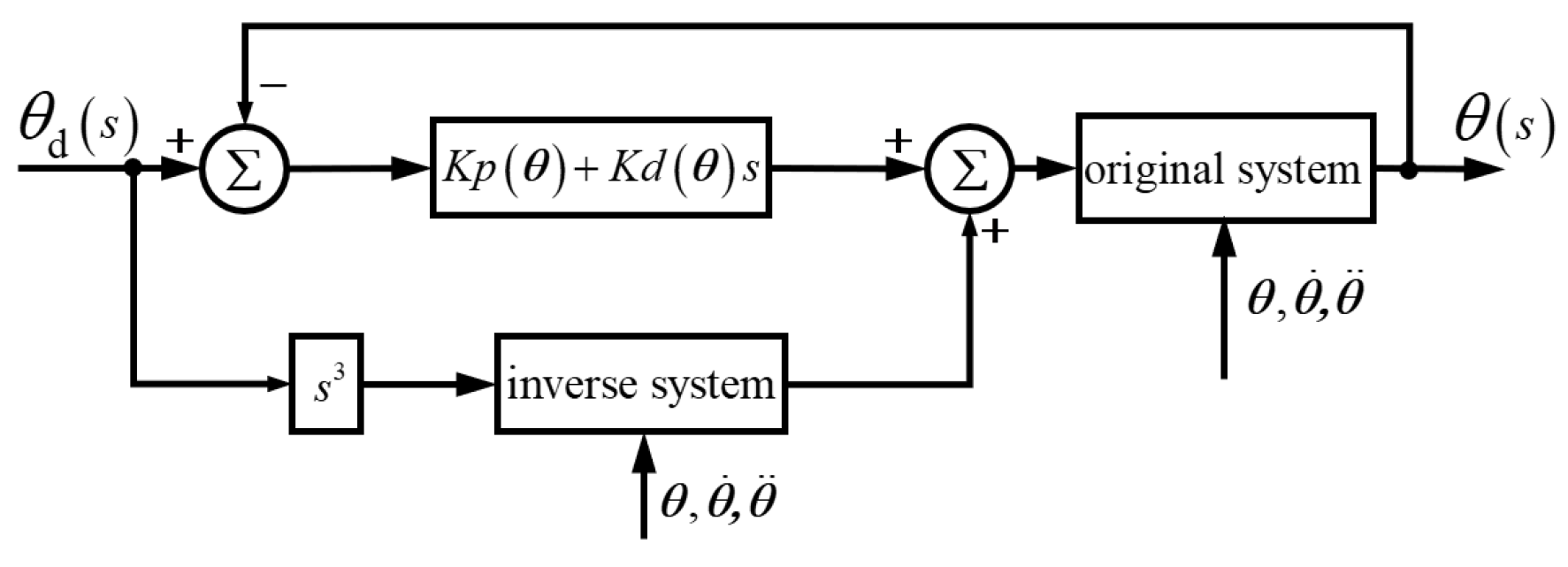

2.2. Virtual Robot Controller Design

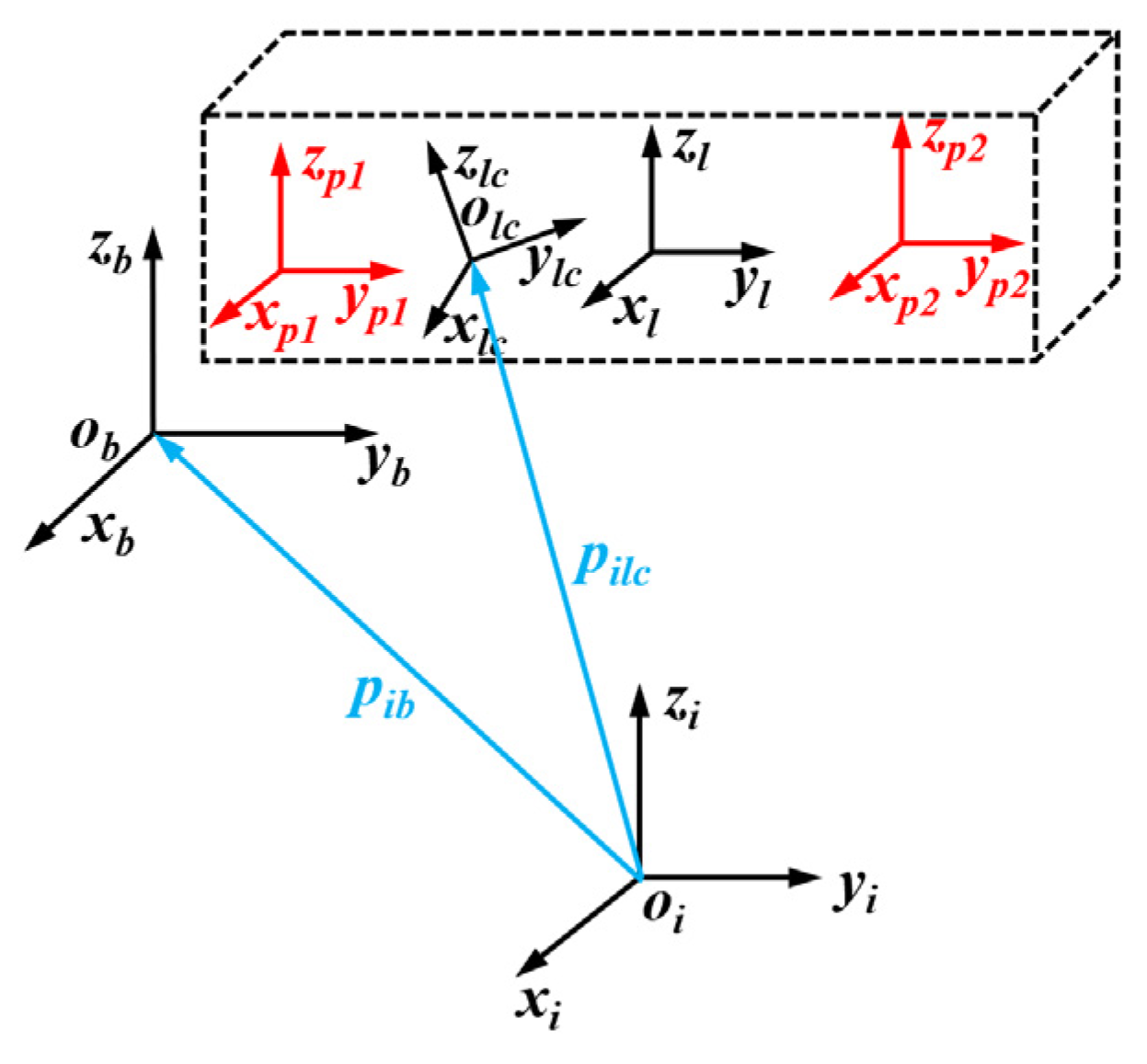

2.3. Rigid Body Dynamics Analysis of Loads in the Space Station

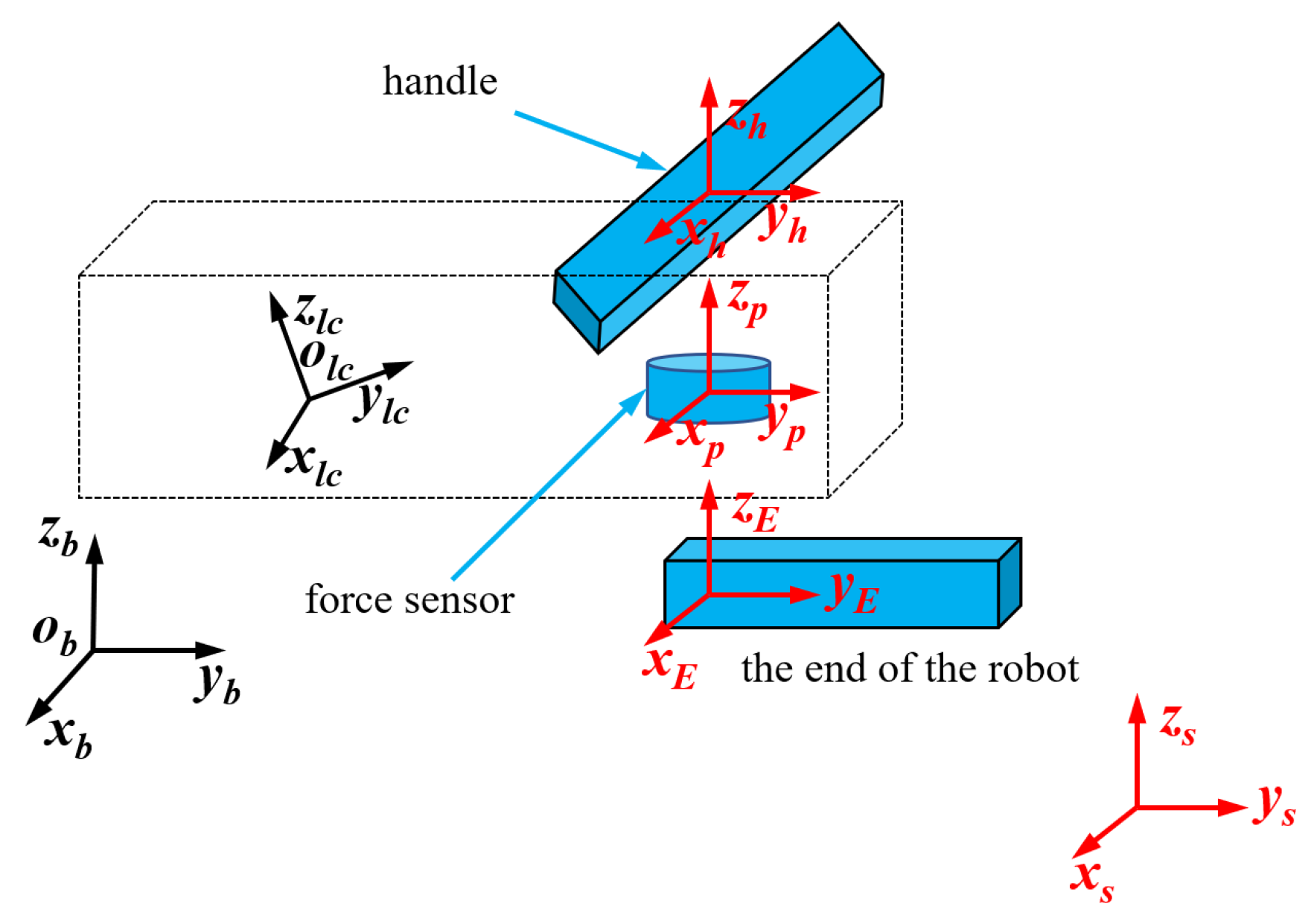

2.4. Design of Virtual Force Sensor

3. Results

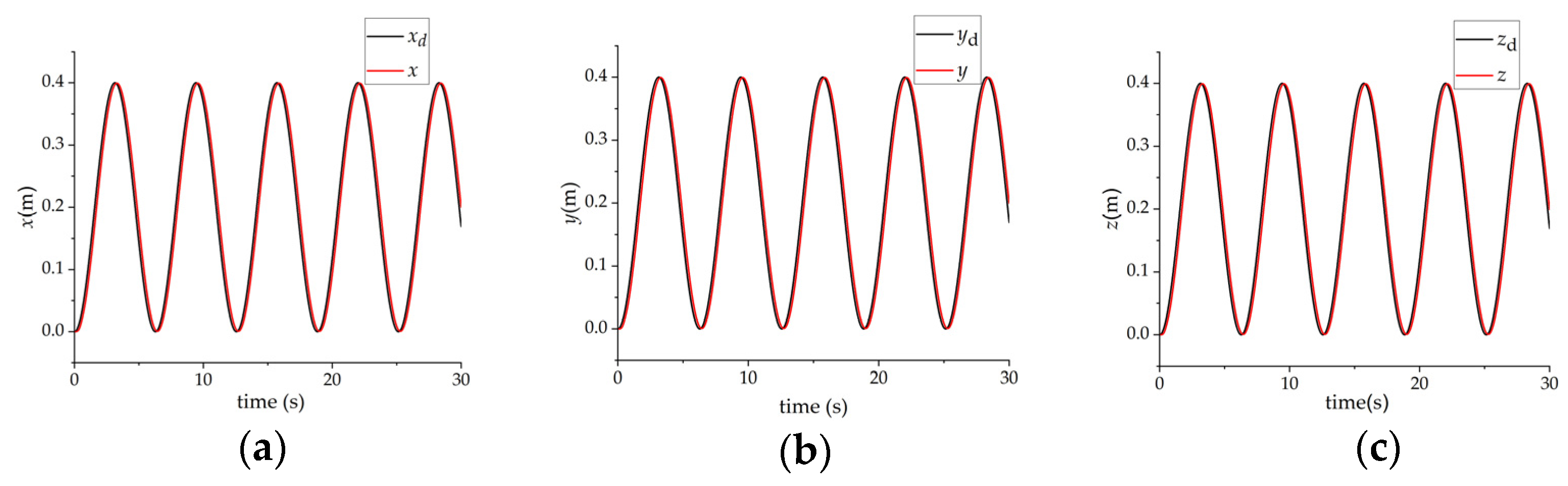

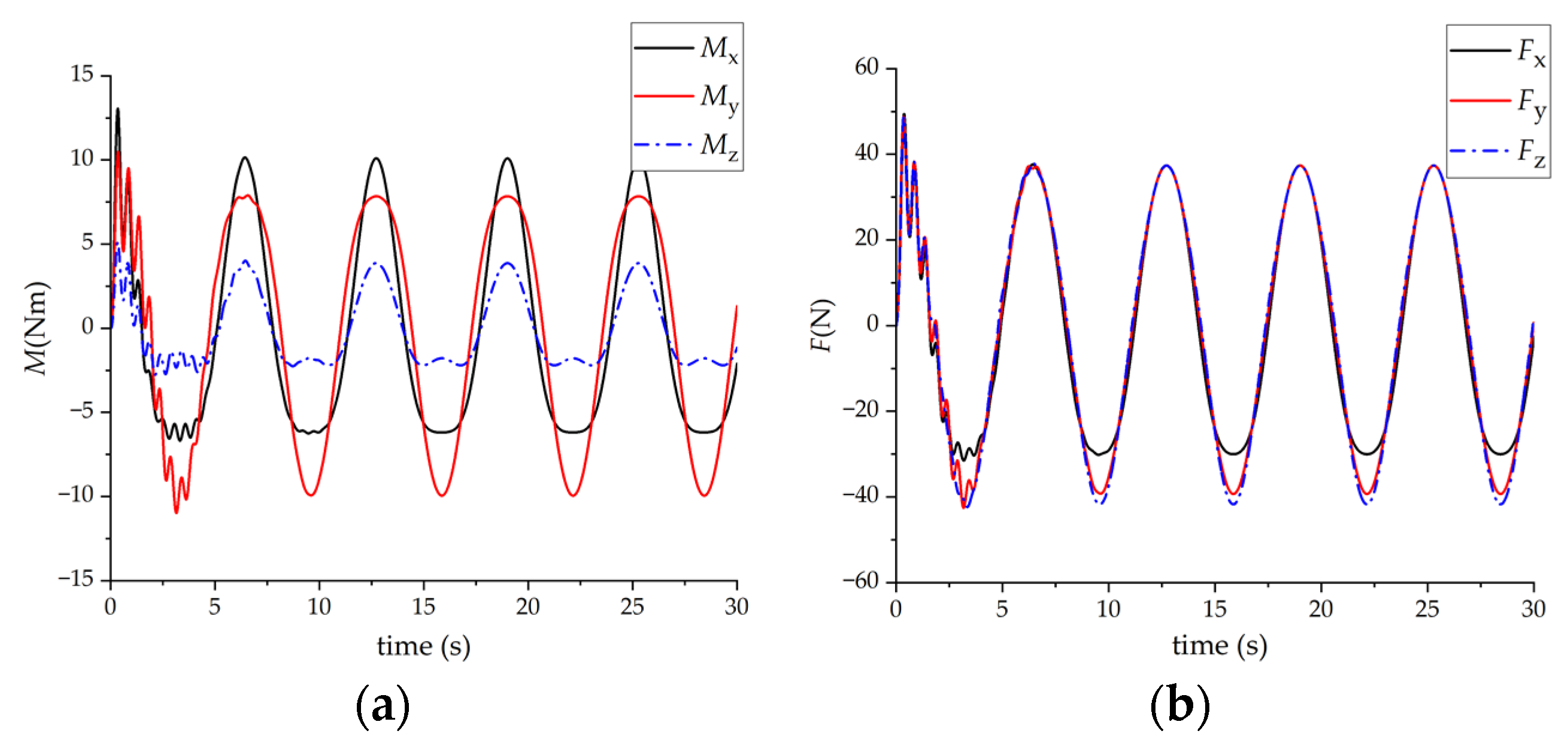

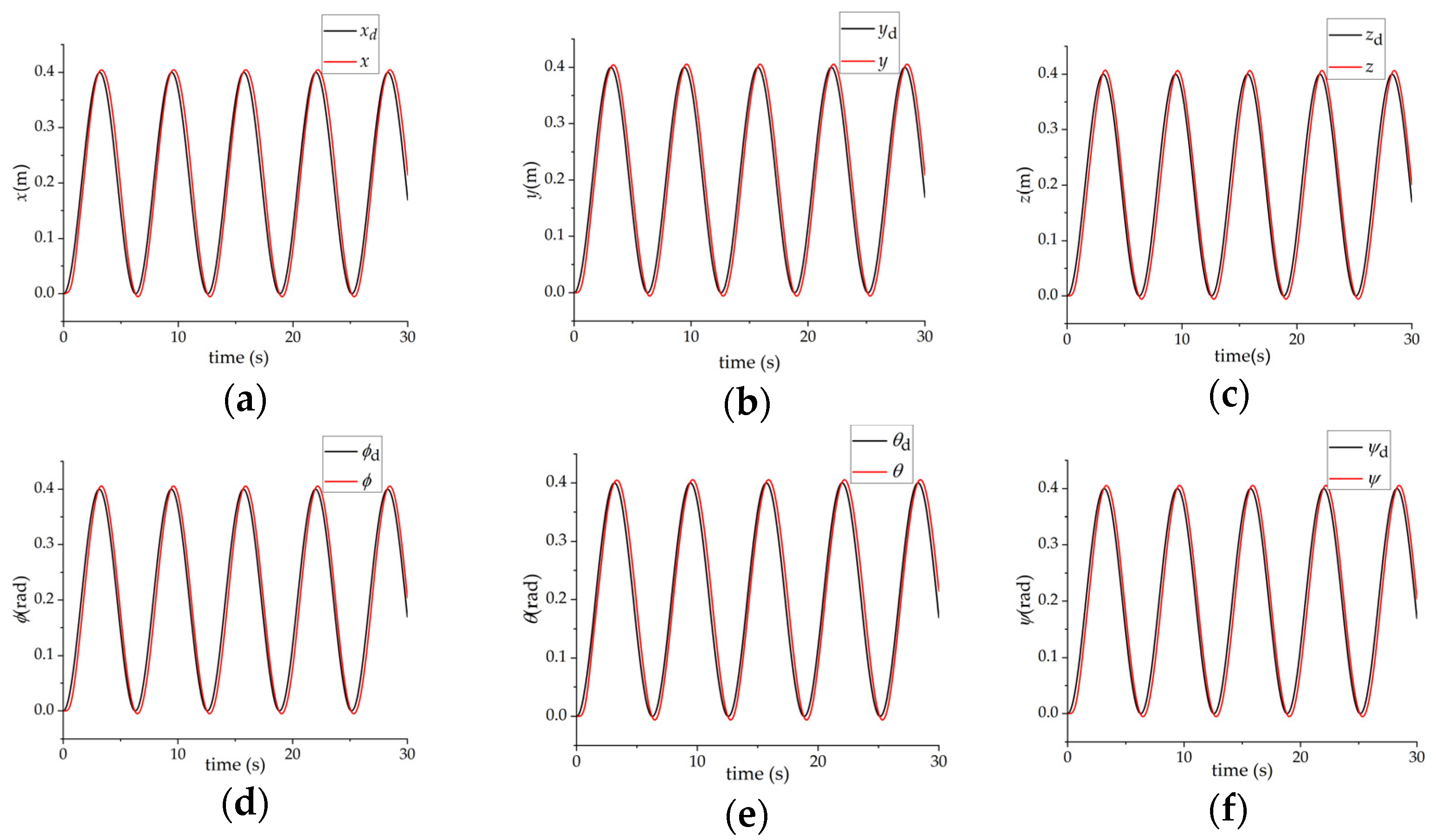

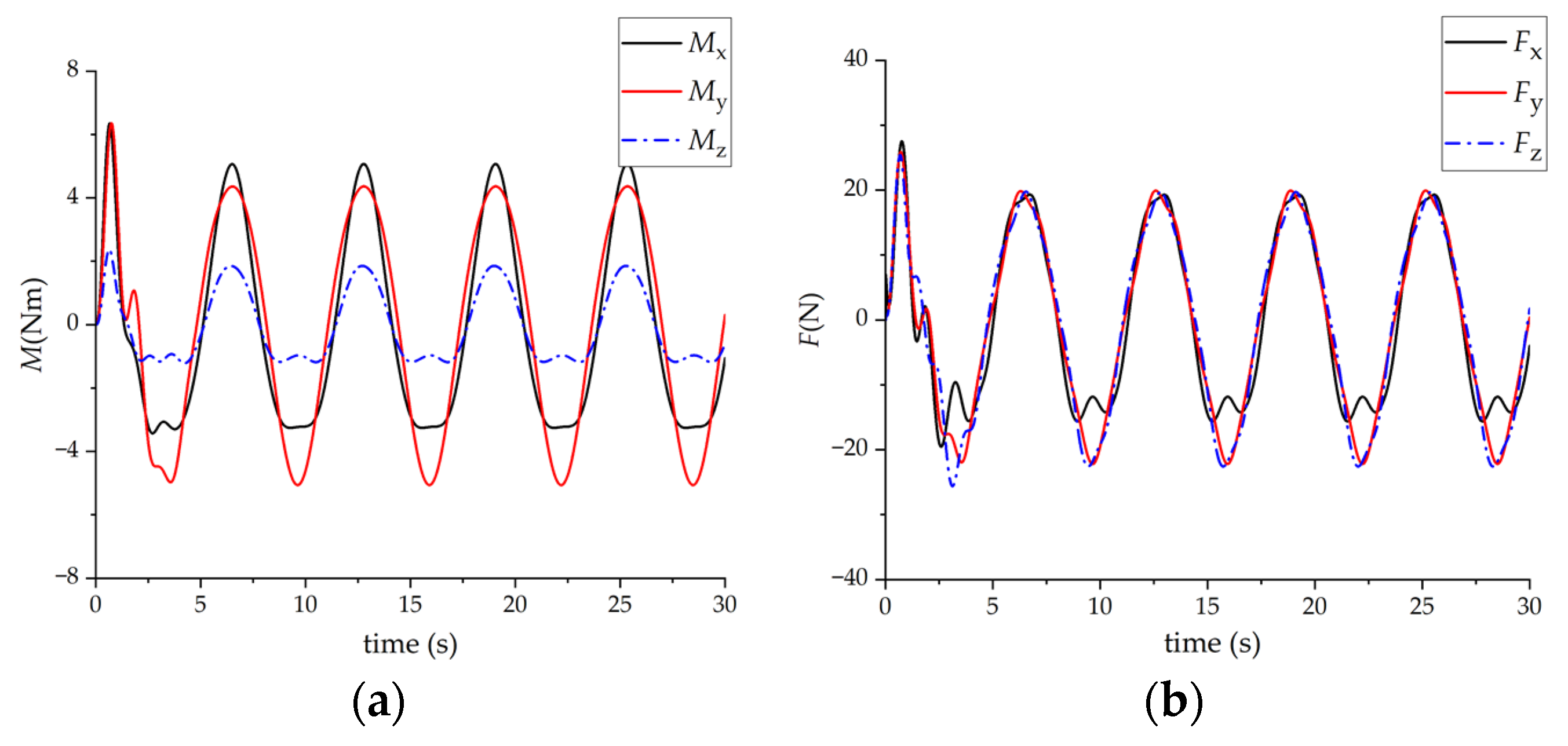

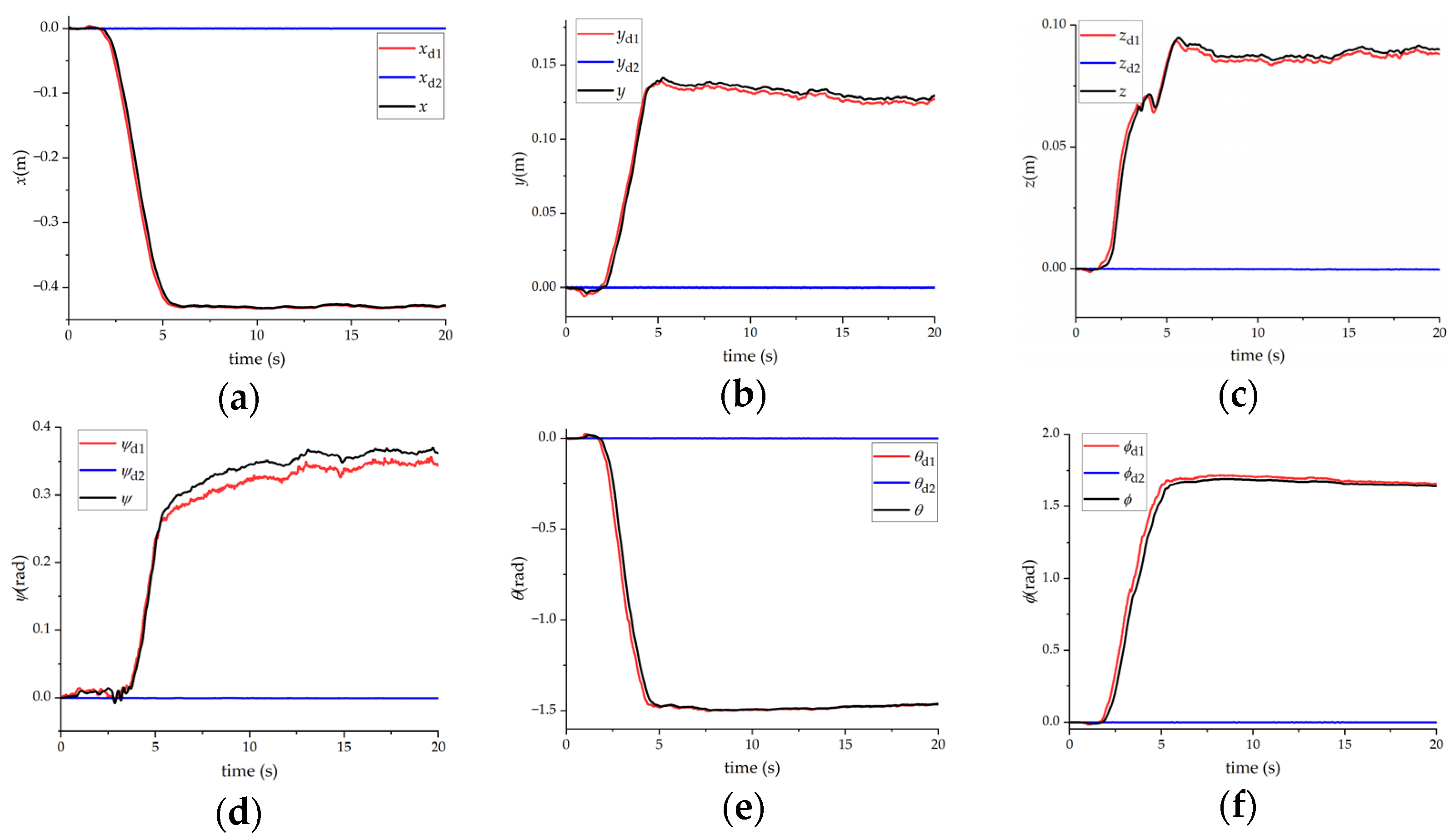

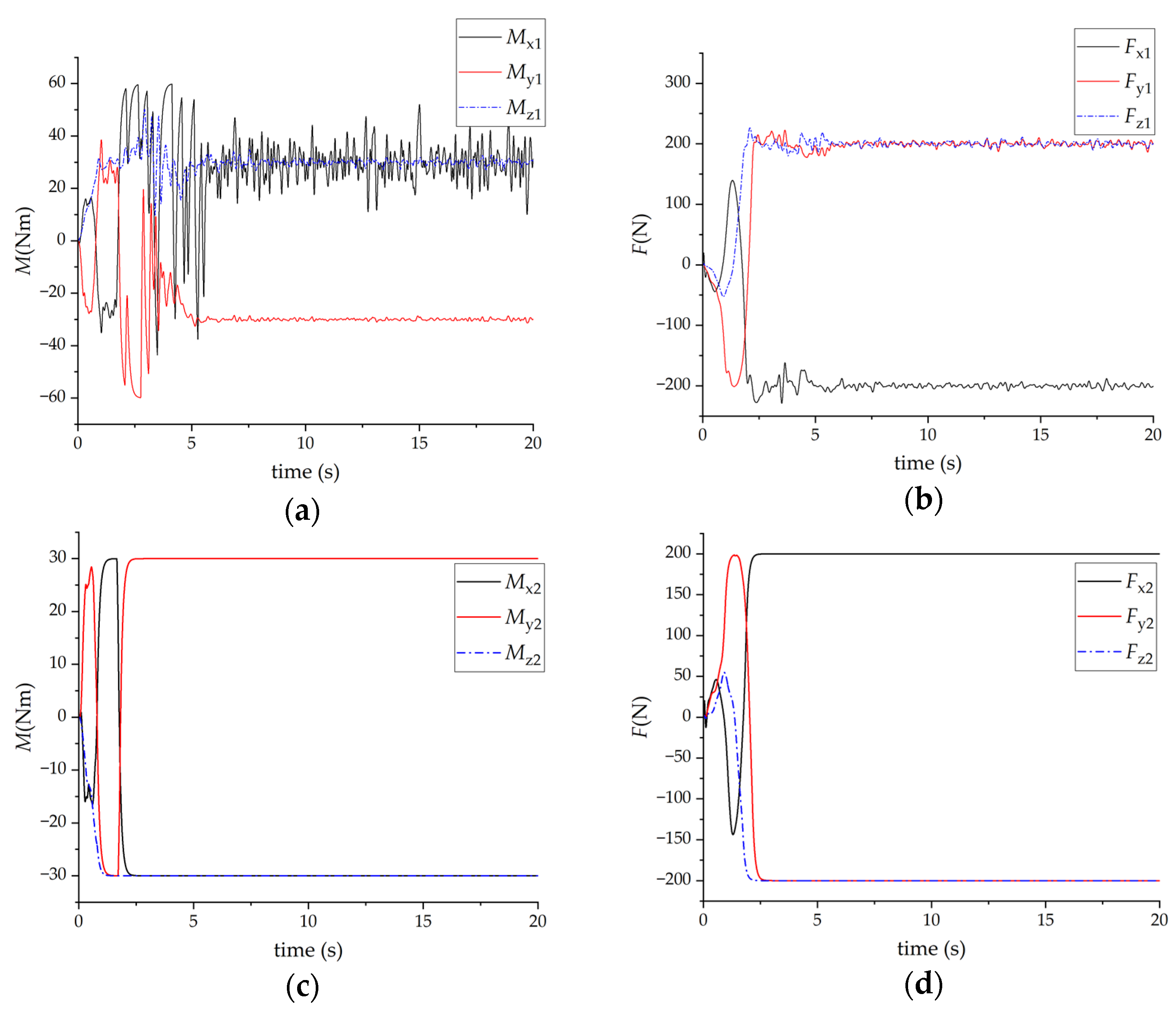

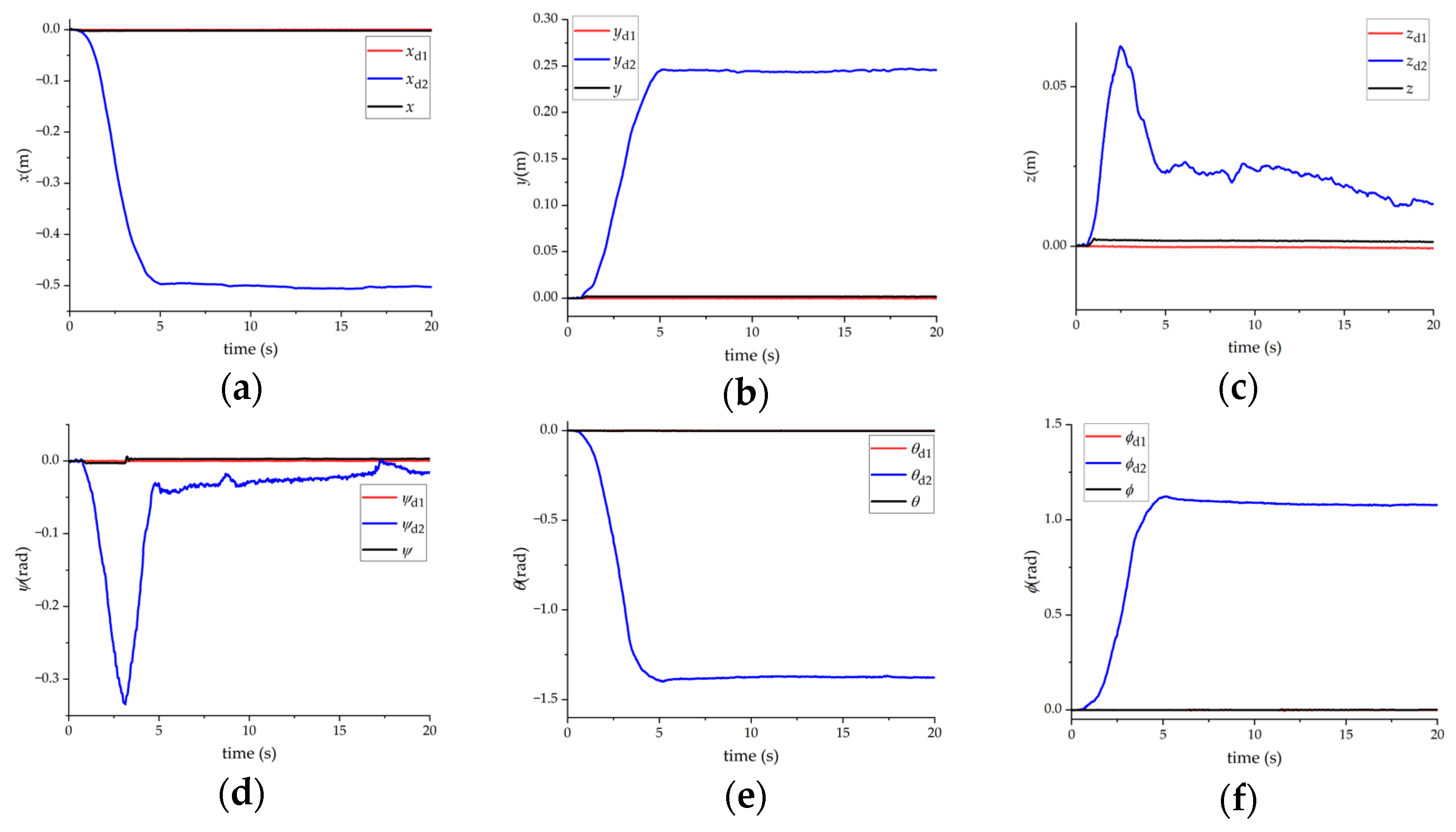

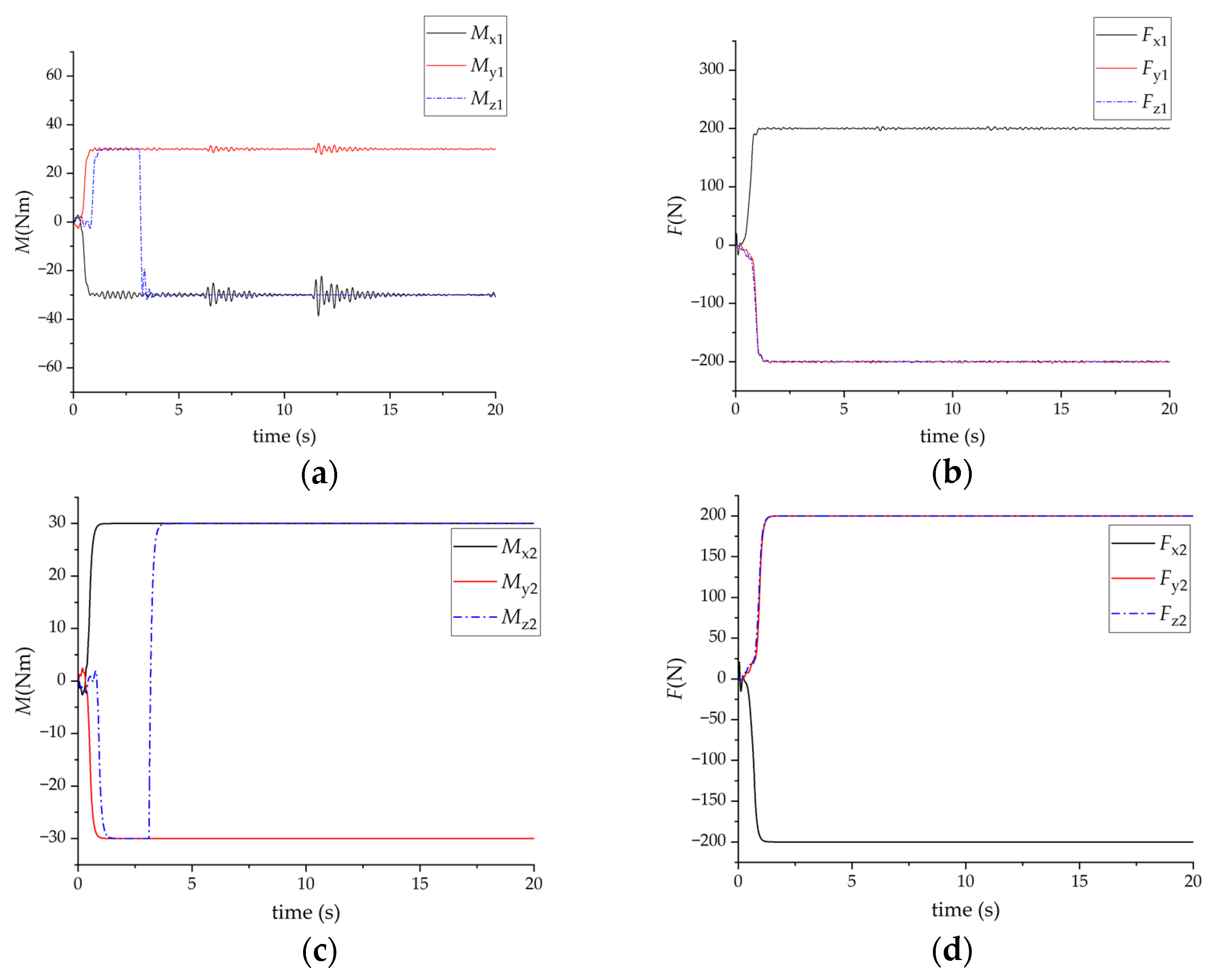

3.1. Simulation Results When a Single Person Carries Objects

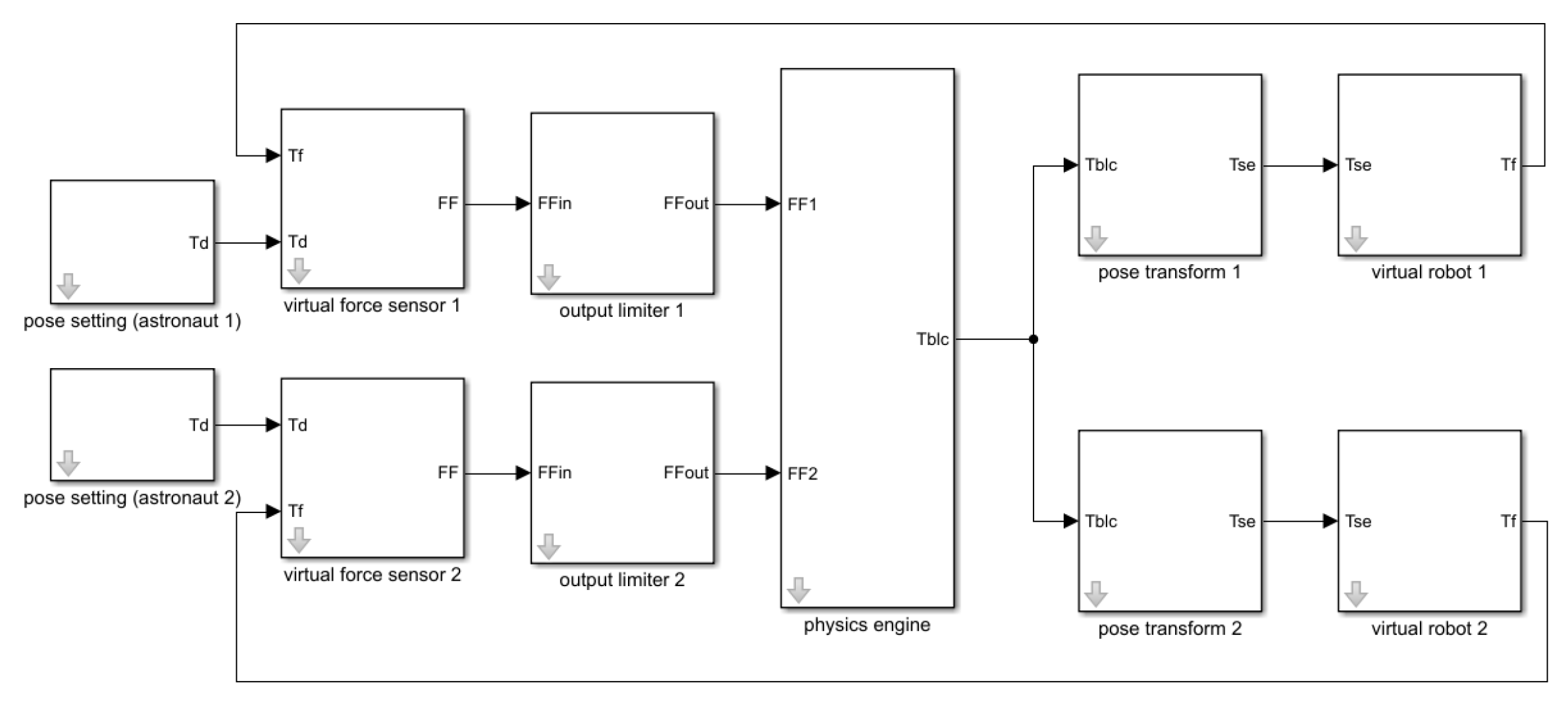

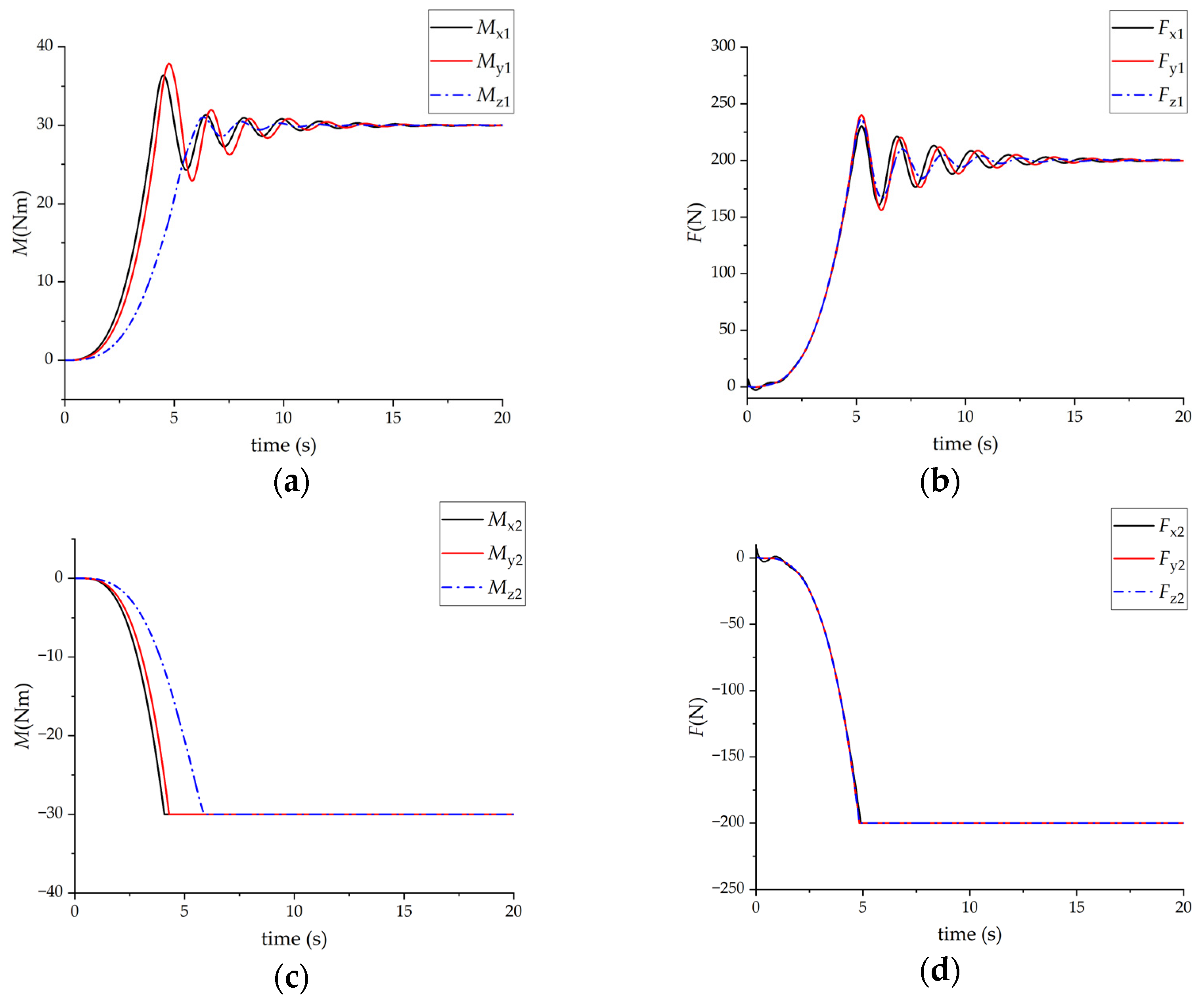

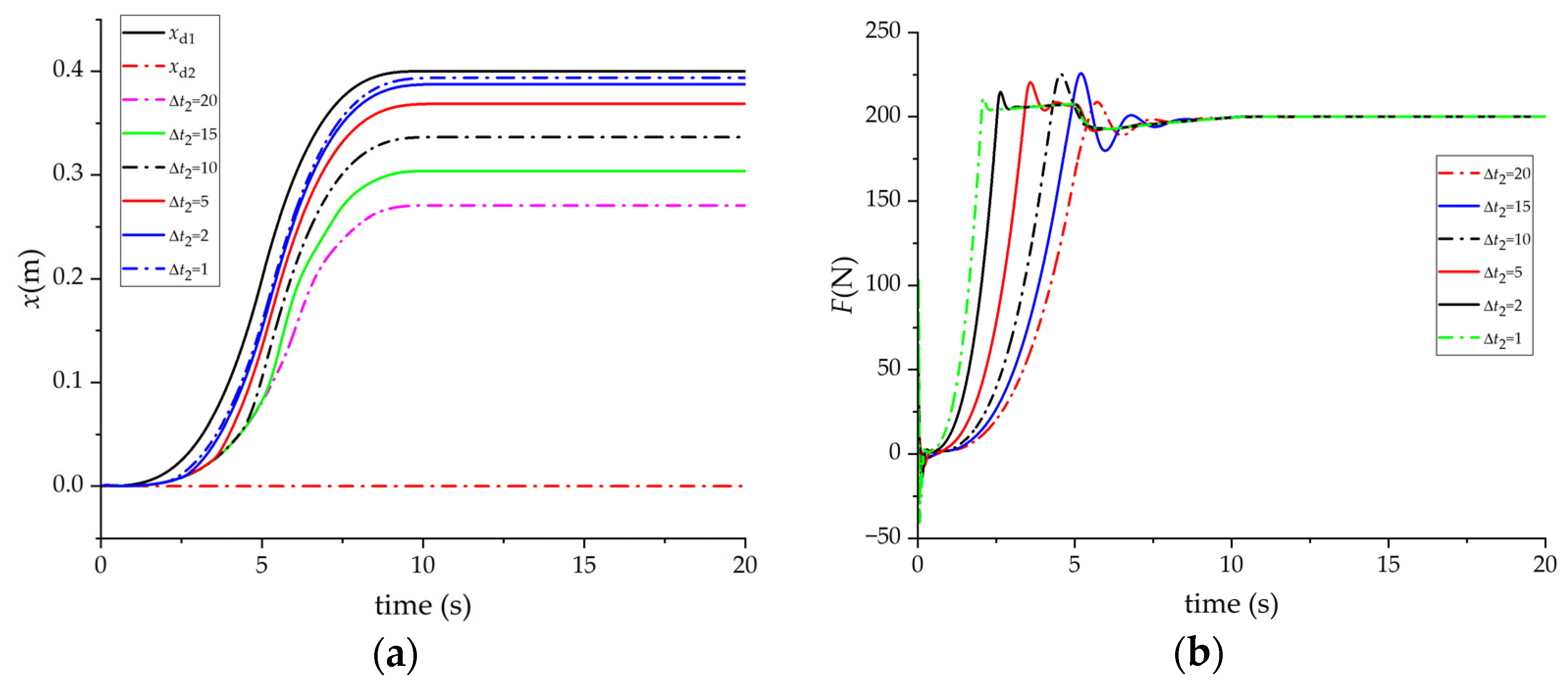

3.2. Simulation Results When Two People Cooperate in Carrying Objects

- (1) At the initial moment , the initial Euler angles of the virtual object are: ;

- (2) The goal of Astronaut 1 is to transport the virtual object to the pose corresponding to and . The goal of Astronaut 2 is to keep the pose of the virtual object constant.

- (3) The output limits (the minimum and maximum output force screw) of Astronaut 1 are (±60 N·m, ±60 N·m, ±60 N·m, ±400 N, ±400 N, ±400 N); those of Astronaut 2 are (±30 N·m, ±30 N·m, ±30 N·m, ±200 N, ±200 N, ±200 N).
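The output limits in condition (3) can be illustrated with a short sketch. It assumes that each limit is enforced by clipping every component of the six-dimensional force screw independently, with the torque components first and the force components last, as in the tuples above. The source does not state how the limits are applied, so the function name `saturate_wrench` and the per-axis clipping are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

# Output limits from condition (3), ordered (Mx, My, Mz, Fx, Fy, Fz).
# Torques are in N·m and forces in N.
ASTRONAUT_1_LIMIT = np.array([60.0, 60.0, 60.0, 400.0, 400.0, 400.0])
ASTRONAUT_2_LIMIT = np.array([30.0, 30.0, 30.0, 200.0, 200.0, 200.0])


def saturate_wrench(wrench, limit):
    """Clip each component of a 6-D force screw to the symmetric range [-limit, +limit].

    Per-axis clipping is an assumption made for illustration only.
    """
    wrench = np.asarray(wrench, dtype=float)
    if wrench.shape != (6,):
        raise ValueError("a force screw must have exactly 6 components")
    return np.clip(wrench, -limit, limit)


# Hypothetical commanded force screw that exceeds several limits.
commanded = [100.0, -10.0, 0.0, 500.0, -450.0, 20.0]
limited_1 = saturate_wrench(commanded, ASTRONAUT_1_LIMIT)
limited_2 = saturate_wrench(commanded, ASTRONAUT_2_LIMIT)
```

Because Astronaut 2's limits are half of Astronaut 1's, the same commanded force screw is truncated more strongly for Astronaut 2.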

3.3. Experimental Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Nomenclature

| the damping coefficient matrix of the robot | |

| the equivalent damping coefficient | |

| the damping coefficient matrix of the motor | |

| the Coriolis force and centrifugal force | |

| the back electromotive force constant matrix of the motor | |

| the motor torque constant matrix | |

| the force screw of the object acting on the load | |

| the object force screw exerted by the astronauts | |

| the object force screw produced by the non-inertial system | |

| the force screw exerted by the i-th astronaut on the load | |

| the external force screw applied to the end of the robot | |

| the moment of gravity | |

| the space inertia matrix of the load | |

| the space inertia matrix expressed in the proxy point coordinate system | |

| the transmission ratio matrix | |

| the current of the motor | |

| the Jacobian matrix of the forward kinematics | |

| the equivalent moment of inertia | |

| the motor moment of inertia matrix | |

| the generalized stiffness of the virtual force sensor | |

| the derivative coefficient matrix of the PD controller | |

| the proportional coefficient matrix of the PD controller | |

| the motor inductance matrix | |

| the inductance of the i-th joint motor | |

| the mass of the load | |

| the mass matrix of the robot | |

| the initial pose of the end coordinate system {b} | |

| the motor armature resistance matrix | |

| the screw axis of the load | |

| the screw coordinate of joint i | |

| the pose of the end coordinate system {b} | |

| the pose of the end effector of the i-th robot in the body coordinate system of the space station | |

| the pose of the payload’s centroid coordinate system {lc} in the space station body coordinate system {b} | |

| the initial pose of the payload’s centroid coordinate system {lc} in the space station body coordinate system {b} | |

| the pose of the agent point coordinate system {pi} in the space station body coordinate system {b} | |

| the pose of the base coordinate system {si} of the robot in the body coordinate system {b} | |

| the pose of the i-th force sensor in the end effector coordinate system of the i-th robot | |

| the pose of the load’s center-of-mass coordinate system at time i relative to the load’s center-of-mass coordinate system at time (i − 1) | |

| the pose of the agent point coordinate system {pi} in the load center-of-mass coordinate system {lc} | |

| the pose of the handle coordinate system in the coordinate system of the agent point {p} | |

| the pose of the virtual robot’s end effector coordinate system {E} in the virtual robot’s base coordinate system | |

| the pose of the handle coordinate system in the base coordinate system of the virtual robot | |

| the sampling time of the system | |

| the first time-constant of the virtual force sensor | |

| the second time constant of the virtual force sensor | |

| the filter parameter of the virtual force sensor | |

| the differential constant of the virtual force sensor | |

| the input of the original virtual robot system | |

| the armature voltage of the motor | |

| the first part of the inverse system output | |

| the second part of the inverse system output | |

| the motion screw of the object in the center-of-mass coordinate system of the load | |

| the joint angle matrix of the robot | |

| the angular velocity of the screw axis | |

| the angular velocity of the motor | |

| the angle/displacement command matrix | |

| the driving torque of robot joints | |

| the moment of inertia, Coriolis force, gravity moment, damping moment | |

| the torque generated by the motor that is applied to the external environment by the robot end link | |

Appendix A

References

- Wang, L.; Lin, L.; Chang, Y.; Song, D. Velocity Planning for Astronaut Virtual Training Robot with High-Order Dynamic Constraints. Robotica 2020, 38, 2121–2137.

- Lan, W.; Lingjie, L.; Ying, C.; Feng, X. Velocity Planning Algorithm in One-Dimensional Linear Motion for Astronaut Virtual Training. J. Astronaut. 2021, 42, 1600–1609.

- Lixun, Z.; Da, S.; Lailu, L.; Feng, X. Analysis of the Workspace of Flexible Cable Driven Haptic Interactive Robot. J. Astronaut. 2018, 39, 569–577.

- Da, S.; Lixun, Z.; Bingjun, W.; Yuan, G. The Control Strategy of Flexible Cable Driven Force Interactive Robot. Robot 2018, 40, 440–447.

- Xuewen, C.; Yuqing, L.; Xiuqing, Z.; Bohe, Z. Research on Virtual Training Simulation System of Astronaut Cooperative Operation in Space. J. Syst. Simul. 2013, 25, 2348–2354.

- Yunrong, Z.; Zhili, Z.; Xiangyang, L. Armament Research Foundation “Large complex equipment collaborative virtual maintenance training system basic technology research”. In Proceedings of the 2017 4th International Conference on Education, Management and Computing Technology (ICEMCT 2017), Hangzhou, China, 15 April 2017.

- Leoncini, P.; Sikorski, B.; Baraniello, V. Multiple NUI Device Approach to Full Body Tracking for Collaborative Virtual Environments. In Proceedings of the 4th International Conference, AVR 2017, Ugento, Italy, 12–15 June 2017.

- Kevin, D.; Uriel-Haile-Hernndez, B.; Rong, J. Experiences with Multi-modal Collaborative Virtual Laboratory (MMCVL). In Proceedings of the 2017 IEEE Third International Conference on Multimedia Big Data (BigMM), Laguna Hills, CA, USA, 19–21 April 2017.

- Horst, R.; Alberternst, S.; Sutter, J. A Video-texture based Approach for Realistic Avatars of Co-located Users in Immersive Virtual Environments using Low-cost Hardware. In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Prague, Czech Republic, 25–27 February 2019.

- Lin, L.; Wang, L.; Chang, Y. Virtual Human Machine Interaction Force Algorithm in Astronaut Virtual Training. In Proceedings of the 2022 12th International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Changbai Mountain, China, 27–31 July 2022.

- Mancisidor, A.; Zubizarreta, A.; Cabanes, I.; Portillo, E.; Jung, J.H. Virtual Sensors for Advanced Controllers in Rehabilitation Robotics. Sensors 2018, 18, 785.

- Buondonno, G.; De Luca, A. Combining real and virtual sensors for measuring interaction forces and moments acting on a robot. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016.

- Zhang, S.; Wang, S.; Jing, F.; Tan, M. A Sensorless Hand Guiding Scheme Based on Model Identification and Control for Industrial Robot. IEEE Trans. Ind. Inform. 2019, 15, 5204–5213.

- Roveda, L.; Bussolan, A.; Braghin, F.; Piga, D. 6D Virtual Sensor for Wrench Estimation in Robotized Interaction Tasks Exploiting Extended Kalman Filter. Machines 2020, 8, 67.

- Magrini, E.; Flacco, F.; De Luca, A. Estimation of contact forces using a virtual force sensor. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014.

- Simoni, L.; Villagrossi, E.; Beschi, M.; Marini, A.; Visioli, A. On the use of a temperature based friction model for a virtual force sensor in industrial robot manipulators. In Proceedings of the 22nd IEEE International Conference on Emerging Technologies And Factory Automation (ETFA2017), Limassol, Cyprus, 12–15 September 2017.

- Yanjiang, H.; Jianhong, K.; Xianmin, Z. A Virtual Force Sensor for Robotic Manipulators Based on Dynamic Model. In Proceedings of the International Conference on Intelligent Robotics, Harbin, China, 1–3 August 2022.

- García-Martínez, J.R.; Cruz-Miguel, E.E.; Carrillo-Serrano, R.V.; Mendoza-Mondragón, F.; Toledano-Ayala, M.; Rodríguez-Reséndiz, J. A PID-Type Fuzzy Logic Controller-Based Approach for Motion Control Applications. Sensors 2020, 20, 5323.

- Cabrera-Rufino, M.A.; Ramos-Arreguín, J.M.; Rodríguez-Reséndiz, J.; Gorrostieta-Hurtado, E.; Aceves-Fernandez, M. Implementation of ANN-Based Auto-Adjustable for a Pneumatic Servo System Embedded on FPGA. Micromachines 2022, 13, 890.

- Manríquez-Padilla, C.G.; Zavala-Pérez, O.A.; Pérez-Soto, G.I.; Rodríguez-Reséndiz, J.; Camarillo-Gómez, K.A. Form-Finding Analysis of a Class 2 Tensegrity Robot. Appl. Sci. 2019, 9, 2948.

- Odry, A.; Kecskes, I.; Csik, D.; Rodríguez-Reséndiz, J.; Carbone, G.; Sarcevic, P. Performance Evaluation of Mobile Robot Pose Estimation in MARG-Driven EKF. In Proceedings of the International Conference of IFToMM ITALY 2022, Naples, Italy, 7–9 September 2022.

- Srinivasan, H.; Gupta, S.; Sheng, W.; Chen, H. Estimation of hand force from surface Electromyography signals using Artificial Neural Network. In Proceedings of the 10th World Congress on Intelligent Control and Automation, Beijing, China, 6–8 July 2012.

- Azmoudeh, B. Developing T-Type Three Degree of Freedom Force Sensor to Estimate Wrist Muscles Forces. In Proceedings of the 2017 24th National and 2nd International Iranian Conference on Biomedical Engineering (ICBME), Tehran, Iran, 30 November–1 December 2017.

- Mascaro, S.; Asada, H. Photoplethysmograph fingernail sensors for measuring finger forces without haptic obstruction. IEEE Trans. Robot. Autom. 2001, 17, 698–708.

- Hinatsu, S.; Yoshimoto, S.; Kuroda, Y.; Oshiro, O. Estimation of Fingertip Contact Force by Plethysmography in Proximal Part of Finger. Trans. Jpn. Soc. Med. Biol. Eng. 2017, 55, 115–124.

- Sato, Y.; Inoue, J.; Iwase, M.; Hatakeyama, S. Contact Force Estimation Based on Fingertip Image and Application to Human Machine Interface. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), Bari, Italy, 6–9 October 2019.

- Lynch, K.M.; Park, F.C. Modern Robotics: Mechanics, Planning, and Control; Cambridge University Press: Cambridge, UK, 2017; pp. 245–254.

| No. | Tester 1 | Tester 2 | | |
|---|---|---|---|---|
| 1st | Move ¹ | Still ² | 0.02 | 0.5 |
| 2nd | Still | Move | 0.02 | 0.5 |

| Work | Rise Time ¹ | Noise Value ² | Solution Time ³ |
|---|---|---|---|
| Proposed | 1.3795 s | 5.5 N | 0.1852 s |
| [10] | 1.4412 s | 200 N | 0.1190 s |
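As a worked reading of the comparison table, the sketch below computes the relative change of each metric of the proposed algorithm with respect to the algorithm in [10]. The numbers are taken directly from the table; the dictionary keys and the helper `relative_change_percent` are names introduced here for illustration only.

```python
# Values copied from the comparison table (proposed algorithm vs. [10]).
proposed = {"rise_time_s": 1.3795, "noise_n": 5.5, "solution_time_s": 0.1852}
reference_10 = {"rise_time_s": 1.4412, "noise_n": 200.0, "solution_time_s": 0.1190}


def relative_change_percent(new, old):
    """Signed change of `new` relative to `old`, in percent (negative means a reduction)."""
    return (new - old) / old * 100.0


changes = {
    metric: relative_change_percent(proposed[metric], reference_10[metric])
    for metric in proposed
}

for metric, change in changes.items():
    print(f"{metric}: {change:+.2f} %")
```

Read this way, the table reports a rise time about 4% shorter and a noise value about 97% lower for the proposed algorithm, at the cost of a solution time about 56% longer than that of [10].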

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, L.; Wang, L.; Chang, Y.; Zhang, L.; Xue, F. 6-Dimensional Virtual Human-Machine Interaction Force Estimation Algorithm in Astronaut Virtual Training. Machines 2023, 11, 46. https://doi.org/10.3390/machines11010046
