Author Contributions

Conceptualization, C.P., Z.F. and S.G.V.; software, C.P. and Z.F.; formal analysis, C.P., Z.F. and S.G.V.; writing—original draft preparation, C.P.; writing—review and editing, C.P., Z.F. and S.G.V.; project administration, S.G.V.; funding acquisition, S.G.V. All authors have read and agreed to the published version of the manuscript.

Figure 1.

Top view (from a satellite image) of a typical vineyard block: vines, rows between the vines, and headland space. The symbols represent a typical field coverage navigation task. The robot starts at an initial position near the entrance of a user-defined row (green dot); it traverses a set of rows (orange vertical arrows) toward a specific direction (red arrow points from left to right); and exits from a user-defined row (yellow star).

Figure 1.

Top view (from a satellite image) of a typical vineyard block: vines, rows between the vines, and headland space. The symbols represent a typical field coverage navigation task. The robot starts at an initial position near the entrance of a user-defined row (green dot); it traverses a set of rows (orange vertical arrows) toward a specific direction (red arrow points from left to right); and exits from a user-defined row (yellow star).

Figure 2.

Four common cases of the headland space at the exit from a vineyard block. (a) Configuration I: the rows between adjacent blocks are parallel; (b) configuration II: the rows between adjacent blocks are slightly slanted; (c) configuration III: the rows between adjacent blocks are almost perpendicular; and (d) configuration IV: there is no adjacent block.

Figure 2.

Four common cases of the headland space at the exit from a vineyard block. (a) Configuration I: the rows between adjacent blocks are parallel; (b) configuration II: the rows between adjacent blocks are slightly slanted; (c) configuration III: the rows between adjacent blocks are almost perpendicular; and (d) configuration IV: there is no adjacent block.

Figure 3.

Tree trunks are blocked by tall grass.

Figure 3.

Tree trunks are blocked by tall grass.

Figure 4.

The figure shows the orchard row frame {R}, the vehicle frame {V}, the template frame {T}, and the definition of lateral and heading offsets.

Figure 4.

The figure shows the orchard row frame {R}, the vehicle frame {V}, the template frame {T}, and the definition of lateral and heading offsets.

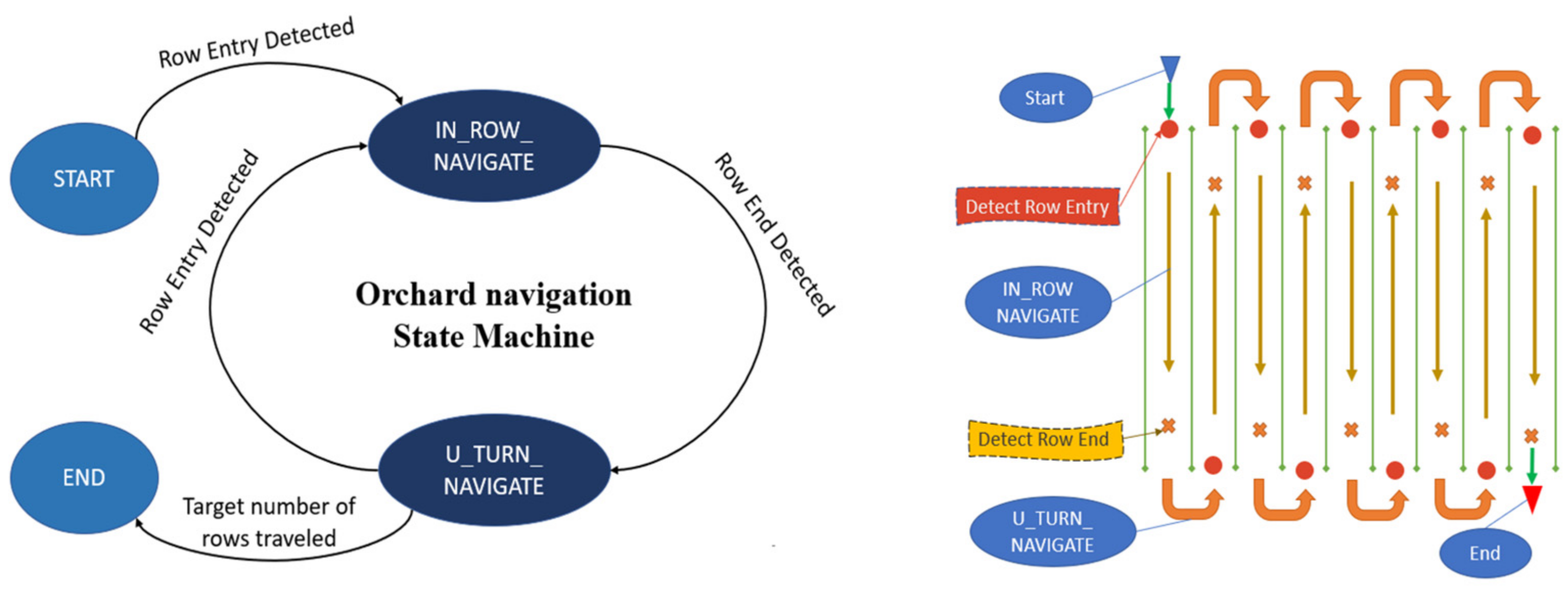

Figure 5.

State machine model for orchard navigation (left); robot states illustrated on a skeleton field map (right).

Figure 5.

State machine model for orchard navigation (left); robot states illustrated on a skeleton field map (right).

Figure 6.

Waypoints generated in different states. The blue points are generated in the “IN_ROW _NAVIGATE” state. The red points are generated in the “U_TURN_NAVIGATE” state.

Figure 6.

Waypoints generated in different states. The blue points are generated in the “IN_ROW _NAVIGATE” state. The red points are generated in the “U_TURN_NAVIGATE” state.

Figure 7.

Frequency histograms of the x-coordinates of the left and right (tree canopy) points in {T} when the robot is near the end of the row. The red bins represent the detected row-end segment. The stars are the manually measured row endpoints. The blue dashed line is the detected row centerline.

Figure 7.

Frequency histograms of the x-coordinates of the left and right (tree canopy) points in {T} when the robot is near the end of the row. The red bins represent the detected row-end segment. The stars are the manually measured row endpoints. The blue dashed line is the detected row centerline.

Figure 8.

Measuring the range of the depth camera in the designated mapping range.

Figure 8.

Measuring the range of the depth camera in the designated mapping range.

Figure 9.

Visualization of markers from ROS-RVIZ visualization package. The brown circle represents the detected end of the row. The cyan-colored dotted line shows the waypoints on the reference path. As the robot (blue box) enters a new row, a drift error would cause it to collide with the vine row to its left if it followed the reference path. In our approach, the robot is guided by a reactive path planner, which generates a path (red curve) that deviates from the reference path and guides the robot into the row, where navigation can rely on template localization.

Figure 9.

Visualization of markers from ROS-RVIZ visualization package. The brown circle represents the detected end of the row. The cyan-colored dotted line shows the waypoints on the reference path. As the robot (blue box) enters a new row, a drift error would cause it to collide with the vine row to its left if it followed the reference path. In our approach, the robot is guided by a reactive path planner, which generates a path (red curve) that deviates from the reference path and guides the robot into the row, where navigation can rely on template localization.

Figure 10.

The mobile robot that was used as the experimental platform.

Figure 10.

The mobile robot that was used as the experimental platform.

Figure 11.

The map of the tree rows (yellow lines) and an example trace of the robot path (blue points represent RTK-GPS positions) during an experiment.

Figure 11.

The map of the tree rows (yellow lines) and an example trace of the robot path (blue points represent RTK-GPS positions) during an experiment.

Figure 12.

(a) Estimated heading deviation; (b) number of valid measurements; and (c) row end distance estimation. In all figures, the horizontal axis is the actual distance of the robot to the end of the row.

Figure 12.

(a) Estimated heading deviation; (b) number of valid measurements; and (c) row end distance estimation. In all figures, the horizontal axis is the actual distance of the robot to the end of the row.

Figure 13.

Robot path for the first 10 rows. The blue curve corresponds to the “IN_ROW_NAVIGATE” state. The red dots represent the points where the robot detected the row entry. The green stars correspond to the positions where the robot detected the row end and entered the “U_TURN_NAVIGATE” state. The yellow diamonds correspond to the detected row ends on the robot’s turning sides.

Figure 13.

Robot path for the first 10 rows. The blue curve corresponds to the “IN_ROW_NAVIGATE” state. The red dots represent the points where the robot detected the row entry. The green stars correspond to the positions where the robot detected the row end and entered the “U_TURN_NAVIGATE” state. The yellow diamonds correspond to the detected row ends on the robot’s turning sides.

Figure 14.

The number of valid points (a), yaw deviations in rads (b), and row-end distance in meters (c) over time while traversing the first 10 rows of the experiment, and (d) shows the state of the robot: “0” represents “IN ROW NAVIGATE,” and “1” represents “U_TURN_NAVIGATE”. The dashed red line is the ground truth—ideal case—state, and the solid blue line is the computed state during the experiment.

Figure 14.

The number of valid points (a), yaw deviations in rads (b), and row-end distance in meters (c) over time while traversing the first 10 rows of the experiment, and (d) shows the state of the robot: “0” represents “IN ROW NAVIGATE,” and “1” represents “U_TURN_NAVIGATE”. The dashed red line is the ground truth—ideal case—state, and the solid blue line is the computed state during the experiment.

Figure 15.

Each plot shows the robot’s heading deviation—true vs. estimated heading (blue line), the valid points ratio—the number of valid points divided by 1000) (orange line), and the yaw angle—ground truth heading measured by GNSS (green dotted line) as functions of time while the robot performs a U-turn maneuver on the path shown in

Figure 13. The four plots correspond to the first four U-turns. The vertical red dashed line represents the time instant of transition from task T3 (U-turn maneuver) to T1 (enter the row).

Figure 15.

Each plot shows the robot’s heading deviation—true vs. estimated heading (blue line), the valid points ratio—the number of valid points divided by 1000) (orange line), and the yaw angle—ground truth heading measured by GNSS (green dotted line) as functions of time while the robot performs a U-turn maneuver on the path shown in

Figure 13. The four plots correspond to the first four U-turns. The vertical red dashed line represents the time instant of transition from task T3 (U-turn maneuver) to T1 (enter the row).

Figure 16.

Simulated row-ending conditions: the blue points are points measured during the experiment; the orange points are simulated tree row points; the red dashed line is the row’s centerline; and the green dashed line is at a distance of 7 m from the end of the row. The small black arrows are the estimated robot position while the robot moves along the row’s centerline. (

a) Simulated configuration II: the rows of vines in neighboring blocks are slanted to each other. The figure shows point clouds that correspond to

Figure 2b. The robot traverses a row in the right block (blue point cloud) and travels toward the left block (+X-axis). The vines of the block on the left (orange point cloud) are slanted with respect to the vines of the block on the right. The robot will perceive the orange points as it gets closer to the end of the (blue) row. The gap distance between the blocks (headland width) equals 4 m. (

b) Simulated configuration I: the rows of vines in neighboring blocks are parallel. The figure shows point clouds that correspond to

Figure 2a. The robot traverses a row in the right block (blue point cloud) and travels toward the left block (+X-axis). The vines of the block on the left (orange point cloud) are parallel with respect to the vines of the block on the right. The robot will perceive the orange points as it gets closer to the end of the (blue) row. The gap distance between the blocks (headland width) equals 4 m.

Figure 16.

Simulated row-ending conditions: the blue points are points measured during the experiment; the orange points are simulated tree row points; the red dashed line is the row’s centerline; and the green dashed line is at a distance of 7 m from the end of the row. The small black arrows are the estimated robot position while the robot moves along the row’s centerline. (

a) Simulated configuration II: the rows of vines in neighboring blocks are slanted to each other. The figure shows point clouds that correspond to

Figure 2b. The robot traverses a row in the right block (blue point cloud) and travels toward the left block (+X-axis). The vines of the block on the left (orange point cloud) are slanted with respect to the vines of the block on the right. The robot will perceive the orange points as it gets closer to the end of the (blue) row. The gap distance between the blocks (headland width) equals 4 m. (

b) Simulated configuration I: the rows of vines in neighboring blocks are parallel. The figure shows point clouds that correspond to

Figure 2a. The robot traverses a row in the right block (blue point cloud) and travels toward the left block (+X-axis). The vines of the block on the left (orange point cloud) are parallel with respect to the vines of the block on the right. The robot will perceive the orange points as it gets closer to the end of the (blue) row. The gap distance between the blocks (headland width) equals 4 m.

![Machines 11 00084 g016]()

Figure 17.

Valid points ratio (blue points) and deviation of estimated yaw angle (orange points) versus the x-coordinate of the robot in the simulation. As the robot approaches the end of the row (x gets closer to 25 m), more and more points from the neighboring block enter the FOV of the camera. (a) Simulated configuration II: slanted rows. Since the vines are not aligned, the points measured by the Lidar from the neighboring rows do not match the sensor template of the current block rows; hence, the valid points ratio and the yaw angle deviation change drastically. The row-end detection method is successful. (b) Simulated configuration I: parallel, aligned rows. Since the vines are aligned, and parallel, the points measured by the Lidar from the neighboring rows match the sensor template of the current block rows well. Hence, the valid points ratio and the yaw angle deviation do not change significantly. The row-end detection method fails.

Figure 17.

Valid points ratio (blue points) and deviation of estimated yaw angle (orange points) versus the x-coordinate of the robot in the simulation. As the robot approaches the end of the row (x gets closer to 25 m), more and more points from the neighboring block enter the FOV of the camera. (a) Simulated configuration II: slanted rows. Since the vines are not aligned, the points measured by the Lidar from the neighboring rows do not match the sensor template of the current block rows; hence, the valid points ratio and the yaw angle deviation change drastically. The row-end detection method is successful. (b) Simulated configuration I: parallel, aligned rows. Since the vines are aligned, and parallel, the points measured by the Lidar from the neighboring rows match the sensor template of the current block rows well. Hence, the valid points ratio and the yaw angle deviation do not change significantly. The row-end detection method fails.

Figure 18.

Simulated configuration I: neighboring blocks with parallel, aligned rows and gap distances (headland widths) equal to 5 m and 6 m for the left and right figures, respectively. The valid point ratios (blue points) and the deviation of yaw angles (orange points) are shown as functions of the x-coordinate of the robot. As the robot approaches the end of the row (x gets closer to 25 m), more and more points from the neighboring block enter the FOV of the camera. The row-end detection algorithm could barely work (it failed) when the headland width was 5 m (left) shows minor deviations in both metrics). Still, it was successful when the distance was 6 m (right) shows drastic changes in valid point ratios and yaw deviation).

Figure 18.

Simulated configuration I: neighboring blocks with parallel, aligned rows and gap distances (headland widths) equal to 5 m and 6 m for the left and right figures, respectively. The valid point ratios (blue points) and the deviation of yaw angles (orange points) are shown as functions of the x-coordinate of the robot. As the robot approaches the end of the row (x gets closer to 25 m), more and more points from the neighboring block enter the FOV of the camera. The row-end detection algorithm could barely work (it failed) when the headland width was 5 m (left) shows minor deviations in both metrics). Still, it was successful when the distance was 6 m (right) shows drastic changes in valid point ratios and yaw deviation).