Fuzzy Control of Self-Balancing, Two-Wheel-Driven, SLAM-Based, Unmanned System for Agriculture 4.0 Applications

Abstract

1. Introduction

- A fuzzy-based controller was developed and tested for a two-wheel-driven unmanned system.

- A novel SLAM algorithm was developed and tested in a greenhouse environment.

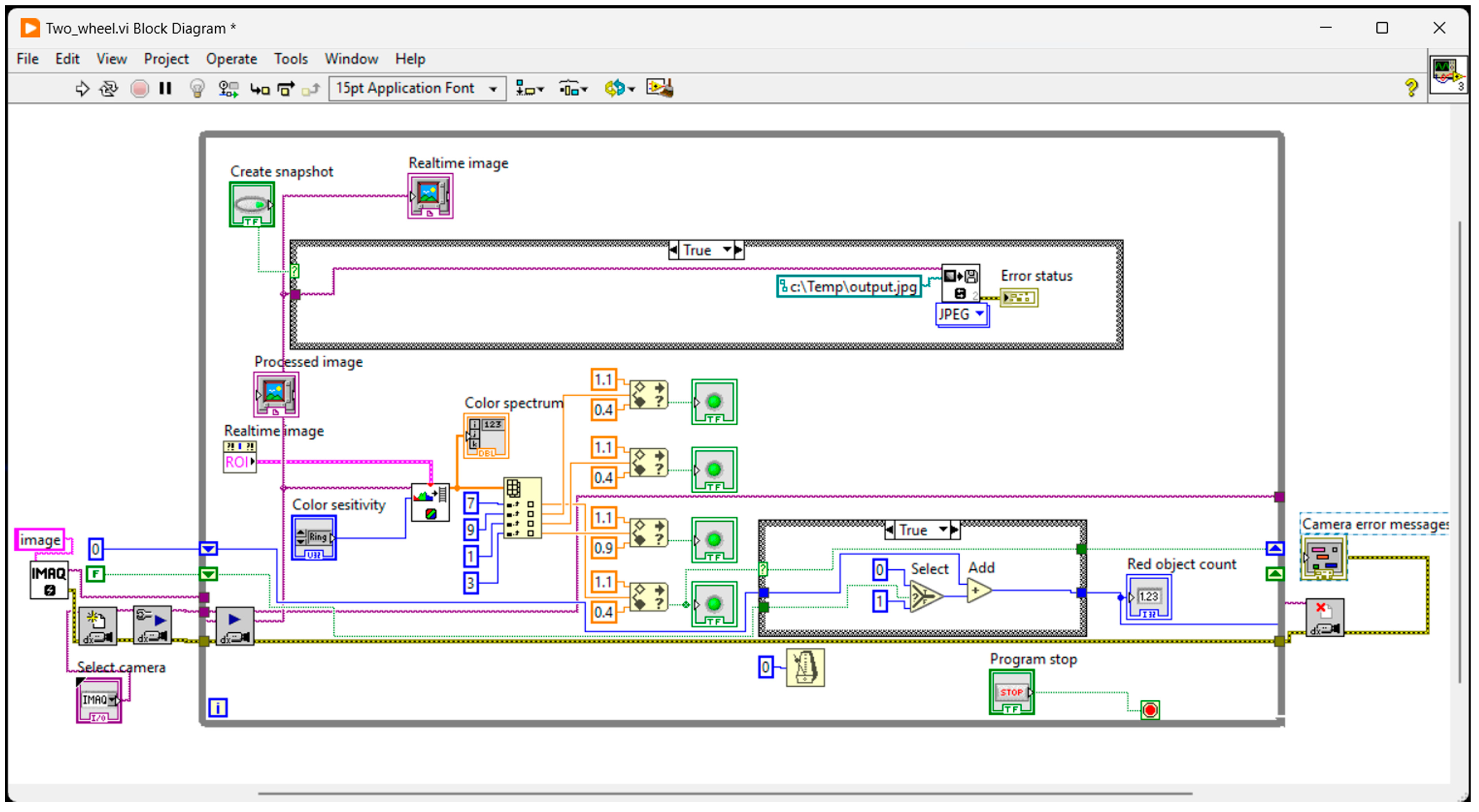

- A LabVIEW—Vision-based application was developed to grade and assess the ripeness of the greenhouse fruits, in this case tomatoes.

1.1. Related Work

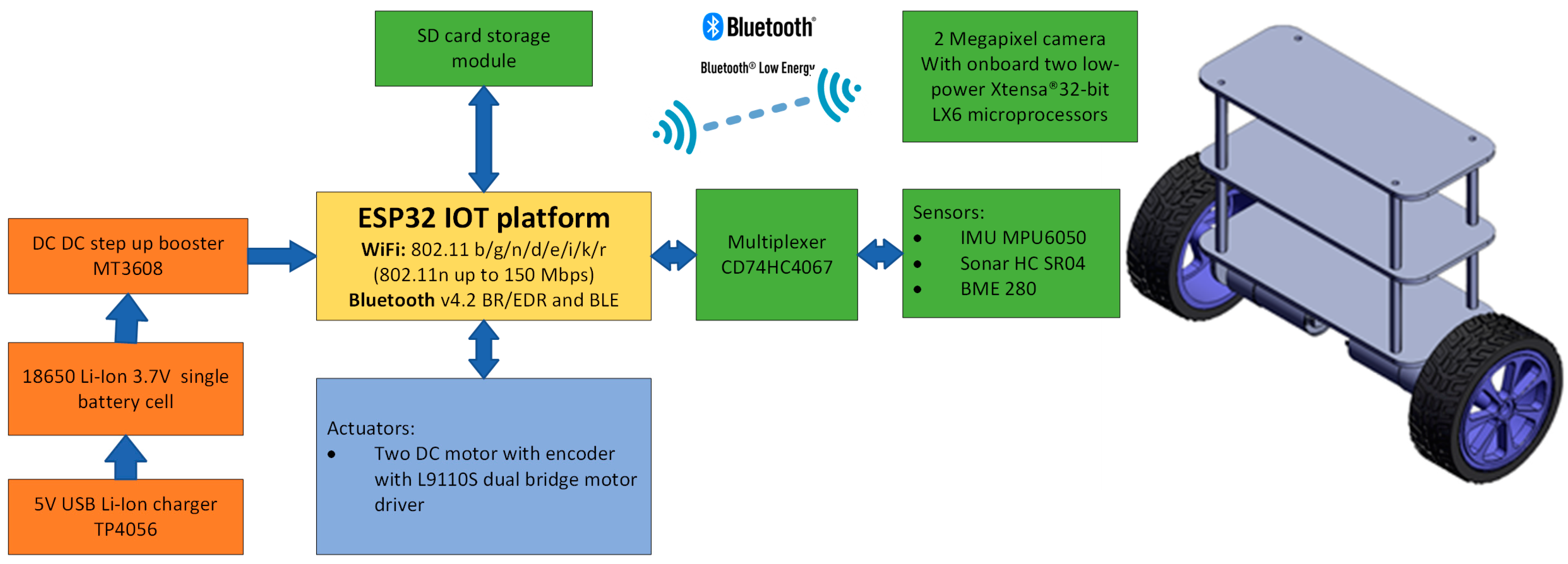

2. Materials and Methods

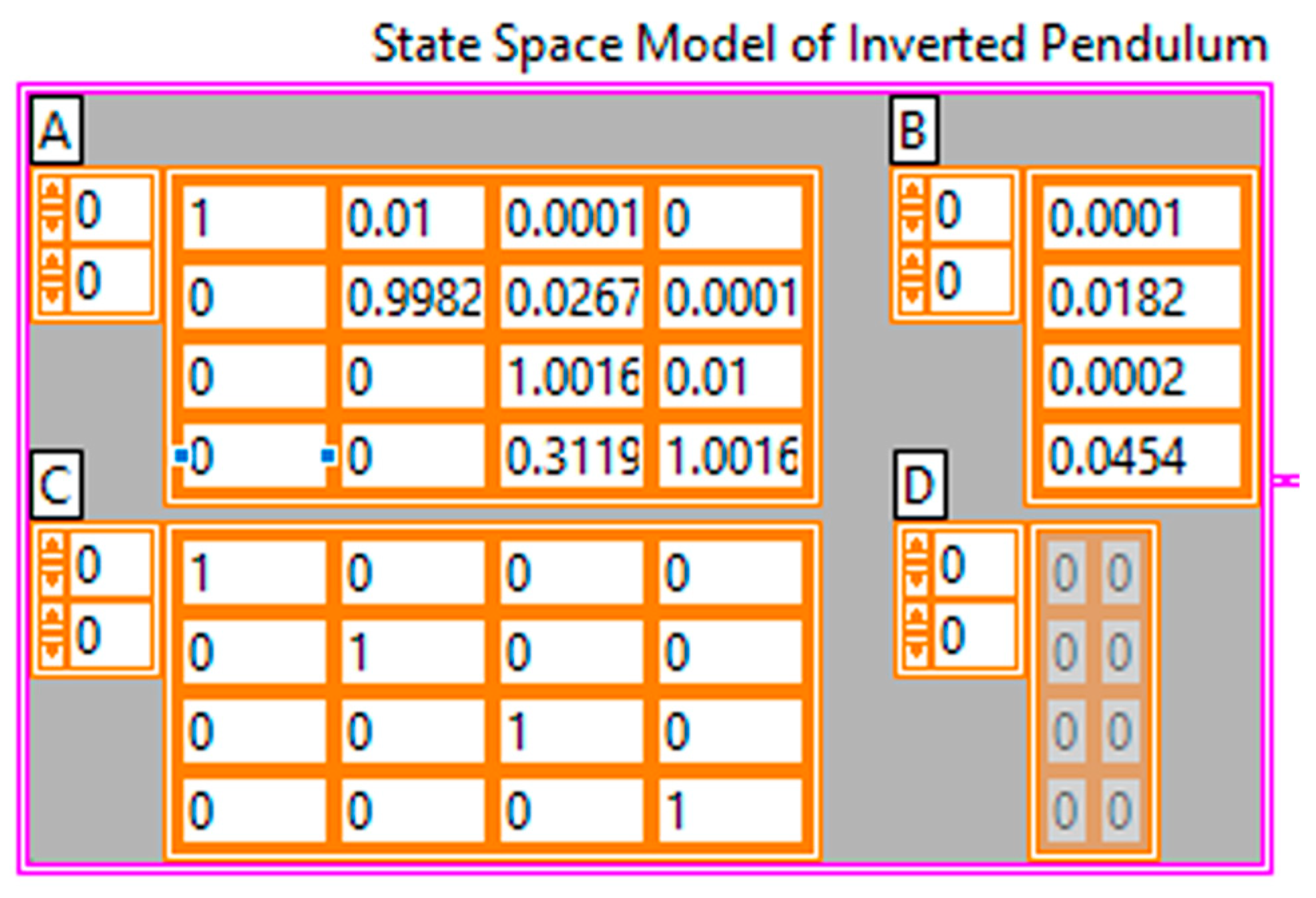

2.1. Transfer Function

2.2. State-Space Model

3. Results

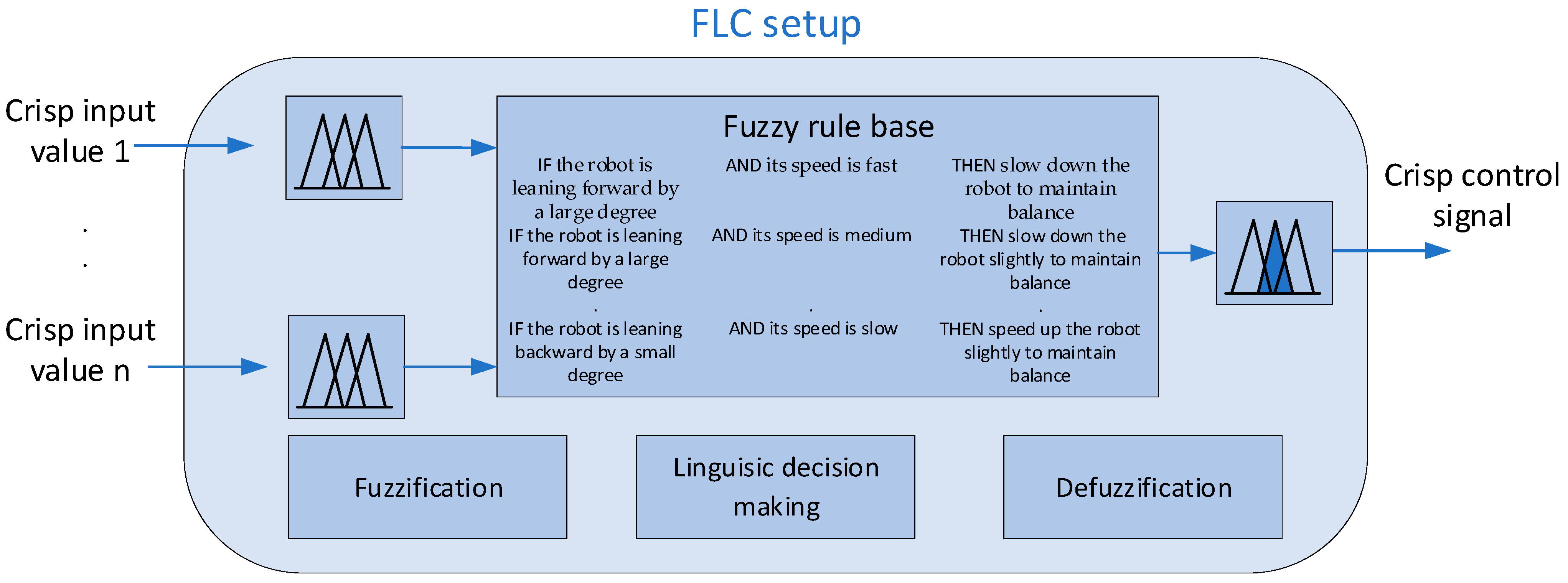

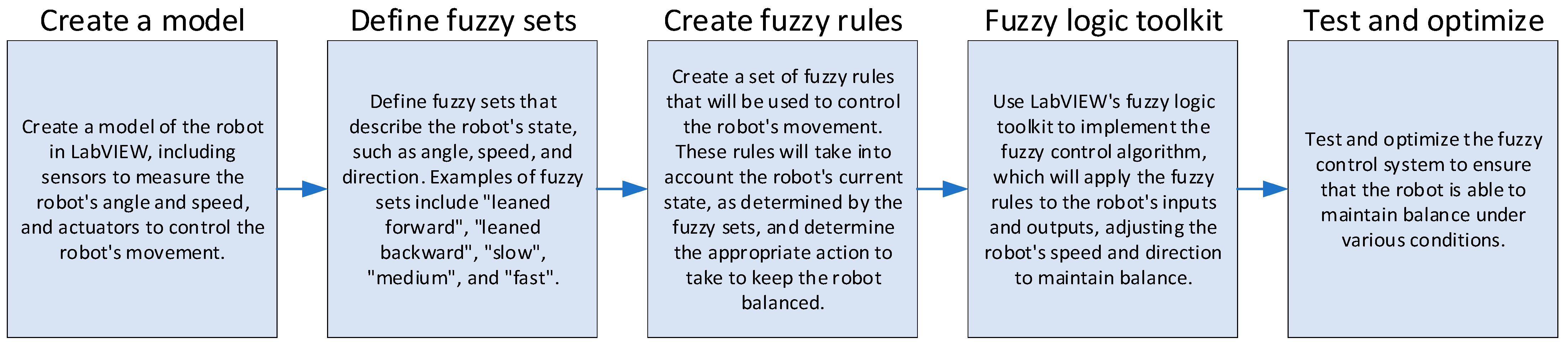

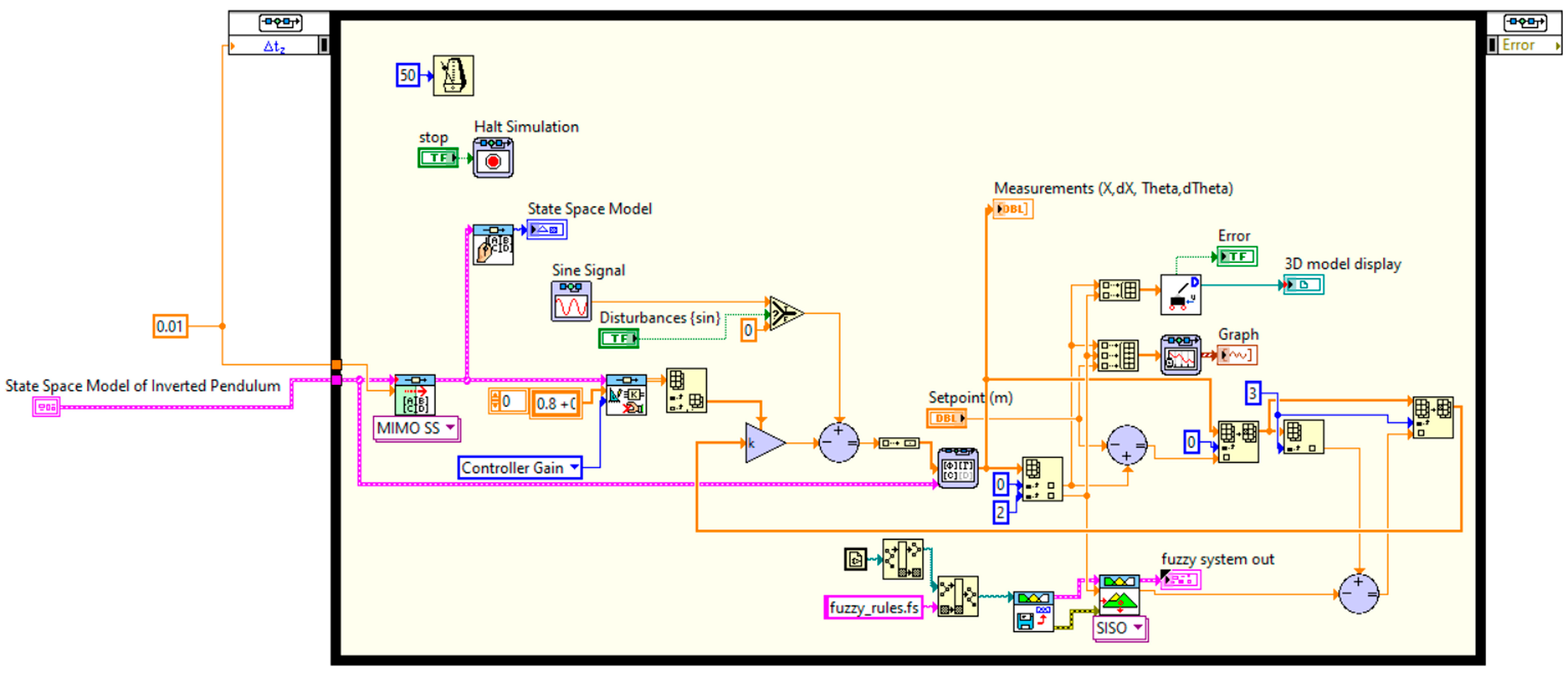

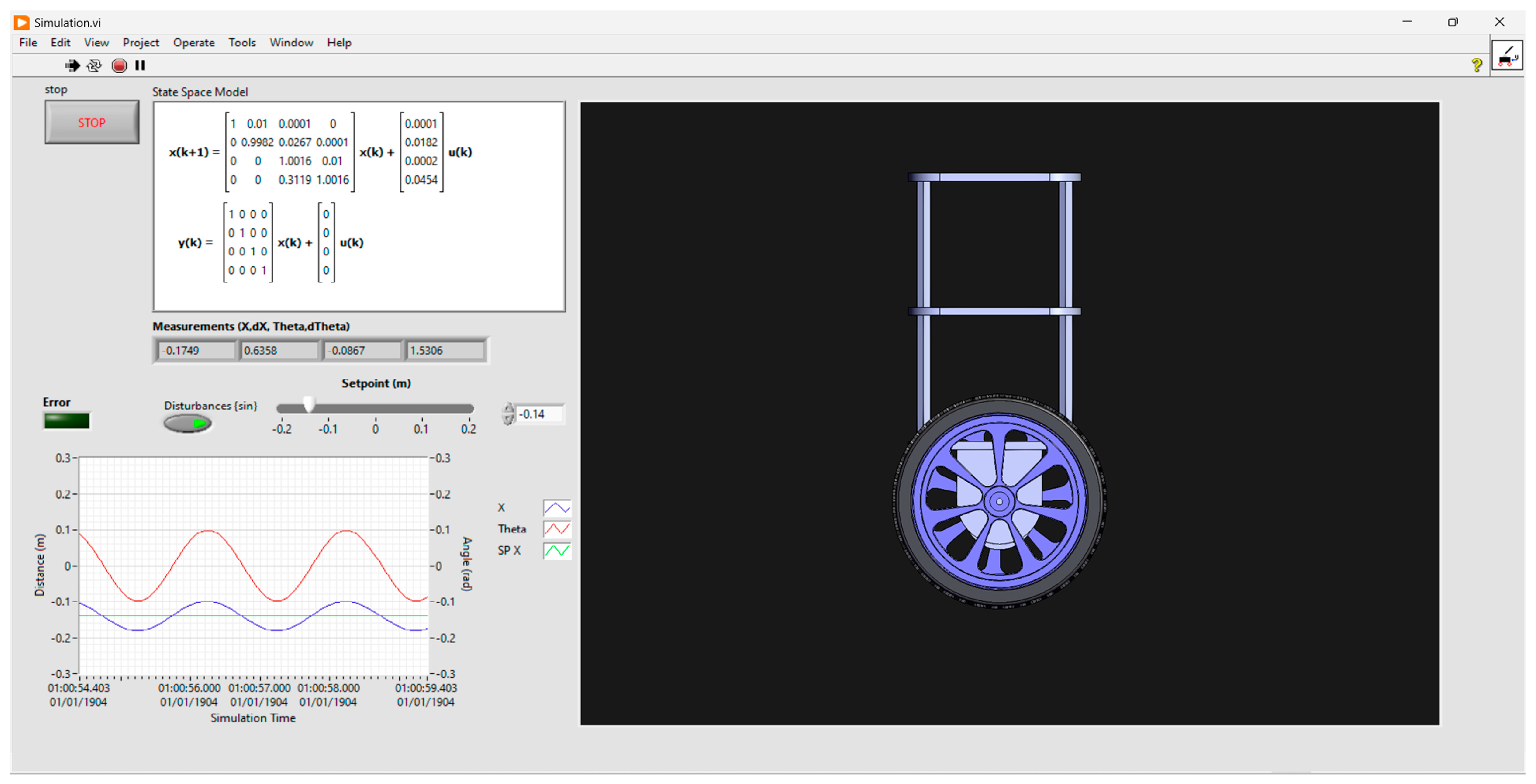

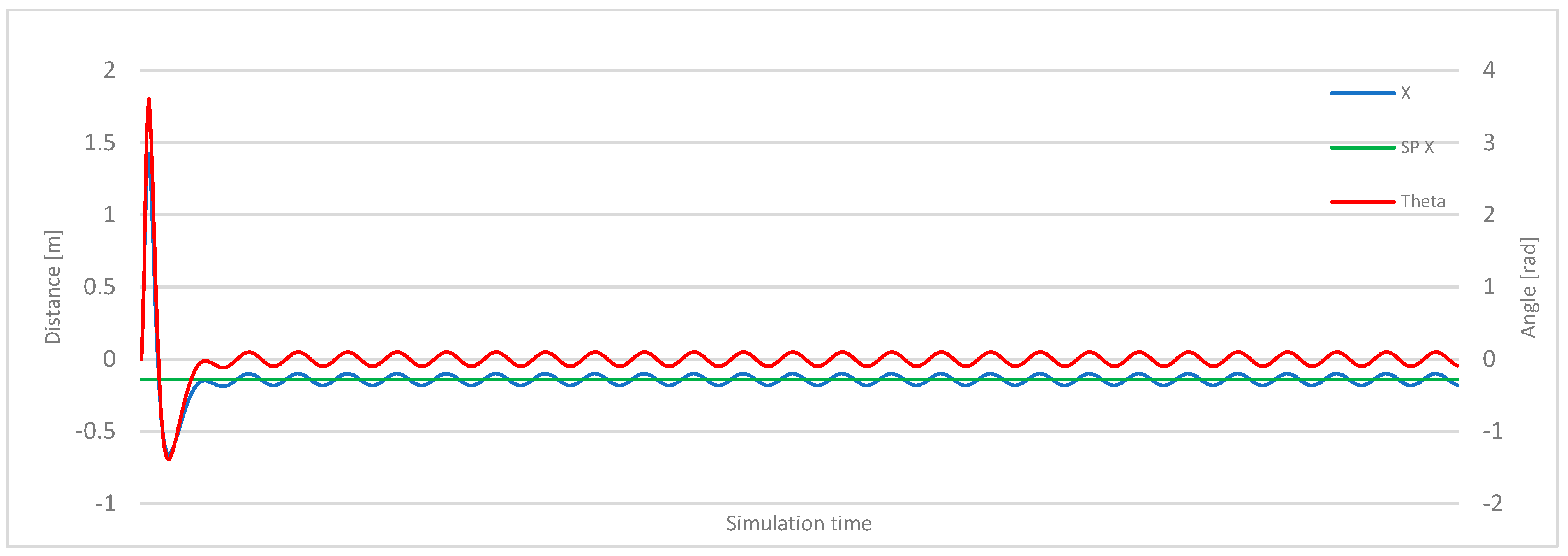

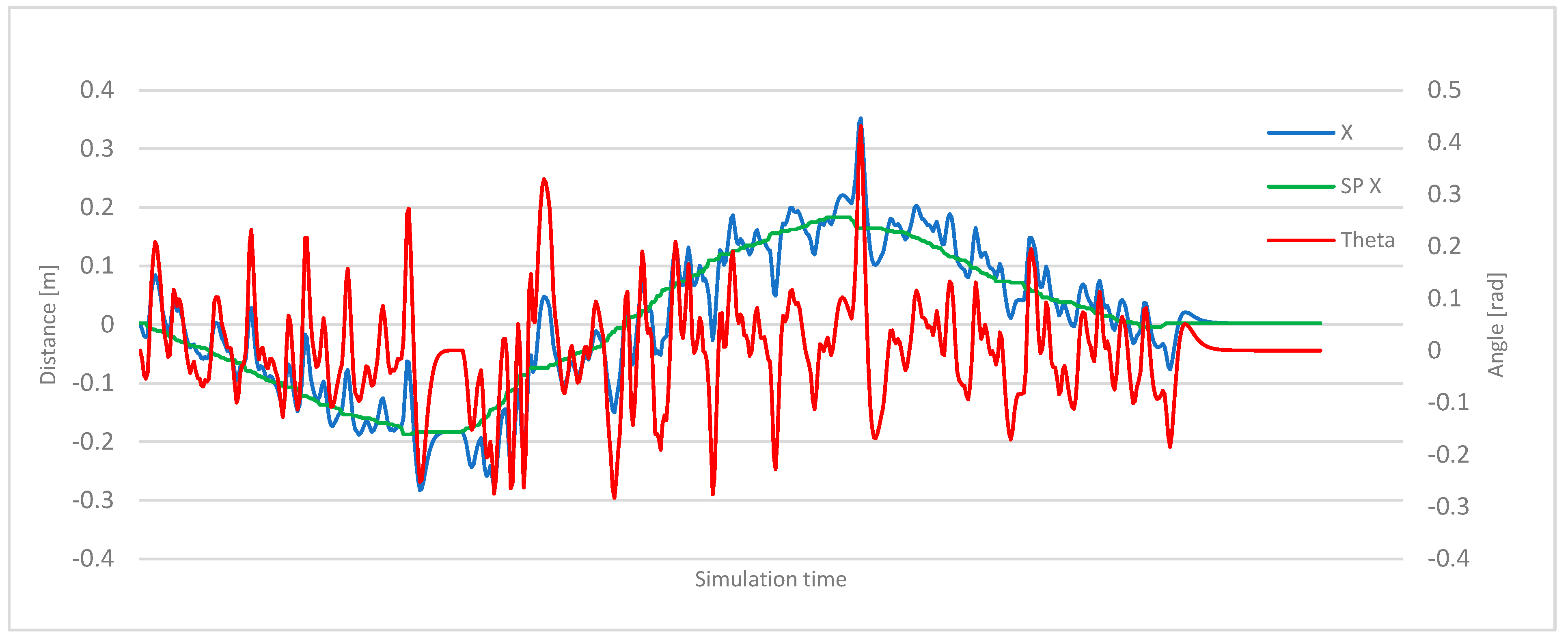

3.1. Fuzzy Control of Self-Balancing, Two-Wheel Robot

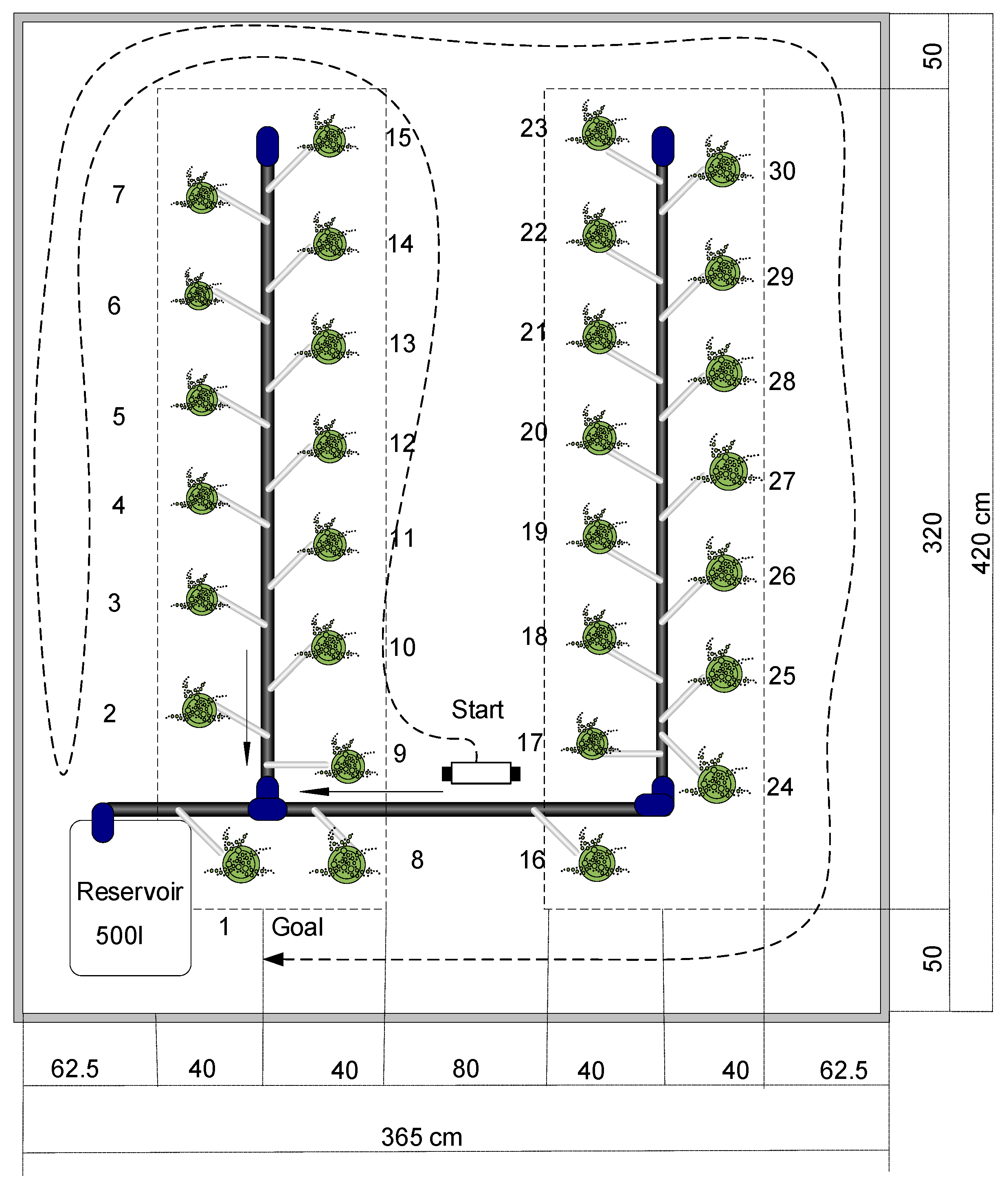

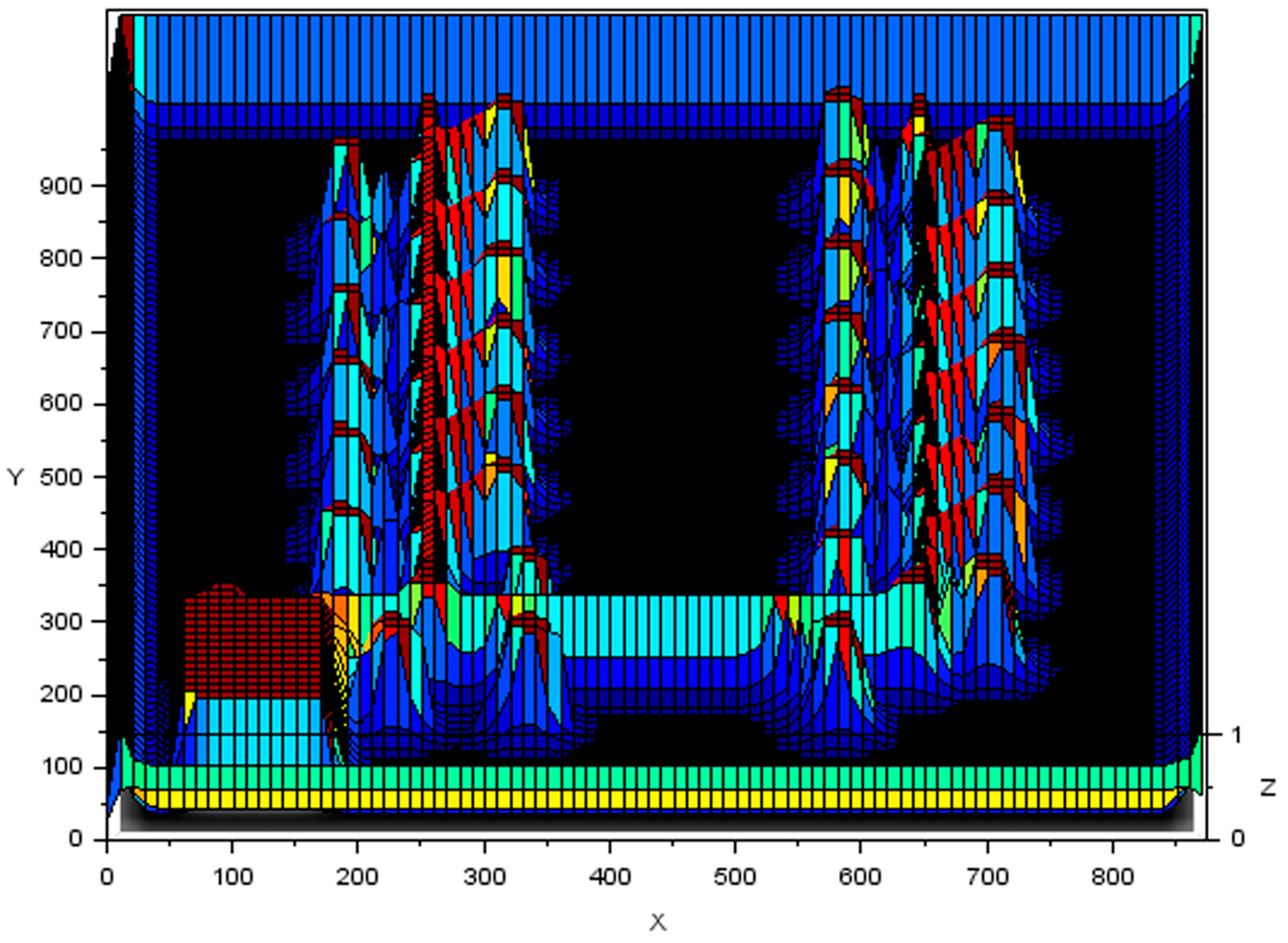

3.2. SLAM

- Control based on information obtained from internal sensors on the robot;

- Control based on information obtained from external sensors, where the robots are controlled by a central computer that acts as the brain of all robots;

- Control based on information obtained from both internal and external sensors, a hybrid of the previous two approaches that combines the advantages of both.
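The hybrid option in the last item can be illustrated with a minimal sketch. It assumes, hypothetically, that the onboard sensors and an external system each supply an independent scalar position estimate with a known variance; the `Estimate` type and the `fuse_estimates` function are illustrative names, not part of the article. The two estimates are merged by inverse-variance weighting, a standard fusion rule that is not necessarily the one used in the described system.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """A scalar position estimate (m) with its variance (m^2). Hypothetical type."""
    value: float
    variance: float


def fuse_estimates(internal: Estimate, external: Estimate) -> Estimate:
    """Combine an onboard and an external estimate by inverse-variance weighting.

    The less certain source (larger variance) receives the smaller weight,
    and the fused variance is never larger than either input variance.
    """
    w_int = 1.0 / internal.variance
    w_ext = 1.0 / external.variance
    value = (w_int * internal.value + w_ext * external.value) / (w_int + w_ext)
    variance = 1.0 / (w_int + w_ext)
    return Estimate(value, variance)


if __name__ == "__main__":
    # Hypothetical numbers: drifting wheel odometry vs. a sharper external fix.
    odometry = Estimate(value=2.30, variance=0.04)
    external_fix = Estimate(value=2.10, variance=0.01)
    print(fuse_estimates(odometry, external_fix))
```

With the sample values above, the sharper external estimate dominates: the fused position is about 2.14 m with a variance of 0.008 m^2, lower than either input variance.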

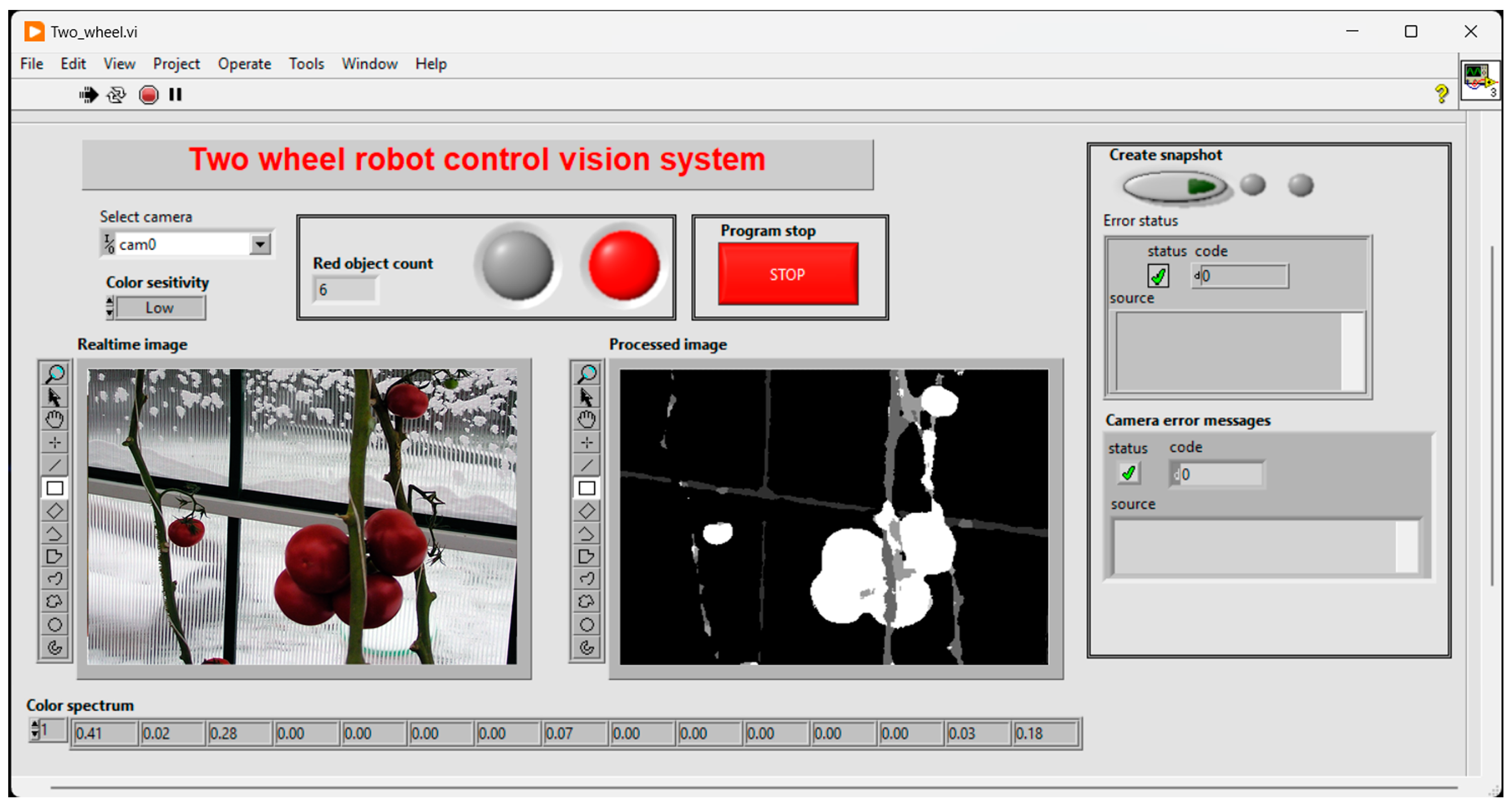

3.3. Computer Vision and Classification

4. Discussion

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

| IF | AND | THEN |
|---|---|---|
| the robot is leaning forward by a large degree | its speed is fast | slow down the robot to maintain balance |
| the robot is leaning forward by a large degree | its speed is medium | slow down the robot slightly to maintain balance |
| the robot is leaning forward by a small degree | its speed is fast | maintain the current speed to maintain balance |
| the robot is leaning forward by a small degree | its speed is medium | maintain the current speed to maintain balance |
| the robot is leaning forward by a small degree | its speed is slow | speed up the robot slightly to maintain balance |
| the robot is leaning backward by a large degree | its speed is fast | slow down the robot significantly to maintain balance |
| the robot is leaning backward by a large degree | its speed is medium | slow down the robot moderately to maintain balance |
| the robot is leaning backward by a small degree | its speed is fast | speed up the robot slightly to maintain balance |
| the robot is leaning backward by a small degree | its speed is medium | maintain the current speed to maintain balance |
| the robot is leaning backward by a small degree | its speed is slow | speed up the robot slightly to maintain balance |
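The rule base in the table above can be sketched in Python as follows. This is a minimal illustration, not the controller implemented in the article: the membership-function ranges (tilt in degrees, speed magnitude in m/s), the numeric output assigned to each action, and the names `trap`, `speed_correction`, `TILT_SETS`, `SPEED_SETS`, `ACTIONS`, and `RULES` are all hypothetical. AND is evaluated with the minimum operator and the rule outputs are combined by a weighted average (zero-order Sugeno inference), which may differ from the inference and defuzzification method used by the author.

```python
import math

INF = math.inf


def trap(x: float, a: float, b: float, c: float, d: float) -> float:
    """Trapezoidal membership: rises a->b, equals 1 on [b, c], falls c->d."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


# Hypothetical fuzzy sets. Tilt in degrees (positive = leaning forward),
# speed magnitude in m/s. Triangles are trapezoids with b == c.
TILT_SETS = {
    "back_large": (-INF, -INF, -20.0, -10.0),
    "back_small": (-15.0, -7.5, -7.5, 0.0),
    "fwd_small": (0.0, 7.5, 7.5, 15.0),
    "fwd_large": (10.0, 20.0, INF, INF),
}
SPEED_SETS = {
    "slow": (-INF, -INF, 0.0, 0.4),
    "medium": (0.2, 0.5, 0.5, 0.8),
    "fast": (0.6, 1.0, INF, INF),
}

# Hypothetical crisp outputs: normalized change of the commanded wheel speed
# (negative = decelerate, positive = accelerate).
ACTIONS = {
    "slow_down_significantly": -1.0,
    "slow_down_moderately": -0.6,
    "slow_down": -0.6,
    "slow_down_slightly": -0.3,
    "maintain": 0.0,
    "speed_up_slightly": 0.3,
}

# The ten rules of the table: (IF tilt, AND speed, THEN action).
RULES = [
    ("fwd_large", "fast", "slow_down"),
    ("fwd_large", "medium", "slow_down_slightly"),
    ("fwd_small", "fast", "maintain"),
    ("fwd_small", "medium", "maintain"),
    ("fwd_small", "slow", "speed_up_slightly"),
    ("back_large", "fast", "slow_down_significantly"),
    ("back_large", "medium", "slow_down_moderately"),
    ("back_small", "fast", "speed_up_slightly"),
    ("back_small", "medium", "maintain"),
    ("back_small", "slow", "speed_up_slightly"),
]


def speed_correction(tilt_deg: float, speed: float) -> float:
    """Fire every rule (min for AND) and defuzzify by weighted average."""
    num = den = 0.0
    for tilt_label, speed_label, action in RULES:
        strength = min(trap(tilt_deg, *TILT_SETS[tilt_label]),
                       trap(speed, *SPEED_SETS[speed_label]))
        num += strength * ACTIONS[action]
        den += strength
    return num / den if den > 0.0 else 0.0  # no rule fired: no correction


if __name__ == "__main__":
    print(speed_correction(12.0, 0.9))
```

For a 12° forward tilt at 0.9 m/s, two rules fire ("forward large, fast" with strength 0.2 and "forward small, fast" with strength 0.4), so the weighted average gives a correction of about -0.2, a mild deceleration.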

| Module | Model |
|---|---|
| Chassis | Custom-built aluminum and 3D-printed PLA |
| Motor | DC motor |
| Motor driver | L9110S dual bridge |
| Controller | ESP32s |
| Sonar | HC-SR04 |
| IMU | MPU6050 |
| Temp./RH/Pressure sensor | BME280 |
| Camera | ESP32 Cam |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Simon, J. Fuzzy Control of Self-Balancing, Two-Wheel-Driven, SLAM-Based, Unmanned System for Agriculture 4.0 Applications. Machines 2023, 11, 467. https://doi.org/10.3390/machines11040467
