Transfer Learning Based Fault Detection for Suspension System Using Vibrational Analysis and Radar Plots

Abstract

1. Introduction

| Reference | Deep Learning Techniques Used | Mechanical System |
|---|---|---|
| [31] | Optimized deep belief network | Rolling Bearing |
| [32] | Deep convolution neural network | Rolling Bearing |
| [33] | Ensemble learning method | Rolling Bearing |
| [34] | Stacked auto encoder | Gearbox |
| [35] | Transfer learning | Spark ignition engine |
| [36] | Stacked denoising auto encoder | Centrifugal Pumps |
| [37] | Deep belief network | Induction Motor |
| [38] | Artificial neural networks | Wind Turbines |

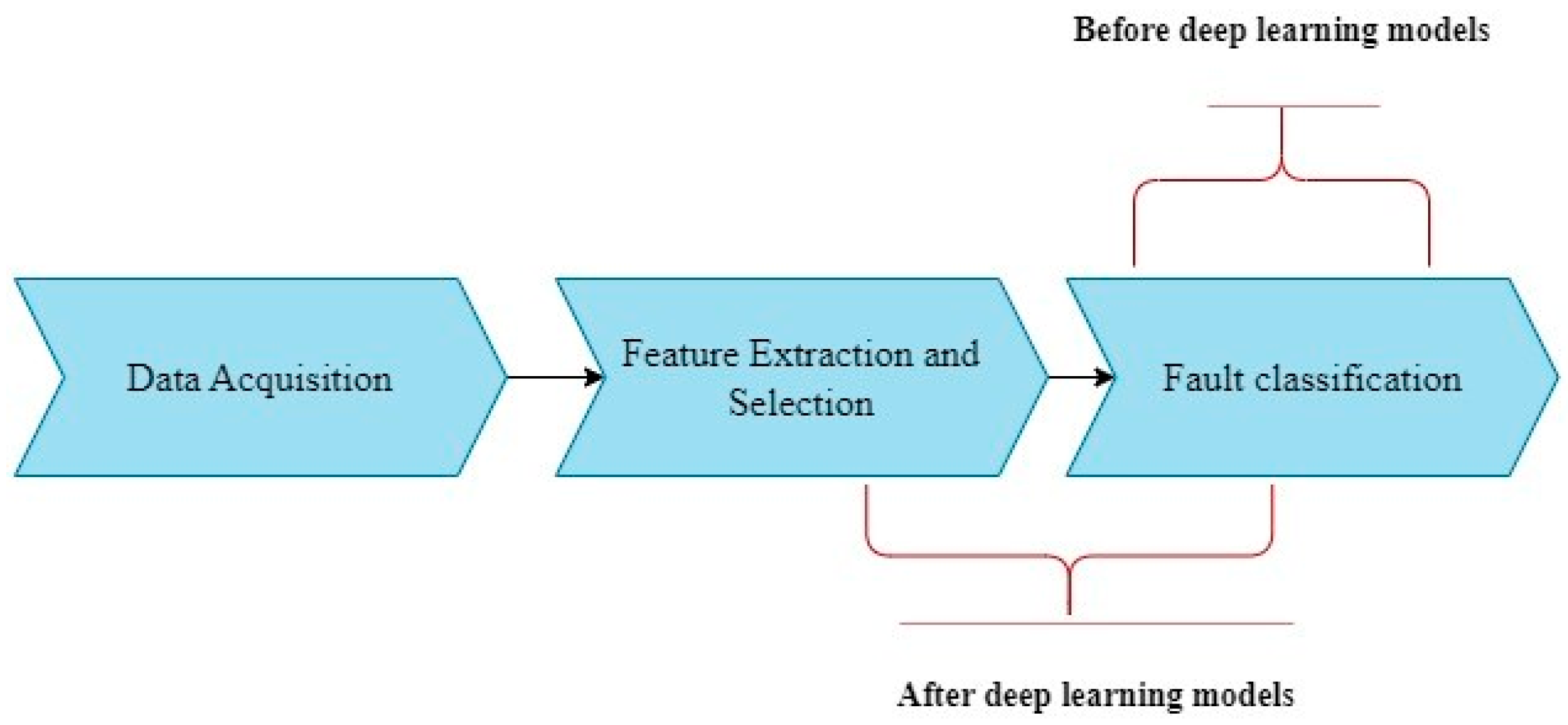

- The overall experimental process is presented in Figure 2. Vibration signals acquired using a piezoelectric accelerometer were converted into radar plots and pre-processed to be compatible with the transfer learning networks considered in the study.

- After the radar plots were pre-processed, the performance of each pre-trained network was assessed under variations in hyperparameters such as solver, mini-batch size, train-to-test ratio and initial learning rate.

- The best-performing network, together with the hyperparameters that classified the eight suspension conditions most accurately, was then identified.

- The results obtained were then compared with other state-of-the-art techniques to assess the relative performance of the proposed methodology.

- The paper introduces a novel experimental method using radar plots to visualize vibration data, offering a simpler alternative to complex techniques such as FFT, Hilbert Huang transform and empirical mode decomposition.

- The study utilizes transfer learning with four pre-trained networks (VGG16, ResNet-50, AlexNet, and GoogLeNet) to classify radar plots representing eight suspension conditions.

- The radar plots are used to classify various suspension cases, including worn tie rod ball joint (TRBJ), strut wear (STWO), lower arm bush wear (LABW), low pressure in the wheel (LWP), strut external damage (STED), worn lower arm ball joint (LABJ) and strut mount faults (STMF).

- Hyperparameter variations were explored to optimize the performance of the pre-trained networks within the same dataset, resulting in the highest achievable classification accuracy for each network.

- The proposed approach enables the generation of reliable radar plots for accurate analysis of suspension system faults.
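The conversion of a vibration signal into a radar plot can be illustrated with a minimal sketch. The mapping below is an assumption, not the procedure used in the study: each sample is given an equally spaced angle, and its amplitude is min-max scaled to a radius in [0, 1]. The function name `radar_vertices` and the example signal are hypothetical.

```python
import math


def radar_vertices(samples):
    """Map a 1-D sequence of amplitudes to closed polygon vertices.

    Assumption (not from the paper): sample k is placed at angle
    2*pi*k/n and its amplitude is min-max scaled to a radius in [0, 1].
    """
    n = len(samples)
    if n < 3:
        raise ValueError("need at least three samples to form a polygon")
    lo, hi = min(samples), max(samples)
    span = (hi - lo) or 1.0  # avoid division by zero for a constant signal
    vertices = []
    for k, value in enumerate(samples):
        theta = 2.0 * math.pi * k / n
        radius = (value - lo) / span
        vertices.append((radius * math.cos(theta), radius * math.sin(theta)))
    vertices.append(vertices[0])  # repeat the first vertex to close the polygon
    return vertices


# Hypothetical eight-sample signal used only to exercise the function.
signal = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5]
polygon = radar_vertices(signal)
```

The resulting vertices could then be drawn with any plotting library and saved as an image, which would be resized to the input size expected by the chosen pre-trained network.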

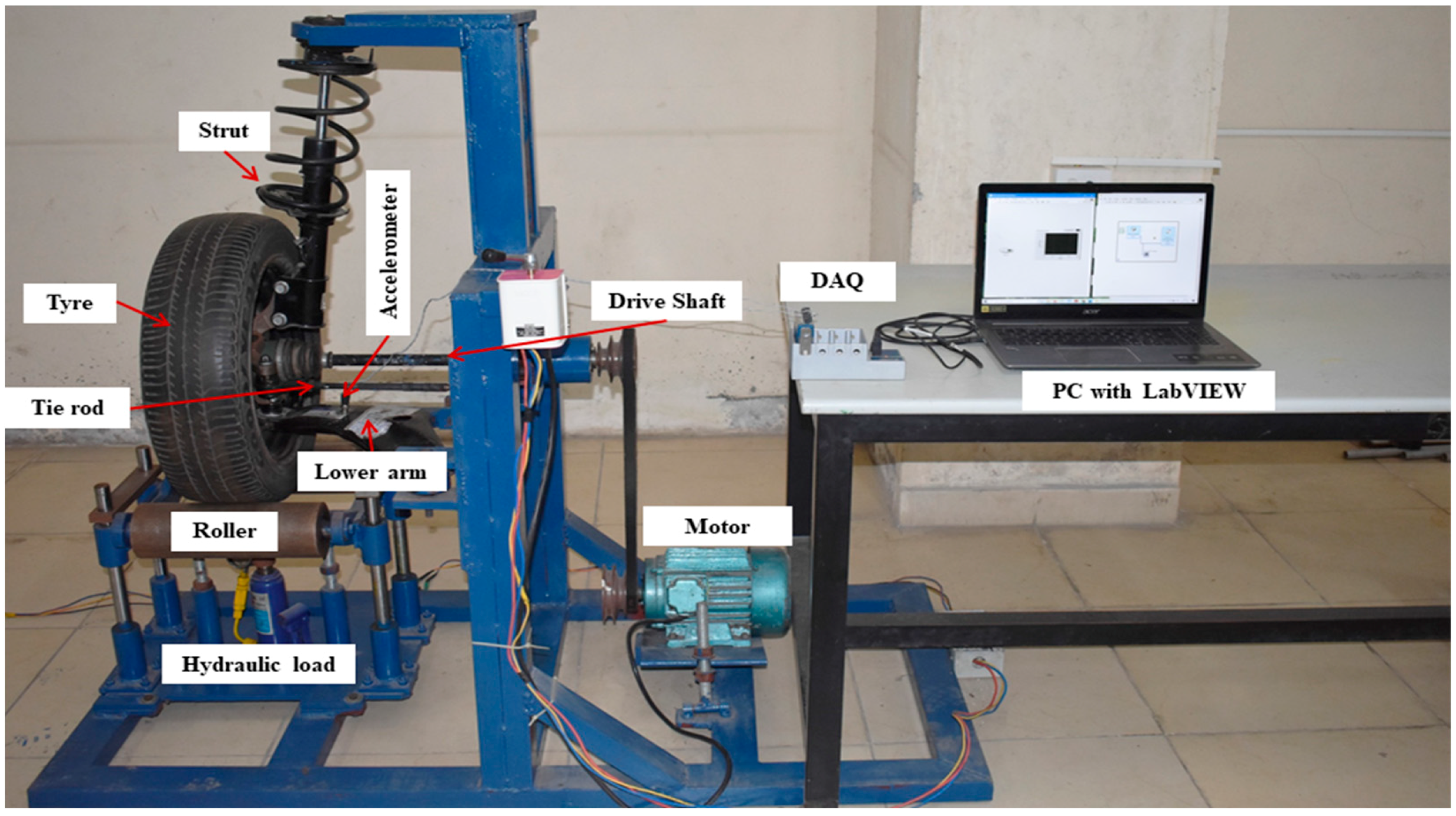

2. Features of the Experimental System

2.1. Experimental Setup

2.2. Data Acquisition Method

2.3. Experimental Procedure

- Strut mount fault: The suspension system exhibits a fault in the strut mounting in which the connection and positioning of the strut mount are found to be defective.

- Lower arm bush worn off: The bushing responsible for absorbing shocks and vibrations in the A-arm (also known as the control arm) has worn off. This can result in increased noise, reduced stability, and compromised handling of the suspension system.

- Lower arm ball joint worn off: This fault occurs when the lower A-arm ball joint wears off. It can cause excessive play in the suspension, leading to abnormal tire wear, poor steering response and potential loss of control.

- Tie rod ball joint worn off: The joint connecting the steering rack to the steering knuckle has worn off. This can result in unstable steering, vibration and difficulty maintaining proper wheel alignment.

- Low pressure in the wheel: The tire pressure in one or more wheels is significantly lower than the recommended level. Insufficient tire pressure can lead to reduced handling, decreased stability, increased tire wear and decreased fuel efficiency.

- Strut external damage: External damage to a strut, such as bends, dents or leaks, can compromise its structural integrity and affect the suspension’s ability to absorb shocks and maintain stability.

- Strut worn off: A worn strut can result in increased bouncing, reduced stability, longer stopping distances and an overall compromised ride quality.

3. Experimental Preprocessing and Analysis of Pre-Trained Networks

Formation of Dataset and Preprocessing

4. Results and Discussion

4.1. Impact of Train Test Ratio

4.2. Impact of Solvers

4.3. Impact of Batch Size

4.4. Impact of Learning Rate

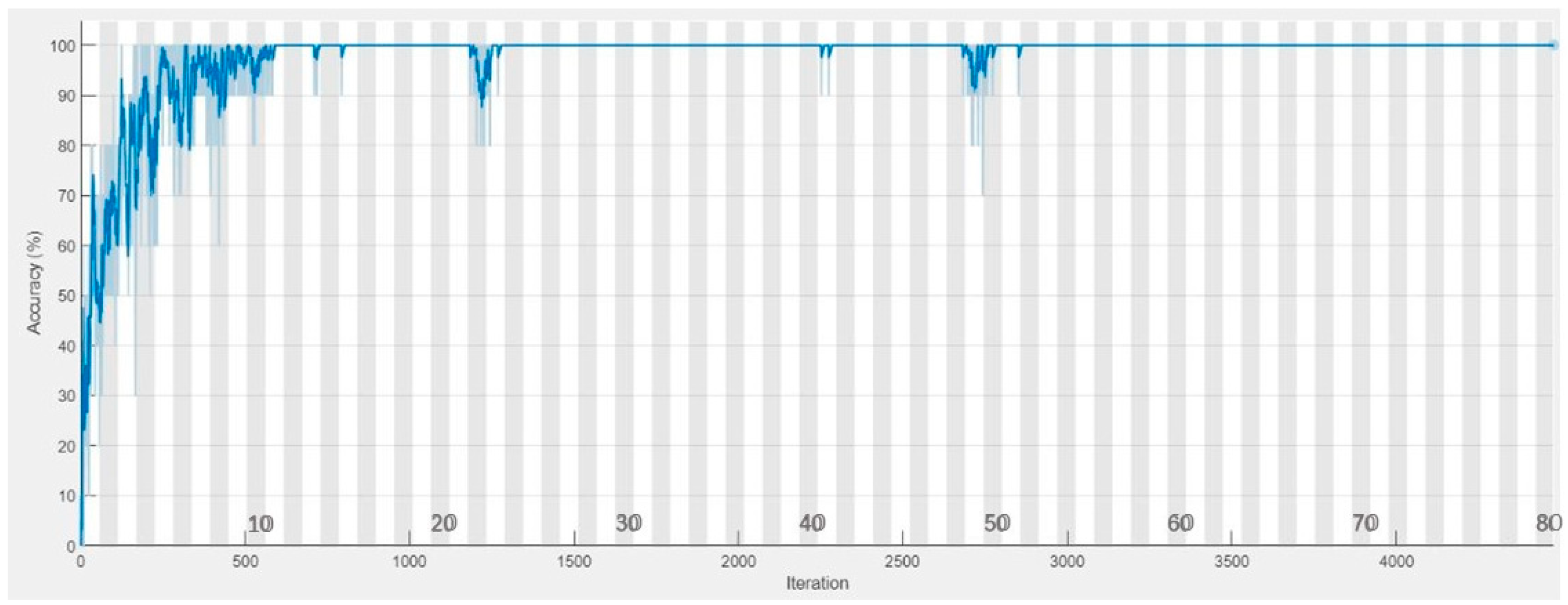

4.5. Impact of Number of Epochs

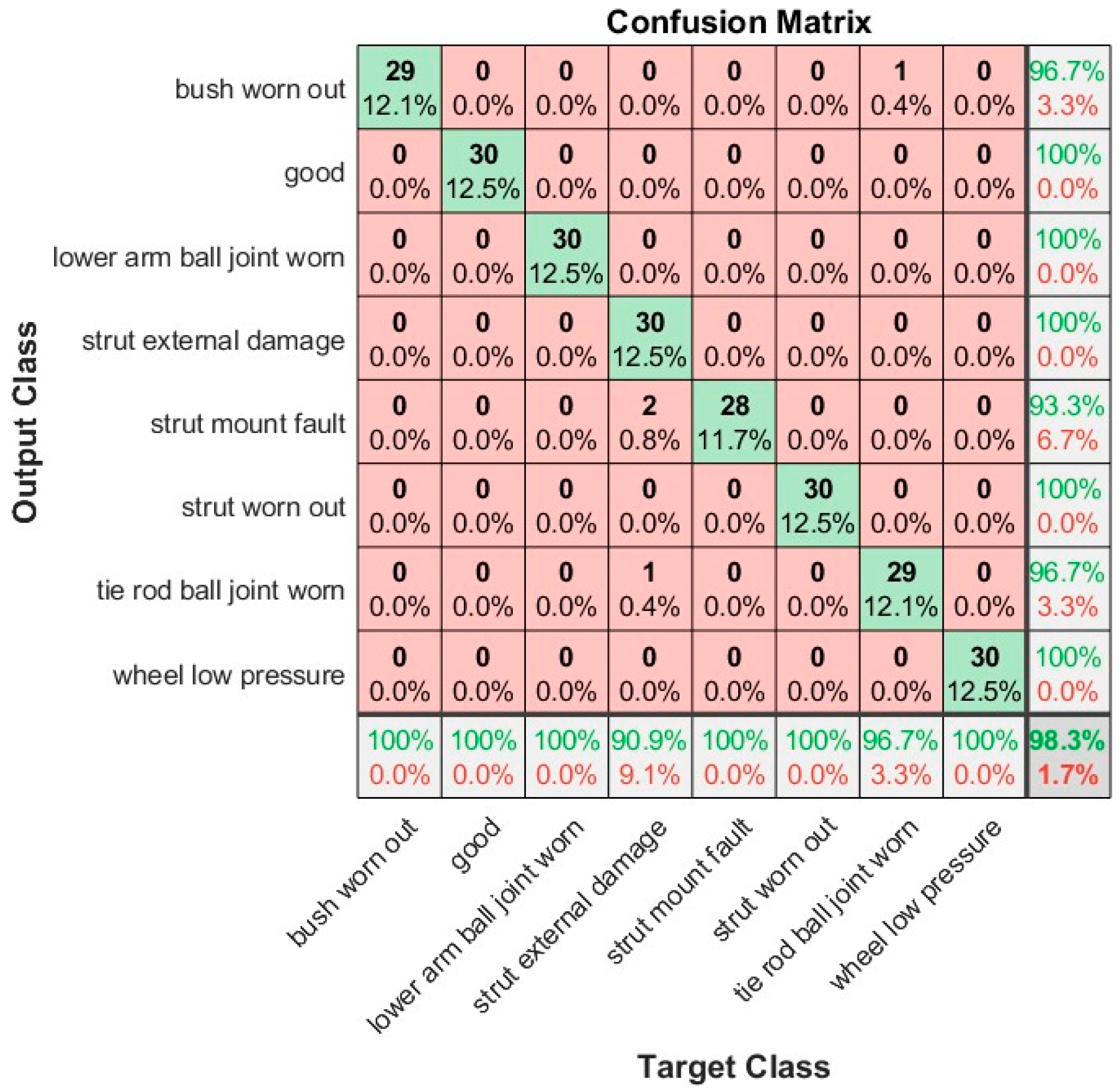

4.6. Comparative Examination of Trained Models

4.7. Comparison with Other State of the Art Techniques

- Bayes Net Classifier:

  - Balaji et al. [39] utilized the Bayes net classifier to detect faults in suspension systems.

  - Vibrational signals from a test bench were collected and used for classification.

  - The system was successfully classified into eight different conditions based on these signals.

- Random Forest and J48 Algorithm:

  - Balaji et al. [40] employed the Random Forest and J48 algorithms for fault classification and detection in suspension systems.

  - The focus was on vibration signals, and the study investigated the system under eight distinct conditions.

- Deep Semi-Supervised Feature Extraction:

  - Peng et al. [41] proposed a deep semi-supervised feature extraction method for fault detection in rail suspension systems.

  - This approach leveraged data from multiple sensors and was particularly effective when only one data class was available.

  - The study considered the system under two distinct conditions.

- KNN (K-Nearest Neighbors), Naïve Bayes, Ensemble Methods, Linear SVM (Support Vector Machine):

  - Ankrah et al. [42] investigated the application of KNN, Naïve Bayes, ensemble methods, and linear SVM for fault detection in railway suspensions.

  - Acceleration signals were used for classification, and the system was classified into three different classes.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Liu, Y. Recent Innovations in Vehicle Suspension Systems. Recent Pat. Mech. Eng. 2012, 1, 206–210.

2. Fault Detection Methods: A Literature Survey. Available online: https://www.researchgate.net/publication/221412815_Fault_detection_methods_A_literature_survey (accessed on 8 June 2023).

3. Lei, Y.; Yang, B.; Jiang, X.; Jia, F.; Li, N.; Nandi, A.K. Applications of Machine Learning to Machine Fault Diagnosis: A Review and Roadmap. Mech. Syst. Signal Process. 2020, 138, 106587.

4. Bellali, B.; Hazzab, A.; Bousserhane, I.K.; Lefebvre, D. Parameter Estimation for Fault Diagnosis in Nonlinear Systems by ANFIS. Procedia Eng. 2012, 29, 2016–2021.

5. Pouliezos, A.D.; Stavrakakis, G.S. Parameter Estimation Methods for Fault Monitoring. In Real Time Fault Monitoring of Industrial Processes; Springer: Berlin/Heidelberg, Germany, 1994; pp. 179–255.

6. Fagarasan, I.; Iliescu, S.S. Parity Equations for Fault Detection and Isolation. In Proceedings of the 2008 IEEE International Conference on Automation, Quality and Testing, Robotics, AQTR 2008 - THETA 16th Edition - Proceedings, Cluj-Napoca, Romania, 22–25 May 2008; Volume 1, pp. 99–103.

7. Gertler, J.J. Structured Parity Equations in Fault Detection and Isolation. In Issues of Fault Diagnosis for Dynamic Systems; Springer: London, UK, 2000; pp. 285–313.

8. Zhao, W.; Lv, Y.; Guo, X.; Huo, J. An Investigation on Early Fault Diagnosis Based on Naive Bayes Model. In Proceedings of the 2022 7th International Conference on Control and Robotics Engineering, ICCRE 2022, Beijing, China, 15–17 April 2022; pp. 32–36.

9. Asadi Majd, A.; Samet, H.; Ghanbari, T. K-NN Based Fault Detection and Classification Methods for Power Transmission Systems. Prot. Control. Mod. Power Syst. 2017, 2, 1–11.

10. Application of J48 Algorithm for Gear Tooth Fault Diagnosis of Tractor Steering Hydraulic Pumps. Available online: https://www.researchgate.net/publication/251881132_Application_of_J48_algorithm_for_gear_tooth_fault_diagnosis_of_tractor_steering_hydraulic_pumps (accessed on 8 June 2023).

11. Yin, S.; Gao, X.; Karimi, H.R.; Zhu, X. Study on Support Vector Machine-Based Fault Detection in Tennessee Eastman Process. Abstr. Appl. Anal. 2014, 2014, 836895.

12. Aldrich, C.; Auret, L. Fault Detection and Diagnosis with Random Forest Feature Extraction and Variable Importance Methods. IFAC Proc. Vol. 2010, 43, 79–86.

13. Mishra, K.M.; Huhtala, K.J. Fault Detection of Elevator Systems Using Multilayer Perceptron Neural Network. In Proceedings of the IEEE International Conference on Emerging Technologies and Factory Automation, ETFA 2019, Zaragoza, Spain, 10–13 September 2019; pp. 904–909.

14. Zhu, X.; Xia, Y.; Chai, S.; Shi, P. Fault Detection for Vehicle Active Suspension Systems in Finite-Frequency Domain. IET Control. Theory Appl. 2019, 13, 387–394.

15. Azadi, S.; Soltani, A. Fault Detection of Vehicle Suspension System Using Wavelet Analysis. Veh. Syst. Dyn. 2009, 47, 403–418.

16. Börner, M.; Isermann, R.; Schmitt, M. A Sensor and Process Fault Detection System for Vehicle Suspension Systems. Available online: https://books.google.com.hk/books?hl=en&lr=&id=auGbEAAAQBAJ&oi=fnd&pg=PA103&dq=Sensor+and+Process+Fault+Detection+System+for+Vehicle+Suspension+Systems&ots=xDSZYcKAgA&sig=y1LgiX6LS6lvPRiGBLg2Nug9elk&redir_esc=y#v=onepage&q=Sensor%20and%20Process%20Fault%20Detection%20System%20for%20Vehicle%20Suspension%20Systems&f=false (accessed on 8 June 2023).

17. Liu, C.; Gryllias, K. A Semi-Supervised Support Vector Data Description-Based Fault Detection Method for Rolling Element Bearings Based on Cyclic Spectral Analysis. Mech. Syst. Signal Process. 2020, 140, 106682.

18. Vasan, V.; Sridharan, N.V.; Prabhakaranpillai Sreelatha, A.; Vaithiyanathan, S. Tire Condition Monitoring Using Transfer Learning-Based Deep Neural Network Approach. Sensors 2023, 23, 2177.

19. Chakrapani, G.; Sugumaran, V. Transfer Learning Based Fault Diagnosis of Automobile Dry Clutch System. Eng. Appl. Artif. Intell. 2023, 117, 105522.

20. Tang, S.; Yuan, S.; Zhu, Y. Deep Learning-Based Intelligent Fault Diagnosis Methods toward Rotating Machinery. IEEE Access 2020, 8, 9335–9346.

21. Jegadeeshwaran, R.; Sugumaran, V. Fault Diagnosis of Automobile Hydraulic Brake System Using Statistical Features and Support Vector Machines. Mech. Syst. Signal Process. 2015, 52–53, 436–446.

22. Ding, A.; Qin, Y.; Wang, B.; Jia, L.; Cheng, X. Lightweight Multiscale Convolutional Networks with Adaptive Pruning for Intelligent Fault Diagnosis of Train Bogie Bearings in Edge Computing Scenarios. IEEE Trans. Instrum. Meas. 2023, 72, 3231325.

23. Tanyildizi, H.; Şengür, A.; Akbulut, Y.; Şahin, M. Deep Learning Model for Estimating the Mechanical Properties of Concrete Containing Silica Fume Exposed to High Temperatures. Front. Struct. Civ. Eng. 2020, 14, 1316–1330.

24. Ai, D.; Mo, F.; Cheng, J.; Du, L. Deep Learning of Electromechanical Impedance for Concrete Structural Damage Identification Using 1-D Convolutional Neural Networks. Constr. Build. Mater. 2023, 385, 131423.

25. Fraz, M.M.; Jahangir, W.; Zahid, S.; Hamayun, M.M.; Barman, S.A. Multiscale Segmentation of Exudates in Retinal Images Using Contextual Cues and Ensemble Classification. Biomed. Signal Process. Control 2017, 35, 50–62.

26. Mo, J.; Zhang, L.; Feng, Y. Exudate-Based Diabetic Macular Edema Recognition in Retinal Images Using Cascaded Deep Residual Networks. Neurocomputing 2018, 290, 161–171.

27. Ansari, A.H.; Cherian, P.J.; Caicedo, A.; Naulaers, G.; De Vos, M.; Van Huffel, S. Neonatal Seizure Detection Using Deep Convolutional Neural Networks. Int. J. Neural Syst. 2019, 29, 1850011.

28. Nogay, H.S.; Adeli, H. Detection of Epileptic Seizure Using Pretrained Deep Convolutional Neural Network and Transfer Learning. Eur. Neurol. 2021, 83, 602–614.

29. Zhang, Y.; You, D.; Gao, X.; Zhang, N.; Gao, P.P. Welding Defects Detection Based on Deep Learning with Multiple Optical Sensors during Disk Laser Welding of Thick Plates. J. Manuf. Syst. 2019, 51, 87–94.

30. Zhang, Z.; Wen, G.; Chen, S. Weld Image Deep Learning-Based on-Line Defects Detection Using Convolutional Neural Networks for Al Alloy in Robotic Arc Welding. J. Manuf. Process. 2019, 45, 208–216.

31. Shao, H.; Jiang, H.; Zhang, X.; Niu, M. Rolling Bearing Fault Diagnosis Using an Optimization Deep Belief Network. Meas. Sci. Technol. 2015, 26, 115002.

32. Huang, W.; Cheng, J.; Yang, Y.; Guo, G. An Improved Deep Convolutional Neural Network with Multi-Scale Information for Bearing Fault Diagnosis. Neurocomputing 2019, 359, 77–92.

33. Li, X.; Jiang, H.; Niu, M.; Wang, R. An Enhanced Selective Ensemble Deep Learning Method for Rolling Bearing Fault Diagnosis with Beetle Antennae Search Algorithm. Mech. Syst. Signal Process. 2020, 142, 106752.

34. Liu, G.; Bao, H.; Han, B. A Stacked Autoencoder-Based Deep Neural Network for Achieving Gearbox Fault Diagnosis. Math. Probl. Eng. 2018, 2018, 5105709.

35. Naveen Venkatesh, S.; Chakrapani, G.; Senapti, S.B.; Annamalai, K.; Elangovan, M.; Indira, V.; Sugumaran, V.; Mahamuni, V.S. Misfire Detection in Spark Ignition Engine Using Transfer Learning. Comput. Intell. Neurosci. 2022, 2022, 7606896.

36. Zhao, W.; Wang, Z.; Lu, C.; Ma, J.; Li, L. Fault Diagnosis for Centrifugal Pumps Using Deep Learning and Softmax Regression. In Proceedings of the World Congress on Intelligent Control and Automation (WCICA), Guilin, China, 12–15 June 2016; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2016; Volume 2016, pp. 165–169.

37. Shao, S.Y.; Sun, W.J.; Yan, R.Q.; Wang, P.; Gao, R.X. A Deep Learning Approach for Fault Diagnosis of Induction Motors in Manufacturing. Chin. J. Mech. Eng. Engl. Ed. 2017, 30, 1347–1356.

38. Helbing, G.; Ritter, M. Deep Learning for Fault Detection in Wind Turbines. Renew. Sustain. Energy Rev. 2018, 98, 189–198.

39. Arun Balaji, P.; Sugumaran, V. A Bayes Learning Approach for Monitoring the Condition of Suspension System Using Vibration Signals. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1012, 012029.

40. Arun Balaji, P.; Sugumaran, V. Fault Detection of Automobile Suspension System Using Decision Tree Algorithms: A Machine Learning Approach. Proc. Inst. Mech. Eng. Part E J. Process Mech. Eng. 2023, 09544089231152698.

41. Peng, X.; Jin, X. Rail Suspension System Fault Detection Using Deep Semi-Supervised Feature Extraction with One-Class Data. Annu. Conf. PHM Soc. 2018, 10, 546.

42. Ankrah, A.A.; Kimotho, J.K.; Muvengei, O.M. Fusion of Model-Based and Data Driven Based Fault Diagnostic Methods for Railway Vehicle Suspension. J. Intell. Learn. Syst. Appl. 2020, 12, 51–81.

| Model Type | Name of the Model | Working Principle | Reference |
|---|---|---|---|
| Mathematical | Parameter Estimation | This model employs ordinary differential equations to find multiple parameters that portray variations when a fault is detected. These parameters are then compared to the optimal set, and the error is found | [4,5] |
| Mathematical | Parity equations with input/output models | This model employs the transfer function to find the output and polynomial error between the model and output | [6,7] |
| Machine Learning | Naive Bayes | This classifier applies Bayes' theorem and assumes strong independence between the features | [8] |
| Machine Learning | kNN | kNN does not rely on any specific assumptions about the data distribution, which is why it is known as a non-parametric algorithm | [9] |
| Machine Learning | J48 | J48 is an implementation of the C4.5 decision tree algorithm | [10] |
| Machine Learning | SVM | SVM finds a separating hyperplane to classify data | [11] |
| Machine Learning | Random Forest | The random forest algorithm trains multiple decision trees on subsets of the given data to improve predictive accuracy | [12] |
| Machine Learning | MLP | MLP, or multilayer perceptron, is a neural network model trained with the backpropagation algorithm to classify data | [13] |

| Network | Number of Layers | Key Features | Input Size | Training Time |
|---|---|---|---|---|
| AlexNet | 8 layers (5 convolutional, 3 fully connected) | Popularized deep convolutional networks for image classification; utilized rectified linear units (ReLU) for non-linearity; used dropout regularization; implemented local response normalization | 227 × 227 | Medium |
| GoogLeNet | 22 layers (27 including auxiliary classifiers) | Introduced the concept of inception modules; utilized 1 × 1 convolutions to reduce the computational cost; used global average pooling for dimensionality reduction; employed multiple auxiliary classifiers for training | 224 × 224 | High |
| VGG16 | 16 layers (13 convolutional, 3 fully connected) | Utilized small 3 × 3 convolutional filters throughout; maintained a simple and uniform architecture; achieved impressive performance on various image classification tasks; increased depth with multiple convolutional layers | 224 × 224 | High |
| ResNet50 | 50 layers (49 convolutional, 1 fully connected) | Introduced residual connections to address the vanishing gradient problem; utilized skip connections to pass activations forward; enabled training of very deep neural networks; introduced the concept of residual blocks | 224 × 224 | High |

Classification accuracy (%) for different train:test split ratios

| Pretrained Model | 0.70:0.30 | 0.75:0.25 | 0.80:0.20 | Mean Accuracy (%) |
|---|---|---|---|---|
| VGG-16 | 94.20 | 93.50 | 90.00 | 92.56 |
| GoogLeNet | 87.90 | 86.00 | 92.50 | 88.80 |
| AlexNet | 90.40 | 85.50 | 90.60 | 88.83 |
| ResNet-50 | 84.60 | 86.00 | 86.20 | 85.60 |
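The split ratios in the table correspond to holding out 30%, 25% or 20% of the images for testing. A minimal sketch of a per-class (stratified) split is shown below. Whether the study stratified its split is not stated, so this is an assumption, and the dataset size of 100 images per class is purely hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction, seed=0):
    """Return (train_indices, test_indices), splitting each class separately."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        cut = round(len(indices) * train_fraction)
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return sorted(train), sorted(test)


# Hypothetical dataset: 8 conditions x 100 images each (the count is an assumption).
labels = [condition for condition in range(8) for _ in range(100)]
splits = {
    fraction: stratified_split(labels, fraction)
    for fraction in (0.70, 0.75, 0.80)
}
```

Splitting each class separately keeps the eight conditions equally represented in both subsets, which makes accuracies at different ratios easier to compare.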

Classification accuracy (%) for different solvers

| Pretrained Model | SGDM | Adam | RMSprop | Mean Accuracy (%) |
|---|---|---|---|---|
| VGG-16 | 94.20 | 55.40 | 67.90 | 72.50 |
| GoogLeNet | 92.50 | 93.10 | 96.30 | 93.96 |
| AlexNet | 90.60 | 82.50 | 89.40 | 87.50 |
| ResNet-50 | 86.20 | 90.00 | 93.80 | 90.00 |
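For readers unfamiliar with the solvers compared above, the sketch below shows the standard stochastic gradient descent with momentum (SGDM) update on a toy one-dimensional objective. It is not the training code used in the study; the function name, the objective, and the learning rate and momentum values are illustrative assumptions. Adam and RMSprop differ in that they also rescale each step using running statistics of the gradient.

```python
def sgdm_minimize(grad, theta, learning_rate=0.1, momentum=0.9, steps=500):
    """Minimise a scalar function using the SGD-with-momentum update.

    velocity <- momentum * velocity - learning_rate * grad(theta)
    theta    <- theta + velocity
    """
    velocity = 0.0
    for _ in range(steps):
        velocity = momentum * velocity - learning_rate * grad(theta)
        theta = theta + velocity
    return theta


# Toy objective f(theta) = (theta - 3)^2, whose gradient is 2 * (theta - 3).
theta_star = sgdm_minimize(lambda t: 2.0 * (t - 3.0), theta=0.0)
```

The velocity term accumulates past gradients, so the iterate keeps moving in a consistent direction even when individual gradients are noisy.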

Classification accuracy (%) at different batch sizes

| Pretrained Model | 8 | 10 | 16 | 24 | 32 | Mean Accuracy (%) |
|---|---|---|---|---|---|---|
| VGG-16 | 89.60 | 94.20 | 91.70 | 92.90 | 79.60 | 89.60 |
| GoogLeNet | 90.00 | 96.30 | 94.40 | 90.00 | 69.40 | 88.02 |
| AlexNet | 90.00 | 90.60 | 91.90 | 85.60 | 90.60 | 89.74 |
| ResNet-50 | 91.90 | 93.80 | 91.90 | 90.00 | 83.10 | 90.14 |

Classification accuracy (%) for different learning rates

| Pretrained Model | 0.0001 | 0.0003 | 0.001 | Mean Accuracy (%) |
|---|---|---|---|---|
| VGG-16 | 94.20 | 86.70 | 80.80 | 87.23 |
| GoogLeNet | 96.30 | 90.00 | 50.00 | 78.76 |
| AlexNet | 91.90 | 92.50 | 88.10 | 90.83 |
| ResNet-50 | 93.80 | 83.80 | 81.20 | 86.26 |

Classification accuracy (%) for different numbers of epochs

| Pretrained Model | 10 | 20 | 30 | 40 | 50 | 60 | 70 | 80 | 90 | 100 | Mean Accuracy (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| VGG-16 | 94.20 | 95.00 | 96.70 | 95.00 | 96.70 | 98.30 | 97.90 | 97.10 | 97.20 | 95.80 | 96.39 |
| GoogLeNet | 96.30 | 95.60 | 95.30 | 69.40 | 95.00 | 94.38 | 94.30 | 92.50 | 90.60 | 91.60 | 91.49 |
| AlexNet | 92.50 | 88.80 | 93.80 | 92.50 | 95.00 | 94.40 | 94.20 | 93.10 | 92.20 | 90.00 | 92.65 |
| ResNet-50 | 93.80 | 91.90 | 91.20 | 96.20 | 95.10 | 95.60 | 86.90 | 94.30 | 86.20 | 84.40 | 91.67 |
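The best epoch count for each network can be read off the table by taking the maximum of each row. The short sketch below does this with the accuracies transcribed from the table; the helper name `best_epoch` is hypothetical.

```python
EPOCHS = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]

# Accuracies (%) transcribed from the epoch table, one row per network.
ACCURACY = {
    "VGG-16": [94.20, 95.00, 96.70, 95.00, 96.70, 98.30, 97.90, 97.10, 97.20, 95.80],
    "GoogLeNet": [96.30, 95.60, 95.30, 69.40, 95.00, 94.38, 94.30, 92.50, 90.60, 91.60],
    "AlexNet": [92.50, 88.80, 93.80, 92.50, 95.00, 94.40, 94.20, 93.10, 92.20, 90.00],
    "ResNet-50": [93.80, 91.90, 91.20, 96.20, 95.10, 95.60, 86.90, 94.30, 86.20, 84.40],
}


def best_epoch(values):
    """Return (epochs, accuracy) for the first maximum in a table row."""
    best_index = max(range(len(values)), key=lambda i: values[i])
    return EPOCHS[best_index], values[best_index]


best = {name: best_epoch(row) for name, row in ACCURACY.items()}
for name, (epochs, accuracy) in best.items():
    print(f"{name}: {accuracy:.2f}% at {epochs} epochs")
```

Taking the first maximum favours the shortest training run when two epoch counts tie.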

Optimal hyperparameter configuration for each pretrained model

| Pretrained Model | Split Ratio | Solver | Batch Size | Learning Rate | Epochs |
|---|---|---|---|---|---|
| VGG-16 | 0.70:0.30 | SGDM | 10 | 0.0001 | 60 |
| GoogLeNet | 0.80:0.20 | RMSprop | 10 | 0.0001 | 10 |
| AlexNet | 0.80:0.20 | SGDM | 16 | 0.0003 | 50 |
| ResNet-50 | 0.80:0.20 | RMSprop | 10 | 0.0001 | 40 |
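One way to arrive at a configuration like those above is to tune one hyperparameter at a time, in the order of Sections 4.1 to 4.5, carrying the best value forward to the next stage. The sketch below illustrates that idea under stated assumptions: the candidate values come from the tables, but the starting configuration and the scoring function `toy_evaluate` are hypothetical stand-ins for actually training a network.

```python
def one_factor_at_a_time(evaluate, grid, start):
    """Tune each hyperparameter in turn, keeping the best value found so far."""
    config = dict(start)
    for name, candidates in grid.items():
        scores = {}
        for value in candidates:
            trial = dict(config, **{name: value})
            scores[value] = evaluate(trial)
        config[name] = max(scores, key=scores.get)
    return config


# Candidate values taken from the result tables; the order mirrors Sections 4.1-4.5.
GRID = {
    "split": [0.70, 0.75, 0.80],
    "solver": ["sgdm", "adam", "rmsprop"],
    "batch_size": [8, 10, 16, 24, 32],
    "learning_rate": [0.0001, 0.0003, 0.001],
    "epochs": list(range(10, 101, 10)),
}

# Assumed starting point; the paper's initial settings are not stated here.
START = {"split": 0.70, "solver": "sgdm", "batch_size": 10,
         "learning_rate": 0.0001, "epochs": 10}


def toy_evaluate(config):
    """Hypothetical score standing in for a full train-and-test run."""
    score = 90.0
    score += 2.0 if config["solver"] == "sgdm" else 0.0
    score -= abs(config["batch_size"] - 10) * 0.1
    score -= config["learning_rate"] * 1000.0
    score -= abs(config["epochs"] - 60) * 0.05
    return score


best_config = one_factor_at_a_time(toy_evaluate, GRID, START)
```

This procedure needs far fewer training runs than a full grid search, at the cost of missing interactions between hyperparameters.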

| Pretrained Model | Maximum Classification Accuracy Achieved (%) |
|---|---|
| VGG-16 | 98.30 |
| GoogLeNet | 96.30 |
| AlexNet | 95.00 |
| ResNet-50 | 96.20 |

| Reference | Technique Used | Total Number of Conditions | Types of Plots/Signals | Accuracy (%) |
|---|---|---|---|---|
| [39] | Bayes net machine learning classifier | 8 | Vibration signals | 88.40 |
| [40] | Random Forest | 8 | Vibration signals | 95.88 |
| [40] | J48 algorithm | 8 | Vibration signals | 94.00 |
| [41] | Deep semi-supervised method | 2 | Multi-sensor data | 85.00 |
| [42] | KNN | 3 | Acceleration signals | 71.90 |
| [42] | Naïve Bayes | 3 | Acceleration signals | 78.10 |
| [42] | Ensemble | 3 | Acceleration signals | 81.30 |
| [42] | Linear SVM | 3 | Acceleration signals | 84.40 |
| Proposed method | Transfer learning (VGG-16) | 8 | Vibration signals with radar plots | 98.30 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sai, S.A.; Venkatesh, S.N.; Dhanasekaran, S.; Balaji, P.A.; Sugumaran, V.; Lakshmaiya, N.; Paramasivam, P. Transfer Learning Based Fault Detection for Suspension System Using Vibrational Analysis and Radar Plots. Machines 2023, 11, 778. https://doi.org/10.3390/machines11080778
