Learning-Based Planner for Unknown Object Dexterous Manipulation Using ANFIS

Abstract

1. Introduction

2. Problem Statement

3. Proposed Manipulation Strategy

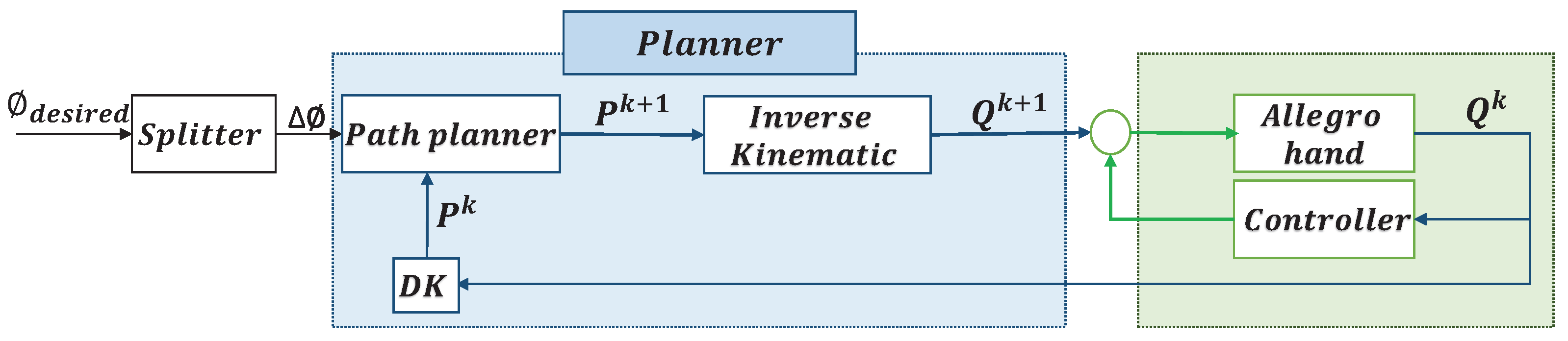

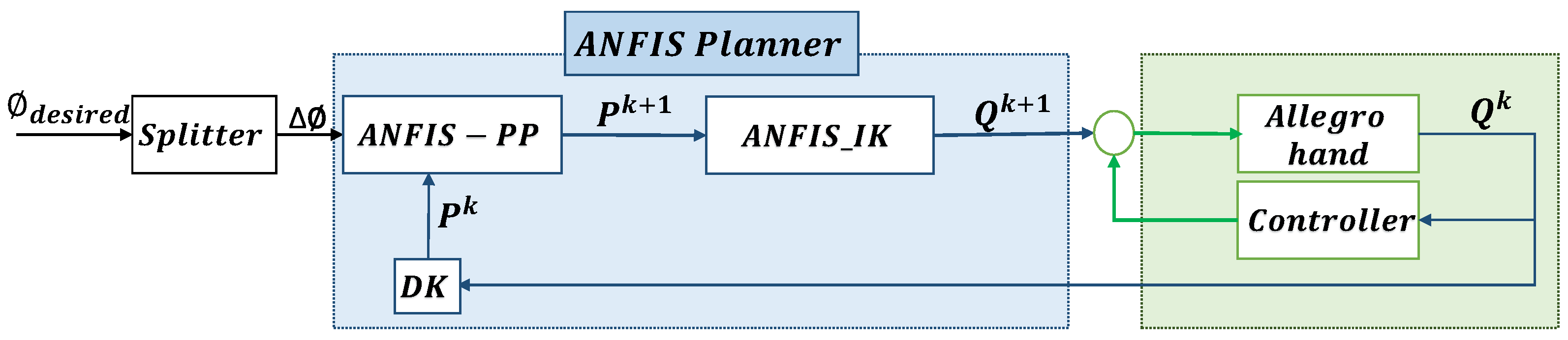

3.1. Overview of the Proposed Manipulation Strategy

- be the position of joint j of finger i, with and .

- be the configuration of finger i.

- be the configuration of the hand.

- be the fingertip Cartesian coordinates of expressed in the hand reference system.

- be the set of all the fingertips of the hand represented in a matrix.

- Step 1: Input and output data generation and preparation.

- Step 2: Training the ANFIS structure.

- Step 3: Validating the ANFIS planner in simulation.

- Step 4: Validating the ANFIS planner in a real-world experiment.

3.2. Input and Output Data Generation and Preparation

3.3. Learn from Data Using the ANFIS Algorithm

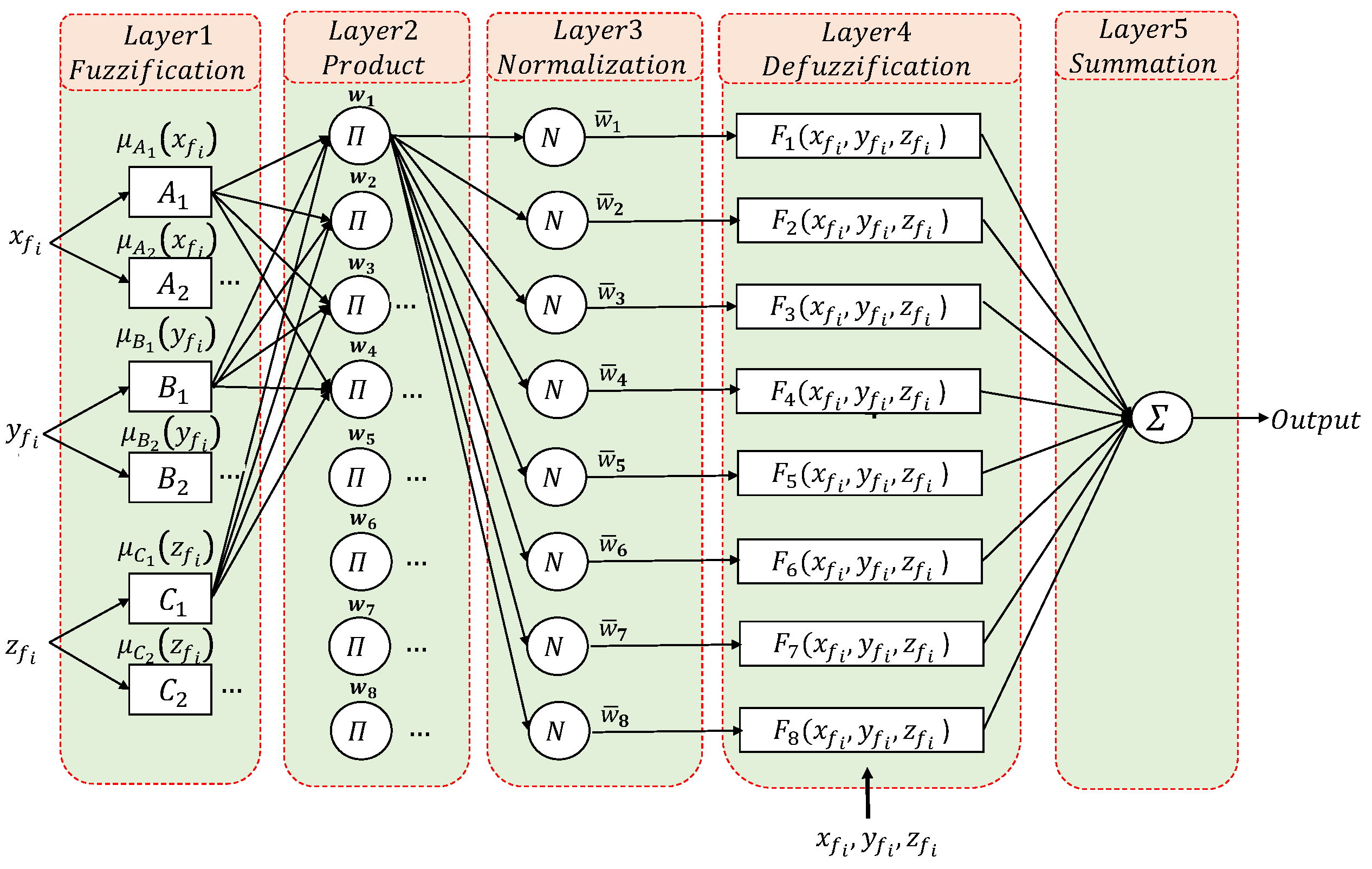

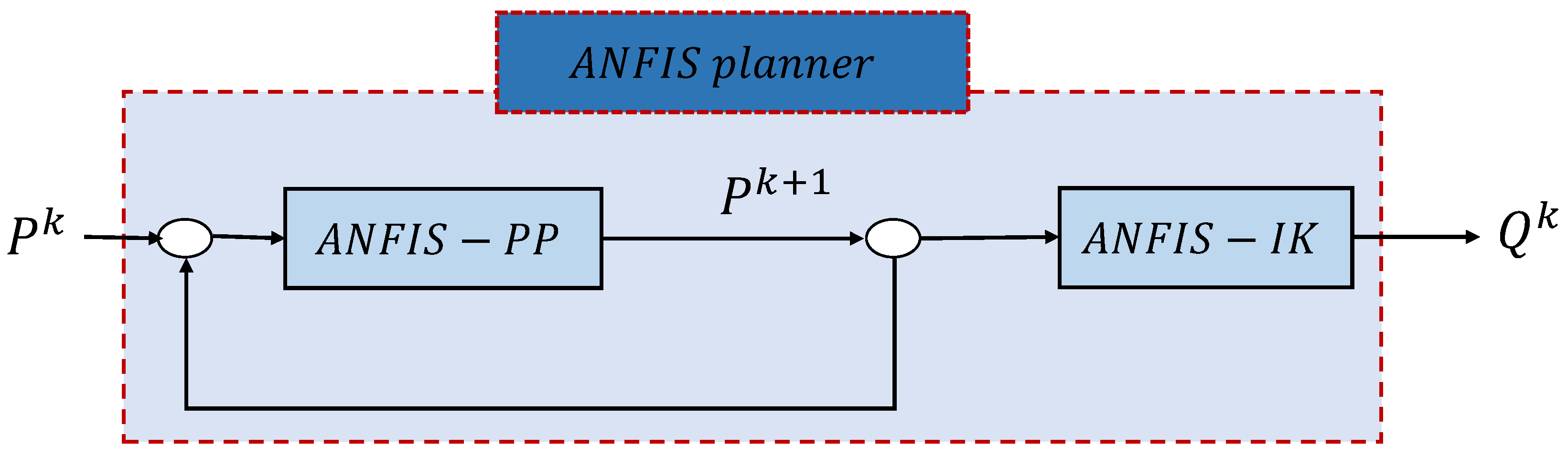

- The manipulation movements of each finger (ANFIS-PP). In this case, an ANFIS system is used to learn each coordinate of the next fingertip position from the three coordinates of the current position. Thus, the input to the system in Figure 3 is a fingertip position and the output is a single coordinate of the next position. This implies that the number of ANFIS systems involved in an ANFIS-PP is three times the number of fingers used, since a system as presented in Figure 3 is necessary for each of the three fingertip coordinates of each of the three fingers (nine systems in total). Note that this means that the variation in each component of the fingertip position depends on the current values of all three components.

- The inverse kinematics of the fingers (ANFIS-IK). In this case, an ANFIS system is used to learn each of the joint values of a finger from the three Cartesian components of the fingertip position. Thus, the input to the system in Figure 3 is a fingertip position and the output is a single joint value. This implies that the number of ANFIS systems involved in an ANFIS-IK is equal to the number of joints of each finger.
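The composition of these per-output systems can be sketched in Python as follows. This is only an illustration under stated assumptions: each trained ANFIS is assumed to be available as a callable that maps a fingertip position to one scalar, and chaining ANFIS-PP into ANFIS-IK within a single step is an assumed usage pattern. The names `FingerPlanner`, `pp_models`, `ik_models`, and `count_pp_systems`, as well as the toy lambdas standing in for trained systems, are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Position = Tuple[float, float, float]
# Hypothetical interface: one trained ANFIS maps a fingertip position to one scalar.
ScalarModel = Callable[[Position], float]


@dataclass
class FingerPlanner:
    pp_models: Sequence[ScalarModel]  # ANFIS-PP: exactly three, one per coordinate
    ik_models: Sequence[ScalarModel]  # ANFIS-IK: one per joint of the finger

    def next_position(self, p: Position) -> Position:
        # Each output coordinate is predicted from all three current coordinates.
        x, y, z = (model(p) for model in self.pp_models)
        return (x, y, z)

    def joint_values(self, p: Position) -> List[float]:
        # One scalar model per joint, all fed with the same fingertip position.
        return [model(p) for model in self.ik_models]

    def step(self, p: Position) -> Tuple[Position, List[float]]:
        # Assumed chaining: plan the next fingertip position, then solve its IK.
        p_next = self.next_position(p)
        return p_next, self.joint_values(p_next)


def count_pp_systems(num_fingers: int) -> int:
    # Three systems (one per coordinate) for every finger involved.
    return 3 * num_fingers


# Toy stand-ins for trained systems (not real learned models).
toy = FingerPlanner(
    pp_models=[lambda p: p[0] + 0.001, lambda p: p[1], lambda p: p[2]],
    ik_models=[lambda p, k=k: k * sum(p) for k in range(4)],
)
p_next, q = toy.step((0.1, 0.0, 0.0))
```

With three fingers, `count_pp_systems(3)` gives the nine ANFIS-PP systems implied by the text, while the length of `ik_models` plays the role of the number of joints per finger.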

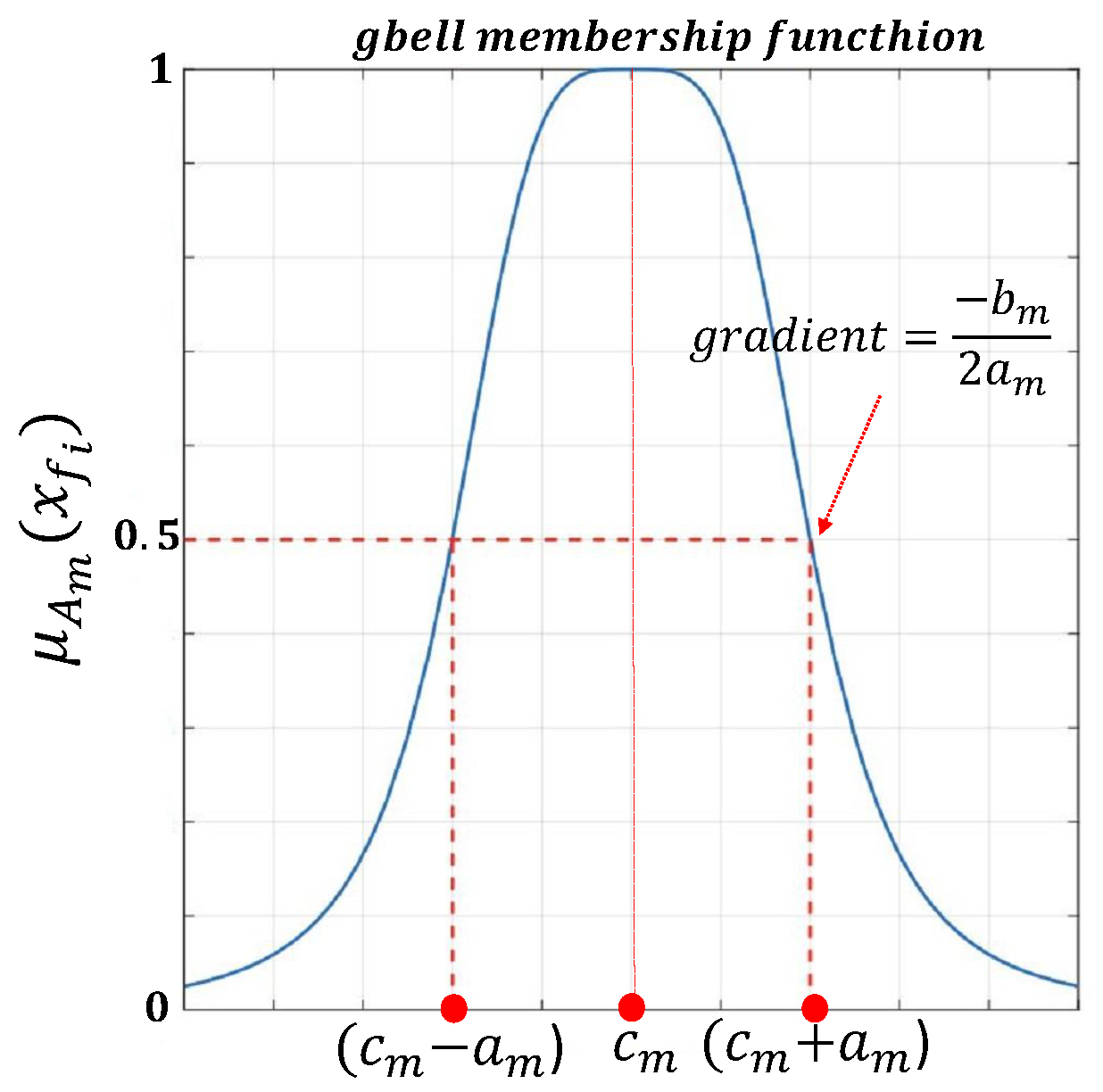

- Fuzzification layer: In this layer, there are $m$ membership functions for each input, i.e., $A_i$, $B_i$, and $C_i$ with $i = 1, \dots, m$, where the three inputs are denoted by $x$, $y$, and $z$. $m$ is a hyper-parameter selected by the designer as a compromise between accuracy and computational burden. In our particular applications, we choose $m = 2$. The selected type of these membership functions is a generalized Gaussian bell membership function (see Figure 4) defined as follows:

  $$\mu_{A_i}(x) = \frac{1}{1 + \left| \dfrac{x - c_i}{a_i} \right|^{2 b_i}},$$

  and analogously for $\mu_{B_i}(y)$ and $\mu_{C_i}(z)$, where $\mu_{A_i}$, $\mu_{B_i}$, and $\mu_{C_i}$ are the fuzzy membership degrees for each input variable, and $a_i$, $b_i$, $c_i$ are defined as premise parameters (one set per membership function) that determine the shape of the membership function and are optimized using back-propagation to minimize the error between the model output and the actual target values.

- Product layer: In this layer, there are eight fixed nodes (resulting from three inputs and two membership functions for each one, i.e., $2^3 = 8$). Each node represents a fuzzy rule, labeled $\Pi$, and outputs the firing strength of that rule, $w_k$, $k = 1, \dots, 8$, by multiplying the incoming signals and sending the product out of the layer:

  $$w_k = \mu_{A_i}(x)\, \mu_{B_j}(y)\, \mu_{C_l}(z), \qquad i, j, l \in \{1, 2\}.$$

- Normalization layer: In this layer, there are again eight fixed nodes, labeled N, that output normalized firing strengths $\bar{w}_k$, computed as the ratio of the rule's firing strength to the sum of all rules' firing strengths:

  $$\bar{w}_k = \frac{w_k}{\sum_{n=1}^{8} w_n}.$$

- Defuzzification layer: In this layer, there are eight adaptive nodes with a function that outputs the weighted value of a given rule as:

  $$\bar{w}_k f_k = \bar{w}_k \left( p_k x + q_k y + r_k z + s_k \right),$$

  where $p_k$, $q_k$, $r_k$, and $s_k$ are defined as consequent parameters that will be trained by a supervised learning algorithm.

- Summation layer: In this layer, a single fixed node, labeled $\Sigma$, outputs one of the coordinates of the fingertip as the summation of all incoming signals, i.e., the output of the ANFIS system is one of the three coordinates, depending on the case:

  $$\text{output} = \sum_{k=1}^{8} \bar{w}_k f_k = \frac{\sum_{k=1}^{8} w_k f_k}{\sum_{k=1}^{8} w_k}.$$
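The five layers described above can be sketched as a single forward pass in Python. This is a minimal illustration, not the implementation used in the paper: it assumes the standard generalized bell function $1 / (1 + |(u - c)/a|^{2b})$ and first-order consequents, and the function names (`gbell`, `anfis_forward`), the argument layout, and all parameter values are hypothetical placeholders rather than trained values.

```python
import itertools
import math


def gbell(u, a, b, c):
    """Generalized bell membership degree: 1 / (1 + |(u - c) / a|^(2b)); assumes a != 0."""
    return 1.0 / (1.0 + abs((u - c) / a) ** (2.0 * b))


def anfis_forward(inputs, premise, consequent):
    """
    inputs:     the three input values (x, y, z)
    premise:    premise[d][i] = (a, b, c) for membership function i of input d
    consequent: one (p, q, r, s) tuple per rule, in itertools.product order
    Returns the scalar output and the normalized firing strengths.
    """
    # Layer 1 (fuzzification): membership degree of every input in every fuzzy set.
    mu = [[gbell(u, *params) for params in mfs] for u, mfs in zip(inputs, premise)]
    # Layer 2 (product): one rule per combination of membership functions.
    rules = list(itertools.product(*(range(len(m)) for m in mu)))
    w = [math.prod(mu[d][i] for d, i in enumerate(rule)) for rule in rules]
    # Layer 3 (normalization): each firing strength divided by the sum of all.
    total = sum(w)
    w_bar = [wk / total for wk in w]
    # Layer 4 (defuzzification): normalized strength times the linear consequent.
    x, y, z = inputs
    weighted = [
        wb * (p * x + q * y + r * z + s)
        for wb, (p, q, r, s) in zip(w_bar, consequent)
    ]
    # Layer 5 (summation): the single output of the system.
    return sum(weighted), w_bar


# Placeholder parameters: 3 inputs x 2 membership functions -> 8 rules.
premise = [[(0.05, 2.0, -0.05), (0.05, 2.0, 0.05)] for _ in range(3)]
consequent = [(0.0, 0.0, 0.0, 5.0)] * 8
output, w_bar = anfis_forward((0.09, -0.03, 0.03), premise, consequent)
```

Because every rule in this toy setup has the same constant consequent, the output reduces to that constant regardless of the input, which is a quick sanity check that the normalized firing strengths sum to one.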

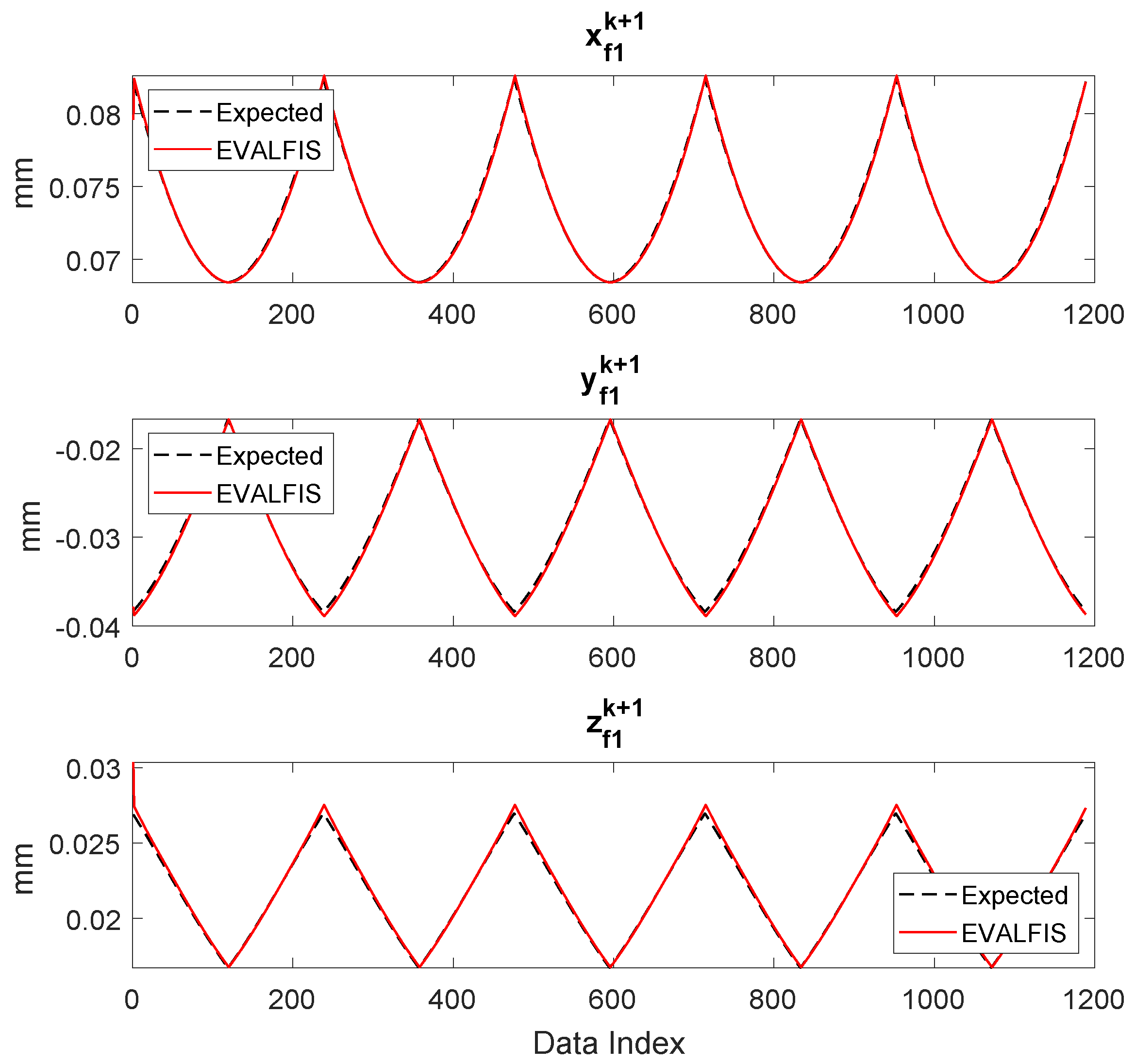

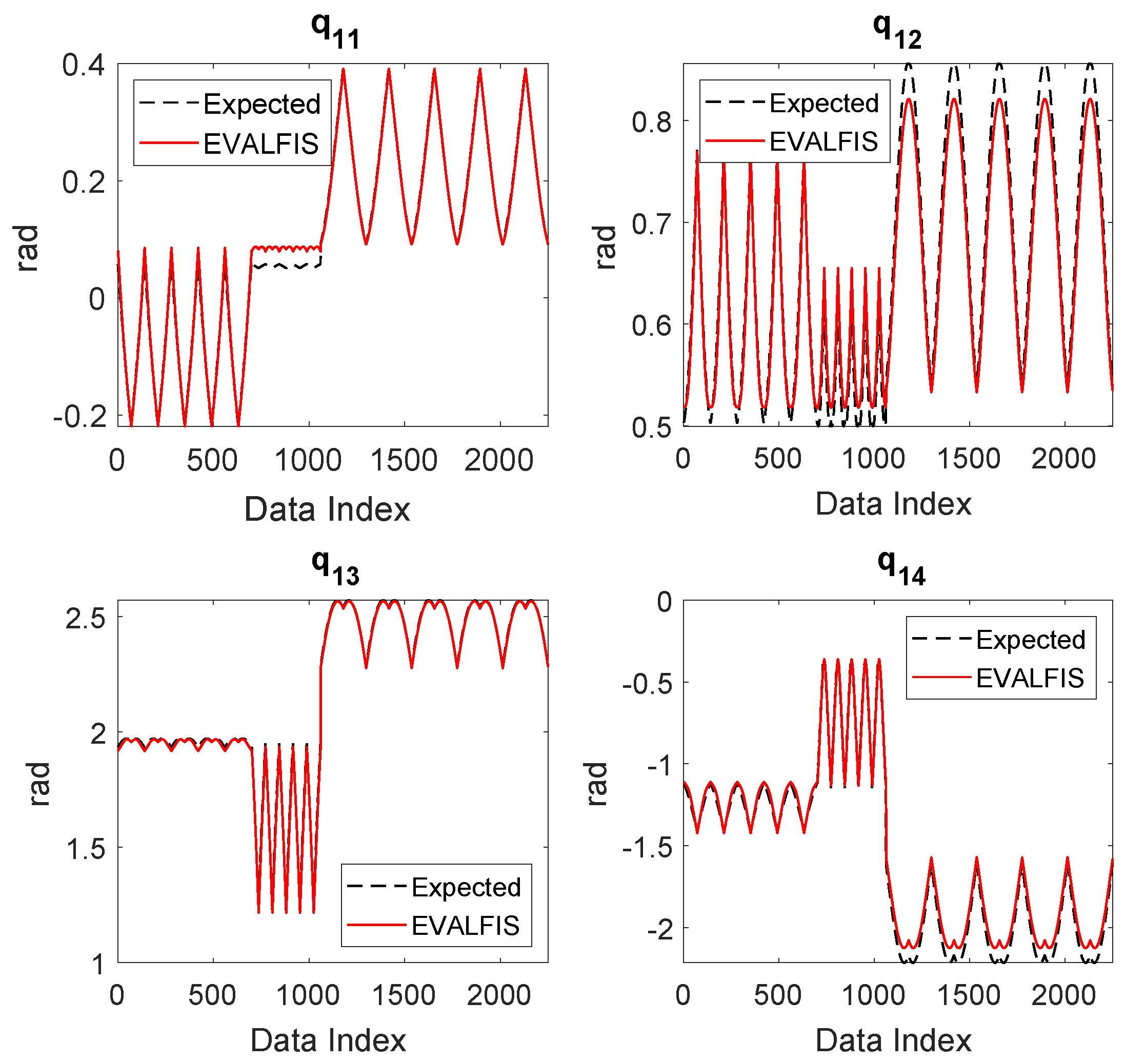

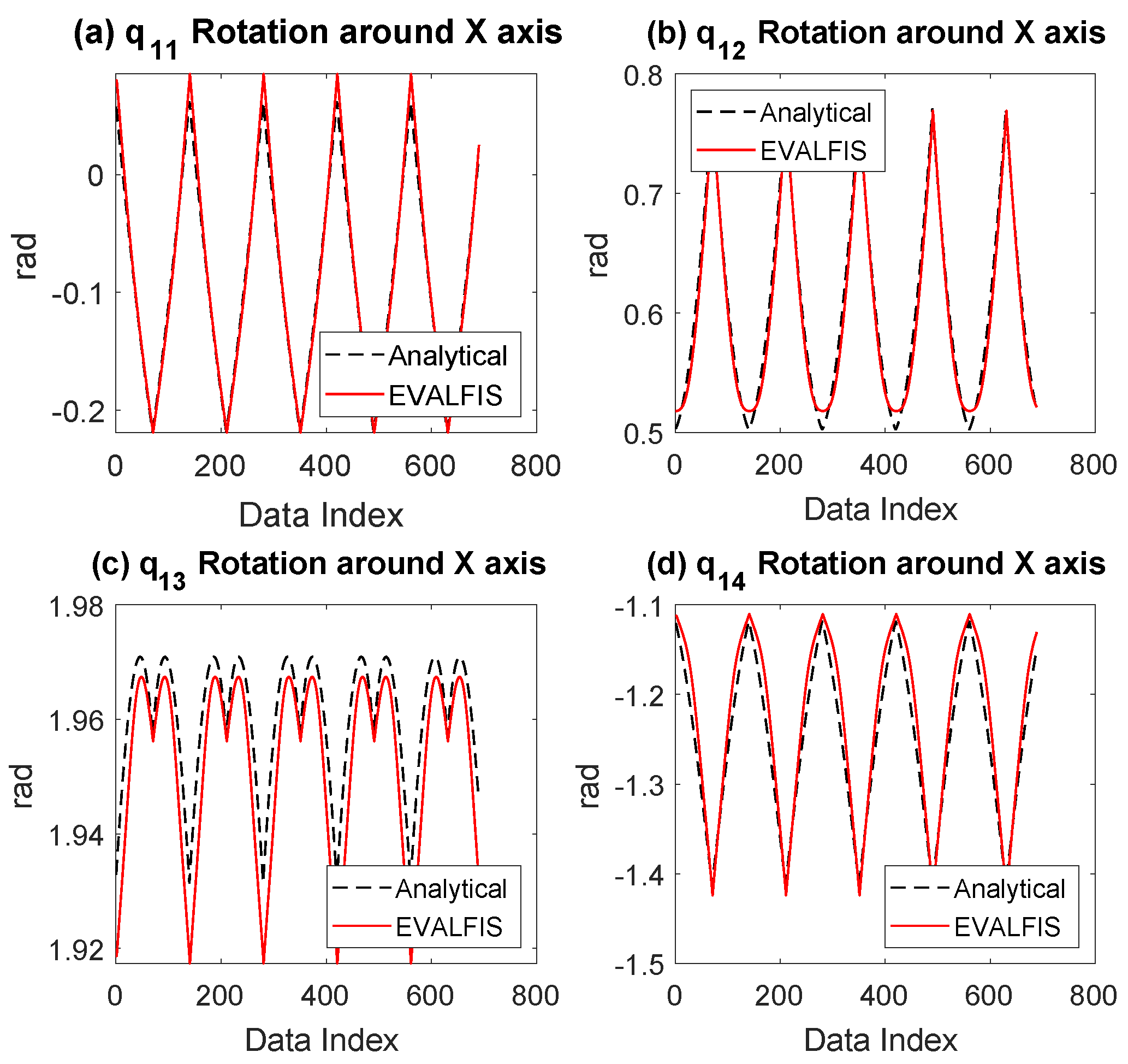

3.4. Validation of the Learned ANFIS Planner in Simulation

4. Experimental Validation

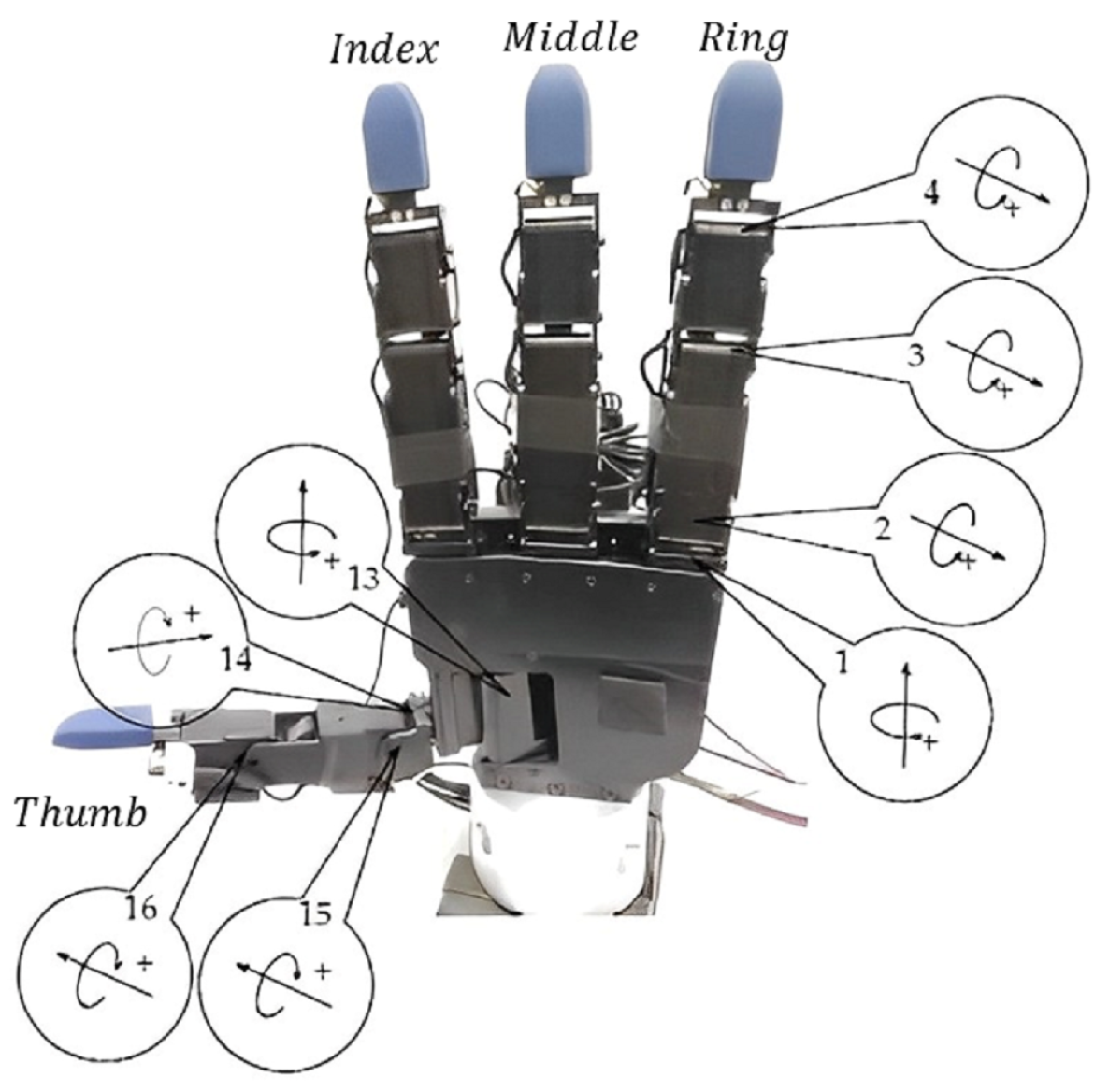

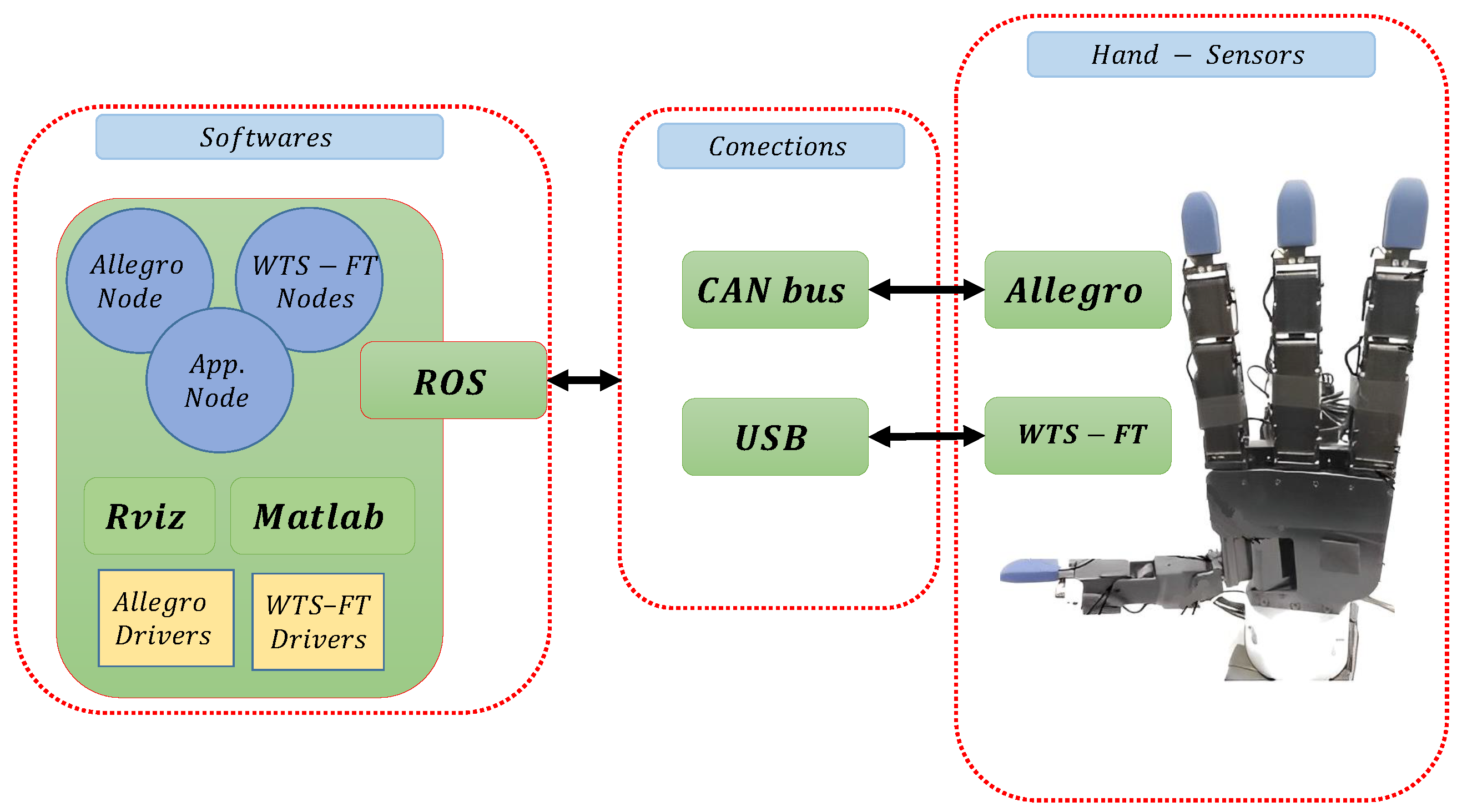

4.1. Hardware Set-Up

4.2. Implementation

- (a) A partition of the input space into a grid of fuzzy regions, which facilitates the creation of fuzzy rules with overlapping membership functions and provides a structured way to represent and model the relationships between input and output variables in a fuzzy system.

- (b) The activation of each fuzzy rule, which is determined as a fuzzy AND operation on the membership values of the input variables associated with that rule.

- (c) The combination of rules, which is performed using a fuzzy OR operation. The OR operation typically involves summing up the contributions of each rule, weighted by its activation level.

- (d) The membership degree to be constrained between 0 and 1.

- (e) The integration of multiple optimization or learning techniques to enhance the training and performance of the ANFIS model, which is known as a hybrid method.

Table 1 summarizes the specification of the ANFIS architecture.
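The operators behind items (a) to (d) can be illustrated with a short Python sketch. It assumes the settings listed in Table 1 (product for AND, probabilistic for OR, two membership functions for each of the three inputs); the helper names `clamp01`, `fuzzy_and`, `fuzzy_or`, and `grid_rules` are hypothetical and do not correspond to any toolbox API.

```python
import itertools


def clamp01(v):
    """Keep a membership degree inside [0, 1], as required by item (d)."""
    return min(1.0, max(0.0, v))


def fuzzy_and(memberships):
    """Product AND: rule activation as the product of the membership degrees."""
    result = 1.0
    for m in memberships:
        result *= clamp01(m)
    return result


def fuzzy_or(a, b):
    """Probabilistic OR of two degrees: a + b - a * b."""
    a, b = clamp01(a), clamp01(b)
    return a + b - a * b


def grid_rules(num_inputs=3, mfs_per_input=2):
    """Grid partition: one rule per combination of membership functions."""
    return list(itertools.product(range(mfs_per_input), repeat=num_inputs))


rules = grid_rules()
activation = fuzzy_and([0.5, 0.5, 0.5])
combined = fuzzy_or(0.5, 0.5)
```

With three inputs and two membership functions each, the grid partition yields the eight rules listed in Table 1, and both operators always return values between 0 and 1.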

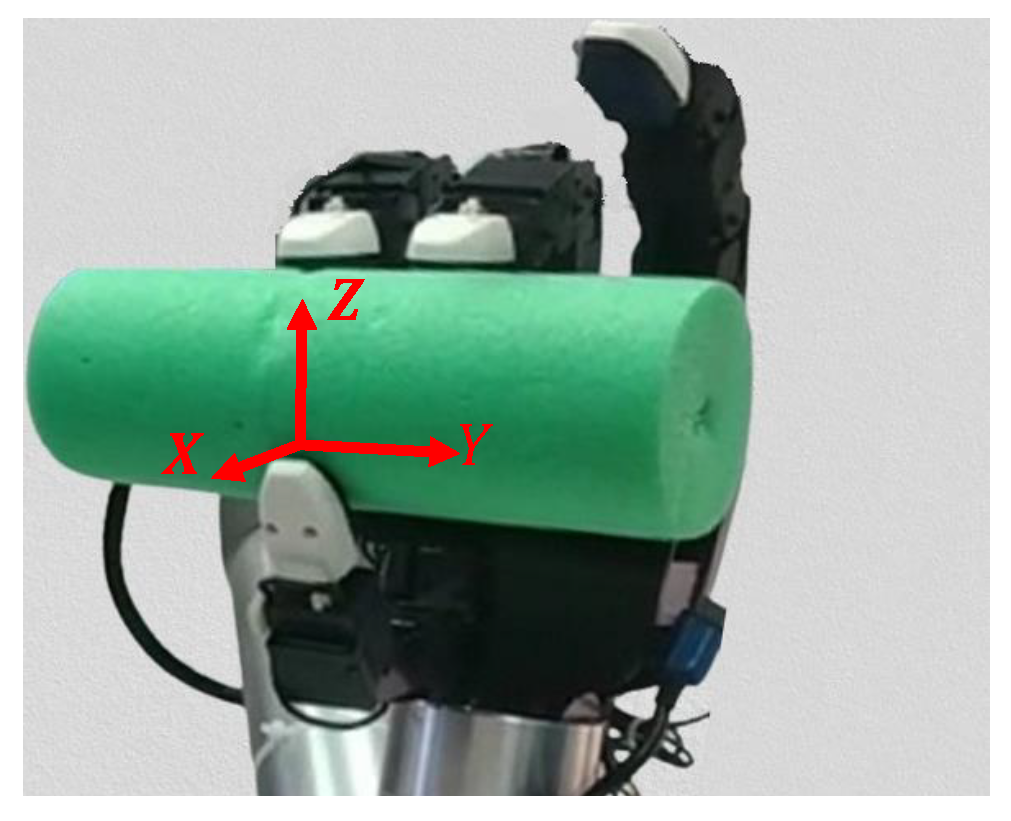

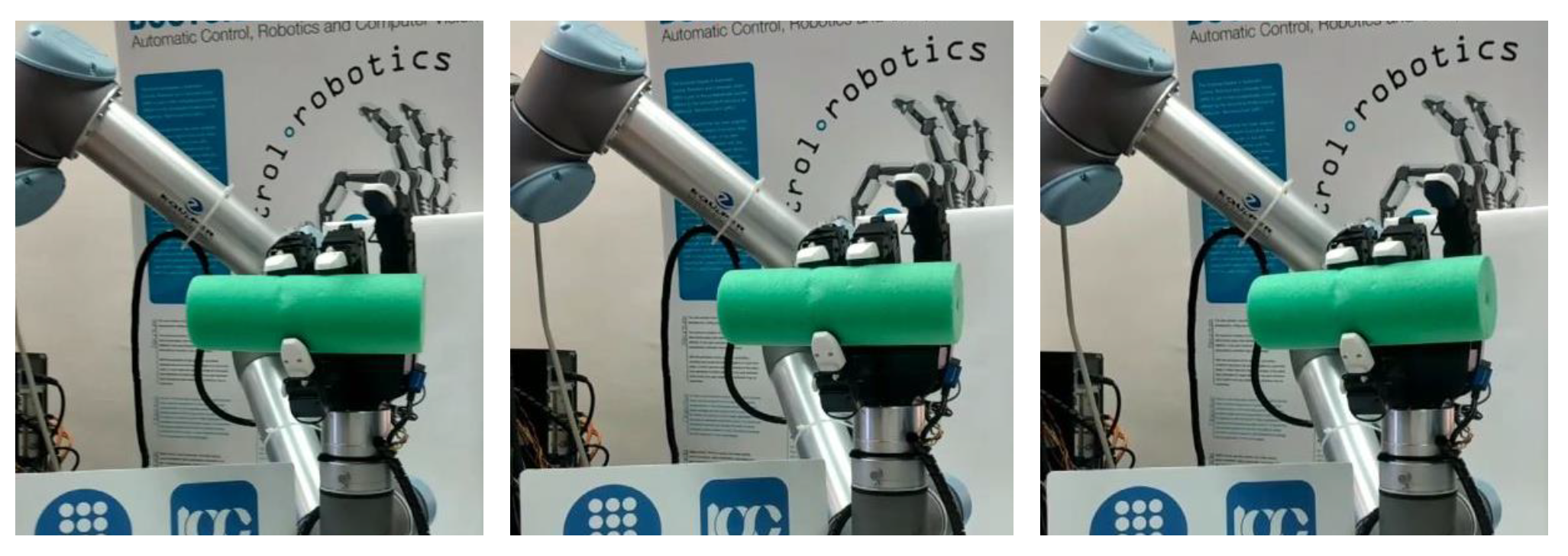

4.3. Description of the Experiment

4.4. Experimental Results

- Selecting the desired rotation axis (X, Y, or Z) by the operator.

- Selecting the desired rotation angle (illustrated in Figure 2) by the operator. The maximum rotation angles empirically achieved in our experiment around the X-, Y-, and Z-axes were 35, 18, and 60 degrees, respectively.

- Defining the rotation step, equal to 0.5 degrees (see Figure 2).

- Importing the initial fingertip positions from RViz.

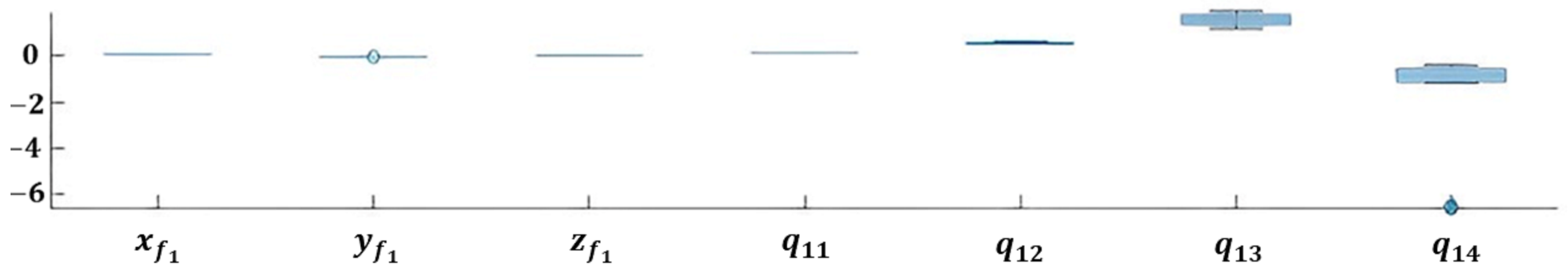

- Defining the desired number of rotation cycles.

- Executing the MATLAB code.
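As an illustration of how the operator inputs above translate into planner iterations, the following Python sketch computes the number of rotation steps for a requested angle. It is an assumption-laden sketch rather than the authors' MATLAB code: the function name `rotation_steps` is hypothetical, and treating the empirically achieved maximum angles as hard limits on a positive requested angle is an assumption made here for illustration.

```python
import math

# Maximum angles reported in the text (degrees); using them as limits is an assumption.
MAX_ANGLE_DEG = {"X": 35.0, "Y": 18.0, "Z": 60.0}
STEP_DEG = 0.5  # rotation step reported in the text


def rotation_steps(axis, angle_deg, step_deg=STEP_DEG):
    """Number of planner iterations needed to cover angle_deg around the given axis."""
    axis = axis.upper()
    if axis not in MAX_ANGLE_DEG:
        raise ValueError(f"unknown axis: {axis!r}")
    if not 0.0 < angle_deg <= MAX_ANGLE_DEG[axis]:
        raise ValueError(
            f"angle must be in (0, {MAX_ANGLE_DEG[axis]}] degrees for axis {axis}"
        )
    # The small tolerance avoids an extra step caused by floating-point rounding.
    return math.ceil(angle_deg / step_deg - 1e-9)


steps_x = rotation_steps("X", 35.0)
steps_z = rotation_steps("z", 60.0)
```

A full rotation to the reported maximum around the X-axis therefore corresponds to 70 steps of 0.5 degrees, and a requested angle beyond the reported maximum raises an error instead of being silently clipped.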

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


Table 1. Specification of the ANFIS architecture.

| Parameter | Value |
|---|---|
| Generate FIS type | Sugeno |
| The initial FIS model | Grid partition |
| Decision method for fuzzy logic operation AND (minimum) | Product |
| Decision method for fuzzy logic operation OR (maximum) | Probabilistic |
| Output defuzzification method | Weighted average |
| Number of membership functions for each of the three inputs | 2 |
| Input membership function type | Gaussian bell |
| Output membership function type | Constant type |
| Number of rules | 8 |
| Train FIS optimization method | Hybrid |
| Number of epochs | 30 |

| Sample | | | | | | | |
|---|---|---|---|---|---|---|---|
| 1 | 0.09363 | −0.03090 | 0.03066 | 0.16250 | 0.53036 | 1.91182 | −1.12669 |
| 2 | 0.09363 | −0.03090 | 0.03066 | 0.15810 | 0.52958 | 1.91366 | −1.12774 |
| 3 | 0.09363 | −0.03132 | 0.03061 | 0.15369 | 0.52887 | 1.91548 | −1.12886 |
| ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ |
| 9000 | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ | ⋯ |

| | ANFIS-PP | | | ANFIS-IK | | | |
|---|---|---|---|---|---|---|---|
| Output | | | | | | | |
| MSE | | | | | | | |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sheikhsamad, M.; Suárez, R.; Rosell, J. Learning-Based Planner for Unknown Object Dexterous Manipulation Using ANFIS. Machines 2024, 12, 364. https://doi.org/10.3390/machines12060364
