Enhanced Non-Destructive Testing of Small Wind Turbine Blades Using Infrared Thermography

Abstract

1. Introduction

1.1. Infrared Thermography (IRT) Non-Destructive Testing (NDT)

1.2. Object Detection and Localization

1.3. Contributions

1.4. Research Roadmap

2. Methodology

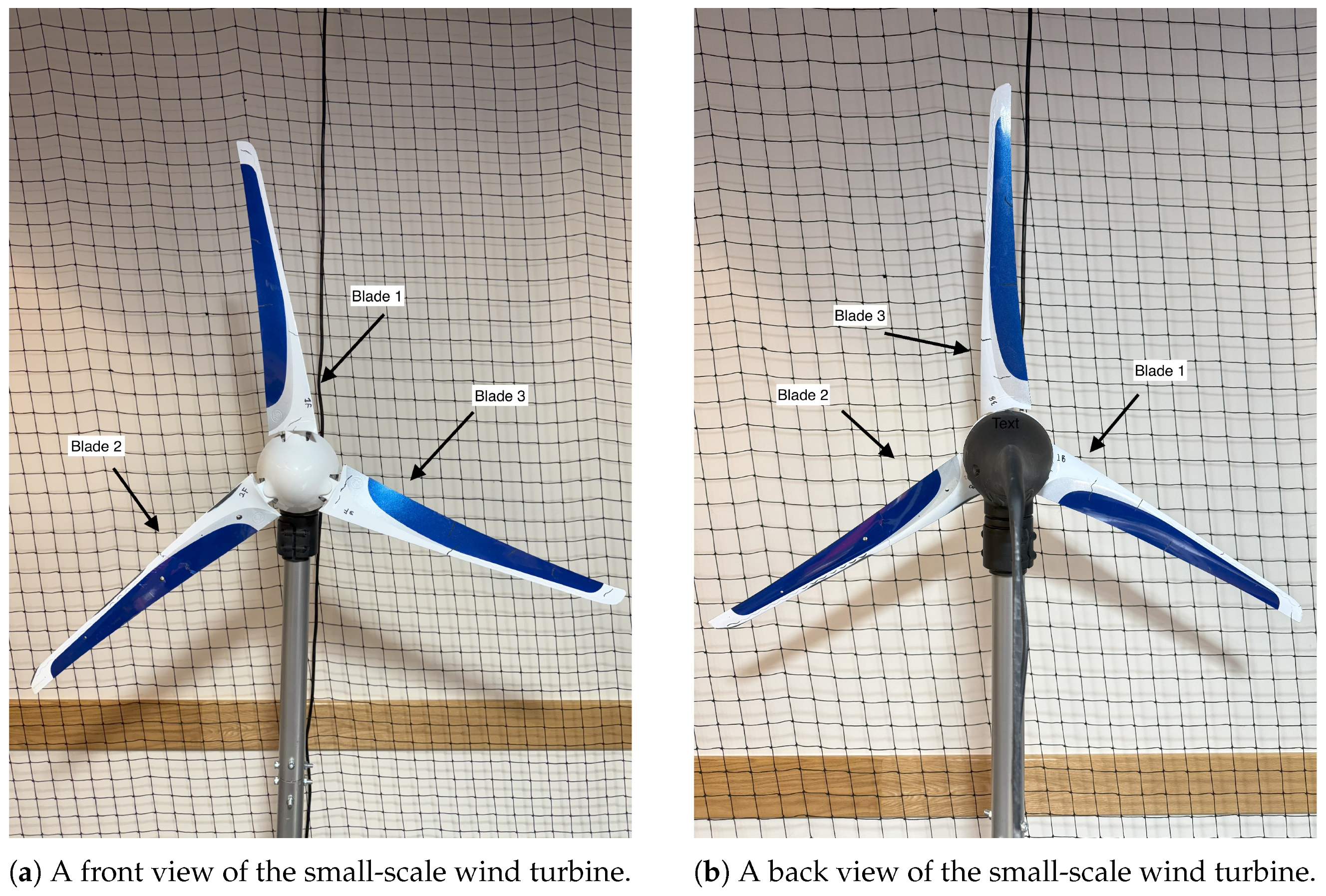

2.1. The Studied Wind Turbine Specification

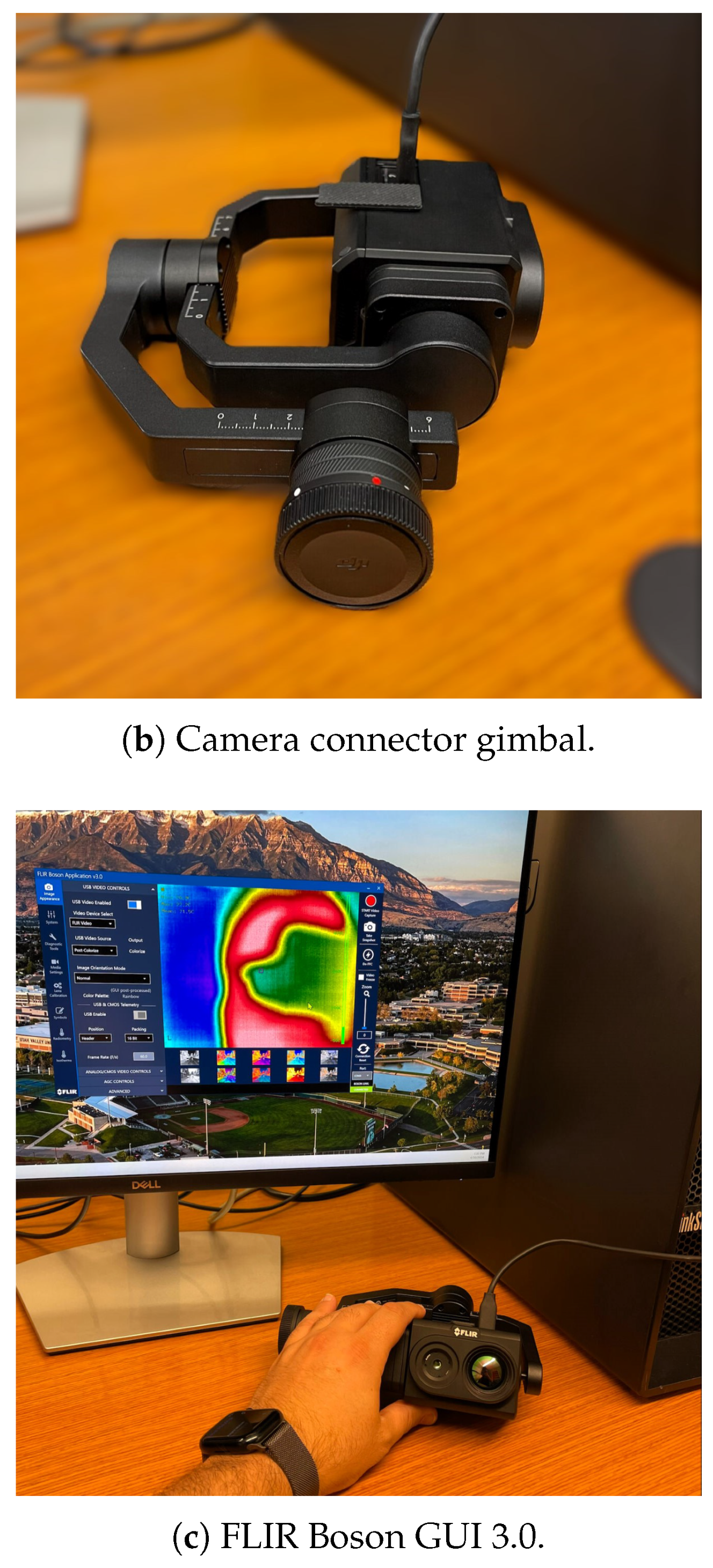

2.2. Camera Deployment and Imaging Setup

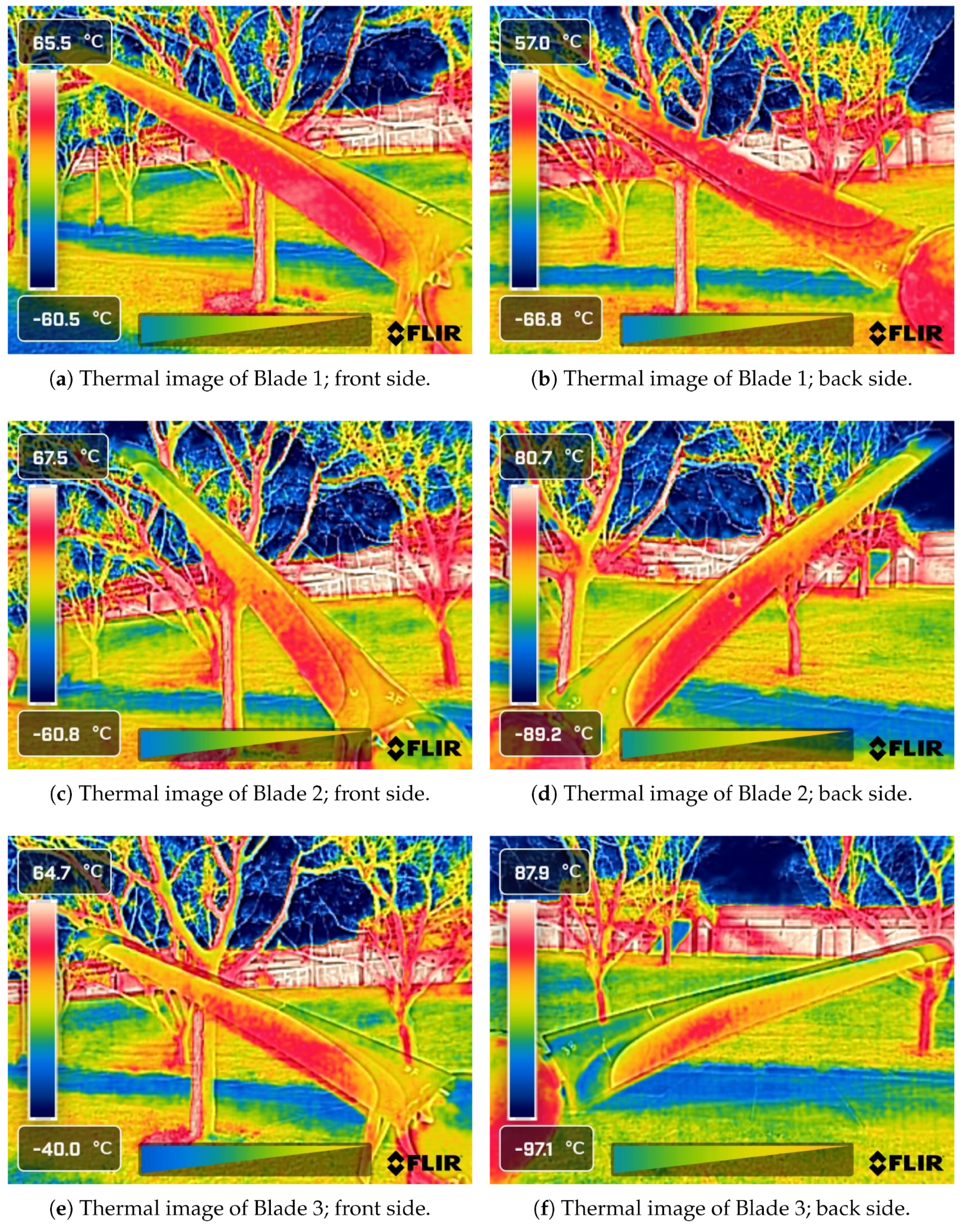

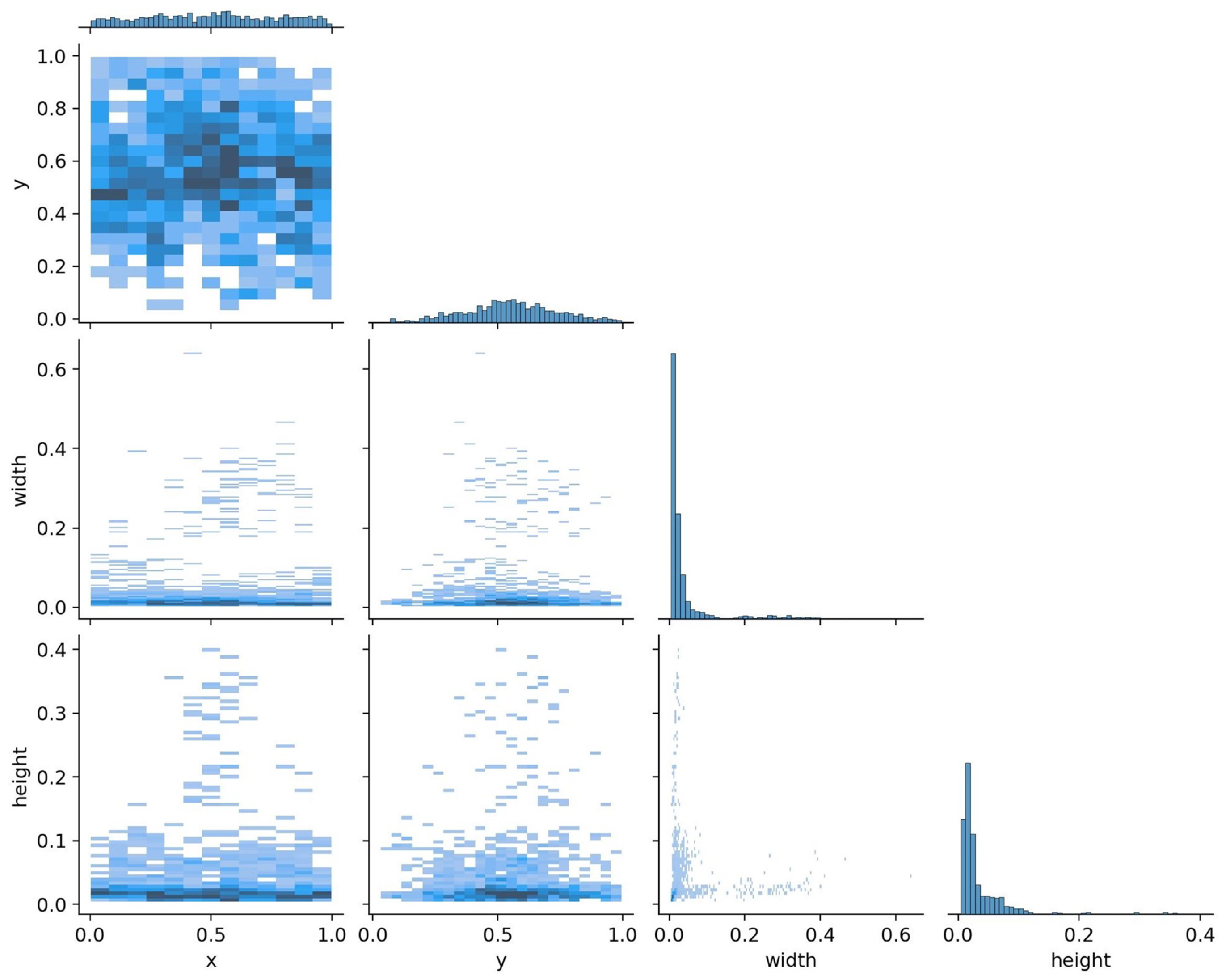

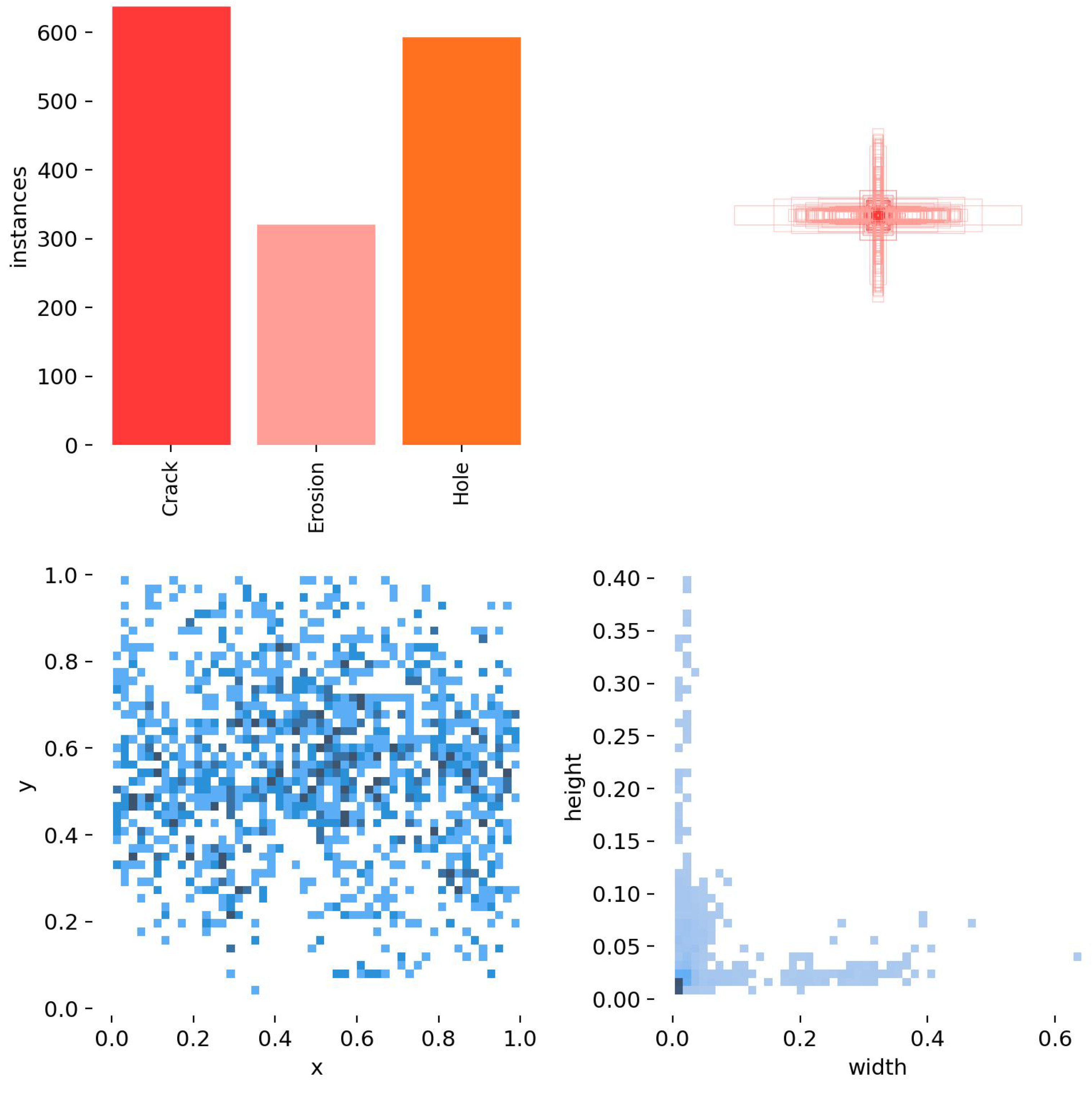

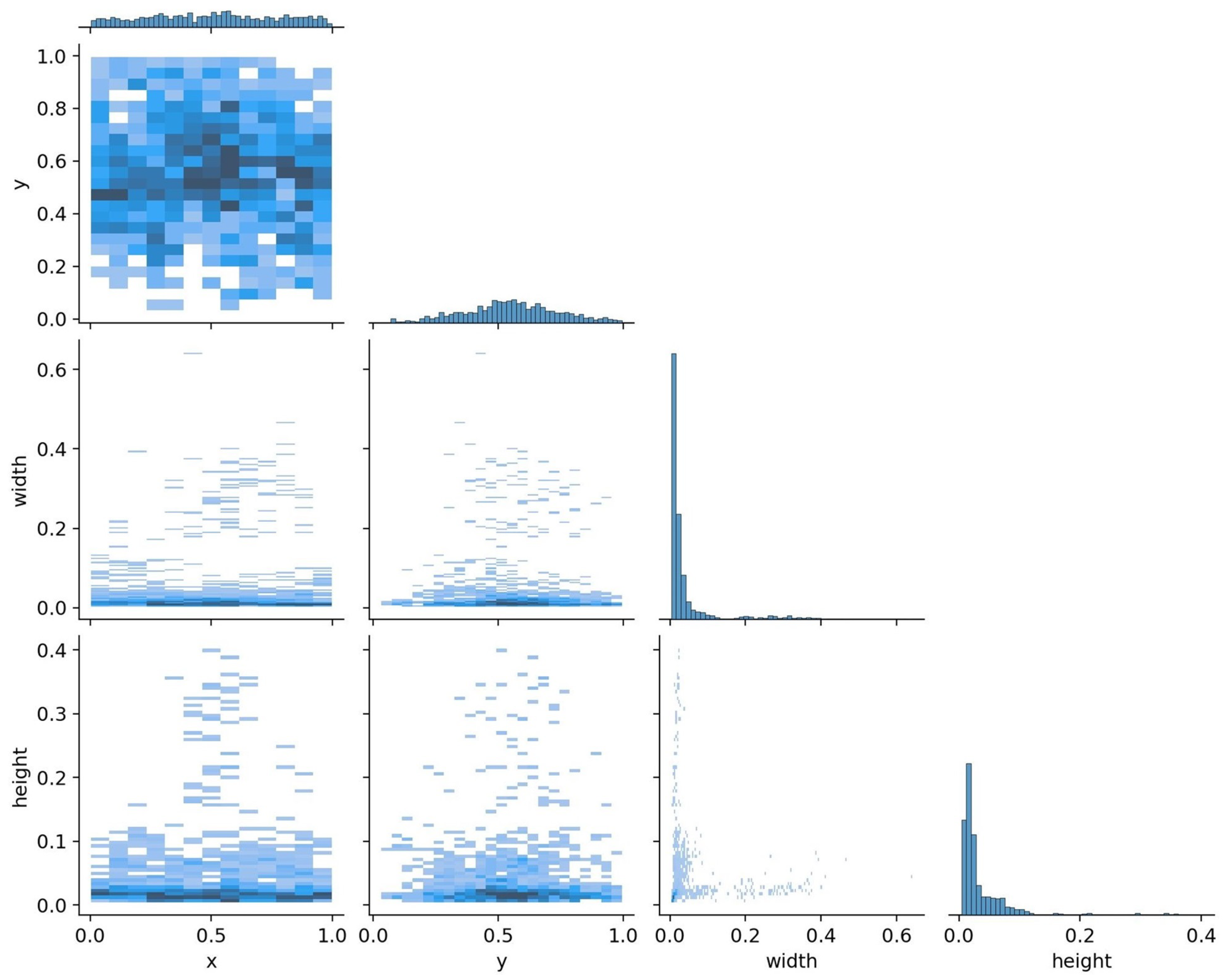

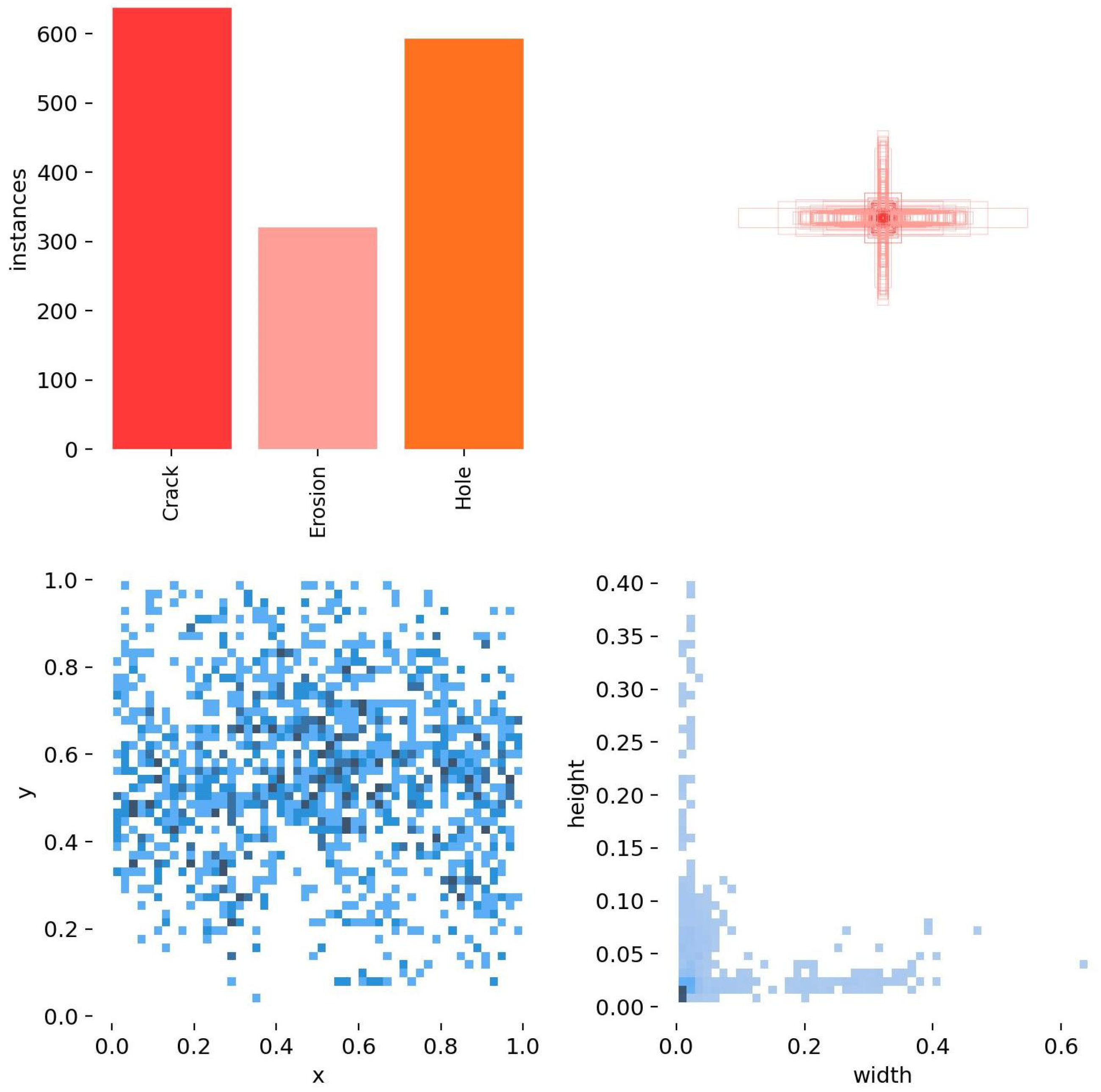

2.3. Dataset Creation Setup

2.4. Dataset Structure

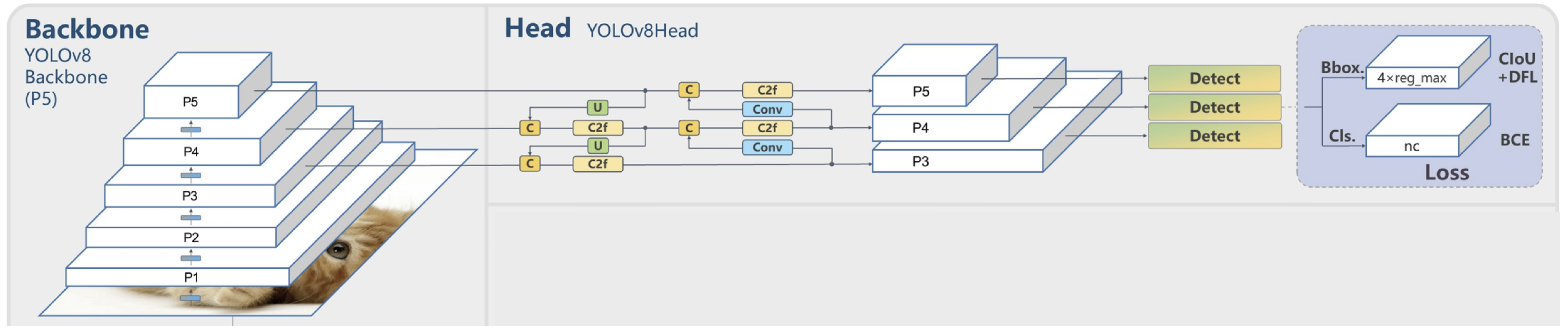

2.5. Models

- and represent the predicted and ground truth bounding box coordinates at the position , respectively.

- is the intersection over union (IoU) between the predicted and ground truth boxes.

- is a dynamic weighting factor that adjusts the contribution of each spatial location.

- is a modulation factor that helps balance the learning of different sized objects.

- is a normalization parameter for the coordinate differences.

- is an indicator function that equals 1 when a defect is present at the position .
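The list above defines the terms of the localization loss, but the equation and its symbols were lost in extraction. The sketch below therefore does not reproduce the authors' formula. Under stated assumptions, it shows how the listed ingredients could fit together. It computes the IoU for axis-aligned boxes given as `(x_min, y_min, x_max, y_max)` and uses a per-position indicator to skip positions without a defect. The per-position `weights` and `modulation` values and a normalization `sigma` on the squared coordinate error are hypothetical names. The combination `w * a * (coord_err + (1 - IoU))` is an assumption chosen only for illustration.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(pred: Box, gt: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix_min = max(pred[0], gt[0])
    iy_min = max(pred[1], gt[1])
    ix_max = min(pred[2], gt[2])
    iy_max = min(pred[3], gt[3])
    # Overlap is zero when the boxes do not intersect
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_pred = (pred[2] - pred[0]) * (pred[3] - pred[1])
    area_gt = (gt[2] - gt[0]) * (gt[3] - gt[1])
    union = area_pred + area_gt - inter
    return inter / union if union > 0 else 0.0


def masked_localization_loss(
    preds: Sequence[Box],
    gts: Sequence[Box],
    defect_present: Sequence[bool],  # indicator: True where a defect exists
    weights: Sequence[float],        # hypothetical per-position dynamic weight
    modulation: Sequence[float],     # hypothetical per-position size modulation
    sigma: float = 1.0,              # hypothetical coordinate normalization
) -> float:
    """HYPOTHETICAL combination (not the paper's equation): weighted
    (normalized squared coordinate error + (1 - IoU)), averaged over
    positions where the indicator is 1."""
    total, count = 0.0, 0
    for p, g, m, w, a in zip(preds, gts, defect_present, weights, modulation):
        if not m:
            continue  # indicator is 0: this position contributes nothing
        coord_err = sum((pc - gc) ** 2 for pc, gc in zip(p, g)) / sigma ** 2
        total += w * a * (coord_err + (1.0 - iou(p, g)))
        count += 1
    return total / count if count else 0.0
```

For boxes `(0, 0, 2, 2)` and `(1, 1, 3, 3)` the overlap area is 1 and the union is 7, so `iou` returns 1/7.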

3. Results and Analysis

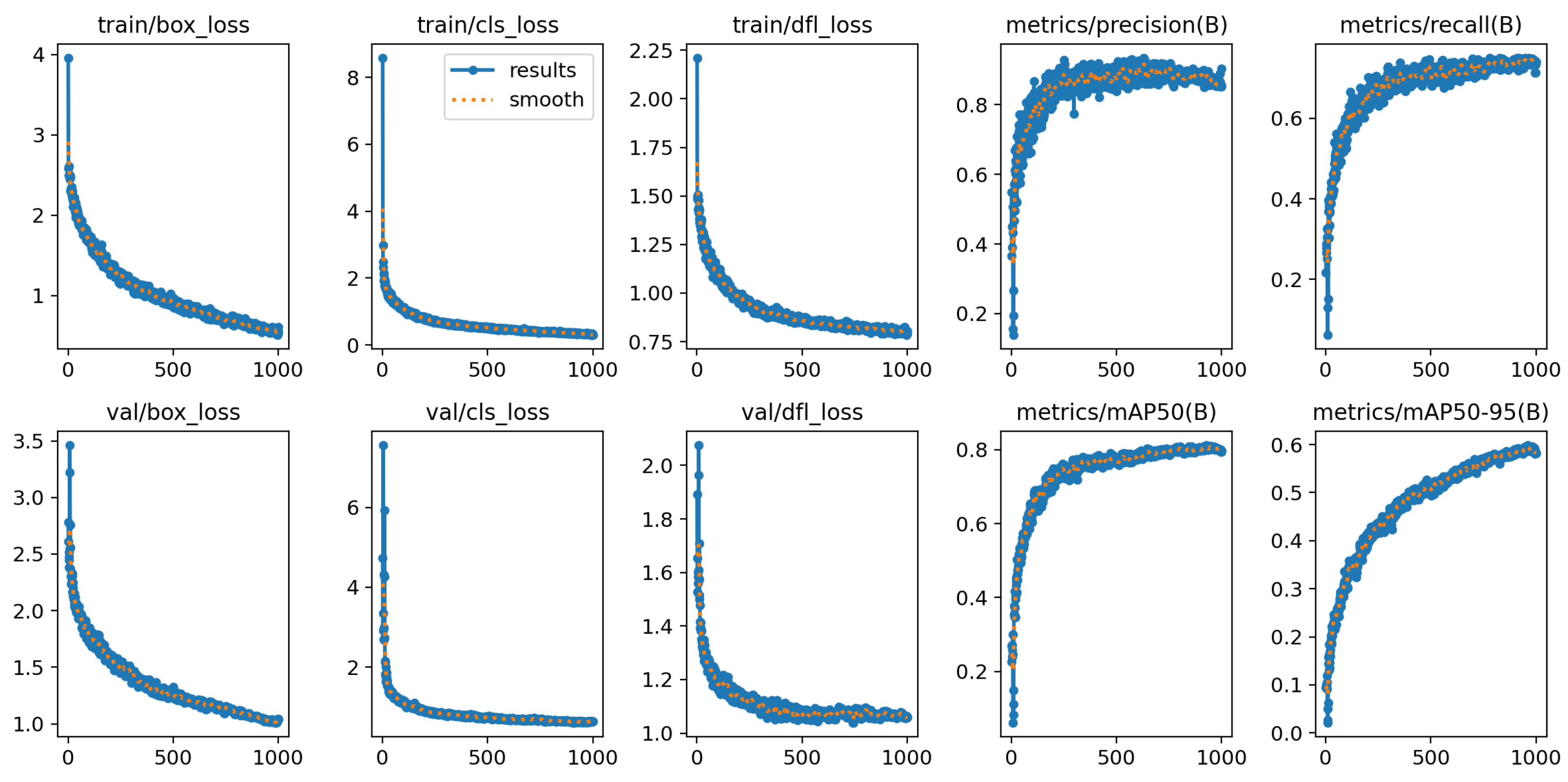

3.1. Parameter Tuning and Optimization

- Custom Dataset Preparation and Annotation: We developed a specialized dataset comprising thermal images of wind turbine blade defects, meticulously annotated to capture various defect types and severities. This dataset ensured that the model was trained on relevant and high-quality data specific to the application domain.
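The annotation format is not stated here. YOLOv8 and YOLOv9 are commonly trained on the plain-text YOLO label format: one line per box giving the class index, then the box center, width, and height, each normalized by the image size. The minimal sketch below converts a pixel-space annotation into that format, assuming the dataset was exported this way. The function name, class indices, and the 640 × 480 image size in the tests are hypothetical.

```python
def to_yolo_line(class_id: int, x_min: float, y_min: float,
                 x_max: float, y_max: float, img_w: int, img_h: int) -> str:
    """Convert a pixel-space box to one line of a YOLO .txt label file:
    '<class> <x_center> <y_center> <width> <height>', normalized to [0, 1]."""
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("box must lie inside the image and have positive size")
    # Box center and size, each divided by the matching image dimension
    xc = (x_min + x_max) / 2 / img_w
    yc = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"
```

Each image then gets a `.txt` file containing one such line per annotated defect.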

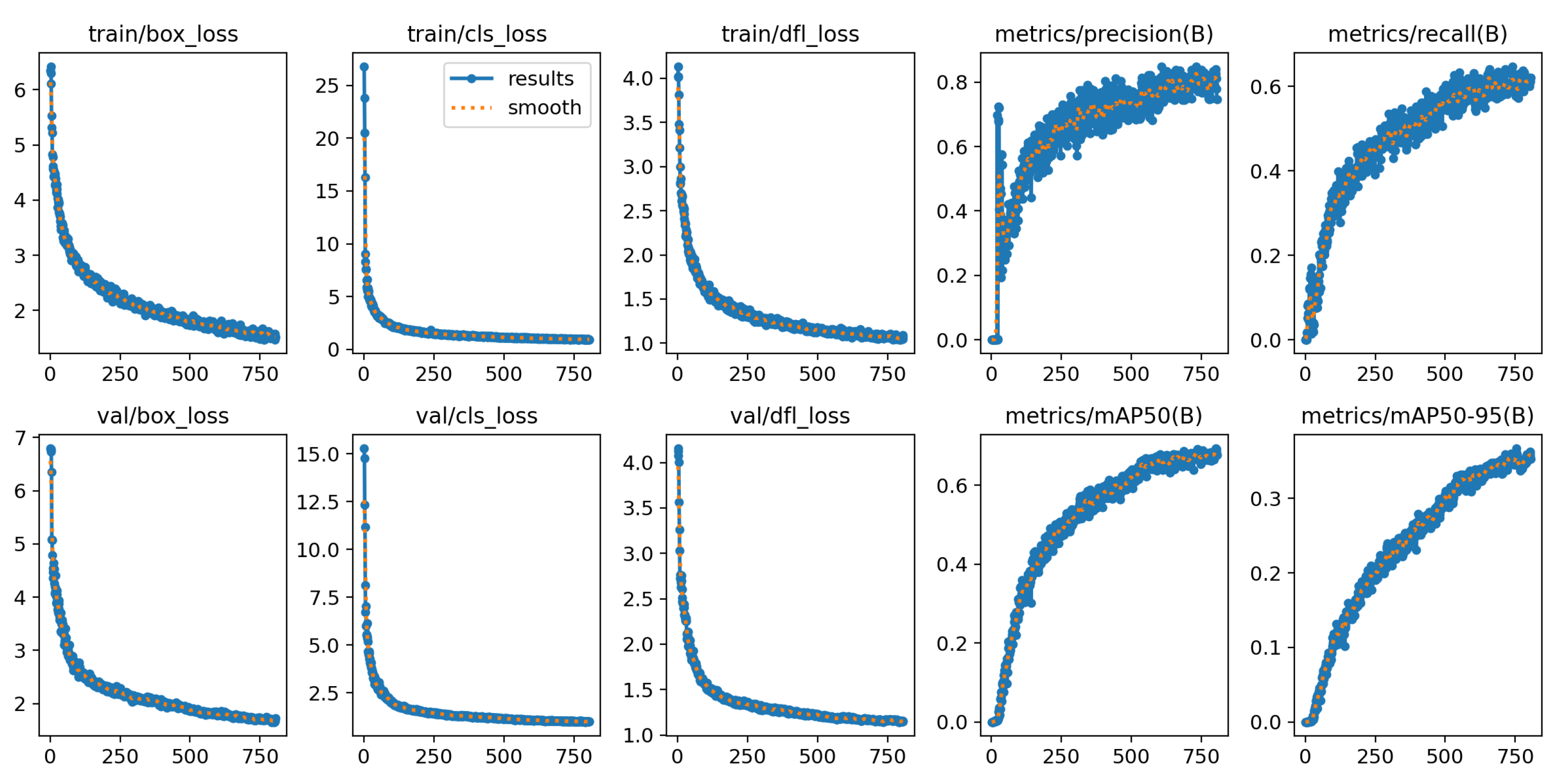

- Extensive Hyperparameter Optimization: Using Bayesian optimization, we systematically searched the hyperparameter space for configurations that improved model performance in this application, tuning parameters such as the learning rate, batch size, and momentum coefficient.

- Modified Loss Function Weights: The loss function weights were adjusted to prioritize accurate localization of defects, so that the model emphasized critical regions during training. Assigning higher weights to selected loss components made the model more sensitive to subtle defect features.

- Specialized Data Augmentation Strategies: We implemented data augmentation methods tailored to the characteristics of thermal images, such as thermal noise addition, brightness variations, and geometric transformations. These strategies improved the model’s robustness and ability to generalize across different thermal imaging conditions.
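The augmentation strategies in the last item above can be sketched with NumPy as follows. This is an illustrative sketch, not the authors' pipeline. It assumes a single-channel thermal image already scaled to [0, 1]. Thermal noise is modeled as additive Gaussian noise and brightness variation as a random global gain. A horizontal flip serves as the geometric transformation, with the YOLO-normalized box centers updated to match. The parameter names and default values (`noise_std`, `brightness_range`, `flip_prob`) are assumptions.

```python
from typing import Tuple

import numpy as np


def augment_thermal(image: np.ndarray, boxes: np.ndarray,
                    rng: np.random.Generator,
                    noise_std: float = 0.01,
                    brightness_range: Tuple[float, float] = (0.9, 1.1),
                    flip_prob: float = 0.5) -> Tuple[np.ndarray, np.ndarray]:
    """Apply noise, brightness scaling, and a random horizontal flip.

    image: (H, W) thermal image with values in [0, 1].
    boxes: (N, 4) array of YOLO-normalized (x_center, y_center, w, h).
    Returns new arrays; the inputs are not modified.
    """
    out = image.astype(np.float32, copy=True)
    # Additive Gaussian noise as a simple stand-in for sensor noise
    out += rng.normal(0.0, noise_std, size=out.shape).astype(np.float32)
    # Global brightness (gain) variation
    out *= float(rng.uniform(*brightness_range))
    out = np.clip(out, 0.0, 1.0)
    boxes = boxes.astype(np.float32, copy=True)
    # Horizontal flip mirrors each box's normalized x-center
    if rng.random() < flip_prob:
        out = out[:, ::-1].copy()
        boxes[:, 0] = 1.0 - boxes[:, 0]
    return out, boxes
```

Passing an explicit `np.random.Generator` keeps the augmentations reproducible across training runs.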

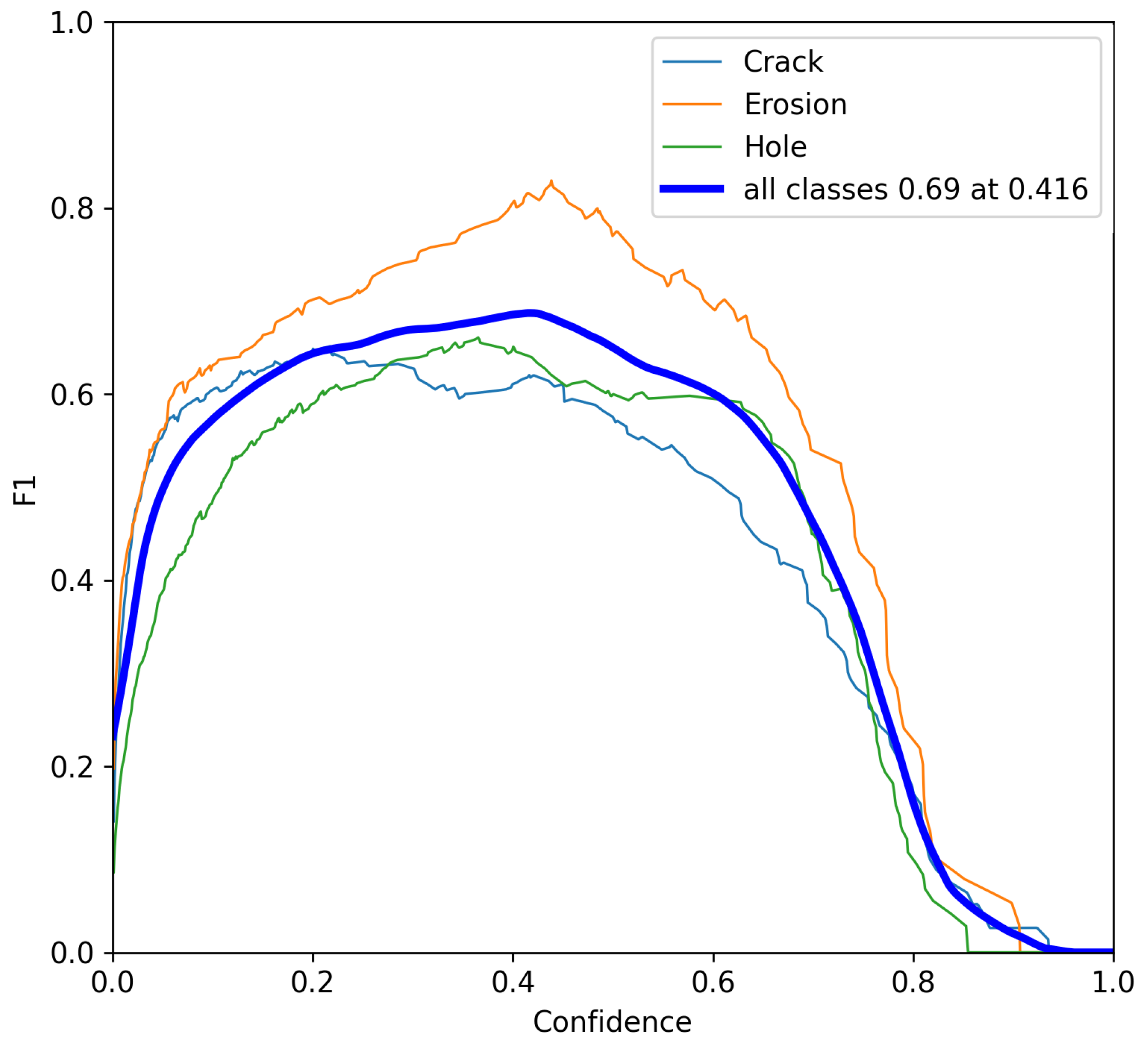

- Fine-Tuned Confidence Thresholds: Confidence thresholds were calibrated to balance precision and recall, optimizing defect detection accuracy under real-world conditions. This calibration helped reduce false positives and improved the reliability of detections.
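One common way to calibrate a confidence threshold for a precision-recall balance is to sweep candidate thresholds on a validation set and keep the one with the highest F1 score. The text does not say how the thresholds were chosen, so the F1 criterion here is an assumption. The sketch also assumes each detection has already been matched to ground truth (for example, at IoU ≥ 0.5) and reduced to a `(confidence, is_true_positive)` pair.

```python
from typing import List, Tuple


def best_confidence_threshold(
    detections: List[Tuple[float, bool]], num_ground_truth: int
) -> Tuple[float, float, float, float]:
    """Return (threshold, precision, recall, f1) maximizing F1.

    detections: (confidence, is_true_positive) pairs from a validation set.
    num_ground_truth: number of annotated defects in that set.
    """
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    best = (1.0, 0.0, 0.0, 0.0)
    # Candidate thresholds are the distinct observed confidences, ascending
    for thr in sorted({conf for conf, _ in detections}):
        kept = [is_tp for conf, is_tp in detections if conf >= thr]
        tp = sum(kept)
        fp = len(kept) - tp
        precision = tp / (tp + fp) if kept else 0.0
        recall = tp / num_ground_truth
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall > 0 else 0.0)
        if f1 > best[3]:  # strict: keeps the lowest threshold among ties
            best = (thr, precision, recall, f1)
    return best
```

For example, with detections `[(0.9, True), (0.8, True), (0.6, False), (0.4, True), (0.3, False)]` and 3 ground-truth defects, the sweep selects 0.4 (precision 0.75, recall 1.0, F1 ≈ 0.857). Because the sweep runs in ascending order with a strict comparison, ties resolve toward the lower threshold, which favors recall.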

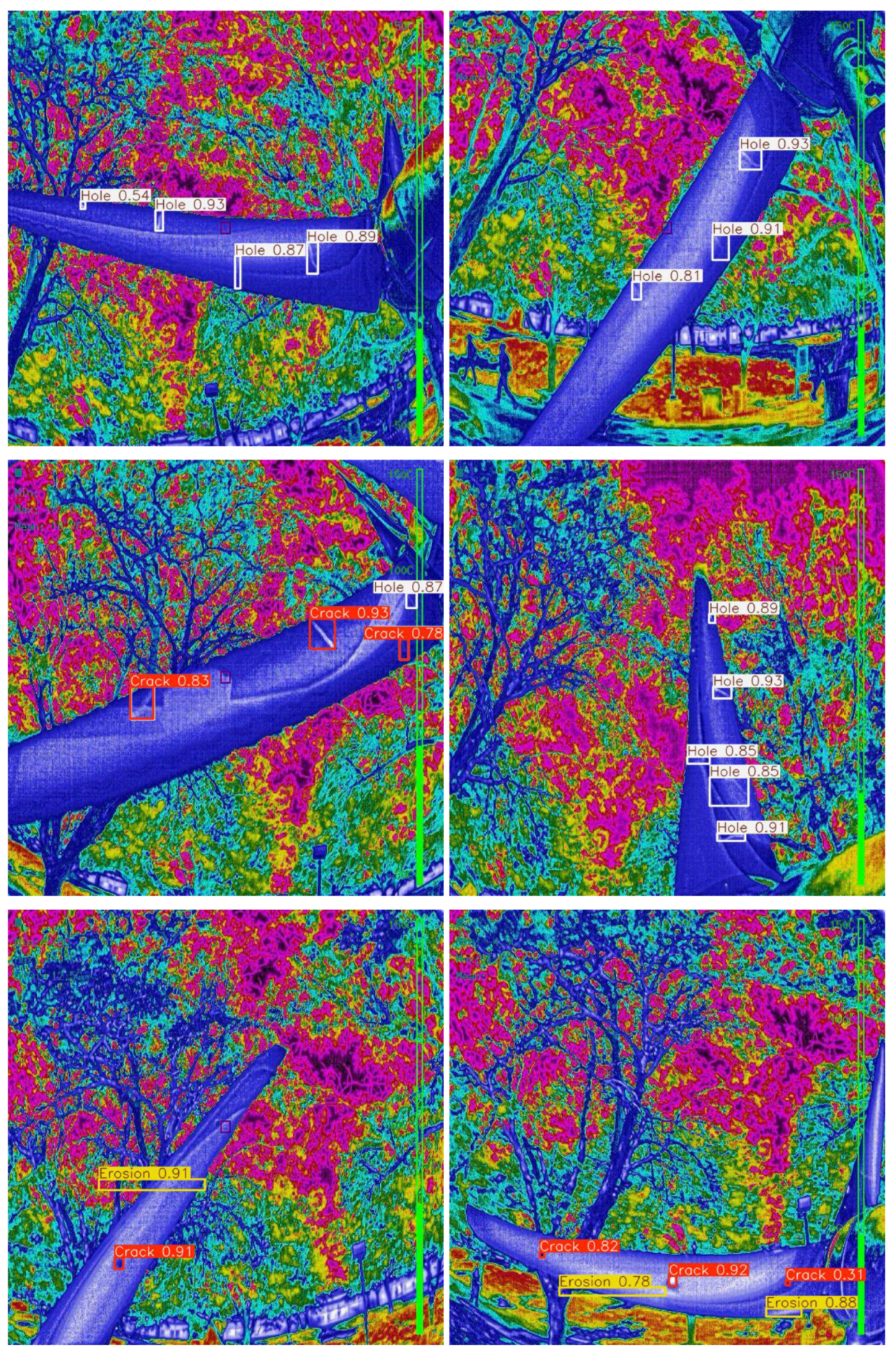

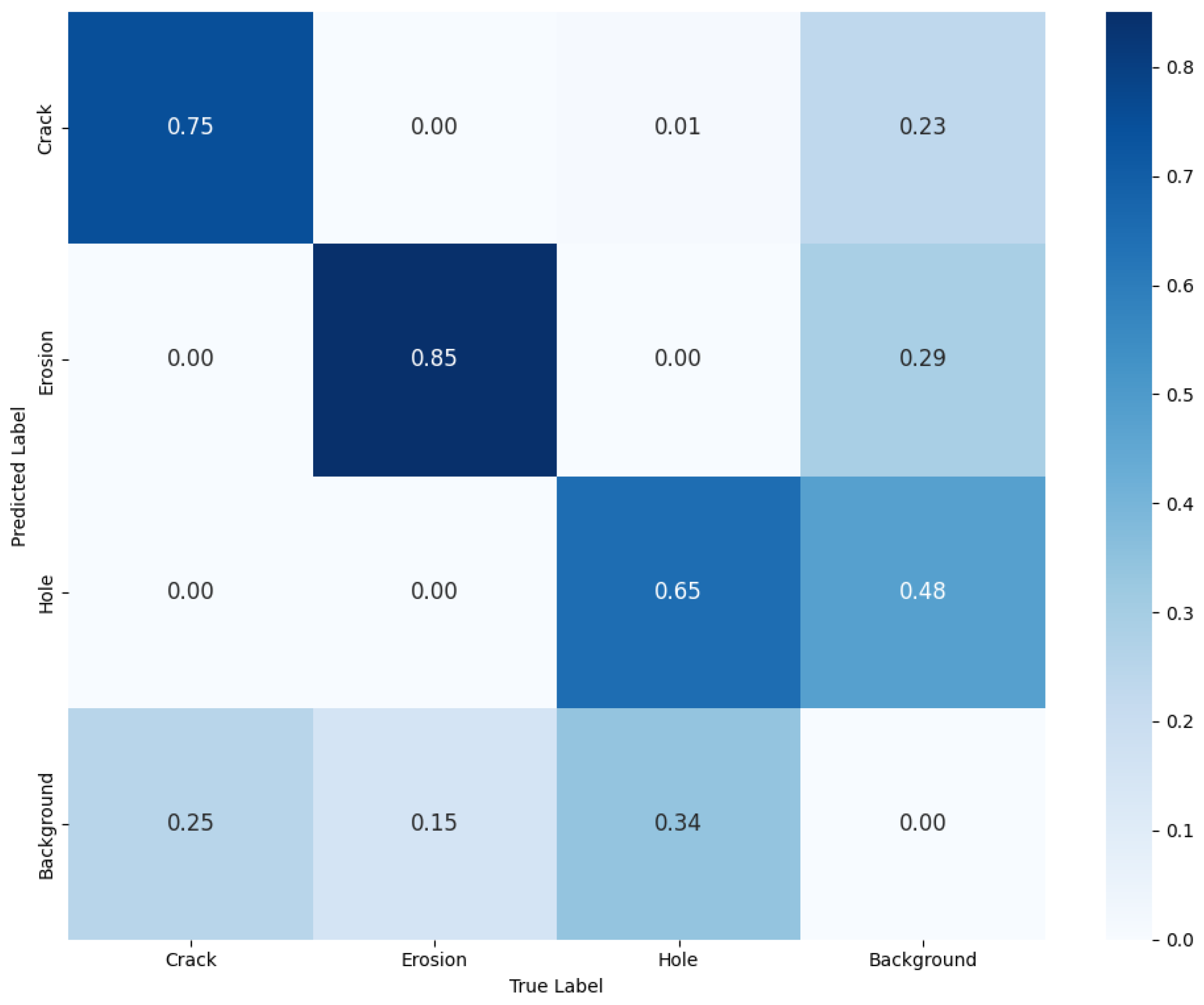

3.2. YOLOv8 Results

3.3. YOLOv9 Results

3.4. Comparative Analysis of YOLOv8 and YOLOv9 Performance

4. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CNN | Convolutional Neural Network |
| COCO | Common Objects in Context |
| DTAGAN | Dual-Threshold Attention-Guided GAN |
| FLIR | Forward-Looking InfraRed |
| GAN | Generative Adversarial Network |
| GELAN | Generalized Efficient Layer Aggregation Network |
| GRU | Gated Recurrent Unit |
| IR | Infrared |
| IRT | Infrared thermography |
| LCOE | Levelized Cost of Energy |
| MIR | Mid-infrared |
| NDT | Non-destructive testing |
| OCT | Optical Coherence Tomography |
| PCA | Principal Component Analysis |
| PGI | Programmable Gradient Information |
| PV | Photovoltaic |
| RoI | Region of interest |
| SVM | Support Vector Machine |
| UAV | Unmanned Aerial Vehicle |
| WTB | Wind turbine blade |
| YOLO | You Only Look Once |

References

1. Memari, M.; Shakya, P.; Shekaramiz, M.; Seibi, A.C.; Masoum, M.A. Review on the Advancements in Wind Turbine Blade Inspection: Integrating Drone and Deep Learning Technologies for Enhanced Defect Detection. IEEE Access 2024, 12, 33236–33282.

2. Li, W.; Pan, Z.; Hong, N.; Du, Y. Defect detection of large wind turbine blades based on image stitching and improved Unet network. J. Renew. Sustain. Energy 2022, 15, 013302.

3. Clero, K.; Nadour, M.; Ed-Diny, S.; Achalhi, M.; Cherkaoui, M.; Ait Abdelali, H.; El Fkihi, S.; Benzakour, I.; Rziki, S.; Tagemouati, H.; et al. A Review of the Use of Thermal Imaging and Computer Vision for Pattern Recognition. Comput. Sci. Inf. Technol. 2023, 13, 121–136.

4. Yang, R.; He, Y.; Mandelis, A.; Wang, N.; Wu, X.; Huang, S. Induction Infrared Thermography and Thermal-Wave-RADAR Analysis for Imaging Inspection and Diagnosis of Blade Composites. IEEE Trans. Ind. Inform. 2018, 14, 5637–5647.

5. Smith, J.; Carter, D. Application of UAVs in detecting subsurface defects in wind turbine blades using thermal imaging. Wind Energy 2019, 22, 123–130.

6. Jones, A.; Taylor, M. Deep learning for real-time detection of defects in wind turbine blades via thermal imaging. Renew. Energy 2020, 145, 2291–2301.

7. Galleguillos, C.; Zorrilla, A.; Jimenez, A.; Diaz, L.; Montiano, Á.; Barroso, M.; Viguria, A.; Lasagni, F. Thermographic non-destructive inspection of wind turbine blades using unmanned aerial systems. Plast. Rubber Compos. 2015, 44, 98–103.

8. Doroshtnasir, M.; Worzewski, T.; Krankenhagen, R.; Rollig, M. On-site inspection of potential defects in wind turbine rotor blades with thermography. Wind Energy 2016, 19, 1407–1422.

9. Traphan, D.; Herráez, I.; Meinlschmidt, P.; Schlüter, F.; Peinke, J.; Gülker, G. Remote surface damage detection on rotor blades of operating wind turbines by means of infrared thermography. Wind Energy Sci. 2018, 3, 639–650.

10. Chen, X.; Shihavuddin, A.; Madsen, S.H.; Thomsen, K.; Rasmussen, S.; Branner, K. AQUADA: Automated Quantification of Damages in Composite Wind Turbine Blades for LCOE Reduction. Wind Energy 2021, 24, 535–548.

11. Xu, L.; Hu, J. A Method of Defect Depth Recognition in Active Infrared Thermography Based on GRU Networks. Appl. Sci. 2021, 11, 6387.

12. Wang, K.; Zhang, J.; Ni, H.; Ren, F. Thermal Defect Detection for Substation Equipment Based on Infrared Image Using Convolutional Neural Network. Electronics 2021, 10, 1986.

13. Jiang, C.; Ren, H.; Ye, X.; Zhu, J.; Zeng, H.; Nan, Y.; Sun, M.; Ren, X.; Huo, H. Object Detection from UAV Thermal Infrared Images and Videos Using YOLO Models. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102912.

14. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

15. Wang, C.; Gu, Y. Research on infrared nondestructive detection of small wind turbine blades. Results Eng. 2022, 15, 100570.

16. Yu, J.; He, Y.; Zhang, F.; Sun, G.; Hou, Y.; Liu, H.; Li, J.; Yang, R.; Wang, H. An infrared image stitching method for wind turbine blade using UAV flight data and U-Net. IEEE Sens. J. 2023, 23, 8727–8736.

17. Chen, X.; Sheiati, S.; Shihavuddin, A. AQUADA PLUS: Automated damage inspection of cyclic-loaded large-scale composite structures using thermal imagery and computer vision. Compos. Struct. 2023, 318, 117085.

18. de Oliveira, A.K.V.; Bracht, M.K.; Aghaei, M.; Rüther, R. Automatic fault detection of utility-scale photovoltaic solar generators applying aerial infrared thermography and orthomosaicking. Sol. Energy 2023, 252, 272–283.

19. Attallah, O.; Ibrahim, R.A.; Zakzouk, N.E. CAD system for inter-turn fault diagnosis of offshore wind turbines via multi-CNNs & feature selection. Renew. Energy 2023, 203, 870–880.

20. Shao, H.; Li, W.; Cai, B.; Wan, J.; Xiao, Y.; Yan, S. Dual-threshold attention-guided GAN and limited infrared thermal images for rotating machinery fault diagnosis under speed fluctuation. IEEE Trans. Ind. Inform. 2023, 19, 9933–9942.

21. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

22. Tanda, G.; Migliazzi, M. Infrared thermography monitoring of solar photovoltaic systems: A comparison between UAV and aircraft remote sensing platforms. Therm. Sci. Eng. Prog. 2024, 48, 102379.

23. Petersen, C.R.; Fæster, S.; Bech, J.I.; Jespersen, K.M.; Israelsen, N.M.; Bang, O. Non-destructive and contactless defect detection inside leading edge coatings for wind turbine blades using mid-infrared optical coherence tomography. Wind Energy 2023, 26, 458–468.

24. Zhao, X.; Zhao, Y.; Hu, S.; Wang, H.; Zhang, Y.; Ming, W. Progress in Active Infrared Imaging for Defect Detection in the Renewable and Electronic Industries. Sensors 2023, 23, 8780.

25. Wang, Z.; Jiang, N.; Wen, R.; Sun, B. The Segmentation of Wind Turbine Defect Based on UAV Infrared Image. In Proceedings of the 4th Asian Quantitative InfraRed Thermography Conference, Abu Dhabi, United Arab Emirates, 30 October–3 November 2023.

26. Li, X.; He, Y.; Wang, H.; Sun, G.; Yu, J.; Du, X.; Hu, M.; Wang, Y. Thermal Inspection of Subsurface Defects in Wind Turbine Blade Segments Under the Natural Solar Condition. IEEE Trans. Ind. Electron. 2023, 1, 11488–11497.

27. Zhou, W.; Wang, Z.; Zhang, M.; Wang, L. Wind Turbine Actual Defects Detection Based on Visible and Infrared Image Fusion. IEEE Trans. Instrum. Meas. 2023, 72, 3509208.

28. Zheng, S.; Li, L.; Chu, N.; Zhou, C.; Mohammad-Djafari, A. Abnormal temperature detection of blower components based on infrared video images analysis. IEEE Sens. J. 2024, 24, 1919–1928.

29. Jia, X.; Chen, X. AI-based optical-thermal video data fusion for near real-time blade segmentation in normal wind turbine operation. Eng. Appl. Artif. Intell. 2024, 127, 107325.

30. Sheiati, S.; Chen, X. Deep learning-based fatigue damage segmentation of wind turbine blades under complex dynamic thermal backgrounds. Struct. Health Monit. 2024, 23, 539–554.

31. Du, Y.; Zhou, S.; Jing, X.; Peng, Y.; Wu, H.; Kwok, N. Damage detection techniques for wind turbine blades: A review. Mech. Syst. Signal Process. 2020, 141, 106445.

32. Aminzadeh, A.; Dimitrova, M.; Meiabadi, M.S.; Karganroudi, S.S.; Taheri, H.; Ibrahim, H.; Wen, Y. Non-Contact Inspection Methods for Wind Turbine Blade Maintenance: Techno—Economic Review of Techniques for Integration with Industry 4.0. J. Nondestruct. Eval. 2023, 42, 54.

33. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

34. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

35. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

36. Shen, F.; Zeng, G. Weighted residuals for very deep networks. In Proceedings of the 2016 3rd International Conference on Systems and Informatics (ICSAI), Shanghai, China, 19–21 November 2016; pp. 936–941.

37. Wang, C.Y.; Liao, H.Y.M.; Wu, I.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

38. Yao, Z.; Cao, Y.; Zheng, S.; Huang, G.; Lin, S. Cross-Iteration Batch Normalization. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 12326–12335.

39. Ultralytics. YOLOv5: Object Detection at 640 × 640. Online Resource. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 20 January 2025).

40. Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114.

41. Li, C.; Wang, X.; Zhang, Y.; Chen, H.; Liu, J.; Zhao, T.; Xu, F.; Yang, L.; Sun, Q.; Wu, M. YOLOv6: An Incremental Improvement of YOLOv5 for Object Detection. Online Resource. 2023. Available online: https://github.com/meituan/YOLOv6 (accessed on 1 May 2024).

42. Reis, D.; Kupec, J.; Hong, J.; Daoudi, A. Real-Time Flying Object Detection with YOLOv8. arXiv 2023, arXiv:2305.09972.

43. Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

44. Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

45. Ultralytics. Ultralytics Version 8.2.0. 2024. Available online: https://github.com/ultralytics (accessed on 1 May 2024).

46. Lin, T.Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2014, arXiv:1405.0312.

| Model | Key Features | Advantages | Limitations |
|---|---|---|---|
| YOLOv1 | Single regression problem for object detection [14] | Fast processing speed, real-time detection | Lower accuracy compared to later versions |
| YOLOv2 | Batch normalization, high-resolution classifiers, new convolutional architecture [33] | Improved accuracy over YOLOv1, retains high speed | Still limited in handling small objects |
| YOLOv3 | Multi-scale predictions, deeper feature extractor (Darknet-53) [34] | Better detection across various object sizes, improved balance of speed and accuracy | Increased computational complexity |
| YOLOv4 | Weighted Residual Connections (WRCs), Cross-Stage Partial (CSP) connections, Cross Mini-Batch Normalization (CmBN) [35] | Enhanced robustness and generalization, effective across diverse scenarios | Larger model size |
| YOLOv5 | Adaptive anchor box calculation, compound scaling, extensive data augmentation [39] | Flexible architecture, ease of use, widely adopted | Proprietary modifications, unofficial continuation |
| YOLOv6 | Optimized network structure for real-time performance [41] | Improved speed–accuracy trade-off, efficient for real-time applications | Primarily focused on optimizing existing architectures |
| YOLOv7 | Improved feature extraction and fusion techniques [43] | Higher detection precision, better performance in complex environments | Incremental improvements over YOLOv6 |
| YOLOv8 * | FPN and PAN architectures, transfer learning [42] | High accuracy, better handling of small objects, improved contextual feature information | Higher training complexity, requires fine-tuning |
| YOLOv9 * | Programmable Gradient Information (PGI), Generalized Efficient Layer Aggregation Network (GELAN) [44] | Advanced gradient handling, optimal balance of accuracy and speed, lightweight | Newer model, requires more testing and validation |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Memari, M.; Shekaramiz, M.; Masoum, M.A.S.; Seibi, A.C. Enhanced Non-Destructive Testing of Small Wind Turbine Blades Using Infrared Thermography. Machines 2025, 13, 108. https://doi.org/10.3390/machines13020108

Memari M, Shekaramiz M, Masoum MAS, Seibi AC. Enhanced Non-Destructive Testing of Small Wind Turbine Blades Using Infrared Thermography. Machines. 2025; 13(2):108. https://doi.org/10.3390/machines13020108

Chicago/Turabian Style: Memari, Majid, Mohammad Shekaramiz, Mohammad A. S. Masoum, and Abdennour C. Seibi. 2025. "Enhanced Non-Destructive Testing of Small Wind Turbine Blades Using Infrared Thermography" Machines 13, no. 2: 108. https://doi.org/10.3390/machines13020108

APA Style: Memari, M., Shekaramiz, M., Masoum, M. A. S., & Seibi, A. C. (2025). Enhanced Non-Destructive Testing of Small Wind Turbine Blades Using Infrared Thermography. Machines, 13(2), 108. https://doi.org/10.3390/machines13020108