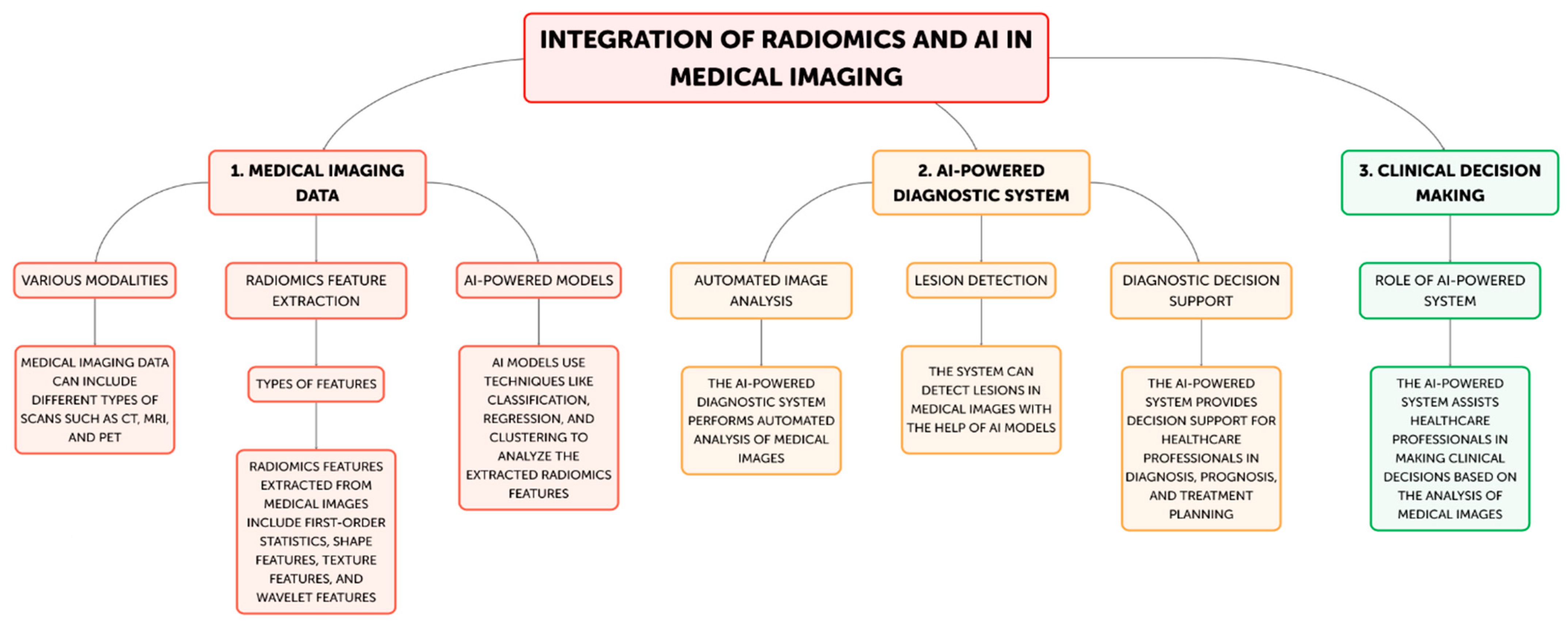

The Integration of Radiomics and Artificial Intelligence in Modern Medicine

Abstract

1. Introduction

Aim of the Review

2. Materials and Methods

3. Results

4. Radiomics and Its Involvement of Quantitative Feature Extraction from Medical Images

4.1. Introduction

4.2. Discussion

4.3. Conclusions

5. Machine Learning, Deep Learning and Computer-Aided Diagnostic (CAD) Systems Approaches in Radiomics

5.1. Introduction

5.2. Discussion

5.3. Conclusions

6. The Effect of Radiomics and AI on Improving Workflow Automation and Efficiency, Optimizing Clinical Trials, and Patient Stratification

6.1. Introduction

6.2. Discussion

6.3. Conclusions

7. Predictive Modeling Improvement by Machine Learning in Radiomics

7.1. Introduction

7.2. Discussion

7.3. Conclusions

8. Multimodal Integration and Enhanced Deep Learning Architectures in Radiomics

8.1. Introduction

8.2. Discussion

9. Regulatory and Clinical Adoption Considerations for Radiomics-Based CAD

9.1. Introduction

9.2. Discussion

9.3. Conclusions

10. Future Directions and Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Lambin, P.; Leijenaar, R.T.H.; Deist, T.M.; Peerlings, J.; de Jong, E.E.C.; van Timmeren, J.; Sanduleanu, S.; Larue, R.T.H.M.; Even, A.J.G.; Jochems, A.; et al. Radiomics: The bridge between medical imaging and personalized medicine. Nat. Rev. Clin. Oncol. 2017, 14, 749–762.

- Gillies, R.J.; Kinahan, P.E.; Hricak, H. Radiomics: Images are more than pictures, they are data. Radiology 2016, 278, 563–577.

- Aerts, H.J.; Velazquez, E.R.; Leijenaar, R.T.; Parmar, C.; Grossmann, P.; Carvalho, S.; Bussink, J.; Monshouwer, R.; Haibe-Kains, B.; Rietveld, D.; et al. Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nat. Commun. 2014, 5, 4006.

- Kumar, V.; Gu, Y.; Basu, S.; Berglund, A.; Eschrich, S.A.; Schabath, M.B.; Forster, K.; Aerts, H.J.; Dekker, A.; Fenstermacher, D.; et al. Radiomics: The process and the challenges. Magn. Reson. Imaging 2012, 30, 1234–1248.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Shen, D.; Wu, G.; Suk, H.I. Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248.

- Choy, G.; Khalilzadeh, O.; Michalski, M.; Do, S.; Samir, A.E.; Pianykh, O.S.; Geis, J.R.; Pandharipande, P.V.; Brink, J.A.; Dreyer, K.J. Current applications and future impact of machine learning in radiology. Radiology 2018, 288, 318–328.

- Liu, X.; Faes, L.; Kale, A.U.; Wagner, S.K.; Fu, D.J.; Bruynseels, A.; Mahendiran, T.; Moraes, G.; Shamdas, M.; Kern, C.; et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: A systematic review and meta-analysis. Lancet Digit. Health 2019, 1, e271–e297.

- Parekh, V.; Jacobs, M.A. Radiomics: A new application from established techniques. Expert Rev. Precis. Med. Drug Dev. 2016, 1, 207–226.

- Kickingereder, P.; Bickelhaupt, S.; Laun, F.B.; Stieltjes, B.; Shiee, N. Radiomic profiling of glioblastoma: Identifying an imaging predictor of patient survival with improved performance over established clinical and radiologic risk models. Radiology 2016, 280, 880–889.

- Parmar, C.; Grossmann, P.; Bussink, J.; Lambin, P.; Aerts, H.J. Machine learning methods for quantitative radiomic biomarkers. Sci. Rep. 2015, 5, 13087.

- Antropova, N.; Huynh, B.Q.; Giger, M.L. A deep feature fusion methodology for breast cancer diagnosis demonstrated on three imaging modality datasets. Med. Phys. 2017, 44, 5162–5171.

- Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H.J. Artificial intelligence in radiology. Nat. Rev. Cancer 2018, 18, 500–510.

- Wang, G.; Gu, Z.; Khanal, S.; Zhang, J.; Hu, X. Radiomics and deep learning in clinical imaging: A perfect marriage? Cancer Lett. 2020, 471, 1–12.

- Huang, Y.Q.; Liang, C.H.; He, L.; Tian, J.; Liang, C.S.; Chen, X.; Ma, Z.L.; Liu, Z.Y. Development and validation of a radiomics nomogram for preoperative prediction of lymph node metastasis in colorectal cancer. J. Clin. Oncol. 2016, 34, 2157–2164.

- Buvat, I.; Orlhac, F.; Soussan, M. Tumor texture analysis in PET: Where do we stand? J. Nucl. Med. 2015, 56, 1642–1644.

- Nyflot, M.J.; Yang, F.; Byrd, D.; Bowen, S.R.; Sandison, G.A.; Kinahan, P.E. Quantitative radiomics: Impact of stochastic effects on textural feature analysis implies the need for standards. J. Med. Imaging 2015, 2, 041002.

- Leijenaar, R.T.; Carvalho, S.; Velazquez, E.R.; Van Elmpt, W.J.; Parmar, C.; Hoekstra, O.S.; Hoekstra, C.J.; Boellaard, R.; Dekker, A.L.; Gillies, R.J.; et al. Stability of FDG-PET Radiomics features: An integrated analysis of test-retest and inter-observer variability. Acta Oncol. 2013, 52, 1391–1397.

- Coudray, N.; Ocampo, P.S.; Sakellaropoulos, T.; Narula, N.; Snuderl, M.; Fenyö, D.; Moreira, A.L.; Razavian, N.; Tsirigos, A. Classification and mutation prediction from non-small cell lung cancer histopathology images using deep learning. Nat. Med. 2018, 24, 1559–1567.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

- Buda, M.; Maki, A.; Mazurowski, M.A. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw. 2019, 106, 249–259.

- Lakhani, P.; Sundaram, B. Deep learning at chest radiography: Automated classification of pulmonary tuberculosis by using convolutional neural networks. Radiology 2017, 284, 574–582.

- Prevedello, L.M.; Erdal, B.S.; Ryu, J.L.; Little, K.J.; Demirer, M.; Qian, S.; White, R.D. Automated critical test findings identification and online notification system using artificial intelligence in imaging. Radiology 2017, 285, 923–931.

- Van Riel, S.J.; Sánchez, C.I.; Bankier, A.A.; Naidich, D.P.; Verschakelen, J.; Scholten, E.T.; de Jong, P.A.; Jacobs, C.; van Rikxoort, E.; Peters-Bax, L.; et al. Observer variability for classification of pulmonary nodules on low-dose CT images and its effect on nodule management. Radiology 2015, 277, 863–871.

- Rodriguez-Ruiz, A.; Lång, K.; Gubern-Merida, A.; Broeders, M.; Gennaro, G.; Clauser, P.; Helbich, T.H.; Chevalier, M.; Tan, T.; Mertelmeier, T.; et al. Stand-Alone Artificial Intelligence for Breast Cancer Detection in Mammography: Comparison with 101 Radiologists. J. Natl. Cancer Inst. 2019, 111, 916–922.

- Langlotz, C.P.; Allen, B.; Erickson, B.J.; Kalpathy-Cramer, J.; Bigelow, K.; Cook, T.S.; Flanders, A.E.; Lungren, M.P.; Mendelson, D.S.; Rudie, J.D.; et al. A Roadmap for Foundational Research on Artificial Intelligence in Medical Imaging: From the 2018 NIH/RSNA/ACR/The Academy Workshop. Radiology 2019, 291, 781–791.

- Trivizakis, E.; Manikis, G.C.; Nikiforaki, K.; Drevelegas, K.; Constantinides, M.; Drevelegas, A.; Marias, K. Extending 2-D Convolutional Neural Networks to 3-D for Advancing Deep Learning Cancer Classification with Application to MRI Liver Tumor Differentiation. IEEE J. Biomed. Health Inform. 2020, 24, 840–850.

- Sun, R.; Limkin, E.J.; Vakalopoulou, M.; Dercle, L.; Champiat, S.; Han, S.R.; Verlingue, L.; Brandao, D.; Lancia, A.; Ammari, S.; et al. A radiomics approach to assess tumour-infiltrating CD8 cells and response to anti-PD-1 or anti-PD-L1 immunotherapy: An imaging biomarker, retrospective multicohort study. Lancet Oncol. 2020, 21, 1433–1442.

- Kickingereder, P.; Isensee, F.; Tursunova, I.; Petersen, J.; Neuberger, U.; Bonekamp, D.; Brugnara, G.; Schell, M.; Kessler, T.; Foltyn, M.; et al. Automated quantitative tumour response assessment of MRI in neuro-oncology with artificial neural networks: A multicentre, retrospective study. Lancet Oncol. 2019, 20, 728–740.

- Zhu, Y. Radiomic analysis of contrast-enhanced CT predicts response to neoadjuvant chemotherapy in breast cancer: A multi-institutional study. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 79–88.

- Traverso, A.; Wee, L.; Dekker, A.; Gillies, R. Reproducibility and Replicability of Radiomic Features: A Systematic Review. Int. J. Radiat. Oncol. Biol. Phys. 2018, 102, 1143–1158.

- Sanfilippo, F.; La Via, L.; Dezio, V.; Amelio, P.; Genoese, G.; Franchi, F.; Messina, A.; Robba, C.; Noto, A. Inferior vena cava distensibility from subcostal and trans-hepatic imaging using both M-mode or artificial intelligence: A prospective study on mechanically ventilated patients. Intensive Care Med. Exp. 2023, 11, 40.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

- La Via, L.; Sangiorgio, G.; Stefani, S.; Marino, A.; Nunnari, G.; Cocuzza, S.; La Mantia, I.; Cacopardo, B.; Stracquadanio, S.; Spampinato, S.; et al. The Global Burden of Sepsis and Septic Shock. Epidemiologia 2024, 5, 456–478.

- Lao, J.; Chen, Y.; Li, Z.C.; Li, Q.; Zhang, J.; Liu, J.; Zhai, G. A deep learning-based radiomics model for prediction of survival in glioblastoma multiforme. Sci. Rep. 2020, 10, 10764.

- Wu, J.; Sun, X.; Wang, J.; Cui, Y.; Kato, F.; Shirato, H.; Ikeda, D.M.; Li, R. Identifying relations between imaging phenotypes and molecular subtypes of breast cancer: Model discovery and external validation. J. Magn. Reson. Imaging 2019, 50, 1017–1026.

- Parmar, C.; Grossmann, P.; Rietveld, D.; Rietbergen, M.M.; Lambin, P.; Aerts, H.J.W.L. Radiomic Machine-Learning Classifiers for Prognostic Biomarkers of Head and Neck Cancer. Front. Oncol. 2018, 8, 227.

- Hosny, A.; Parmar, C.; Coroller, T.P.; Grossmann, P.; Zeleznik, R.; Kumar, A.; Bussink, J.; Gillies, R.J.; Mak, R.H.; Aerts, H.J.W.L. Deep learning for lung cancer prognostication: A retrospective multi-cohort radiomics study. PLoS Med. 2020, 17, e1003350.

- Sheller, M.J.; Edwards, B.; Reina, G.A.; Martin, J.; Pati, S.; Kotrotsou, A.; Milchenko, M.; Xu, W.; Marcus, D.; Colen, R.R.; et al. Federated learning in medicine: Facilitating multi-institutional collaborations without sharing patient data. Sci. Rep. 2020, 10, 12598.

- Gao, J. Attention-based deep neural network for prostate cancer Gleason grade classification from MRI. Med. Phys. 2021, 48, 3875–3885.

- Lundberg, S.M.; Erion, G.; Chen, H.; DeGrave, A.; Prutkin, J.M.; Nair, B.; Katz, R.; Himmelfarb, J.; Bansal, N.; Lee, S.-I. From local explanations to global understanding with explainable AI for trees. Nat. Mach. Intell. 2020, 2, 56–67.

- Baumgartner, C.F.; Koch, L.M.; Tezcan, K.C.; Ang, J.X.; Konukoglu, E. Visual feature attribution using Wasserstein GANs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8309–8319.

- Park, J.E. External Validation of a Radiomics Model for the Prediction of Recurrence-Free Survival in Nasopharyngeal Carcinoma. Cancers 2020, 12, 2423.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. IEEE Trans. Med. Imaging 2019, 38, 1435–1446.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ardila, D.; Kiraly, A.P.; Bharadwaj, S.; Choi, B.; Reicher, J.J.; Peng, L.; Tse, D.; Etemadi, M.; Ye, W.; Corrado, G.; et al. End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat. Med. 2019, 25, 954–961.

- Wolterink, J.M.; Leiner, T.; Viergever, M.A.; Išgum, I. Generative Adversarial Networks for Noise Reduction in Low-Dose CT. IEEE Trans. Med. Imaging 2017, 36, 2536–2545.

- Hatamizadeh, A.; Tang, Y.; Nath, V.; Yang, D.; Myronenko, A.; Landman, B.; Roth, H.R.; Xu, D. UNETR: Transformers for 3D Medical Image Segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 574–584.

- Xu, Y.; Hosny, A.; Zeleznik, R.; Parmar, C.; Coroller, T.; Franco, I.; Mak, R.H.; Aerts, H.J. Deep Learning Predicts Lung Cancer Treatment Response from Serial Medical Imaging. Clin. Cancer Res. 2019, 25, 3266–3275.

- Nie, D.; Lu, J.; Zhang, H.; Adeli, E.; Wang, J.; Yu, Z.; Liu, L.; Wang, Q.; Wu, J.; Shen, D. Multi-Channel 3D Deep Feature Learning for Survival Time Prediction of Brain Tumor Patients Using Multi-Modal Neuroimages. Sci. Rep. 2019, 9, 1103.

- Gao, K.; Su, J.; Jiang, Z.; Zeng, L.L.; Feng, Z.; Shen, H.; Rong, P.; Xu, X.; Qin, J.; Yang, Y.; et al. Dual-Branch Combination Network (DCN): Towards Accurate Diagnosis and Lesion Segmentation of COVID-19 Using CT Images. Med. Image Anal. 2021, 67, 101836.

- Lundberg, S.M.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 4765–4774.

- Binder, A. Explainable AI for Breast Cancer Detection in Mammography: A Retrospective Cohort Study. Radiology 2021, 301, 695–704.

- Cheplygina, V.; De Bruijne, M.; Pluim, J.P. Not-so-supervised: A survey of semi-supervised, multi-instance, and transfer learning in medical image analysis. Med. Image Anal. 2019, 54, 280–296.

- Yala, A.; Mikhael, P.G.; Strand, F.; Lin, G.; Smith, K.; Wan, Y.L.; Lamb, L.; Hughes, K.; Lehman, C.; Barzilay, R. Toward robust mammography-based models for breast cancer risk. Sci. Transl. Med. 2021, 13, eaba4373.

- Kourou, K.; Exarchos, T.P.; Exarchos, K.P.; Karamouzis, M.V.; Fotiadis, D.I. Machine learning applications in cancer prognosis and prediction. Comput. Struct. Biotechnol. J. 2015, 13, 8–17.

- Elhalawani, H.; Lin, T.A.; Volpe, S.; Chaovalitwongse, W.A.; Mohamed, A.S.; Balachandran, A.; Lai, S.Y. Radiomics and radiogenomics in head and neck oncology. Neuroimaging Clin. 2018, 28, 397–409.

- Kickingereder, P.; Bonekamp, D.; Nowosielski, M.; Kratz, A.; Sill, M.; Burth, S.; Wick, W. Radiogenomic profiling of glioblastoma: Identifying prognostic imaging biomarkers by multiparametric, multiregional analysis. Neuro-Oncology 2016, 18, 392–401.

- Grossmann, P.; Stringfield, O.; El-Hachem, N.; Bui, M.M.; Rios Velazquez, E.; Parmar, C.; Leijenaar, R.T.; Haibe-Kains, B.; Lambin, P.; Gillies, R.J.; et al. Defining the biological basis of radiomic phenotypes in lung cancer. eLife 2017, 6, e23421.

| Study | Imaging Modality; Cancer Type | AI Technique | Radiomic Outcomes | Values | Advantages | Limitations |
|---|---|---|---|---|---|---|
| Aerts et al. (2014) [3] | CT; Lung, Head and Neck | Radiomics, Machine Learning | Tumor phenotyping, Prognostic modeling | Quantitative imaging features associated with tumor genotype and clinical outcomes | Noninvasive, reproducible assessment of tumor characteristics | Requires large, well-annotated datasets for model training |
| Parmar et al. (2015) [11] | CT; Lung | Radiomics, Machine Learning | Quantitative radiomic biomarkers | Identification of robust radiomic features predictive of clinical outcomes | Potential for risk stratification and personalized treatment planning | Feature selection and model validation remain challenging |
| Antropova et al. (2017) [12] | Mammography, Ultrasound, MRI; Breast | Deep Feature Fusion | Breast cancer diagnosis | Improved breast cancer detection and characterization using multimodal imaging data | Leverages complementary information from different imaging modalities | Computational complexity and interpretability of deep learning models |
| Hosny et al. (2018) [13] | Multiple | AI in Radiology | Potential of AI in radiology, challenges | Improved accuracy, efficiency, and consistency in medical image analysis | Opportunity to transform radiology practice and patient care | Concerns about data privacy, algorithmic bias, and ethical considerations |
| Wang et al. (2020) [14] | Multiple | Radiomics, Deep Learning | Synergy of radiomics and deep learning | Leveraging the complementary strengths of radiomics and deep learning for clinical decision-making | Potential to enhance personalized medicine and precision diagnostics | Need for standardized protocols and interpretable hybrid models |
| Huang et al. (2016) [15] | CT; Colorectal | Radiomics | Lymph node metastasis prediction in colorectal cancer | Preoperative prediction of lymph node involvement to guide treatment planning | Potential to improve surgical decision-making and avoid unnecessary procedures | Retrospective study design and need for prospective validation |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Maniaci, A.; Lavalle, S.; Gagliano, C.; Lentini, M.; Masiello, E.; Parisi, F.; Iannella, G.; Cilia, N.D.; Salerno, V.; Cusumano, G.; et al. The Integration of Radiomics and Artificial Intelligence in Modern Medicine. Life 2024, 14, 1248. https://doi.org/10.3390/life14101248