Abstract

We present an explainable artificial intelligence methodology for predicting mortality in patients. We combine clinical data from an electronic patient healthcare record system with factors relevant for severe mental illness and then apply machine learning. The machine learning model is used to predict mortality in patients with severe mental illness. Our methodology uses class-contrastive reasoning. We show how machine learning scientists can use class-contrastive reasoning to generate complex explanations that explain machine model predictions and data. An example of a complex class-contrastive explanation is the following: “The patient is predicted to have a low probability of death because the patient has self-harmed before, and was at some point on medications such as first-generation and second-generation antipsychotics. There are 11 other patients with these characteristics. If the patient did not have these characteristics, the prediction would be different”. This can be used to generate new hypotheses, which can be tested in follow-up studies. Diuretics seemed to be associated with a lower probability of mortality (as predicted by the machine learning model) in a group of patients with cardiovascular disease. The combination of delirium and dementia in Alzheimer’s disease may also predispose some patients towards a higher probability of predicted mortality. Our technique can be employed to create intricate explanations from healthcare data and possibly other areas where explainability is important. We hope this will be a step towards explainable AI in personalized medicine.

1. Introduction

Artificial intelligence and machine learning have become pervasive in healthcare. Unfortunately, many machine learning methods cannot explain why they made a particular prediction. Explainability is very important in critical domains like healthcare.

Black-box AI algorithms usually have high accuracy but are not explainable. Hence, there is a big research gap in explainable AI algorithms. We developed an explainable AI algorithm for black box models. We applied this to a practical problem of mortality in patients with severe mental illnesses.

In this work, we show how machine learning scientists can produce complex explanations from data and models. The complex explanations are generated based on class-contrastive reasoning [1,2]. An example of a class-contrastive explanation is: “This patient is predicted to be in a severely ill category because age is greater than 90 and the patient has a serious disease. If the age was less than 60, then the prediction would have been different”.

In a previous work [2], we applied class-contrastive analysis to explain machine learning models. Here, we extend this framework and show how machine learning scientists can generate more complex explanations from machine learning models.

We applied these techniques to generate complex explanations from the machine learning model. The machine learning model predicted mortality in patients with severe mental illness (SMI), which is of great public health significance [3]. It is difficult to predict who is at the greatest risk, and therefore who might benefit from interventions and treatments.

The research questions we aimed to address are the following: 1. create a supervised machine learning algorithm to predict mortality in patients with severe mental illness; 2. generate complex explanations to explain the predictions made by the machine learning algorithm.

Our main contribution is to develop a new explainable AI technique to generate complex explanations from black-box machine learning models. We then apply this to the practical problem of predicting mortality in patients with severe mental illness.

Our approach of generating complex explanations may be more broadly applicable to healthcare data and potentially other fields.

2. Data and Methods

2.1. Overview of Methods

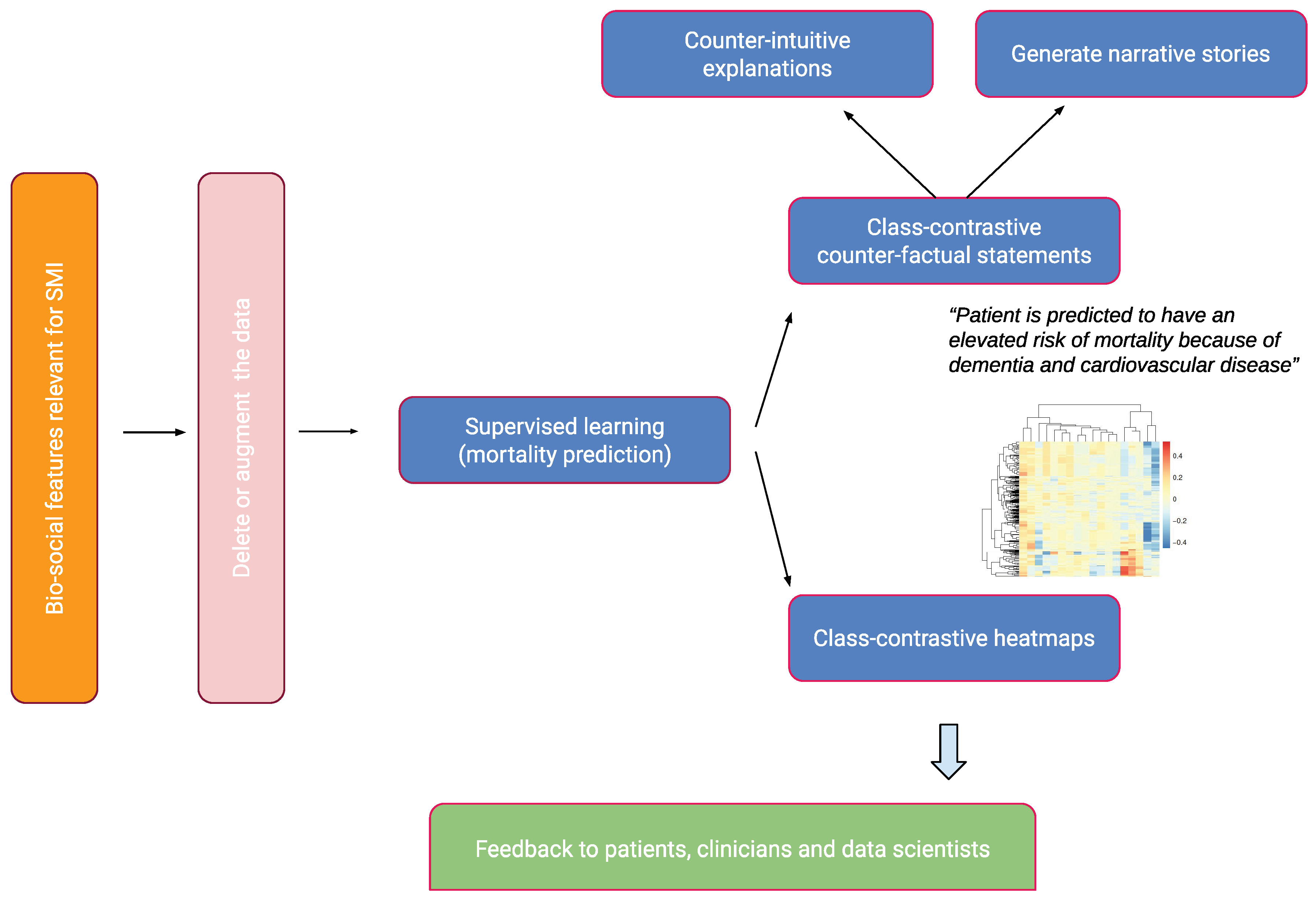

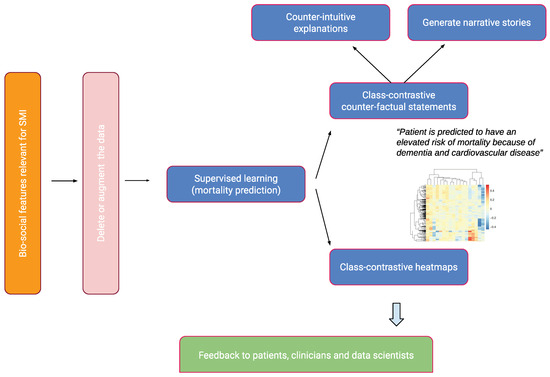

We give a brief overview of our approach in this section. This is summarized in Figure 1.

Figure 1.

An overview of the approach. Bio-social factors associated with severe mental illnesses (SMIs) come from electronic health records systems and are used to input statistical and machine learning algorithms. These models can be explained with class contradiction reasoning. Machine learning scientists use class-contrastive reasoning to generate complex explanations from data and models. Class-contrastive textual statements and heatmaps help domain experts (such as clinicians, patients, and data scientists) understand black-box models.

- We took de-identified data from an electronic patient record system for mental health.

- We established a collection of features that are not dependent on time, such as age, diagnosis categories, medication categories, and bio-social elements that are essential in severe mental illness (SMI). These features included data on mental health, personal history (e.g., previous suicide attempts and substance abuse), and social predisposing factors (e.g., lack of family support).

- We used these features to predict death during the time of observation.

- We then fit machine learning models and classical statistical models.

- We generated class-contrastive heatmaps to illustrate the explanations of statistical models and machine learning predictions, as well as accompanying statements to facilitate human understanding.

- Machine learning scientists can use these class-contrastive heatmaps to create complex explanations.

We employed artificial neural networks (autoencoders) to predict mortality for patients with schizophrenia. We then constructed a random forest model on top of the autoencoders to predict mortality. The random forest model utilized the reduced dimensions of the autoencoder as features to predict mortality. We conducted class-contrastive analysis for these machine learning models to make them interpretable.

2.2. Mental Health Clinical Record Database

We used data from the Cambridgeshire and Peterborough NHS Foundation Trust (CPFT) Research Database. This comprised electronic records from CPFT, the single provider of secondary care mental health services for Cambridgeshire. The records were de-identified using CRATE software v0.19.1 [4] under NHS Research Ethics approval (12/EE/0407, 17/EE/0442).

Data included patient demographics, mental health and physical co-morbidity diagnosis (via coded ICD-10 diagnosis, and analysis of free text through natural language processing [NLP] tools [5]).

Dates of death were derived from the National Health Service (NHS). There were a total of 1706 patients diagnosed with schizophrenia defined by coded ICD-10 diagnosis. We note that there was under-coding of schizophrenia diagnoses. We included all patients referred to secondary mental healthcare in CPFT from 2013 onward with a coded diagnosis of schizophrenia.

We extracted medicine information for each patient using natural language processing (using the GATE v8.6.1 software [5,6]) on clinical freetext data.

2.3. Data Input to Statistical Algorithms

The features that were used as input to our statistical and machine learning algorithms were age, gender, social predisposing factors, high-level diagnosis categories, and high-level medication categories. These included a range of bio-social factors that are important in SMI. All these features were used to predict mortality. The full list of features was as follows:

- High-level medication categories were created based on domain-specific knowledge from a clinician. These medication categories were as follows: second-generation antipsychotics (SGA: clozapine, olanzapine, risperidone, quetiapine, aripiprazole, asenapine, amisulpride, iloperidone, lurasidone, paliperidone, sertindole, sulpiride, ziprasidone, zotepine), first-generation antipsychotics (FGA: haloperidol, benperidol, chlorpromazine, flupentixol, fluphenazine, levomepromazine, pericyazine, perphenazine, pimozide, pipotiazine, prochlorperazine, promazine, trifluoperazine, zuclopenthixol), antidepressants (agomelatine, amitriptyline, bupropion, clomipramine, dosulepin, doxepin, duloxetine, imipramine, isocarboxazid, lofepramine, maprotiline, mianserin, mirtazapine, moclobemide, nefazodone, nortriptyline, phenelzine, reboxetine, tranylcypromine, trazodone, trimipramine, tryptophan, sertraline, citalopram, escitalopram, fluoxetine, fluvoxamine, paroxetine, vortioxetine and venlafaxine), diuretics (furosemide), thyroid medication (drug mention of levothyroxine), antimanic drugs (lithium), and medications for dementia (memantine and donepezil).

- Relevant co-morbidities we included were diabetes (inferred from ICD-10 codes E10, E11, E12, E13, and E14, and any mentions of the drugs metformin and insulin), cardiovascular diseases (inferred from ICD-10 diagnoses codes I10, I11, I26, I82, G45, and drug mentions of atorvastatin, simvastatin, and aspirin), respiratory illnesses (J44 and J45) and anti-hypertensives (mentions of drugs bisoprolol and amlodipine).

- We included all patients with a coded diagnosis of schizophrenia (F20). For these patients with schizophrenia, we also included any additional coded diagnosis from the following broad diagnostic categories: dementia in Alzheimer’s disease (ICD-10 code starting F00), delirium (F05), mild cognitive disorder (F06.7), depressive disorders (F32, F33), and personality disorders (F60).

- We also included relevant social factors: lack of family support (ICD-10 chapter code Z63) and personal risk factors (Z91: a code encompassing allergies other than to drugs and biological substances, medication noncompliance, a history of psychological trauma, and unspecified personal risk factors); alcohol and substance abuse (this was inferred from ICD-10 coded diagnoses of Z86, F10, F12, F17, F19, and references to thiamine, which is prescribed for alcohol abuse). Other features included were self-harm (ICD-10 codes T39, T50, X60, X61, X62, X63, X64, X78, and Z91.5), non-compliance and personal risk factors (Z91.1), referral to a crisis team at CPFT (recorded in the electronic healthcare record system), and any prior suicide attempts (in the last 6 months or any time in the past) coded in structured risk assessments.

These broad categories constituted our domain expert (clinician) based knowledge representation. We used these features to predict whether a patient had died during the time period observed (from first referral to CPFT to the present day). We note that we did not attempt to predict the risk of dying in a specific time period, for example in the next 1 year or in the next 90 days.

These data structures captured different information about the patient related to mental health, physical health, social factors, and predisposing factors.

2.4. Data Pre-Processing

Diagnosis codes were based on the International Classification of Diseases (ICD-10) coding system [7]. Age of patients was normalized (feature scaled). All categorical variables like diagnosis and medications (described above) were converted using a one-hot encoding scheme.

2.5. Machine Learning and Statistical Techniques

We used age (feature scaled) as a continuous predictor. There are a large number of categorical features (medications, co-morbidities, and other social and personal predisposing factors) that were encoded using one-hot representation.

We performed logistic regression using generalized linear models [8,9].

We also used artificial neural networks called autoencoders to integrate data from different sources, giving a holistic picture of the mental health, physical health, and social factors contributing to mortality in SMI [2].

Artificial neural networks are composed of computational nodes (artificial neurons) that are connected to form a network. Each node carries out a basic computation (like logistic regression). The nodes are arranged in layers. The input layer takes in the input features, transforms them, and passes them to one or more intermediate layers known as hidden layers. The output layer is used to generate a prediction (mortality).

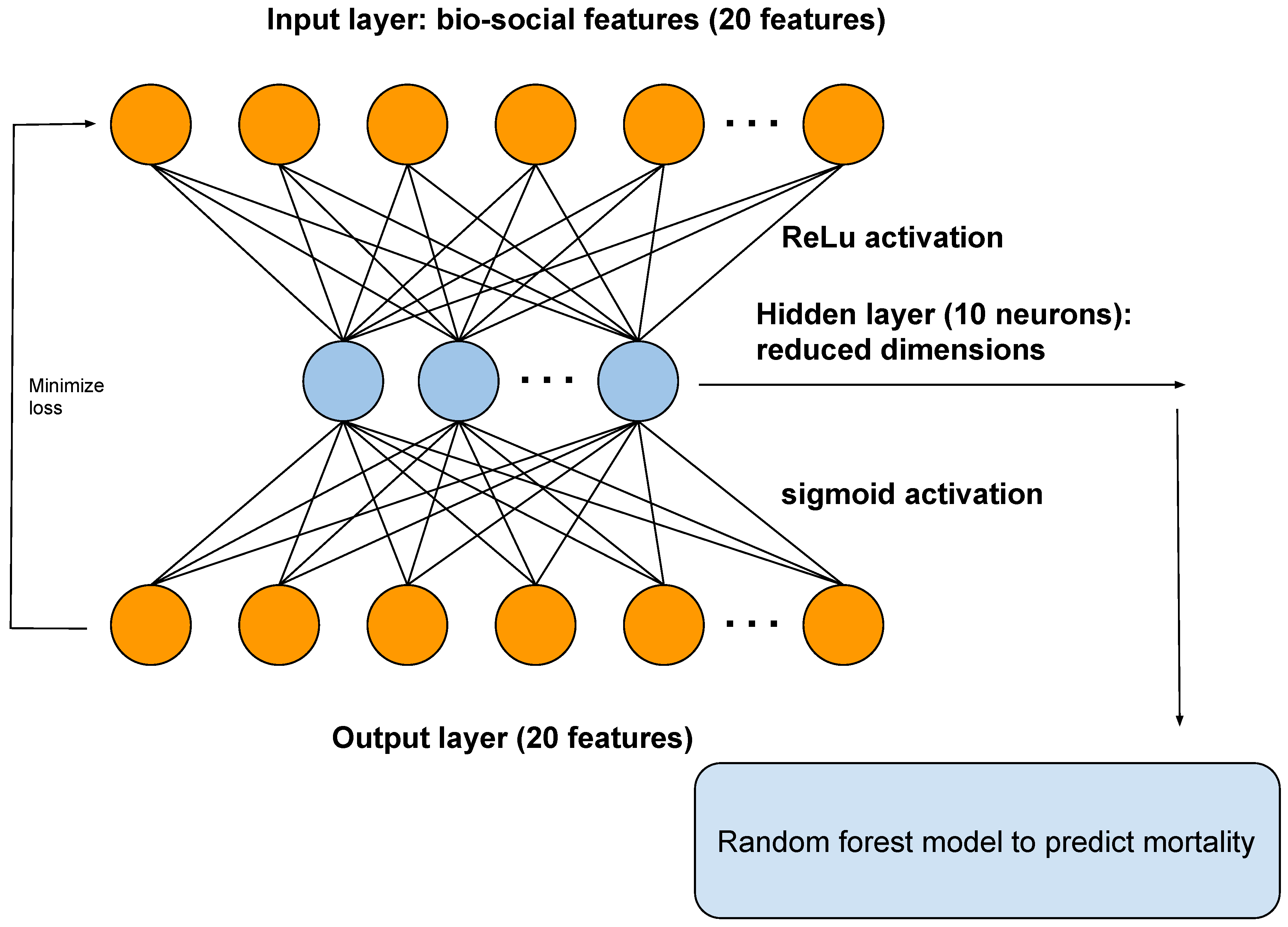

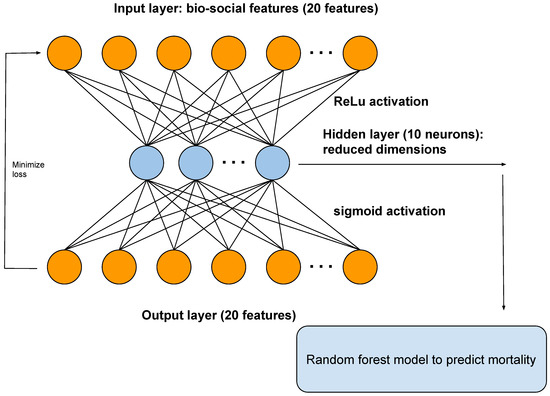

An autoencoder is a type of artificial neural network that reduces the number of neurons in the hidden layer compared to the input layer, thus performing dimensionality reduction. In our framework, the output of the hidden layer of the autoencoder is used as input to a random forest model (Figure 2).

Figure 2.

A diagram showing the architecture of the autoencoder. The autoencoder takes as input the bio-social features relevant for severe mental illness (SMI). The output layer is used to reconstruct the input. The hidden layer of the autoencoder is used for dimensionality reduction and as input to a random forest model, to predict mortality.

Random forests are machine learning models that build collections of decision trees [10]. Each decision tree is a series of choices based on the features. These decision trees are combined to build a collection (forest) that together has better predictive power than a single decision tree.

We performed a 50–25–25% training–test–validation split of the data. We performed 10-fold cross-validation with regularization to penalize model complexity. Additional details are available in Section Machine learning methods and summarized in Figure 2.

To predict mortality, we used the following models:

- Logistic regression model with all original input features.

- All the original input features used as input for the autoencoder. The reduced dimensions from an autoencoder were then used as input to a random forest model (Figure 2).

Class-Contrastive Reasoning

We use class-contrastive reasoning and class-contrastive heatmaps [2] to make our models interpretable. The process involved training the model on the training set. For each patient in the test set, we altered each categorical feature (or a set of features) from 0 to 1 or yes to no. We then measured the change in the predicted mortality probability of the model (on the test set). Predictions were made using the trained machine learning model on the test set.

We repeated this procedure independently for each feature and each patient. We did not retrain the model when we mutated the features. The change in probability was then visualized as a heatmap (class-contrastive heatmap).

In a class-contrastive heatmap, the rows represent patients and the columns represent the combination of features that are altered at the same time.

We note that age was a factor (predictor) in all the models we used; however, the class-contrastive heatmaps did not take this into account. This is because class-contrastive analysis only works with categorical features, and so it is not possible to modify age. Therefore, the class-contrastive heatmaps demonstrated the effect of changing features on the model’s predicted probability of mortality, in addition to the contribution of age.

2.6. Machine Learning Methods

We used artificial neural networks to predict mortality in patients with severe mental illness, specifically schizophrenia. We used an autoencoder to integrate data from different sources, giving a holistic picture of mental health, physical health, and social factors contributing to mortality in SMI [2].

The input features were age (normalized), gender, diagnosis categories, lifestyle risk factors, social factors, and medication categories. Categorical features (like medication categories) were encoded using a one-hot representation. This involves taking a vector that is as long as the number of unique values of the feature. Each position on this vector corresponds to a unique value taken on by the categorical feature. Whenever a categorical feature takes on a particular value (say true), we place a 1 (hot) corresponding to that position on the vector and 0 elsewhere.

We show the architecture of the autoencoder in Figure 2. The autoencoder has one hidden layer of 10 neurons. The autoencoder takes as input the bio-social features. The output layer is then used to reconstruct the input. The hidden layer of the autoencoder is used for dimensionality reduction. We also used the hidden layer as input to a random forest model.

We fed the output of a neural network to a random forest model because a similar model was used for neurological diseases [11]. This combined the advantages of neural networks with random forests. We also experimented with alternate models and architectures (see the Section Results, Subsection Additional analysis with simpler models).

We performed a 50–25–25% training–test–validation split of the data. The autoencoder used a Kullback–Leibler loss function (discussed below). We used the keras package version 2.3.0 [12] with the Tensorflow backend [13].

An artificial feed-forward neural network optimizes a loss function of the form:

This is the negative log-likelihood. There are d data points. The i th data point has a label denoted by and input feature vector represented by . The weights of the artificial neural network are represented by a vector . is a regularization parameter to prevent overfitting and reduce model complexity. is determined by 10-fold cross-validation. Shown here is the norm of the parameter vector.

An activation function is used to project the input matrix (X) into another feature space using weights (W). These weights are determined using backpropagation.

We used a ReLU (rectified linear unit) activation function for the hidden layers. The form of the ReLU function is shown below:

We added an penalty term for the weights to perform regularization and performed 10-fold cross-validation to select the regularization parameter ().

The autoencoder used a Kullback–Leibler loss function, which is a measure of discrepancy between the input layer and reconstructed hidden layer.

where there are m features in the input layer. u represents the input layer and v represents the hidden layer. The layers are calculated by applying the appropriate activation functions.

We also experimented with a categorical-cross entropy loss function for the autoencoder (shown below) [11]. However, our results did not change significantly.

The final cost function is given below:

where the vector represents all the weights of the artificial neural network. There are m features in the input layer. u represents the input layer and v represents the hidden layer. is a regularization parameter which prevents overfitting.

We initialized weights of the artificial neural network according to the Xavier initialization scheme [14] and used the Adadelta method of optimization [15]. An artificial neural network was trained on the training data for a number of epochs. Our neural networks were trained for 1000 epochs, which was assessed as being sufficient to reach convergence.

2.7. Software

All software was written in the R 4.2.1 [16] and Python 3.9.12 programming languages. The deep learning algorithm was implemented using the keras package version 2.3.0 [12] with the Tensorflow backend [13]. Logistic regression was performed using the glm function in R [9,17]. Heatmaps were visualized using the pheatmap package version 1.0.12 [18]. All our software is freely available here: https://github.com/neelsoumya/machine_learning_advanced_complex_stories, accessed on 3 April 2024. Code to perform similar analysis on a publicly available dataset is available here [19]: https://github.com/neelsoumya/complex_stories_explanations, accessed on 3 April 2024.

3. Results

3.1. Class-Contrastive Reasoning and Modifying the Training Data to Generate Explanations

A machine learning algorithm can be explained by both how the model itself explains predictions and how it uses the idiosyncrasies in the data to derive explanatory power. We sought to answer the following question: from which training data examples does the ability to make a particular prediction come from? We removed specific training data examples to answer this question.

In the first instance, we used class-contrastive reasoning on a logistic regression model. The logistic regression model was trained on the training data. We then changed one feature at a time on the test data and recorded the change in the model prediction (on the test set). In this scenario, the model was not retrained. The change in model predictions was visualized as a class-contrastive heatmap.

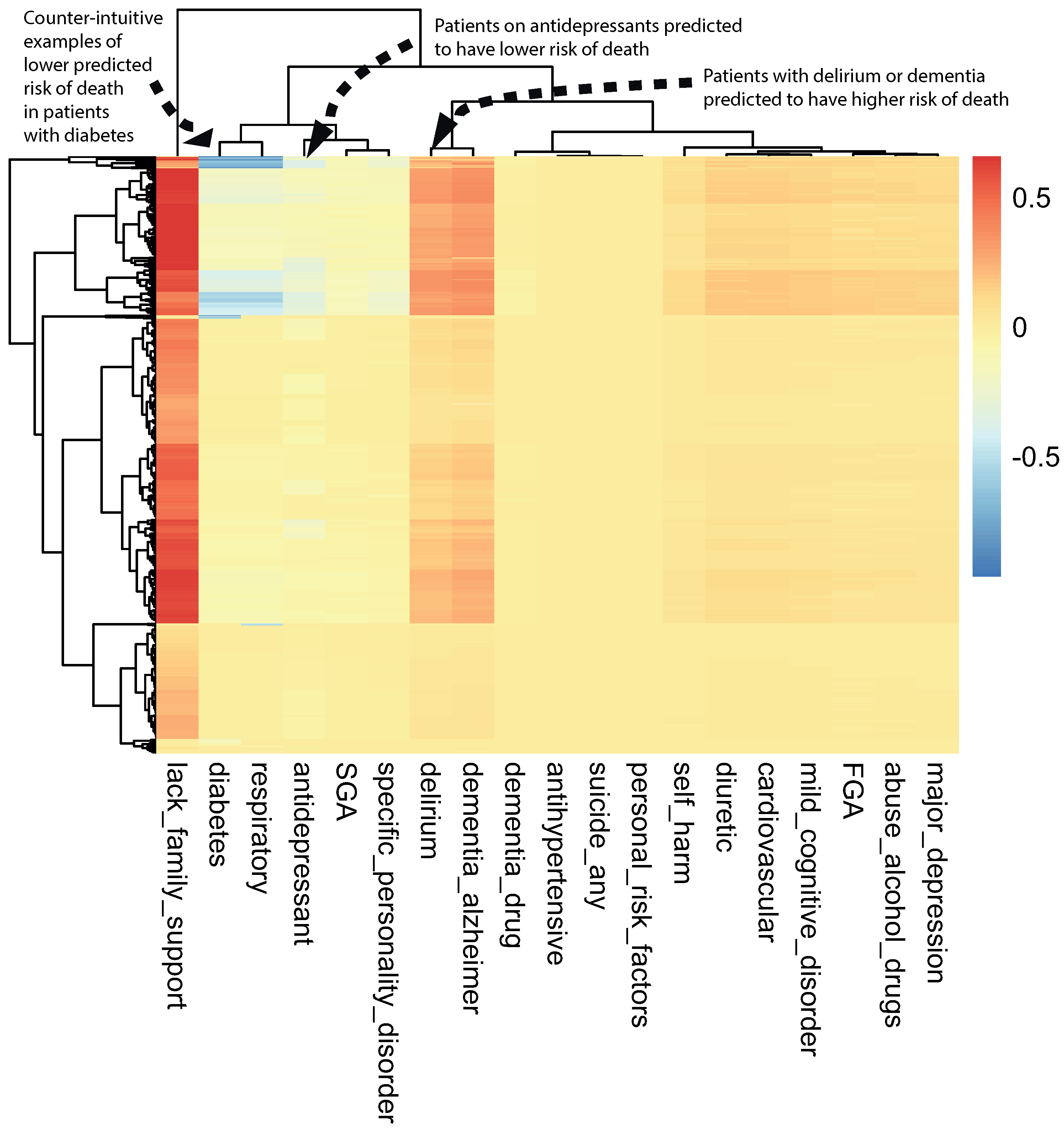

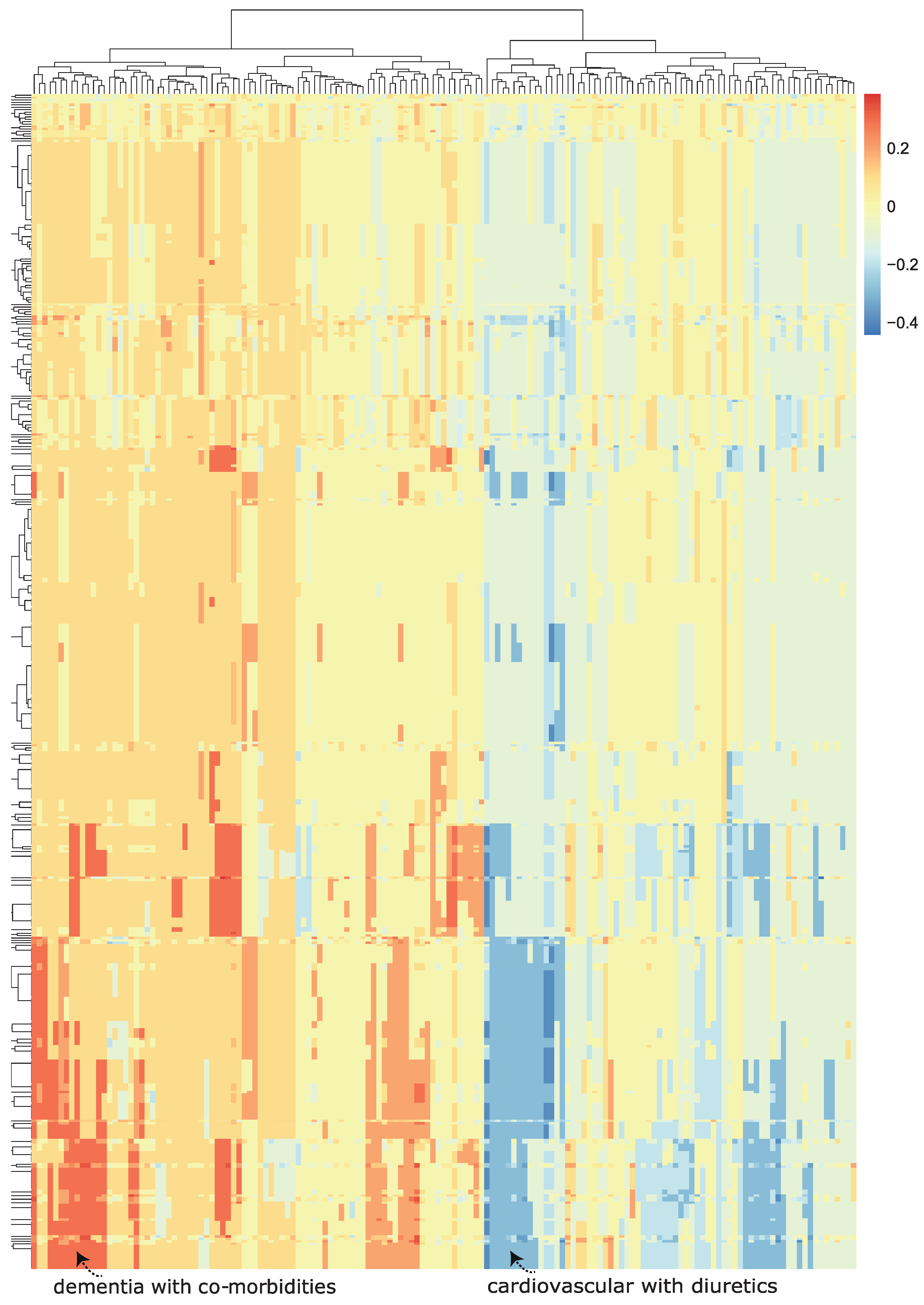

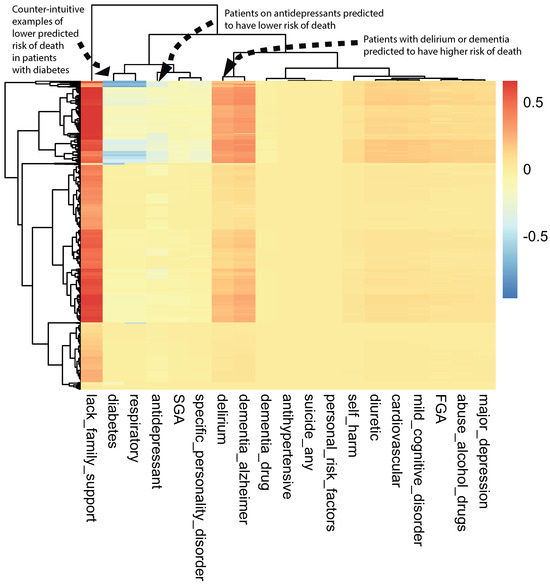

A class-contrastive heatmap is shown in Figure 3. This heatmap suggests some intuitive and counter-intuitive statements.

Figure 3.

A visualization of the amount of change predicted in the probability of death by setting a particular feature to 1 versus 0 (using a logistic regression model) on the test set. The rows represent patients and the columns represent features. The predictions were made using a logistic regression model on the test set. This class-contrastive heatmap also shows a hierarchical clustering dendrogram made using an Euclidean distance metric and complete linkage. The arrows show groups of patients with low predicted risk of mortality (shown in blue on the heatmap) using the logistic regression model. The arrow at the top left shows another group of patients taking antidepressants. These patients were predicted (using the logistic regression model) to have a lower risk of mortality. The arrow in the top left corner shows a group of patients having diabetes and still having low predicted risk of death: this is counter-intuitive.

We observed that a diagnosis of delirium or dementia seemed to predispose a group of patients towards a higher probability of predicted mortality (Figure 3). Additionally, the class-contrastive heatmap suggested that a group of patients on antidepressants were less likely to die during the period observed (Figure 3).

One counter-intuitive class-contrastive statement is “Use of or prescription of second-generation antipsychotics (SGA) and any prior suicide attempt is correlated with a decline in mortality”. This is a very counter-intuitive statement.

To get at why such a counter-intuitive statement was produced, we sought to understand which parts of the training data influenced the model to come up with this statement. The data and the model were entangled. In order to disentangle this, we carried out the following:

- we used case-based reasoning to determine which patients are similar and have these characteristics.

- we then removed these data from the training set.

- we retrained the statistical model on this modified data.

We hypothesized that the statistical model was learning a pattern from the data, since there is a subset of patients who are alive, have used SGA, and have attempted suicide. We removed all instances of co-occurrence of patients being alive, having attempted suicide, and also having used or been prescribed SGA (prior suicide attempt = 1, SGA = 1, and death flag = 0). There were 539 patients with these characteristics, who we removed. This is similar to case-based reasoning, since we identified 539 cases that were similar to each other. This left us with 1167 patients with schizophrenia.

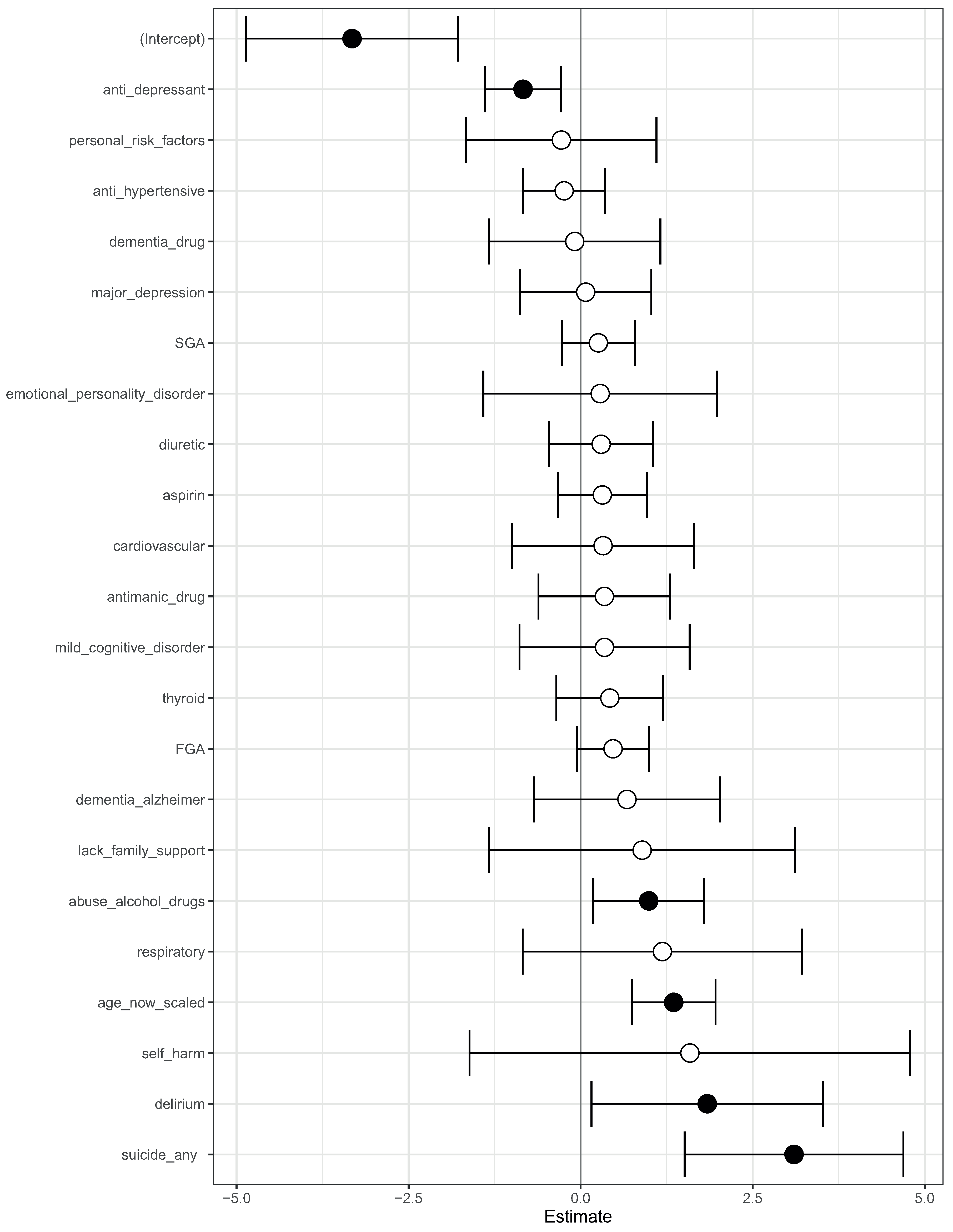

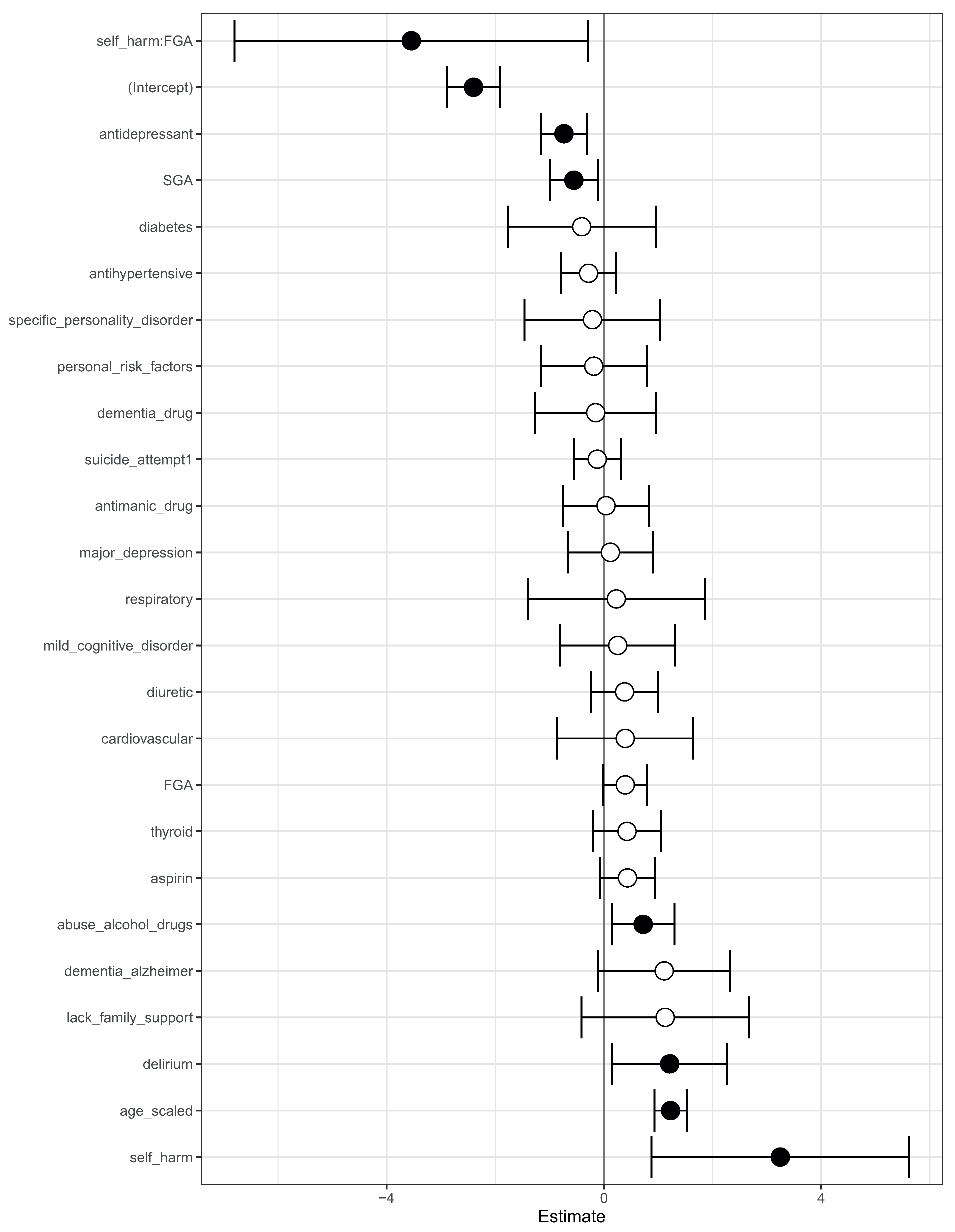

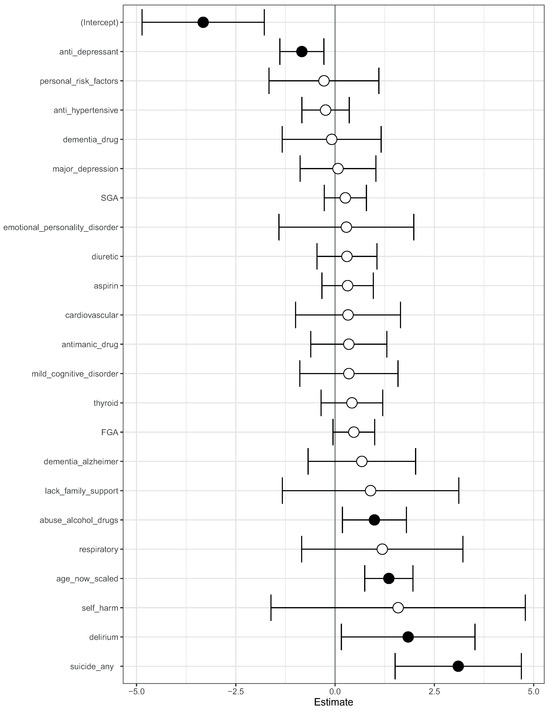

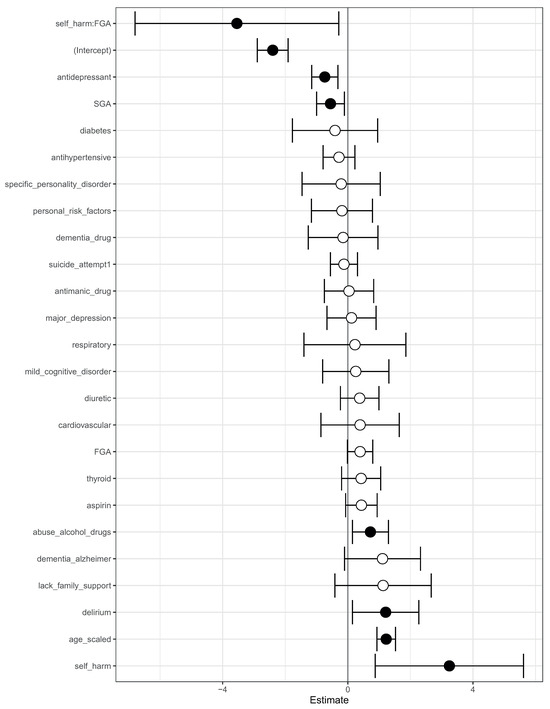

We then fit a logistic regression model on this modified data. The log-odds ratio from the model is shown in Figure 4. On this modified data, prior suicide attempt had a log-odds ratio greater than 1 (Figure 4). Hence, the apparent association of prior suicide attempts with lower mortality was removed after modifying the training data.

Figure 4.

A visualization of the log-odds ratios from a logistic regression model trained on the modified data, where all co-occurrences of prior suicide, SGA use, and death have been removed. Shown are confidence intervals and statistical significance (filled dark circles: p-value < 0.05, open circles: not significant).

This suggests that there may be a subset of patients with schizophrenia where prior suicide attempt is associated with reduced mortality. It may be that, after attempted suicide, these patients received very good care from mental health service providers. This is subject to verification in future follow-up studies in an independent cohort with more patients. Our objective here is only to raise hypotheses in an observational study that can be verified in follow-up studies.

We suggest that this data-centric approach can be used to explain machine learning models. This can complement the purely model-centric approach that has dominated techniques for explainable AI.

The counter-intuitive observations made on the test set could also be the result of imbalances in the training set. For example, a particular binary feature could be 1 for 1000 patients and 0 for 10 patients.

Finally, we note that age was a factor (predictor) in all the models we used; however, the class-contrastive heatmaps did not take this into account. This is because class-contrastive analysis only works with categorical features, and so it is not possible to modify age. Therefore, the class-contrastive heatmaps demonstrated the effect of changing features on the model’s predicted probability of mortality, in addition to the contribution of age.

3.2. Class-Contrastive Reasoning Applied to Machine Learning Models

We can also use this class-contrastive reasoning technique to explain machine learning models [2].

A machine learning model was trained on the training data and then used to predict mortality. We then took into account all pairs of features in the test set: for each pair of features in the test set, we then simultaneously changed the values from 0 to 1. We recorded the difference in the probability of mortality (predicted using the model on the test set). This process was then repeated for each pair of features and for each patient in the test set.

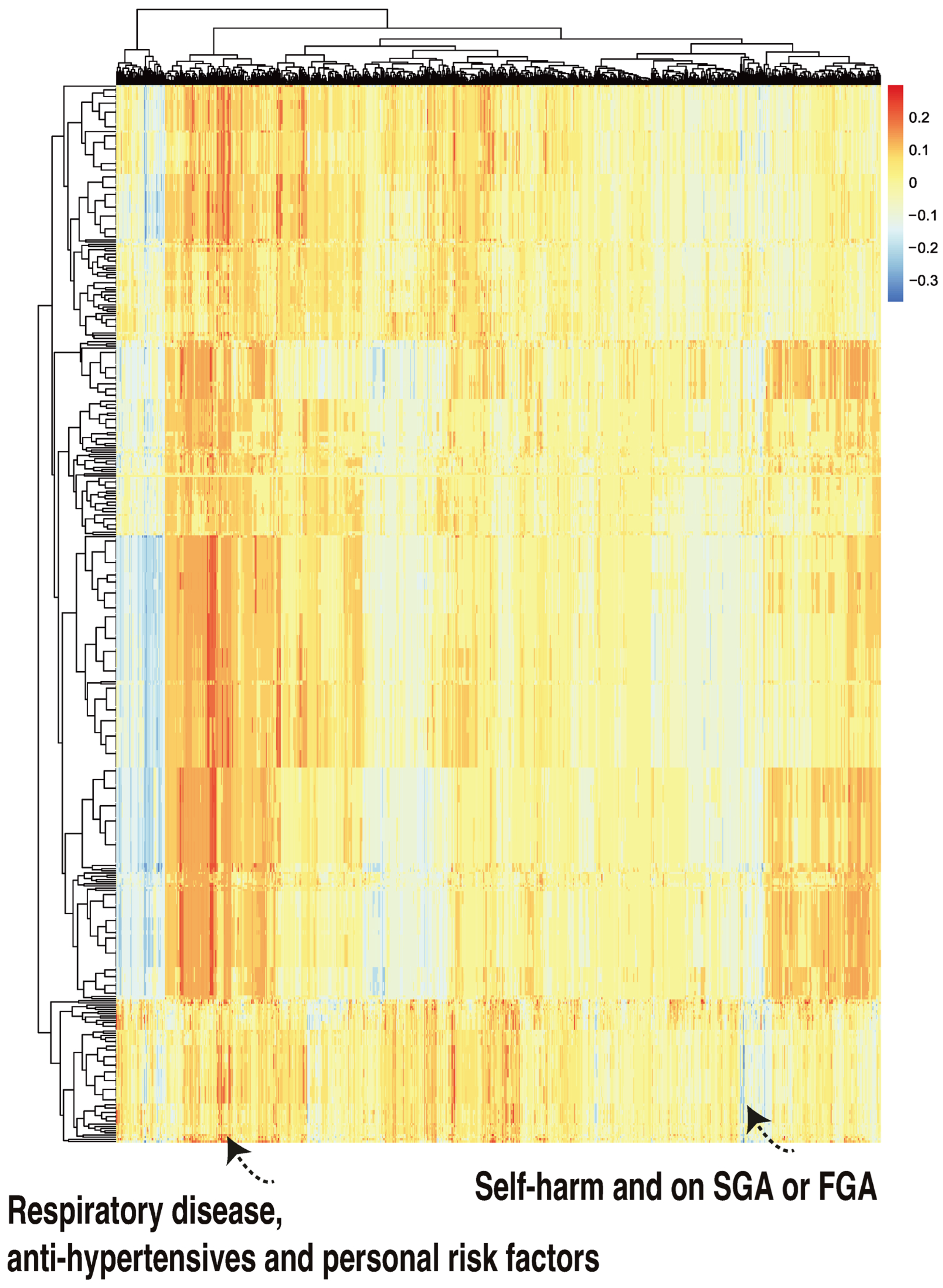

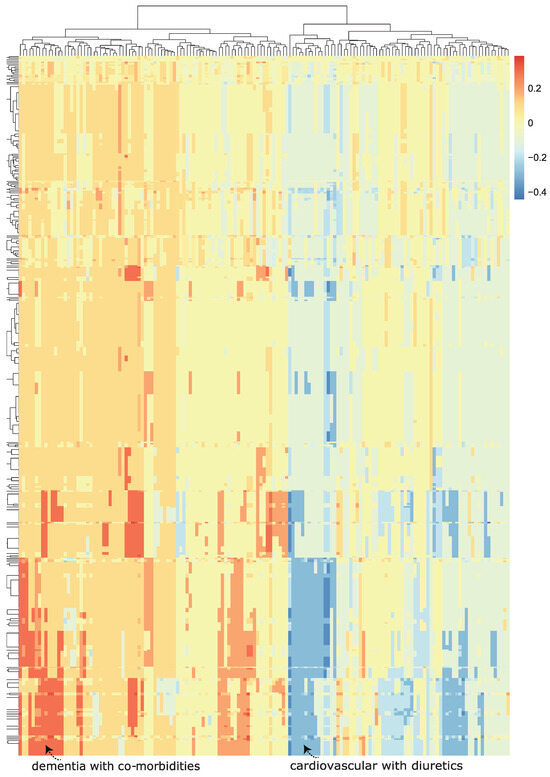

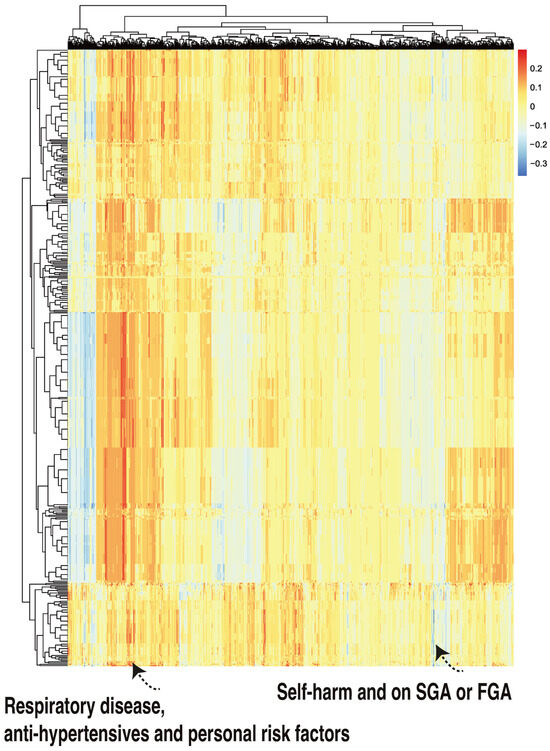

We then visualized the change in the model predicted probability of mortality (produced by the trained machine learning model in the now modified test set). We visualized this using a heatmap, which we call a class-contrastive heatmap (Figure 5).

Figure 5.

A class-contrastive heatmap for the deep learning model, showing the effect of interactions between features. This is a visualization of the amount of change predicted in the probability of death (from the deep learning model) by setting a particular combination of two features to 1 simultaneously (versus 0) on the test set. The rows represent patients, and the columns represent groupings of features (all combinations of two features). The predictions were made on the test set using a deep learning model. Delirium and dementia in Alzheimer’s disease seemed to increase the risk of death for some patients: this is shown in red in the lower left-hand corner of the heatmap. Diuretics appeared to be associated with a lower probability of predicted mortality in some patients with cardiovascular disease: see the blue region in the lower right-hand corner of the heatmap). The heatmap also shows a hierarchical clustering dendrogram: this was created using an Euclidean distance metric and complete linkage.

This heatmap shows the importance of combinations of features. For example, diuretics seemed to be associated with a lower probability of mortality (as predicted by the machine learning model) in a group of patients with cardiovascular disease. This is shown in the blue region of the heat map in the bottom right-hand corner (Figure 5). This is the region where the predicted probability of death is reduced most. The combination of delirium and dementia in Alzheimer’s disease may also predispose some patients towards a higher probability of predicted mortality (as predicted by the machine learning model on the test set). This is shown in the lower left region of the heatmap in red (Figure 5).

3.3. Generating Complex Explanations

We then applied class-contrastive reasoning to the deep learning model, where we simultaneously changed three features on the test set, from all being set to 1 to all being set to 0. We considered all combinations of three features on the test set. For a particular combination of three features, we set all of them simultaneously to 1. We then recorded the model predicted probability of mortality (for all patients in the test set). We then set those three features simultaneously to 0 and recorded the model predicted probability of mortality (for all patients in the test set). The difference in these two probabilities was also stored. This process was then repeated for all combinations of three features.

The rows of the class-contrastive heatmap represent patients, and the columns represent those abovementioned differences in probabilities. A class-contrastive heatmap for this is shown in Figure 6.

Figure 6.

A class-contrastive heatmap for the deep learning model, where three features were changed from 1 to 0 simultaneously for the test set. The heatmap shows the amount of change predicted for the probability of death by simultaneously setting 3 combinations of features to 1 versus 0. The rows represent patients and the columns represent all combinations of 3 features. The predictions were made with a deep learning model on the test set. The figure shows two groups of patients. The group in the left-hand corner is composed of patients with respiratory disease who are taking anti-hypertensives and have personal risk factors: they were predicted by the machine learning model to be at higher risk of mortality. The group in the right-hand corner of the heatmap is composed of patients who have self-harmed and were at some point on second-generation antipsychotic (SGA) or first-generation antipsychotic (FGA) medications. Counterintuitively, this group of patients was predicted (by the machine learning model) to have a lower risk of mortality.

There was one patient (Figure 6, right hand corner) who was predicted to have a reduced probability of mortality. The class-contrastive technique suggests that if the patient self harms and was at some point on SGA or FGA, this would lead to a lower risk of predicted mortality (as predicted by the machine learning model on the test set).

Using case based reasoning, we found 11 cases that were similar to each other (have self-harmed and were at some point on SGA and FGA). There are 11 such patients, of whom 1 has died. We hypothesized that the deep learning algorithm may have inferred that the simultaneous co-occurrence of these features was associated with protection (against mortality).

The class-contrastive explanation here is as follows: “The patient is predicted to have a low probability of death because the patient has self-harmed before, and was at some point on SGA or FGA. There are 11 other patients with these characteristics. If the patient did not have these characteristics, the prediction would be different”.

Hence, we can build ever more complex explanations and narratives using class-contrastive reasoning. These narratives can be used to build hypotheses that can be tested in future studies.

We complemented this class-contrastive analysis by fitting a simple logistic regression model with all main effects and an interaction effect between self-harm and SGA fit to the same data (Figure 7). We observed that self-harm interacted with FGA (p-value = 0.03). It is possible that patients who self-harmed were put on FGA and the medication had a protective effect. We note that our objective was only to raise novel hypotheses, which will ultimately need to be tested in future studies.

Figure 7.

Odds ratios from a logistic regression model to predict mortality. We showed the main effects and an interaction effect between self harm and use of first-generation antipsychotics (FGA). Also shown are the odds ratios corresponding to use of FGA and second-generation antipsychotics (SGA). Statistical significance is denoted by filled dark circles (p-value < 0.05) and open circles (not significant).

There was an imbalance between the number of patients who have self-harmed, are on SGA, and also on FGA (11 patients), and the number of patients who have not self-harmed, are not on SGA, and also not on FGA (152 patients). There were very few patients in one of these groups (11 patients). This has implications for inferring parameters in the machine learning and logistic regression model. Hence, these counter-intuitive narratives may have been generated because the machine learning algorithm was not able to understand the real importance of the combinations of the features, since they were very rare in the data. We explored this in more detail in the counter-intuitive narratives section below.

We note that our objective is to raise hypotheses and not posit novel mechanisms or generate novel clinical insights. Our approach can be used to generate new hypotheses that can be tested in follow-up studies with more data or interventional studies (randomized control trials).

3.4. Counter-Intuitive Narratives

Our approach is not without limitations. Machine learning scientists can use class-contrastive reasoning to generate increasingly complex explanations. However, in some instances this can lead to explanations that are counter-intuitive.

As progressively more complex stories are generated, there is less data to perform inference on for these complex and unique patterns. We hypothesize that this leads to counter-intuitive stories. These counter-intuitive statements (or counter-factual narratives) can also be adversarial examples and be generated due to spurious learning [20]: the machine learning model picking up spurious statistical patterns in the data. These may point to additional data collection efforts or motivate new hypotheses.

We outline such an example here. One complex explanation is as follows: “There are 3 patients with respiratory disease, on anti-hypertensives and having personal risk factors: they are predicted to be at a higher risk of mortality”. This is shown in Figure 6 (left hand corner). However, there were no patients in either the training set or test set with these features, i.e., having respiratory disease, being on anti-hypertensives, and having personal risk factors. Hence, the machine learning algorithm may have picked up a spurious pattern.

We hypothesize that these counter-intuitive stories can lead to contradictions, but they might also suggest follow-up studies and further investigations. Hence, this may be used to generate hypotheses that can be tested using additional data.

3.5. Additional Analysis with Simpler Models

In this section, we perform some additional analyses with simpler models.

The following models were employed for mortality prediction: (1) a random forest model using the original features (95% CI of AUC: [0.71, 0.79]), (2) application of PCA to the original features with the resulting dimensions utilized in a random forest model (95% CI of AUC: [0.51, 0.76]), and a logistic regression model (95% CI of AUC: [0.52, 0.77]), and (3) an regularized logistic regression model based on the original features (95% CI of AUC: [0.72, 0.74]). Principal component analysis (PCA) was applied to the original input features, extracting the foremost 10 principal components. These components were subsequently employed as inputs for both a logistic regression model and a random forest model, independently.

For the regularized logistic regression model, the regularization hyperparameter was optimized as previously mentioned. In brief, the dataset was divided into a training set (), a validation set (), and a test set (). The model underwent training on the training set and cross-validation on the validation set. Subsequently, the regularization parameter for the penalized logistic regression model was determined. The finalized model was then assessed on the test set. This methodology, involving the division of the dataset into training, validation, and test sets; training the model; and executing cross-validation, was repeated 10 times.

Our machine learning model (random forests using the reduced dimensions of an autoencoder) had an AUC of 0.80 (95% confidence interval [0.78, 0.82]).

Our objective was not to comprehensively evaluate all conceivable statistical models, but rather to provide a brief overview and analysis of selected techniques. We intended to implement class-contrastive analysis on a selection of machine learning models to demonstrate that their predictions can be elucidated in certain situations. It is important to clarify that our goal was not to prove that some machine learning models are superior to others. Our aim was to illustrate that black-box methods can be rendered interpretable through class-contrastive reasoning.

4. Discussion

4.1. Overview

Clinical data collected routinely in hospitals can help generate insights. We used a machine learning model to predict the mortality of patients with SMI using regularly collected clinical data. We present an approach where machine learning scientists can generate complex explanations of the machine learning model.

Using clinical information on physical, mental, personal, and social factors, we created profiles of patients. The machine learning model predicted patient mortality. The predictions were then explained by machine learning scientists by using class-contrastive reasoning. We extended previous work [2] to account for the more complex explanations of the data and the model.

We generated complex explanations to explain specific features of the data and the model. We used these as a mechanism to generate hypotheses, which can be investigated in follow-up studies with more patients.

4.2. Implications of Findings

The methodology described here can be used by machine learning scientists to visually inspect class-contrastive heatmaps and create complex explanations. These can be used to generate new hypotheses and design new follow-up studies.

Our class-contrastive framework highlights some counter-intuitive results. One counter-intuitive statement is as follows: “Features which if set to 0 provide most positive change in probability of predicted mortality are: any prior suicide attempt and use of SGA”.

We hypothesized that the machine learning model was learning a pattern from the data, since there is a subset of patients who are alive, have been prescribed SGA, and have attempted suicide. We removed all instances of co-occurrence of suicide attempt and prescription of SGA and the patient being alive, i.e., suicide = 1 and SGA = 1 and death flag = 0. There were 539 patients with these characteristics, who we removed. This is similar to case-based reasoning, since we identified 539 cases that were similar to each other. This left us with 1167 patients.

We then fit a logistic regression model on these modified data (Figure 4). Now, prior suicide attempt had a log-odds greater than 1. This now took away the apparent association of prior suicide with reduced mortality.

This suggests there may be a subset of patients where suicide attempt is associated with reduced mortality. It may be, that after attempted suicide, these patients received very good care from mental health service providers. This is subject to verification in future follow-up studies. Our objective was only to raise hypotheses in an observational study that can be verified in follow-up studies.

Diuretics seemed to be associated with a lower probability of mortality (as predicted by the machine learning model) in a group of patients with cardiovascular disease. This is shown in the blue region of the heat map in the bottom right-hand corner (Figure 5). The combination of delirium and dementia in Alzheimer’s disease may also predisposed some patients towards a higher probability of predicted mortality (as predicted by the machine learning model on the test set). This is shown in the lower left region of the heatmap in red (Figure 5).

4.3. Relevant Work

Our approach used elements of case-based and analogy-based reasoning [21]. Analogy based reasoning may be central to how humans generate insights into problems [22].

Many deep learning approaches can also pick up spurious statistical patterns in data [20]. We note that it is possible that many of the patterns so discovered are spurious. Our findings will need to be validated in future studies with more patient data, and they may also serve as adversarial examples. Our approach may help uncover weaknesses in learning spurious patterns from data using deep learning models.

There have also been efforts to characterize what kinds of shortcuts are being taken by machine learning algorithms [20,23]. Our approach may also help uncover shortcut learning in artificial neural networks.

The complex explanations that we suggest are similar to stories. Telling, understanding, and recombining stories is a powerful component of human intelligence [24,25]. Story generating systems are thought to underlie human intelligence [24]. Stories may help humans learn to generalize and specialize from small numbers of examples.

Story generating systems are a form of symbolic AI and are an evolution from earlier efforts to integrate symbols and logic in AI. Symbolic AI is thought to be a really important component of future AI systems that can reason [26,27].

We present an approach where machine learning scientists can work collaboratively with machines to generate stories from data and models. Our approach is a step towards computationally generating stories as explanations from machine learning models.

4.4. Limitations and Future Work

We used observational data from a clinic. We did not imply causation. Hence, our results should not be used to change clinical practice. Our objective was to raise hypotheses that will need to be tested in further studies with more patients or randomized controlled trials.

In this work, we generated narratives based on a visual inspection of class-contrastive heatmaps. This is subjective and may have led to bias. In future work, we will look at computationally building narratives. Computational techniques can also be used to explain disagreements or consensus between different kinds of statistical models. In future work, we will also look at automatically generating explanatory stories from data [1,2,28] that can engage humans [24,25,29].

Narratives can also be misleading and reflect cognitive biases. Future work will investigate how to protect against misuse of narratives and remove possible bias in stories. There also needs to be a pluralistic evidence base for generating narratives that accounts for multiple sources of evidence, and multiple viewpoints and data sources [30].

In high dimensions, there can be many adversarial examples and also many counterfactual explanations. Adversarial examples are similar to class-contrastive or counterfactual explanations. We acknowledge that the class-contrastive examples and the stories we generate may indeed be artifacts of the training process, training data, and the model: these could be adversarial examples that have no meaning. Hence, these explanations may not represent possible worlds or map to realistic scenarios.

Our approach may also uncover noise. This can lead to counter-intuitive narratives (see the counter-intuitive narratives section). Distinguishing legitimate discoveries from pure noise will require further studies with more patients.

Confounding effects also complicate explainability. For example, there are also side effects of medications such as SGA that may lead to cardiovascular diseases, which may be subsequently managed using diuretics. Hence, our approach may have uncovered these relationships between SGA, cardiovascular disease, and diuretic use.

We note that we also did not control for the multiple comparisons that were made implicitly during the process of generating complex explanations.

Our work also suffers from the limitation of a low sample size. Our results should be validated in another cohort with more patients. We also used simpler statistical models to ensure that we did not overfit to our data (see the Results, additional analysis subsection with simpler models).

Finally, we note that our approach of finding the basis of counter-intuitive predictions and shaping the data could also used by malicious adversaries to inject the precise kinds of training data needed to change the way a machine learning model behaves.

4.5. Concluding Remarks

The nature of explanations is multi-faceted and complex [31]. We focused on class-contrastive techniques to generate complex explanations in a machine learning model. The model was used to predict mortality in patients with SMI.

We showed how machine learning scientists can use class-contrastive reasoning to generate complex explanations. As an example of a complex explanation, we showed the following: `The patient is predicted to have a low probability of death because the patient has self-harmed before, and was at some point on SGA or FGA. There are 11 other patients with these characteristics. If the patient did not have these characteristics, the prediction would be different”.

A class-contrastive statement for one randomly selected patient is as follows: “The selected patient is at high-risk of mortality because the patient has dementia in Alzheimer’s disease and has cardiovascular disease. If the patient did not have both of these characteristics, the predicted risk will be much lower”.

We can build ever more complex explanations using class-contrastive reasoning. These complex explanations can be used to build hypotheses that can be tested in future studies. We think of our approach as a hypothesis generating mechanism, rather than an aid for decision making for clinicians.

The complex explanations generated by us are similar to stories. Stories have the ability to engage humans [24,25]. Story generating systems potentially also underlie human intelligence [24]. The work presented here can be used to computationally build narratives that explain data and models [25,32,33]. Data scientists can use human-in-the-loop computational techniques to generate explanatory stories and narratives from data and models.

Our work combined classical approaches to AI with more modern deep learning techniques [34]. Approaches like these that combine the strengths of deep learning with traditional AI approaches (like structured rule-based representations, case-based reasoning, and commonsense reasoning) are likely to yield promising results in all fields of applied machine learning [35].

Explainability in machine learning models suffers from a number of keylimitations [36,37,38]. Some of these limitations have to do with the nature of high-dimensional data and the counter-intuitive nature of the high-dimensions that the model and data reside in [39,40]. Others have to do with how counter-intuitive predictions that may arise due to idiosyncrasies in the data. These issues are entangled in complex ways. Our method provides a step towards disentangling some of these issues. We note that our method does not completely solve these issues but we hope it may provide a fruitful next step towards the development of more practical explainable AI techniques.

We note that our aim was not to improve accuracy (with these machine learning techniques) but produce a constellation of interpretable methods: these could range from classical statistical techniques (such as logistic regression) to modern machine learning techniques. More details on the accuracy and comparisons of our machine learning technique with other methods are available in [2].

Our framework could also be very useful for clinical decision support systems. These techniques could ultimately be used to build a conversational AI that could explain its predictions to a domain expert. This would need comprehensive evaluation and testing in a clinical setting.

Our approach generates complex explanations that are human understandable from black-box machine learning algorithms. We suggest that this approach could also be applied in other critical domains where explainability is important. Such domains include finance and algorithms used in governance and civil administration.

In summary, we present an approach where machine learning scientists interrogate models and build complex explanations from clinical data and models. Our methodology may be broadly applicable to domains where the explainability of machine learning models is important.

Funding

S.B. acknowledges funding from the Accelerate Programme for Scientific Discovery Research Fellowship. This work was also partially funded by an MRC Mental Health Data Pathfinder grant (MC_PC_17213). The views expressed are those of the author(s) and not necessarily those of the funders. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board (or Ethics Committee) of Cambridgeshire and Peterborough NHS Foundation Trust (protocol code: REC references 12/EE/0407, 17/EE/0442, 22/EE/0264; IRAS project ID 237953 and date of approval: 14 November 2017).

Informed Consent Statement

The CPFT Research Database operates under UK NHS Research Ethics approvals (REC references 12/EE/0407, 17/EE/0442; IRAS project ID 237953). All methods were carried out in accordance with relevant guidelines and regulations. All experimental protocols were approved by a named institutional and/or licensing committee. Informed consent was obtained from all subjects and/or their legal guardian(s).

Data Availability Statement

This study reports on human clinical data which cannot be published directly due to reasonable privacy concerns, as per NHS research ethics approvals and NHS information governance rules. The CPFT Research Database is private. This study reports on human clinical data which cannot be published directly due to reasonable privacy concerns, as per NHS research ethics approvals and NHS information governance rules. All our software is freely available here: https://github.com/neelsoumya/machine_learning_advanced_complex_stories, accessed on 3 April 2024. Code to perform similar analysis on a publicly available dataset is available here: https://github.com/neelsoumya/complex_stories_explanations, accessed on 3 April 2024.

Acknowledgments

We thank Rudolf Cardinal, Daniel Porter, Jenny Nelder, Shanquan Chen, Sol Lim, Li Zhang, Athina Aruldass and Jonathan Lewis for their support during this project. This work is dedicated to the memory of Tathagata Sengupta.

Conflicts of Interest

The author has no conflicts of interest to disclose.

References

- Sokol, K.; Flach, P. Conversational Explanations of Machine Learning Predictions through Class-contrastive Counterfactual Statements. Int. Jt. Conf. Artif. Intell. Organ. 2018, 7, 5785–5786. [Google Scholar] [CrossRef]

- Banerjee, S.; Lio, P.; Jones, P.B.; Cardinal, R.N. A class-contrastive human-interpretable machine learning approach to predict mortality in severe mental illness. NPJ Schizophr. 2021, 7, 60. [Google Scholar] [CrossRef] [PubMed]

- Olfson, M.; Gerhard, T.; Huang, C.; Crystal, S.; Stroup, T.S. Premature mortality among adults with schizophrenia in the United States. JAMA Psychiatry 2015, 72, 1172–1181. [Google Scholar] [CrossRef] [PubMed]

- Cardinal, R.N. Clinical records anonymisation and text extraction (CRATE): An open-source software system. BMC Med. Inform. Decis. Mak. 2017, 17, 50. [Google Scholar] [CrossRef] [PubMed]

- Cunningham, H.; Tablan, V.; Roberts, A.; Bontcheva, K. Getting More Out of Biomedical Documents with GATE’s Full Lifecycle Open Source Text Analytics. PLoS Comput. Biol. 2013, 9, e1002854. [Google Scholar] [CrossRef] [PubMed]

- Sultana, J.; Chang, C.K.; Hayes, R.D.; Broadbent, M.; Stewart, R.; Corbett, A.; Ballard, C. Associations between risk of mortality and atypical antipsychotic use in vascular dementia: A clinical cohort study. Int. J. Geriatr. Psychiatry 2014, 29, 1249–1254. [Google Scholar] [CrossRef] [PubMed]

- WHO. The ICD-10 Classification of Mental and Behavioural Disorders: Clinical Descriptions and Diagnostic Guidelines; World Health Organization: Geneva, Switzerland, 1992. [Google Scholar]

- Winter, B. Linear models and linear mixed effects models in R with linguistic applications. arXiv 2013, arXiv:1308.5499. [Google Scholar]

- Bates, D.; Mächler, M.; Bolker, B.; Walker, S. Fitting Linear Mixed-Effects Models Using lme4. J. Stat. Softw. 2015, 67, 1–48. [Google Scholar] [CrossRef]

- Gareth, J.; Daniela, W.; Trevor, H.; Robert, T. Introduction to Statistical Learning with Applications in R; Springer: Berlin/Heidelberg, Germany, 2017. [Google Scholar]

- Beaulieu-Jones, B.K.; Greene, C.S. Semi-supervised learning of the electronic health record for phenotype stratification. J. Biomed. Inform. 2016, 64, 168–178. [Google Scholar] [CrossRef]

- Chollet, F. Keras, Version 2.3.0. 2015. Available online: https://github.com/keras-team/keras (accessed on 1 June 2019).

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016; pp. 265–283. [Google Scholar]

- Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the 13th International Conference on Artificial Intelligence and Statistics (AISTATS’10), Sardinia, Italy, 13–15 May 2010; Volume 9, pp. 249–256. [Google Scholar]

- Zeiler, M.D. ADADELTA: An Adaptive Learning Rate Method. arXiv 2012, arXiv:1212.5701. [Google Scholar]

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2018. [Google Scholar]

- Kuznetsova, A.; Brockhoff, P.B.; Christensen, R.H.B. lmerTest Package: Tests in Linear Mixed Effects Models. J. Stat. Softw. 2017, 82, 1–26. [Google Scholar] [CrossRef]

- Kolde, R. Pheatmap: Pretty Heatmaps, Version 1.0.12. 2018. Available online: https://cran.r-project.org/web/packages/pheatmap/ (accessed on 1 June 2019).

- Yang, Y.; Banerjee, S. Generating complex explanations from machine learning models using class-contrastive reasoning. medRxiv 2023. Available online: https://www.medrxiv.org/content/10.1101/2023.10.06.23296591v1 (accessed on 3 April 2024).

- Geirhos, R.; Jacobsen, J.H.; Michaelis, C.; Zemel, R.; Brendel, W.; Bethge, M.; Wichmann, F.A. Shortcut Learning in Deep Neural Networks. Nat. Mach. Intell. 2020, 2, 665–673. [Google Scholar] [CrossRef]

- Koton, P. Using Experience in Learning and Problem Solving. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1988. [Google Scholar]

- Langley, P.; Jones, R. A computational model of scientific insight. In The Nature of Creativity: Contemporary Psychological Perspectives; Sternberg, R.J., Ed.; Cambridge University Press: Cambridge, UK, 1997; pp. 177–201. [Google Scholar]

- Olah, C.; Cammarata, N.; Schubert, L.; Goh, G.; Petrov, M.; Carter, S. Zoom In: An Introduction to Circuits. Distill 2020, 5, e00024.001. [Google Scholar] [CrossRef]

- Winston, P.H. The next 50 years: A personal view. Biol. Inspired Cogn. Archit. 2012, 1, 92–99. [Google Scholar] [CrossRef]

- Winston, P.H. The strong story hypothesis and the directed perception hypothesis. Assoc. Adv. Artif. Intell. 2011, FS-11-01, 345–352. [Google Scholar]

- Davis, E.; Marcus, G. Commonsense reasoning and commonsense knowledge in artificial intelligence. Commun. ACM 2015, 58, 92–103. [Google Scholar] [CrossRef]

- Marcus, G. The Next Decade in AI: Four Steps Towards Robust Artificial Intelligence. arXiv 2020, arXiv:2002.06177. [Google Scholar]

- Banerjee, S. A Framework for Designing Compassionate and Ethical Artificial Intelligence and Artificial Consciousness. Interdiscip. Descr. Complex Syst. 2020, 18, 85–95. [Google Scholar] [CrossRef]

- Banerjee, S.; Alsop, P.; Jones, L.; Cardinal, R.N. Patient and public involvement to build trust in artificial intelligence: A framework, tools, and case studies. Patterns 2022, 3, 100506. [Google Scholar] [CrossRef]

- Dillon, S.; Craig, C. Storylistening: Narrative Evidence and Public Reasoning; Routledge: Oxfordshire, UK, 2021. [Google Scholar]

- Lenzen, V.F.; Craik, K.J.W. The Nature of Explanation. Philos. Rev. 1944, 53, 503. [Google Scholar] [CrossRef]

- Winston, P.H. The right way. Adv. Cogn. Syst. 2012, 1, 23–36. [Google Scholar]

- Winston, P. Genesis Project. Available online: https://robohub.org/professor-patrick-winston-former-director-of-mits-artificial-intelligence-laboratory-dies-at-76/ (accessed on 1 June 2019).

- Elhanashi, A.; Saponara, S.; Dini, P.; Zheng, Q.; Morita, D.; Raytchev, B. An integrated and real-time social distancing, mask detection, and facial temperature video measurement system for pandemic monitoring. J. Real-Time Image Process. 2023, 20, 1–14. [Google Scholar] [CrossRef]

- Shoham, Y. Viewpoint: Why knowledge representation matters. Commun. ACM 2016, 59, 47–49. [Google Scholar] [CrossRef]

- Software Vendors Are Pushing Explainable A.I., But Those Explanations May Be Meaningless | Fortune. Available online: https://fortune-com.cdn.ampproject.org/c/s/fortune.com/2022/03/22/ai-explainable-radiology-medicine-crisis-eye-on-ai/amp/ (accessed on 6 June 2024).

- Krishna, S.; Han, T.; Gu, A.; Pombra, J.; Jabbari, S.; Wu, S.; Lakkaraju, H. The Disagreement Problem in Explainable Machine Learning: A Practitioner’s Perspective. arXiv 2022, arXiv:2202.01602. [Google Scholar]

- Ghassemi, M.; Oakden-Rayner, L.; Beam, A.L. The false hope of current approaches to explainable artificial intelligence in health care. Lancet 2021, 3, E745–E750. [Google Scholar] [CrossRef] [PubMed]

- Gilmer, J.; Metz, L.; Faghri, F.; Schoenholz, S.S.; Raghu, M.; Wattenberg, M.; Goodfellow, I. Adversarial Spheres. arXiv 2018, arXiv:1801.02774. [Google Scholar]

- Kůrková, V. Some insights from high-dimensional spheres: Comment on “The unreasonable effectiveness of small neural ensembles in high-dimensional brain” by Alexander N. Gorban et al. Phys. Life Rev. 2019, 29, 98–100. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).