Personalized Medicine in Urolithiasis: AI Chatbot-Assisted Dietary Management of Oxalate for Kidney Stone Prevention

Abstract

1. Introduction

2. Materials and Methods

Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

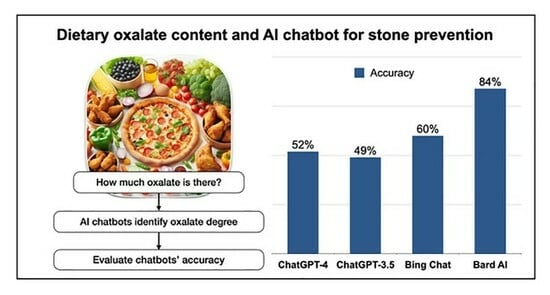

| Oxalate Content | GPT-4 | GPT-3.5 | Bing | Bard | p-Value |
|---|---|---|---|---|---|
| Overall (n = 539) | 280 (52%) | 266 (49%) | 325 (60%) | 451 (84%) | <0.001 |
| Low (n = 277) | 190 (69%) | 232 (84%) | 217 (78%) | 264 (95%) | <0.001 |
| Moderate (n = 83) | 54 (65%) | 26 (31%) | 43 (52%) | 64 (77%) | <0.001 |
| High (n = 179) | 36 (20%) | 8 (4%) | 65 (36%) | 123 (69%) | <0.001 |
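
The percentages in the table above can be re-derived from the raw counts. The following is a minimal sketch, not the authors' analysis code. It assumes each cell is the number of food items in that oxalate category that the chatbot classified correctly, which the table layout suggests but does not state. The names `COUNTS`, `CHATBOTS`, `percent`, and `overall` are illustrative. The sketch rebuilds the overall row by summing the three category rows and rounds each proportion to a whole percent.

```python
# Counts copied from the table above. Interpretation as "correctly classified
# items" is an assumption; the table itself does not label the cells.
COUNTS = {
    "Low": {"n": 277, "GPT-4": 190, "GPT-3.5": 232, "Bing": 217, "Bard": 264},
    "Moderate": {"n": 83, "GPT-4": 54, "GPT-3.5": 26, "Bing": 43, "Bard": 64},
    "High": {"n": 179, "GPT-4": 36, "GPT-3.5": 8, "Bing": 65, "Bard": 123},
}
CHATBOTS = ["GPT-4", "GPT-3.5", "Bing", "Bard"]


def percent(count, total):
    """Return count/total as a percentage rounded to the nearest integer."""
    return round(100 * count / total)


def overall(counts):
    """Sum the per-category rows into a total item count and per-chatbot totals."""
    total_n = sum(row["n"] for row in counts.values())
    totals = {bot: sum(row[bot] for row in counts.values()) for bot in CHATBOTS}
    return total_n, totals


total_n, totals = overall(COUNTS)

# Per-category percentages, then the overall row rebuilt from the categories.
for category, row in COUNTS.items():
    cells = ", ".join(f"{bot}: {row[bot]} ({percent(row[bot], row['n'])}%)" for bot in CHATBOTS)
    print(f"{category} (n = {row['n']}): {cells}")

cells = ", ".join(f"{bot}: {totals[bot]} ({percent(totals[bot], total_n)}%)" for bot in CHATBOTS)
print(f"Overall (n = {total_n}): {cells}")
```

The sketch covers only the descriptive arithmetic. The p-values cannot be reproduced from these marginal counts, because every chatbot rated the same food items and a paired test needs the item-level results.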

| Pairwise Comparison | Overall | Low Oxalate | Moderate Oxalate | High Oxalate |
|---|---|---|---|---|
| GPT-4 vs. GPT-3.5 | 0.298 | <0.001 | <0.001 | <0.001 |
| GPT-4 vs. Bing | 0.003 | 0.001 | 0.14 | <0.001 |
| GPT-4 vs. Bard | <0.001 | <0.001 | 0.16 | <0.001 |
| GPT-3.5 vs. Bing | <0.001 | <0.001 | 0.01 | <0.001 |
| GPT-3.5 vs. Bard | <0.001 | <0.001 | <0.001 | <0.001 |
| Bing vs. Bard | <0.001 | <0.001 | 0.001 | <0.001 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aiumtrakul, N.; Thongprayoon, C.; Arayangkool, C.; Vo, K.B.; Wannaphut, C.; Suppadungsuk, S.; Krisanapan, P.; Garcia Valencia, O.A.; Qureshi, F.; Miao, J.; et al. Personalized Medicine in Urolithiasis: AI Chatbot-Assisted Dietary Management of Oxalate for Kidney Stone Prevention. J. Pers. Med. 2024, 14, 107. https://doi.org/10.3390/jpm14010107
