A CNN-Based Method for Enhancing Boring Vibration with Time-Domain Convolution-Augmented Transformer

Simple Summary

Abstract

1. Introduction

2. Dataset Materials

2.1. Data Collection and Treatment

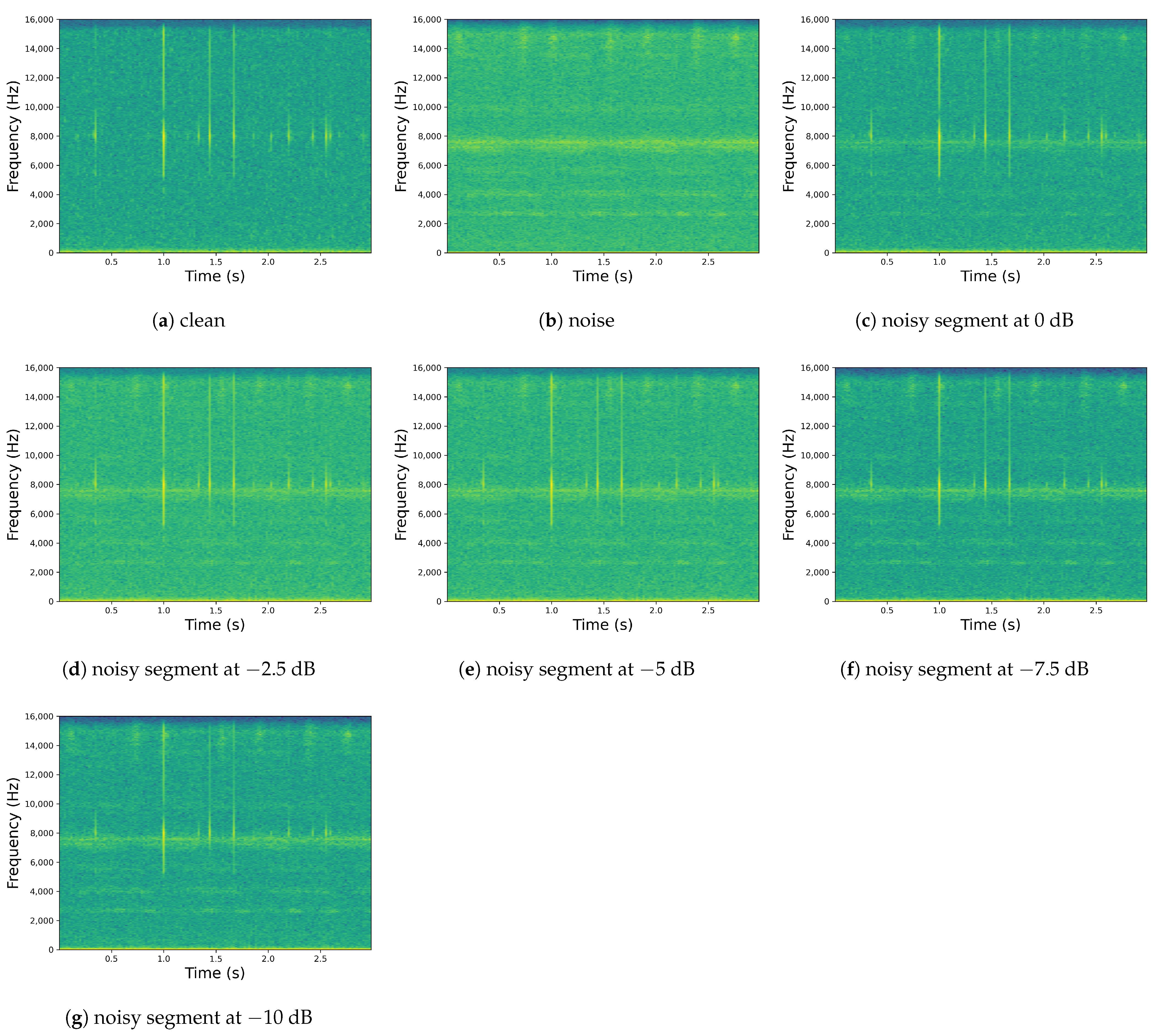

2.2. Environmental Noise Collection

2.3. Dataset Construction

3. Methods

3.1. Problem Formulation

3.2. Model Architecture

3.3. Loss Functions

3.4. Evaluation Metrics

3.5. Implementation Details

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Loss Function | Average SNR (dB) | Average SegSNR (dB) | Average LLR | Epochs |
|---|---|---|---|---|
| L1 | 12.23 | 11.50 | 0.5356 | 10 |
| MSE | 12.32 | 11.77 | 0.2257 | 52 |
| Negative-SNR | 14.37 | 13.17 | 0.1638 | 35 |
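
The table above compares loss functions using SNR and SegSNR, but the formulas are not reproduced in this text. The following is a minimal sketch of the conventional definitions, not necessarily the authors' exact implementation. The function names, the frame length of 256 samples, and the clamping range of −10 dB to 35 dB for segmental SNR are assumptions chosen for illustration.

```python
import numpy as np


def snr_db(clean, estimate, eps=1e-8):
    """SNR in dB of `estimate` measured against the `clean` reference."""
    clean = np.asarray(clean, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    residual = clean - estimate  # whatever the enhancer failed to remove
    return 10.0 * np.log10((np.sum(clean ** 2) + eps) / (np.sum(residual ** 2) + eps))


def negative_snr_loss(clean, estimate):
    """Loss that decreases as SNR increases (assumed form of 'Negative-SNR')."""
    return -snr_db(clean, estimate)


def seg_snr_db(clean, estimate, frame_len=256, lo=-10.0, hi=35.0):
    """Mean of per-frame SNRs, each clamped to [lo, hi] dB (assumed settings)."""
    clean = np.asarray(clean, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    n_frames = len(clean) // frame_len
    if n_frames == 0:
        raise ValueError("signal is shorter than one frame")
    frame_values = []
    for i in range(n_frames):
        seg = slice(i * frame_len, (i + 1) * frame_len)
        frame_values.append(np.clip(snr_db(clean[seg], estimate[seg]), lo, hi))
    return float(np.mean(frame_values))


if __name__ == "__main__":
    t = np.arange(1024) / 8000.0
    clean = np.sin(2 * np.pi * 50 * t)
    estimate = 1.1 * clean  # residual is 10% of the clean amplitude
    print(snr_db(clean, estimate), seg_snr_db(clean, estimate))
```

In a training framework the same expression would be written with differentiable tensor operations; NumPy is used here only to keep the sketch self-contained.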

| Model | Param. (M) | Average SNR (dB) | Average SegSNR (dB) | Average LLR |
|---|---|---|---|---|
| Noisy | - | −5.14 | −5.96 | −6.6067 |
| T-ENV | 4.23 | 10.57 | 9.89 | 0.1978 |
| T-LSTM-ENV | 5.28 | 10.99 | 9.99 | 0.4534 |
| T-BiLSTM-ENV | 6.99 | 12.17 | 11.10 | 0.5216 |
| T-CENV × 1 | 6.02 | 14.03 | 12.83 | 0.3245 |
| T-CENV × 2 | 7.81 | 14.37 | 13.17 | 0.1638 |
| T-CENV × 3 | 9.60 | 13.66 | 12.47 | 0.3398 |
| T-CENV × 4 | 11.04 | 11.01 | 10.06 | 0.6883 |

| Model | 0 dB | −2.5 dB | −5 dB | −7.5 dB | −10 dB |
|---|---|---|---|---|---|
| T-LSTM-ENV | 12.38 | 11.88 | 11.18 | 10.27 | 9.24 |
| T-BiLSTM-ENV | 13.30 | 12.88 | 12.80 | 11.64 | 10.21 |
| T-CENV | 17.84 | 16.12 | 14.27 | 12.58 | 11.05 |
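
The column headers of the table above look like input SNR levels from 0 dB down to −10 dB, although this text does not state how the noisy inputs were produced. Assuming they were created by adding recorded noise to a clean vibration segment, one common way to reach a prescribed SNR is to rescale the noise before summing. The sketch below illustrates that idea only; `mix_at_snr` is a hypothetical helper, and the random arrays stand in for real recordings.

```python
import numpy as np


def mix_at_snr(clean, noise, target_snr_db):
    """Scale `noise` so that clean + scaled noise has the requested SNR in dB."""
    clean = np.asarray(clean, dtype=np.float64)
    noise = np.asarray(noise, dtype=np.float64)
    if clean.shape != noise.shape:
        raise ValueError("clean and noise must have the same shape")
    p_clean = np.mean(clean ** 2)
    p_noise = np.mean(noise ** 2)
    if p_noise == 0.0:
        raise ValueError("noise has zero power and cannot be scaled")
    # Solve p_clean / (scale^2 * p_noise) = 10^(target_snr_db / 10) for scale.
    scale = np.sqrt(p_clean / (p_noise * 10.0 ** (target_snr_db / 10.0)))
    return clean + scale * noise


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = rng.standard_normal(16000)  # placeholder for a clean boring-vibration clip
    noise = rng.standard_normal(16000)  # placeholder for an environmental-noise clip
    for level in (0.0, -2.5, -5.0, -7.5, -10.0):
        noisy = mix_at_snr(clean, noise, level)
        measured = 10.0 * np.log10(np.sum(clean ** 2) / np.sum((noisy - clean) ** 2))
        print(level, round(measured, 6))
```

The loop re-measures the SNR of each mixture as a sanity check that the scaling reproduces the requested level.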

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, H.; Li, J.; Cai, G.; Chen, Z.; Zhang, H. A CNN-Based Method for Enhancing Boring Vibration with Time-Domain Convolution-Augmented Transformer. Insects 2023, 14, 631. https://doi.org/10.3390/insects14070631
