Near Real-Time 3D Reconstruction and Quality 3D Point Cloud for Time-Critical Construction Monitoring

Abstract

1. Introduction

2. 3D Reconstruction Methods Used in Construction Applications

2.1. 3D Reconstruction of Construction Site Using Terrestrial Laser Scanner

2.2. 3D Reconstruction of Construction Site Using Photogrammetry

3. Enhanced Direct Sparse Odometry with Loop Closure for Near Real-Time 3D Reconstruction and Quality 3D Point Cloud

3.1. VSLAM and LDSO

3.2. Framework of the Original LDSO

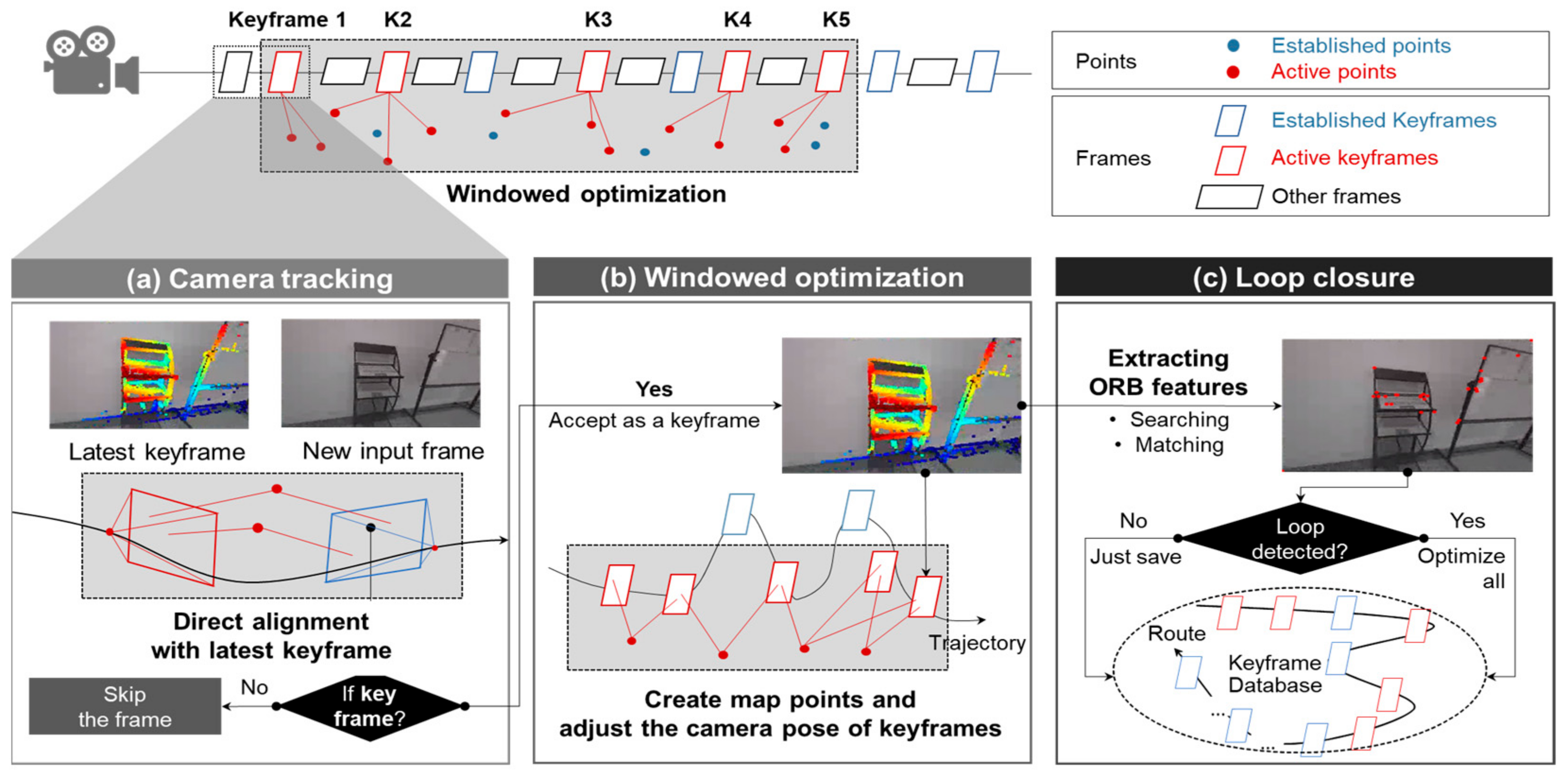

- Module 1, camera tracking (Figure 1a): Camera tracking obtains the camera pose (i.e., position and orientation) for each frame. In LDSO, it works as follows. First, one out of every few frames is selected as a keyframe. These keyframes act as critical positions along the trajectory, and the camera pose of each keyframe is accurately estimated in Module 2. Second, when the camera captures a new frame, the pose of this frame is calculated by aligning it directly with the latest keyframe. This alignment uses conventional two-frame direct image alignment, referred to as the direct method [52], which compares pixel intensities rather than matched features. If the new frame meets the keyframe requirements (e.g., sufficient change in camera viewpoint and motion), it is designated a keyframe and used for 3D reconstruction in Module 2 (Figure 1b) and Module 3 (Figure 1c).

- Module 2, windowed optimization (Figure 1b): Windowed optimization refines the camera poses of keyframes and creates map points. Each newly assigned keyframe is added to a sliding window that always contains between five and seven keyframes. Pixels with sufficient intensity gradient are then selected from each keyframe, spread across the whole frame, and used for triangulation. Point positions and camera poses of the keyframes in the window are optimized jointly, in a process similar to bundle adjustment in SfM; however, the objective function is a photometric error (a difference in pixel intensities) rather than the reprojection error used in SfM bundle adjustment. After optimization, the outlying keyframe in the window, i.e., the one furthest from the other keyframes in the same window, is removed from the window (marginalized). The marginalized keyframe is nevertheless saved in a database for loop detection.

- Module 3, loop closing (Figure 1c): Because LDSO estimates camera poses frame by frame, errors accumulate along the estimated camera trajectory, which can cause the final trajectory to drift. LDSO counters this drift through loop closure. Module 3 computes ORB features on a portion of the pixels selected in Module 2 and packs them into a Bag of Words (BoW) database [55]. The ORB features of each keyframe are then queried against the database to find the best loop candidates. Once a loop is detected and validated, the global poses of all keyframes are optimized together via graph optimization [56].
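To make the direct method concrete, the Python sketch below estimates the motion between a keyframe and a new frame by comparing raw intensities at high-gradient pixels and choosing the motion with the lowest robust photometric error, the same kind of error that Module 2 minimizes instead of a reprojection error. It is a deliberately simplified, hypothetical example: each image is a single 1D row of intensities, motion is an integer shift found by brute-force search, and the Huber threshold (`k`) and gradient threshold (`min_grad`) are illustrative values. LDSO itself estimates full camera poses with iterative nonlinear least squares [52].

```python
# Toy 1D direct image alignment (illustrative assumption, not LDSO's code):
# estimate an integer shift between a keyframe row and a new frame row by
# minimizing a robust photometric error over high-gradient pixels.


def huber(r: float, k: float = 9.0) -> float:
    """Huber norm: quadratic for small residuals, linear for large ones."""
    a = abs(r)
    return 0.5 * r * r if a <= k else k * (a - 0.5 * k)


def select_pixels(row: list[float], min_grad: float) -> list[int]:
    """Indices whose central-difference intensity gradient is at least min_grad."""
    return [x for x in range(1, len(row) - 1)
            if abs(row[x + 1] - row[x - 1]) / 2.0 >= min_grad]


def photometric_error(ref: list[float], cur: list[float],
                      pixels: list[int], shift: int) -> float:
    """Sum of Huber-weighted residuals cur[x + shift] - ref[x] over selected pixels."""
    total = 0.0
    for x in pixels:
        xs = x + shift
        if 0 <= xs < len(cur):  # pixels that leave the image are ignored
            total += huber(cur[xs] - ref[x])
    return total


def align(ref: list[float], cur: list[float],
          max_shift: int = 5, min_grad: float = 10.0) -> int:
    """Brute-force search for the shift with the lowest photometric error."""
    pixels = select_pixels(ref, min_grad)
    return min(range(-max_shift, max_shift + 1),
               key=lambda s: photometric_error(ref, cur, pixels, s))


# Keyframe row with an intensity edge; the new frame sees it shifted by 2 pixels.
keyframe = [0.0] * 5 + [50.0, 100.0, 150.0] + [200.0] * 8
new_frame = [0.0, 0.0] + keyframe[:-2]
print(align(keyframe, new_frame))  # 2
```

Only the pixels on the intensity edge are used; the flat regions carry no gradient and would not constrain the motion, which is why direct methods select high-gradient pixels.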

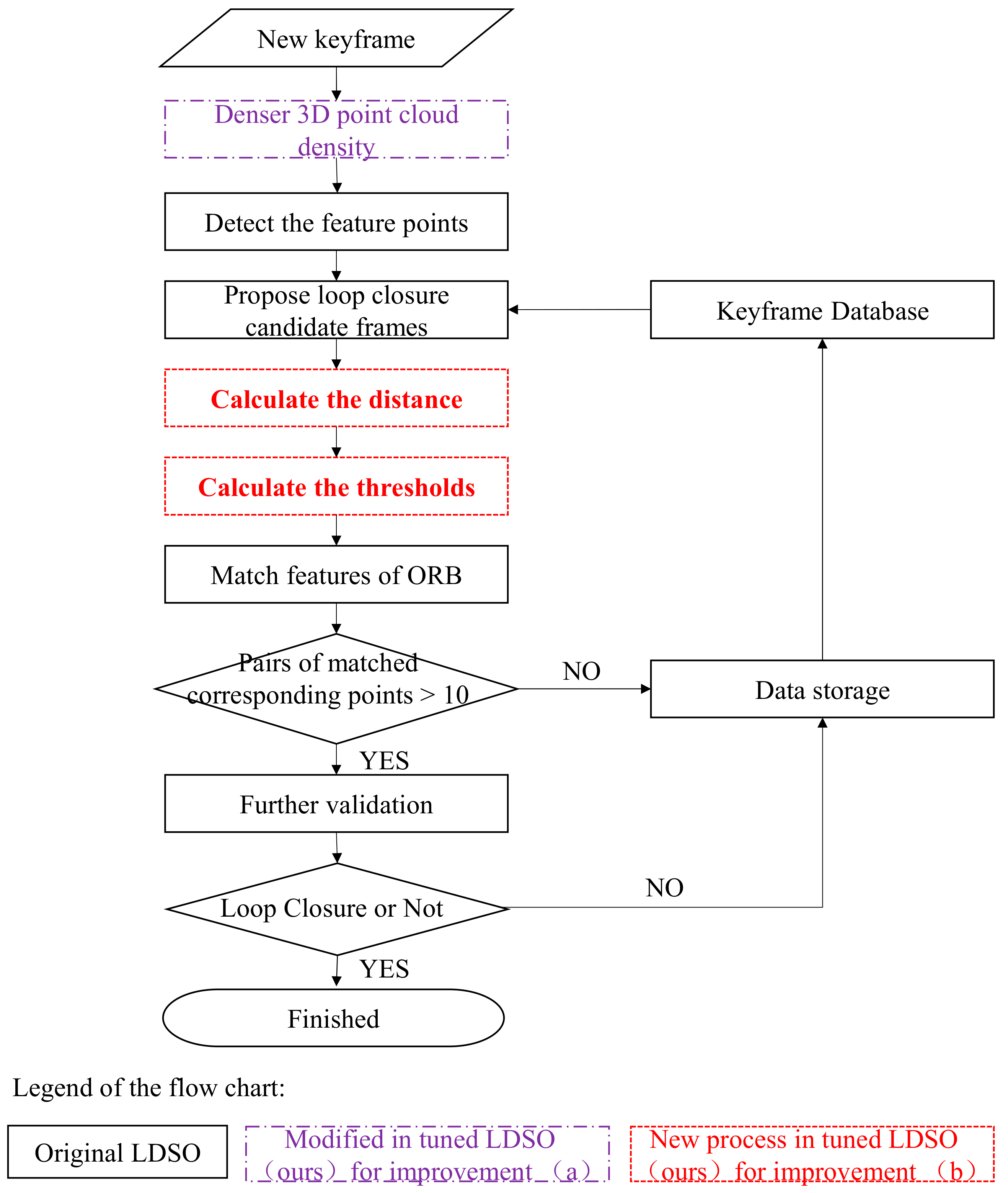
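The sliding-window bookkeeping of Module 2 can be sketched as follows, under simplifying assumptions: keyframes are reduced to 3D camera positions, the limit `max_size=7` mirrors the upper bound of the window stated above, and the outlying keyframe is taken to be the one with the largest mean Euclidean distance to the others. The helper names (`add_keyframe`, `mean_distance`) are hypothetical, the joint photometric optimization is omitted, and the marginalization criterion actually used in DSO/LDSO is more involved [52].

```python
import math

Position = tuple[float, float, float]


def mean_distance(i: int, window: list[Position]) -> float:
    """Average Euclidean distance from keyframe i to the other keyframes in the window."""
    dists = [math.dist(window[i], p) for j, p in enumerate(window) if j != i]
    return sum(dists) / len(dists)


def add_keyframe(window: list[Position], new_kf: Position,
                 database: list[Position], max_size: int = 7) -> None:
    """Insert a keyframe; if the window overflows, marginalize the outlying one.

    The newest keyframe is never a candidate. The marginalized keyframe is
    moved to `database`, where it stays available for loop detection.
    """
    window.append(new_kf)
    # (The joint optimization of poses and points would run here.)
    if len(window) > max_size:
        candidates = range(len(window) - 1)
        outlier = max(candidates, key=lambda i: mean_distance(i, window))
        database.append(window.pop(outlier))


window = [(-20.0, 0.0, 0.0)] + [(float(x), 0.0, 0.0) for x in range(6)]
database: list[Position] = []
add_keyframe(window, (6.0, 0.0, 0.0), database)
print(len(window), database)  # 7 [(-20.0, 0.0, 0.0)]
```

The keyframe at x = -20 is far from the rest of the window, so it is marginalized and moved to the database rather than discarded.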

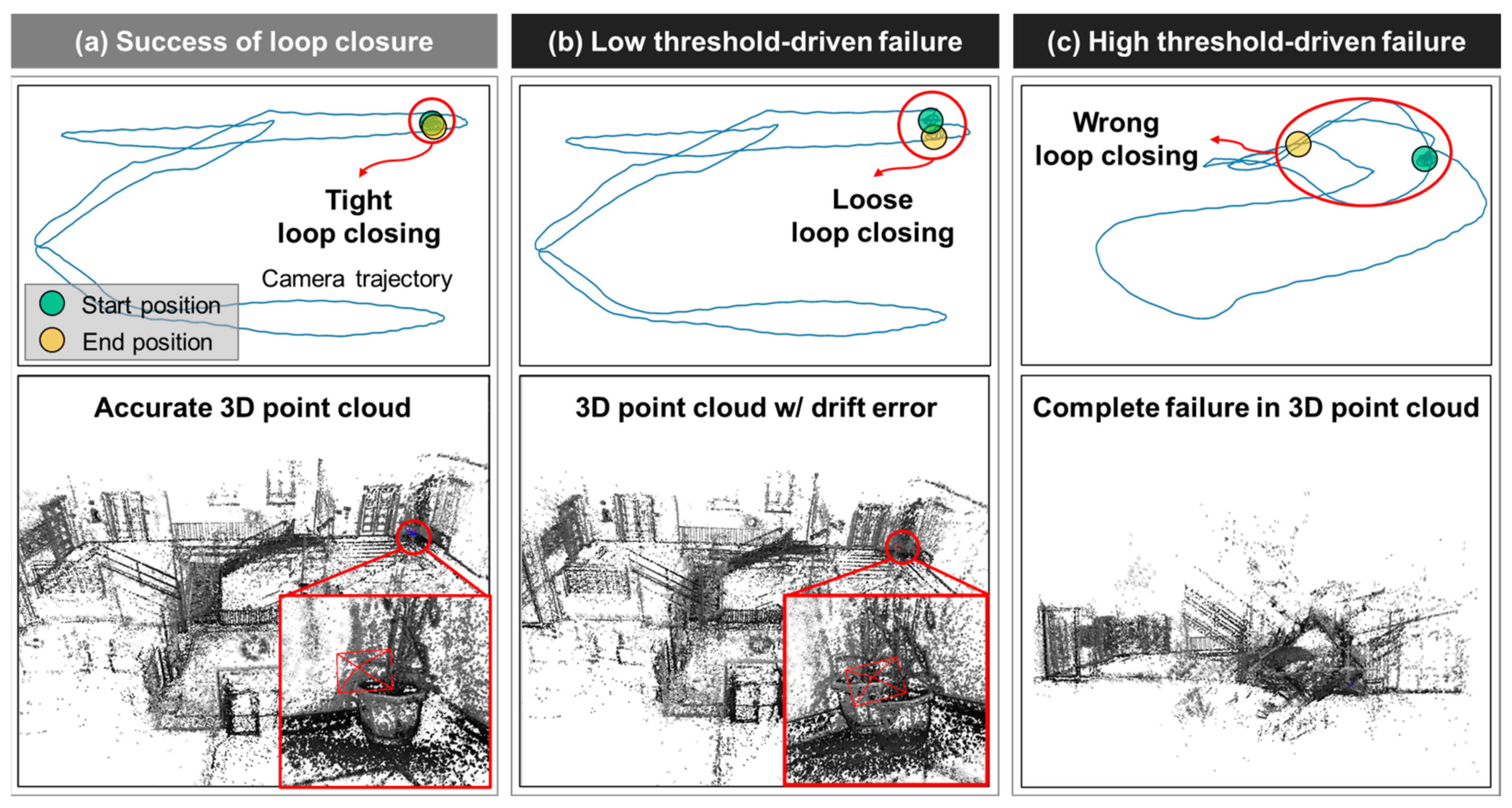
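The loop-candidate query of Module 3 can be illustrated with a toy bag-of-words search. In this hypothetical sketch, each keyframe is already reduced to a list of visual-word IDs (in LDSO these come from ORB descriptors quantized with a vocabulary), words are weighted by TF-IDF, and keyframes are compared with the score 1 − 0.5‖v1 − v2‖₁ on L1-normalized vectors, as used for binary bag-of-words place recognition [55]. The function names and the `min_score` threshold are assumptions, and the sketch skips the temporal and geometric checks that a real system uses to validate a loop.

```python
import math
from collections import Counter
from typing import Optional


def bow_vector(words: list[int], doc_freq: Counter, n_docs: int) -> dict[int, float]:
    """TF-IDF weighted, L1-normalized bag-of-words vector for one keyframe."""
    counts = Counter(words)
    vec = {w: (c / len(words)) * math.log(n_docs / doc_freq[w])
           for w, c in counts.items()}
    norm = sum(vec.values()) or 1.0  # all weights are non-negative
    return {w: v / norm for w, v in vec.items()}


def l1_score(v1: dict[int, float], v2: dict[int, float]) -> float:
    """Similarity in [0, 1] for L1-normalized vectors: 1 - 0.5 * ||v1 - v2||_1."""
    keys = v1.keys() | v2.keys()
    return 1.0 - 0.5 * sum(abs(v1.get(k, 0.0) - v2.get(k, 0.0)) for k in keys)


def best_loop_candidate(query: list[int], database: dict[int, list[int]],
                        min_score: float = 0.3) -> Optional[int]:
    """ID of the most similar stored keyframe, or None if no score reaches min_score."""
    docs = list(database.values()) + [query]
    doc_freq = Counter(w for doc in docs for w in set(doc))  # keyframes containing each word
    n_docs = len(docs)
    q = bow_vector(query, doc_freq, n_docs)
    scores = {kf: l1_score(q, bow_vector(words, doc_freq, n_docs))
              for kf, words in database.items()}
    best = max(scores, key=scores.get, default=None)
    if best is None or scores[best] < min_score:
        return None
    return best


# Keyframe ID -> visual-word IDs (hypothetical values).
keyframe_db = {0: [1, 2, 3, 4], 1: [5, 6, 7, 8], 2: [1, 2, 9, 10]}
print(best_loop_candidate([1, 2, 3, 4], keyframe_db))  # 0
```

A query that shares no words with any stored keyframe scores zero against all of them and returns `None`, i.e., no loop candidate.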

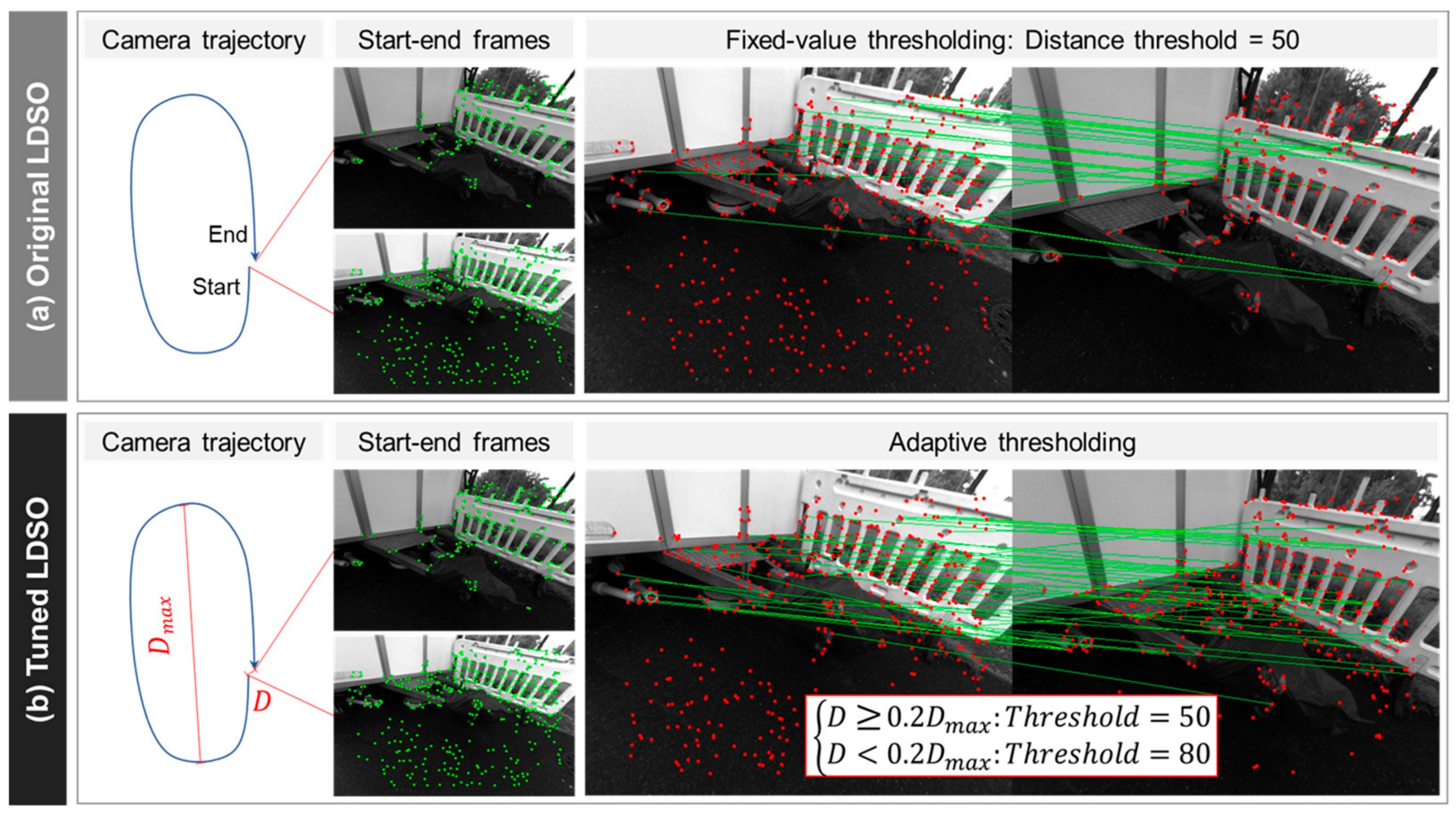
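Once a loop is accepted, graph optimization spreads the accumulated drift over the whole trajectory instead of leaving it at the end. The toy example below shows this effect in one dimension: keyframe positions are linked by odometry edges plus one loop-closure edge, and unit-weight least squares is solved through the normal equations. It is a sketch under strong assumptions (1D positions instead of full poses, equal edge weights, a small dense hand-written solver), and the function names are hypothetical; LDSO performs this step over the keyframe poses using g2o [56].

```python
def solve(matrix: list[list[float]], rhs: list[float]) -> list[float]:
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(a[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (a[r][n] - s) / a[r][r]
    return x


def optimize_pose_graph(odometry: list[float], loop_measurement: float) -> list[float]:
    """Least-squares 1D positions x_0..x_n with x_0 fixed at 0 (removes gauge freedom).

    Edges (unit weight, an assumption): x_{i+1} - x_i = odometry[i] along the
    chain, plus one loop-closure edge x_n - x_0 = loop_measurement.
    """
    n = len(odometry)
    edges = [(i, i + 1, u) for i, u in enumerate(odometry)]
    edges.append((0, n, loop_measurement))
    h = [[0.0] * n for _ in range(n)]  # normal matrix over unknowns x_1..x_n
    g = [0.0] * n
    for i, j, z in edges:
        # Residual x_j - x_i - z has derivative +1 w.r.t. x_j and -1 w.r.t. x_i;
        # x_0 is fixed, so it contributes no column.
        jac: dict[int, float] = {}
        if j > 0:
            jac[j - 1] = 1.0
        if i > 0:
            jac[i - 1] = -1.0
        for p, cp in jac.items():
            g[p] += cp * z
            for q, cq in jac.items():
                h[p][q] += cp * cq
    return [0.0] + solve(h, g)


# Odometry reports four 1 m steps (4 m in total), but the loop closure
# measures the last keyframe to be only 3 m from the first.
poses = optimize_pose_graph([1.0, 1.0, 1.0, 1.0], 3.0)
print([round(x, 6) for x in poses])  # [0.0, 0.8, 1.6, 2.4, 3.2]
```

With equal weights, the 1 m discrepancy is distributed evenly, 0.2 m per edge, across all five edges of the loop.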

3.3. Enhancing the Original LDSO for Denser 3D Point Cloud and More Robust Loop Closure

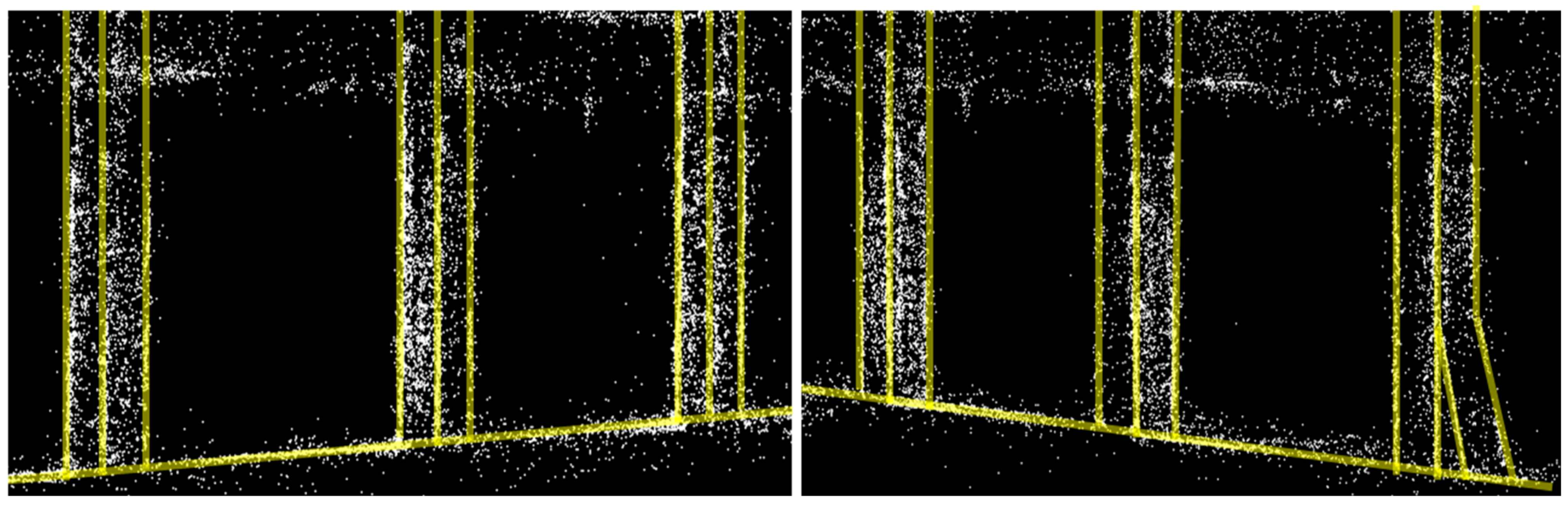

3.3.1. Denser 3D Point Cloud

3.3.2. More Robust Loop Closure

4. Field Test and Performance Evaluation

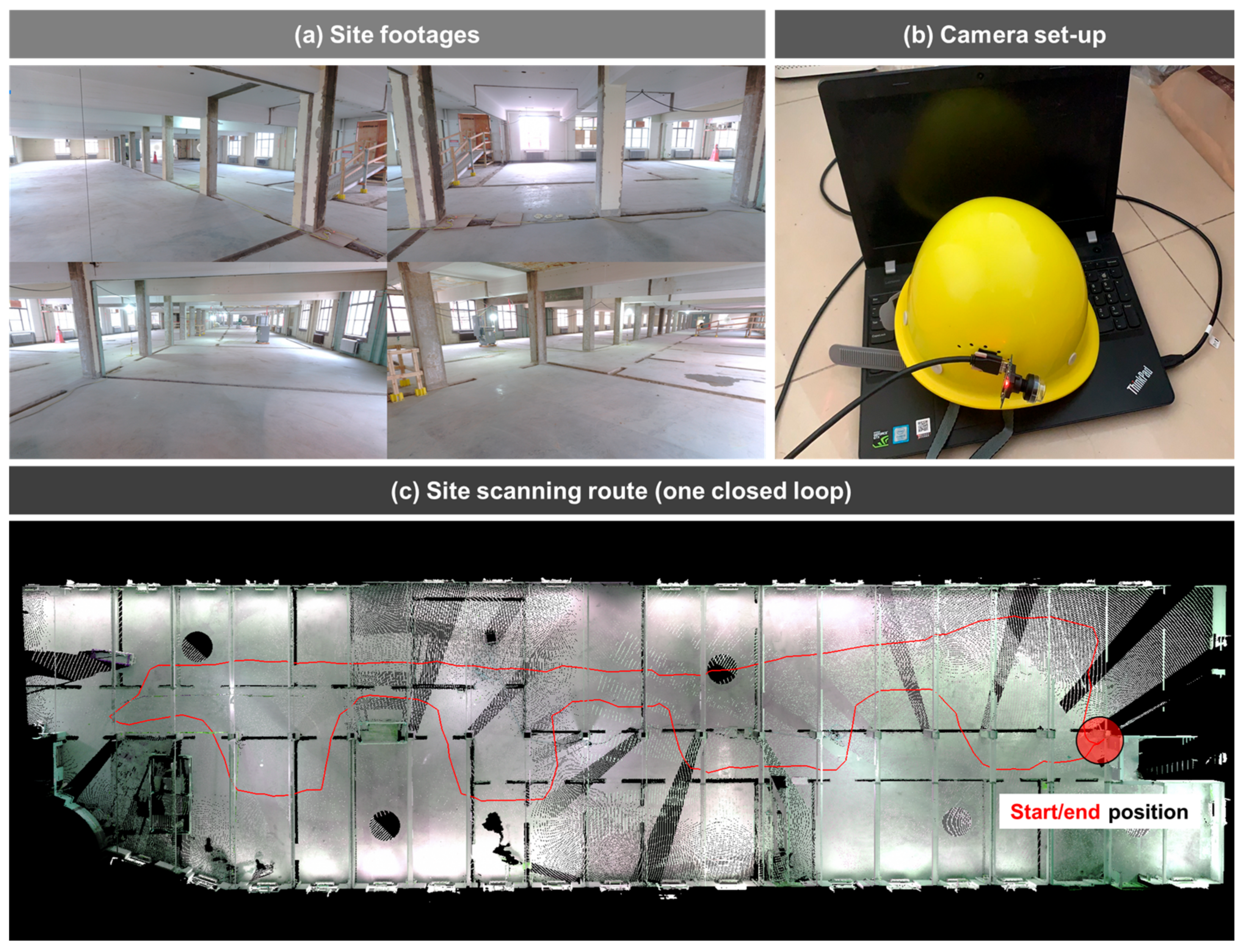

4.1. Test Setting

4.2. Evaluation Criteria

4.3. Evaluation Metrics

- The Average # of Points Per Unit Surface Area (APS, EA/m²) for point density at object scale: We measured the APS of a reconstructed object (e.g., a column) to assess its point density. The point density per unit surface area (m²) is first calculated for each surface of the object; the object’s APS is then the average of these point density values across all of its surfaces (Equation (3)):

  $$\mathrm{APS} = \frac{1}{m}\sum_{i=1}^{m}\frac{n_i}{A_i} \tag{3}$$

  where $n_i$ = the # of points on the ith surface; $A_i$ = the area of the ith surface (unit: m²); m = the total # of surfaces.

- Hausdorff Distance (cm) for cloud-to-cloud distance at site scale: To evaluate the overall discrepancy of the reconstructed 3D point cloud from the ground truth model, we measured the Hausdorff Distance between the two, a widely applied metric for the distance between two 3D point clouds [21,33]. Each point in the ground truth model is matched to its nearest point in the reconstructed 3D point cloud, and the distance between the two is measured. The Hausdorff Distance is the average of all these nearest distances and represents the overall discrepancy of the reconstructed 3D point cloud from its ground truth (Equation (4)):

  $$\mathrm{HD} = \frac{1}{m}\sum_{i=1}^{m}\min_{q \in Q}\lVert p_i - q \rVert \tag{4}$$

  where $p_i$ = the ith point of the ground truth 3D point cloud; $Q$ = the set of points of the reconstructed 3D point cloud; m = the total # of points of the ground truth 3D point cloud. This is an averaged, one-directional form of the Hausdorff distance; the classical Hausdorff distance instead takes the maximum of the nearest distances, computed in both directions.

- Frames Per Second (FPS, f/s) for overall running speed: A camera streaming at 30 FPS delivers one digital image to the computer every 0.033 s. Near real-time 3D reconstruction therefore requires a processing rate of around 30 FPS. We measured the tuned LDSO’s FPS during the field test and compared it with this real-time standard to demonstrate its potential for near real-time 3D reconstruction of a construction site.
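The three metrics can be computed as in the sketch below. Equations (3) and (4) are implemented directly, with a brute-force nearest-neighbour search that is only practical for small clouds (a spatial index such as a k-d tree would be needed at site scale), and `directed_hausdorff` is included only to contrast the averaged metric with the classical maximum-based definition. The function names and the timing harness in `measure_fps` are hypothetical, not the authors' evaluation code.

```python
import math
import time
from typing import Callable, Sequence

Point = tuple[float, float, float]


def aps(points_per_surface: Sequence[int], areas_m2: Sequence[float]) -> float:
    """Equation (3): mean over the m surfaces of (points on surface i) / (area of surface i)."""
    if len(points_per_surface) != len(areas_m2) or not areas_m2:
        raise ValueError("need one area per surface and at least one surface")
    return sum(n / a for n, a in zip(points_per_surface, areas_m2)) / len(areas_m2)


def nearest_distances(ground_truth: Sequence[Point],
                      reconstructed: Sequence[Point]) -> list[float]:
    """For each ground-truth point, the distance to its nearest reconstructed point."""
    return [min(math.dist(p, q) for q in reconstructed) for p in ground_truth]


def mean_hausdorff(ground_truth: Sequence[Point], reconstructed: Sequence[Point]) -> float:
    """Equation (4): average of the nearest distances (the metric described in the text)."""
    d = nearest_distances(ground_truth, reconstructed)
    return sum(d) / len(d)


def directed_hausdorff(ground_truth: Sequence[Point], reconstructed: Sequence[Point]) -> float:
    """Classical one-directional Hausdorff distance: the maximum nearest distance."""
    return max(nearest_distances(ground_truth, reconstructed))


def measure_fps(process_frame: Callable[[object], None], frames: Sequence[object]) -> float:
    """Frames processed per second of wall-clock time."""
    start = time.perf_counter()
    for frame in frames:
        process_frame(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")


print(aps([700, 1500], [1.0, 1.5]))  # 850.0
gt = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0)]      # coordinates in cm
recon = [(0.0, 0.0, 1.0), (100.0, 0.0, 3.0)]
print(mean_hausdorff(gt, recon), directed_hausdorff(gt, recon))  # 2.0 3.0
```

The averaged metric reports the typical discrepancy (2 cm here), while the maximum-based one is governed by the single worst point (3 cm).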

4.4. Object-Scale Evaluation and Result

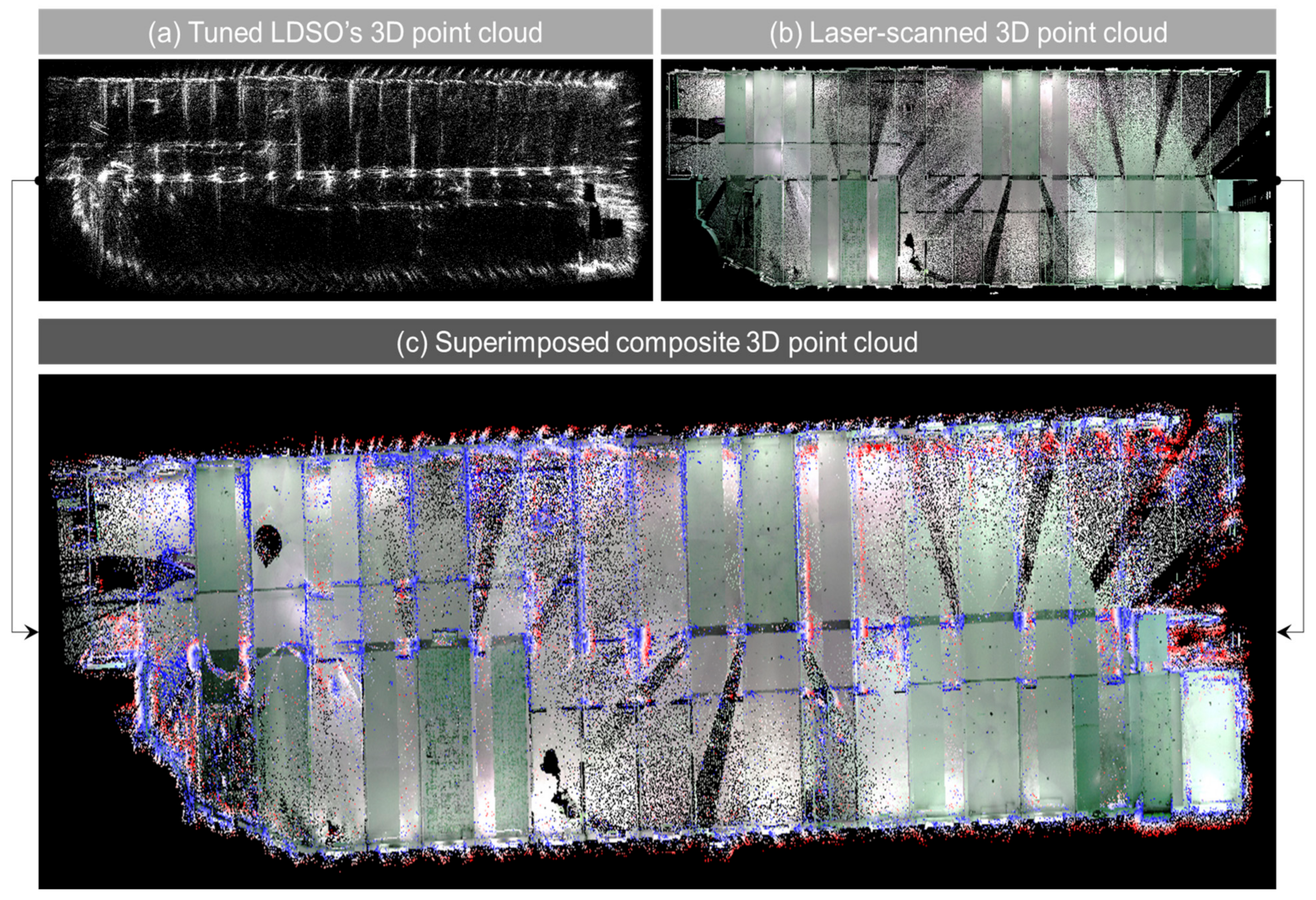

4.5. Site-Scale Evaluation and Result

4.6. Overall Running Speed

5. Discussion: Near Real-Time 3D Reconstruction for Time-Critical Construction Monitoring Tasks

5.1. Online 3D Reconstruction: Simultaneous Scanning and Visualization

5.2. Near Real-Time 3D Reconstruction for Regular and Timely Monitoring

5.3. Improvement Point toward Real Field Applications: Real-Time Data Transmission

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Brilakis, I.; Fathi, H.; Rashidi, A. Progressive 3D reconstruction of infrastructure with videogrammetry. Autom. Constr. 2011, 20, 884–895.

2. Ma, Z.; Liu, S. A review of 3D reconstruction techniques in civil engineering and their applications. Adv. Eng. Inform. 2018, 37, 163–174.

3. Fathi, H.; Dai, F.; Lourakis, M. Automated as-built 3D reconstruction of civil infrastructure using computer vision: Achievements, opportunities, and challenges. Adv. Eng. Inform. 2015, 29, 149–161.

4. McCoy, A.P.; Golparvar-Fard, M.; Rigby, E.T. Reducing Barriers to Remote Project Planning: Comparison of Low-Tech Site Capture Approaches and Image-Based 3D Reconstruction. J. Arch. Eng. 2014, 20, 05013002.

5. Sung, C.; Kim, P.Y. 3D terrain reconstruction of construction sites using a stereo camera. Autom. Constr. 2016, 64, 65–77.

6. Siebert, S.; Teizer, J. Mobile 3D mapping for surveying earthwork projects using an Unmanned Aerial Vehicle (UAV) system. Autom. Constr. 2014, 41, 1–14.

7. Pučko, Z.; Šuman, N.; Rebolj, D. Automated continuous construction progress monitoring using multiple workplace real time 3D scans. Adv. Eng. Inform. 2018, 38, 27–40.

8. Han, K.; Degol, J.; Golparvar-Fard, M. Geometry- and Appearance-Based Reasoning of Construction Progress Monitoring. J. Constr. Eng. Manag. 2018, 144, 04017110.

9. Puri, N.; Turkan, Y. Bridge construction progress monitoring using lidar and 4D design models. Autom. Constr. 2020, 109, 102961.

10. Puri, Y.; Nisha, T. A Review of Technology Supplemented Progress Monitoring Techniques for Transportation Construction Projects. In Construction Research Congress 2018; American Society of Civil Engineers: Reston, VA, USA, 2018; pp. 512–521.

11. Golparvar-Fard, M.; Peña-Mora, F.; Savarese, S. D4AR-A 4-Dimensional Augmented Reality Model for Automating Construction Progress Monitoring Data Collection, Processing and Communication. J. Inf. Technol. Constr. 2009, 14, 129–153.

12. Han, K.K.; Golparvar-Fard, M. Appearance-based material classification for monitoring of operation-level construction progress using 4D BIM and site photologs. Autom. Constr. 2015, 53, 44–57.

13. Lin, J.J.; Lee, J.Y.; Golparvar-Fard, M. Exploring the Potential of Image-Based 3D Geometry and Appearance Reasoning for Automated Construction Progress Monitoring. In Computing in Civil Engineering 2019; American Society of Civil Engineers: Reston, VA, USA, 2019; pp. 162–170.

14. Gholizadeh, P.; Behzad, E.; Memarian, B. Monitoring Physical Progress of Indoor Buildings Using Mobile and Terrestrial Point Clouds. In Construction Research Congress 2018; ASCE: Reston, VA, USA, 2018; pp. 602–611.

15. Tang, P.; Huber, D.; Akinci, B. Characterization of Laser Scanners and Algorithms for Detecting Flatness Defects on Concrete Surfaces. J. Comput. Civ. Eng. 2011, 25, 31–42.

16. Olsen, M.J.; Kuester, F.; Chang, B.J.; Hutchinson, T.C. Terrestrial Laser Scanning-Based Structural Damage Assessment. J. Comput. Civ. Eng. 2010, 24, 264–272.

17. Rabah, M.; Elhattab, A.; Fayad, A. Automatic concrete cracks detection and mapping of terrestrial laser scan data. NRIAG J. Astron. Geophys. 2013, 2, 250–255.

18. Khaloo, A.; Lattanzi, D.; Cunningham, K.; Dell’Andrea, R.; Riley, M. Unmanned aerial vehicle inspection of the Placer River Trail Bridge through image-based 3D modelling. Struct. Infrastruct. Eng. 2018, 14, 124–136.

19. Liu, Y.-F.; Cho, S.; Spencer, B.F.; Fan, J.-S. Concrete Crack Assessment Using Digital Image Processing and 3D Scene Reconstruction. J. Comput. Civ. Eng. 2016, 30, 04014124.

20. Torok, M.M.; Golparvar-Fard, M.; Kochersberger, K.B. Image-Based Automated 3D Crack Detection for Post-disaster Building Assessment. J. Comput. Civ. Eng. 2014, 28, A4014004.

21. Ghahremani, K.; Khaloo, A.; Mohamadi, S.; Lattanzi, D. Damage Detection and Finite-Element Model Updating of Structural Components through Point Cloud Analysis. J. Aerosp. Eng. 2018, 31, 04018068.

22. Isailović, D.; Stojanovic, V.; Trapp, M.; Richter, R.; Hajdin, R.; Döllner, J. Bridge damage: Detection, IFC-based semantic enrichment and visualization. Autom. Constr. 2020, 112, 103088.

23. Xiong, X.; Adan, A.; Akinci, B.; Huber, D. Automatic creation of semantically rich 3D building models from laser scanner data. Autom. Constr. 2013, 31, 325–337.

24. Valero, E.; Adán, A.; Bosché, F. Semantic 3D Reconstruction of Furnished Interiors Using Laser Scanning and RFID Technology. J. Comput. Civ. Eng. 2016, 30, 04015053.

25. Uslu, B.; Golparvar-Fard, M.; de la Garza, J.M. Image-Based 3D Reconstruction and Recognition for Enhanced Highway Condition Assessment. Comput. Civ. Eng. 2011, 67–76.

26. Xuehui, A.; Li, Z.; Zuguang, L.; Chengzhi, W.; Pengfei, L.; Zhiwei, L. Dataset and benchmark for detecting moving objects in construction sites. Autom. Constr. 2021, 122, 103482.

27. Fang, Y.; Cho, Y.K.; Chen, J. A framework for real-time pro-active safety assistance for mobile crane lifting operations. Autom. Constr. 2016, 72, 367–379.

28. Nahangi, M.; Yeung, J.; Haas, C.T.; Walbridge, S.; West, J. Automated assembly discrepancy feedback using 3D imaging and forward kinematics. Autom. Constr. 2015, 56, 36–46.

29. Rodríguez-Gonzálvez, P.; Rodríguez-Martín, M.; Ramos, L.F.; González-Aguilera, D. 3D reconstruction methods and quality assessment for visual inspection of welds. Autom. Constr. 2017, 79, 49–58.

30. Wang, Q. Automatic checks from 3D point cloud data for safety regulation compliance for scaffold work platforms. Autom. Constr. 2019, 104, 38–51.

31. Jog, G.M.; Koch, C.; Golparvar-Fard, M.; Brilakis, I. Pothole Properties Measurement through Visual 2D Recognition and 3D Reconstruction. Comput. Civ. Eng. 2012, 2012, 553–560.

32. Fard, M.G.; Pena-Mora, F. Application of visualization techniques for construction progress monitoring. In Proceedings of the International Workshop on Computing in Civil Engineering 2007, Pittsburgh, PA, USA, 24–27 July 2007; pp. 216–223.

33. Khaloo, A.; Lattanzi, D. Hierarchical Dense Structure-from-Motion Reconstructions for Infrastructure Condition Assessment. J. Comput. Civ. Eng. 2017, 31, 04016047.

34. Popescu, C.; Täljsten, B.; Blanksvärd, T.; Elfgren, L. 3D reconstruction of existing concrete bridges using optical methods. Struct. Infrastruct. Eng. 2019, 15, 912–924.

35. Rashidi, A.; Dai, F.; Brilakis, I.; Vela, P. Optimized selection of key frames for monocular videogrammetric surveying of civil infrastructure. Adv. Eng. Inform. 2013, 27, 270–282.

36. Dai, F.; Rashidi, A.; Brilakis, I.; Vela, P. Comparison of Image-Based and Time-of-Flight-Based Technologies for Three-Dimensional Reconstruction of Infrastructure. J. Constr. Eng. Manag. 2013, 139, 69–79.

37. Gao, X.; Wang, R.; Demmel, N.; Cremers, D. LDSO: Direct Sparse Odometry with Loop Closure. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 2198–2204.

38. Jaselskis, E.J.; Gao, Z.; Walters, R.C. Improving Transportation Projects Using Laser Scanning. J. Constr. Eng. Manag. 2005, 131, 377–384.

39. Oskouie, P.; Becerik-Gerber, B.; Soibelman, L. Automated measurement of highway retaining wall displacements using terrestrial laser scanners. Autom. Constr. 2016, 65, 86–101.

40. Zhu, Z.; Brilakis, I. Comparison of Civil Infrastructure Optical-Based Spatial Data Acquisition Techniques. In Computing in Civil Engineering (2007); ASCE: Reston, VA, USA, 2007; pp. 737–744.

41. Golparvar-Fard, M.; Bohn, J.; Teizer, J.; Savarese, S.; Peña-Mora, F. Evaluation of image-based modeling and laser scanning accuracy for emerging automated performance monitoring techniques. Autom. Constr. 2011, 20, 1143–1155.

42. Schonberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

43. Saputra, M.R.U.; Markham, A.; Trigoni, N. Visual SLAM and Structure from Motion in Dynamic Environments: A survey. ACM Comput. Surv. 2018, 51, 1–36.

44. Maalek, R.; Ruwanpura, J.; Ranaweera, K. Evaluation of the State-of-the-Art Automated Construction Progress Monitoring and Control Systems. In Construction Research Congress 2014; American Society of Civil Engineers: Reston, VA, USA, 2014; pp. 1023–1032.

45. Tang, P.; Huber, D.; Akinci, B.; Lipman, R.; Lytle, A. Automatic reconstruction of as-built building information models from laser-scanned point clouds: A review of related techniques. Autom. Constr. 2010, 19, 829–843.

46. Choi, J. Detecting and Classifying Cranes Using Camera-Equipped UAVs for Monitoring Crane-Related Safety Hazards. In Computing in Civil Engineering 2017; ASCE: Reston, VA, USA, 2017; pp. 442–449.

47. Kim, H.; Kim, K.; Kim, H. Data-driven scene parsing method for recognizing construction site objects in the whole image. Autom. Constr. 2016, 71, 271–282.

48. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

49. Agarwal, S.; Snavely, N.; Simon, I.; Seitz, S.; Szeliski, R. Building Rome in a Day. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 27 September–4 October 2009; pp. 72–79.

50. Gherardi, R.; Farenzena, M.; Fusiello, A. Improving the Efficiency of Hierarchical Structure-and-Motion. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 1594–1600.

51. Crandall, D.; Owens, A.; Snavely, N.; Huttenlocher, D. Discrete-continuous optimization for large-scale structure from motion. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 3001–3008.

52. Engel, J.; Koltun, V.; Cremers, D. Direct Sparse Odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 611–625.

53. Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

54. Rosten, E.; Drummond, T. Machine Learning for High-Speed Corner Detection. In Proceedings of the Computer Vision–ECCV 2006: 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 430–443.

55. Gálvez-López, D.; Tardos, J.D. Bags of Binary Words for Fast Place Recognition in Image Sequences. IEEE Trans. Robot. 2012, 28, 1188–1197.

56. Kümmerle, R.; Grisetti, G.; Strasdat, H.; Konolige, K.; Burgard, W. g2o: A General Framework for Graph Optimization. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 3607–3613.

57. Rebolj, D.; Pučko, Z.; Babič, N.Č.; Bizjak, M.; Mongus, D. Point cloud quality requirements for Scan-vs-BIM based automated construction progress monitoring. Autom. Constr. 2017, 84, 323–334.

58. Engel, J.; Usenko, V.; Cremers, D. A Photometrically Calibrated Benchmark For Monocular Visual Odometry. arXiv 2016, arXiv:1607.02555.

59. IDS Products. Available online: https://en.ids-imaging.com/our-corporate-culture.html (accessed on 9 November 2022).

60. FARO. FARO® Focus Laser Scanners. Available online: https://www.faro.com/products/construction-bim/faro-focus/ (accessed on 9 November 2022).

| Device | Data Processing Approach | Applications | Limitations |
|---|---|---|---|
| Terrestrial LiDAR | Reconstruct 3D digital model from 3D point cloud | | |
| Camera | SfM-based algorithm to extract 3D point cloud | | |
| | VSLAM-based algorithm to extract 3D point cloud | | |

| Input Video | Reconstructed Points (Original LDSO) | Reconstructed Points (Tuned LDSO) | Percentage Increase (%) | Input Video | Reconstructed Points (Original LDSO) | Reconstructed Points (Tuned LDSO) | Percentage Increase (%) |
|---|---|---|---|---|---|---|---|
| 1 | 415,115 | 658,739 | 58.7% | 26 | 175,279 | 271,803 | 55.1% |
| 2 | 317,696 | 495,536 | 56.0% | 27 | 568,437 | 923,535 | 62.5% |
| 3 | 494,039 | 784,489 | 58.8% | 28 | 431,847 | 667,609 | 54.6% |
| 4 | 604,279 | 957,752 | 58.5% | 29 | 1,029,730 | 1,704,825 | 65.6% |
| 5 | 537,430 | 869,660 | 61.8% | 30 | 368,949 | 610,472 | 65.5% |
| 6 | 467,547 | 749,573 | 60.3% | 31 | 658,455 | 1,102,686 | 67.5% |
| 7 | 330,907 | 525,820 | 58.9% | 32 | 616,615 | 1,032,057 | 67.4% |
| 8 | 397,017 | 623,610 | 57.1% | 33 | 557,479 | 918,824 | 64.8% |
| 9 | 207,816 | 325,823 | 56.8% | 34 | 1,028,383 | 1,711,655 | 66.4% |
| 10 | 190,855 | 300,680 | 57.5% | 35 | 140,482 | 214,230 | 52.5% |
| 11 | 273,686 | 433,063 | 58.2% | 36 | 154,730 | 238,362 | 54.1% |
| 12 | 374,671 | 586,421 | 56.5% | 37 | 282,092 | 439,258 | 55.7% |
| 13 | 270,700 | 428,325 | 58.2% | 38 | 349,997 | 541,363 | 54.7% |
| 14 | 221,763 | 353,276 | 59.3% | 39 | 368,639 | 573,842 | 55.7% |
| 15 | 466,194 | 759,135 | 62.8% | 40 | 379,725 | 582,686 | 53.4% |
| 16 | 290,473 | 467,898 | 61.1% | 41 | 464,620 | 743,132 | 59.9% |
| 17 | 419,206 | 677,186 | 61.5% | 42 | 736,967 | 1,194,749 | 62.1% |
| 18 | 512,087 | 809,279 | 58.0% | 43 | 480,989 | 795,431 | 65.4% |
| 19 | 1,066,310 | 1,731,553 | 62.4% | 44 | 305,705 | 505,551 | 65.4% |
| 20 | 977,046 | 1,599,733 | 63.7% | 45 | 631,486 | 1,036,933 | 64.2% |
| 21 | 1,305,839 | 2,171,135 | 66.3% | 46 | 677,427 | 1,115,721 | 64.7% |
| 22 | 1,340,949 | 2,269,298 | 69.2% | 47 | 600,759 | 1,007,490 | 67.7% |
| 23 | 523,515 | 867,994 | 65.8% | 48 | 605,129 | 1,017,770 | 68.2% |
| 24 | 526,644 | 860,967 | 63.5% | 49 | 547,252 | 878,302 | 60.5% |
| 25 | 778,354 | 1,272,857 | 63.5% | 50 | 811,535 | 1,336,625 | 64.7% |
| Average percentage increase | 61.05% | | | | | | |
| Standard deviation of percentage increase | 4.40% | | | | | | |
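Each percentage in the table follows from the two point counts as (tuned − original) / original × 100. The short check below recomputes the first three rows from the table's own numbers; the function name is illustrative.

```python
def percentage_increase(original: int, tuned: int) -> float:
    """Relative gain of the tuned LDSO over the original LDSO, in percent."""
    return (tuned - original) / original * 100.0


# Input videos 1-3 from the table: (original LDSO points, tuned LDSO points).
rows = [(415_115, 658_739), (317_696, 495_536), (494_039, 784_489)]
print([round(percentage_increase(o, t), 1) for o, t in rows])  # [58.7, 56.0, 58.8]
```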

| LDSO | Total Number of Videos | Number of Successful Loop Closures | Average Success Rate |
|---|---|---|---|
| Original | 50 | 7 | 14% |
| Tuned | 50 | 37 | 74% |

| Column # | Aspect Ratio Error, XY (ARE, %) | Aspect Ratio Error, XZ (ARE, %) | Aspect Ratio Error, YZ (ARE, %) | Average # of Points/Unit Surface Area (APS, EA/m²) |
|---|---|---|---|---|
| 1 | 0.71 | 4.16 | 4.9 | 722.72 |
| 2 | 1.87 | 2.76 | 0.83 | 1514.36 |
| 3 | 0.32 | 0.57 | 0.89 | 1801.47 |
| 4 | 0.15 | 3.4 | 3.25 | 687.45 |
| 5 | 1.64 | 1.46 | 0.16 | 2050.04 |
| 6 | 1.14 | 1.94 | 0.82 | 1475.80 |
| 7 | 1.55 | 7.78 | 9.45 | 1027.89 |
| 8 | 2.22 | 0.04 | 2.18 | 2367.51 |
| 9 | 0.41 | 0.18 | 0.6 | 1273.17 |
| 10 | 3.05 | 0.4 | 3.46 | 696.47 |
| 11 | 3.72 | 2.95 | 0.88 | 1415.09 |
| 12 | 1.3 | 0.66 | 0.65 | 1242.82 |
| 13 | 2.04 | 4.8 | 2.86 | 896.63 |
| 14 | 3.23 | 2.12 | 5.42 | 1205.09 |
| 15 | 0.03 | 2.81 | 2.84 | 335.42 |
| Average | 1.56 | 2.40 | 2.61 | 1286.82 |
| Standard deviation | 1.11 | 2.02 | 2.42 | 529.82 |
| Coefficient of variation | 0.71 | 0.84 | 0.92 | 0.41 |
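The summary rows can be reproduced from the per-column values. For the XY column, the reported average, standard deviation, and coefficient of variation are matched when the standard deviation is taken in its population form (dividing by n rather than n − 1), as the check below shows for that column only.

```python
import statistics

# ARE in the XY plane for columns 1-15, copied from the table above (%).
are_xy = [0.71, 1.87, 0.32, 0.15, 1.64, 1.14, 1.55, 2.22,
          0.41, 3.05, 3.72, 1.30, 2.04, 3.23, 0.03]

mean = statistics.fmean(are_xy)
sd = statistics.pstdev(are_xy)  # population standard deviation (divides by n)
cv = sd / mean                  # coefficient of variation
print(round(mean, 2), round(sd, 2), round(cv, 2))  # 1.56 1.11 0.71
```

Using the sample standard deviation (`statistics.stdev`) instead would give about 1.15 for this column.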

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Z.; Kim, D.; Lee, S.; Zhou, L.; An, X.; Liu, M. Near Real-Time 3D Reconstruction and Quality 3D Point Cloud for Time-Critical Construction Monitoring. Buildings 2023, 13, 464. https://doi.org/10.3390/buildings13020464
