Machine Learning Method Based on Symbiotic Organism Search Algorithm for Thermal Load Prediction in Buildings

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Provision

2.2. Assessment Formulas
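As a minimal sketch, assuming the standard definitions of the accuracy indicators listed in the Nomenclature (MAE, RMSE, MAPE, and the Pearson correlation R), the metrics could be computed as below. The function names and the sample values are illustrative assumptions, not taken from the study.

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error."""
    return float(np.mean(np.abs(y_true - y_pred)))


def rmse(y_true, y_pred):
    """Root-mean-square error."""
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (y_true must be non-zero)."""
    return float(100.0 * np.mean(np.abs((y_true - y_pred) / y_true)))


def pearson_r(y_true, y_pred):
    """Pearson correlation between observed and predicted values."""
    return float(np.corrcoef(y_true, y_pred)[0, 1])


# Hypothetical observed and predicted loads, for illustration only.
y_obs = np.array([10.0, 20.0, 30.0, 40.0])
y_hat = np.array([12.0, 18.0, 33.0, 39.0])

print(f"MAE  = {mae(y_obs, y_hat):.3f}")
print(f"RMSE = {rmse(y_obs, y_hat):.3f}")
print(f"MAPE = {mape(y_obs, y_hat):.3f} %")
print(f"R    = {pearson_r(y_obs, y_hat):.3f}")
```

Lower MAE, RMSE, and MAPE indicate smaller errors, while R closer to 1 indicates stronger agreement between observed and predicted loads.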

2.3. Methodology

2.4. Hybridization

- (a) Selection of an appropriate ANN structure;

- (b) Introduction of the determined ANN to the intended algorithm as the problem function to be optimized;

- (c) Exposure of the training data to the hybrid model;

- (d) Selection of proper parameters for the optimization algorithm (cost function, population size (NP), and number of iterations (NIter));

- (e) Running the model and saving the required results.
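The five steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a one-hidden-layer tanh network, RMSE as the cost function, weights bounded to [-1, 1], synthetic data in place of the building dataset, and much smaller NP and NIter than the values reported for the study. The three SOS phases (mutualism, commensalism, parasitism) follow the general scheme of Cheng and Prayogo [55]; all function names are hypothetical.

```python
import numpy as np


def predict(w, X, n_hid):
    """Forward pass of a one-hidden-layer MLP whose parameters are the flat vector w."""
    n_in = X.shape[1]
    i = n_in * n_hid
    W1 = w[:i].reshape(n_in, n_hid)      # input-to-hidden weights
    b1 = w[i:i + n_hid]                  # hidden biases
    W2 = w[i + n_hid:i + 2 * n_hid]      # hidden-to-output weights
    b2 = w[-1]                           # output bias
    return np.tanh(X @ W1 + b1) @ W2 + b2


def rmse_cost(w, X, y, n_hid):
    """Step (b): the ANN training error is the function the optimizer minimizes."""
    return float(np.sqrt(np.mean((predict(w, X, n_hid) - y) ** 2)))


def sos_train(X, y, n_hid=6, n_pop=20, n_iter=50, bound=1.0, seed=0):
    """Steps (c)-(e): search the ANN weights with a basic SOS loop."""
    rng = np.random.default_rng(seed)
    dim = X.shape[1] * n_hid + 2 * n_hid + 1
    pop = rng.uniform(-bound, bound, (n_pop, dim))
    cost = np.array([rmse_cost(p, X, y, n_hid) for p in pop])
    history = [float(cost.min())]

    def try_replace(k, cand):
        # Greedy selection: keep the candidate only if it lowers the cost.
        cand = np.clip(cand, -bound, bound)
        c = rmse_cost(cand, X, y, n_hid)
        if c < cost[k]:
            pop[k], cost[k] = cand, c

    def other(i):
        # Random index different from i.
        j = int(rng.integers(n_pop - 1))
        return j + (j >= i)

    for _ in range(n_iter):
        for i in range(n_pop):
            best = pop[np.argmin(cost)].copy()
            # Mutualism: i and j both move toward the best organism.
            j = other(i)
            mutual = (pop[i] + pop[j]) / 2
            bf1, bf2 = rng.integers(1, 3, size=2)   # benefit factors in {1, 2}
            new_i = pop[i] + rng.random(dim) * (best - mutual * bf1)
            new_j = pop[j] + rng.random(dim) * (best - mutual * bf2)
            try_replace(i, new_i)
            try_replace(j, new_j)
            # Commensalism: only i benefits from its interaction with j.
            j = other(i)
            try_replace(i, pop[i] + rng.uniform(-1, 1, dim) * (best - pop[j]))
            # Parasitism: a randomly modified copy of i tries to displace j.
            j = other(i)
            parasite = pop[i].copy()
            mask = rng.random(dim) < 0.5
            parasite[mask] = rng.uniform(-bound, bound, int(mask.sum()))
            try_replace(j, parasite)
        history.append(float(cost.min()))
    return pop[np.argmin(cost)].copy(), history


# Synthetic stand-in data: 8 inputs (in place of CR ... DSG) and one target (in place of HL).
rng = np.random.default_rng(1)
X = rng.random((100, 8))
y = X @ rng.random(8) + 0.1 * np.sin(3 * X[:, 0])

w, history = sos_train(X[:80], y[:80])          # step (a): n_hid=6 is an arbitrary choice
print(f"training RMSE: {history[0]:.3f} -> {history[-1]:.3f}")
print(f"testing RMSE:  {rmse_cost(w, X[80:], y[80:], 6):.3f}")
```

Because every phase uses greedy selection, the best training cost never increases from one iteration to the next; the saved `history` corresponds to the convergence record mentioned in step (e).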

3. Results and Discussion

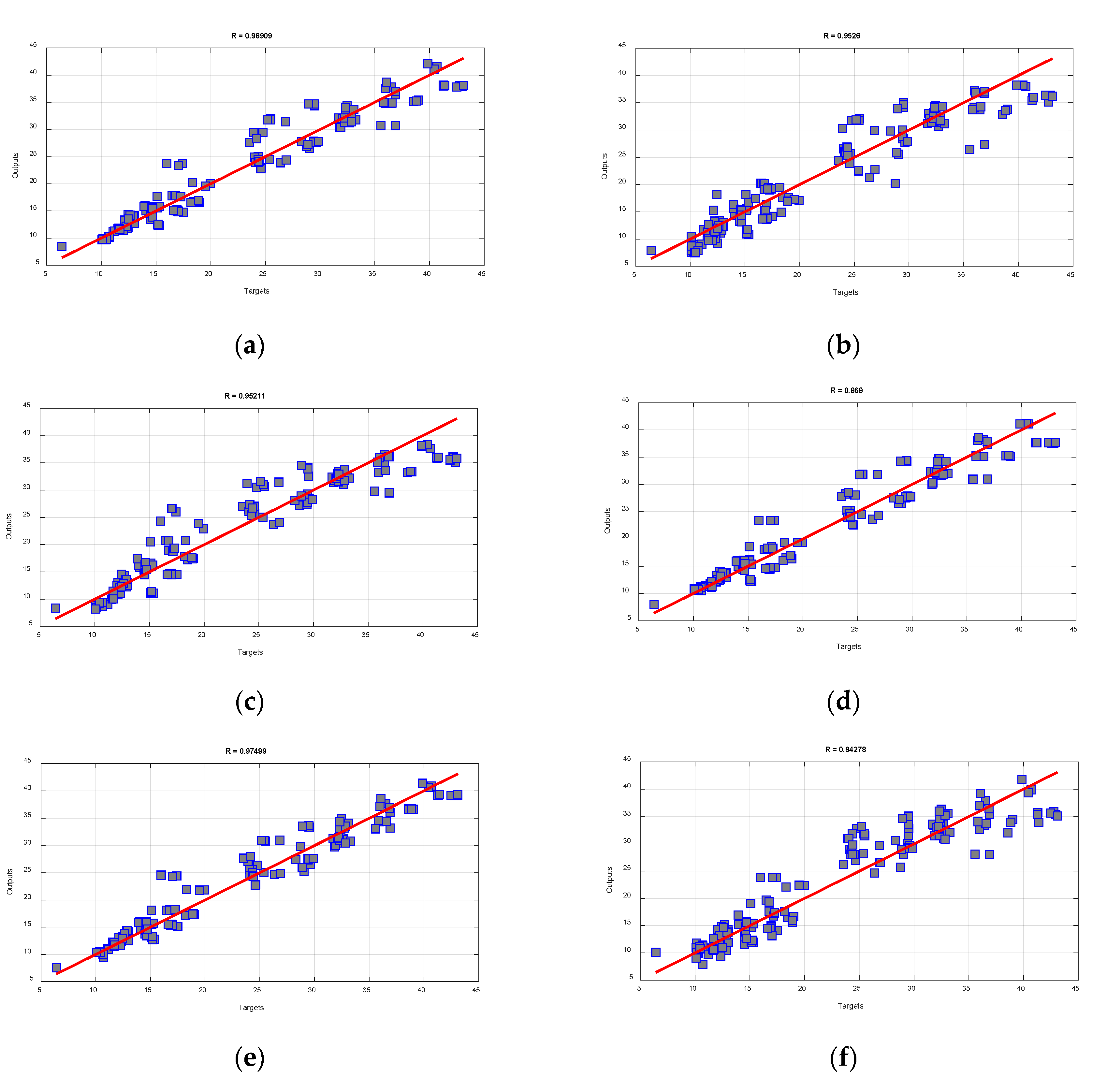

3.1. SOS–ANN Performance

3.2. A Comparative Validation

3.3. Interpretation and Discussion

3.4. Literature Comparison

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| ANN | Artificial neural network |
| SVM | Support vector machine |
| LSTM | Long short-term memory |
| TLBO | Teaching–learning-based optimization |
| BBO | Biogeography-based optimization |
| HGSO | Henry gas solubility optimization |
| HBO | Heap-based optimizer |
| ASO | Atom search optimization |
| CFOA | Cuttlefish optimization algorithm |
| SFS | Stochastic fractal search |
| SOS | Symbiotic organism search |
| PO | Political optimizer |
| HL | Heating load |
| CL | Cooling load |
| HVAC | Heating, ventilation, and air conditioning |
| MAPE | Mean absolute percentage error |
| MAE | Mean absolute error |
| RMSE | Root-mean-square error |
| R | Pearson correlation coefficient |
| CR | Relative compactness |
| SA | Surface area |
| SW | Wall area |
| SR | Roof area |
| HT | Overall height |
| O | Orientation |
| SG | Glazing area |
| DSG | Glazing area distribution |

References

1. Wolfram, C.; Shelef, O.; Gertler, P. How will energy demand develop in the developing world? J. Econ. Perspect. 2012, 26, 119–138.

2. Keho, Y. What drives energy consumption in developing countries? The experience of selected African countries. Energy Policy 2016, 91, 233–246.

3. Energy, G. CO2 Status Report; IEA (International Energy Agency): Paris, France, 2019.

4. Serghides, D.; Dimitriou, S.; Kyprianou, I. Mediterranean Hospital Energy Performance Mapping: The Energy Auditing as a Tool Towards Zero Energy Healthcare Facilities. In Sustainable Energy Development and Innovation; Springer: Berlin/Heidelberg, Germany, 2022; pp. 419–429.

5. Li, Y.; Kubicki, S.; Guerriero, A.; Rezgui, Y. Review of building energy performance certification schemes towards future improvement. Renew. Sustain. Energy Rev. 2019, 113, 109244.

6. Li, X.; Liu, S.; Zhao, L.; Meng, X.; Fang, Y. An integrated building energy performance evaluation method: From parametric modeling to GA-NN based energy consumption prediction modeling. J. Build. Eng. 2022, 45, 103571.

7. Ghadimi, M.; Ghadamian, H.; Hamidi, A.; Shakouri, M.; Ghahremanian, S. Numerical analysis and parametric study of the thermal behavior in multiple-skin façades. Energy Build. 2013, 67, 44–55.

8. Ghadamian, H.; Ghadimi, M.; Shakouri, M.; Moghadasi, M.; Moghadasi, M. Analytical solution for energy modeling of double skin façades building. Energy Build. 2012, 50, 158–165.

9. Shakouri, M.; Ghadamian, H. Energy Demand Analysis for Office Building Using Simulation Model and Statistical Method. Distrib. Gener. Altern. Energy J. 2022, 37, 1577–1612.

10. Liu, X.; Tong, D.; Huang, J.; Zheng, W.; Kong, M.; Zhou, G. What matters in the e-commerce era? Modelling and mapping shop rents in Guangzhou, China. Land Use Policy 2022, 123, 106430.

11. Han, Y.; Yan, X.; Piroozfar, P. An overall review of research on prefabricated construction supply chain management. Eng. Constr. Archit. Manag. 2022; ahead-of-print.

12. Han, Y.; Xu, X.; Zhao, Y.; Wang, X.; Chen, Z.; Liu, J. Impact of consumer preference on the decision-making of prefabricated building developers. J. Civ. Eng. Manag. 2022, 28, 166–176.

13. Gu, M.; Cai, X.; Fu, Q.; Li, H.; Wang, X.; Mao, B. Numerical Analysis of Passive Piles under Surcharge Load in Extensively Deep Soft Soil. Buildings 2022, 12, 1988.

14. Kordestani, H.; Zhang, C.; Masri, S.F.; Shadabfar, M. An empirical time-domain trend line-based bridge signal decomposing algorithm using Savitzky–Golay filter. Struct. Control Health Monit. 2021, 28, e2750.

15. Fu, Q.; Gu, M.; Yuan, J.; Lin, Y. Experimental study on vibration velocity of piled raft supported embankment and foundation for ballastless high speed railway. Buildings 2022, 12, 1982.

16. Li, S. Efficient algorithms for scheduling equal-length jobs with processing set restrictions on uniform parallel batch machines. Math. Biosci. Eng. 2022, 19, 10731–10740.

17. Lu, S.; Guo, J.; Liu, S.; Yang, B.; Liu, M.; Yin, L.; Zheng, W. An improved algorithm of drift compensation for olfactory sensors. Appl. Sci. 2022, 12, 9529.

18. Zhang, Z.; Li, W.; Yang, J. Analysis of stochastic process to model safety risk in construction industry. J. Civ. Eng. Manag. 2021, 27, 87–99.

19. Liu, L.; Li, Z.; Fu, X.; Liu, X.; Li, Z.; Zheng, W. Impact of Power on Uneven Development: Evaluating Built-Up Area Changes in Chengdu Based on NPP-VIIRS Images (2015–2019). Land 2022, 11, 489.

20. Chen, J.; Tong, H.; Yuan, J.; Fang, Y.; Gu, R. Permeability prediction model modified on Kozeny–Carman for building foundation of clay soil. Buildings 2022, 12, 1798.

21. Zhan, C.; Dai, Z.; Soltanian, M.R.; de Barros, F.P. Data-Worth Analysis for Heterogeneous Subsurface Structure Identification With a Stochastic Deep Learning Framework. Water Resour. Res. 2022, 58, e2022WR033241.

22. Dang, W.; Guo, J.; Liu, M.; Liu, S.; Yang, B.; Yin, L.; Zheng, W. A semi-supervised extreme learning machine algorithm based on the new weighted kernel for machine smell. Appl. Sci. 2022, 12, 9213.

23. Zhang, K.; Wang, Z.; Chen, G.; Zhang, L.; Yang, Y.; Yao, C.; Wang, J.; Yao, J. Training effective deep reinforcement learning agents for real-time life-cycle production optimization. J. Pet. Sci. Eng. 2022, 208, 109766.

24. Fumo, N. A review on the basics of building energy estimation. Renew. Sustain. Energy Rev. 2014, 31, 53–60.

25. Sharif, S.A.; Hammad, A. Developing surrogate ANN for selecting near-optimal building energy renovation methods considering energy consumption, LCC and LCA. J. Build. Eng. 2019, 25, 100790.

26. Seo, J.; Kim, S.; Lee, S.; Jeong, H.; Kim, T.; Kim, J. Data-driven approach to predicting the energy performance of residential buildings using minimal input data. Build. Environ. 2022, 214, 108911.

27. Seyedzadeh, S.; Rahimian, F.P.; Glesk, I.; Roper, M. Machine learning for estimation of building energy consumption and performance: A review. Vis. Eng. 2018, 6, 1–20.

28. Shao, M.; Wang, X.; Bu, Z.; Chen, X.; Wang, Y. Prediction of energy consumption in hotel buildings via support vector machines. Sustain. Cities Soc. 2020, 57, 102128.

29. Kardani, N.; Bardhan, A.; Kim, D.; Samui, P.; Zhou, A. Modelling the energy performance of residential buildings using advanced computational frameworks based on RVM, GMDH, ANFIS-BBO and ANFIS-IPSO. J. Build. Eng. 2021, 35, 102105.

30. Adedeji, P.A.; Akinlabi, S.; Madushele, N.; Olatunji, O.O. Hybrid adaptive neuro-fuzzy inference system (ANFIS) for a multi-campus university energy consumption forecast. Int. J. Ambient Energy 2022, 43, 1685–1694.

31. Ngo, N.-T.; Pham, A.-D.; Truong, T.T.H.; Truong, N.-S.; Huynh, N.-T.; Pham, T.M. An ensemble machine learning model for enhancing the prediction accuracy of energy consumption in buildings. Arab. J. Sci. Eng. 2022, 47, 4105–4117.

32. Elias, R.; Issa, R.R. Artificial-Neural-Network-Based Model for Predicting Heating and Cooling Loads on Residential Buildings. In Computing in Civil Engineering; ASCE: Reston, VA, USA, 2021; pp. 140–147.

33. Jang, J.; Han, J.; Leigh, S.-B. Prediction of heating energy consumption with operation pattern variables for non-residential buildings using LSTM networks. Energy Build. 2022, 255, 111647.

34. Zhou, Y.; Wang, L.; Qian, J. Application of Combined Models Based on Empirical Mode Decomposition, Deep Learning, and Autoregressive Integrated Moving Average Model for Short-Term Heating Load Predictions. Sustainability 2022, 14, 7349.

35. Koschwitz, D.; Frisch, J.; Van Treeck, C. Data-driven heating and cooling load predictions for non-residential buildings based on support vector machine regression and NARX Recurrent Neural Network: A comparative study on district scale. Energy 2018, 165, 134–142.

36. Tien Bui, D.; Moayedi, H.; Anastasios, D.; Kok Foong, L. Predicting heating and cooling loads in energy-efficient buildings using two hybrid intelligent models. Appl. Sci. 2019, 9, 3543.

37. Hu, J.; Zheng, W.; Zhang, S.; Li, H.; Liu, Z.; Zhang, G.; Yang, X. Thermal load prediction and operation optimization of office building with a zone-level artificial neural network and rule-based control. Appl. Energy 2021, 300, 117429.

38. Shah, P.; Sekhar, R.; Kulkarni, A.J.; Siarry, P. Metaheuristic Algorithms in Industry 4.0; CRC Press: Boca Raton, FL, USA, 2021.

39. Moayedi, H.; Mehrabi, M.; Mosallanezhad, M.; Rashid, A.S.A.; Pradhan, B. Modification of landslide susceptibility mapping using optimized PSO-ANN technique. Eng. Comput. 2019, 35, 967–984.

40. Asadi Nalivan, O.; Mousavi Tayebi, S.A.; Mehrabi, M.; Ghasemieh, H.; Scaioni, M. A hybrid intelligent model for spatial analysis of groundwater potential around Urmia Lake, Iran. Stoch. Environ. Res. Risk Assess. 2022, 1–18.

41. Pachauri, N.; Ahn, C.W. Regression Tree Ensemble Learning-Based Prediction of the Heating and Cooling Loads of Residential Buildings. In Building Simulation 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 1–15.

42. Almutairi, K.; Algarni, S.; Alqahtani, T.; Moayedi, H.; Mosavi, A. A TLBO-Tuned Neural Processor for Predicting Heating Load in Residential Buildings. Sustainability 2022, 14, 5924.

43. Xu, Y.; Li, F.; Asgari, A. Prediction and optimization of heating and cooling loads in a residential building based on multi-layer perceptron neural network and different optimization algorithms. Energy 2022, 240, 122692.

44. Moayedi, H.; Mosavi, A. Synthesizing multi-layer perceptron network with ant lion biogeography-based dragonfly algorithm evolutionary strategy invasive weed and league champion optimization hybrid algorithms in predicting heating load in residential buildings. Sustainability 2021, 13, 3198.

45. Guo, Z.; Moayedi, H.; Foong, L.K.; Bahiraei, M. Optimal modification of heating, ventilation, and air conditioning system performances in residential buildings using the integration of metaheuristic optimization and neural computing. Energy Build. 2020, 214, 109866.

46. Wu, H.; Zhou, Y.; Luo, Q.; Basset, M.A. Training feedforward neural networks using symbiotic organisms search algorithm. Comput. Intell. Neurosci. 2016, 2016, 9063065.

47. Miao, F.; Yao, L.; Zhao, X. Evolving convolutional neural networks by symbiotic organisms search algorithm for image classification. Appl. Soft Comput. 2021, 109, 107537.

48. Tsanas, A.; Xifara, A. Accurate quantitative estimation of energy performance of residential buildings using statistical machine learning tools. Energy Build. 2012, 49, 560–567.

49. Pessenlehner, W.; Mahdavi, A. Building Morphology, Transparence, and Energy Performance; AIVC: Sint-Stevens-Woluwe, Belgium, 2003.

50. Huang, Y.; Niu, J.-L.; Chung, T.-M. Comprehensive analysis on thermal and daylighting performance of glazing and shading designs on office building envelope in cooling-dominant climates. Appl. Energy 2014, 134, 215–228.

51. Papadopoulos, S.; Azar, E.; Woon, W.-L.; Kontokosta, C.E. Evaluation of tree-based ensemble learning algorithms for building energy performance estimation. J. Build. Perform. Simul. 2018, 11, 322–332.

52. Moayedi, H.; Mosavi, A. Suggesting a stochastic fractal search paradigm in combination with artificial neural network for early prediction of cooling load in residential buildings. Energies 2021, 14, 1649.

53. Zheng, S.; Lyu, Z.; Foong, L.K. Early prediction of cooling load in energy-efficient buildings through novel optimizer of shuffled complex evolution. Eng. Comput. 2020, 38, 105–119.

54. Wu, D.; Foong, L.K.; Lyu, Z. Two neural-metaheuristic techniques based on vortex search and backtracking search algorithms for predicting the heating load of residential buildings. Eng. Comput. 2020, 38, 647–660.

55. Cheng, M.-Y.; Prayogo, D. Symbiotic organisms search: A new metaheuristic optimization algorithm. Comput. Struct. 2014, 139, 98–112.

56. Zhou, Y.; Wu, H.; Luo, Q.; Abdel-Baset, M. Automatic data clustering using nature-inspired symbiotic organism search algorithm. Knowl.-Based Syst. 2019, 163, 546–557.

57. Abdullahi, M.; Ngadi, M.A. Symbiotic organism search optimization based task scheduling in cloud computing environment. Future Gener. Comput. Syst. 2016, 56, 640–650.

58. Mehrabi, M.; Pradhan, B.; Moayedi, H.; Alamri, A. Optimizing an adaptive neuro-fuzzy inference system for spatial prediction of landslide susceptibility using four state-of-the-art metaheuristic techniques. Sensors 2020, 20, 1723.

59. Zhao, Y.; Hu, H.; Song, C.; Wang, Z. Predicting compressive strength of manufactured-sand concrete using conventional and metaheuristic-tuned artificial neural network. Measurement 2022, 194, 110993.

60. Mehrabi, M.; Moayedi, H. Landslide susceptibility mapping using artificial neural network tuned by metaheuristic algorithms. Environ. Earth Sci. 2021, 80, 1–20.

61. Mehrabi, M. Landslide susceptibility zonation using statistical and machine learning approaches in Northern Lecco, Italy. Nat. Hazards 2021, 111, 901–937.

62. Liao, L.; Du, L.; Guo, Y. Semi-supervised SAR target detection based on an improved faster R-CNN. Remote Sens. 2021, 14, 143.

63. Amasyali, K.; El-Gohary, N. Machine learning for occupant-behavior-sensitive cooling energy consumption prediction in office buildings. Renew. Sustain. Energy Rev. 2021, 142, 110714.

64. Moayedi, H.; Mehrabi, M.; Bui, D.T.; Pradhan, B.; Foong, L.K. Fuzzy-metaheuristic ensembles for spatial assessment of forest fire susceptibility. J. Environ. Manag. 2020, 260, 109867.

65. Nguyen, H.; Mehrabi, M.; Kalantar, B.; Moayedi, H.; Abdullahi, M.a.M. Potential of hybrid evolutionary approaches for assessment of geo-hazard landslide susceptibility mapping. Geomat. Nat. Hazards Risk 2019, 10, 1667–1693.

66. Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

67. Atashpaz-Gargari, E.; Lucas, C. Imperialist competitive algorithm: An algorithm for optimization inspired by imperialistic competition. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007; IEEE: New York, NY, USA, 2007; pp. 4661–4667.

68. Bayraktar, Z.; Komurcu, M.; Werner, D.H. Wind Driven Optimization (WDO): A novel nature-inspired optimization algorithm and its application to electromagnetics. In Proceedings of the 2010 IEEE Antennas and Propagation Society International Symposium, Toronto, ON, Canada, 11–17 July 2010; IEEE: New York, NY, USA, 2010; pp. 1–4.

69. Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

70. Dhiman, G.; Kumar, V. Multi-objective spotted hyena optimizer: A Multi-objective optimization algorithm for engineering problems. Knowl.-Based Syst. 2018, 150, 175–197.

71. Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

72. Moayedi, H.; Nguyen, H.; Foong, L. Nonlinear evolutionary swarm intelligence of grasshopper optimization algorithm and gray wolf optimization for weight adjustment of neural network. Eng. Comput. 2019, 37, 1265–1275.

73. Saremi, S.; Mirjalili, S.; Lewis, A. Grasshopper optimisation algorithm: Theory and application. Adv. Eng. Softw. 2017, 105, 30–47.

74. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

75. Zhou, G.; Moayedi, H.; Bahiraei, M.; Lyu, Z. Employing artificial bee colony and particle swarm techniques for optimizing a neural network in prediction of heating and cooling loads of residential buildings. J. Clean. Prod. 2020, 254, 120082.

76. Karaboga, D. An Idea Based on Honey Bee Swarm for Numerical Optimization; Technical Report-tr06; Erciyes University, Engineering Faculty, Computer Engineering Department: Kayseri, Turkey, 2005.

77. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN′95 - International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

78. Yang, X.-S. Firefly algorithm. Nat.-Inspired Metaheuristic Algorithms 2008, 20, 79–90.

79. Kashan, A.H. A new metaheuristic for optimization: Optics inspired optimization (OIO). Comput. Oper. Res. 2015, 55, 99–125.

80. Duan, Q.; Gupta, V.K.; Sorooshian, S. Shuffled complex evolution approach for effective and efficient global minimization. J. Optim. Theory Appl. 1993, 76, 501–521.

81. Rao, R.V.; Savsani, V.J.; Vakharia, D. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput.-Aided Des. 2011, 43, 303–315.

| Parameter | CR | SA | SW | SR | HT | O | SG | DSG | HL |
|---|---|---|---|---|---|---|---|---|---|
| Range | [0.6, 0.9] | [514.5, 808.5] | [245.0, 416.5] | [110.2, 220.5] | [3.5, 7.0] | [2.0, 5.0] | [0.0, 0.4] | [0.0, 5.0] | [6.01, 43.10] |

| ASO | CFOA | HBO | HGSO | PO | SFS | SOS |
|---|---|---|---|---|---|---|
| NP = 400; NIter = 1000; Depth weight = 50; Multiplier weight = 0.2 | NP = 500; NIter = 1000 | NP = 300; NIter = 1000; Intensification = 1 | NP = 300; NIter = 1000; No. of groups = 5; No. of independent runs = 1 | NP = 100; NIter = 1000; Lambda = 1; Areas = 3 | NP = 400; NIter = 1000; Max. diffusion = 2; Walk = 1 | NP = 500; NIter = 1000 |

| Type | Model | Training MAE | Training RMSE | Training R | Testing MAE | Testing RMSE | Testing R |
|---|---|---|---|---|---|---|---|
| Benchmark | PO–ANN | 1.663 | 2.348 | 0.972 | 1.775 | 2.463 | 0.969 |
| Benchmark | HBO–ANN | 2.301 | 3.084 | 0.953 | 2.395 | 3.119 | 0.952 |
| Benchmark | HGSO–ANN | 2.152 | 2.912 | 0.957 | 2.234 | 3.054 | 0.952 |
| Benchmark | ASO–ANN | 1.642 | 2.351 | 0.972 | 1.803 | 2.469 | 0.969 |
| Benchmark | SFS–ANN | 1.493 | 2.002 | 0.980 | 1.648 | 2.222 | 0.974 |
| Benchmark | CFOA–ANN | 2.377 | 3.148 | 0.950 | 2.559 | 3.358 | 0.942 |
| Proposed | SOS–ANN | 1.004 | 1.314 | 0.991 | 1.201 | 1.487 | 0.989 |

| Study | Used Algorithm | Abbreviation | Developer |
|---|---|---|---|
| Tien Bui, et al. [36] | Genetic algorithm | GA | Holland [66] |
| Tien Bui, et al. [36] | Imperialist competitive algorithm | ICA | Atashpaz-Gargari and Lucas [67] |
| Guo, et al. [45] | Wind-driven optimization | WDO | Bayraktar, et al. [68] |
| Guo, et al. [45] | Whale optimization algorithm | WOA | Mirjalili and Lewis [69] |
| Guo, et al. [45] | Spotted hyena optimization | SHO | Dhiman and Kumar [70] |
| Guo, et al. [45] | Salp swarm algorithm | SSA | Mirjalili, et al. [71] |
| Moayedi, et al. [72] | Grasshopper optimization algorithm | GOA | Saremi, et al. [73] |
| Moayedi, et al. [72] | Gray wolf optimization | GWO | Mirjalili, et al. [74] |
| Zhou, et al. [75] | Artificial bee colony | ABC | Karaboga [76] |
| Zhou, et al. [75] | Particle swarm optimization | PSO | Kennedy and Eberhart [77] |
| Almutairi, et al. [42] | Firefly algorithm | FA | Yang [78] |
| Almutairi, et al. [42] | Optics-inspired optimization | OIO | Kashan [79] |
| Almutairi, et al. [42] | Shuffled complex evolution | SCE | Duan, et al. [80] |
| Almutairi, et al. [42] | Teaching–learning-based optimization | TLBO | Rao, et al. [81] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nejati, F.; Zoy, W.O.; Tahoori, N.; Abdunabi Xalikovich, P.; Sharifian, M.A.; Nehdi, M.L. Machine Learning Method Based on Symbiotic Organism Search Algorithm for Thermal Load Prediction in Buildings. Buildings 2023, 13, 727. https://doi.org/10.3390/buildings13030727
