Abstract

Integrating artificial intelligence (AI) in the construction industry could revolutionise workplace safety and efficiency. However, this integration also carries complex socio-legal implications that require further investigation. There is presently a research gap concerning the socio-legal dimensions of using AI to enhance health and safety regulations and protocols in the UK construction sector, particularly in understanding how existing legal frameworks can adapt effectively to AI integration. Comprehensive research is needed to identify where existing regulations may fall short or require more specificity in addressing the unique implications introduced by AI technologies. This article addresses the pressing socio-legal challenges surrounding the integration of AI in the UK construction industry, specifically in enhancing health and safety protocols on construction sites, through a systematic review following the PRISMA protocol. The review found that the existing legal and regulatory framework provides a strong foundation for risk management. However, it does not sufficiently account for the socio-legal dimensions introduced by AI deployment or for how AI may evolve in the future. The Health and Safety Executive (HSE) will require standardised authorities to oversee the use of AI in the UK construction industry effectively. This will enable the HSE to collect data related to AI processes and carry out technical, empirical, and governance audits. Providing the HSE with sufficient resources, and empowering it within the context of the construction industry, are critical to ensuring effective oversight of AI implementation.

1. Introduction

The UK Government’s 2023 white paper suggests a pro-innovation approach to AI regulation, emphasising responsible development, transparency, and accountability [1]. The paper proposes a risk-based framework to facilitate AI adoption, while addressing ethical concerns and fostering a competitive AI landscape. In the UK context, the legal landscape is adapting to accommodate the digital transformation within the construction sector through initiatives such as the National Digital Twin and the Construction Playbook [2]. However, studies highlight critical socio-legal concerns regarding the potential marginalisation of human roles in project management due to reliance on technology, underscoring the need for legal safeguards [3,4].

The socio-legal implications of AI in society need to be examined, including ethical dilemmas concerning algorithmic decision-making and legal frameworks governing privacy, data protection, liability and intellectual property rights [5].

Addressing these dimensions is crucial for ensuring that AI development and deployment align with ethical principles, legal standards and societal values, fostering responsible innovation and equitable outcomes [6,7].

Our study aims to fill the research gap concerning the socio-legal aspects of AI integration in the UK construction industry. The use of AI in the UK construction industry has raised significant socio-legal concerns, including legal liability, regulation, ethics, data privacy, worker training, and accessibility. Our aim is to investigate comprehensively the social and legal challenges of incorporating AI into construction health and safety in the UK. Based on the results of the systematic review, this article discusses the potential challenges and benefits of integrating AI into construction health and safety and considers whether existing laws on AI could impede the construction industry’s efforts to raise health and safety standards in the sector. Finally, the authors speculate on how construction health and safety law and regulation may need to adapt as AI technology advances.

2. The Socio-Legal Challenges of Deploying AI

While AI has reached the stage where it can complete specific tasks better and faster than humans, it has yet to develop the maturity and sophistication needed for independent use in complex areas such as the law. Examples abound. When a Colombian judge used ChatGPT to assist him in resolving a court case, the AI’s contribution was supplemented by the judge’s own research and reference to precedent [8]. The judge was satisfied with the quality of the assistance provided by ChatGPT and likened its services to those of an efficient and reliable legal secretary [8]. However, the case caused a stir among legal experts, who doubted the general level of judicial literacy and, in particular, questioned Judge Padilla’s sense of responsibility and ethics [9]. The ‘moral panic in law’ [9] caused by this decision did not subside with time, perhaps because of a New York personal injury case in which the lawyer for one of the parties used ChatGPT to produce cases supporting his client’s argument. The judge discovered that the AI had fabricated the case citations used by the lawyer and penalised him heavily [9]. The case illustrates that AI’s integration into legal practice must be undertaken responsibly and with constant oversight. More broadly, the two cases demonstrate that, although AI benefits the legal profession by executing comprehensive legal research quickly, in a structured and organised manner and at low cost, it may fall short of societal expectations of ethical and responsible legal services.

This is concerning, particularly considering that within the next five years AI is projected to revolutionise the use of computers by allowing users to interact with and instruct an AI agent through natural language [10]. Future AI will be able to consolidate and replace a multitude of software applications (apps), making interaction with an AI agent simpler [10].

This innovation would be particularly relevant to the construction industry, not least because it will remove some of the barriers to wider AI use. AI use in law has been contemplated since the 1960s [11], and AI advancement may lead to innovation in the law and to changes in legal practices and processes [12]. However, further integration of AI in any industry requires acknowledging that AI is inherently risky [13]. Although the risk could be managed through better programming, the concern remains that AI is essentially contra-human: it is an artificial intellect completely devoid of the humanity, empathy and social awareness we would ascribe to a natural person. Added to this is the issue of machine bias, which could affect fundamental rights. These downsides of AI will only be amplified by the further development of smart technologies and advances in electronic personhood [13].

The current position of the law is that AI bears no legal responsibility for its actions; responsibility remains with the human in charge. This is worrying because improperly programmed and supervised AI may endanger human safety. The lack of regulatory intervention reinforces the importance of AI–human cooperation and human supervision of AI (the so-called ‘four-eyes principle’) to reduce or mitigate the risks of AI use [13]. The difficulty of assigning legal rights and responsibilities in human–AI cooperation is evident from the ‘monkey-taking-a-selfie’ [14] and the ‘Uber testing self-driving cars’ cases [15]. In the selfie case, neither the photographer who had set up his wildlife camera nor the macaque itself could claim copyright in a selfie taken by the monkey, since copyright does not extend to animals [15]. This illustrates the difficulty of determining authorship, or degrees of co-authorship, between humans and non-human animals. While, on the facts, the issue concerned the right to protection under copyright law, the case’s implications are broader and may extend to the division of responsibility between AI and humans [15].

The issue of responsibility was dealt with more clearly in the Uber case, where a fatal accident caused by failing sensor equipment was held to have resulted from the human co-driver’s criminal negligence. It was deemed the human’s responsibility to supervise the machine’s work and take over from it if necessary [16]. The decision raises the concern that the regulator has not properly addressed the need to prevent the endangering of human life [17]. In addition to the legal repercussions of not regulating potentially dangerous AI, one must consider the black box problem, that is, the fact that we do not and cannot (yet) trace how AI makes its decisions [18,19]. This becomes even more concerning in light of humans’ tendency to ‘over trust robots’ [20], even when they realise that their own knowledge of a matter is superior to the robot’s [20]. This is relevant to the construction industry, since studies show that ‘64% of people trust a robot more than their manager’ [21] because they see algorithms as superior at providing unbiased information, maintaining work schedules, solving problems and managing a budget [21].

Humans’ cognitive bias towards over-reliance on robots, coupled with the lack of complete control over, and knowledge of, robots’ decision-making processes, strengthens arguments that the legal responsibility of autonomous machines may need to be better regulated. There is a need for global AI regulation to tackle existing and anticipated issues [12], given the shortcomings of the current framework, which is ‘ill-equipped to solve the hot issues created by the ever-increasing technological advances in AI’ [21]. However, this goal has yet to be achieved, and as far as UK law is concerned, AI regulation is still in its nascent stage.

The Construction-Specific Issues

The use of AI in construction site safety practices is increasing. However, the deployment of AI in health and safety regulation raises various socio-legal concerns. Scholars highlight the ‘responsibility’ and ‘liability question’: human actors retain ultimate ‘accountability’, even though AI offers valuable insights. There is also potential for ‘bias in AI algorithms and datasets’, which can undermine fairness and equity in health and safety enforcement; addressing these biases is crucial to ensure that AI-driven health and safety regulation is fair and effective. ‘Privacy’ and ‘data protection’ are also crucial when AI is deployed for monitoring and surveillance, and concerns about potential encroachment on ‘workers’ privacy rights’ point to the need for safeguards to protect sensitive personal data. Finally, the rapid pace of technological advancement in AI makes it difficult for regulatory compliance to keep up with evolving capabilities. A robust legal framework is therefore necessary to govern the use of AI in health and safety regulation and to ensure compliance with existing legislation.

3. The Review Strategy

Grey literature sources can provide valuable insights and data that may not be available through traditional academic channels. Therefore, conducting a systematic literature review that includes grey literature sources is essential for advancing knowledge and addressing gaps in under-researched areas.

3.1. Method

A systematic literature review was conducted to investigate the socio-legal implications of utilising artificial intelligence (AI) to improve occupational health and safety within the UK construction industry. The review mainly relied on grey literature sources, such as websites, blogs, and industry reports, which are not published through traditional academic channels. In order to conduct a thorough and impartial grey literature search, it is imperative to formulate a comprehensive search strategy that delineates the relevant search terms, resources, websites and limits involved in the process.

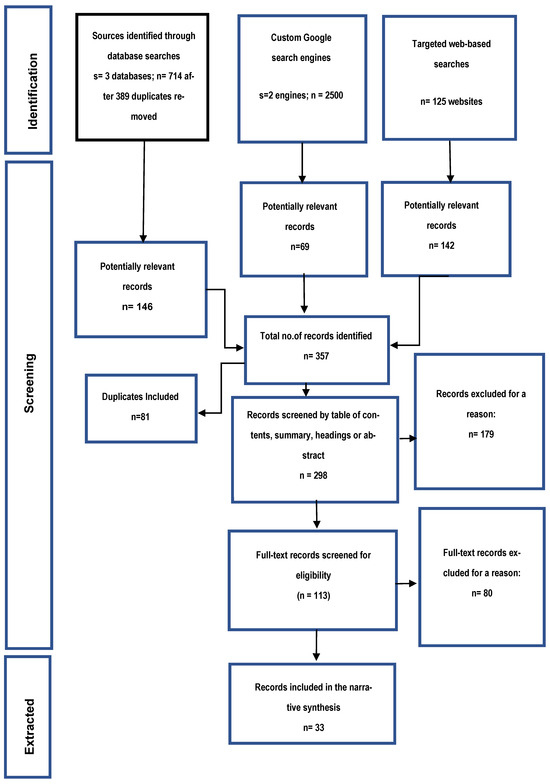

Such a strategy gives the search methodology a well-defined framework and greater transparency, ensuring adherence to prescribed systematic review reporting standards such as the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) protocol [22]. Our methodology was guided by the PRISMA guidelines to ensure a transparent and replicable review process. The PRISMA flow diagram is illustrated in Figure 1.

Figure 1.

Systematic review process.

By meticulously documenting each stage of the search process and creating a detailed search plan, researchers can manage their time effectively and reduce the risk of introducing bias into the search results.

3.1.1. Research Questions

To conduct the review, the researchers first defined clear research questions that guided the research process and ensured comprehensive and precise results. These questions were designed to address the socio-legal implications of AI adoption in the context of occupational health and safety within the UK construction industry. We developed the following research questions to guide this systematic literature review:

- What are the benefits and challenges of deploying AI from the socio-legal and ethical perspective, particularly in the context of the division of responsibilities and rights, and the need for a general and/or construction sector-based legislative intervention?

- What is the status of the current regulatory framework in the construction industry, particularly whether the legislative provisions on AI hinder the UK construction industry’s efforts to improve the state of health and safety in the sector?

- How will construction health and safety law and regulation adapt to technological advancement?

3.1.2. Identification of Sources

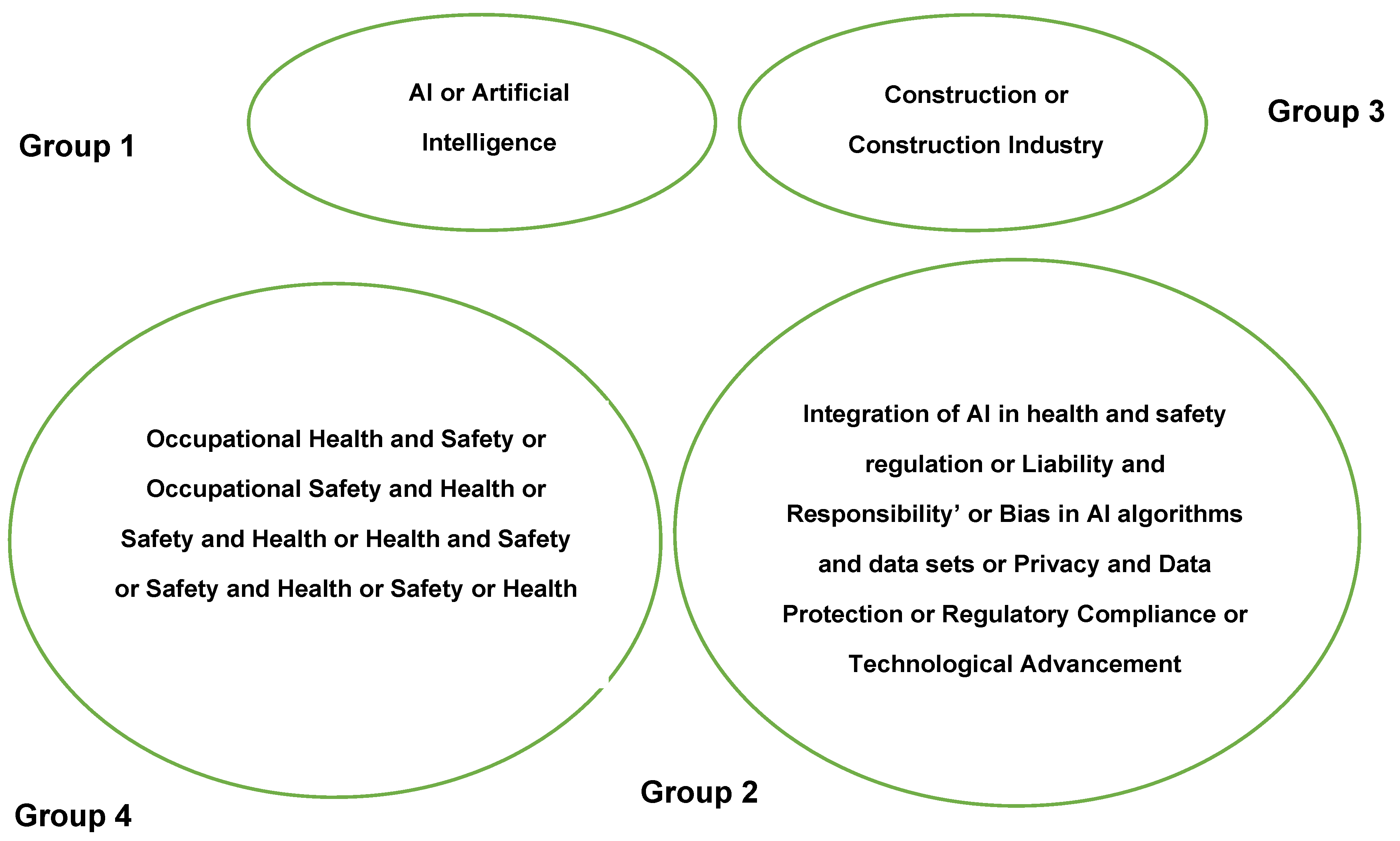

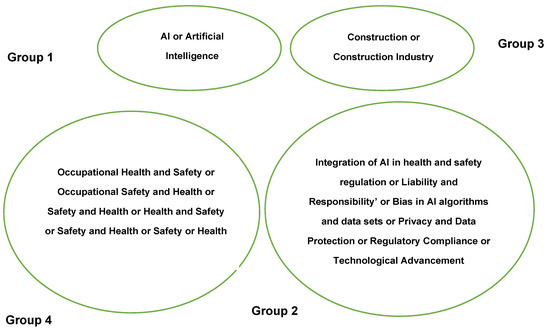

We conducted a search using specific keywords related to AI, occupational health and safety, the construction industry, and socio-legal aspects, guided by our research questions. These keywords were based on the socio-legal issues identified in Section 2, narrowed down to the construction industry. The search keywords were divided into four categories: the first related to artificial intelligence, the second to the socio-legal issues identified, the third to the construction industry, and the fourth to health and safety. Figure 2 presents a visual representation of these categories.

Figure 2.

Keywords for systematic literature review.

The search string applied to the title, abstract, and keywords of the records retrieved from the grey literature databases was: (TITLE-ABS-KEY (“construction industry” OR “construction” OR “Artificial Intelligence” OR “AI”) AND TITLE-ABS-KEY (“Integration of AI in health and safety regulation” OR “liability and responsibility” OR “bias in AI algorithms and data sets” OR “privacy and data protection” OR “regulatory compliance” OR “technological advancement”) AND TITLE-ABS-KEY (“Occupational Health and Safety” OR “Occupational Safety and Health” OR “Safety and Health” OR “Health and Safety” OR “Safety” OR “Health”)).
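The Boolean structure of this string (terms OR-ed within a group, groups AND-ed together) can be reproduced programmatically, which helps keep quoting and spacing consistent when the same string is pasted into several databases. The Python sketch below is illustrative only: the helper names `or_group` and `build_query` are hypothetical, the keyword groups are copied from the string above, and the output is not claimed to be accepted verbatim by any particular database.

```python
# Hypothetical helpers: assemble a TITLE-ABS-KEY Boolean query from keyword groups.
# Terms within a group are OR-ed together; the groups are AND-ed together.

def or_group(terms: list[str]) -> str:
    """Quote each (whitespace-stripped) term and join them with OR inside TITLE-ABS-KEY()."""
    quoted = " OR ".join(f'"{t.strip()}"' for t in terms)
    return f"TITLE-ABS-KEY ({quoted})"


def build_query(groups: list[list[str]]) -> str:
    """AND together one OR-group per keyword group and wrap the result in parentheses."""
    return "(" + " AND ".join(or_group(g) for g in groups) + ")"


# Keyword groups copied from the search string reported in the review.
GROUPS = [
    ["construction industry", "construction", "Artificial Intelligence", "AI"],
    [
        "Integration of AI in health and safety regulation",
        "liability and responsibility",
        "bias in AI algorithms and data sets",
        "privacy and data protection",
        "regulatory compliance",
        "technological advancement",
    ],
    [
        "Occupational Health and Safety",
        "Occupational Safety and Health",
        "Safety and Health",
        "Health and Safety",
        "Safety",
        "Health",
    ],
]

if __name__ == "__main__":
    print(build_query(GROUPS))
```

Generating the string from lists also leaves a plain record of exactly which terms were used, which supports the documentation practice the review describes.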

To focus on recent research, we limited the results to publications from 2017 onwards (the past seven years at the time of data collection), including news articles, news releases and blogs. The search was conducted between 1 February 2024 and 1 April 2024; therefore, this review does not cover material published after 1 April 2024.

Eligibility Criteria

The review’s eligibility criteria were defined in the grey literature search plan and are shown in Table 1. These criteria indicate that the usual method of systematically searching academic journal databases is not appropriate for addressing the review’s research questions.

Table 1.

Review eligibility criteria.

3.1.3. Information Sources and Searching Strategies

A plan was created to search for grey literature using two different techniques: (1) searching grey literature databases, and (2) using customised Google search engines. These complementary strategies were used to minimise the risk of omitting relevant sources. Each database and search engine uses its own algorithm to rank results by relevance, so using a variety of these sources is likely to result in a more extensive search. It is essential to document every stage of the grey literature search process to maintain transparency and comprehensiveness; therefore, all assumptions, decisions, and challenges encountered throughout the review were recorded.

Search Strategy #1

Researchers use various strategies to search for relevant documents during a review. One such strategy is to explore databases that catalogue grey literature documents. These databases provide indexing, and sometimes even peer review, for online and print resources. However, the search functionalities and filters available for retrieving results vary widely across databases, so researchers need to adjust their search terms to match the databases they use. The search was conducted using three databases: CORE, LexisNexis, and Westlaw. After searching, researchers reviewed the titles and abstracts of the documents against the inclusion and exclusion criteria set beforehand. Any potentially relevant documents were then retrieved in full text for further screening. The results of the database searches were exported to an Excel spreadsheet, and duplicates were excluded using the ‘remove duplicates’ function. The titles of all search results were reviewed in Excel, similarly to the title screen in a traditional review of peer-reviewed academic articles. Relevant titles were highlighted in Excel and retained for further screening.
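The deduplication step can also be expressed in code. The Python sketch below is a stand-in for Excel’s ‘remove duplicates’ function, not a reproduction of it: it assumes that two records are duplicates when their titles match after lower-casing, replacing punctuation with spaces and collapsing whitespace. The function names, record fields and example titles are hypothetical.

```python
import re


def normalise_title(title: str) -> str:
    """Lower-case a title, replace punctuation with spaces and collapse runs of whitespace."""
    cleaned = re.sub(r"[^\w\s]", " ", title.lower())
    return " ".join(cleaned.split())


def remove_duplicates(records: list[dict]) -> list[dict]:
    """Keep the first record for each normalised title, preserving the input order."""
    seen: set[str] = set()
    unique = []
    for record in records:
        key = normalise_title(record["title"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


if __name__ == "__main__":
    # Hypothetical exported results from the three databases.
    exported = [
        {"title": "AI and Site Safety", "source": "CORE"},
        {"title": "AI and site safety.", "source": "Westlaw"},
        {"title": "Drones on construction sites", "source": "LexisNexis"},
    ]
    for r in remove_duplicates(exported):
        print(r["source"], "-", r["title"])
```

Keeping the first occurrence means the database searched first is credited with each duplicated record; a different rule (for example, preferring the fullest metadata) would be equally defensible.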

Search Strategy #2

Two search approaches were used in the research. The first involved searching databases such as CORE, LexisNexis, and Westlaw. The second, adapted from Godin et al. [22], involved using a customised Google search engine to search the UK government publication repository. Under this strategy, the authors screened the first ten pages of results for each search, using the title and short text underneath to identify relevant records. These records were then bookmarked in Google Chrome and entered into an Excel spreadsheet.

The bookmarks were filed in a sub-folder named after the specific search strategy, within a main folder named after the search engine used. This made it possible to trace the search terms and search engine through which each website had been identified, and prevented the same record from being identified repeatedly. Titles identified as potentially relevant were retained for further screening. The search terms and the number of results retrieved and screened for each search strategy were also recorded.

3.1.4. Assessment of Eligibility and Selection of Studies

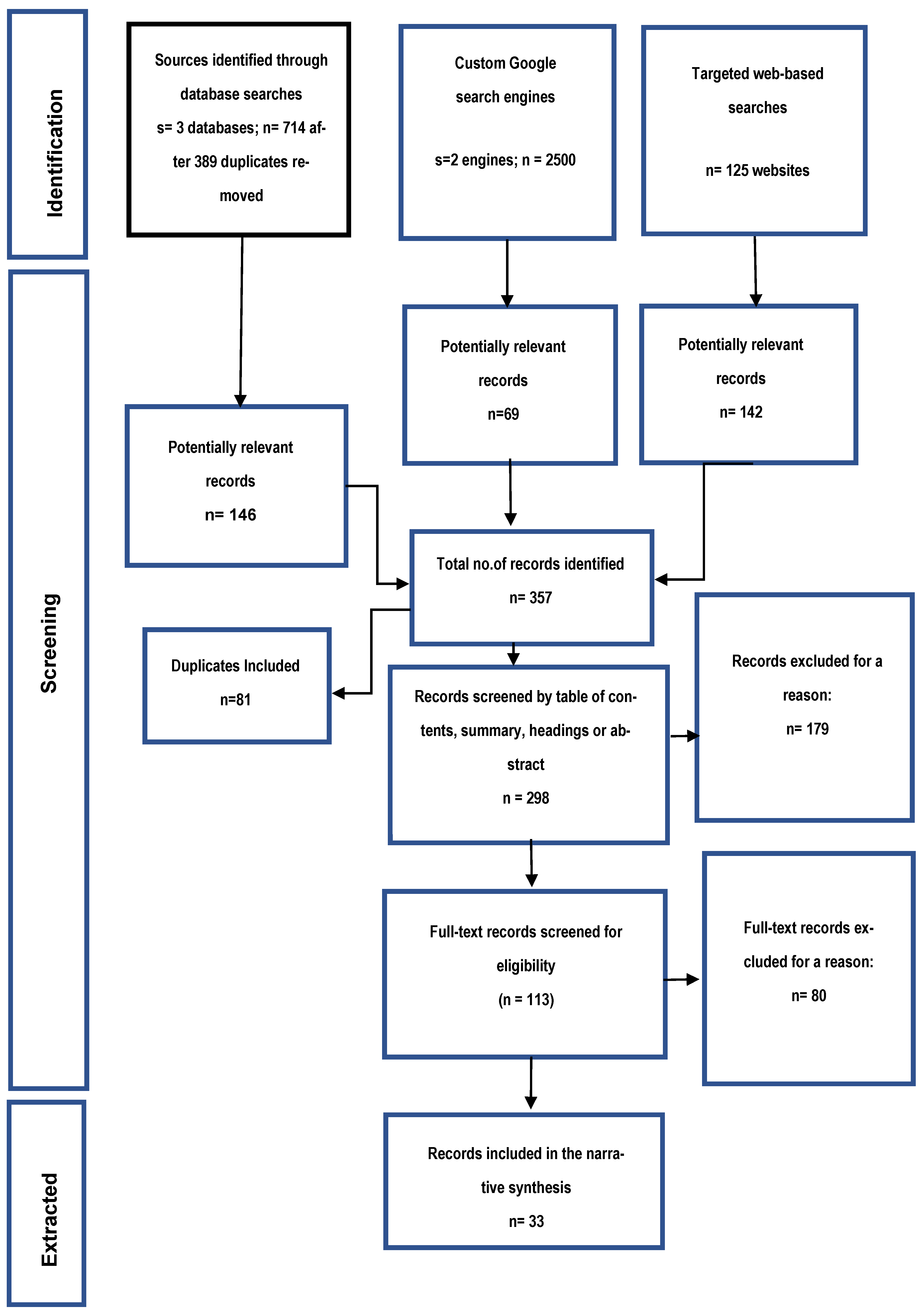

PRISMA guidelines recommend using a study flow diagram to represent the screening and study selection process, and this approach was applied to the grey literature search methods (Figure 3). In this study’s grey literature search, relevant documents were recorded in an Excel sheet. In the first screening stage, abstracts, executive summaries or tables of contents were reviewed; the full text of all items that passed this stage was then reviewed. Each reason for exclusion was assigned a numeric code, and a screening code was recorded for each item at both stages of screening. Finally, all items remaining after full-text screening were included in the review.
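To illustrate how numeric screening codes can feed the counts in a PRISMA flow diagram, the Python sketch below tallies retained items and exclusion reasons at each screening stage. The code values, reason labels and example records are hypothetical, since the review’s actual exclusion codes are not listed in this section.

```python
from collections import Counter

# Hypothetical exclusion codes; the review's actual codes are not reported here.
EXCLUSION_REASONS = {
    1: "Not about AI",
    2: "Not about health and safety",
    3: "Not UK construction context",
    4: "Outside date range",
}
INCLUDED = 0  # hypothetical screening code meaning "passes this stage"


def tally_stage(codes: list[int]) -> tuple[int, Counter]:
    """Return (number of items passing, Counter of exclusion codes) for one stage."""
    passed = sum(1 for c in codes if c == INCLUDED)
    excluded = Counter(c for c in codes if c != INCLUDED)
    return passed, excluded


def report(stage: str, codes: list[int]) -> int:
    """Print a flow-diagram style summary of one stage and return the number passing."""
    passed, excluded = tally_stage(codes)
    print(f"{stage}: {len(codes)} screened, {passed} retained")
    for code, n in sorted(excluded.items()):
        print(f"  excluded ({EXCLUSION_REASONS[code]}): {n}")
    return passed


if __name__ == "__main__":
    # Hypothetical codes for six items at the abstract/summary stage.
    report("Stage 1", [0, 1, 0, 2, 0, 4])
    # Hypothetical codes for the three items retained for full-text screening.
    report("Full text", [0, 3, 0])
```

Keeping the code-to-reason mapping in one place means the flow-diagram counts can be regenerated whenever a screening decision is revised.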

Figure 3.

An illustration of the three phases of the review, including the number of records screened and included in the thematic synthesis.

3.1.5. The Synthesis of Results

After comprehensively reviewing the publications included in the review, we meticulously extracted the pertinent data. The extracted data covered various aspects of the publications, such as the source organisation, year of publication, authors, intended audience, goals and objectives, cited resources and evidence.

Throughout the process, we deliberately extracted only data relevant to the use of AI in construction occupational health and safety performance, excluding information on the deployment of AI in the construction environment in general, as it did not align with the objectives of the review.

4. Results

We reviewed the literature on the implementation of AI in health and safety management, along with the legal and regulatory factors that could affect AI adoption in the construction industry. Our search yielded 714 records, which were narrowed down to 33 articles after eliminating duplicates. Of these, 26 articles and sources discuss the advantages and challenges of using AI for health and safety management, while 13 focus on the regulatory framework that could affect AI integration in construction health and safety (some sources address both themes). In the following sections, we provide a synthesis under these themes. Table 2 describes other characteristics of the included publications.
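Because 26 + 13 = 39 exceeds the 33 included sources, the two themes must overlap. Assuming, as the text does not state explicitly, that every included source addresses at least one of the two themes, the size of the overlap follows from the inclusion-exclusion principle, with $A$ denoting the benefits-and-challenges theme and $B$ the regulatory-framework theme:

```latex
\begin{aligned}
|A \cup B| &= |A| + |B| - |A \cap B| \\
33 &= 26 + 13 - |A \cap B| \\
|A \cap B| &= 6
\end{aligned}
```

Without that assumption, $|A \cup B| \le 33$, so 6 is a lower bound on the number of sources addressing both themes.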

Table 2.

Document characteristics.

The search strategies used were comprehensive and efficient, and targeted web searches were found to be the most effective way to identify relevant publications. Although Excel was a suitable means of record management, it had limitations, such as the inability to export directly from Google search engines. Despite these limitations, the ‘sort’ function in Excel proved exceptionally helpful in sorting records by screening decision codes and organisation names.

To address the research questions and objectives effectively, the data synthesised from the systematic review must be examined carefully. This involves a thematic analysis that presents the findings concisely and comprehensibly. The following sections also draw on insights from various sources, including grey literature.

4.1. Use of AI in the UK Construction Industry: Benefits and Challenges

The construction industry has traditionally been seen as reactive, low-tech, reliant on human experience and fragmented, having developed on a project-by-project basis [23]. Nevertheless, AI was first used in UK construction law as early as 1988, in Capper and Susskind’s rule-based expert system [24]. This system complemented the Latent Damage Act 1986 [25], helping construction lawyers digest the complicated legislation. AI is now widely used in the UK construction industry for project management and planning. It allows managers to tap into and re-think existing data, making the construction process more efficient, speedy and organised [25]. In particular, advanced algorithms facilitate the analysis of ‘vast amounts of data to predict project timelines, budget requirements and potential risks’ [26]. The ability to predict outcomes and track the time taken by each task is particularly relevant to on-site health and safety.

AI may also support on-site health and safety by improving communication on site and alerting workers to risks, such as when they are about to enter a dangerous area. In this context, AI can help ensure that only authorised personnel enter dangerous areas. In addition, AI can directly improve on-site health and safety by identifying and analysing potential hazards, ensuring that protocols are complied with, and monitoring and flagging unsafe activities [23]. It could also alert site managers to potential issues so that they can resolve risks before anyone gets hurt. Additionally, it can help construction managers navigate work vehicles more safely and avoid on-site collisions [27].

Integrating AI with tools such as building information modelling (BIM) would make the planning stages of a project more efficient and accurate. BIM helps envision different outcomes and make changes at the pre-construction stage [26]. Digitalisation leads to timelier project completion while reducing waste [23]. These gains are indirectly related to workplace safety. In an industry plagued by ‘skilled labour shortages, an ageing workforce, a strain on materials, a delayed supply chain and tighter margins than ever before’ [26], the drive towards efficiency, cost cuts and profits can be detrimental to the workforce. Leveraging the benefits of AI could counteract these pressures and enable efficiency without jeopardising workers’ health and safety.

AI also contributes actively to workers’ safety by using cameras (both on-site and wearable), monitoring workers’ health indicators, detecting high-risk situations, and alerting workers to possible accidents in real time [26]. AI powers robotics, which facilitates numerous automated solutions, such as automated bricklaying or site surveying with drones. This improves safety in the construction industry because dangerous jobs are delegated from humans to machines. It also makes the construction process much faster, since human operators no longer perform many difficult and time-consuming operations [26].

Relatedly, AI algorithms help with the predictive maintenance of construction equipment by analysing data from machinery sensors to predict equipment failures. This could protect workers while also saving time and money by avoiding machinery downtime and repair costs, prolonging the life of equipment and contributing to the project’s success [23]. Besides optimising equipment and construction schedules, AI helps with education, on-the-job training of employees and instilling best practices. AI can help construction novices overcome their lack of experience through quicker, more efficient and streamlined onboarding and by ‘leveraging the experience of construction veterans through systematic data capture’ [23].

AI also helps optimise the industry’s supply chain by reducing project waste and ensuring that sufficient materials are available when needed. By employing predictive analytics, AI can foresee which materials will be in demand and manage inventory efficiently. Avoiding waste makes the project more sustainable and environmentally friendly and supports compliance with existing environmental protection legislation. Finally, AI can add to a project’s positive environmental impact by helping to design energy-efficient buildings [28]. AI may also directly affect workers’ safety. For example, construction businesses in the UK have started implementing new AI-powered H&S equipment, such as the Wear Health exoskeleton.

This technology protects workers’ lives and health. It is very useful in heavy lifting and carrying or when repetitive movements, such as ‘lifting, stretching or reaching overhead for long periods’, are needed [28]. In particular, industry practitioners praise exoskeleton suits for helping workers avoid work-related injuries. The suits’ main contribution is in combatting fatigue and improving task endurance by ‘easing pressure on the lower back and core, [… and] helping to lighten the burden put on the body and back from heavy lifting’ [28]. The suits also feed employers real-time data about the nature and degree of on-site health and safety risks [24]. AI’s potential to ensure better workplace safety is of great importance, given that the construction industry is high risk and workers’ safety remains a major concern.

However, AI is a nascent technology that may expose its users to vulnerabilities. For example, AI may create privacy and confidentiality issues that conflict with existing data protection legislation. The problem is especially pronounced on open-use platforms such as ChatGPT, which are not sufficiently secure: confidentiality and data privacy cannot be guaranteed, and uploaded or inserted data may be viewed and used by others, at times incompetently or maliciously. While private platforms can be used for the same purposes, it is essential to check their settings to achieve the required level of security and privacy. This matters for the construction industry, where the efficiency of health and safety protocols depends on the integrity of the available data. A lack of privacy and security could significantly endanger the construction process and the health and lives of those working on site.

Another problem is generative AI’s ability to hallucinate, or fabricate information, which makes it ever more important to use it only under human supervision [29]. Ethics must also be considered: because AI systems self-learn from data that has historically populated the Internet, they are vulnerable to becoming biased and unfair. AI should therefore be used cautiously and be fully calibrated and supervised to minimise systemic issues [29]. A last consideration is that generative AI is not necessarily industry-specific. It may be designed explicitly with a specific area of law in view or as a legal-tech tool, or it may be general-purpose, and it may be informed by underlying data that may or may not be legally focused or updated with the latest legal developments [29].

AI systems must therefore be used in an informed manner and without excessive reliance. This is particularly relevant to health and safety on construction sites, where a small error or underestimation of risk may cost workers their lives or health. Despite the apparent need, the UK has yet to regulate AI. This has left the domestic construction industry, which relies on AI for its competitiveness and safety, behind its counterparts in the EU and overseas. Nevertheless, there have been some positive developments, and the following section outlines the status quo of the UK AI regulatory and policy framework.

4.2. The Role of Existing Regulations in Addressing Societal Concerns

4.2.1. UK Health and Safety Legislation in the Construction Industry

The UK construction industry is heavily and comprehensively regulated, including with regard to health and safety. The regulatory framework addresses health and safety as part of legislative provisions in the areas of construction design and management [30], the use of substances hazardous to health [31], lifting operations and lifting equipment [32], provision and use of work equipment [33], the use of head protection on construction sites [34], work at height [35], manual handling operations [36], control of noise [37] and vibration at work [38]. Additionally, the UK has legislated directly on health and safety issues. Some of the most notable instruments include The Health and Safety (Consultation with Employees) Regulations 1996 [39], the Health and Safety at Work, etc., Act 1974 [40], The Management of Health and Safety at Work Regulations 1999 [41] and The Construction (Health, Safety and Welfare) Regulations 1996 [42]. Furthermore, UK employers are legally bound [43] to report to the health and safety regulator, the Health and Safety Executive (HSE), ‘certain types of accidents that happen to their employees [including] deaths, serious injuries and dangerous occurrences immediately [as well as] occupational ill-health issues and diseases’ [44]. Similar obligations apply to on-site project management teams when certain dangerous occurrences take place and where an injury has been sustained by ‘a self-employed worker or member of the public’ [45]. Certain health and safety obligations are also imposed on employees [46].

The UK imposes stringent ‘health and safety obligations on all parties involved in a project’ [47]. Risks arising from construction projects can be mitigated through insurance, such as ‘public liability insurance, professional indemnity insurance and contractor’s all risks insurance, [all of which] are available to protect parties against unforeseen events, accidents and liabilities’ [48]. Besides health and safety regulations, the UK construction industry is subject to building regulations, contract and dispute resolution law, and rules on payment and adjudication [49].

The plethora of regulations shows that the Government regards health and safety on construction sites as a matter of great societal concern. It is also clear that the legislator has attempted to provide a comprehensive framework that addresses all possible health and safety weaknesses in the industry. It is arguable, however, that the sheer number of legal obligations makes it challenging for construction companies to comply while remaining competitive and delivering the efficiencies and profits expected of them. AI systems could bring about a qualitative change in the construction industry’s performance by helping businesses navigate this labyrinth of legal obligations, ultimately promoting a safer and more ethically sound domestic construction environment. However, the sector will be much better placed to benefit from the potential of AI if there is greater legal certainty about the implications of AI use.

The Potential Role of AI in Supporting H&S Regulatory Compliance

Artificial intelligence holds significant potential for supporting the UK’s health and safety regulations within the construction industry by providing advanced monitoring, predictive analysis, and risk assessment capabilities. For instance, AI-powered systems can enhance compliance with regulations such as the Control of Substances Hazardous to Health (COSHH) Regulations by analysing data from material safety data sheets, sensor readings, and workplace incident reports to identify potential exposure risks and recommend appropriate control measures. Additionally, AI can support compliance with the Work at Height Regulations by analysing data from sensors and drones to monitor workers’ activities at elevated locations, detect potential fall hazards, and alert supervisors in real time so that they can take preventive action. Moreover, AI-enabled predictive analytics can forecast potential safety risks associated with factors such as weather conditions, equipment usage, and worker behaviour, facilitating the proactive risk management and accident prevention envisaged by regulations such as the Construction (Design and Management) Regulations (CDM).
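To make the Work at Height example more concrete, the Python sketch below shows the kind of real-time alert rule such a monitoring system might apply once sensor or drone data have been processed. It is a minimal rule-based illustration, not a trained AI model, and every name in it (the `Reading` record, its fields and the `fall_hazard_alerts` function) is hypothetical. The `height_threshold_m` default of 2.0 m is an arbitrary tuning parameter chosen for the example, not a figure taken from the Work at Height Regulations.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One processed observation of a worker (hypothetical schema)."""
    worker_id: str
    height_m: float          # estimated working height, e.g. from drone or wearable data
    harness_clipped: bool    # whether a (hypothetical) lanyard sensor reports attachment
    edge_protection: bool    # whether guard rails were detected at the worker's position


def fall_hazard_alerts(readings: list[Reading], height_threshold_m: float = 2.0) -> list[str]:
    """Return one supervisor alert per reading that shows a worker at or above
    the (illustrative) threshold height with neither a clipped harness nor
    edge protection."""
    alerts = []
    for r in readings:
        at_height = r.height_m >= height_threshold_m
        protected = r.harness_clipped or r.edge_protection
        if at_height and not protected:
            alerts.append(f"ALERT {r.worker_id}: {r.height_m:.1f} m without fall protection")
    return alerts


if __name__ == "__main__":
    sample = [
        Reading("W1", 3.5, harness_clipped=False, edge_protection=False),
        Reading("W2", 4.0, harness_clipped=True, edge_protection=False),
        Reading("W3", 0.5, harness_clipped=False, edge_protection=False),
    ]
    for message in fall_hazard_alerts(sample):
        print(message)  # prints: ALERT W1: 3.5 m without fall protection
```

A deployed system would likely replace these fixed rules with models trained on site data, which is where the transparency and accountability questions discussed below become pressing.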

4.3. The Upcoming AI Regulatory Framework

The UK does not yet have a dedicated AI regime for the construction industry, and only recently has the UK Government begun to address AI regulation at all. The turning point was the publication of a white paper on AI in 2023 [44], which outlined the Government’s plans for a ‘pro-innovation’ domestic regulatory framework. The paper did not provide a legal definition of AI; instead, it characterised such systems by two criteria: adaptivity, acquired through training and self-learning, and autonomy, meaning the ability to make decisions without express human intent or supervision [50]. Notably, the white paper’s drafters chose a context-specific approach that focuses on the use and outcomes of AI rather than on AI technology itself, even extending this approach to failures to use AI [50].

The drafters also stopped short of proposing legislative changes or imposing ‘liability or accountability in the AI supply chain, […] leav[ing] this issue to regulators’ [50]. This restraint reflected the Government’s recognition of the sheer number of liability and accountability regimes, which are not harmonised across jurisdictions. It may, however, prove to be a weakness of the proposed regime. Although the paper envisaged that regulators may be placed under a statutory duty to have regard to the five cross-sectoral principles (discussed below), and the Government intends to ensure the regime’s coherence through a cross-sectoral monitoring system, these measures may prove insufficient to keep pace with the speed and scope of AI development. The lack of clearly defined legal obligations may therefore create safety and security challenges, particularly for high-risk industries such as construction. These concerns are amplified by the Government’s plan to maintain the territorial application of the current legal framework [44], even though the AI supply chain is international, which could easily undermine UK legislative efforts. The white paper also set out the Government’s ambition for the UK to become a global leader in building, testing and using AI technology, turning it into ‘the smartest, healthiest, safest and happiest place to live and work’ [44].

While the white paper did not single out any specific industry, the provisions of the proposed AI regulation are relevant to the health and safety aspects of construction law, and the Government’s aspirations, if put into practice, could make the construction industry more efficient, safer and healthier. The white paper states that AI ‘will support people to carry out their existing jobs by helping to improve workforce efficiency and workplace safety’ [44]. Safety is singled out as one of the values that could be threatened by unchecked AI development, along with ‘security, fairness, privacy and agency, human rights, societal well-being and prosperity’ [44]. The Government acknowledges that safety risks caused by AI may include ‘physical damage to humans and property, as well as damage to mental health’ [44]. In particular, the paper recognises the need for close monitoring of ‘safety risks specific to AI technologies… [since a]s the capability and adoption of AI increases, it may pose new and substantial risks that are unaddressed by existing rules’ [44]. The white paper’s admission that AI can give rise to many and varied risks depending on the industry, and that these risks must be identified, assessed and mitigated, indicates that AI systems are not meant to be deployed without due diligence or contrary to the objectives of the relevant laws. The white paper serves as a blueprint for the Artificial Intelligence (Regulation) Bill [HL] [45] and the Initial Guidance for Regulators, published by the Department for Science, Innovation and Technology on 6 February 2024 [45]. Its proposals therefore merit consideration, including their possible impact on the existing health and safety requirements applicable to the domestic construction industry.

As the following discussion shows, although the proposed regulatory framework is designed to be industry-neutral, the five cross-sectoral principles promoted by the white paper are relevant to any industry that employs AI, including construction [47]. For example, the first of these principles, ‘safety, security and robustness’, is particularly relevant to health and safety on the construction site. In this respect, the Government acknowledges AI’s ability ‘to autonomously develop new capabilities and functions’ [44] and regards this as a significant risk, particularly in critical infrastructure sectors or domains [44]. The white paper states that ‘it will be important for all regulators to assess the likelihood that AI could pose a risk to safety in their sector or domain and take a proportionate approach to manage it’ [44]. More specifically, the principle seeks to guarantee the safety, security and resilience of AI systems throughout their life cycle through the timely and continuous identification and assessment, and the proportionate management, of sector-specific risks. Applied to the construction industry, this principle would require AI systems deployed in the industry to be rigorously supervised in real time. The second principle, transparency and explainability, is also relevant because it concerns the information available about the AI system. It reflects the emerging FAT (fairness, accountability and transparency) principle in machine learning [48] and a ‘growing understanding of the importance of legitimacy, fairness, ethical and human-centric approaches’ [48].

The drafters intend these two concepts, taken together, to ensure that the relevant parties are informed about the AI system and are able ‘to access, interpret and understand the decision-making processes’ [47]. The principle of AI transparency is particularly topical because AI often delivers efficiency and protection at the cost of privacy [51], as may be the case in the construction industry. In construction, there is a trade-off between the confidentiality of workers’ data and management’s ability to use AI to protect workers from incidents and make the construction process more efficient. Transparency about AI use therefore becomes an essential part of the process: by making it more ethical, transparency reduces the likelihood that information asymmetry between the parties will result in unfair outcomes.

In the construction industry, accessibility of information is particularly valuable because it enables those directly affected by the use of AI to enforce the rights conferred on them by the proposed legislation. This should enhance safety on construction sites, since the possibility of redress will make employers less inclined to overlook health and safety requirements. The remaining cross-sectoral principles, namely fairness, accountability and governance, and contestability and redress, build on the first two. The fairness principle asserts that AI systems should respect the legal rights of both legal and natural persons and should not discriminate unfairly or create unfair market outcomes.

This requirement envisages the interaction of AI systems with domestic privacy, consumer protection, human rights and financial regulation requirements [51]. Traditionally seen as based on transparency [51], the accountability and governance principle requires businesses to rethink their governance strategies and take measures to increase their accountability across the life cycle of the AI system, with the objective of achieving effective oversight. Lastly, the principle of contestability and redress empowers those affected by an AI system to contest a decision or outcome that has either caused them harm or created a material risk of harm [51]. Future regulation is intended to be AI-specific rather than to tackle the challenges of digital technology more broadly. The new framework aspires to ‘drive growth and prosperity, […] increase public trust in AI [and] strengthen […] the UK’s position as a global leader in AI’ [51].

The push towards safer, better-regulated AI sits well with the construction industry’s 2025 vision, which places significant emphasis on improving health and safety in the sector and, in particular, on reducing occupational illnesses and work-related injuries [52]. Even though this strategy paper was published in 2013, it acknowledged the need for greater investment in smart construction and digital design [52]. By specifically mentioning the role of innovation, it also recognised that digital technologies would become increasingly important for improving on- and off-site health and safety [52], noting that ‘[t]he radical changes promised by the rise of the digital economy will have profound implications for UK construction’ [52].

4.4. Latest and Future Developments

In February 2024, the UK Government released a detailed response on regulating artificial intelligence (AI) [53]. Its approach seeks to balance innovation and safety, ensuring that AI is developed, designed, and used responsibly. The framework is based on non-statutory, cross-sectoral principles intended to provide a comprehensive guide for regulators. The UK’s regulatory approach to AI involves leveraging existing regulatory authorities and frameworks, establishing a central function to monitor and evaluate AI risks, and promoting coherence [54]. The first element uses existing sectoral regulation that can be applied to AI, such as data protection law, to ensure that AI is not developed or used in ways that harm individuals or society. The second element establishes a central function to coordinate and monitor AI risks; this function will be responsible for identifying and addressing AI-related risks, developing guidelines for AI development and use, and supporting regulators, industry, and the public. The third element aims to promote coherence in the regulatory landscape by working with international organisations to develop common standards, sharing best practices among regulators, and creating a common language for AI-related terms and concepts. Moreover, to help innovators comply with legal and regulatory obligations, the UK is launching the AI and Digital Hub in 2024 [55].

The hub aims to provide a one-stop shop for innovators to access guidance on regulatory requirements, compliance tools, and case studies [56]. It will foster cooperation among regulators, industry, and academia to ensure that AI is developed and used responsibly. The success of the UK’s AI regulatory approach will depend on the effectiveness of these measures. The Government has decided not to follow the EU’s approach to regulating AI safety and will instead rely on voluntary commitments from businesses. However, it recognises that not all AI should be treated the same way and has retained the five cross-sectoral principles outlined in the original white paper. Because the approach to AI regulation will be context- and sector-based, the responsibility for deciding what measures should be taken to ensure risk-free AI deployment in the construction industry falls on the HSE as the sector’s regulator [58]. The Government’s stage-one guidance advises that these measures may include the ‘provision of guidance, creation of tools, encouragement to share information and support transparency and the application of voluntary measures, directed towards developers and deployers of AI’ [57].

Regulators are provided with funds to deploy such measures as they deem appropriate, subject to providing an update on their assessment of the sector-related AI risks, the steps undertaken to address them, their AI expertise, capacity and structures, and their plans for the coming year [58]. Given the responsibility placed on the HSE and the construction industry’s need for legal certainty, it is necessary to examine the regulator’s powers and coverage for any existing gaps or weaknesses. The use of AI technologies in construction has the potential to significantly improve safety outcomes, but these technologies must be deployed responsibly and ethically. Regulatory frameworks are critical to managing the introduction of AI in safety-critical contexts, and industry reports, such as those from the HSE, highlight the importance of regulation in mitigating the risks associated with deploying AI in construction. Reliability, accountability, and fairness are valid concerns for AI systems that lack adequate regulation. To address these concerns, policymakers need to establish standards for AI development, testing, and implementation, and to put in place oversight mechanisms that monitor compliance with health and safety regulations.

The UK government’s stage-two guidance, expected in summer 2024, is likely to introduce objective tests to measure the level of maturity of AI risks. The construction industry must be proactive in assessing and managing risks associated with AI deployment to remain abreast of potential regulatory changes. By implementing robust regulatory frameworks, policymakers can ensure that AI technologies are deployed responsibly, protecting the well-being of the construction workforce while maximising the benefits of innovation in health and safety. Nevertheless, the question remains: how will law and regulation evolve as AI technology advances?

Is It Viable to Establish AI as a Legal Entity?

AI has advanced significantly in recent years, prompting discussion of whether AI systems could be held legally responsible for accidents and safety lapses on construction sites [59]. This largely theoretical question raises issues about the legal and societal framework that such a scenario would require. First, AI systems would have to be recognised as legal entities capable of bearing responsibility [60], which would mean granting AI a form of legal personhood that the law does not currently recognise [61]. Second, AI systems would need a level of autonomy sufficient to make independent decisions without human input, which remains a significant challenge given current technological capabilities and ethical boundaries. Third, new regulatory frameworks and ethical guidelines would need to be developed specifically to address the implications of autonomous machine decision-making [62], since there is currently no legal framework for holding AI systems accountable for their actions; clear guidelines and regulations are therefore needed to ensure that AI systems operate within ethical and legal boundaries. Finally, practical mechanisms, such as special insurance schemes or financial provisions, would have to be instituted to manage penalties and liabilities incurred autonomously by such entities [63], requiring a significant shift in how insurance and liability are currently managed. While theoretically conceivable, this shift would require profound legal, ethical, and technological advances and significant public and political support [64,65]. Until then, responsibility for the actions of AI systems on construction sites will likely remain firmly with the humans and organisations who design, deploy, and oversee them. It is therefore essential to prioritise the responsible use and deployment of AI systems to ensure safety and minimise the risk of accidents and safety lapses on construction sites.

5. Research Limitations

A systematic review aimed at improving occupational health and safety in the UK construction sector through the responsible use of AI may face several limitations and biases. Accessing grey literature sources can be challenging, resulting in incomplete coverage and potential bias. Language bias is possible if the review includes only English-language publications. The review’s scope may unintentionally leave out relevant studies or grey literature sources, which can lead to knowledge gaps. Additionally, temporal bias can occur because the rapid evolution of AI technology may render older studies irrelevant to current practices. Addressing these limitations and biases is essential to ensure that the review’s findings and conclusions are robust and reliable.

6. Summary and Conclusions

Based on a PRISMA-based systematic review of grey literature, this article explores the legal and social concerns associated with the appropriate implementation of AI in the UK’s construction industry. The review’s findings indicate that the Health and Safety Executive (HSE) requires standardised authorities to oversee the use of AI in the UK construction industry effectively. This recommendation can help to ensure the safety and security of workers and the public while facilitating the efficient and ethical use of AI in construction. The existing legal and regulatory framework provides a strong foundation for risk management. However, it does not yet sufficiently account for the socio-legal dimensions introduced by AI deployment or for how AI may evolve in the future.

As AI evolves, the prospect of AI systems being held legally responsible for accidents and safety lapses on construction sites remains a topic of significant theoretical discussion and legal exploration. However, substantial changes to the legal and societal framework would be necessary for such a scenario to materialise. These include the potential establishment of legal personhood for AI, whereby systems could be recognised as legal entities capable of bearing responsibility. Additionally, AI systems would need to be autonomous enough to make independent decisions without human input, which challenges current technological capabilities and ethical boundaries.

New regulatory frameworks and ethical guidelines would also need to be developed specifically to address the implications of machines’ autonomous decision-making. Moreover, practical mechanisms, such as special insurance schemes or financial provisions, would have to be instituted to manage penalties and liabilities autonomously incurred by such entities. While theoretically conceivable, this shift would require profound legal, ethical, and technological advancements and significant public and political support. Until then, legal responsibility for the actions of AI systems on construction sites will likely remain firmly with the humans and organisations who design, deploy, and oversee them.

As discussed above, the UK Government has introduced a comprehensive framework to regulate the development and use of AI in a manner that is both innovative and secure. This framework will make use of existing regulatory authorities and structures, establish a central function to monitor and assess AI-related risks, and promote coherence in regulatory policies. To give innovators access to guidance on regulatory requirements, compliance tools, and case studies, the UK is set to launch the AI and Digital Hub.

The HSE is responsible for ensuring safe AI deployment in the construction industry. The UK Government’s stage-two guidance, expected in summer 2024, is likely to introduce objective tests to measure the level of maturity of AI risks. To ensure that AI technology is implemented responsibly, it is essential to recognise that the HSE may require additional resources and powers.

The review has emphasised the critical importance of setting up standardised authorities for the HSE, which is responsible for overseeing the use of AI in the construction industry. These authorities will allow the HSE to collect comprehensive and accurate data on AI processes and to carry out technical, empirical, and governance audits, helping to ensure that AI systems are designed and implemented safely and responsibly. Effective AI oversight will therefore depend on the HSE being appropriately resourced and empowered.

This may involve investing in additional staff, training and tools to enhance the HSE’s capacity to monitor AI systems and identify potential risks or issues. The HSE must be further equipped with the necessary powers to enforce safety standards and hold companies accountable for any breaches of these standards. Overall, the successful implementation of standardised authorities for the HSE will play a vital role in ensuring that AI is used safely, responsibly and ethically in the construction industry. Future studies should also evaluate the ongoing legislative efforts, such as those by the UK Government, and assess their adequacy in addressing the intricacies of AI deployment within construction safety.

Funding

This research received no external funding.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Winfield, M. Legal Implications of Digitization in the Construction Industry. In Industry 4.0 for the Built Environment: Methodologies, Technologies and Skills; Springer International Publishing: Cham, Switzerland, 2021; pp. 391–411. [Google Scholar]

- HM Government. The Construction Playbook. Cabinet Office. 2020. Available online: https://www.gov.uk/government/publications/the-construction-playbook (accessed on 18 February 2024).

- Shojaei, R.S.; Burgess, G. Non-technical inhibitors: Exploring the adoption of digital innovation in the UK construction industry. Technol. Forecast. Soc. Change 2022, 185, 122036. [Google Scholar] [CrossRef]

- Yuri, T.; Nikolai, K.; Fatima, T.; Sayana, B. Law and Digital Transformation. Leg. Issues Digit. Age 2021, 4, 3–20. [Google Scholar]

- Weber-Lewerenz, B. Corporate digital responsibility (CDR) in construction engineering—ethical guidelines for the application of digital transformation and artificial intelligence (AI) in user practice. SN Appl. Sci. 2021, 3, 801. [Google Scholar] [CrossRef]

- Abioye, S.O.; Oyedele, L.O.; Akanbi, L.; Ajayi, A.; Delgado, J.M.D.; Bilal, M.; Akinade, O.O.; Ahmed, A. Artificial intelligence in the construction industry: A review of present status, opportunities and future challenges. J. Build. Eng. 2021, 44, 103299. [Google Scholar] [CrossRef]

- Wang, K.; Guo, F.; Zhang, C.; Schaefer, D. From Industry 4.0 to Construction 4.0: Barriers to the digital transformation of engineering and construction sectors. Eng. Constr. Archit. Manag. 2022. ahead-of-print. [Google Scholar]

- CBS News. Colombian Judge Uses ChatGPT in Ruling on Child’s Medical Rights Case. 2023. Available online: https://www.cbsnews.com/news/colombian-judge-uses-chatgpt-in-ruling-on-childs-medical-rights-case/ (accessed on 18 February 2024).

- The Week. Colombian Judge Uses ChatGPT in Ruling, Triggers Debate. 2023. Available online: https://www.theweek.in/news/sci-tech/2023/02/03/colombian-judge-uses-chatgpt-in-ruling-triggers-debate.amp.html (accessed on 18 February 2024).

- Sinclair, S.; Merken, S. New York Lawyers Sanctioned for Using Fake ChatGPT Cases in Legal Brief. Reuters. 2023. Available online: https://www.reuters.com/legal/new-york-lawyers-sanctioned-using-fake-chatgpt-cases-legal-brief-2023-06-22/ (accessed on 18 February 2024).

- Gates, B. AI is About to Completely Change How You Use Computers. Gates Notes. 2023. Available online: https://www.gatesnotes.com/AI agents?WT.mc_id=20230907090000_AI-Agents_BG EM_&WT.tsrc=BGEM (accessed on 15 February 2024).

- Lawlor, R.C. What Computers Can Do: Analysis and Prediction of Judicial Decisions. Am. Bar Assoc. J. 1963, 49, 337. [Google Scholar]

- Henz, P. Ethical and legal responsibility for Artificial Intelligence. Springer, Discover Artificial Intelligence, 2021. Available online: https://link.springer.com/article/10.1007/s44163-021-00002-4 (accessed on 18 February 2024).

- Gordon, J.S. AI and Law: Ethical, Legal, and Socio-Political Implications. AI & Society 2021, 36, 403–404. Available online: https://link.springer.com/article/10.1007/s00146-021-01194-0 (accessed on 18 February 2024).

- Slotkin, J. ‘Monkey Selfie’ Lawsuit Ends with Settlement between PETA, Photographer. 2017. Available online: https://www.npr.org/sections/thetwo-way/2017/09/12/550417823/-animal-rights-advocates-photographer-compromise-over-ownership-of-monkey-selfie (accessed on 19 February 2024).

- Marshall, A. ‘Why Wasn’t Uber Charged in a Fatal Self-Driving Car Crash?’ Wired. 2020. Available online: https://www.wired.com/story/why-not-uber-charged-fatal-self-driving-car-crash/ (accessed on 19 February 2024).

- Domonoske, C. ‘Monkey Cannot Own Copyright to His Selfie, Federal Judge Says’. The Two-Way. 2016. Available online: https://www.npr.org/sections/thetwo-way/2016/01/07/462245189/federal-judge-says-monkey-cant-own-copyright-to-his-selfie (accessed on 19 February 2024).

- Appel, G.; Neelbauer, J.; Schweidel, D.A. ‘Generative AI Has an Intellectual Property Problem’. Harvard Business Review. 2023. Available online: https://hbr.org/2023/04/generative-ai-has-an-intellectual-property-problem (accessed on 19 February 2024).

- Blouin, L. ‘AI’s Mysterious ‘Black Box’ Problem, Explained’. University of Michigan-Dearborn. 2023. Available online: https://umdearborn.edu/news/ais-mysterious-black-box-problem-explained (accessed on 19 February 2024).

- Robinette, P.; Li, W.; Allen, R.; Howard, A.M.; Wagner, A.R. ‘Over Trust of Robots in Emergency Evacuation Scenarios’. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016; Available online: https://ieeexplore.ieee.org/abstract/document/7451740 (accessed on 19 February 2024).

- Bertallee, C. ‘New Study: 64% of People Trust a Robot More than Their Manager’. Oracle. 2019. Available online: https://www.oracle.com/corporate/pressrelease/robots-at-work-101519.html (accessed on 19 February 2024).

- Sarkis-Onofre, R.; Catalá-López, F.; Aromataris, E.; Lockwood, C. How to properly use the PRISMA Statement. Syst. Rev. 2021, 10, 117. [Google Scholar] [CrossRef] [PubMed]

- Godin, K.; Stapleton, J.; Kirkpatrick, S.I.; Hanning, R.M.; Leatherdale, S.T. Applying systematic review search methods to the grey literature: A case study examining guidelines for school-based breakfast programs in Canada. Syst. Rev. 2015, 4, 138. [Google Scholar] [CrossRef] [PubMed]

- Oren, M. ‘4 Ways AI is Revolutionising the Construction Industry’. WEF. 2023. Available online: https://www.weforum.org/agenda/2023/06/4-ways-ai-is-revolutionising-the-construction-industry/ (accessed on 21 February 2024).

- Capper, P.; Susskind, R. Latent Damage Law: The Expert System. In Butterworths Law; Butterworths: Oxford, UK, 1988. [Google Scholar]

- HM Government. Latent Damage Act. 1986. Available online: https://www.legislation.gov.uk/ukpga/1986/37#:~:text=An%20Act%20to%20amend%20the,property%20occurring%20before%20he%20takes (accessed on 18 February 2024).

- Widlund, J.X. ‘AI in the UK Construction Industry’. Civils.ai. 2023. Available online: https://civils.ai/blog/ai-in-the-uk-construction-industry#:~:text=Enhanced%20Safety%20with%20AI%20Monitoring&text=AI%20technology%20is%20being%20employed,time%20alerts%20to%20prevent%20accidents (accessed on 18 February 2024).

- Garrate, C. ‘How is AI Changing the Construction Industry?’ AI Magazine. 2021. Available online: https://aimagazine.com/ai-applications/how-ai-changing-construction-industry (accessed on 22 February 2024).

- Sharp, G. ‘AI has Arrived in the UK. How Will it Make the Construction Sector Safer?’ LinkedIn. 2019. Available online: https://www.linkedin.com/pulse/ai-has-arrived-uk-how-make-construction-sector-safer-graham-sharp (accessed on 22 February 2024).

- HM Government. The Control of Substances Hazardous to Health Regulations. 2002, No. 2677. Available online: https://www.legislation.gov.uk/uksi/2002/2677/regulation/7/made (accessed on 15 February 2024).

- HSE. The Lifting Operations and Lifting Equipment Regulations. 1998, No. 2307. Available online: https://www.hse.gov.uk/work-equipment-machinery/loler.htm (accessed on 15 February 2024).

- HSE. The Provision and Use of Work Equipment Regulations. 1998, No. 2306. Available online: https://www.hse.gov.uk/work-equipment-machinery/puwer.htm#:~:text=PUWER%20requires%20that%20equipment%20provided,adequate%20information%2C%20instruction%20and%20training (accessed on 15 February 2024).

- HM Government. The Construction (Head Protection) Regulations. 1989, No. 2209. Available online: https://www.legislation.gov.uk/uksi/1989/2209/made (accessed on 15 February 2024).

- HM Government. The Work at Height Regulations. 2005, No. 735. Available online: https://www.legislation.gov.uk/uksi/2005/735/contents/made (accessed on 15 February 2024).

- HSE. The Manual Handling Operations Regulations. 1992, No. 2793. Available online: https://www.hse.gov.uk/pubns/books/l23.htm (accessed on 15 February 2024).

- HM Government. The Control of Noise at Work Regulations. 2005, No. 1643. Available online: https://www.legislation.gov.uk/uksi/2005/1643/contents/made (accessed on 15 February 2024).

- HM Government. The Control of Vibration at Work Regulations. 2005, No. 1093. Available online: https://www.legislation.gov.uk/uksi/2005/1093/contents/made (accessed on 15 February 2024).

- HM Government. The Health and Safety (Consultation with Employees) Regulations. 1996, No. 1513. Available online: https://www.legislation.gov.uk/uksi/1996/1513/contents/made (accessed on 15 February 2024).

- HM Government. Health and Safety at Work etc. Act. 1974, c. 37. Available online: https://www.legislation.gov.uk/ukpga/1974/37/contents (accessed on 15 February 2024).

- HM Government. The Management of Health and Safety at Work Regulations. 1999, No. 3242. Available online: https://www.legislation.gov.uk/uksi/1999/3242/contents/made (accessed on 15 February 2024).

- HM Government. The Construction (Health, Safety and Welfare) Regulations. 1996, No. 1592. Available online: https://www.legislation.gov.uk/uksi/1996/1592/contents/made (accessed on 15 February 2024).

- HM Government. The Reporting of Injuries, Diseases and Dangerous Occurrences Regulations. 1995, No. 3163. Available online: https://www.legislation.gov.uk/uksi/1995/3163/contents/made (accessed on 15 February 2024).

- Health and Safety Executive. Health and Safety in Construction, 3rd ed.; HSE, 2006. Available online: https://www.hse.gov.uk/pubns/priced/hsg150.pdf (accessed on 18 February 2024).

- Office for Artificial Intelligence. ‘A Pro-Innovation Approach to AI Regulation’. White Paper. 2023. Available online: https://www.gov.uk/government/publications/ai-regulation-a-pro-innovation-approach/white-paper (accessed on 20 February 2024). See also the updated consultation outcome.

- Artificial Intelligence (Regulation) Bill [HL] HL Bill 11, 58/4. Available online: https://bills.parliament.uk/publications/53068/documents/4030 (accessed on 20 February 2024).

- Department for Science, Innovation & Technology. ‘Implementing the UK’s AI Regulatory Principles: Initial Guidance for Regulators’. 2024. Available online: https://www.gov.uk/government/publications/implementing-the-uks-ai-regulatory-principles-initial-guidance-for-regulators (accessed on 20 February 2024).

- Melby, B.M.; Pierides, M.; Mulligan, J. ‘UK Government Publishes AI Regulatory Framework’. Morgan Lewis. 2023. Available online: https://www.morganlewis.com/blogs/sourcingatmorganlewis/2023/04/uk-government-publishes-ai-regulatory-framework (accessed on 19 February 2024).

- FAT/ML. Fairness, Accountability, and Transparency in Machine Learning. 2018. Available online: https://www.fatml.org/ (accessed on 20 February 2024).

- HM Government. The Housing Grants, Construction and Regeneration Act 1996, c. 53, supplemented by the Scheme for Construction Contracts (England and Wales) Regulations 1998, No. 649. Available online: https://www.legislation.gov.uk/ukpga/1996/53/contents (accessed on 15 February 2024).

- Sinclair, S. ‘AI is About to Completely Change Construction Law’. Fenwick Elliott. 2023. Available online: https://www.fenwickelliott.com/research-insight/annual-review/2023/ai-change-construction-law#footnote2_lr32dw9 (accessed on 15 February 2024).

- Larsson, S. ‘The Socio-Legal Relevance of Artificial Intelligence’. Lund University. 2019. Available online: https://lucris.lub.lu.se/ws/portalfiles/portal/72918424/Larsson_2019_Socio_Legal_Relevance_of_AI_FINAL_Web.pdf (accessed on 20 February 2024).

- Larsson, S.; Heintz, F. Transparency in Artificial Intelligence. Internet Policy Rev. 2020, 9. Available online: https://policyreview.info/concepts/transparency-artificial-intelligence (accessed on 20 February 2024).

- HM Government. Construction 2025: Industrial Strategy for Construction—Government and Industry in Partnership. 2013. Available online: https://www.gov.uk/government/publications/construction-2025-strategy (accessed on 22 February 2024).

- HM Government. ‘Introduction to AI Assurance’. 2024. Available online: https://www.gov.uk/government/publications/introduction-to-ai-assurance/introduction-to-ai-assurance (accessed on 22 February 2024).

- Department for Science, Innovation & Technology. ‘Portfolio of AI Assurance Techniques’. Gov.UK. 2023. Available online: https://www.gov.uk/guidance/cdei-portfolio-of-ai-assurance-techniques (accessed on 23 February 2024).

- Department for Science, Innovation & Technology. ‘A Pro-Innovation Approach to AI Regulation: Government Response’. Consultation Outcome. HM Government. 2024. Available online: https://www.gov.uk/government/consultations/ai-regulation-a-pro-innovation-approach-policy-proposals/outcome/a-pro-innovation-approach-to-ai-regulation-government-response#introduction (accessed on 23 February 2024).

- Gallardo, C. ‘AI Startups Stay Cool as UK Signals “Binding” Safety Requirements’. Sifted. 2024. Available online: https://sifted.eu/articles/ai-startups-stay-cool-as-uk-signals-binding-safety-requirements (accessed on 22 February 2024).

- Keeling, E.; Brown, J.F. ‘What is in Store for UK AI: The Long Awaited Government Response is Here’. Allen & Overy. 2024. Available online: https://www.allenovery.com/en-gb/global/blogs/tech-talk/what-is-in-store-for-uk-ai-the-long-awaited-government-response-is-here#:~:text=In%20the%20Response%2C%20the%20Government,in%20today’s%20most%20advanced%20models (accessed on 23 February 2024).

- Anonymous. ‘Construction and Manufacturing Law and AI—The Rise of the Machines’. 2017. Available online: https://www.fisherscogginswaters.co.uk/ (accessed on 23 February 2024).

- Easen, N. ‘Construction: An Industry Ripe for Tech Disruption’. Raconteur. 2017. Available online: https://www.raconteur.net/business/construction-an-industry-ripe-for-tech-disruption (accessed on 23 February 2024).

- Hunt, J. ‘This Company’s Robots Are Making Everything—and Reshaping the World’. Bloomberg. 2017. Available online: https://www.bloomberg.com/news/features/2017-10-18/this-company-s-robots-are-making-everything-and-reshaping-the-world (accessed on 23 February 2024).

- Blair, G. ‘Why Japan Will Profit the Most from Artificial Intelligence’. South China Morning Post. 2017. Available online: http://www.scmp.com/week-asia/business/article/2104809/why-japan-will-profit-most-artificial-intelligence (accessed on 23 February 2024).

- Krywko, J. ‘Scientists Believe They’ve Nailed the Combination that Could Help Robots FEEL Love’. Quartz. 2018. Available online: https://qz.com/838420/scientists-built-a-robot-that-feels-emotion-and-can-understand-if-you-love-it-or-not/ (accessed on 23 February 2024).

- Beltramini, E. Life 3.0: Being Human in the Age of Artificial Intelligence, by Max Tegmark. Relig. Theol. 2019, 26, 169–171.

- UK Parliament. What Impact is AI Having on Ethics and the Law? 2017. Available online: https://www.parliament.uk/business/committees/committees-a-z/lords-select/ai-committee/news-parliament-2017/ethics-and-law-evidence-session/ (accessed on 24 February 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).