Building and Eroding the Citizen–State Relationship in the Era of Algorithmic Decision-Making: Towards a New Conceptual Model of Institutional Trust

Abstract

1. Introduction

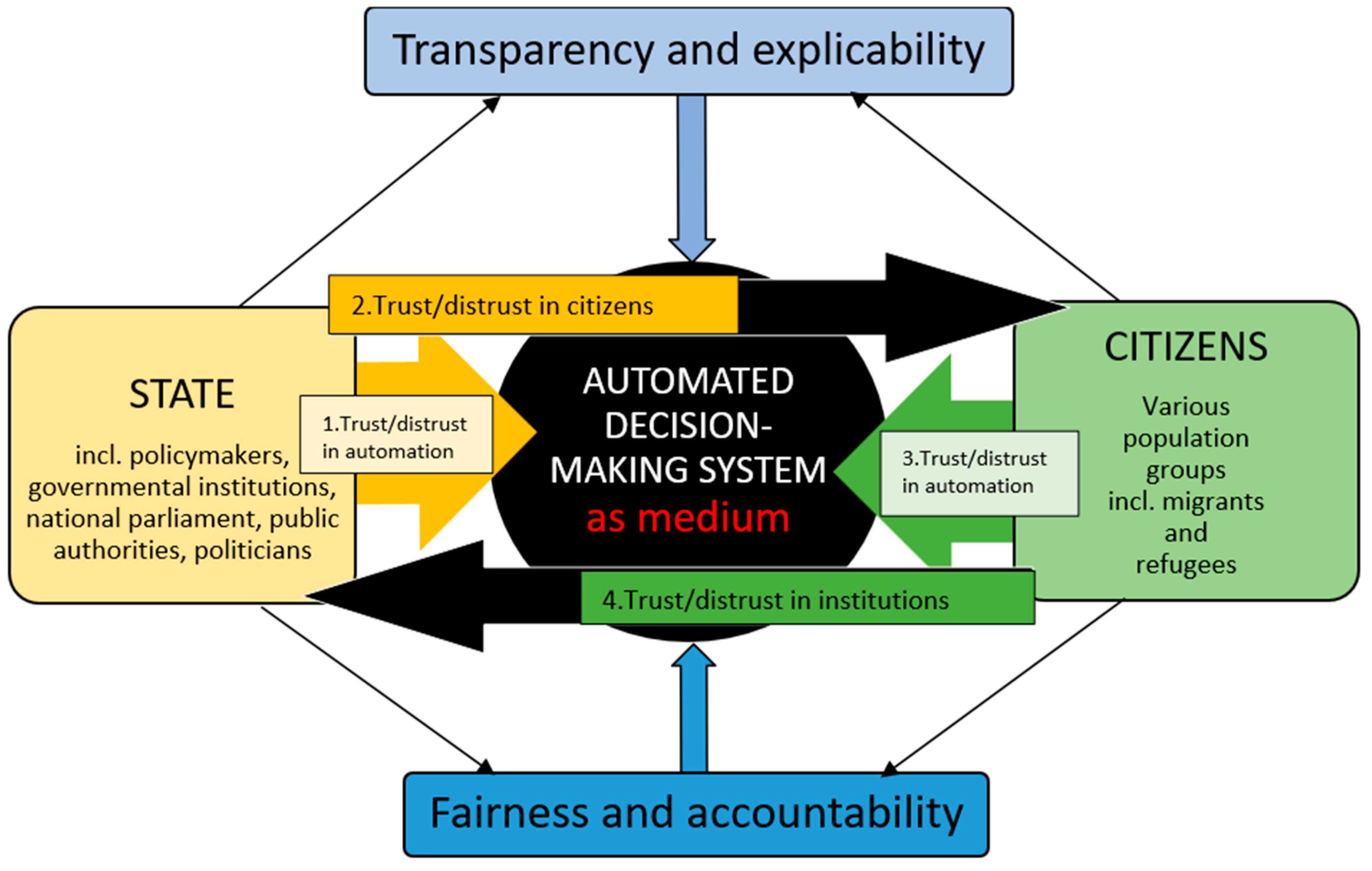

2. Institutional Trust

3. Institutional (Dis)trust in and Through Algorithmic Systems?

4. Literature Material

5. Discussion in the Sample of Studies on Automated Decision-Making

5.1. Policymakers Trust Automated Systems to Provide Services Efficiently and Equally

5.2. Policymakers’ Distrust of Citizens Drives the Implementation of Automated Decision-Making Systems for Administration

The trust in the citizens we serve is too low among public agencies in Sweden. The system is based on the notion that the majority cheat. The control system is designed with that in mind. Our activities are organized for the majority instead of the minority. (p. 7)

5.3. The Perspectives of Vulnerable Citizens Are Missing in the Discussions on Trust in Automated Systems

5.4. Do Discriminating Systems Erode Vulnerable Citizens’ Trust in Administrative Operations?

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Adam-Troian, Jais, Maria Chayinska, Maria Paola Paladino, Özden Melis Uluğ, Jeroen Vaes, and Pascal Wagner-Egger. 2023. Of Precarity and Conspiracy: Introducing a Socio-functional Model of Conspiracy Beliefs. British Journal of Social Psychology 62: 136–59.

- Agar, Jon. 2003. The Government Machine: A Revolutionary History of the Computer. Cambridge: MIT Press.

- AlgorithmWatch. 2019. Automating Society: Taking Stock of Automated Decision-Making in the EU. A Report by AlgorithmWatch in Cooperation with Bertelsmann Stiftung, Supported by the Open Society Foundations, 1st ed. Berlin: AW AlgorithmWatch GmbH. Available online: www.algorithmwatch.org/automating-society (accessed on 6 March 2025).

- Anderson, Mark, and Karën Fort. 2022. Human Where? A New Scale Defining Human Involvement in Technology Communities from an Ethical Standpoint. International Review of Information Ethics 31: hal-03762035.

- Araujo, Theo, Natali Helberger, Sanne Kruikemeier, and Claes H. De Vreese. 2020. In AI We Trust? Perceptions about Automated Decision-making by Artificial Intelligence. AI & Society 35: 611–23.

- Behnam Shad, Klaus. 2023. Artificial Intelligence-related Anomies and Predictive Policing: Normative (Dis)orders in Liberal Democracies. AI & Society, 1–12.

- Belle, Vaishak, and Ioannis Papantonis. 2021. Principles and Practice of Explainable Machine Learning. Frontiers in Big Data 4: 688969.

- Berg, Monika, and Tobias Johansson. 2020. Building Institutional Trust Through Service Experiences—Private Versus Public Provision. Journal of Public Administration Research and Theory 30: 290–306.

- Bovens, Mark, and Stavros Zouridis. 2002. From Street-level to System-level Bureaucracies: How Information and Communication Technology is Transforming Administrative Discretion and Constitutional Control. Public Administration Review 62: 174–84.

- Burrell, Jenna. 2016. How the Machine ‘Thinks’: Understanding Opacity in Machine Learning Algorithms. Big Data & Society 3: 1–12.

- Carney, Terry. 2019. Robo-debt Illegality: The Seven Veils of Failed Guarantees of the Rule of Law? Alternative Law Journal 44: 4–10.

- Carney, Terry. 2023. The Automated Welfare State: Challenges for Socioeconomic Rights of the Marginalised. In Money, Power, and AI: Automated Banks and Automated States. Edited by Zofia Bednarz and Monika Zalnieriute. Cambridge: Cambridge University Press, pp. 95–115.

- Casey, Simone J. 2022. Towards Digital Dole Parole: A Review of Digital Self-service Initiatives in Australian Employment Services. Australian Journal of Social Issues 57: 111–24.

- Choi, Intae. 2016. Digital Era Governance: IT Corporations, the State, and e-Government. Abingdon: Taylor & Francis.

- Coglianese, Cary. 2023. Law and Empathy in the Automated State. In Money, Power, and AI: Automated Banks and Automated States. Edited by Zofia Bednarz and Monika Zalnieriute. Cambridge: Cambridge University Press, pp. 173–88.

- Cookson, Clive. 2018. Artificial Intelligence Faces Public Backlash, Warns Scientist. Financial Times. June 9. Available online: https://www.ft.com/content/0b301152-b0f8-11e8-99ca-68cf89602132 (accessed on 6 March 2025).

- Cordella, Antonio, and Niccolò Tempini. 2015. E-government and Organizational Change: Reappraising the Role of ICT and Bureaucracy in Public Service Delivery. Government Information Quarterly 32: 279–86.

- Crouch, Colin. 2020. Post-Democracy After the Crises. Cambridge: Polity Press.

- Danaher, John. 2016. The Threat of Algocracy: Reality, Resistance and Accommodation. Philosophy & Technology 29: 245–68.

- de Bruijn, Hans, Martijn Warnier, and Marijn Janssen. 2022. The Perils and Pitfalls of Explainable AI: Strategies for Explaining Algorithmic Decision-Making. Government Information Quarterly 39: 1–8.

- De Sousa, Weslei G., Elis R. P. De Melo, Paulo H. De Souza Bermejo, Rafael A. Sousa Farias, and Adalmir O. Gomes. 2019. How and Where is Artificial Intelligence in the Public Sector Going? A Literature Review and Research Agenda. Government Information Quarterly 36: 101392.

- Dencik, Lina, Arne Hintz, Joanna Redden, and Emiliano Treré. 2022. Data Justice. Thousand Oaks: Sage Publications.

- Du, Mengnan, Ninghao Liu, and Xia Hu. 2019. Techniques for Interpretable Machine Learning. Communications of the ACM 63: 68–77.

- Elish, Madeleine C., and Danah Boyd. 2018. Situating Methods in the Magic of Big Data and AI. Communication Monographs 85: 57–80.

- Engstrom, David F., Daniel E. Ho, Catherine M. Sharkey, and Mariano-Florentino Cuéllar. 2020. Government by Algorithm: Artificial Intelligence in Federal Administrative Agencies. New York: NYU School of Law, pp. 20–54.

- Ervasti, Heikki, Antti Kouvo, and Takis Venetoklis. 2019. Social and Institutional Trust in Times of Crisis: Greece, 2002–2011. Social Indicators Research 141: 1207–31.

- Eubanks, Virginia. 2018. Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor. New York: St. Martin’s Press.

- European Commission. 2023. Automated Decision-Making Impacting Society. The Pervasiveness of Digital Technologies Is Leading to an Increase in Automated Decision-Making. Available online: https://knowledge4policy.ec.europa.eu/foresight/automated-decision-making-impacting-society_en (accessed on 16 February 2025).

- European Committee for Democracy and Governance. 2021. Study on the Impact of Digital Transformation on Democracy and Good Governance. Available online: https://rm.coe.int/study-on-the-impact-of-digital-transformation-on-democracy-and-good-go/1680a3b9f9 (accessed on 6 March 2025).

- European Parliament. 2022. Digitalisation and Administrative Law. European Added Value Assessment. Available online: https://www.europarl.europa.eu (accessed on 6 March 2025).

- Gaffney, Stephen, and Michelle Millar. 2020. Rational Skivers or Desperate Strivers? The Problematisation of Fraud in the Irish Social Protection System. Critical Social Policy 40: 69–88.

- Giddens, Anthony. 1991. The Consequences of Modernity. Cambridge: Polity Press.

- Goggin, Gerard, and Karen Soldatić. 2022. Automated Decision-making, Digital Inclusion and Intersectional Disabilities. New Media & Society 24: 384–400.

- Griffiths, Rita. 2021. Universal Credit and Automated Decision Making: A Case of the Digital Tail Wagging the Policy Dog? Social Policy and Society: A Journal of the Social Policy Association 23: 1–18.

- Grimmelikhuijsen, Stephan. 2022. Explaining Why the Computer Says No: Algorithmic Transparency Affects the Perceived Trustworthiness of Automated Decision-making. Public Administration Review 23: 1–18.

- Guszcza, James, David Schweidel, and Shantanu Dutta. 2014. The Personalized and the Personal: Socially Responsible Innovation through Big Data. Deloitte Review 14: 95–109.

- Győrffy, Dóra. 2013. Institutional Trust and Economic Policy: Lessons from the History of the Euro. Amsterdam: Amsterdam University Press.

- Hadwick, David, and Shimeng Lan. 2021. Lessons to Be Learned from the Dutch Childcare Allowance Scandal: A Comparative Review of Algorithmic Governance by Tax Administrations in the Netherlands, France and Germany. World Tax Journal 13: 1–53.

- Harcourt, Bernard E. 2007. Against Prediction: Profiling, Policing, and Punishing in an Actuarial Age. Chicago: University of Chicago Press.

- Hardin, Russell. 1999. Do We Want to Trust the Government? In Democracy and Trust. Edited by Mark E. Warren. Cambridge: Cambridge University Press, pp. 22–41.

- Helberger, Natali, Theo Araujo, and Claes H. De Vreese. 2020. Who is the Fairest of Them All? Public Attitudes and Expectations regarding Automated Decision-making. Computer Law & Security Review 39: 105456.

- Hetherington, Marc J. 2004. Why Trust Matters: Declining Political Trust and the Demise of American Liberalism. Princeton: Princeton University Press.

- Inglehart, Ronald, and Christian Welzel. 2005. Modernization, Cultural Change, and Democracy: The Human Development Sequence. Cambridge: Cambridge University Press.

- Jackson, Jonathan, and Jacinta M. Gau. 2015. Carving up Concepts? Differentiating between Trust and Legitimacy in Public Attitudes towards Legal Authority. In Interdisciplinary Perspectives on Trust: Towards Theoretical and Methodological Integration. Edited by Ellie Shockley, Tess M. S. Neal, Lisa M. PytlikZillig and Brian H. Bornstein. Berlin and Heidelberg: Springer International Publishing AG, pp. 49–69.

- Janssen, Marijn, and Jeroen Van den Hoven. 2015. Big and Open Linked Data (BOLD) in Government: A Challenge to Transparency and Privacy? Government Information Quarterly 32: 363–68.

- Johansson, Bengt, Jacob Sohlberg, Peter Esaiasson, and Marina Ghersetti. 2021. Why Swedes Don’t Wear Face Masks during the Pandemic—A Consequence of Blindly Trusting the Government. Journal of International Crisis and Risk Communication Research 4: 335–58.

- Kaun, Anne. 2021. Suing the Algorithm: The Mundanization of Automated Decision-Making in Public Services through Litigation. Information, Communication & Society 25: 2046–62.

- Kaun, Anne, Anders O. Larsson, and Anu Masso. 2024. Automating Public Administration: Citizens’ Attitudes towards Automated Decision-making across Estonia, Sweden, and Germany. Information, Communication & Society 27: 314–32.

- Kitchin, Rob. 2017. Thinking Critically About and Researching Algorithms. Information, Communication & Society 20: 14–29.

- Kroll, Joshua A., Joanna Huey, Solon Barocas, Edward W. Felten, Joel R. Reidenberg, David G. Robinson, and Harlan Yu. 2017. Accountable Algorithms. University of Pennsylvania Law Review 165: 633–705.

- Larsson, Karl K., and Marit Haldar. 2021. Can Computers Automate Welfare? Norwegian Efforts to Make Welfare Policy More Effective. Journal of Extreme Anthropology 5: 56–77.

- Lee, Min K. 2018. Understanding Perception of Algorithmic Decisions: Fairness, Trust, and Emotion in Response to Algorithmic Management. Big Data & Society 5: 2053951718756684.

- Lipsky, Michael. 2010. Street-Level Bureaucracy: Dilemmas of the Individual in Public Services. New York: Russell Sage Foundation.

- Madhavan, Poornima, and Douglas A. Wiegmann. 2007. Effects of Information Source, Pedigree, and Reliability on Operator Interaction with Decision Support Systems. Human Factors 49: 773–85.

- McNamara, Jim. 2018. Organizational Listening: The Missing Essential Ingredient in Public Communication. Lausanne: Peter Lang.

- Meijer, Albert, and Stephan Grimmelikhuijsen. 2020. Responsible and Accountable Algorithmization: How to Generate Citizen Trust in Governmental Usage of Algorithms. In The Algorithmic Society: Technology, Power, and Knowledge. Edited by Marc Schuilenburg and Rik Peeters. London: Routledge.

- Miller, Tim. 2019. Explanation in Artificial Intelligence: Insights from the Social Sciences. Artificial Intelligence 267: 1–38.

- Minas, Renate. 2014. One-stop Shops: Increasing Employability and Overcoming Welfare State Fragmentation? International Journal of Social Welfare 23: S40–S53.

- Mökander, Jakob, Jessica Morley, Mariarosaria Taddeo, and Luciano Floridi. 2021. Ethics-Based Auditing of Automated Decision-Making Systems: Nature, Scope, and Limitations. Science and Engineering Ethics 27: 44.

- Neal, Tess M. S., Ellie Shockley, and Oliver Schilke. 2016. The “Dark Side” of Institutional Trust. In Interdisciplinary Perspectives on Trust. Edited by Ellie Shockley, Tess Neal, Lisa PytlikZillig and Brian Bornstein. Cham: Springer.

- OECD. 2024. OECD Survey on Drivers of Trust in Public Institutions—2024 Results: Building Trust in a Complex Policy Environment. Paris: OECD Publishing.

- Offe, Claus. 1999. How Can We Trust Our Fellow Citizens? In Democracy and Trust. Edited by Mark E. Warren. Cambridge: Cambridge University Press, pp. 42–87.

- O’Neil, Cathy. 2016. Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. New York: Crown Publishing.

- O’Neill, Onora. 2002. A Question of Trust. Cambridge: Cambridge University Press.

- Pasquale, Frank. 2015. The Black Box Society: The Secret Algorithms That Control Money and Information. Cambridge: Harvard University Press.

- Peeters, Rik. 2020. The Agency of Algorithms: Understanding Human-Algorithm Interaction in Administrative Decision-Making. Information Polity 25: 507–22.

- Petersen, Anette, Lars R. Christensen, and Thomas T. Hildebrandt. 2020. The Role of Discretion in the Age of Automation. Computer Supported Cooperative Work 29: 303–33.

- Pérez-Morote, Rosario, Carolina Pontones-Rosa, and Montserrat Núñez-Chicharro. 2020. The Effects of E-Government Evaluation, Trust and the Digital Divide in the Levels of E-Government Use in European Countries. Technological Forecasting and Social Change 154: 119973.

- Power, Martin, Eoin Devereux, and Majka Ryan. 2022. Framing and Shaming: The 2017 Welfare Cheats, Cheat Us All Campaign. Social Policy and Society 21: 646–56.

- Ranerup, Agneta, and Helle Z. Henriksen. 2019. Value Positions Viewed Through the Lens of Automated Decision-making: The Case of Social Services. Government Information Quarterly 36: 101377.

- Redden, Joanna, Jessica Brand, Ina Sander, and Harry Warne. 2022. Automating Public Services: Learning from Cancelled Systems. Carnegie: Data Justice Lab.

- Reddick, Christopher G. 2005. Citizen Interaction with E-Government: From the Streets to Servers? Government Information Quarterly 22: 38–57.

- Robinson, Stephen C. 2020. Trust, Transparency, and Openness: How Inclusion of Cultural Values Shapes Nordic National Public Policy Strategies for Artificial Intelligence (AI). Technology in Society 63: 101421.

- Rothstein, Bo. 2011. The Quality of Government: Corruption, Social Trust and Inequality in International Perspective. Chicago: University of Chicago Press.

- Rudin, Cynthia. 2019. Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead. Nature Machine Intelligence 1: 206–15.

- Shockley, Ellie, and Steven Shepherd. 2016. Compensatory Institutional Trust: A “Dark Side” of Trust. In Interdisciplinary Perspectives on Trust. Edited by Ellie Shockley, Tess Neal, Lisa PytlikZillig and Brian Bornstein. Cham: Springer.

- Sleep, Lyndal N. 2022. From Making Automated Decision Making Visible to Mapping the Unknowable Human: Counter-mapping Automated Decision Making in Social Services in Australia. Qualitative Inquiry 28: 848–58.

- Social Protection and Human Rights. 2015. Disadvantaged and Vulnerable Groups. Available online: https://socialprotection-humanrights.org/key-issues/disadvantaged-and-vulnerable-groups/ (accessed on 6 March 2025).

- Spadaro, Giuliana, Katharina Gangl, Jan-Willem Van Prooijen, Paul A. M. Van Lange, and Christina O. Mosso. 2020. Enhancing Feelings of Security: How Institutional Trust Promotes Interpersonal Trust. PLoS ONE 15: e0237934.

- Standing, Guy. 2011. The Precariat: The New Dangerous Class. London: Bloomsbury.

- Sun, Tara Q., and Rony Medaglia. 2019. Mapping the Challenges of Artificial Intelligence in the Public Sector: Evidence from Public Healthcare. Government Information Quarterly 36: 368–83.

- Tammpuu, Piia, and Anu Masso. 2018. ‘Welcome to the Virtual State’: Estonian e-residency and the Digitalised State as a Commodity. European Journal of Cultural Studies 21: 543–60.

- Veale, Michael, and Irina Brass. 2019. Administration by Algorithm? Public Management Meets Public Sector Machine Learning. In Algorithmic Regulation. Edited by Karen Yeung and Martin Lodge. Oxford: Oxford University Press. Available online: https://ssrn.com/abstract=3375391 (accessed on 6 March 2025).

- Warren, Mark E. 1999. Introduction. In Democracy and Trust. Edited by Mark E. Warren. Cambridge: Cambridge University Press, pp. 1–21.

- Widlak, Arjan, and Rik Peeters. 2020. Administrative Errors and the Burden of Correction and Consequence: How Information Technology Exacerbates the Consequences of Bureaucratic Mistakes for Citizens. International Journal of Electronic Governance 12: 40–56.

- Wirtz, Bernd, Jan C. Weyerer, and Carolin Geyer. 2019. Artificial Intelligence and the Public Sector—Applications and Challenges. International Journal of Public Administration 42: 596–615.

- Yang, Kaifeng, and Marc Holzer. 2006. The Performance–Trust Link: Implications for Performance Measurement. Public Administration Review 66: 114–26.

- Yildiz, Mete. 2007. E-Government Research: Reviewing the Literature, Limitations, and Ways Forward. Government Information Quarterly 24: 646–65.

- Zak, Paul J., and Stephen Knack. 2001. Trust and Growth. The Economic Journal 111: 295–321.

- Zalnieriute, Monika, Lyria Bennett Moses, and George Williams. 2019. The Rule of Law and Automation of Government Decision-Making. The Modern Law Review 82: 425–55.

- Zouridis, Stavros, Marlies Van Eck, and Mark Bovens. 2020. Automated Discretion. In Discretion and the Quest for Controlled Freedom. Edited by Tony Evans and Peter Hupe. London: Palgrave Macmillan.

| Article | Article Type | Objective | Results | Four Dimensions of Institutional Trust Model |
|---|---|---|---|---|
| Araujo et al. (2020): In AI We Trust? Perceptions about Automated Decision-Making by Artificial Intelligence | Scenario-based survey experiment | To analyse which personal characteristics can be linked to perceptions of fairness, usefulness and risk in automated decision-making. | People are concerned about risks and have mixed opinions about the fairness and usefulness of automated decision-making at the societal level. | (3) citizens’ trust or distrust in automated decision-making |
| Helberger et al. (2020): Who Is the Fairest of Them All? Public Attitudes and Expectations Regarding Automated Decision-Making | Original article (empirical survey and interview data) | To examine how the ongoing substitution of human decision-makers with ADM systems raises a question of ADM fairness. | A greater number of respondents considered AI to be a fairer decision-maker. | (3) citizens’ trust or distrust in automated decision-making |
| Goggin and Soldatić (2022): Automated Decision-Making, Digital Inclusion and Intersectional Disabilities | Discussion article | To gain a critical understanding of automated decision-making through disability and intersectionality to frame the terms and agenda of digital inclusion for the future. | The study showed that an intersectional understanding of disabilities is not grasped in digital inclusion. | (1) policymakers’ trust or distrust in automated decision-making; (4) how the implementation of automated decision-making impacts the (dis)trust of (vulnerable) citizens in government |
| Griffiths (2021): Universal Credit and Automated Decision Making: A Case of the Digital Tail Wagging the Policy Dog? | Discussion article | To discuss digitalisation in welfare and questions of administrative burden and the wider effects and impacts on claimants. | The study showed that increasing digitalisation in public services brings an unnecessary administrative burden and other challenges to citizens. | (1) policymakers’ trust or distrust in automated decision-making; (4) how the implementation of automated decision-making impacts the (dis)trust of (vulnerable) citizens in government |
| Grimmelikhuijsen (2022): Explaining Why the Computer Says No: Algorithmic Transparency Affects the Perceived Trustworthiness of Automated Decision-Making | Original article (empirical survey and interview data) | To discuss how citizens view algorithmic versus human decision-making. | The study concluded that accessibility is not enough to foster citizens’ trust in automated decision-making. | (3) citizens’ trust or distrust in automated decision-making |
| Kaun (2021): Suing the Algorithm: The Mundanization of Automated Decision-Making in Public Services through Litigation | Qualitative research based on in-depth interviews and court rulings | To analyse how different, partly conflicting definitions of what automated decision-making in social services is and does are negotiated between multiple actors. | The article showed how different sociotechnical imaginaries related to automated decision-making are established and stabilised. | (1) policymakers’ trust or distrust in automated decision-making; (4) how the implementation of automated decision-making impacts the (dis)trust of citizens in government |
| Larsson and Haldar (2021): Can Computers Automate Welfare? Norwegian Efforts to Make Welfare Policy More Effective | Discussion: theoretical with an empirical case | To raise questions about the uncritical digitalisation of public services and the ability of welfare organisations to support healthy and inclusive societies. | The study argued that when developing automated digital public services, proactive automation should be precise in its delivery, inclusive of all citizens and still support welfare-orientated policies that are independent of the requirements of the digital system. | (1) policymakers’ trust or distrust in automated decision-making; (4) how the implementation of automated decision-making impacts the (dis)trust of citizens in government |
| Mökander et al. (2021): Ethics-Based Auditing of Automated Decision-Making Systems: Nature, Scope and Limitations | Review | To analyse the feasibility and efficacy of ethics-based auditing as a governance mechanism that allows organisations to operationalise their ethical commitments and validate claims made about their ADM systems. | The study concluded that ethics-based auditing should be considered an integral component of multifaceted approaches to managing the ethical risks posed by ADM systems. | (4) how the implementation of automated decision-making impacts the (dis)trust of citizens in government |
| Ranerup and Henriksen (2019): Value Positions Viewed through the Lens of Automated Decision-Making: The Case of Social Services | Discussion: theoretical with an empirical case | To discuss which instances of value positions and their divergence appear when ADM is used in municipal social assistance. | The study showed that automated systems have partly increased accountability, decreased costs and enhanced efficiency with a focus on citizens. | (1) policymakers’ trust or distrust in automated decision-making; (2) how the (dis)trust of citizens drives the implementation of automated systems; (3) citizens’ trust or distrust in automated decision-making |
| Sleep (2022): From Making Automated Decision Making Visible to Mapping the Unknowable Human: Counter-Mapping Automated Decision Making in Social Services in Australia | Descriptive article | To reflect on the act of counter-mapping ADM in social services in Australia. | Future mapping of automated decision-making needs to focus on making visible those who are subject to the decisions of automated systems but are usually made unknowable by the over-confident calculability of dominant automated decision-making discourses. | (4) how the implementation of automated decision-making impacts the trust of (vulnerable) citizens in government |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Parviainen, J.; Koski, A.; Eilola, L.; Palukka, H.; Alanen, P.; Lindholm, C. Building and Eroding the Citizen–State Relationship in the Era of Algorithmic Decision-Making: Towards a New Conceptual Model of Institutional Trust. Soc. Sci. 2025, 14, 178. https://doi.org/10.3390/socsci14030178
