1. Introduction

For legged robots, powered prosthetics, and powered exoskeletons to be successful, one needs appropriate swing-leg control in the presence of model uncertainty (e.g., uncertainty in inertia and damping). This is typically achieved by setting up a reference trajectory for the swing leg and then using a high-gain feedback controller to track it. However, it is sufficient for the swing leg to achieve a set velocity at a given instant of the swing instead of tracking a series of reference points throughout the swing. In this paper, we achieve swing-leg control by tracking a set point, the velocity at a chosen instant, instead of a reference trajectory. This alternate formulation is simpler because it requires few measurements (typically one or two per step), few computations (offline design of the feedback gain), and low-bandwidth control (typically of the order of 1 or 2 Hz). We call such control event-based, intermittent, discrete control.

Another challenge, especially with powered prosthetics and exoskeletons, is that the parameters of the control need to be tuned for a specific individual based on the dynamics of their able leg. Although a high-gain tracking controller may achieve the desired outcomes, it might amplify the sensor noise, leading to instability. Alternatively, careful tuning of the feedback gains may achieve acceptable performance, but such hand-tuning is potentially time-consuming. A more customizable method is to have an adaptive controller that tunes itself using on-board measurements. In this paper, we further develop an adaptive control layer to enable automatic tuning of the discrete controller.

We present an event-based, intermittent, discrete adaptive controller for controlling systems using low-bandwidth measurements and control while achieving an appropriate performance index. We demonstrate results on the benchmark problem of swinging a pendulum, both in simulation and in hardware experiments. In the future, we expect to apply the control method to a legged robot and a hip exoskeleton.

2. Background and Related Work

One of the earliest hypotheses was that the human leg swing is ballistic, i.e., there is no active control during the leg swing [1,2]. However, later works found that a pure ballistic swing underestimated the swing time and step length and did not accurately predict the ground reaction forces [3]. Later works found that the swing leg is not totally passive, as it entails a metabolic cost and there is muscle activity for initiation and propagation of the swing leg [4].

Active control of exoskeletons and prostheses relies on tracking a reference motion using a high-gain feedback controller [5] or just loose tracking of a set of reference points in the swing [6]. In the latter approach, one divides the swing phase into a series of phases. In each phase, there are set points that are loosely tracked using a low-gain proportional-derivative controller. A more extreme approach is to tune a controller once per step by using measurements obtained during the step. For example, by modulating the ankle push-off based on the average progression speed, it is possible to achieve balance control [7]. Instead of actively injecting energy, there are artificial devices that extract energy using magneto-rheological dampers to achieve swing control [8].

The ballistic swing model of humans inspired passive dynamic walking robots. Such robots can walk downhill with no external torque input or power, relying solely on the mass, inertia, and geometry of their legs [9]. However, such robots can only walk on shallow slopes, limiting them to slow speeds and short step lengths [10]. Increasing the slope increases the walking speed, but requires active control of the hip joint [11]. Alternatively, to enable walking at human speed on level ground, one needs to tune the leg frequency using either active control or a hip spring, together with an ankle push-off to overcome energy losses during the foot strike [12,13].

As with exoskeletons and prostheses, the control of legged robots ranges from tight trajectory tracking to once-per-step control. The most prominent idea has been the hybrid zero-dynamics approach, in which one defines a set of canonical output functions using heuristics [14], optimization [15], or human data [16]. These outputs can be tracked using a high-gain feedback controller. An alternate approach is to loosely track distinct set points during the gait cycle using low-gain feedback control so that the tracking is approximate [17]. A more extreme approach, once-per-step control, is the modulation of a single control parameter (e.g., ankle push-off) during the gait cycle [18]. For hopping robots, it has been shown that control of the hop height, the forward speed, and the torso using three independent controllers can achieve highly robust running for one-, two-, and four-legged robots [19].

Adaptive control of artificial legs is achieved with tight trajectory tracking using either model reference adaptive control, which tunes the controller based on tracking errors [20], or model identification adaptive control, in which the model is learned from the errors [21]. Other approaches have considered optimal control using dynamic programming [22], extremum-seeking control [23], and reinforcement-learning-based approaches [24].

The concept of tracking set points instead of trajectory tracking has similarities with past work done on controlling lightly damped systems. One such application is the point-to-point movement of cranes using a cable hoist, where improper movement of the crane leads to unnecessary vibration of the payload at the end of the movement [25]. One method is the ‘posicast’ method, in which part of the input command is delayed in such a way that it cancels the vibration produced by the pre-delay input signal [26,27]. Another method is called ‘input shaping’ or command shaping, in which two impulses that are separated in time are convolved with the input command. The timing and amplitudes of the impulses are chosen so that the vibration induced by the first one is exactly cancelled by the second one [28,29]. Both methods, posicast and input shaping, tune the feedforward command to achieve vibration suppression.
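As a concrete illustration of the two-impulse idea, the sketch below computes a zero-vibration (ZV) shaper for a single lightly damped mode using the standard textbook formulas, and checks the residual vibration it leaves behind. The function names and the numerical values (a mode with a 2 s period and 2% damping) are assumptions chosen for illustration and are not taken from the cited works.

```python
import math

def zv_shaper(omega_n, zeta):
    """Two-impulse zero-vibration shaper for one mode.

    omega_n: natural frequency in rad/s, zeta: damping ratio (0 <= zeta < 1).
    Returns a list of (time, amplitude) pairs whose amplitudes sum to 1.
    """
    omega_d = omega_n * math.sqrt(1.0 - zeta**2)           # damped frequency
    K = math.exp(-zeta * math.pi / math.sqrt(1.0 - zeta**2))
    a1 = 1.0 / (1.0 + K)
    a2 = K / (1.0 + K)
    t2 = math.pi / omega_d                                  # half a damped period
    return [(0.0, a1), (t2, a2)]

def residual_vibration(impulses, omega_n, zeta):
    """Vibration amplitude left after the last impulse, relative to the
    amplitude caused by a single unit impulse."""
    omega_d = omega_n * math.sqrt(1.0 - zeta**2)
    t_end = impulses[-1][0]
    c = sum(a * math.exp(zeta * omega_n * t) * math.cos(omega_d * t)
            for t, a in impulses)
    s = sum(a * math.exp(zeta * omega_n * t) * math.sin(omega_d * t)
            for t, a in impulses)
    return math.exp(-zeta * omega_n * t_end) * math.hypot(c, s)

# Hypothetical mode: 2 s period (omega_n = pi rad/s), 2% damping.
omega_n, zeta = math.pi, 0.02
shaper = zv_shaper(omega_n, zeta)
unshaped = residual_vibration([(0.0, 1.0)], omega_n, zeta)
shaped = residual_vibration(shaper, omega_n, zeta)
print(shaper, unshaped, shaped)
```

The second impulse arrives half a damped period after the first, so the two induced oscillations are in anti-phase; the amplitude ratio compensates for the decay of the first oscillation in that half period.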

The method proposed in this paper is closest to ‘intermittent control’ [30]. Here, the control is made up of the multiplication of a time-based part and a basis function. The time-based part is optimized for the steady-state response or feedforward response, while the basis function is tuned based on measurement errors. The control is intermittent because the basis function is not continuously tuned, but only based on errors at events in the control cycle. Intermittent control has been used for inverted pendulum control [31] and to explain humans balancing a stick on their hands [32], as well as human standing balance [33].

In this paper, we present the event-based, intermittent, discrete adaptive controller design. Here, we measure the system state during events in the motion (e.g., the vertically downward position for a pendulum swing). Based on these measurements, we compute control parameters that turn the control ON intermittently (e.g., torque is ON for a few milliseconds during the pendulum swing). The controller is discrete in the sense that the time intervals between measurements are typically of the order of the natural period of the system. Then, we add an adaptive controller that tunes the control parameters using sensor measurements. This paper extends our event-based, intermittent, discrete controller design [34], which is similar to the intermittent control approach, to adaptive control, and this is the main novelty of the paper.

The flow of the paper is as follows. We present the methods for event-based, intermittent control and then extend them to perform adaptive control in Section 3. Next, we present results on simulations and hardware in Section 4. The discussion follows in Section 5, and the conclusion is given in Section 6.

3. Methods

3.1. Overview of Control

Figure 1 summarizes the control idea. We show the nominal motion of the system using a black dashed line. This corresponds to the use of the nominal control parameter $U_0$. Since we are specifically looking at periodic motion, the performance variable $z$, which is a function of the state, is $z_0$ at the instants $k$ and $k+1$. When the system deviates from the nominal, the state at section $k$ is $z$. Now, we have to find the correction $\Delta U$ to the nominal control parameter such that the new control parameter $U_0 + \Delta U$ brings the performance variable close to $z_0$ at the event $k+1$.

A few things in the controller differ from traditional feedback control. First, the time interval between the events $k$ and $k+1$ is typically of the order of the natural time constant of the system and is not set by the bandwidth provided by the computing and hardware of the system. Second, we take measurements of the system, $z$, and make control corrections at key instants, again at a rate of the order of the natural frequency of the system. Finally, the controller regulates the system to the performance index (here, $z_0$) at key instants instead of performing tight trajectory tracking. We now describe the specifics of the controller.

3.2. Event-Based, Intermittent, Discrete Control

Let the state of the system be $x$, the control be $u$, and the continuous system dynamics be defined by $F$ with $\dot{x} = F(x, u)$. Let the control be parameterized by the free variable $U$ (e.g., gain, amplitude, set point) such that $u = f(x, t, U)$, where $f$ is a function of state, time, or a combination of both. Assume that there is a nominal control $U_0$ that leads to some nominal motion for the states $x_0(t)$.

In the problems considered in this paper, we are not interested in tight tracking of the reference trajectory $x_0(t)$. Rather, we want to track a suitable performance variable $z$ (e.g., energy, Cartesian position, joint angle) at some state-based or time-based event (e.g., $g(x) = 0$ or $t = t_0$, where $g$ is some nonlinear function) such that $z = z_0$ at some instant. The overall outlook of the event-based control paradigm is as follows. We measure the system state at some state-based or time-based event. Let us assume that the state has deviated from its nominal value, $\Delta z_k = z_k - z_0 \neq 0$. The goal then is to find the differential control parameter $\Delta U_k$ such that we minimize the deviation $\Delta z_{k+1} = z_{k+1} - z_0$. The control is intermittent, as we set the free parameter $U$ at the events using the measurement and regulation error.

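We can linearize the system at the nominal value of the performance index $z$ between the events $k$ and $k+1$ to get

$$\Delta z_{k+1} = A\,\Delta z_k + B\,\Delta U_k, \tag{1}$$

where $A = \partial z_{k+1}/\partial z_k$ and $B = \partial z_{k+1}/\partial U_k$ are the sensitivities evaluated at the nominal values. The goal is to reduce $\Delta z_{k+1}$ to zero. We achieve this in a single measurement and control cycle by choosing $\Delta U_k = -B^{-1} A\,\Delta z_k$, assuming that $B$ is invertible. Such a control that achieves full correction of disturbances in a single step is known as a one-step deadbeat controller (e.g., see [35], p. 201).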
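The one-step deadbeat idea can be sketched for a scalar performance variable as follows. This is a minimal illustration that assumes a linear event-to-event map with sensitivities `A` and `B`; the numerical values and function names are hypothetical. It also shows what happens when the controller is designed with imperfect estimates of the sensitivities, which is the situation addressed by the adaptive layer.

```python
def deadbeat_correction(A_model, B_model, dz):
    """Control correction that cancels the predicted deviation in one step,
    according to the model dz_next = A_model * dz + B_model * dU."""
    if B_model == 0.0:
        raise ValueError("B must be nonzero for a deadbeat correction")
    return -A_model * dz / B_model

def event_map(A_true, B_true, dz, dU):
    """Deviation at the next event for the (assumed linear) true system."""
    return A_true * dz + B_true * dU

# Hypothetical true sensitivities and initial deviation.
A_true, B_true = 0.8, 0.5
dz0 = 0.3

# Perfect model: the deviation is removed in a single cycle.
dU_exact = deadbeat_correction(A_true, B_true, dz0)
dz1 = event_map(A_true, B_true, dz0, dU_exact)

# Imperfect model (hypothetical estimates): a residual deviation remains.
A_hat, B_hat = 0.6, 0.4
dU_approx = deadbeat_correction(A_hat, B_hat, dz0)
dz1_mismatch = event_map(A_true, B_true, dz0, dU_approx)

print(dz1, dz1_mismatch)
```

With the exact sensitivities the deviation vanishes after one cycle; with the mismatched estimates a small deviation survives, and it is this residual that an adaptive law can use as its error signal.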

3.3. Adaptive Control

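In the previous section, we showed how to choose a control if the model $F$ of the system is fully known. However, there may be modeling errors; as such, we may only know an imperfect model $\hat{F}$. In this case, our estimates for the linearized parameters are $\hat{A}$ and $\hat{B}$. Thus, we will have an error

$$e = \Delta z_{k+1} - \left(\hat{A}\,\Delta z_k + \hat{B}\,\Delta U_k\right) = \Delta z_{k+1} - W^T X, \tag{2}$$

where $W = [\hat{A}, \hat{B}]^T$ are weights and $X = [\Delta z_k, \Delta U_k]^T$ is the regressor. We now define a cost function

$$J = \frac{e^2}{2 m^2}, \tag{3}$$

where $m^2$ is the normalizing factor with a user-chosen small positive constant.

The update in the weight $W$ can now be obtained by gradient descent:

$$W_{k+1} = W_k - \Gamma \frac{\partial J}{\partial W} = W_k + \Gamma \frac{e X}{m^2}, \tag{4}$$

where $\Gamma$ is a user-tuned gain. This can also be a function of iteration, i.e., $\Gamma_k$. It can be shown that the adaptive law above guarantees that the weights $W$ and error $e$ are bounded and $e \to 0$ as $k \to \infty$ (e.g., see [36], Chapter 3).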
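A minimal sketch of a normalized-gradient adaptation of this kind is given below for a scalar performance variable. It assumes a linear true map with sensitivities `A_true` and `B_true`, a repeated disturbance of the same size at every cycle, and the normalization $m^2 = 1 + X^T X$, which is one common textbook choice and not necessarily the one used in this paper. All names and numerical values are hypothetical.

```python
def adapt_step(W, X, dz_next, gamma=1.0):
    """One normalized-gradient update of the weights W = [A_hat, B_hat].

    X = [dz, dU] is the regressor, dz_next the measured deviation at the
    next event. Returns the updated weights and the prediction error e.
    """
    e = dz_next - (W[0] * X[0] + W[1] * X[1])      # prediction error
    m2 = 1.0 + X[0] ** 2 + X[1] ** 2               # assumed normalization
    W_new = [W[0] + gamma * e * X[0] / m2,
             W[1] + gamma * e * X[1] / m2]
    return W_new, e

# Hypothetical true sensitivities, unknown to the controller.
A_true, B_true = 0.8, 0.5
# Hypothetical (poor) initial estimates.
W = [0.4, 0.5]

errors = []
for k in range(60):
    dz = 0.3                                   # same disturbance every cycle
    dU = -W[0] * dz / W[1]                     # deadbeat control with estimates
    dz_next = A_true * dz + B_true * dU        # response of the true system
    W, e = adapt_step(W, [dz, dU], dz_next)
    errors.append(e)

print(errors[0], errors[-1], W)
```

In this sketch the error shrinks from cycle to cycle, while the estimates settle at values whose ratio matches that of the true sensitivities and not at the true values themselves. This is consistent with the guarantee stated above, which concerns boundedness of the weights and convergence of the error only.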

3.4. Control of a Pendulum

We demonstrate the event-based, intermittent, discrete adaptive control for velocity control of a pendulum. First, we describe the model in continuous time, and then we describe the discrete version, followed by adaptive control.

3.4.1. Model

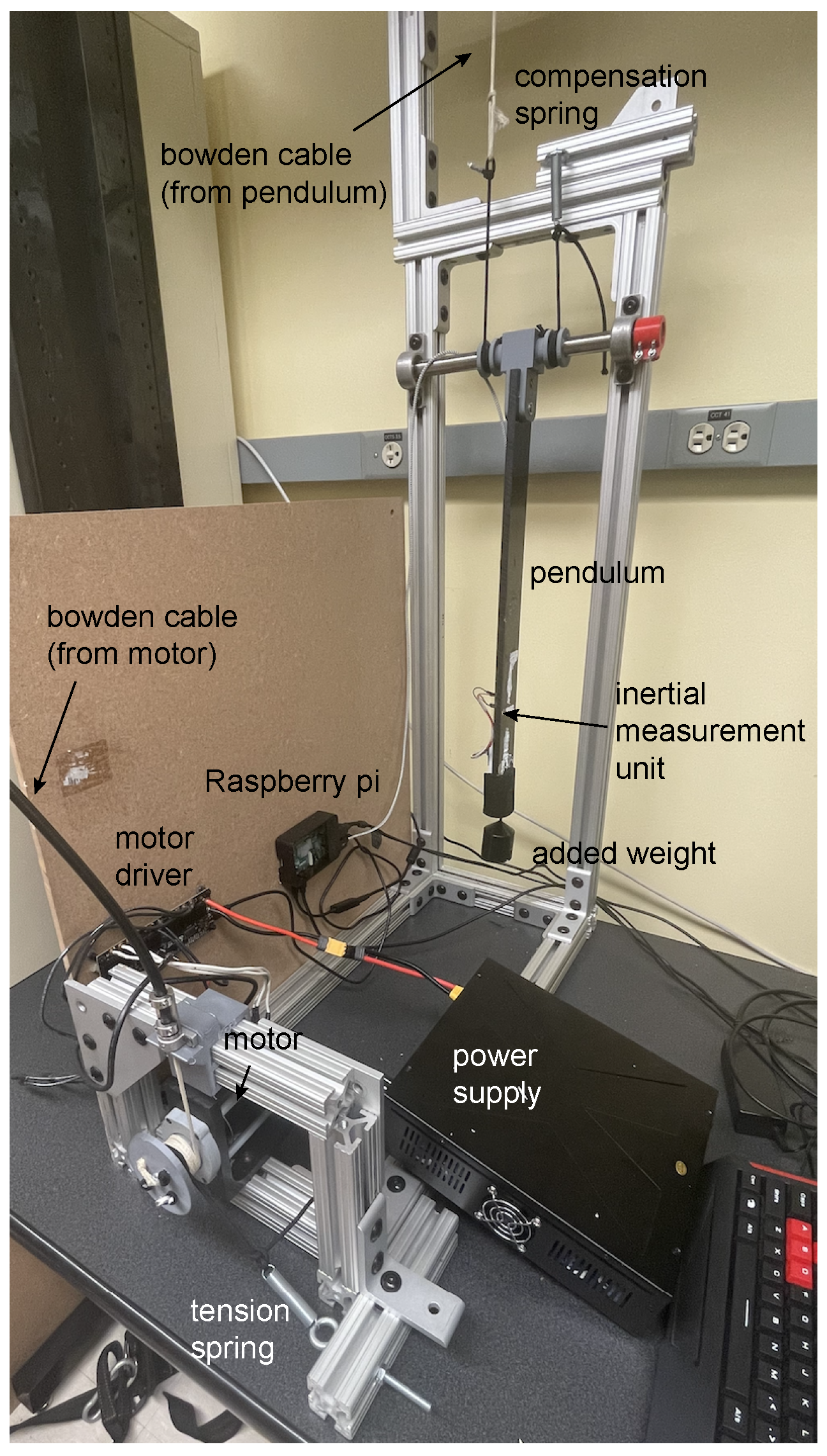

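The pendulum is shown in Figure 2. The equation of motion is given by

$$m \ell^2 \ddot{\theta} + c\,\dot{\theta} + m g \ell \sin\theta = T, \tag{5}$$

where the mass is $m$, damping is $c$, length is $\ell$, gravity is $g$, torque is $T$, and angle is $\theta$ (counter-clockwise is positive). This can be written in state-space form as

$$\dot{x} = \begin{bmatrix} x_2 \\ \dfrac{u - c\,x_2 - m g \ell \sin x_1}{m \ell^2} \end{bmatrix}, \tag{6}$$

where $x_1 = \theta$, $x_2 = \dot{\theta}$, and $u = T$. Our model is inaccurate, as we have not accounted for the inertia and we have used an approximate friction model.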
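For readers who want to experiment with such a model, the sketch below integrates a point-mass pendulum with viscous damping using a fixed-step fourth-order Runge-Kutta scheme. The specific form of the dynamics, the parameter values, and the function names are assumptions made for illustration; they are not the identified parameters of the hardware used in this paper.

```python
import math

def pendulum_rhs(theta, omega, torque, m, ell, c, g):
    """Time derivatives (theta_dot, omega_dot) of the assumed model
    m*ell^2*theta_ddot + c*theta_dot + m*g*ell*sin(theta) = torque."""
    alpha = (torque - c * omega - m * g * ell * math.sin(theta)) / (m * ell**2)
    return omega, alpha

def rk4_step(theta, omega, torque, dt, m=1.0, ell=1.0, c=0.1, g=9.81):
    """Advance the state by one step dt with classical RK4."""
    p = (m, ell, c, g)
    k1 = pendulum_rhs(theta, omega, torque, *p)
    k2 = pendulum_rhs(theta + 0.5*dt*k1[0], omega + 0.5*dt*k1[1], torque, *p)
    k3 = pendulum_rhs(theta + 0.5*dt*k2[0], omega + 0.5*dt*k2[1], torque, *p)
    k4 = pendulum_rhs(theta + dt*k3[0], omega + dt*k3[1], torque, *p)
    theta_new = theta + dt * (k1[0] + 2*k2[0] + 2*k3[0] + k4[0]) / 6.0
    omega_new = omega + dt * (k1[1] + 2*k2[1] + 2*k3[1] + k4[1]) / 6.0
    return theta_new, omega_new

def energy(theta, omega, m=1.0, ell=1.0, g=9.81):
    """Kinetic plus potential energy, zero at rest in the downward position."""
    return 0.5 * m * ell**2 * omega**2 + m * g * ell * (1.0 - math.cos(theta))

def simulate(theta, omega, torque, t_end, dt=1e-3, **params):
    """Integrate with a constant torque from t = 0 to t_end."""
    for _ in range(round(t_end / dt)):
        theta, omega = rk4_step(theta, omega, torque, dt, **params)
    return theta, omega

# Start at the bottom with 1 rad/s and let the pendulum swing for 2 s.
E0 = energy(0.0, 1.0)
E_free = energy(*simulate(0.0, 1.0, 0.0, 2.0, c=0.0))    # no damping
E_damped = energy(*simulate(0.0, 1.0, 0.0, 2.0, c=0.1))  # viscous damping
print(E0, E_free, E_damped)
```

Without damping or torque the energy should stay constant up to integration error, which gives a quick sanity check of the integrator before it is used to build an event-to-event map.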

3.4.2. Discrete-Time Model

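To obtain the discrete-time model, we choose our performance variable to be energy. Thus, $z = E$, where $E$ is the system energy. We measure and regulate the system energy when the pendulum is in the vertically downward position. We apply a constant torque for a pre-specified duration.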

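Assuming a baseline model, we first use non-linear root solving to find the control input $U_0$ that leads to a steady oscillation with energy $z_0$ for the nominal model. Thereafter, by using finite difference, we can find the constants $A$ and $B$ to obtain the discrete state equation

$$\Delta z_{k+1} = A\,\Delta z_k + B\,\Delta U_k, \tag{7}$$

where $\Delta z_k = z_k - z_0$ and $\Delta U_k = U_k - U_0$ are the deviations from the nominal values.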
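The procedure described above (root solving for the nominal control, followed by finite differences for the sensitivities) can be sketched as follows. The pendulum parameters, the torque duration `T_ON`, the nominal energy `z0`, and the use of a secant iteration as the root solver are all assumptions made for illustration.

```python
import math

M, ELL, C, G = 1.0, 1.0, 0.1, 9.81      # hypothetical pendulum parameters
DT = 1e-3                                # integration step (s)
N_ON = 100                               # torque ON for N_ON*DT = 0.1 s (assumed)

def rhs(theta, omega, torque):
    return omega, (torque - C*omega - M*G*ELL*math.sin(theta)) / (M*ELL**2)

def rk4(theta, omega, torque, dt):
    k1 = rhs(theta, omega, torque)
    k2 = rhs(theta + 0.5*dt*k1[0], omega + 0.5*dt*k1[1], torque)
    k3 = rhs(theta + 0.5*dt*k2[0], omega + 0.5*dt*k2[1], torque)
    k4 = rhs(theta + dt*k3[0], omega + dt*k3[1], torque)
    return (theta + dt*(k1[0] + 2*k2[0] + 2*k3[0] + k4[0])/6,
            omega + dt*(k1[1] + 2*k2[1] + 2*k3[1] + k4[1])/6)

def event_map(z, U):
    """Energy at the next downward crossing while moving right, given
    energy z at the current crossing and constant torque U for N_ON steps."""
    theta, omega = 0.0, math.sqrt(2.0*z/(M*ELL**2))
    for k in range(20000):               # safety limit of 20 s
        torque = U if k < N_ON else 0.0
        th_new, om_new = rk4(theta, omega, torque, DT)
        if theta < 0.0 <= th_new:        # crossed the bottom moving right
            frac = -theta/(th_new - theta)
            _, om_hit = rk4(theta, omega, torque, frac*DT)
            return 0.5*M*ELL**2*om_hit**2
        theta, omega = th_new, om_new
    raise RuntimeError("no downward crossing found")

def nominal_control(z0, U_a=0.0, U_b=1.0, tol=1e-8):
    """Secant iteration for U0 such that event_map(z0, U0) = z0."""
    f_a, f_b = event_map(z0, U_a) - z0, event_map(z0, U_b) - z0
    for _ in range(50):
        if abs(f_b) < tol or f_b == f_a:
            break
        U_a, U_b, f_a = U_b, U_b - f_b*(U_b - U_a)/(f_b - f_a), f_b
        f_b = event_map(z0, U_b) - z0
    return U_b

def linearize(z0, U0, h=1e-3):
    """Central finite differences of the event map at the nominal point."""
    A = (event_map(z0 + h, U0) - event_map(z0 - h, U0)) / (2*h)
    B = (event_map(z0, U0 + h) - event_map(z0, U0 - h)) / (2*h)
    return A, B

z0 = 0.5                                 # hypothetical nominal energy (J)
U0 = nominal_control(z0)
A, B = linearize(z0, U0)
print(U0, A, B)
```

The event map plays the role of a one-period return map: `A` measures how much of an energy deviation survives one swing, and `B` measures how strongly the torque amplitude changes the energy at the next measurement.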

The adaptive control law may be obtained from Equation (4) as follows.

where

and

are the projection signals to keep the parameters

and

bounded,

and

, and

and

.

where

and

[

36].

3.5. Linearized Models and Adaptations per Swing

Figure 3 shows one complete swing of the pendulum. The pendulum at section

i and

is in the vertical downward position, and at the instance

and

, it is in the extreme position. We develop three linearized models that differ in the location of the sections and in the number of measurements and corrections made per section.

3.5.1. One Model/One Measurement/One Adaptation (1Mo-1Me-1Ad) per Period

Here, we have one model in one swing period (see Figure 3).

We measure the system energy once: when the pendulum is in the vertically downward position and moving to the right (see Figure 3a). We use this measurement to adapt the model parameters $A$ and $B$ using Equations (8) and (9). Thus, we have one model, one measurement, and one adaptation per swing.

3.5.2. Two Models/Two Measurements/One Adaptation (2Mo-2Me-1Ad) per Period

Here, we have two models in one swing period (see Figure 3).

We measure the system energy twice: when the pendulum is in the vertically downward position and moving to the right and to the left (see Figure 3a,c). We use these two measurements to adapt the model parameters $A_1$, $B_1$, $A_2$, and $B_2$ using Equations (8) and (9). Thus, we have two models, two measurements, and one adaptation per model per swing.

3.5.3. Two Models/Two Measurements/Two Adaptations (2Mo-2Me-2Ad) per Period

Here, we have two models in one swing period (see Figure 3). This is done by exploiting the symmetry in 2Mo-2Me-1Ad by observing that $A_1 = A_2 = A$ and $B_1 = B_2 = B$.

We measure the system energy twice: when the pendulum is in the vertically downward position and moving to the right and to the left (see Figure 3a,c). We use these measurements to adapt the model parameters $A$ and $B$ twice using Equations (8) and (9). Thus, we have two models, two measurements, and two adaptations per model per swing.

We expect adaptation using 2Mo-2Me-2Ad to be at least two times faster than with 1Mo-1Me-1Ad and 2Mo-2Me-1Ad, because the same parameters are updated twice per swing. Note, however, that for a directionally dependent model (e.g., direction-dependent friction), one cannot exploit the symmetry, and hence, 2Mo-2Me-2Ad could give poor results.

5. Discussion

We presented an event-based, intermittent, discrete control framework for low-bandwidth control of systems to achieve set-point regulation. We measured the system state at events during motion (e.g., angular velocity when the pendulum is vertical). These measurements triggered the controller to turn ON intermittently (e.g., constant torque for a pre-specified duration). The controller then achieved set-point regulation during the movement cycle. We added an adaptive control layer that tuned the model parameters using measurement errors, making the system robust to uncertainty. The framework was demonstrated in simulation and hardware experiments by regulating the velocity of a pendulum.

Unlike traditional discrete control, which is understood to be a discrete approximation of continuous control, our controller is truly discrete: the time between measurements, and between control actions, is of the order of the natural time period of the system. For example, in the case of the pendulum, the natural time period is approximately 2 s. We take one measurement per 2 s for 1Mo-1Me-1Ad or two measurements per 2 s for 2Mo-2Me-1Ad/2Mo-2Me-2Ad. We use the resulting errors to tune the control parameters to achieve set-point regulation. Such time delays are natural in biological systems due to the slowness of chemical-based nerve conduction, of neural computation, and of muscle activation [41]. Other controllers that can handle time delays are the posicast controller [26], the act-and-wait controller [42], and the intermittent controller [31].

The two model parameters $A$ and $B$ model the sensitivities of the performance variable to the state and to the control over a finite horizon, and they predict the future system state from the state and control at the current time. Thus, the framework is predictive. A predictive framework is very sensitive to the model parameters. By creating an adaptive framework where we tune the model parameters using measurements, we can achieve robustness to parameter uncertainty.

The discrete control framework that we advocate can make the system deadbeat, which is the full correction of disturbances in finite time (e.g., see [35], p. 201). The classical continuous controllers (e.g., proportional or proportional-derivative control) lead to exponential decay and can never achieve full correction in finite time (e.g., see [43], pp. 416–417). In the absence of parameter uncertainty, the framework can lead to deadbeat control in a single measurement/control cycle [34]. In this paper, where we had model uncertainty, we could still achieve deadbeat control in 2–5 discrete time intervals.

We anticipate the use of such an event-based, discrete controller for swing-leg control in legged robots, prostheses, and exoskeletons. In the past, we successfully used the controller for creating walking gaits that led to a distance record [17]. In such tasks, it is important to achieve certain objectives, such as step length or step frequency, rather than tracking. In addition, since the controller is relatively simple and uses a low bandwidth, it requires relatively simple sensors and computers. Another important task is to achieve deadbeat control, which the controller achieves in two swings in the absence of uncertainty (see 2Mo-2Me-2Ad). Finally, for prostheses and exoskeletons, one needs to customize the controller for different people, which can be achieved by adapting the model using measurement errors, as was done here.

The major limitation of the approach is that it is sensitive to: (1) the performance index; (2) the choice of events; (3) the choice of control parameters; (4) the sensors used for control. These choices are task- and system-dependent and are generally made by the designer. We provide some heuristics in Section 2.2 of ref. [34]. More recently, automated methods based on hyper-parameter tuning may also be used [44]. In addition, it is unclear how the system would perform in the presence of noisy measurements, although our limited experiments show that some smoothing of the sensor measurements can lead to acceptable performance. One potential solution is to use a Kalman filter in which the model is updated as the adaptive control updates the parameters. Finally, note that the controller is only useful when we are interested in loosely enforcing tracking during the task and not for tight trajectory tracking, as required in some other tasks.