A Machine Vision-Based Method for Monitoring Scene-Interactive Behaviors of Dairy Calf

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Setup and Image Collection

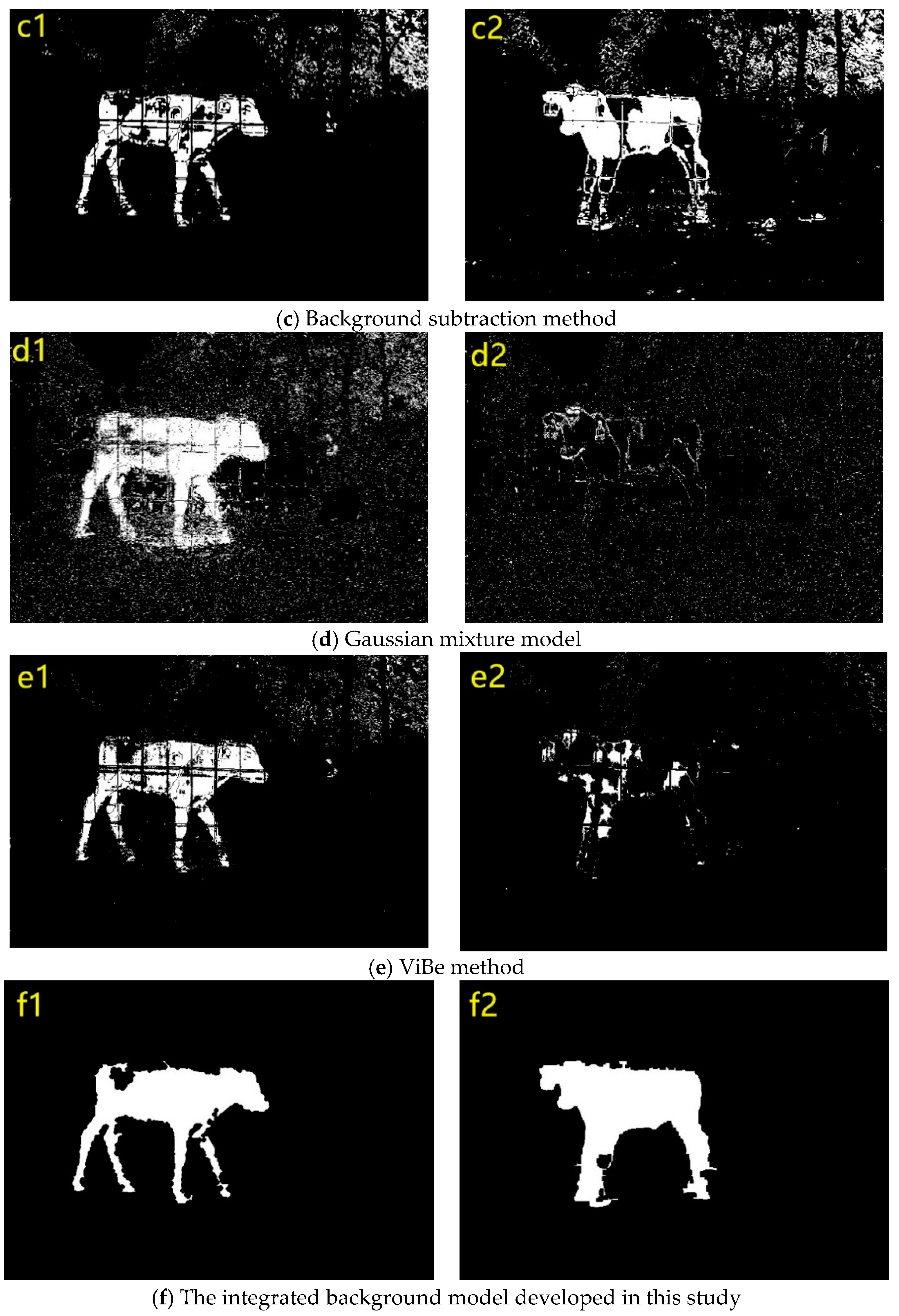

2.2. Calf-Target Detection Method

2.3. Features Extraction Method of Calf Scene-Interactive Behaviors

2.4. Feeding and Drinking Behaviors Monitoring and Analysis

3. Results and Discussion

3.1. Target Detection Results

3.2. Recognition of Entering/Leaving Behaviors in Resting Area

3.3. Feeding and Drinking Behaviors Identification

4. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

- United Nations. World Population Prospects. 2017. Available online: https://population.un.org/wpp/Publications/Files/WPP2017_DataBooklet.pdf (accessed on 15 November 2019).

- Zhang, H.; Wang, Y.; Chang, Y.; Luo, H.; Brito, L.F.; Dong, Y.; Shi, R.; Wang, Y.; Dong, G.; Liu, L. Mortality-Culling Rates of Dairy Calves and Replacement Heifers and Its Risk Factors in Holstein Cattle. Animals 2019, 9, 730.

- He, D.J.; Liu, D.; Zhao, K.X. Review of perceiving animal information and behavior in precision livestock farming. Trans. Chin. Soc. Agric. Mach. 2016, 47, 231–244.

- Chapinal, N.; Tucker, C.B. Validation of an automated method to count steps while cows stand on a weighing platform and its application as a measure to detect lameness. J. Dairy Sci. 2012, 95, 6523–6528.

- Hoffmann, G.; Ammon, C.; Rose-Meierhöfer, S.; Burfeind, O.; Heuwieser, W.; Berg, W. Monitoring the body temperature of cows and calves using video recordings from an infrared thermography camera. Vet. Res. Commun. 2013, 37, 91–99.

- Li, D.; Chen, Y.; Zhang, K.; Li, Z. Mounting Behaviour Recognition for Pigs Based on Deep Learning. Sensors 2019, 19, 4924.

- Porto, S.M.C.; Arcidiacono, C.; Anguzza, U.; Cascone, G. A computer vision-based system for the automatic detection of lying behaviour of dairy cows in free-stall barns. Biosyst. Eng. 2013, 115, 184–194.

- Dell, A.I.; Bender, J.A.; Branson, K.; Couzin, I.D.; de Polavieja, G.G.; Noldus, L.P.J.J.; Pérez-Escudero, A.; Perona, P.; Straw, A.D.; Wikelski, M.; et al. Automated image-based tracking and its application in ecology. Trends Ecol. Evol. 2014, 29, 417–428.

- Smith, D.; Rahman, A.; Bishop-Hurley, G.J.; Hills, J.; Shahriar, S.; Henry, D.; Rawnsley, R. Behavior classification of cows fitted with motion collars: Decomposing multi-class classification into a set of binary problems. Comput. Electron. Agric. 2016, 131, 40–50.

- González, L.A.; Bishop-Hurley, G.J.; Handcock, R.N.; Crossman, C. Behavioral classification of data from collars containing motion sensors in grazing cattle. Comput. Electron. Agric. 2015, 110, 91–102.

- Alsaaod, M.; Römer, C.; Kleinmanns, J.; Hendriksen, K.; Rose-Meierhöfer, S.; Plümer, L.; Büscher, W. Electronic detection of lameness in dairy cows through measuring pedometric activity and lying behavior. Appl. Anim. Behav. Sci. 2012, 142, 134–141.

- Reith, S.; Brandt, H.; Hoy, S. Simultaneous analysis of activity and rumination time, based on collar-mounted sensor technology, of dairy cows over the peri-estrus period. Livest. Sci. 2014, 170, 219–227.

- Ahn, S.J.; Ko, D.M.; Choi, K.S. Cow Behavior Recognition Using Motion History Image Feature. Image Anal. Recognit. 2017, 10317, 626–633.

- Jabbar, K.A.; Hansen, M.F.; Smith, M.L.; Smith, L.N. Early and non-intrusive lameness detection in dairy cows using 3-dimensional video. Biosyst. Eng. 2017, 153, 63–69.

- Poursaberi, A.; Bahr, C.; Pluk, A.; Nuffel, A.V.; Berckmans, D. Original paper: Real-time automatic lameness detection based on back posture extraction in dairy cattle: Shape analysis of cow with image processing techniques. Comput. Electron. Agric. 2010, 74, 110–119.

- Porto, S.M.C.; Arcidiacono, C.; Anguzza, U.; Cascone, G. The automatic detection of dairy cow feeding and standing behaviours in free-stall barns by a computer vision-based system. Biosyst. Eng. 2015, 133, 46–55.

- Gu, J.Q.; Wang, Z.H.; Gao, R.H.; Wu, H.R. Recognition Method of Cow Behavior Based on Combination of Image and Activities. Trans. Chin. Soc. Agric. Mach. 2017, 48, 145–151.

- Wen, C.J.; Wang, S.S.; Zhao, X.; Wang, M.; Ma, L.; Liu, Y.T. Visual Dictionary for Cows Sow Behavior Recognition. Trans. Chin. Soc. Agric. Mach. 2014, 45, 266–274.

- Guo, Y.Y.; He, D.J.; Song, H.B. Region detection of lesion area of knee based on colour edge detection and bilateral projection. Biosyst. Eng. 2018, 173, 19–31.

- Weissbrod, A.; Shapiro, A.; Vasserman, G.; Edry, L.; Dayan, M.; Yitzhaky, A.; Hertzberg, L.; Feinerman, O.; Kimchi, T. Automated long-term tracking and social behavioural phenotyping of animal colonies within a semi-natural environment. Nat. Commun. 2013, 4, 2018.

- Lao, F.D.; Teng, G.H.; Li, J.; Yu, L.G.; Li, Z. Behavior recognition method for individual laying hen based on computer vision. Trans. Chin. Soc. Agric. Eng. 2012, 28, 157–163.

- Yang, Q.M.; Xiao, D.Q.; Zhang, G.X. Pig Drinking Behavior Recognition Based on Machine Vision. Trans. Chin. Soc. Agric. Mach. 2018, 49, 232–238.

- Benvenutti, M.A.; Coates, T.W.; Imaz, A.; Flesch, T.K.; Hill, J.; Charmley, E.; Hepworth, G.; Chen, D. The use of image analysis to determine the number and position of cattle at a water point. Comput. Electron. Agric. 2015, 118, 24–27.

- Meunier, B.; Pradel, P.; Sloth, K.H.; Cirié, C.; Delval, E.; Mialon, M.M.; Veissier, I. Image analysis to refine measurements of dairy cow behaviour from a real-time location system. Biosyst. Eng. 2018, 173, 32–44.

- Zhao, X.W. Prevention and Control Measures for the Frequent Diseases of Newborn Calves. Shandong J. Anim. Sci. Vet. Med. 2014, 35, 52–53.

- He, D.J.; Meng, F.C.; Zhao, K.X.; Zhang, Z. Recognition of Calf Basic Behaviors Based on Video Analysis. Trans. Chin. Soc. Agric. Mach. 2016, 47, 294–300.

- Zhao, K.X.; He, D.J. Target detection method for moving cows based on background subtraction. Int. J. Agric. Biol. Eng. 2015, 8, 42–49.

- Yin, X.; Wang, B.; Li, W.; Liu, Y.; Zhang, M. Background Subtraction for Moving Cameras based on trajectory-controlled segmentation and Label Inference. KSII Trans. Internet Inf. 2015, 9, 4092–4107.

- Hua, Y.; Liu, W. Moving object detection algorithm of improved Gaussian mixture model. J. Comput. Appl. 2014, 34, 580–584.

- Ye, Y.; Cao, M.; Feng, Y. EVibe: An improved Vibe algorithm for detecting moving objects. Chin. J. Sci. Instrum. 2014, 35, 924–931.

- Otsu, N. A threshold selection method from gray level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

| Actual Behavior | Classified as Entering the Resting Area (%) | Classified as Leaving the Resting Area (%) | Classified as Still (%) | Classified as Turning (%) | Missed Detection (%) |
|---|---|---|---|---|---|
| (1) Entering the resting area | 94.38 | - | - | - | 5.62 |
| (2) Leaving the resting area | - | 92.86 | - | - | 7.14 |
| (3) Static behavior | 0.43 | 0.57 | 96.85 | 1.43 | 0.72 |
| (4) Turning around | - | - | 5.56 | 93.51 | 0.93 |
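The classification results above can be read as a row-normalized confusion matrix: each row sums to 100%, and the entry where the classified behavior matches the actual behavior is that behavior's recognition rate. The following is a minimal sketch of that reading, not the authors' evaluation code. It assumes that a "-" cell means 0%, and the names `LABELS`, `RESULTS`, `recognition_rates`, and `row_totals` are hypothetical. Because the number of samples per behavior is not given here, the mean it computes is an unweighted average over the four behaviors, not an overall accuracy.

```python
# Classification results (%) copied from the table above.
# Assumption: a "-" cell in the table is treated as 0.0.
LABELS = ["entering", "leaving", "still", "turning", "missed"]
RESULTS = {
    "entering": [94.38, 0.0, 0.0, 0.0, 5.62],
    "leaving": [0.0, 92.86, 0.0, 0.0, 7.14],
    "still": [0.43, 0.57, 96.85, 1.43, 0.72],
    "turning": [0.0, 0.0, 5.56, 93.51, 0.93],
}


def recognition_rates(results):
    """Return, per actual behavior, the share (%) classified correctly."""
    return {name: row[LABELS.index(name)] for name, row in results.items()}


def row_totals(results):
    """Return the sum of each row, rounded to two decimals (expected 100.0)."""
    return {name: round(sum(row), 2) for name, row in results.items()}


rates = recognition_rates(RESULTS)
totals = row_totals(RESULTS)

# Unweighted mean over the four behaviors (per-class sample counts are unknown).
mean_rate = sum(rates.values()) / len(rates)

for name, rate in rates.items():
    print(f"{name:9s} recognition rate: {rate:.2f}%  (row total {totals[name]:.2f}%)")
print(f"unweighted mean recognition rate: {mean_rate:.2f}%")
```

Checking the row totals is a quick way to catch transcription errors when a table like this is re-entered by hand.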

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Guo, Y.; He, D.; Chai, L. A Machine Vision-Based Method for Monitoring Scene-Interactive Behaviors of Dairy Calf. Animals 2020, 10, 190. https://doi.org/10.3390/ani10020190