Automated Collection and Analysis of Infrared Thermograms for Measuring Eye and Cheek Temperatures in Calves

Abstract

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Development of Eye and Cheek Algorithm

2.2. Validation of the Eye and Cheek Algorithm as an Automated Method for Thermal Infrared Image Analysis

2.2.1. Animals

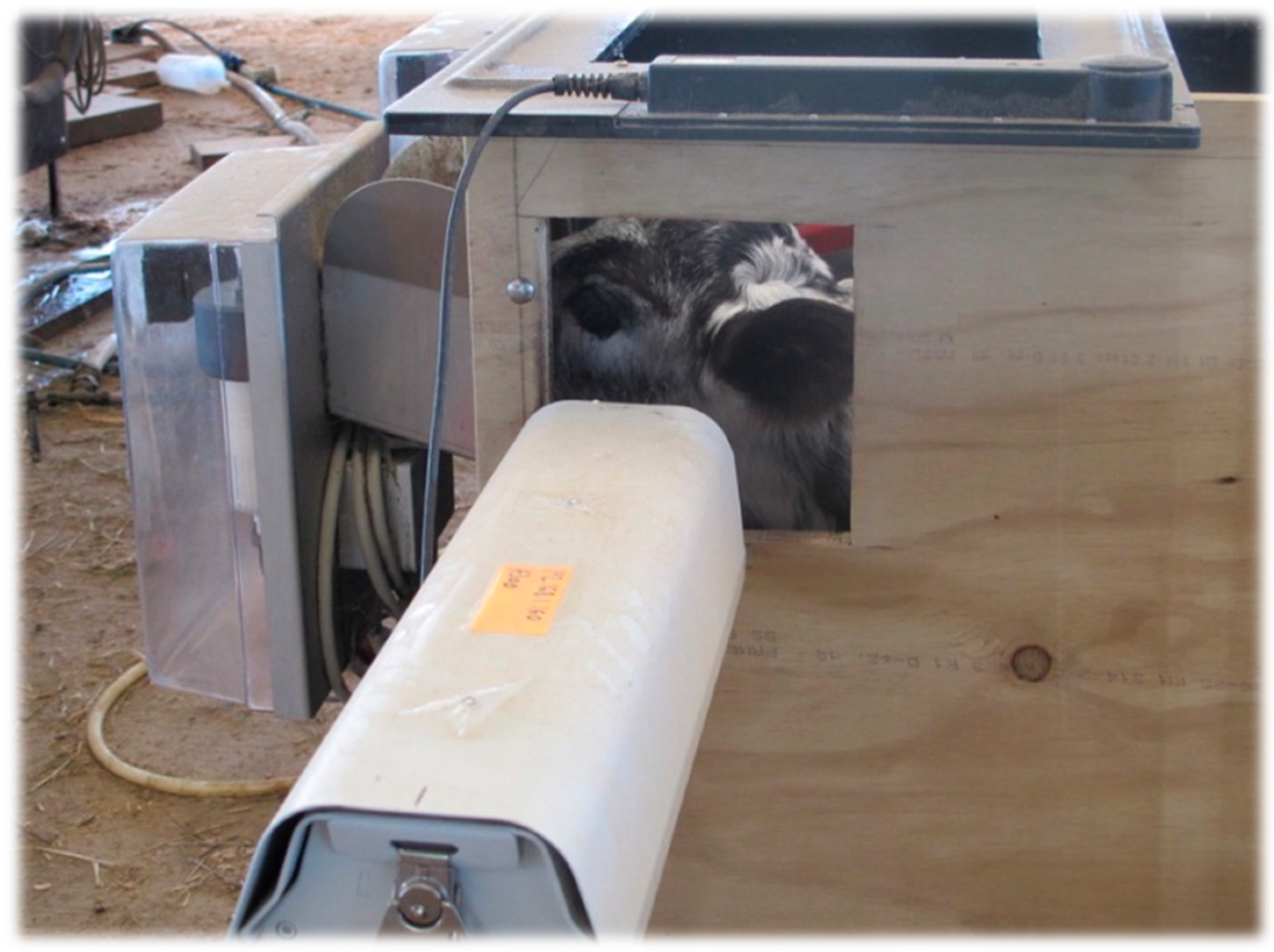

2.2.2. Automated Thermal Infrared Image Collection and Analysis

2.2.3. Manual Image Analysis

2.3. Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lowe, G.; McCane, B.; Sutherland, M.; Waas, J.; Schaefer, A.; Cox, N.; Stewart, M. Automated Collection and Analysis of Infrared Thermograms for Measuring Eye and Cheek Temperatures in Calves. Animals 2020, 10, 292. https://doi.org/10.3390/ani10020292
