Dogs (Canis familiaris) Gaze at Our Hands: A Preliminary Eye-Tracker Experiment on Selective Attention in Dogs

Abstract

Simple Summary

1. Introduction

2. Materials and Methods

2.1. Subjects

2.2. Apparatus and Experimental Setups

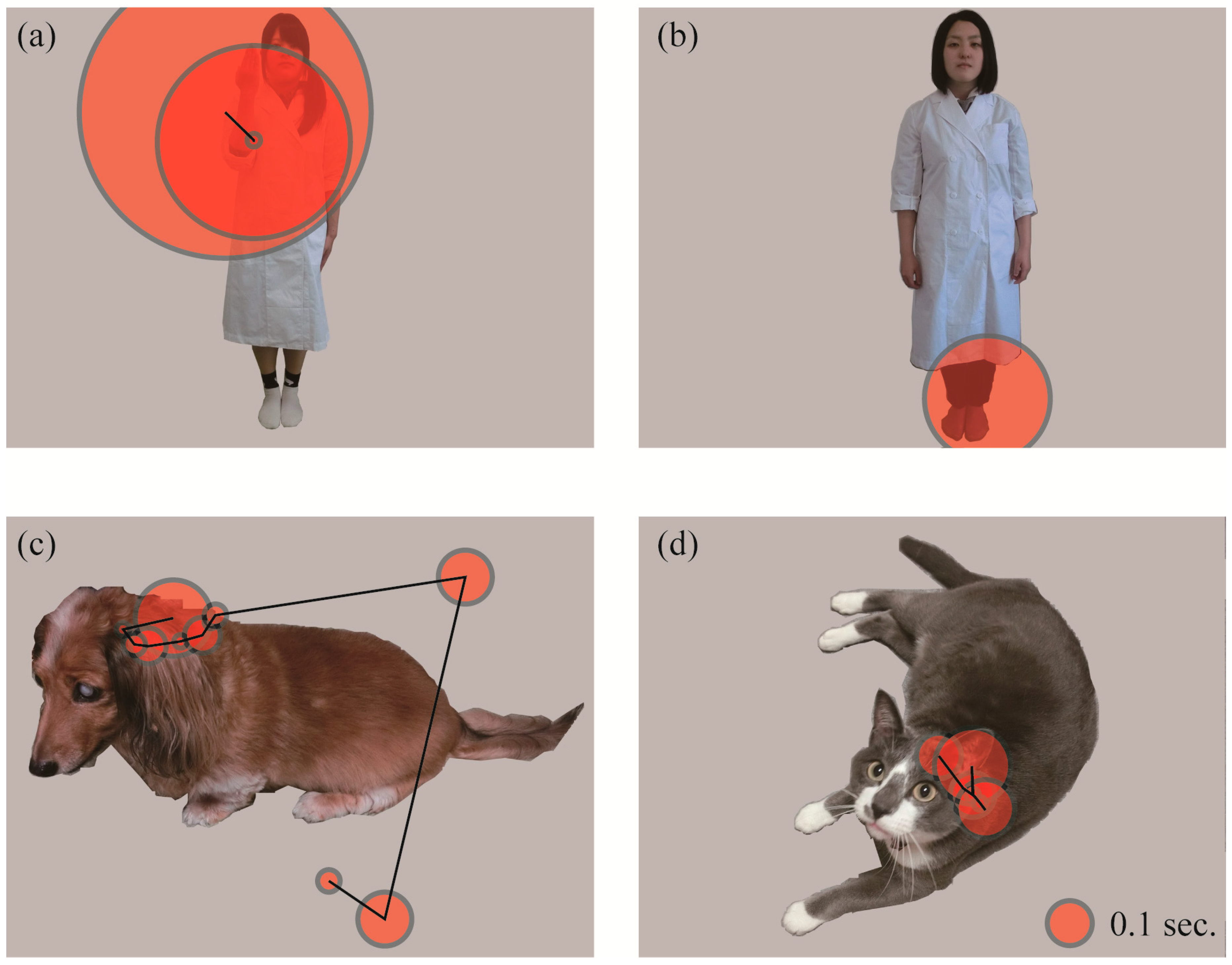

2.3. Stimuli

2.4. Experimental Procedures

2.5. Data Analyses

3. Results

3.1. Species

3.2. Human Familiarity

3.3. Hand Sign

4. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| ID | Breed | Age (years) | Sex | Neutering |
|---|---|---|---|---|
| 1 ᵃ | Labrador retriever | 13 | Male | Neutered |
| 2 ᵃ | Norfolk terrier | 5 | Female | Neutered |
| 3 ᵃ | Mixed ᶜ | 2 | Male | Neutered |
| 4 ᵇ | Unidentified ᵈ | 18 | Female | Neutered |
| 5 ᵇ | Shetland sheepdog | 9 | Male | Neutered |
| 6 ᵇ | German shepherd | 2 | Male | Neutered |
| 7 ᵇ | Mixed ᵉ | 1 | Female | Intact |

| Fixed factor | Fixation number: AIC ᵃ | Fixation number: LRT χ² | Fixation number: LRT p | Total fixation duration: AIC ᵃ | Total fixation duration: LRT χ² | Total fixation duration: LRT p |
|---|---|---|---|---|---|---|
| **Analysis comparing the three species** | | | | | | |
| Species and AOI | 491.0 | | | 106.9 | | |
| AOI | 547.8 | 72.80 | <0.001 | 93.67 | 34.21 | <0.001 |
| Species | 623.0 | 149.97 | <0.001 | 121.8 | 69.25 | <0.001 |
| **Analysis comparing human familiarity** | | | | | | |
| Familiarity and AOI | 172.2 | | | 46.3 | | |
| AOI | 168.3 | 4.09 | 0.39 | 27.0 | 1.08 | 0.90 |
| Familiarity | 170.3 | 10.02 | 0.12 | 21.3 | 7.07 | 0.31 |
| **Analysis comparing stimuli with and without a hand sign** | | | | | | |
| Hand-sign and AOI | 97.1 | | | 59.42 | | |
| AOI | 102.8 | 9.77 | 0.008 | 53.35 | 3.11 | 0.21 |
| Hand-sign | 126.7 | 33.6 | <0.001 | 62.72 | 12.8 | 0.002 |

Response variables: fixation number and total fixation duration. LRT, likelihood ratio test.
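
The table reports, for each fixed factor, an AIC value and a likelihood ratio test (χ², p). The Python sketch below is a minimal illustration, not the authors' analysis code, of how such p-values follow from χ² once the degrees of freedom are known, and of the general identity that, for nested maximum-likelihood fits, dropping a factor changes AIC by χ² − 2·df. Two assumptions are labelled loudly: the degrees of freedom are not reported in the table, so the values used here (4 for AOI and 6 for familiarity in the familiarity analysis, 2 for both factors in the hand-sign analysis) are back-calculated because they reproduce the reported p-values; and reading each row below a combined model as "that factor dropped" is an interpretation, not something the table states. The helper names are hypothetical, and the closed-form chi-square tail is valid only for even degrees of freedom, which is why the species analysis is left out.

```python
import math


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with df degrees of freedom.

    Uses the closed form that holds only for even df:
        P(X > x) = exp(-x / 2) * sum_{i=0}^{df/2 - 1} (x / 2)**i / i!
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("closed form requires a positive, even df")
    half = x / 2.0
    return math.exp(-half) * sum(half**i / math.factorial(i) for i in range(df // 2))


def lrt_p_value(chi2_stat: float, df: int) -> float:
    """p-value of a likelihood ratio test comparing a reduced model with the full model."""
    return chi2_sf_even_df(chi2_stat, df)


def implied_aic_change(chi2_stat: float, df: int) -> float:
    """AIC(reduced) - AIC(full) for nested maximum-likelihood fits.

    AIC = -2*logL + 2*k and chi2 = 2*(logL_full - logL_reduced), so removing
    df parameters changes AIC by chi2 - 2*df.
    """
    return chi2_stat - 2 * df


# (analysis, dropped factor, full-model AIC, reduced-model AIC, chi2, ASSUMED df)
# Fixation-number values are copied from the table; the df values are
# back-calculated assumptions, not reported figures.
ROWS = [
    ("familiarity", "AOI", 172.2, 168.3, 4.09, 4),
    ("familiarity", "Familiarity", 172.2, 170.3, 10.02, 6),
    ("hand sign", "AOI", 97.1, 102.8, 9.77, 2),
    ("hand sign", "Hand-sign", 97.1, 126.7, 33.6, 2),
]

if __name__ == "__main__":
    for analysis, factor, aic_full, aic_reduced, chi2_stat, df in ROWS:
        p = lrt_p_value(chi2_stat, df)
        print(
            f"{analysis:12s} drop {factor:12s} p = {p:.3f}  "
            f"AIC change: table {aic_reduced - aic_full:+.1f}, "
            f"chi2 - 2*df {implied_aic_change(chi2_stat, df):+.2f}"
        )
```

Running the script prints, for each fixation-number row, the recomputed p-value next to the AIC change taken from the table and the change implied by χ² − 2·df. Agreement within rounding supports, but does not prove, the assumed degrees of freedom; the same df values also reproduce the total-fixation-duration p-values.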

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ogura, T.; Maki, M.; Nagata, S.; Nakamura, S. Dogs (Canis familiaris) Gaze at Our Hands: A Preliminary Eye-Tracker Experiment on Selective Attention in Dogs. Animals 2020, 10, 755. https://doi.org/10.3390/ani10050755
