The Future of Artificial Intelligence in Monitoring Animal Identification, Health, and Behaviour

Simple Summary

Abstract

1. Introduction

1.1. The History of Technology

1.1.1. Chronological Background

1.1.2. Locations of Employed Technology

1.2. Recent Technological Advancements

1.2.1. Purposes for Technological Advances

1.2.2. Technology Types

1.3. Current Limitations and Proposed Novel Technology

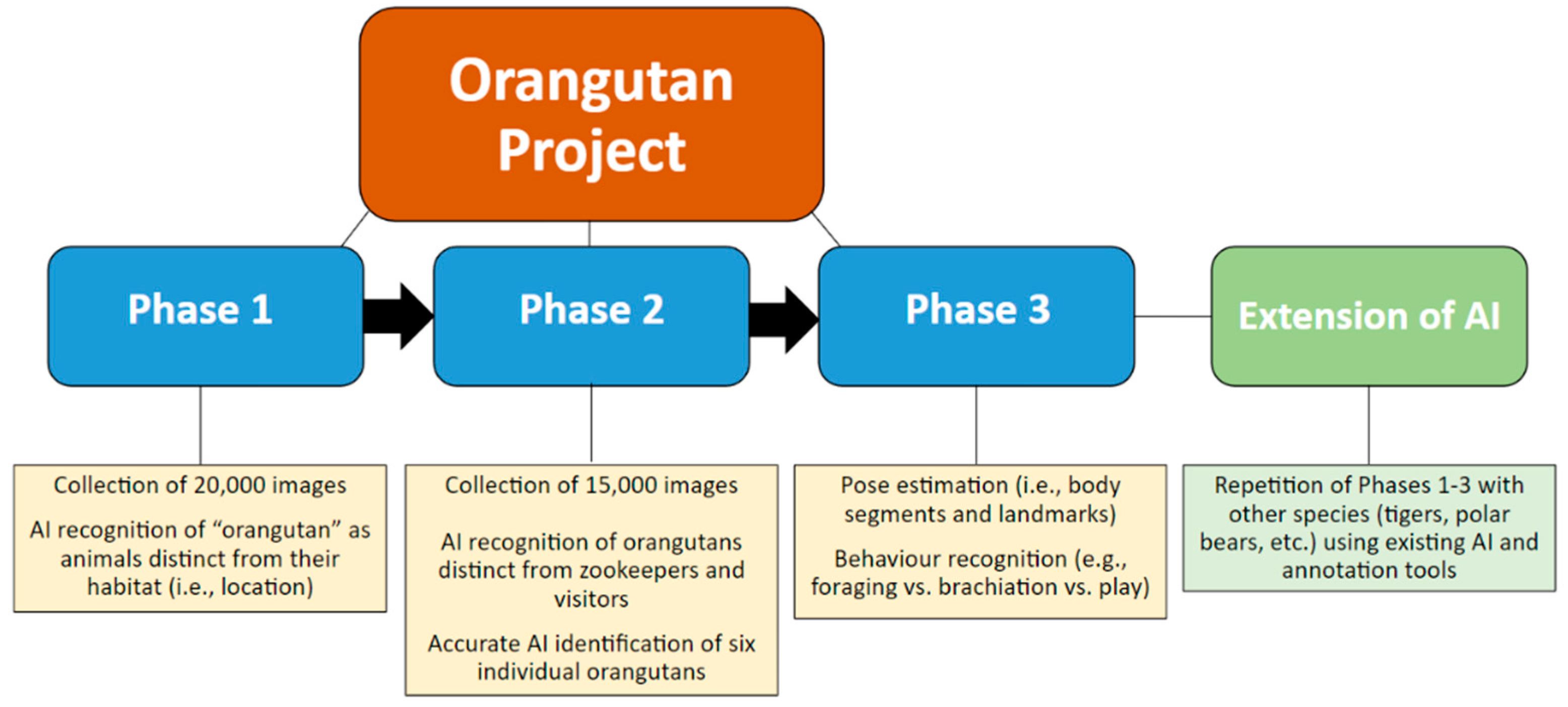

2. Materials and Methods

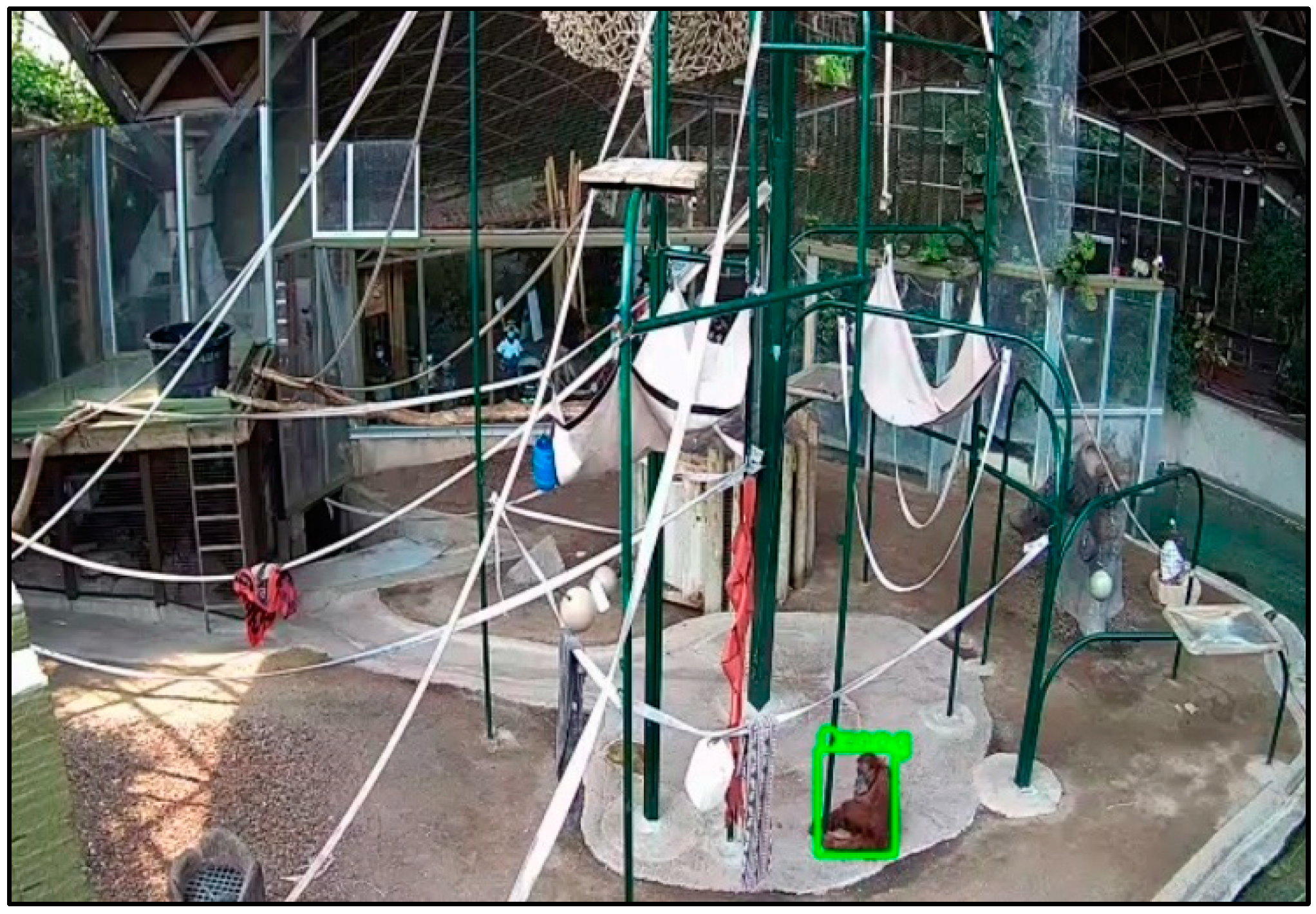

2.1. Dataset

2.2. Implementation

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Category | Code | Description |
|---|---|---|
| Foraging | F | Consumption of water or plant matter (e.g., leaves, soft vine barks, soft stalks, and round fleshy parts). Marked by insertion of plant matter into the mouth with the use of the hands. It starts with the use of the hands to pick plant matter from a bunch or pile, to pick apart plant matter, and to break plant matter into small pieces. The hands are then used to bring plant matter into the mouth. This is followed by chewing (i.e., open and close movement of the jaw whilst the plant matter is either partially or fully in the mouth). This culminates in swallowing; that is, the plant matter is no longer in the mouth nor outside the animal and the animal moves to get more. The bout stops when there is a pause in the behaviour >3 s or another behaviour is performed. |
| Brachiation | BR | Arm-over-arm movement along bars or climbing structures. The orangutan is suspended fully from only one or two hands (Full BR) or with the support of a third limb (Partial BR). The movement is slow and there is no air time between alternating grasps on the bars (i.e., at no point will the orangutan be mid-air without support). |
| Locomotion | L | The orangutan moves with the use of limbs from one point in the exhibit to another point at least a metre away from the origin. The orangutan may end up in the same location as the origin, but along the path should have gone at least a metre away from the origin. The orangutan may be locomoting bipedally or quadrupedally on plane surfaces such as platforms and the ground. If the orangutan is on climbing structures but is supported by all four limbs, the movement is classified as locomotion. |
| Object Play | OP | Repetitive manipulation and inspection (visual and/or tactile) of inedible objects which are not part of another individual’s body. The individual is visibly engaged (i.e., the facial/head orientation is on the object being manipulated). Inspection or manipulation is done by mouth, hands, or feet. Movement may appear like other behavioural categories, but the size/speed of movements of limbs is exaggerated. |
| Fiddling | FD | Slow and repetitive manipulation of an object with no apparent purpose or engagement (i.e., the orangutan may appear to be staring into space and not paying attention to the movement). The orientation of the head must not be facing the object being manipulated. Manipulation may be subtle repetitive finger movements along the object being manipulated. |
| Inactive | I | The animal stays in the same spot or turns around but does not go beyond a metre from origin. The animal is not engaged in self-directed behaviours, foraging, hiding, defecation, urination, scanning behaviours, or social interaction. The animal may be lying prone, supine, sideways, upright sitting, or quadrupedal, but remains stationary. |
| Affiliative | AF | The animal engages in social interactions with another individual such as allogrooming, begging for food, food sharing, hugging, and tolerance. The behaviours appear to maintain the bond, as seen by maintenance of close proximity. These behaviours do not have audible vocalizations or vigorous movements. |
| Agonistic | AG | Social interactions with individuals where distance from each other is the outcome unless there is a physical confrontation or fight. The animal may be engaged in rejection of begging, avoidance, or vigorously grabbing food from the grasp of the receiver of the interaction. Characterized by vigorous movements towards or away from the other individual. |
| Keeper Directed | KD | Staring, following, locomoting towards the keeper, or obtaining food from the keeper. Attention/head orientation must be placed on the keeper. The keeper should be visible around the perimeter of the exhibit or in the keeper’s cage. |
| Guest Directed | GD | Staring, following, or moving towards the guests. Attention must be placed on the guest. Volunteers (humans in white shirts and beige trousers) are considered guests. |
| Self Directed | SD | Inspection of hair, body, or mouth with hands, feet, mouth or with the use of water or objects such as sticks or non-food enrichment. The body part being inspected is prodded repeatedly by any of the abovementioned implements. The animal may scratch, squeeze, poke, or pinch the body part being inspected. Attention does not have to be on the body part. |
| Baby Directed | BD | Physical inspection of infant offspring, including limbs, face, and hair of baby, using mouth and/or hands. Attention does not have to be on the infant or their body part(s). |
| Tech Directed | TD | The animal uses or waits at a computer touchscreen enrichment. |
| Hiding | H | The animal covers itself with a blanket, a leaf, or goes in the bucket such that only a portion of the head is visible. The animal remains stationary. |
| Urination | U | Marked by the presence of a darker wet spot on the ground. Urine flows from the hind of the orangutan. The orangutan may be hanging on climbing structures using any combination of limbs or may be sitting at the edge of the moat, platform, or on a bar with the hind facing where the urine would land. |
| Object Manipulation | OM | Moving objects with limbs or the mouth from one point in the enclosure to the other point. There is a very clear purpose that usually stops once the purpose has been achieved (e.g., filling a water bottle). |
| Scanning | SC | The animal makes a short sweeping movement of the head and the eyes stay forward following the gaze. The attention has to be on anything outside the exhibit. The animal may be sitting on the floor or bipedally/quadrupedally locomoting towards a window or the edge of the exhibit. |
| Patrolling | PT | The animal follows a repeated path around a portion or the entirety of the perimeter of the exhibit. The animal seems vigilant with repeated scans as movement happens. |
| Defecation | D | Marked by the presence of fecal matter on the floor. Feces drops from the hind of the orangutan. The orangutan may be hanging on climbing structures using any combination of limbs or may be sitting at the edge of the moat, platform, or on a bar with the hind facing where the feces would land. The orangutan may also reach around such that the feces would land on the palm and the orangutan would drop the collected feces on the floor. The orangutan may also gradually orient the upper body from an upright sitting position to a more acute prone posture. |
| Agitated Movement | AM | Locomotion that is fast, with fast scanning of surroundings, may or may not stop at a destination. Usually follows a loud noise. Brachiation along the bars is hasty and may involve short air time. Scans towards the keeper’s kitchen or the entrance to the exhibit may be possible. |
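
The bout rule stated in the Foraging definition (a bout stops after a pause of more than 3 s or when another behaviour is performed) can be sketched in code. The following is a minimal illustration only, not the authors' implementation: the `Observation` record, the `merge_bouts` helper, the use of seconds as the time unit, and the choice to apply the 3 s rule to every ethogram code (the table states it only for Foraging) are all assumptions made for this example.

```python
from dataclasses import dataclass

# Longest pause (in seconds) tolerated inside one bout. The ethogram states
# this threshold for Foraging; applying it to all codes is an assumption.
MAX_PAUSE_S = 3.0


@dataclass(frozen=True)
class Observation:
    """One scored interval (hypothetical record layout)."""
    start: float  # seconds from the start of the recording
    end: float    # seconds from the start of the recording
    code: str     # ethogram code from the table above, e.g. "F", "BR", "L"


def merge_bouts(observations, max_pause=MAX_PAUSE_S):
    """Merge time-ordered observations of the same code into bouts.

    A bout ends when the next observation has a different code or starts
    more than `max_pause` seconds after the current bout ends.
    """
    bouts = []
    for obs in sorted(observations, key=lambda o: o.start):
        last = bouts[-1] if bouts else None
        same_bout = (
            last is not None
            and last.code == obs.code
            and obs.start - last.end <= max_pause
        )
        if same_bout:
            # Extend the current bout to cover this observation.
            bouts[-1] = Observation(last.start, max(last.end, obs.end), last.code)
        else:
            bouts.append(obs)
    return bouts


# Usage: a 2 s pause keeps the foraging bout going, a 4 s pause ends it,
# and a change of behaviour always starts a new bout.
example = [
    Observation(0, 5, "F"),
    Observation(7, 10, "F"),
    Observation(14, 16, "F"),
    Observation(16, 20, "L"),
]
bouts = merge_bouts(example)
```

With these inputs the first two foraging intervals merge into one bout, while the third foraging interval and the locomotion interval remain separate bouts.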

| Orangutan Name | Birth Date | Current Age (June 2022) | Biological Sex | Birth Place | Defining Features |
|---|---|---|---|---|---|
| Puppe | 1967/09/07 | 54 | F | Sumatra | Dark face with dimpled cheeks; yellow hair around ears and on chin; reddish-orange hair, matted hair on back/legs; medium body size; always haunched with elderly gait/shuffle; curled/stumpy feet |
| Ramai | 1985/10/04 | 36 | F | Toronto Zoo | Oval-shaped face with flesh colour on eyelids (almost white); red hair that falls on forehead; pronounced nipples; medium body size |
| Sekali | 1992/08/18 | 29 | F | Toronto Zoo | Uniformly dark face (cheeks/mouth), with flesh coloured upper eyelids and dots on upper lip; horizontal lines/wrinkles under eyes; long, hanging, smooth orange hair with “bowl-cut”, and lighter orange hair to the sides of the mouth; mixed light and dark orange hair on back (light spot at neck); medium-sized body; brachiates throughout the enclosure |
| Budi | 2006/01/18 | 16 | M | Toronto Zoo | Wide, dark face with pronounced flanges (i.e., cheek pads); dark brown, thick/shaggy hair on body and arms, wavy hair on front of shoulders; large/thick body; stance with rolled shoulders; often climbing throughout enclosure |
| Kembali | 2006/07/24 | 15 | M | Toronto Zoo | Oval/long face with flesh colour around eyes and below nose; dark skin on nose and forehead; small flange bulges; hanging throat; reddish-orange shaggy hair with skin breaks on shoulders and inner arm joints; thicker hair falls down cheeks; large/lanky body; strong, upright gait; often brachiating throughout the enclosure |
| Jingga | 2006/12/15 | 15 | F | Toronto Zoo | Oval/long face with flesh colour around eyes and mouth; full lips; reddish-orange hair with skin breaks on shoulders, inner arm joints, and buttocks; thicker hair falls on forehead and cheeks; medium-small body size |
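
The "Current Age (June 2022)" column follows from the birth dates by counting completed years. A small sketch of that arithmetic is given here for checking the table. The table does not give a day in June, so the reference date of 1 June 2022 is an assumption, and the `age_in_years` helper and `BIRTH_DATES` mapping are names introduced only for this illustration.

```python
from datetime import date


def age_in_years(birth: date, on: date) -> int:
    """Completed years between `birth` and `on`."""
    years = on.year - birth.year
    # Subtract one year if the birthday has not yet occurred in the year of `on`.
    if (on.month, on.day) < (birth.month, birth.day):
        years -= 1
    return years


# Birth dates copied from the table above.
BIRTH_DATES = {
    "Puppe": date(1967, 9, 7),
    "Ramai": date(1985, 10, 4),
    "Sekali": date(1992, 8, 18),
    "Budi": date(2006, 1, 18),
    "Kembali": date(2006, 7, 24),
    "Jingga": date(2006, 12, 15),
}

# Assumption: the table says only "June 2022"; the first of the month is used.
REFERENCE_DATE = date(2022, 6, 1)

ages = {name: age_in_years(born, REFERENCE_DATE) for name, born in BIRTH_DATES.items()}
```

None of the six birthdays falls in June, so any reference day within June 2022 gives the same ages.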

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Congdon, J.V.; Hosseini, M.; Gading, E.F.; Masousi, M.; Franke, M.; MacDonald, S.E. The Future of Artificial Intelligence in Monitoring Animal Identification, Health, and Behaviour. Animals 2022, 12, 1711. https://doi.org/10.3390/ani12131711