The Development of Object Recognition Requires Experience with the Surface Features of Objects

Simple Summary

Abstract

1. Introduction

Using Automated Controlled Rearing to Study the Origins of Object Recognition

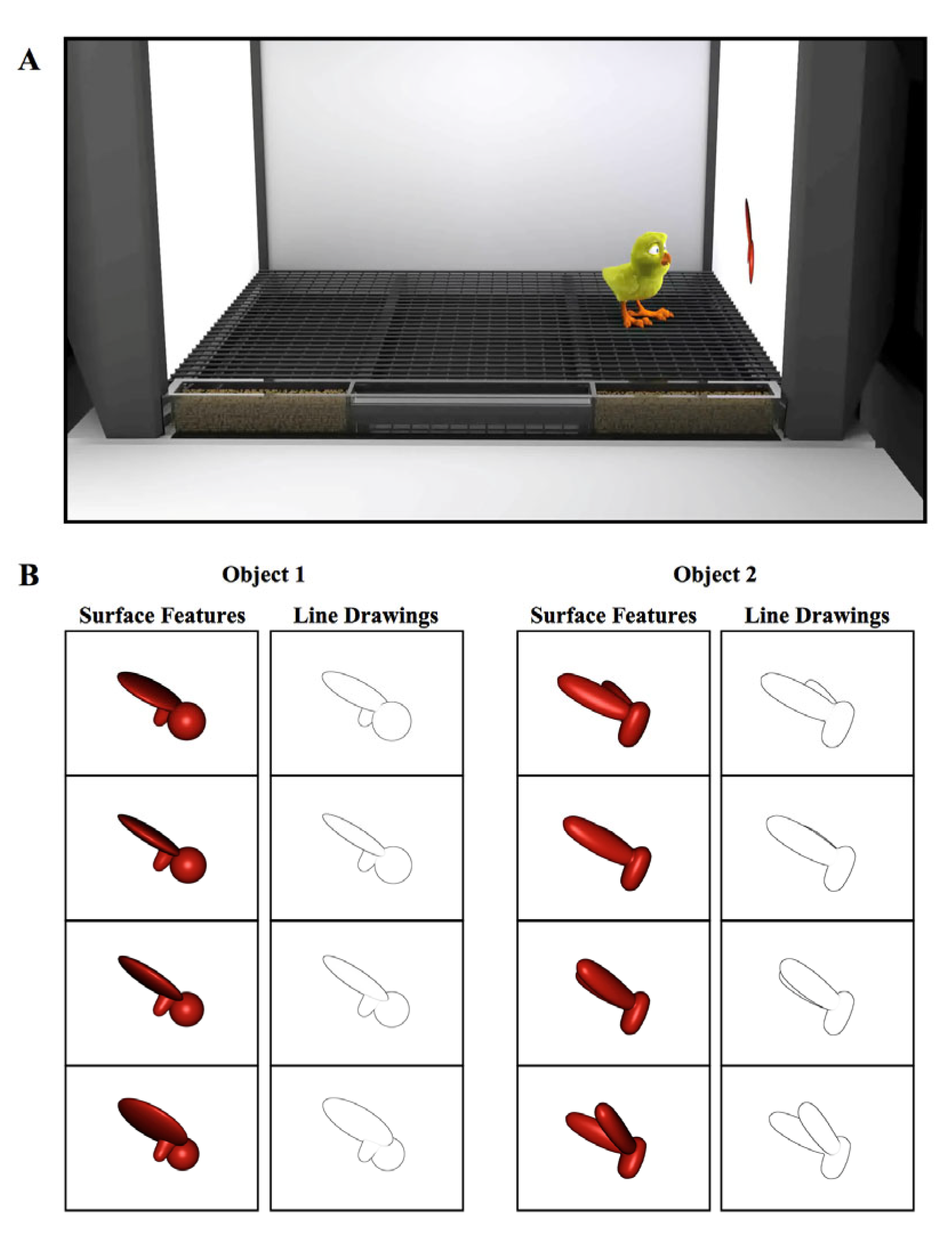

2. Experiment 1

2.1. Materials and Methods

2.1.1. Subjects

2.1.2. Controlled-Rearing Chambers

2.1.3. Procedure

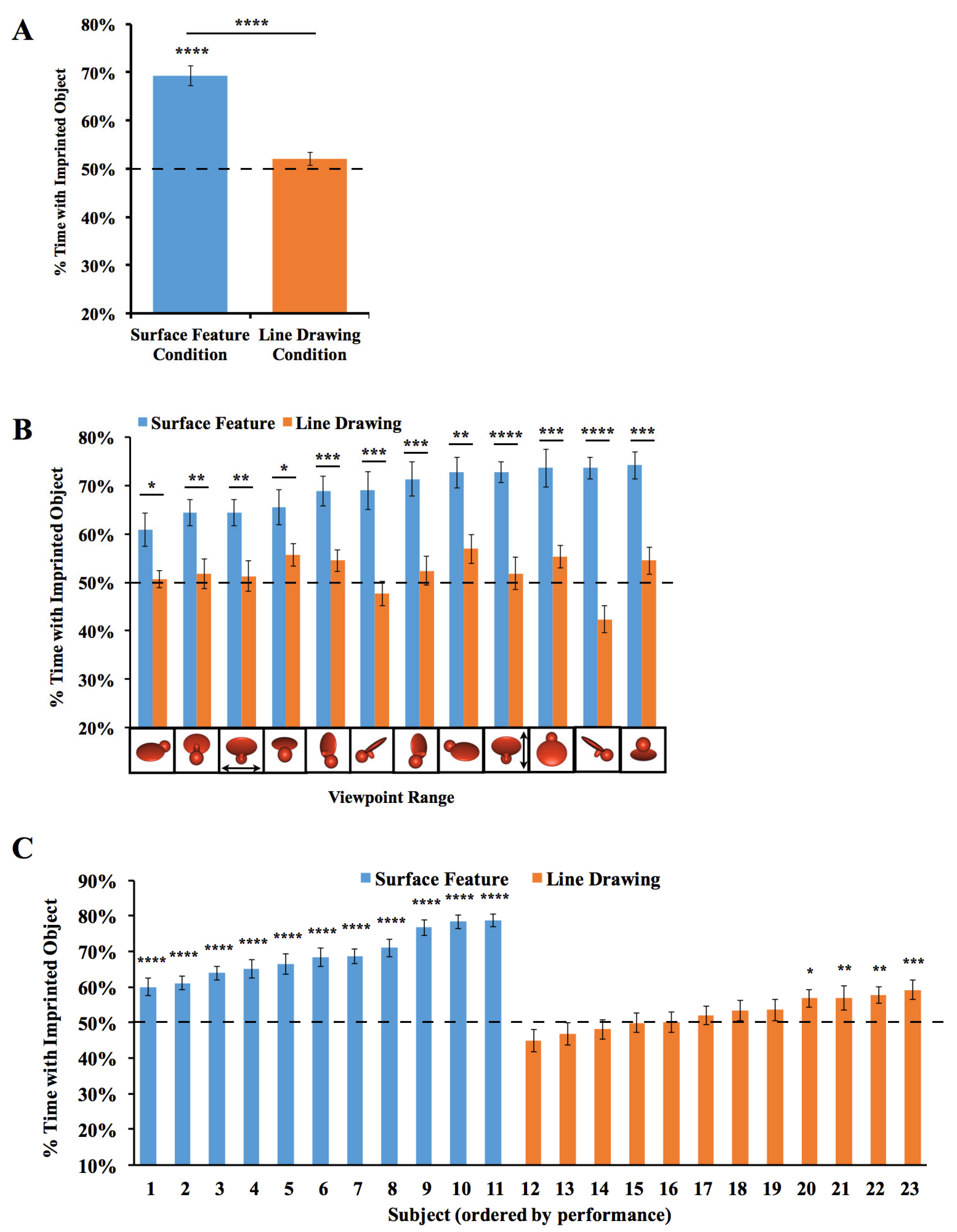

2.2. Results

2.3. Discussion

3. Experiment 2

3.1. Materials and Methods

3.2. Results and Discussion

3.3. Measuring the Strength of the Imprinting Response in Experiments 1 and 2

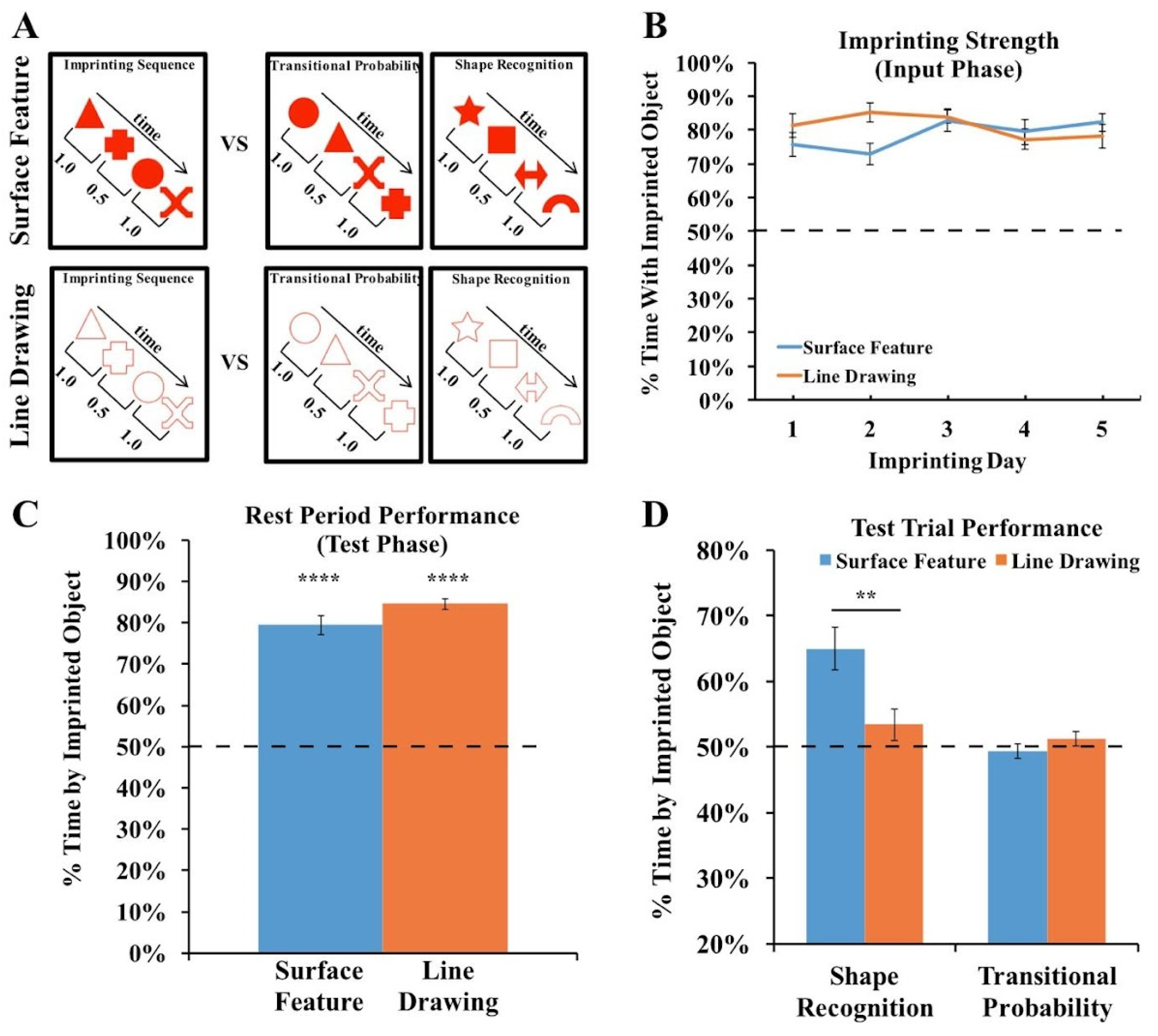

4. Experiment 3

4.1. Materials and Methods

4.2. Results and Discussion

5. General Discussion

Limitations of This Study and Directions for Future Research

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wood, J.N.; Wood, S.M.W. The Development of Object Recognition Requires Experience with the Surface Features of Objects. Animals 2024, 14, 284. https://doi.org/10.3390/ani14020284