Characterization of Vegetation Physiognomic Types Using Bidirectional Reflectance Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Processing of Satellite Data

2.2. Preparation of Ground Truth Data

2.3. Machine Learning and Cross-Validation

3. Results and Discussion

3.1. Cross-Validation Results

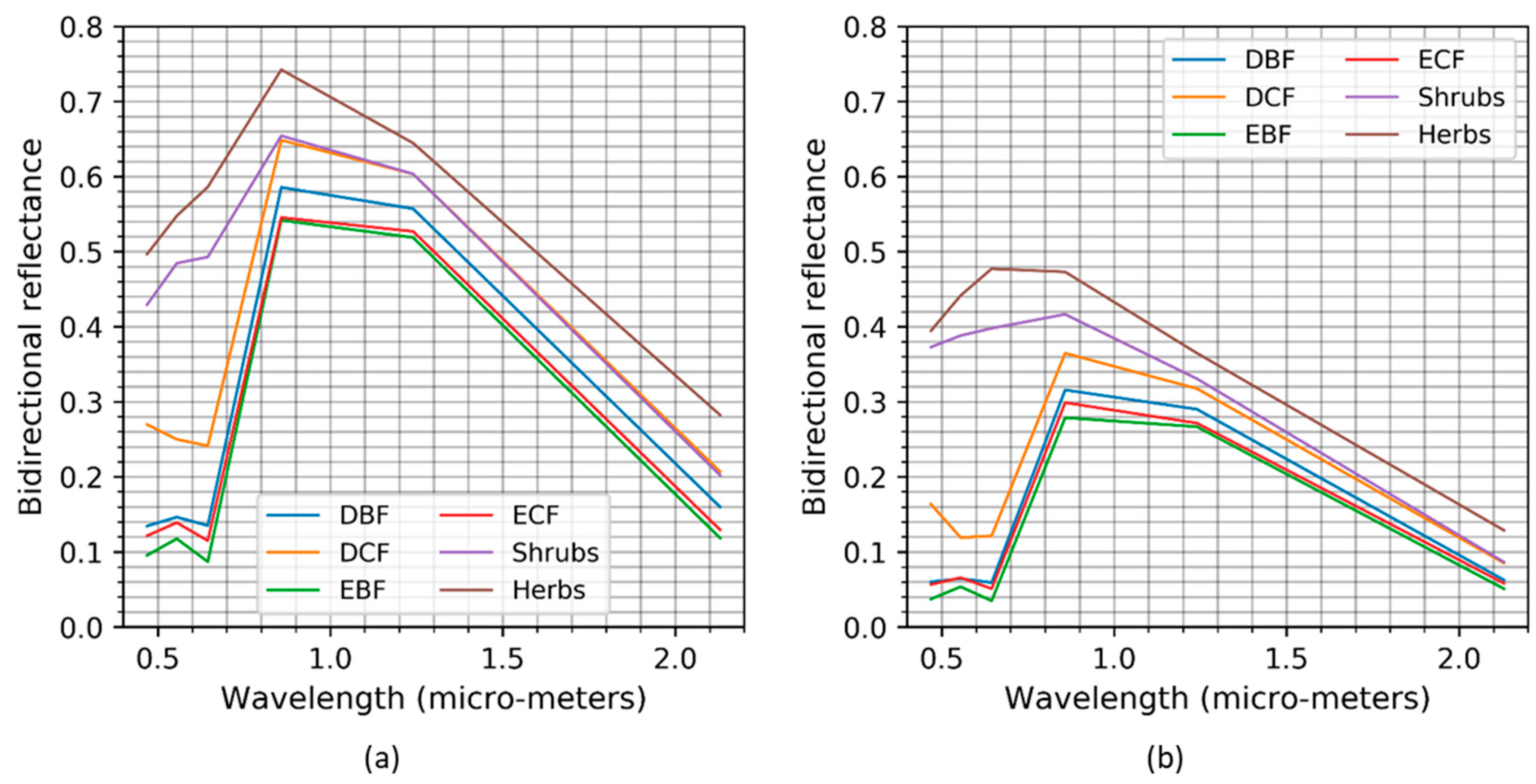

3.2. Comparison of the Spectral Profiles

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Spectral | Angular (SZA, VZA, RAA) | Temporal |
|---|---|---|
| 6 | ➀ 0°, 0°, 0° | 11 |
| | ➁ 45°, 0°, 0° | |
| | ➂ 45°, 45°, 0° | |
| | ➃ 45°, 45°, 180° | |
| Total features = 6 × 4 × 11 = 264 | | |
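
The feature count in the table can be checked with a short enumeration. The following is a minimal sketch, not the authors' processing code: it assumes the 264 features are the full cross product of 6 spectral bands, the 4 listed angular configurations (SZA, VZA, RAA), and 11 temporal steps. The feature-name pattern and the function name `feature_names` are hypothetical, introduced only for illustration.

```python
from itertools import product

N_SPECTRAL = 6    # number of spectral bands (from the table)
N_TEMPORAL = 11   # number of temporal steps (from the table)

# Angular configurations as (SZA, VZA, RAA) in degrees (from the table).
ANGULAR_CONFIGS = [
    (0, 0, 0),
    (45, 0, 0),
    (45, 45, 0),
    (45, 45, 180),
]


def feature_names():
    """Enumerate one hypothetical name per (band, angle, time) combination."""
    names = []
    bands = range(1, N_SPECTRAL + 1)
    steps = range(1, N_TEMPORAL + 1)
    for band, (sza, vza, raa), step in product(bands, ANGULAR_CONFIGS, steps):
        names.append(f"b{band}_sza{sza}_vza{vza}_raa{raa}_t{step}")
    return names


names = feature_names()
print(len(names))  # 6 * 4 * 11 combinations
```

Each name identifies one column of a per-pixel feature vector, so the length of the list equals the total in the last row of the table.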

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sharma, R.C.; Hara, K. Characterization of Vegetation Physiognomic Types Using Bidirectional Reflectance Data. Geosciences 2018, 8, 394. https://doi.org/10.3390/geosciences8110394
