Eliciting Learner Knowledge: Enabling Focused Practice through an Open-Source Online Tool

Abstract

1. Introduction

1.1. Importance of Surfacing Students’ Conceptions

1.2. Teacher Questioning That Promotes Student-Centered STEM Instruction

1.3. Question Types That Discourage Student-Centered STEM Instruction

1.4. Practicing Eliciting and Probing Learner Knowledge through Simulations

1.5. Role Plays

1.6. Challenges of Role Plays

1.7. Eliciting Learner Knowledge (ELK)

- What types of questioning strategies do PSTs employ during the ELK simulation?

- How do PSTs perceive the goal(s) and authenticity of the ELK simulation and what have they learned from participating in the ELK simulation?

2. Materials and Methods

2.1. Study Context

2.2. Transcript Data (RQ1)

2.3. Survey Data (RQ2)

2.4. Data Analytic Methods

2.5. Patterns of Questioning Strategies (RQ1)

2.6. PST Perceptions of ELK Analytic Approach (RQ2)

2.7. Sample

3. Results

3.1. Learning from Playing the Role of the Teacher

3.2. Learning from Playing the Role of the Student

3.3. Authenticity of ELK

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Gwet’s AC Results

| Argumentation Strategy | Gwet’s AC1 |
|---|---|
| Priming | 0.999 |
| Eliciting | 1.000 |
| Probing | 1.000 |
| Revoicing | 1.000 |
| You-Focused Questions | 1.000 |
| Evaluating | 1.000 |
| Telling | 0.998 |
| Funneling | 0.999 |
| Yes/No Questions | 1.000 |
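For readers unfamiliar with the statistic, the following is a minimal sketch of how Gwet's AC1 can be computed for two raters. It assumes each transcript line was coded as present (1) or absent (0) for a given strategy, which is our assumption rather than a detail stated here. The function name `gwet_ac1` and the example ratings are hypothetical and are not study data.

```python
from collections import Counter


def gwet_ac1(rater_a, rater_b, categories=(0, 1)):
    """Gwet's AC1 for two raters who each assign one category per item.

    AC1 = (p_a - p_e) / (1 - p_e), where p_a is the observed agreement and
    p_e = (1 / (Q - 1)) * sum_q pi_q * (1 - pi_q), with pi_q the average
    proportion of items the two raters placed in category q.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    if len(categories) < 2:
        raise ValueError("at least two categories are required")

    n = len(rater_a)
    q = len(categories)

    # Observed agreement: share of items given the same code by both raters.
    p_a = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement based on the average category prevalence.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = 0.0
    for c in categories:
        pi_c = (counts_a[c] + counts_b[c]) / (2 * n)
        p_e += pi_c * (1 - pi_c)
    p_e /= q - 1

    return (p_a - p_e) / (1 - p_e)


# Hypothetical ratings for ten transcript lines (1 = strategy present).
example_a = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
example_b = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
print(round(gwet_ac1(example_a, example_b), 3))
```

Because the chance-agreement term shrinks when a code is rare, AC1 tends to stay high for infrequently applied codes where Cohen's kappa can drop sharply even with near-perfect observed agreement.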

Appendix B. Survey Questions

References

- Lampert, M.; Franke, M.L.; Kazemi, E.; Ghousseini, H.; Turrou, A.C.; Beasley, H.; Cunard, A.; Crowe, K. Keeping It Complex: Using Rehearsals to Support Novice Teacher Learning of Ambitious Teaching. J. Teach. Educ. 2013, 64, 226–243. [Google Scholar] [CrossRef]

- Anderson, N.; Slama, R. Using Digital Clinical Simulations and Authoring Tools to Support Teachers in Eliciting Learners’ Mathematical Knowledge. In Connected Learning Summit. 2020. Available online: https://kilthub.cmu.edu/ndownloader/files/25990886#page=18 (accessed on 9 July 2021).

- Kalinec-Craig, C. The Rights of the Learner: A Framework for Promoting Equity through Formative Assessment in Mathematics Education. Democr. Educ. 2017, 25, 1–11. [Google Scholar]

- TeachingWorks Eliciting and Interpreting—TeachingWorks Resource Library. Available online: https://library.teachingworks.org/curriculum-resources/teaching-practices/eliciting-and-interpreting/ (accessed on 11 March 2022).

- Windschitl, M.; Thompson, J.; Braaten, M.; Stroupe, D. Proposing a core set of instructional practices and tools for teachers of science. Sci. Educ. 2012, 96, 878–903. [Google Scholar] [CrossRef]

- Jacobs, V.R.; Lamb, L.L.C.; Philipp, R.A. Professional Noticing of Children’s Mathematical Thinking. J. Res. Math. Educ. 2010, 41, 169–202. [Google Scholar] [CrossRef]

- National Research Council. How People Learn: Brain, Mind, Experience, and School, Expanded ed.; National Academy Press: Washington, DC, USA, 2000; p. 374. [Google Scholar]

- Kranzfelder, P.; Bankers-Fulbright, J.L.; García-Ojeda, M.E.; Melloy, M.; Mohammed, S.; Warfa, A.-R.M. Undergraduate Biology Instructors Still Use Mostly Teacher-Centered Discourse Even When Teaching with Active Learning Strategies. BioScience 2020, 70, 901–913. [Google Scholar] [CrossRef]

- Pimentel, D.S.; McNeill, K.L. Conducting Talk in Secondary Science Classrooms: Investigating Instructional Moves and Teachers’ Beliefs. Sci. Educ. 2013, 97, 367–394. [Google Scholar] [CrossRef]

- Campbell, T.; Schwarz, C.; Windschitl, M. What We Call Misconceptions May Be Necessary Stepping-Stones Toward Making Sense of the World. Sci. Scope 2016, 83, 69–74. [Google Scholar]

- Larkin, D. Misconceptions about “misconceptions”: Preservice secondary science teachers’ views on the value and role of student ideas. Sci. Educ. 2012, 96, 927–959. [Google Scholar] [CrossRef]

- Grossman, P.; Hammerness, K.; McDonald, M. Redefining teaching, re-imagining teacher education. Teach. Teach. 2009, 15, 273–289. [Google Scholar] [CrossRef]

- Michaels, S.; O’Connor, C. Conceptualizing Talk Moves as Tools: Professional Development Approaches for Academically Productive Discussions. In Socializing Intelligence Through Academic Talk and Dialogue; Resnick, L.B., Asterhan, C.S.C., Clarke, S.N., Eds.; American Educational Research Association: Chicago, IL, USA, 2015; pp. 347–361. [Google Scholar]

- Harlen, W. Teaching Science for Understanding in Elementary and Middle Schools; Heinemann: Portsmouth, NH, USA, 2015; p. 176. [Google Scholar]

- Crow, M.L.; Nelson, L.P. The Effects of Using Academic Role-Playing in a Teacher Education Service-Learning Course. Int. J. Role Play. 2015, 5, 26–34. [Google Scholar]

- Fernández, M.L. Investigating how and what prospective teachers learn through microteaching lesson study. Teach. Teach. Educ. 2010, 26, 351–362. [Google Scholar] [CrossRef]

- Brown, A.L.; Lee, J.; Collins, D. Does student teaching matter? Investigating pre-service teachers’ sense of efficacy and preparedness. Teach. Educ. 2015, 26, 77–93. [Google Scholar] [CrossRef]

- Shaughnessy, M.; Boerst, T.A.; Farmer, S.O. Complementary assessments of prospective teachers’ skill with eliciting student thinking. J. Math. Teach. Educ. 2019, 22, 607–638. [Google Scholar] [CrossRef]

- Levin, D.M.; Grant, T.; Hammer, D. Attending and Responding to Student Thinking in Science. Am. Biol. Teach. 2012, 74, 158–162. [Google Scholar] [CrossRef]

- Sussman, A.; Hammerman, J.K.L.; Higgins, T.; Hochberg, E.D. Questions to Elicit Students’ Mathematical Ideas. Teach. Child. Math. 2019, 25, 306–312. [Google Scholar] [CrossRef]

- Sadler, P.M.; Sonnert, G.; Coyle, H.P.; Cook-Smith, N.; Miller, J.L. The Influence of Teachers’ Knowledge on Student Learning in Middle School Physical Science Classrooms. Am. Educ. Res. J. 2013, 50, 1020–1049. [Google Scholar] [CrossRef]

- Schuster, L.; Anderson, N.C. Good Questions for Math Teaching: Why Ask Them and what to Ask, Grades 5–8; Math Solutions: Portsmouth, NH, USA, 2005; p. 206. [Google Scholar]

- Duckor, B. Formative assessment in seven good moves. In On Formative Assessment: Readings from Educational Leadership; ASCD: Alexandria, VA, USA, 2016. [Google Scholar]

- Anderson, N.; Chapin, S.; O’Connor, C. Classroom Discussions: Seeing Math Discourse in Action, Grades K-6; Math Solutions: Sausalito, CA, USA, 2011. [Google Scholar]

- Franke, M.L.; Webb, N.M.; Chan, A.G.; Ing, M.; Freund, D.; Battey, D. Teacher Questioning to Elicit Students’ Mathematical Thinking in Elementary School Classrooms. J. Teach. Educ. 2009, 60, 380–392. [Google Scholar] [CrossRef]

- Shaughnessy, M.; Boerst, T.A. Uncovering the Skills That Preservice Teachers Bring to Teacher Education: The Practice of Eliciting a Student’s Thinking. J. Teach. Educ. 2018, 69, 40–55. [Google Scholar] [CrossRef]

- Chapin, S.H.; O’Connor, C.; Anderson, N.C. Classroom Discussions Using Math Talk in Elementary Classrooms; Math Solutions: Boston, MA, USA, 2003; pp. 1–3. [Google Scholar]

- Lee, Y.; Kinzie, M.B. Teacher question and student response with regard to cognition and language use. Instr. Sci. 2012, 40, 857–874. [Google Scholar] [CrossRef]

- Jacobs, V.R.; Martin, H.A.; Ambrose, R.C.; Philipp, R.A. Warning Signs! Teach. Child. Math. 2014, 21, 107–113. [Google Scholar] [CrossRef]

- Levy, S. “Yes” and “No” Questions Are Not Allowed: Probing Questions to Extend Discussion. Available online: https://busyteacher.org/24154-yes-and-no-questions-are-not-allowed-probing.html (accessed on 11 June 2022).

- Berland, L.K.; Hammer, D. Framing for scientific argumentation. J. Res. Sci. Teach. 2012, 49, 68–94. [Google Scholar] [CrossRef]

- Molinari, L.; Mameli, C. Process quality of classroom discourse: Pupil participation and learning opportunities. Int. J. Educ. Res. 2013, 62, 249–258. [Google Scholar] [CrossRef]

- Chernikova, O.; Heitzmann, N.; Stadler, M.; Holzberger, D.; Seidel, T.; Fischer, F. Simulation-Based Learning in Higher Education: A Meta-Analysis. Rev. Educ. Res. 2020, 90, 499–541. [Google Scholar] [CrossRef]

- Issenberg, S.B.; Mcgaghie, W.C.; Petrusa, E.R.; Gordon, D.L.; Scalese, R.J. Features and uses of high-fidelity medical simulations that lead to effective learning: A BEME systematic review. Med. Teach. 2005, 27, 10–28. [Google Scholar] [CrossRef]

- Raymond, C.; Usherwood, S. Assessment in Simulations. J. Political Sci. Educ. 2013, 9, 157–167. [Google Scholar] [CrossRef]

- van Merrienboer, J.; Kirschner, P. Ten Steps to Complex Learning: A Systematic Approach to Four-Component Instructional Design, 3rd ed.; Routledge: New York, NY, USA, 2017. [Google Scholar]

- Paas, F.; van Merriënboer, J.J.G. Cognitive-Load Theory: Methods to Manage Working Memory Load in the Learning of Complex Tasks. Curr. Dir. Psychol. Sci. 2020, 29, 394–398. [Google Scholar] [CrossRef]

- Reich, J. Teaching Drills: Advancing Practice-Based Teacher Education through Short, Low-Stakes, High-Frequency Practice. J. Technol. Teach. Educ. 2022, 30, 217–228. [Google Scholar]

- Crookall, D.; Oxford, R.; Saunders, D. Towards a Reconceptualization of Simulation: From Representation to Reality. Simul./Games Learn. 1987, 17, 147–170. [Google Scholar]

- Richards, J.C. Conversational Competence through Role Play Activities. RELC J. 1985, 16, 82–91. [Google Scholar] [CrossRef]

- Kilgour, P.W.; Reynaud, D.; Northcote, M.T.; Shields, M. Role-Playing as a Tool to Facilitate Learning, Self Reflection and Social Awareness in Teacher Education. Int. J. Innov. Interdiscip. Res. 2015, 14, 8–20. [Google Scholar]

- Dotger, B.H.; Dotger, S.C.; Maher, M.J. From Medicine to Teaching: The Evolution of the Simulated Interaction Model. Innov. High. Educ. 2010, 35, 129–141. [Google Scholar] [CrossRef]

- Dotger, B.H. I Had No Idea: Clinical Simulations for Teacher Development; IAP: Charlotte, NC, USA, 2013; p. 183. [Google Scholar]

- Thompson, M.; Owho-Ovuakporie, K.; Robinson, K.; Kim, Y.J.; Slama, R.; Reich, J. Teacher Moments: A Digital Simulation for Preservice Teachers to Approximate Parent–Teacher Conversations. J. Digit. Learn. Teach. Educ. 2019, 35, 144–164. [Google Scholar] [CrossRef]

- Self, E.A. Designing and Using Clinical Simulations to Prepare Teachers for Culturally Responsive Teaching. Ph.D. Thesis, Vanderbilt University, Nashville, TN, USA, 2016. [Google Scholar]

- Dieker, L.A.; Straub, C.L.; Hughes, C.E.; Hynes, M.C.; Hardin, S. Learning from Virtual Students. Educ. Leadersh. 2014, 71, 54–58. [Google Scholar]

- Mikeska, J.N.; Howell, H. Simulations as practice-based spaces to support elementary teachers in learning how to facilitate argumentation-focused science discussions. J. Res. Sci. Teach. 2020, 57, 1356–1399. [Google Scholar] [CrossRef]

- Baruch, Y. Role-play Teaching: Acting in the Classroom. Manag. Learn. 2006, 37, 43–61. [Google Scholar] [CrossRef]

- Keezhatta, M.S. Efficacy of Role-Play in Teaching and Formative Assessment for Undergraduate English-Major Students in Saudi Arabia. Arab. World Engl. J. 2020, 11, 549–566. [Google Scholar] [CrossRef]

- Gibson, D.; Christensen, R.; Tyler-Wood, T.; Knezek, G. SimSchool: Enhancing Teacher Preparation through Simulated Classrooms. In Proceedings of the Society for Information Technology & Teacher Education International Conference, Nashville, TN, USA, 7 March 2011; Association for the Advancement of Computing in Education (AACE): Nashville, TN, USA, 2011; pp. 1504–1510. [Google Scholar]

- Shaughnessy, M.; Boerst, T.; Ball, D.L. Simulating Teaching: New Possibilities for Assessing Teaching Practices. In Proceedings of the Annual Meeting of the North American Chapter of the International Group for the Psychology of Mathematics Education, East Lansing, MI, USA, 5–8 November 2015; International Group for the Psychology of Mathematics Education: East Lansing, MI, USA, 2015; Volume 4. [Google Scholar]

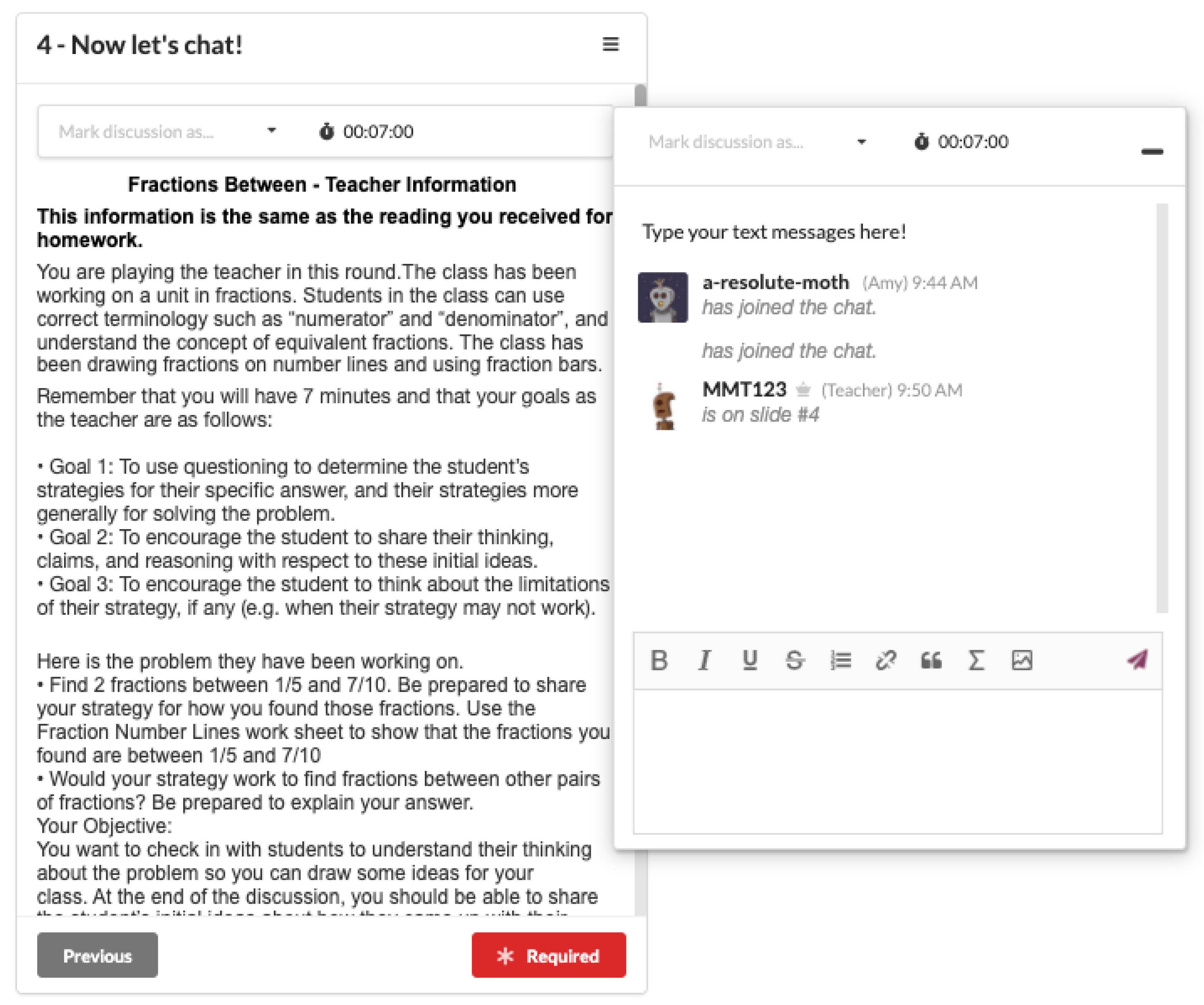

- Wang, X.; Thompson, M.; Yang, K.; Roy, D.; Koedinger, K.R.; Rose, C.P.; Reich, J. Practice-Based Teacher Questioning Strategy Training with ELK: A Role-Playing Simulation for Eliciting Learner Knowledge. Proc. ACM Hum. Comput. Interact. 2021, 5 (CSCW), 1–27. [Google Scholar] [CrossRef]

- Mikeska, J.N.; Shekell, C.; Maltese, A.V.; Reich, J.; Thompson, M.; Howell, H.; Lottero-Perdue, P.S.; Rogers, M.P. Exploring the Potential of an Online Suite of Practice-Based Activities for Supporting Preservice Elementary Teachers in Learning How to Facilitate Argumentation-Focused Discussions in Mathematics and Science. In Proceedings of the Society for Information Technology & Teacher Education International Conference, San Diego, CA, USA, 11–15 April 2022; pp. 2000–2010. [Google Scholar]

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101. [Google Scholar] [CrossRef]

- Bengtsson, M. How to plan and perform a qualitative study using content analysis. Nurs. Open 2016, 2, 8–14. [Google Scholar] [CrossRef]

- Wongpakaran, N.; Wongpakaran, T.; Wedding, D.; Gwet, K.L. A comparison of Cohen’s Kappa and Gwet’s AC1 when calculating inter-rater reliability coefficients: A study conducted with personality disorder samples. BMC Med. Res. Methodol. 2013, 13, 61. [Google Scholar] [CrossRef]

- McHugh, M.L. Interrater reliability: The kappa statistic. Biochem. Med. 2012, 3, 276–282. [Google Scholar] [CrossRef]

- Mikeska, J.N.; Howell, H. Authenticity perceptions in virtual environments. Inf. Learn. Sci. 2021, 122, 480–502. [Google Scholar] [CrossRef]

- Jimenez, A.M.; Zepeda, S.J. A Comparison of Gwet’s AC1 and Kappa When Calculating Inter-Rater Reliability Coefficients in a Teacher Evaluation Context. J. Educ. Hum. Resour. 2020, 38, 290–300. [Google Scholar] [CrossRef]

| Title | Level | Primary Question | Student 1 | Student 2 |
|---|---|---|---|---|
| Conservation of Matter | Elementary Science | Is matter conserved when paper is crumpled or water is frozen into ice? | Charlie: Thinks matter increases because water volume increases upon freezing. | Dana: Thinks matter is conserved because weight does not change. |
| Finding Fractions Between | Elementary Math | How can we find a fraction between two numbers? | Amy: Finds the least common denominator. | Scott: Finds numbers between the two numerators and between the two denominators. |
| Keeping the Heat | Secondary Science | Which cup did the best job of keeping the hot chocolate hot (foam or paper)? | Victor: Foam cup is best because it does not let the cold into the cup (cold as a substance). | Rosa: Foam cup is best because the heat bounces off the walls better in the foam cup than in the paper cup. |
| Rate of Strawberry Picking | Secondary Math | How can we calculate the rate of strawberry picking given a table of values? | Braden: Calculates rate using proportional relationships (does not show steps). | Emilie: Calculates rate using a table. |

| Semester | TE | University Site | Content Area | Methods Course Type | Credit Hours | Course Format |
|---|---|---|---|---|---|---|
| Spring 2021 | TESci1 | U1 | Science | Elementary | 3 credits | Synchronous, online |
| Spring 2021 | TESci2 | U2 | Science | Elementary | 3 credits | Synchronous, online |
| Spring 2021 | TEMat1 | U3 | Mathematics | Elementary | 1 credit | Synchronous, online |
| Spring 2021 | TEMat2 | U1 | Mathematics | Elementary | 2 credits | Hybrid, online |
| Fall 2021 | TSSci3 | U1 | Science | Secondary | 3 credits | In-person |
| Fall 2021 | TSSci4 | U4 | Science | Secondary | 3 credits | In-person |
| Fall 2021 | TSMat3 | U5 | Mathematics | Secondary | 4 credits | In-person |
| Fall 2021 | TSMat4 | U6 | Mathematics | Secondary | 3 credits | In-person |

| Strategy | Example Teacher Question or Other Prompt |
|---|---|
| Productive strategies for eliciting learner knowledge: | |
| Priming | “Let’s discuss the freezing water demonstration.” |
| Eliciting | “What did you observe when we froze the water in the plastic bottle?” |
| Probing | “Why do you think the volume of the water increased?” |
| Revoicing | (After a student says, “Something must be happening with the water molecules.”) “So what you’re saying is that something is happening with the water molecules? What could be happening?” |
| You-focused questions | “What do you think heat is?” |
| Counterproductive strategies for eliciting learner knowledge: | |
| Evaluating | “Great! The water volume does increase.” |
| Telling | “Water volume increases when the water becomes ice.” |
| Funneling | (After a student says, “I think the volume of the water increased but the mass stayed the same.”) “So are you saying that matter was conserved?” |
| Yes/No questions | “Did you add or take away any paper when you crumpled it up?” |

| Statistic | Elementary Only | Secondary Only | Elementary + Secondary |
|---|---|---|---|
| Number of participants | 26 | 31 | 57 |
| Number of conversations | 59 | 35 | 94 |
| Mean number of lines per conversation | 14.4 | 17.2 | 15.3 |
| Standard deviation of lines per conversation | 6.64 | 4.63 | 6.10 |
| Mean number of teacher lines per conversation | 7.5 | 8.4 | 7.8 |
| Standard deviation of teacher lines per conversation | 3.49 | 2.14 | 3.07 |

| Questioning Strategy | Elementary Mean (Standard Deviation) | Secondary Mean (Standard Deviation) | Elementary + Secondary Mean (Standard Deviation) |
|---|---|---|---|
| Productive strategies for questioning | | | |
| Priming | 0.39 (0.64) | 0.2 (0.58) | 0.32 (0.62) |
| Eliciting | 1.56 (0.67) | 1.29 (0.51) | 1.45 (0.63) |
| Probing | 4.71 (2.54) | 5.26 (1.86) | 4.88 (2.33) |
| Revoicing | 0.56 (0.67) | 0.54 (0.87) | 0.55 (0.75) |
| You-Focused Questions | 4.40 (2.48) | 4.63 (1.93) | 4.49 (2.30) |
| Counterproductive strategies for questioning | | | |
| Evaluating | 0.88 (1.18) | 1.66 (1.72) | 1.16 (1.45) |
| Telling | 0.25 (0.77) | 0.11 (0.32) | 0.2 (0.64) |
| Funneling | 0.25 (0.54) | 0.11 (0.32) | 0.2 (0.47) |
| Yes/No Questions | 2.17 (1.60) | 1.0 (1.2) | 1.7 (1.6) |

| Role | Line | Codes |
|---|---|---|
| Teacher A | Can you tell me what you remember from our changing paper and freezing water investigations? | eliciting, you-focused |
| Student | I remember the water we froze into ice. The water and ice weighed the same. | |
| Student | The paper was flat, crumpled, and ripped. | |
| Teacher A | I remember that too. Based off that information, what can you tell me about the amount of matter from the beginning of the experiment to the end? | probing, you-focused |
| Student | The matter stayed the same because the weight stayed the same from beginning to end in both experiments. | |
| Teacher A | What evidence do you have to support your claim that matter was conserved in both investigations? | probing, you-focused |
| Student | In the paper experiment, the weight was 4.6 g when it started flat. When it was crumpled it was still 4.6 g. When it was ripped into tiny pieces it was still 4.6 g. | |
| Teacher A | I like your supporting evidence. Do you believe that matter is conserved in things besides paper and water? | evaluating, probing, yes/no question, you-focused |
| Student | Yes. | |
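As an illustration of how coded transcripts like the Teacher A excerpt can be turned into per-conversation strategy counts, the sketch below tallies the codes from the teacher lines in that excerpt. The grouping into productive and counterproductive sets follows the strategy table; the names `tally_codes`, `PRODUCTIVE`, and `COUNTERPRODUCTIVE` are hypothetical and do not describe the authors' actual analysis scripts.

```python
from collections import Counter

# Strategy groupings taken from the strategy table in this article.
PRODUCTIVE = {"priming", "eliciting", "probing", "revoicing", "you-focused"}
COUNTERPRODUCTIVE = {"evaluating", "telling", "funneling", "yes/no question"}

# "Codes" cells for the four Teacher A lines in the excerpt above.
teacher_a_codes = [
    "eliciting, you-focused",
    "probing, you-focused",
    "probing, you-focused",
    "evaluating, probing, yes/no question, you-focused",
]


def tally_codes(coded_lines):
    """Count how often each code appears across comma-separated code cells."""
    counts = Counter()
    for cell in coded_lines:
        for code in cell.split(","):
            code = code.strip()
            if code:  # skip empty fragments left by trailing commas
                counts[code] += 1
    return counts


counts = tally_codes(teacher_a_codes)
productive_total = sum(n for code, n in counts.items() if code in PRODUCTIVE)
counterproductive_total = sum(
    n for code, n in counts.items() if code in COUNTERPRODUCTIVE
)

print(dict(counts))
print("productive:", productive_total)
print("counterproductive:", counterproductive_total)
```

Averaging such per-conversation counts across conversations would give figures of the kind reported in the questioning strategy table, assuming each code is counted once per teacher line.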

| Role | Line | Codes |
|---|---|---|
| Teacher B | Hi Charlie, what do you think about the paper? Do you think we gained or lost matter? | eliciting, you-focused |
| Student | I think when we crumpled up the paper we got more. | |
| Teacher B | What makes you think that? | probing, you-focused |
| Student | The crumpled up paper takes up more space than the flat paper. | |
| Teacher B | Okay, good observation. Did you add or take away any paper when you crumpled it up? | probing, evaluating, yes/no question, you-focused |
| Student | No, I did not | |
| Teacher B | So, for the paper, if nothing was added, do you think changing the shape also changes the amount of matter we have? | probing, you-focused |
| Student | No, it will not | |
| Teacher B | Perfect! Now same for the water, did we add or take any water out of the bottle when we froze it? | eliciting, evaluating |
| Student | No, the cap stayed on! | |
| Teacher B | Exactly! So the same can be said for the water, just because we changed the shape or state of it, we still have the same amount of matter. | evaluating, telling |

| Categories | Percentage Mentioned by PSTs (N = 57) | Sample Quote |
|---|---|---|
| Have the teacher practice questioning | 43% | The goal was to get the teachers to practice engaging in student-led conversations that would allow them to explain and elaborate on their work. |
| Understand student thought processes | 41% | To elicit student responses that encouraged them to prove their claim with reasoning and support. To have students explain their thoughts. |
| Have students explain their thinking | 38% | To get my students to explain their work and justify their steps. |
| Guide to specific conclusion (e.g., teach/explain) | 16% | The goals were to lead the student in a discussion and hopefully help them to draw the correct conclusion. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Thompson, M.; Leonard, G.; Mikeska, J.N.; Lottero-Perdue, P.S.; Maltese, A.V.; Pereira, G.; Hillaire, G.; Waldron, R.; Slama, R.; Reich, J. Eliciting Learner Knowledge: Enabling Focused Practice through an Open-Source Online Tool. Behav. Sci. 2022, 12, 324. https://doi.org/10.3390/bs12090324
