Ethical Risk Factors and Mechanisms in Artificial Intelligence Decision Making

Abstract

1. Introduction

2. Literature Review

2.1. Research on the Ethical Risks of Artificial Intelligence Decision Making

2.2. Research on the Ethical Risk Governance of Artificial Intelligence Decision Making

3. Identification of Ethical Risk Factors for AI Decision Making Based on Grounded Theory

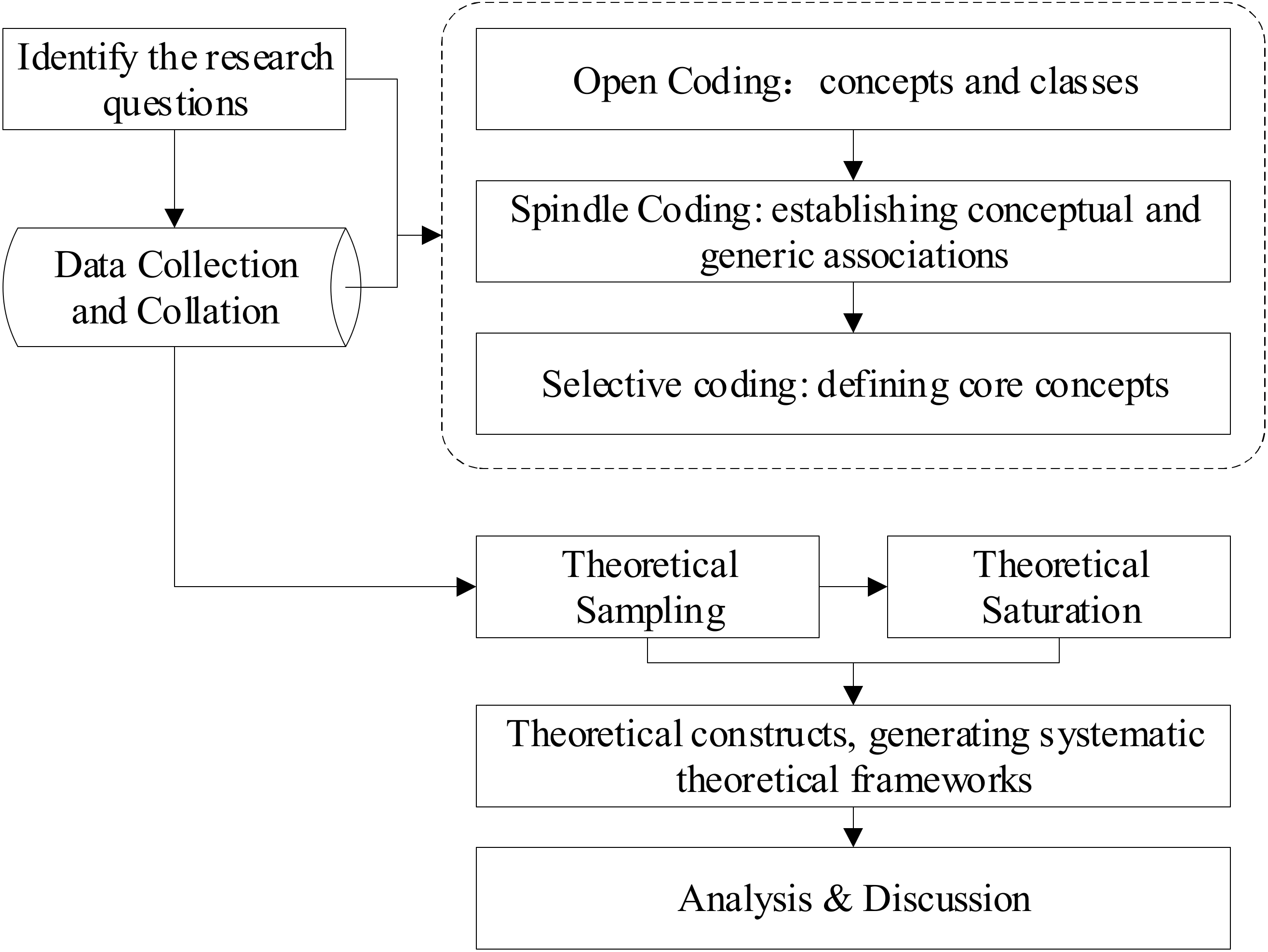

3.1. Research Methods

3.2. Data Collection and Collation

3.3. Research Process

3.3.1. Open Coding

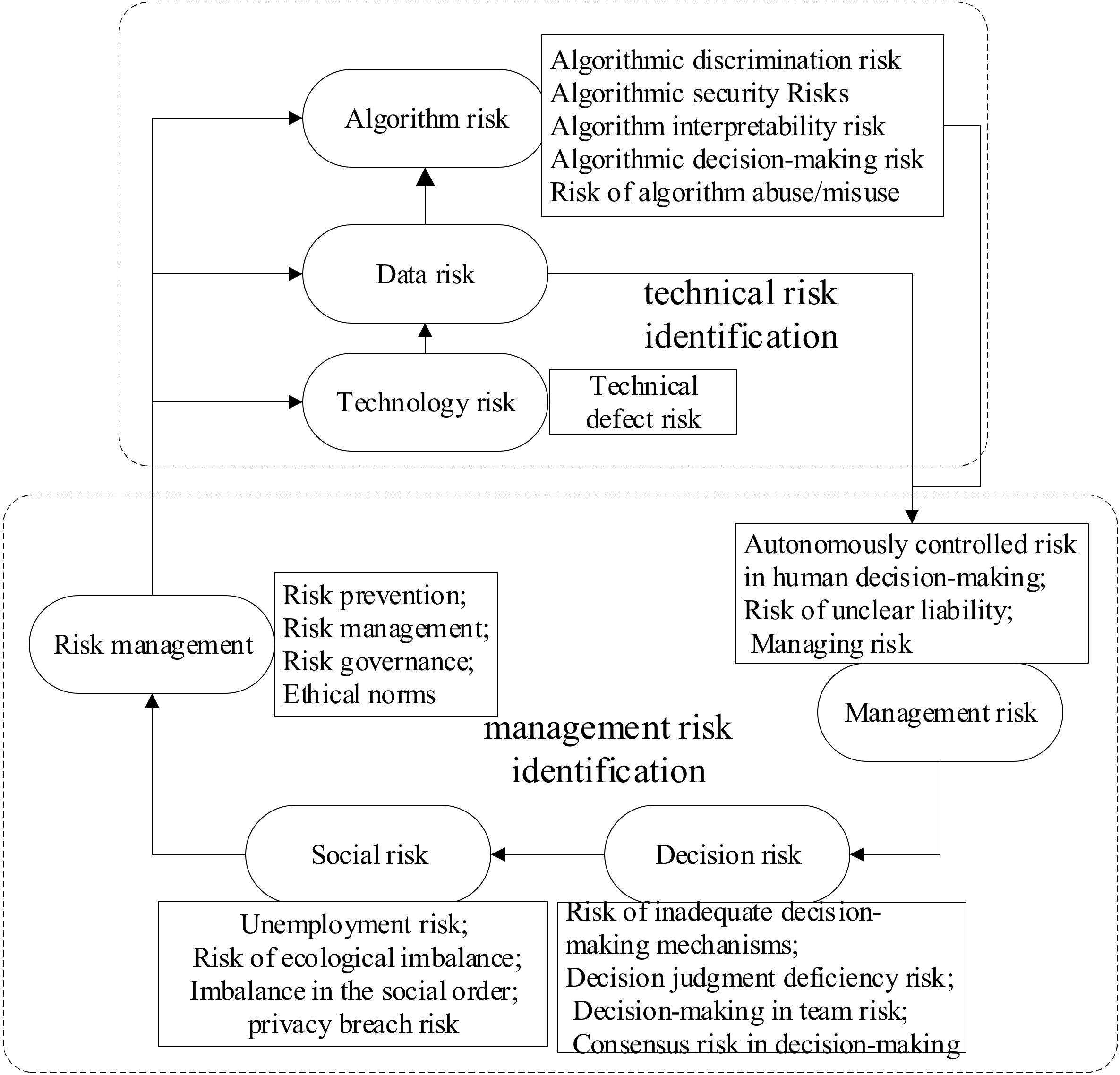

3.3.2. Axial Coding

3.3.3. Selective Coding and Theoretical Models

3.3.4. Theoretical Saturation

4. Mechanisms for Ethical Risks in Artificial Intelligence Decision Making Based on System Dynamics

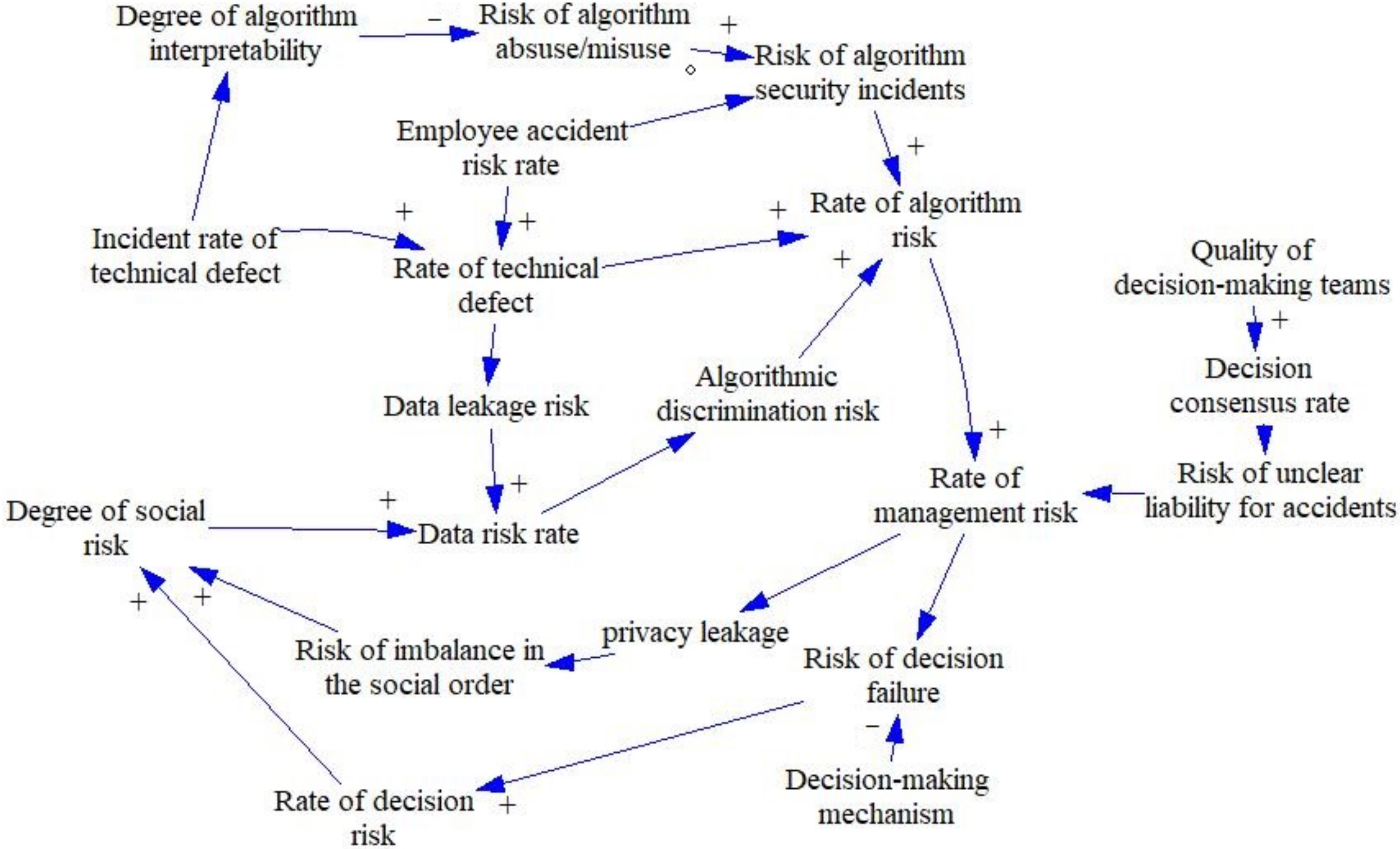

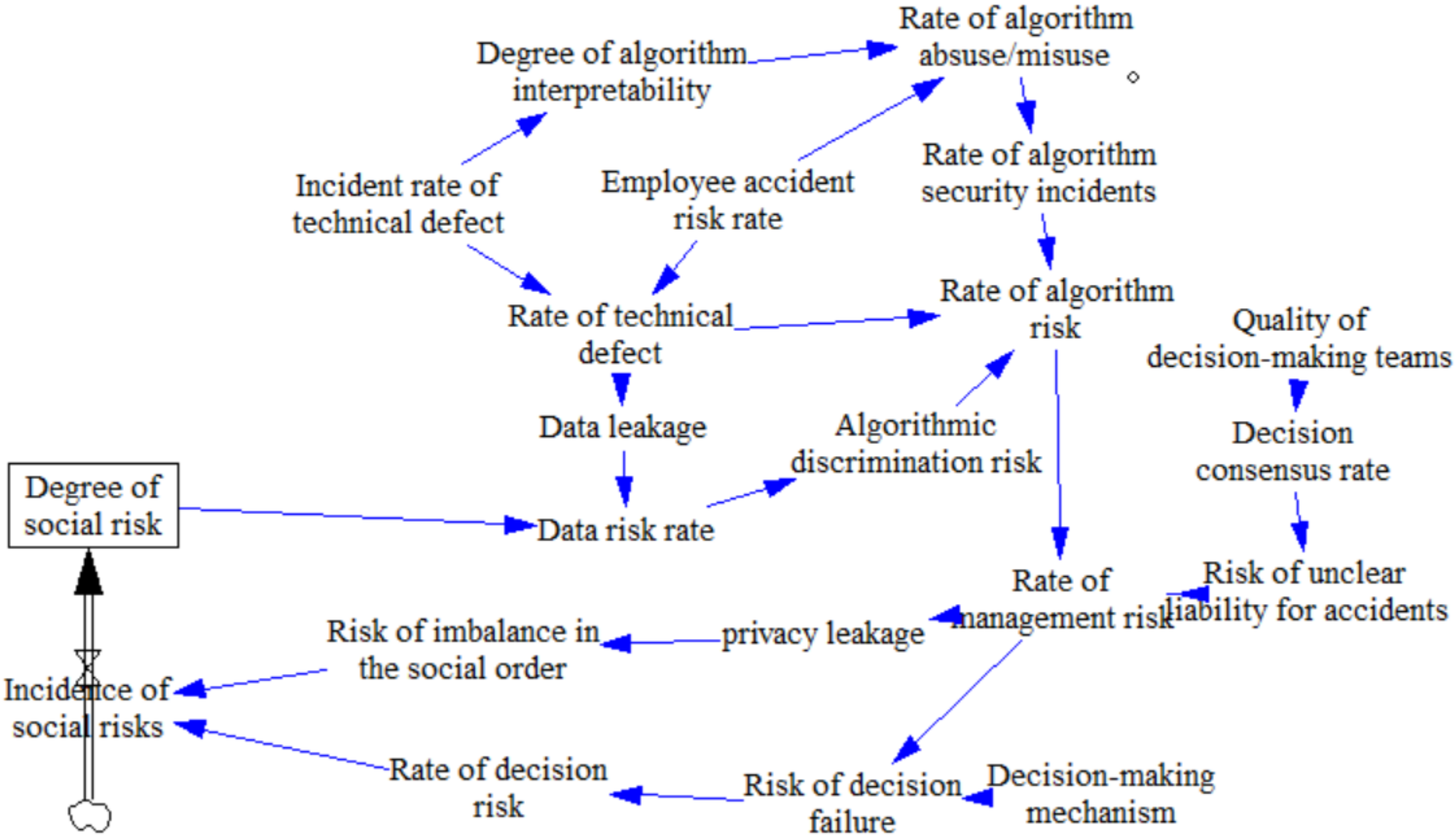

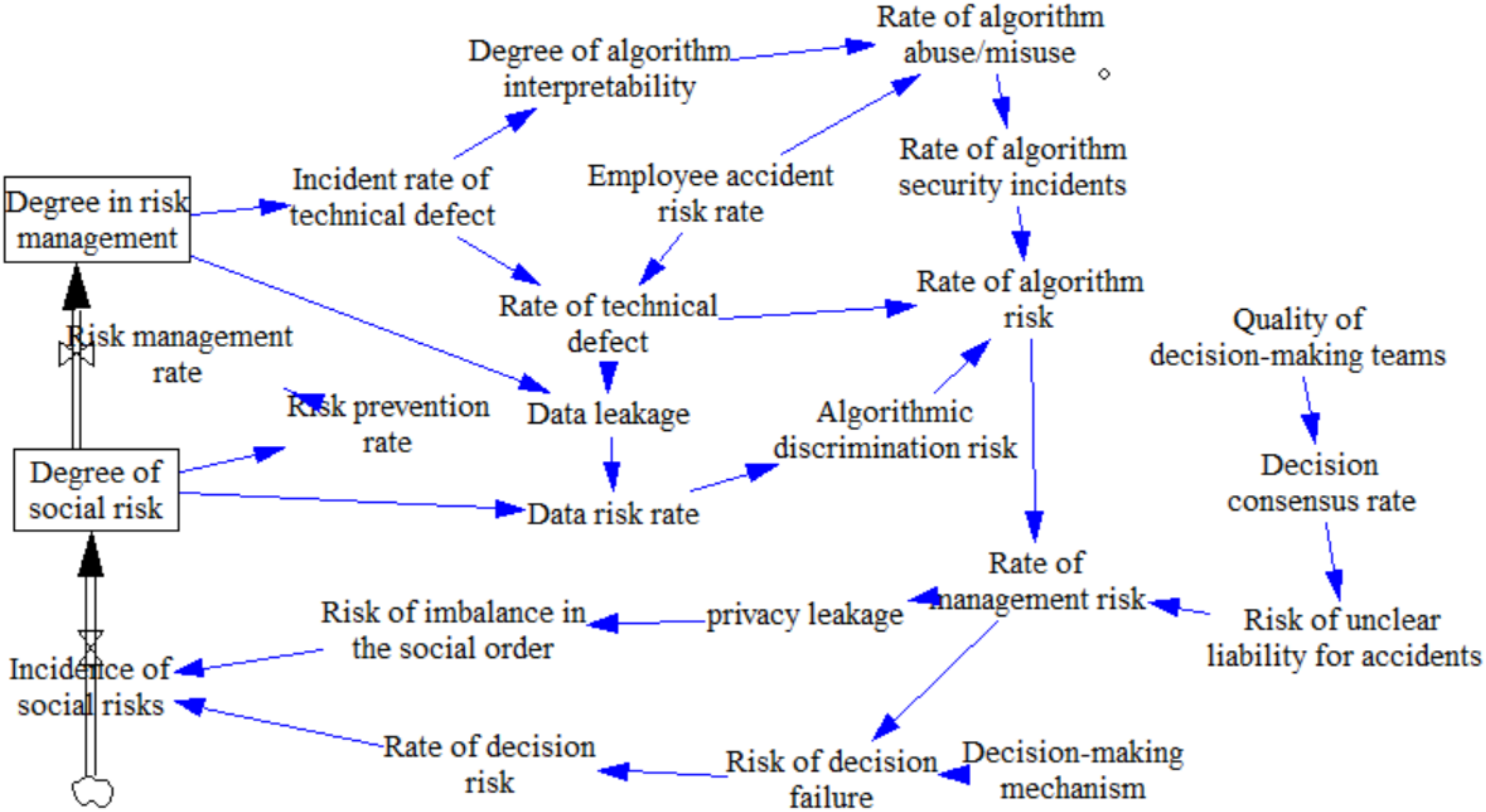

4.1. Causal Construction

4.1.1. Risk Subsystem Causality Analysis

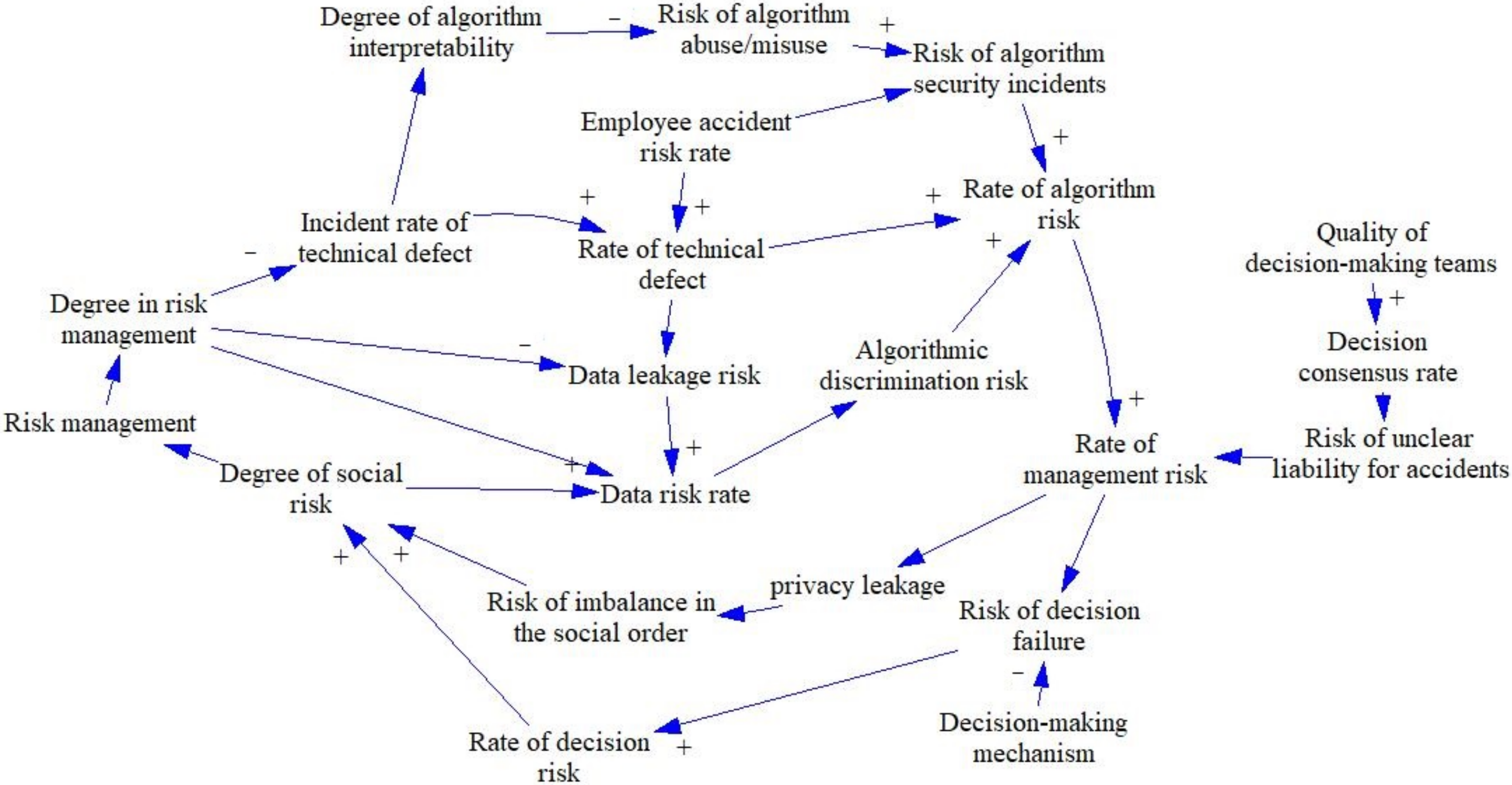

4.1.2. Risk Management Subsystem Causality Analysis

4.2. System Flow Diagram

4.3. Model Assumptions and Equations

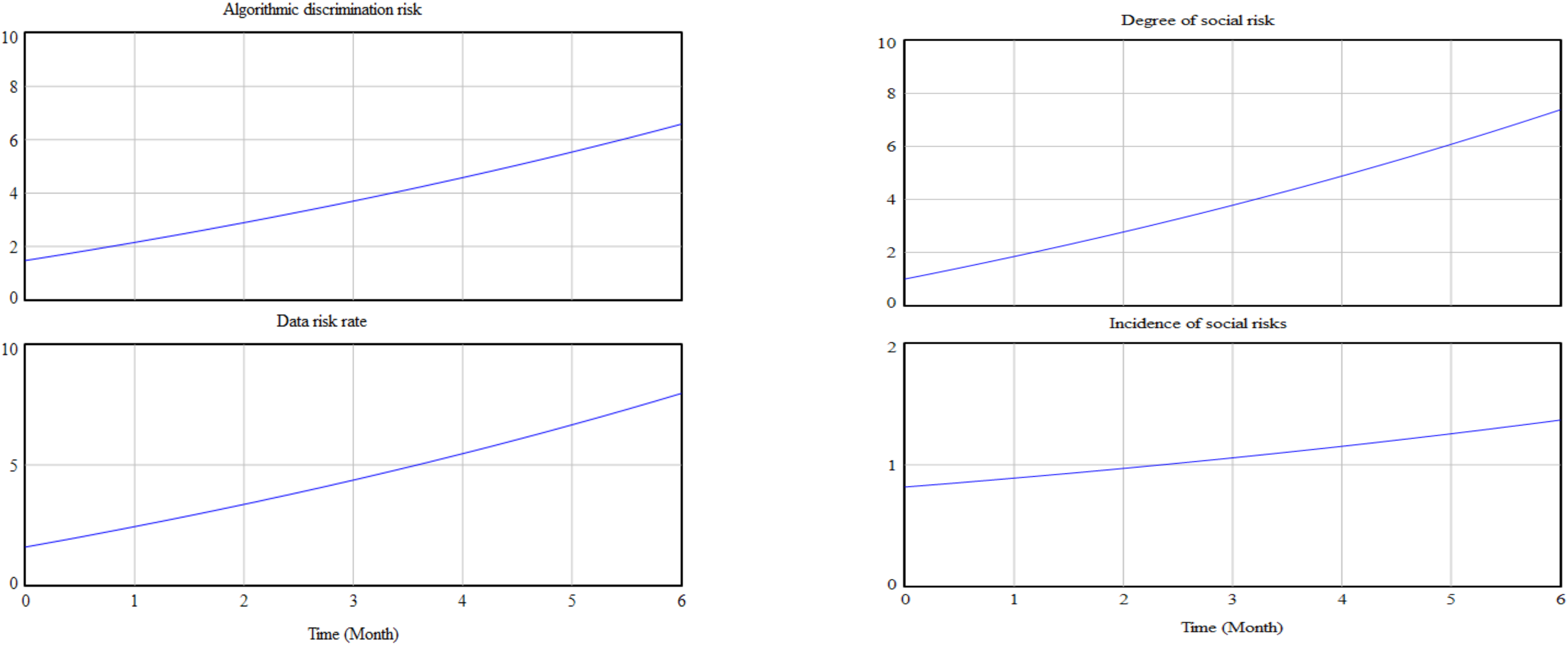

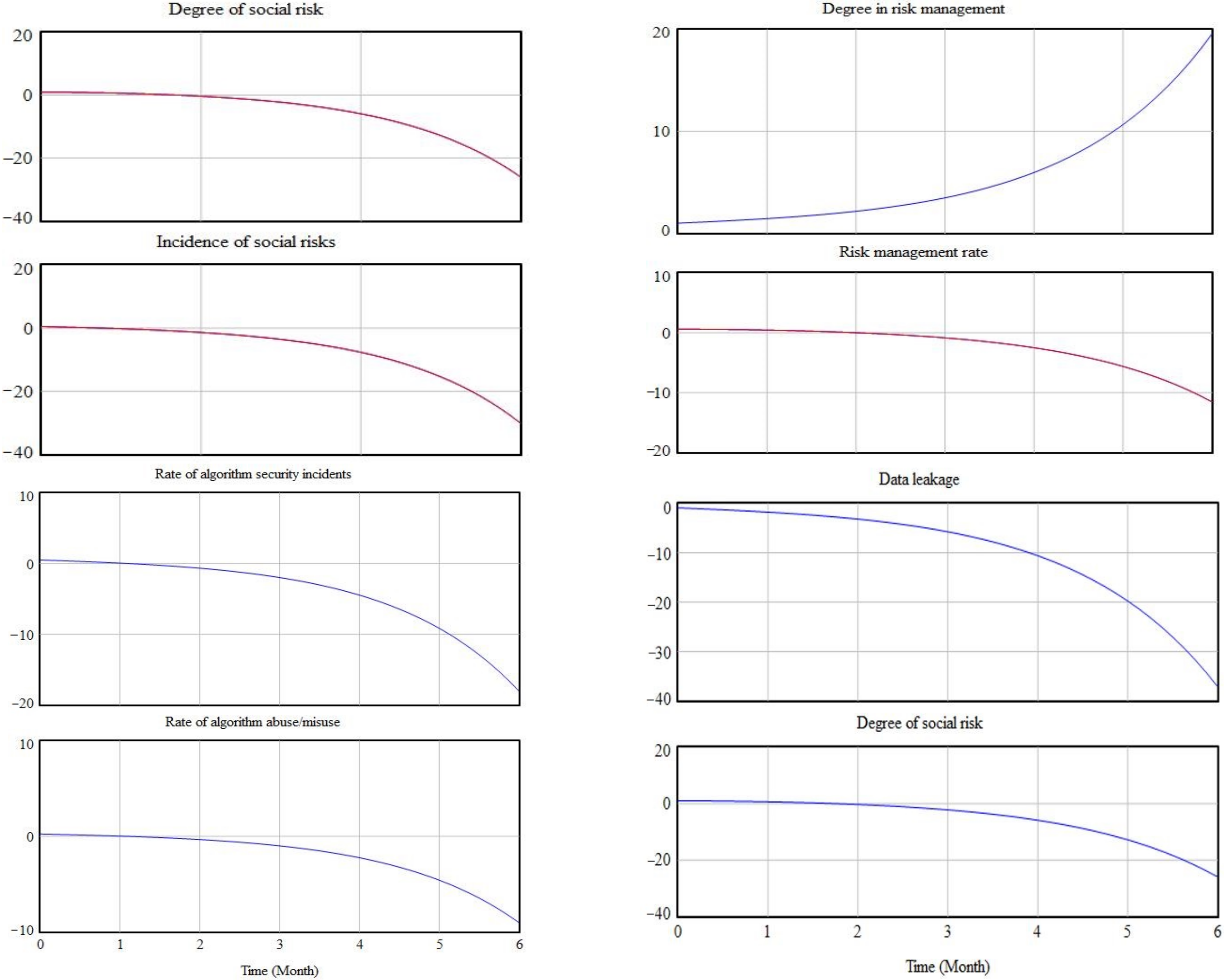

4.4. Simulation and Testing

5. Conclusions

5.1. Ethical Risk Factors for Artificial Intelligence Decision Making

5.2. Ethical Risks of Artificial Intelligence Decision Making and Mechanisms of Governance

5.3. Recommendations for the Governance of Ethical Risks in AI Decision Making

5.4. Contributions of this Research

6. Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Crompton, L. The decision-point-dilemma: Yet another problem of responsibility in human-AI interaction. J. Responsible Technol. 2021, 7–8, 100013.

- Yu, C.L.; Hu, W.L.; Liu, Y. US Releases New “The National Artificial Intelligence Research and Development Strategic Plan”. Secrecy Sci. Technol. 2019, 9, 35–37.

- Wang, X.F. EU Releases “Artificial Intelligence White Paper: On Artificial Intelligence – A European approach to excellence and trust”. Scitech China 2020, 6, 98–101.

- Zhongguancun Institute of Internet Finance. The European Commission’s Proposal for a 2021 Artificial Intelligence Act; Zhongguancun Institute of Internet Finance: Beijing, China, 2021.

- Dayang.com, Guangzhou Daily. Korea to Develop World’s First Code of Ethics for Robots; Guangzhou Daily: Guangzhou, China, 2007.

- Sadie think tank. [Quick Comment] Foreign Countries Conduct Ethical and Moral Research on Artificial Intelligence at Multiple Levels. Available online: https://xueqiu.com/4162984112/135453621 (accessed on 20 August 2022).

- State Council. Notice of the State Council on the Issuance of the Development Plan for a New Generation of Artificial Intelligence; State Council: Beijing, China, 20 July 2017.

- Jiang, J. The main purpose and principles of Artificial Intelligence ethics under the perspective of risk. Inf. Commun. Technol. Policy 2019, 6, 13–16.

- Susan, F. Ethics of AI: Benefits and risks of artificial intelligence systems. Interesting Engineering. Available online: https://baslangicnoktasi.org/en/ethics-of-ai-benefits-and-risks-of-artificial-intelligence-systems/ (accessed on 20 August 2022).

- Turing, A.M. Computing Machinery and Intelligence. In Parsing the Turing Test; Springer: Dordrecht, The Netherlands, 2007; pp. 23–65.

- Yan, K.R. Risk of Artificial Intelligence and its Avoidance Path. J. Shanghai Norm. Univ. Philos. Soc. Sci. Ed. 2018, 47, 40–47.

- Chen, X.P. The Target, Tasks, and Implementation of Artificial Intelligence Ethics: Six Issues and the Rationale behind Them. Philos. Res. 2020, 9, 79–87+107+129.

- Marabelli, M.; Newell, S.; Handunge, V. The lifecycle of algorithmic decision-making systems: Organizational choices and ethical challenges. J. Strateg. Inf. Syst. 2021, 30, 101683.

- Arkin, R.C. Governing Lethal Behavior: Embedding Ethics in a Hybrid Deliberative/Reactive Robot Architecture – Part 1: Motivation and Philosophy. In Proceedings of the 3rd ACM/IEEE International Conference on Human Robot Interaction, Amsterdam, The Netherlands, 12–15 March 2008; pp. 121–128.

- Zhao, Z.Y.; Xu, F.; Gao, F.; Li, F.; Hou, H.M.; Li, M.W. Understandings of the Ethical Risks of Artificial Intelligence. China Soft Sci. 2021, 6, 1–12.

- Leibniz, G.W. Notes on Analysis: Past Master; Oxford University Press: Oxford, UK, 1984.

- Anderson, S.L. Asimov’s “three laws of robotics” and machine metaethics. Sci. Fict. Philos. Time Travel Superintelligence 2016, 22, 290–307.

- Joachim, B.; Elisa, O. Towards a unified list of ethical principles for emerging technologies. An analysis of four European reports on molecular biotechnology and artificial intelligence. Sustain. Futures 2022, 4, 100086.

- Wirtz, B.W.; Weyerer, J.C.; Kehl, I. Governance of artificial intelligence: A risk and guideline-based integrative framework. Gov. Inf. Q. 2022, 101685.

- Bonnefon, J.F.; Shariff, A.; Rahwan, I. The social dilemma of autonomous vehicles. Science 2016, 352, 1573–1576.

- Johann, C.B.; Kaneko, S. Is Society Ready for AI Ethical Decision Making? Lessons from a Study on Autonomous Cars. J. Behav. Exp. Econ. 2022, 98, 101881.

- Cartolovni, A.; Tomicic, A.; Mosler, E.L. Ethical, legal, and social considerations of AI-based medical decision-support tools: A scoping review. Int. J. Med. Inf. 2022, 161, 104738.

- Chen, L.; Wang, B.C.; Huang, S.H.; Zhang, J.Y.; Guo, R.; Lu, J.Q. Artificial Intelligence Ethics Guidelines and Governance System: Current Status and Strategic Suggestions. Sci. Technol. Manag. Res. 2021, 41, 193–200.

- Weinmann, M.; Schneider, C.; vom Brocke, J. Digital Nudging. Bus. Inf. Syst. Eng. 2016, 58, 433–436.

- Jian, G. Artificial Intelligence in Healthcare and Medicine: Promises, Ethical Challenges and Governance. Chin. Med. Sci. J. 2019, 34, 76–83.

- Stahl, B.C. Responsible innovation ecosystems: Ethical implications of the application of the ecosystem concept to artificial intelligence. Int. J. Inf. Manag. 2022, 62, 102441.

- Galaz, V.; Centeno, M.A. Artificial intelligence, systemic risks, and sustainability. Technol. Soc. 2021, 67, 101741.

- Marshall, C.; Rossman, G.B. Designing Qualitative Research: Guidance throughout an Effective Research Program; Chongqing University Publisher: Chongqing, China, 2019.

- Corbin, J.M.; Strauss, A.L. Procedures and Methods for the Formation of a Grounded Theory Based on Qualitative Research; Chongqing University Publisher: Chongqing, China, 2015.

- Flynn, S.V.; Korcuska, J.S. Grounded theory research design: An investigation into practices and procedures. Couns. Outcome Res. Eval. 2018, 9, 102–116.

- Li, X.; Su, D.Y. On the Ethical Risk Representation of Artificial Intelligence. J. Chang. Univ. Sci. Technol. Soc. Sci. 2020, 35, 13–17.

- Tan, J.S.; Yang, J.W. The Ethical Risk of Artificial Intelligence and Its Cooperative Governance. Chin. Public Adm. 2019, 10, 46–47.

- Zhang, Z.X.; Zhang, J.Y.; Tan, T.N. Analysis and countermeasures of ethical problems in artificial intelligence. Bull. Chin. Acad. Sci. 2021, 36, 1270–1277.

- Zhang, T.; Ma, H. System Dynamics Research on the Influencing Factors of Data Security in Artificial Intelligence. Inf. Res. 2021, 3, 1–10.

- Zhu, B.Z.; Tang, J.J.; Jiang, M.X.; Wang, P. Simulation and regulation of carbon market risk based on system dynamics. Syst. Eng. Theory Pract. 2022, 42, 1859–1872.

- Lo Piano, S. Ethical principles in machine learning and artificial intelligence: A case from the field and possible ways forward. Humanit. Soc. Sci. Commun. 2020, 7, 9.

| No | Initial Scope | Initial Concept |
|---|---|---|
| 1 | Algorithmic discrimination risk | Human-caused discrimination, data-driven discrimination, discrimination caused by machine self-learning, discriminatory algorithm design, non-discriminatory algorithm design, data bias, prejudice, discrimination, user equality |
| 2 | Algorithmic security risk | Algorithm vulnerabilities, malicious exploitation, algorithm design, training, algorithm opacity, algorithm uncontrollability, algorithm unreliability |
| 3 | Algorithm interpretability risk | Informed human interest and subjectivity, algorithmic transparency, algorithmic verifiability |
| 4 | Algorithmic decision-making risk | Algorithm prediction and incorrect decision making, unpredictability of algorithm results, algorithm termination mechanisms |
| 5 | Risk of algorithm abuse/misuse | Algorithm abuse, algorithm misuse, code insecurity, technical malpractice/misuse, over-reliance on algorithms |
| 6 | Technical defect risk | Limited technical competence, inadequate technical awareness, technical failures, inadequate technical manipulation, technical misuse, technical defects, technical immaturity, “black box”, technical uncertainty |
| 7 | Data risk | Hacking, compliant data behavior, biased data omissions, lack of hardware stability, data management gaps, poor data security, image recognition, voice recognition, smart home, data adequacy, false information |
| 8 | Privacy breach risk | Privacy breach due to data resource exploitation, privacy breach due to data management vulnerability, data breach, privacy breach, user knowledge, user consent |
| 9 | Managing risk | Deficiencies in the management of application subjects, inadequate risk management capabilities, lack of supervision, legal loopholes, poor risk-management capabilities, inadequate safety and security measures, inadequate liability mechanisms |
| 10 | Unemployment risk | Machines replacing humans, mass unemployment |
| 11 | Risk of ecological imbalance | High energy consumption in the development of AI, problem of asymmetry in biodiversity [31], disharmony between man and nature |
| 12 | Imbalance in the social order | Imbalance in the social order, social stratification and solidification due to technological wealth disparity, imbalance in human–computer relations, social order, disruption of equity, uncontrolled ethical norms [32], social discrimination, digital divide, life safety, health |
| 13 | Autonomously controlled risk in human decision making | Substitution for human decision making, machine emotions, AI entrusted with the ability to make decisions on human affairs, lack of ethical judgment on decision outcomes, participants and influencers of human decisions, changes in the rights of decision subjects |
| 14 | Risk governance | Educational reform, ethical norms, technical support, legal regulation, international cooperation [33] |
| 15 | Risk of unclear liability | Improper attribution of responsibility, unclear attribution of responsibility for safety, debate over the identification of rights and responsibilities of smart technologies, review and identification of attribution of responsibility, complex ethical subject matter [34] |
| 16 | Risk of inadequate decision-making mechanisms | Inadequate ethical norms and frameworks, inadequate ethical institution building |
| 17 | Decision judgment deficiency risk | Inadequate ethical judgment, poorly described algorithms for ethical implications, faulty instructions, complex algorithmic models, human-centered ethical decision-making frameworks |
| 18 | Decision making in team risk | Expert governance structures reveal limitations and shortcomings, illogical expert decision-making structures, low levels of expert accountability |
| 19 | Consensus risk in decision making | Humans often disagree on solutions to real ethical dilemmas, no consensus, a crisis of confidence |
| 20 | Risk prevention | Enhance bottom-line thinking and risk awareness, strengthen the study and judgment of potential risks of AI development, carry out timely and systematic risk monitoring and assessment, establish an effective risk warning mechanism, improve the ability to control and dispose of ethical AI risks |
| 21 | Risk management | Awareness and culture of ethical risk management; establish a risk management department; risk identification, assessment, and handling; establish an ethical risk oversight department; develop internal policies and systems related to ethical risks; establish open lines of communication and consultation; establish a review mechanism for partners and risk reporting; focus on cultural factors and the significance of ethical risk management; governance with coordination |
| 22 | Ethical norms | Fairness, justice, harmony, security, accountability, traceability, reliability, control, right to control, good governance, social wellbeing |

| No | Main Scope | Initial Scope |
|---|---|---|
| 1 | Algorithm risk | Algorithmic discrimination risk; algorithmic security risk; algorithm interpretability risk; algorithmic decision-making risk; risk of algorithm abuse/misuse |
| 2 | Data risk | Data risk |
| 3 | Technology risk | Technical defect risk |
| 4 | Social risk | Unemployment risk; risk of ecological imbalance; imbalance in the social order; privacy breach risk |
| 5 | Management risk | Autonomously controlled risk in human decision making; risk of unclear liability; managing risk |
| 6 | Decision risk | Risk of inadequate decision-making mechanisms; decision judgment deficiency risk; decision making in team risk; consensus risk in decision making |
| 7 | Risk management | Risk prevention; risk management; risk governance; ethical norms |
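The grouping in the table above is a many-to-one mapping from initial scopes to main scopes. As a minimal sketch (a hypothetical helper, not part of the paper's method), it can be held as a plain dictionary and inverted so that any initial scope can be traced to its main scope, with a check that no initial scope was assigned to two main scopes. The names are copied from the table, with only capitalization normalized; `MAIN_SCOPES` and `initial_to_main` are illustrative names.

```python
from collections import Counter

# Main scope -> initial scopes, transcribed from the grouping table.
MAIN_SCOPES: dict[str, list[str]] = {
    "Algorithm risk": [
        "Algorithmic discrimination risk",
        "Algorithmic security risk",
        "Algorithm interpretability risk",
        "Algorithmic decision-making risk",
        "Risk of algorithm abuse/misuse",
    ],
    "Data risk": ["Data risk"],
    "Technology risk": ["Technical defect risk"],
    "Social risk": [
        "Unemployment risk",
        "Risk of ecological imbalance",
        "Imbalance in the social order",
        "Privacy breach risk",
    ],
    "Management risk": [
        "Autonomously controlled risk in human decision making",
        "Risk of unclear liability",
        "Managing risk",
    ],
    "Decision risk": [
        "Risk of inadequate decision-making mechanisms",
        "Decision judgment deficiency risk",
        "Decision making in team risk",
        "Consensus risk in decision making",
    ],
    "Risk management": [
        "Risk prevention",
        "Risk management",
        "Risk governance",
        "Ethical norms",
    ],
}


def initial_to_main(mapping: dict[str, list[str]]) -> dict[str, str]:
    """Invert main -> initial scopes into initial -> main scope.

    Raises ValueError if an initial scope appears under more than one
    main scope, since the grouping is meant to be many-to-one.
    """
    counts = Counter(scope for scopes in mapping.values() for scope in scopes)
    duplicates = [scope for scope, n in counts.items() if n > 1]
    if duplicates:
        raise ValueError(f"assigned to several main scopes: {duplicates}")
    return {scope: main for main, scopes in mapping.items() for scope in scopes}


if __name__ == "__main__":
    lookup = initial_to_main(MAIN_SCOPES)
    print(len(lookup), "initial scopes in", len(MAIN_SCOPES), "main scopes")
    print(lookup["Privacy breach risk"])  # Social risk
```

The 22 initial scopes recovered this way match the 22 rows of the open-coding table, which is a quick consistency check between the two coding stages.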

| No | Variable | Type | Relationship Equation |
|---|---|---|---|
| 1 | Quality of decision-making teams | Constant | 1 |
| 2 | Employee accident risk rate | Constant | 0.01 |
| 3 | Decision-making mechanism | Constant | 0.8 (assuming a 0.2 flaw in the decision-making mechanism) |
| 4 | Incident rate of a technical defect | Constant | 0.2 (technical risk management can eliminate most of the risk of technical defects) |
| 5 | Degree of algorithm interpretability | Auxiliary variable | “The incident rate of technical defect” × 0.5 + 0.2 (design discrimination in the algorithm itself + algorithmic black box issues) |
| 6 | Rate of algorithm abuse/misuse | Auxiliary variable | “Employee accident risk rate” + “Degree of algorithm interpretability” |
| 7 | Rate of algorithm security incidents | Auxiliary variable | “The rate of algorithm abuse/misuse” × 2 (the algorithm abuse/misuse rate accelerates algorithm security incidents) |
| 8 | Decision consensus rate | Auxiliary variable | “Quality of decision-making teams” × 0.8 (assumes 80% consistency of decision making in absolute teams) |
| 9 | Risk of unclear liability for accidents | Auxiliary variable | “Decision consensus rate” × 0.2 (the higher the consensus rate of decision making, the lower the risk of liability accidents) |
| 10 | Algorithmic discrimination risk | Auxiliary variable | “Data risk rate” × 0.8 + 0.2 (much of the algorithmic discrimination comes from input data + algorithmic design discrimination) |
| 11 | Rate of a technical defect | Auxiliary variable | “The incident rate of technical defect” × 0.95 + “Employee accident risk rate” × 0.05 (most of this rate is due to technical defects and a small part to problems with the designers themselves) |
| 12 | Rate of algorithm risk | Auxiliary variable | “The rate of algorithm security incidents” + “The rate of technical defect” × 0.1 + “Algorithmic discrimination risk” × 0.1 (beyond the risk rates summarized from current data, the algorithmic risk rate also allows for risks that future technologies and algorithms may cause) |
| 13 | Rate of management risk | Auxiliary variable | “Risk of unclear liability for accidents” + “The rate of algorithm risk” + 0.2 (unclear responsibility for accidents and algorithmic risks both contribute to management failures, in addition to the risks inherent in management) |
| 14 | Data leakage | Auxiliary variable | “The rate of technical defect” × 0.5 + 0.2 |
| 15 | Data risk rate | Auxiliary variable | “Data leakage” × 2 + “Degree of social risk” (data breaches accelerate data risk and put the generated data at high risk; the level of social risk also increases data risk) |
| 16 | Risk of imbalance in the social order | Auxiliary variable | “Privacy leakage” × 0.3 + 0.1 (privacy breaches can create social injustice by causing citizen panic and enabling problems such as big-data price discrimination against loyal customers) |
| 17 | Privacy leakage | Auxiliary variable | “The rate of management risk” × 0.9 + 0.1 (privacy breaches are largely the result of mismanagement) |
| 18 | Risk of decision failure | Auxiliary variable | “The rate of management risk” × 0.9 − “Decision-making mechanism” (management failures can lead to decision failure; a decision-making mechanism of 0.5 can reduce the risk of poor decision making by at least half) |
| 19 | Rate of decision risk | Auxiliary variable | “Risk of decision failure” × 0.9 + 0.1 (decision failure is a large part of the cause of decision risk) |
| 20 | Incidence of social risks | Rate variable | “The rate of decision risk” + “The risk of imbalance in the social order” + 0.1 |
| 21 | Degree of social risk | Level variable | INTEG (“Incidence of social risks”, 1) |
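To show how the equations in the table above chain together, the following sketch evaluates the auxiliary variables in dependency order and integrates the single level variable, “Degree of social risk”, starting from 1. It is a hedged, literal transcription, not the authors' simulation model: forward Euler integration, a time step of 1, and a 10-step horizon are assumptions, because the table does not report simulation settings. The Python function and constant names are illustrative. Note the feedback loop: “Data risk rate” reads the current level of social risk, so a higher level raises data risk, which propagates through algorithm, management, and decision risk back into the incidence of social risks.

```python
# Risk subsystem only (no governance loop). Assumed, not from the paper:
# forward Euler integration, time step dt = 1, horizon of 10 steps.

# Constants (rows 1-4)
QUALITY_OF_TEAMS = 1.0
EMPLOYEE_ACCIDENT_RATE = 0.01
DECISION_MECHANISM = 0.8
TECH_DEFECT_INCIDENT_RATE = 0.2


def incidence_of_social_risks(social_risk: float) -> float:
    """Evaluate the auxiliary chain (rows 5-20) for the current stock level."""
    interpretability = TECH_DEFECT_INCIDENT_RATE * 0.5 + 0.2            # row 5
    abuse = EMPLOYEE_ACCIDENT_RATE + interpretability                    # row 6
    security = abuse * 2                                                 # row 7
    consensus = QUALITY_OF_TEAMS * 0.8                                   # row 8
    unclear_liability = consensus * 0.2                                  # row 9
    tech_defect = (TECH_DEFECT_INCIDENT_RATE * 0.95
                   + EMPLOYEE_ACCIDENT_RATE * 0.05)                      # row 11
    data_leakage = tech_defect * 0.5 + 0.2                               # row 14
    data_risk = data_leakage * 2 + social_risk                           # row 15 (feedback)
    discrimination = data_risk * 0.8 + 0.2                               # row 10
    algorithm_risk = security + tech_defect * 0.1 + discrimination * 0.1  # row 12
    management_risk = unclear_liability + algorithm_risk + 0.2           # row 13
    privacy_leakage = management_risk * 0.9 + 0.1                        # row 17
    social_imbalance = privacy_leakage * 0.3 + 0.1                       # row 16
    decision_failure = management_risk * 0.9 - DECISION_MECHANISM        # row 18
    decision_risk = decision_failure * 0.9 + 0.1                         # row 19
    return decision_risk + social_imbalance + 0.1                        # row 20


def simulate(steps: int = 10, dt: float = 1.0, initial: float = 1.0) -> list[float]:
    """Integrate Degree of social risk = INTEG(incidence, 1) with forward Euler."""
    levels = [initial]
    for _ in range(steps):
        levels.append(levels[-1] + dt * incidence_of_social_risks(levels[-1]))
    return levels


if __name__ == "__main__":
    for t, level in enumerate(simulate()):
        print(f"t={t:2d}  degree of social risk = {level:.3f}")
```

With these coefficients the incidence of social risks rises by about 0.086 for each unit of social risk, so the stock increases at every step. As written, row 9 multiplies the decision consensus rate by a positive 0.2, so the risk of unclear liability rises with consensus, which appears to run against its own parenthetical; the sketch keeps the equation unchanged.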

| No | Variable | Type | Relationship Equation |
|---|---|---|---|
| 1 | Incident rate of a technical defect | Auxiliary variable | 1 − “Degree of risk management” + 0.1 (technology risk management reduces the risk of technical defects) |
| 2 | Data leakage | Auxiliary variable | “The rate of technical defect” − “Degree of risk management” (technical defects, including human factors, lead to data breaches, but risk management reduces the extent of the breach) |
| 3 | Risk prevention rate | Auxiliary variable | “Degree of social risk” × 0.9 + 0.1 (the higher the degree of social risk, the more ethical norms and management systems strengthen the risk prevention rate) |
| 4 | Risk management rate | Rate variable | “Risk prevention rate” × 0.5 + 0.1 |
| 5 | Degree of social risk | Level variable | INTEG (“Incidence of social risks” − “Risk management rate”, 1) |
| 6 | Degree of risk management | Level variable | INTEG (1 − “Risk management rate”, 1) |
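The risk-management subsystem adds a second level variable and redefines two inputs of the risk subsystem. The sketch below couples them literally: rows 1 and 2 of the table above replace the constant technical-defect incident rate and the data-leakage equation, and the “Risk management rate” outflow is subtracted from the social-risk stock. The remaining risk equations are carried over from the risk-subsystem table. Forward Euler integration, a time step of 1, a 10-step horizon, and the `State` dataclass are assumptions for illustration, not settings reported in the paper.

```python
from dataclasses import dataclass

# Assumed, not from the paper: forward Euler, dt = 1, horizon of 10 steps.
EMPLOYEE_ACCIDENT_RATE = 0.01
DECISION_MECHANISM = 0.8
QUALITY_OF_TEAMS = 1.0


@dataclass
class State:
    social_risk: float = 1.0      # Degree of social risk, initial value 1
    risk_management: float = 1.0  # Degree of risk management, initial value 1


def flows(s: State) -> tuple[float, float]:
    """Return (d social_risk/dt, d risk_management/dt) for the current state."""
    # Governance overrides (rows 1-2 of the governance table)
    tech_incident = 1 - s.risk_management + 0.1
    tech_defect = tech_incident * 0.95 + EMPLOYEE_ACCIDENT_RATE * 0.05
    data_leakage = tech_defect - s.risk_management
    # Risk-subsystem chain, otherwise unchanged
    interpretability = tech_incident * 0.5 + 0.2
    security = (EMPLOYEE_ACCIDENT_RATE + interpretability) * 2
    unclear_liability = QUALITY_OF_TEAMS * 0.8 * 0.2
    data_risk = data_leakage * 2 + s.social_risk
    discrimination = data_risk * 0.8 + 0.2
    algorithm_risk = security + tech_defect * 0.1 + discrimination * 0.1
    management_risk = unclear_liability + algorithm_risk + 0.2
    privacy_leakage = management_risk * 0.9 + 0.1
    social_imbalance = privacy_leakage * 0.3 + 0.1
    decision_risk = (management_risk * 0.9 - DECISION_MECHANISM) * 0.9 + 0.1
    incidence = decision_risk + social_imbalance + 0.1
    # Governance flows (rows 3-6)
    prevention = s.social_risk * 0.9 + 0.1
    management_rate = prevention * 0.5 + 0.1
    return incidence - management_rate, 1 - management_rate


def simulate(steps: int = 10, dt: float = 1.0) -> list[State]:
    """Integrate both stocks with forward Euler from their initial values."""
    history = [State()]
    for _ in range(steps):
        prev = history[-1]
        d_social, d_mgmt = flows(prev)
        history.append(State(prev.social_risk + dt * d_social,
                             prev.risk_management + dt * d_mgmt))
    return history


if __name__ == "__main__":
    for t, st in enumerate(simulate()):
        print(f"t={t:2d}  social risk={st.social_risk:7.3f}  "
              f"risk management={st.risk_management:7.3f}")
```

None of the equations clamps values to [0, 1], so some quantities leave that range: at t = 0, with “Degree of risk management” equal to 1, data leakage evaluates to about −0.90, and under these assumed settings the social-risk stock turns negative within the 10-step horizon.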

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guan, H.; Dong, L.; Zhao, A. Ethical Risk Factors and Mechanisms in Artificial Intelligence Decision Making. Behav. Sci. 2022, 12, 343. https://doi.org/10.3390/bs12090343
