The Impact of Shared Information Presentation Time on Users’ Privacy-Regulation Behavior in the Context of Vertical Privacy: A Moderated Mediation Model

Abstract

1. Introduction

2. Literature Review and Research Hypothesis

2.1. Vertical Privacy

2.2. Privacy-Regulation Behavior

2.3. Privacy Process Model Theory

2.4. Information Presentation Time and Privacy-Regulation Behavior

2.5. Online Vigilance

2.6. Perceived Control

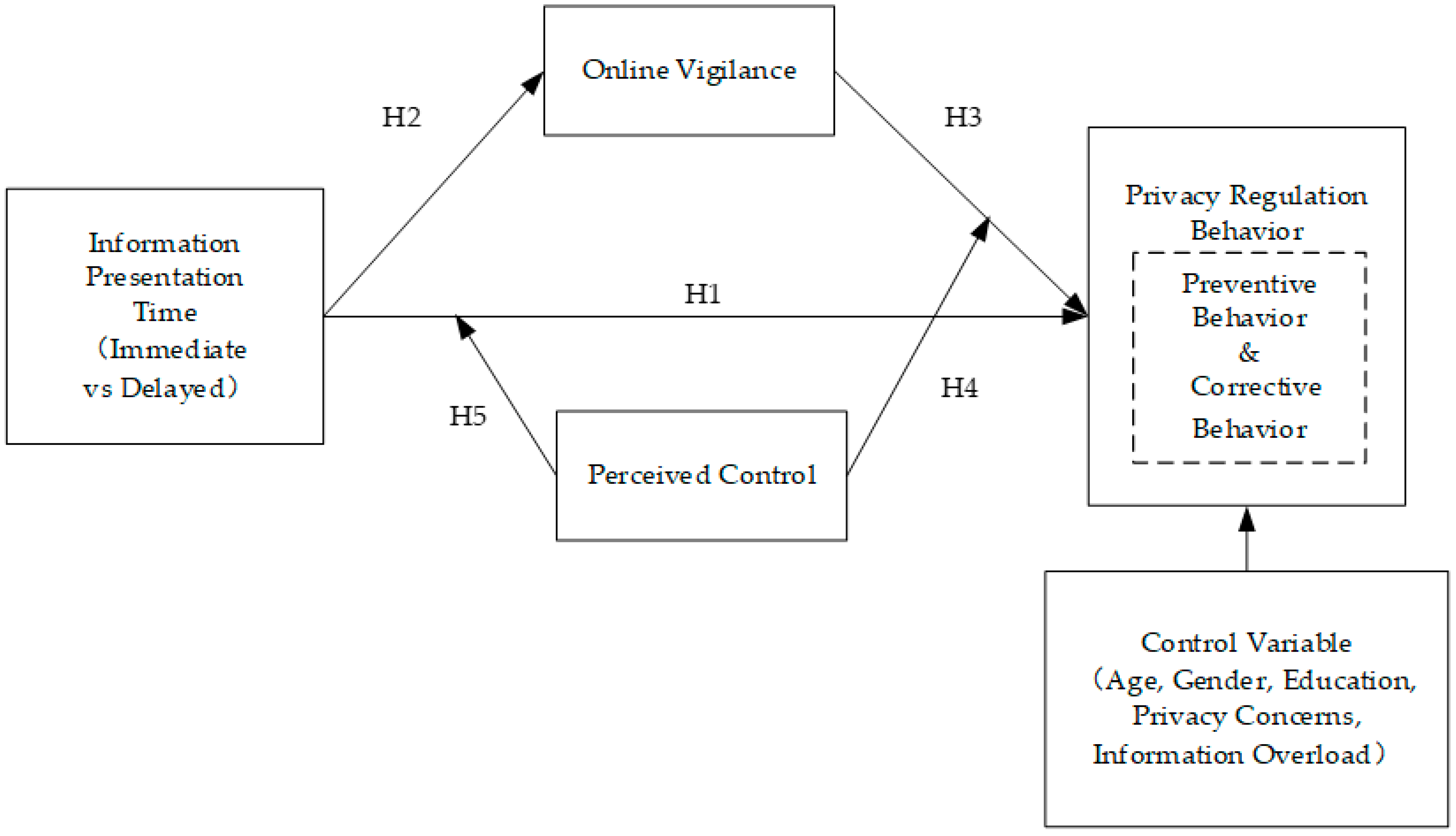

2.7. Research Model

3. Materials and Methods

3.1. Pre-Study

3.2. Study 1

3.2.1. Materials and Procedures

- (1) Materials

- (2) Procedures

3.2.2. Results of Study 1

- (1) Reliability and validity test

- (2) Multicollinearity diagnosis

- (3) Hypothesis test

3.3. Study 2: The Moderating Role of Perceived Control

3.3.1. Materials and Procedures

3.3.2. Results of Study 2

- (1)

- Reliability and validity test

- (2)

- Multicollinearity diagnosis

- (3)

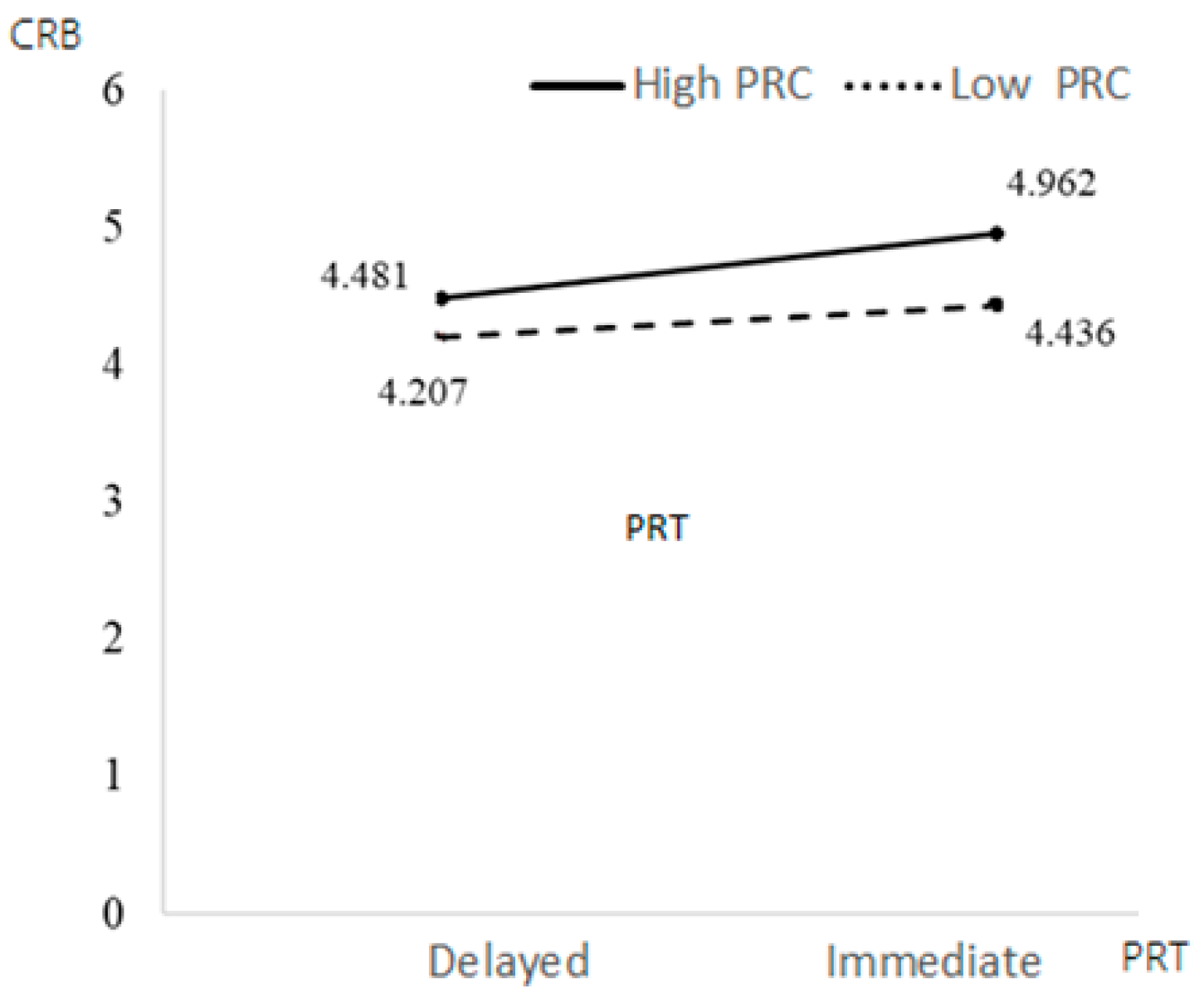

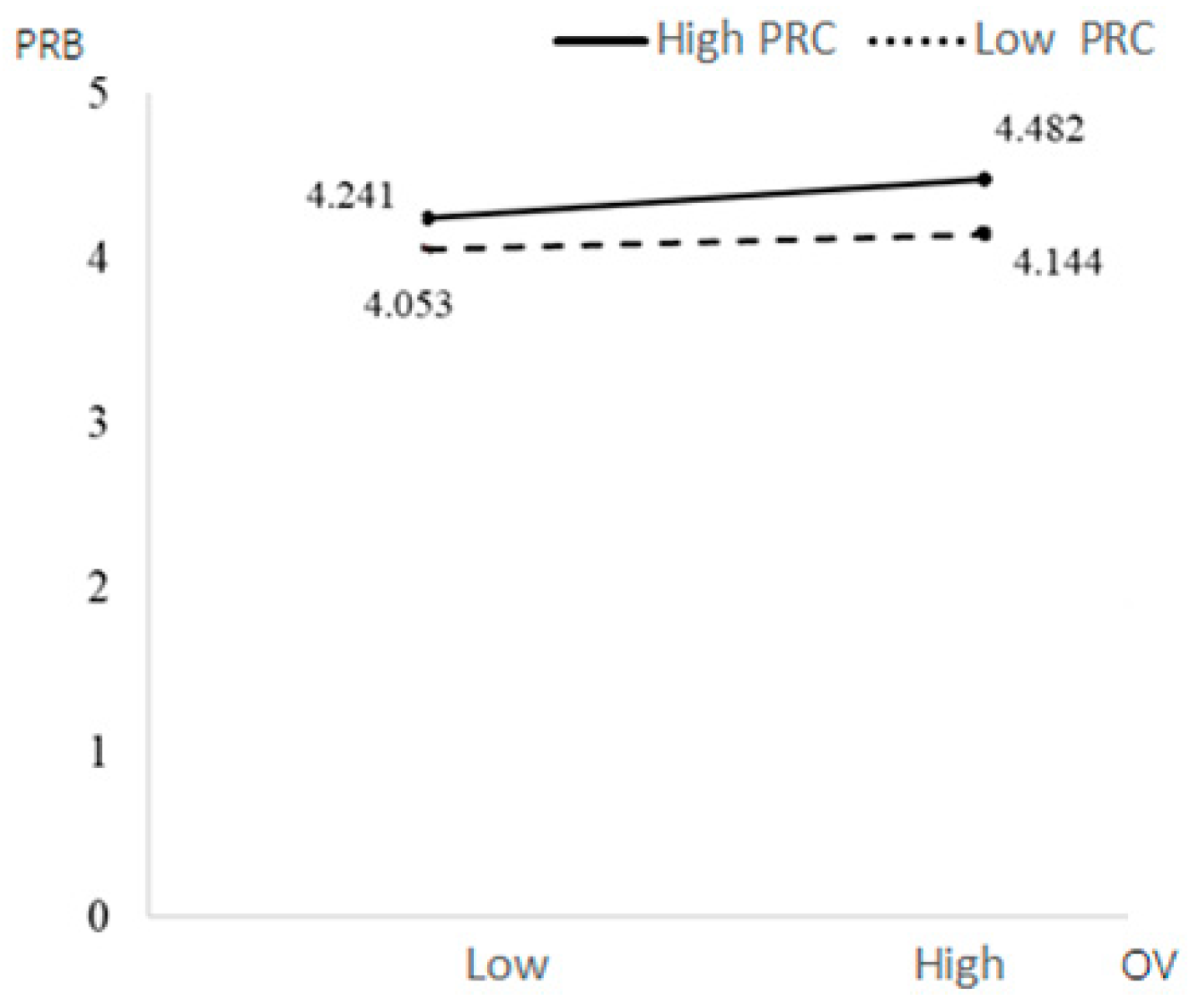

- Hypothesis test

4. Discussion

4.1. Theoretical Implications

4.2. Practical Implications

4.3. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Variables | Category | N | % |
|---|---|---|---|
| Gender | Male | 78 | 43.3 |
| | Female | 102 | 56.7 |
| Age (years) | Mean: 25.66 | | |
| | Range: 18–40 | | |
| | SD: 5.469 | | |
| Education level | Bachelor’s degree | 114 | 63.3 |
| | Master’s degree or above | 38 | 21.1 |
| | Junior college degree/other | 28 | 15.6 |
| Privacy concern | Low PC level (mean: 3.47, SD: 0.62) | 98 | 54.4 |
| | High PC level (mean: 5.27, SD: 0.66) | 82 | 45.6 |
| Information overload | Mean: 4.42 | | |
| | SD: 1.11 | | |

| | Effect | Standard Error | Lower Limit CI | Upper Limit CI |
|---|---|---|---|---|
| Indirect effect | 0.1765 | 0.0790 | 0.0326 | 0.3397 |
| Direct effect | 0.5960 | 0.1803 | 0.2402 | 0.9518 |

| Variables | Category | N | % |
|---|---|---|---|
| Gender | Male | 72 | 36.2 |
| | Female | 127 | 63.8 |
| Age (years) | Mean: 25.97 | | |
| | Range: 18–40 | | |
| | SD: 5.529 | | |
| Education level | Bachelor’s degree | 138 | 69.3 |
| | Master’s degree or above | 31 | 15.6 |
| | Junior college degree/other | 30 | 15.1 |
| Privacy concern | Low PC level (mean: 2.86, SD: 0.75) | 104 | 52.26 |
| | High PC level (mean: 4.88, SD: 0.74) | 95 | 47.74 |
| Information overload | Mean: 4.23 | | |
| | SD: 0.95 | | |

| | Corrective behavior | | | | Preventive behavior | | | |
|---|---|---|---|---|---|---|---|---|
| | Coeff. | SE | t | p | Coeff. | SE | t | p |
| Constant | 1.7593 | 0.8858 | 1.9862 | 0.0485 | 1.2437 | 0.9501 | 1.3090 | 0.1921 |
| PRT | 0.4811 | 0.1731 | 2.7788 | 0.0060 | 0.6906 | 0.1857 | 3.7186 | 0.0003 |
| OV | 0.2534 | 0.0587 | 4.3184 | 2.5 × 10⁻⁵ | 0.2411 | 0.0630 | 3.8300 | 0.0002 |
| PRC | −0.0226 | 0.054 | −0.4185 | 0.6760 | −0.0381 | 0.0579 | −0.6582 | 0.5112 |
| PRT × PRC | −0.2519 | 0.1220 | −2.0649 | 0.0403 | −0.3401 | 0.1309 | −2.5991 | 0.0101 |
| OV × PRC | −0.0912 | 0.0320 | −2.8526 | 0.0048 | −0.0882 | 0.0343 | −2.5707 | 0.0109 |
| Gender | 0.0247 | 0.1759 | 0.1405 | 0.8884 | −0.0145 | 0.1887 | −0.0771 | 0.9386 |
| Age | 0.0057 | 0.0157 | 0.3612 | 0.7183 | 0.0183 | 0.0168 | 1.0878 | 0.2781 |
| Education | 0.3723 | 0.1015 | 3.6690 | 0.0003 | 0.3296 | 0.1088 | 3.0285 | 0.0028 |
| PC | 0.0031 | 0.0693 | 0.0445 | 0.9646 | −0.0386 | 0.0743 | −0.5201 | 0.6036 |
| IO | 0.0264 | 0.0900 | 0.2930 | 0.7698 | 0.0042 | 0.0965 | 0.0436 | 0.9653 |
| R² | 0.3168 | | | | 0.3307 | | | |
| F | 8.7171 | | | | 9.2902 | | | |
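The t values in the coefficient table above can be cross-checked with simple arithmetic, since a regression t statistic is the coefficient divided by its standard error. The following is a minimal sketch, not the authors' analysis script: the numbers are copied from the corrective-behavior columns of the table, while the helper names (`t_statistic`, `check_rows`) and the tolerance of 0.02 are assumptions introduced only for illustration. Small differences are expected because the published coefficients and standard errors are rounded to four decimals.

```python
# (coefficient, standard error, reported t) for selected rows of the
# corrective-behavior model, copied from the table above.
ROWS = {
    "PRT": (0.4811, 0.1731, 2.7788),
    "OV": (0.2534, 0.0587, 4.3184),
    "PRT x PRC": (-0.2519, 0.1220, -2.0649),
    "OV x PRC": (-0.0912, 0.0320, -2.8526),
    "Education": (0.3723, 0.1015, 3.6690),
}


def t_statistic(coeff: float, se: float) -> float:
    """Return the t statistic implied by a coefficient and its standard error."""
    return coeff / se


def check_rows(rows: dict, tolerance: float = 0.02) -> dict:
    """Recompute t for each row and flag whether it agrees with the reported value.

    The tolerance is a hypothetical allowance for rounding in the published table.
    """
    results = {}
    for label, (coeff, se, reported_t) in rows.items():
        recomputed = t_statistic(coeff, se)
        results[label] = (recomputed, abs(recomputed - reported_t) <= tolerance)
    return results


if __name__ == "__main__":
    for label, (recomputed, ok) in check_rows(ROWS).items():
        status = "consistent" if ok else "check"
        print(f"{label:10s} t = {recomputed:8.4f}  ({status})")
```

The same check can be applied to the preventive-behavior columns by swapping in those coefficients and standard errors.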

| Moderating Variable | Mediating Variable | Dependent Variable | Mediating Effect | | | | Mediated Effect | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | | | Effect Value | Standard Deviation | Lower Limit | Upper Limit | Determine Index | SD | Lower Limit | Upper Limit |
| Low PRC | OV | CRB | 0.2526 | 0.0969 | 0.0797 | 0.4549 | −0.0581 | 0.0347 | −0.1402 | −0.0065 |
| High PRC | OV | CRB | 0.0704 | 0.0685 | −0.0647 | 0.2166 | | | | |
| Low PRC | OV | PRB | 0.2417 | 0.0860 | 0.0847 | 0.4168 | −0.0562 | 0.0357 | −0.1435 | −0.0063 |
| High PRC | OV | PRB | 0.0656 | 0.0787 | −0.1116 | 0.2091 | | | | |
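A common way to read a table of interval estimates like the one above is to ask whether each interval excludes zero. The sketch below applies that convention to the lower and upper limits copied from the table. It is an illustration under stated assumptions rather than the authors' procedure: the confidence level of the intervals is not stated in the table itself, and the helper name `excludes_zero` is hypothetical.

```python
# (moderator level, dependent variable, lower limit, upper limit) for the
# conditional mediating effects, copied from the table above.
CONDITIONAL_EFFECTS = [
    ("Low PRC", "CRB", 0.0797, 0.4549),
    ("High PRC", "CRB", -0.0647, 0.2166),
    ("Low PRC", "PRB", 0.0847, 0.4168),
    ("High PRC", "PRB", -0.1116, 0.2091),
]

# (dependent variable, lower limit, upper limit) for the index columns.
INDEX_INTERVALS = [
    ("CRB", -0.1402, -0.0065),
    ("PRB", -0.1435, -0.0063),
]


def excludes_zero(lower: float, upper: float) -> bool:
    """Return True when the interval [lower, upper] does not contain zero."""
    return lower > 0 or upper < 0


if __name__ == "__main__":
    for level, outcome, lower, upper in CONDITIONAL_EFFECTS:
        verdict = "excludes zero" if excludes_zero(lower, upper) else "contains zero"
        print(f"{level:8s} -> {outcome}: [{lower}, {upper}] {verdict}")
    for outcome, lower, upper in INDEX_INTERVALS:
        verdict = "excludes zero" if excludes_zero(lower, upper) else "contains zero"
        print(f"Index ({outcome}): [{lower}, {upper}] {verdict}")
```

Under this reading, the intervals for the low-PRC rows and for both index columns exclude zero, while the intervals for the high-PRC rows contain it.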


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhuang, L.; Sun, R.; Chen, L.; Tang, W. The Impact of Shared Information Presentation Time on Users’ Privacy-Regulation Behavior in the Context of Vertical Privacy: A Moderated Mediation Model. Behav. Sci. 2023, 13, 706. https://doi.org/10.3390/bs13090706
