A Digital Math Game and Multiple-Try Use with Primary Students: A Sex Analysis on Motivation and Learning

Abstract

1. Introduction

2. Background

2.1. Sex Differences and Learning in Digital Contexts

2.2. Sex, Motivation, and Related Affective Outcomes

2.3. Multiple-Attempt Use with Instructional Feedback

2.4. Motivation and Self-Determination

3. Materials and Methods

3.1. Study Objectives and Research Questions

- RQ1: What are the differences in the learning outcomes between male and female students using MatematicaST?

- RQ2: What differences exist in motivation in terms of interest (RQ2a), competence (RQ2b), and autonomy (RQ2c) between male and female students using MatematicaST?

- RQ3: What are the differences in the perceptions of effort (RQ3a), no pressure (RQ3b), and value (RQ3c) between male and female students using MatematicaST?

- RQ4: What differences exist in the effects of sex between the two feedback conditions, multiple-try feedback (MTF) and single-try feedback (STF)?

3.2. MatematicaST Game

3.3. Participants and Design

3.4. Procedure

3.5. Instruments

3.5.1. Math Tests

3.5.2. Motivational Questionnaire

4. Results

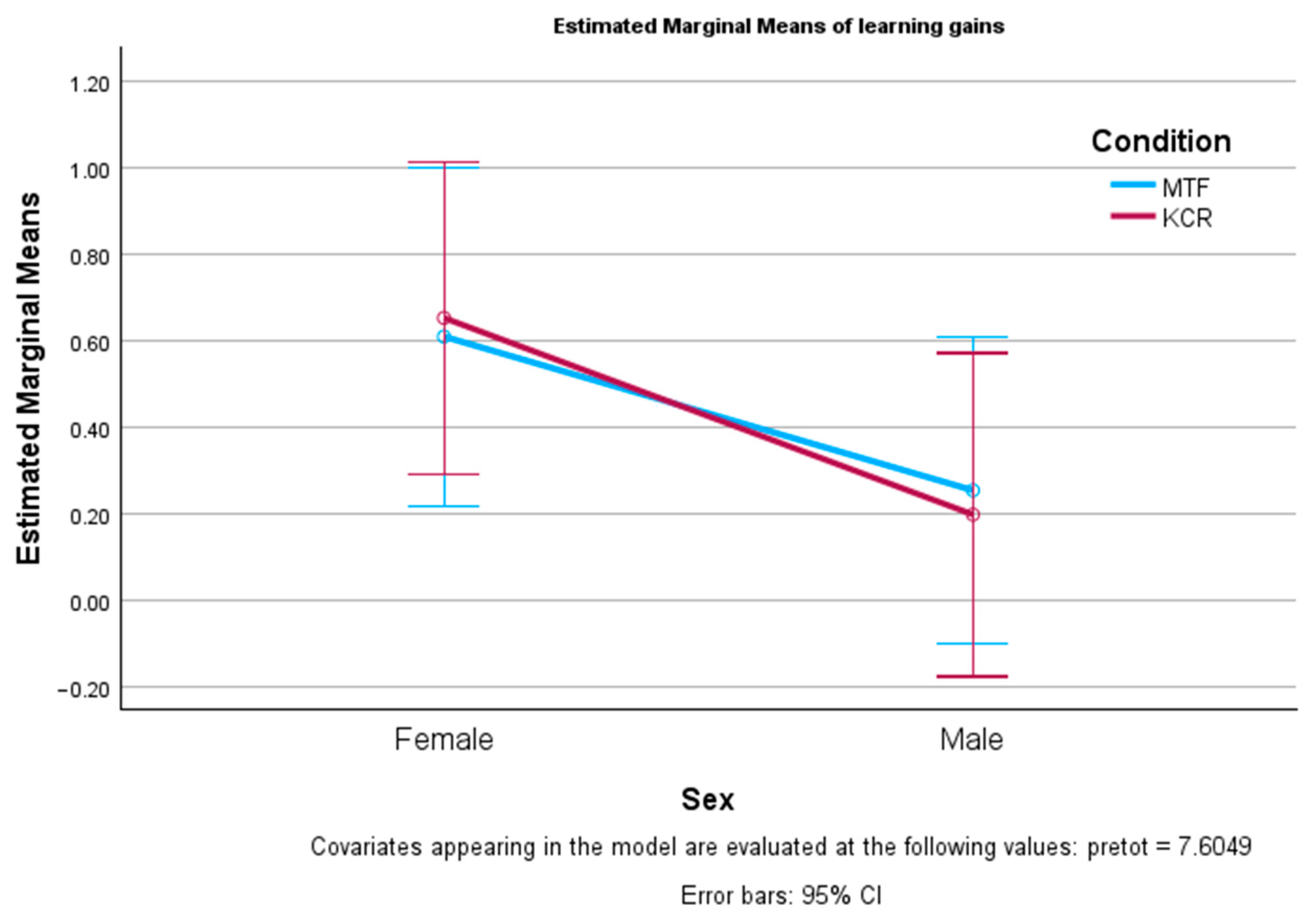

4.1. Learning by Sex

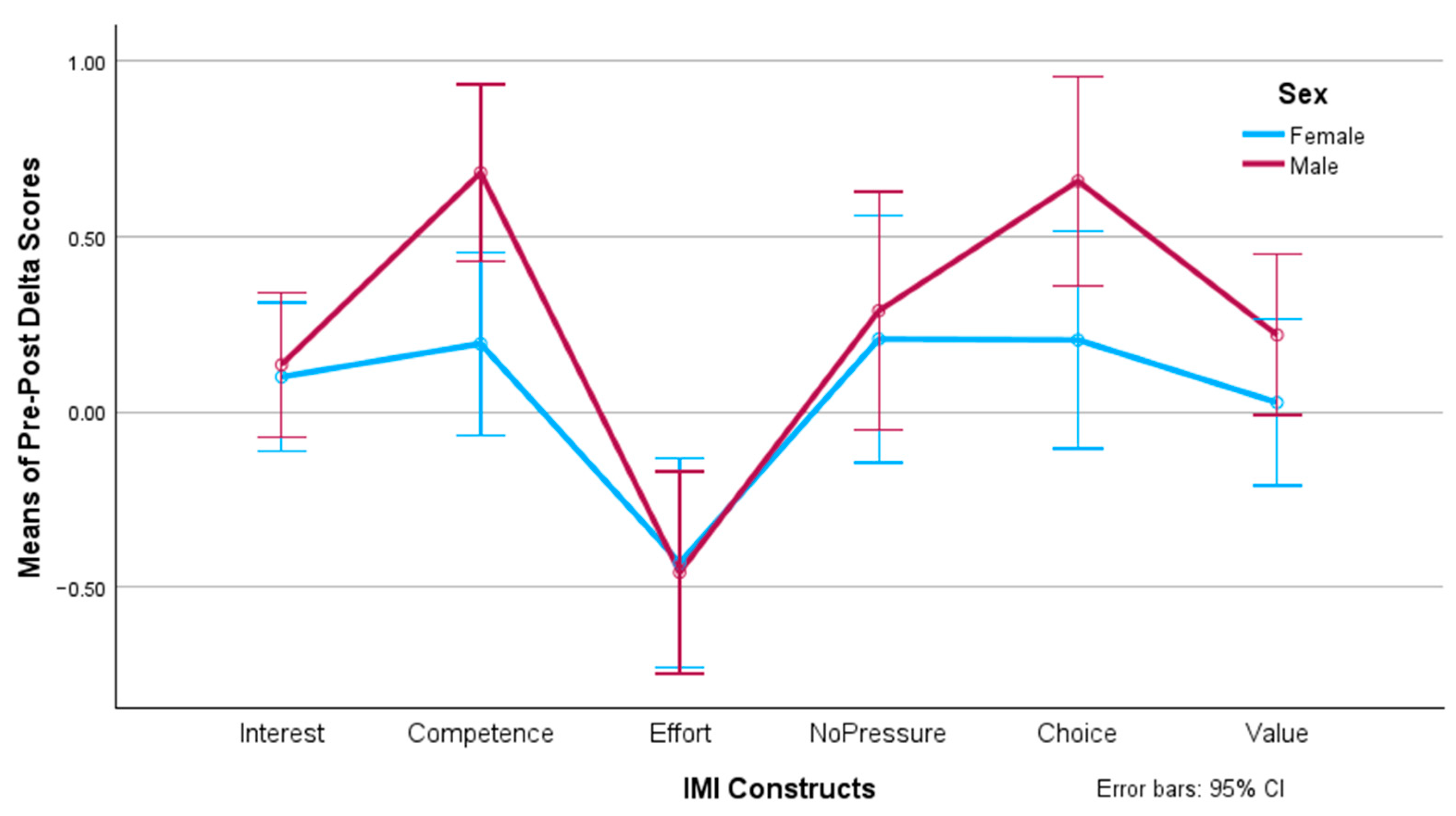

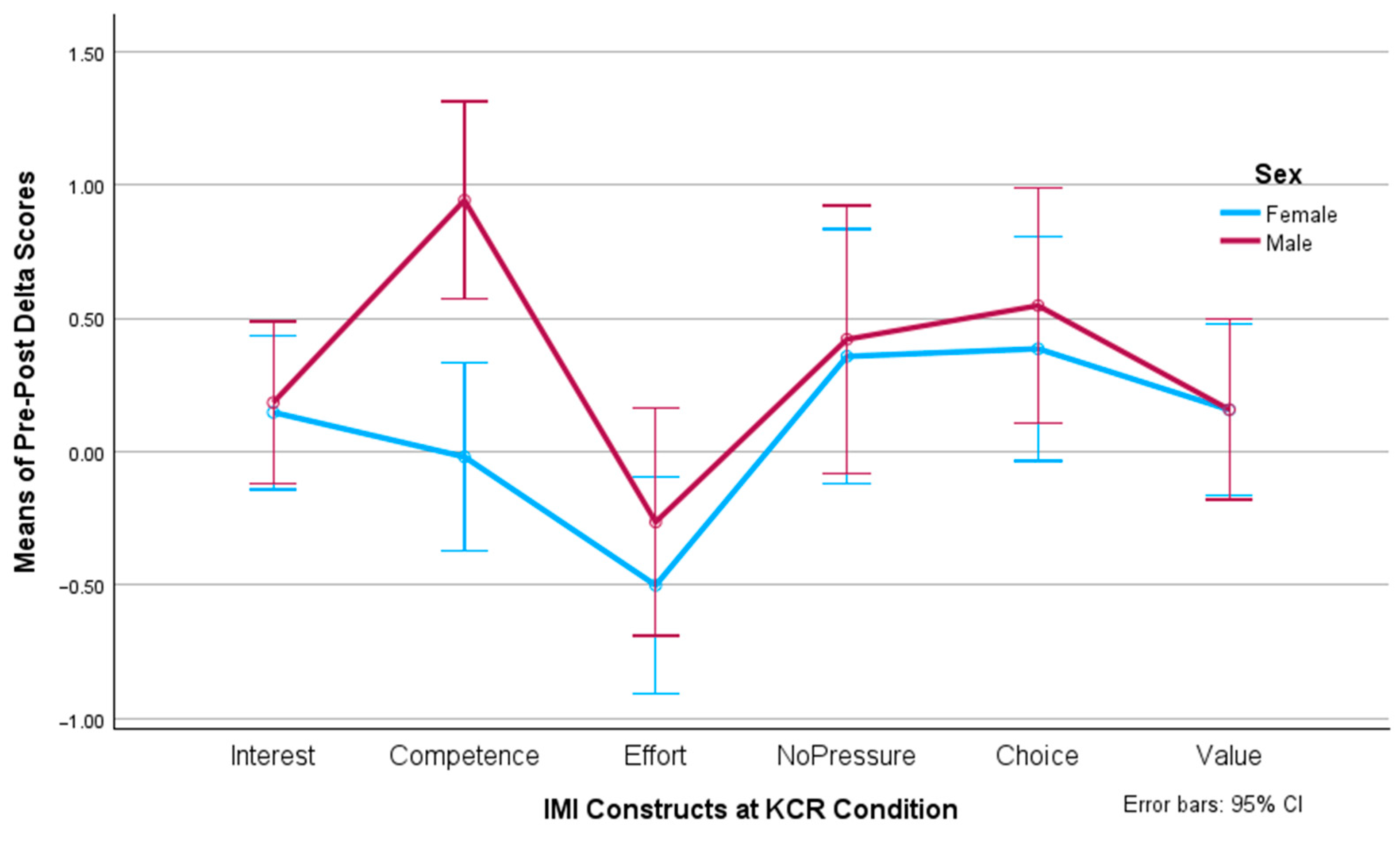

4.2. Sex and Motivation

5. Discussion

5.1. RQ1: What Are the Differences in the Learning Outcomes between Male and Female Students Using MatematicaST?

5.2. RQ2: What Differences Exist in Motivation in Terms of Interest (RQ2a), Competence (RQ2b), and Autonomy (RQ2c) between Male and Female Students Using MatematicaST?

5.3. RQ3: What Are the Differences in Perceptions of Effort (RQ3a), No Pressure (RQ3b), and Value (RQ3c) between Male and Female Students Using MatematicaST?

5.4. RQ4: What Differences Exist in the Effects of Sex between the Two Feedback Conditions (MTF and STF)?

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sex | Condition | Pre-Test Mean | Pre-Test SD | Post-Test Mean | Post-Test SD | Gain Mean | Gain SD | N |
|---|---|---|---|---|---|---|---|---|
| Female | MTF | 6.89 | 2.16 | 7.67 | 1.99 | 0.78 *** | 0.94 | 18 |
| Female | STF | 6.99 | 1.97 | 7.79 | 1.55 | 0.80 *** | 1.00 | 21 |
| Female | Total | 6.94 | 2.03 | 7.73 | 1.74 | 0.79 *** | 0.96 | 39 |
| Male | MTF | 8.54 | 0.80 | 8.58 | 0.83 | 0.03 | 0.74 | 23 |
| Male | STF | 7.83 | 1.53 | 7.97 | 1.57 | 0.14 | 0.94 | 19 |
| Male | Total | 8.22 | 1.22 | 8.30 | 1.25 | 0.08 | 0.82 | 42 |
| Total | MTF | 7.82 | 1.74 | 8.18 | 1.51 | 0.36 ** | 0.90 | 41 |
| Total | STF | 7.39 | 1.80 | 7.88 | 1.54 | 0.49 ** | 1.01 | 40 |
| Total | Total | 7.60 | 1.77 | 8.03 | 1.52 | 0.42 *** | 0.95 | 81 |
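As a reading aid for the learning table above, the per-sex "Total" gains can be recovered as sample-size-weighted means of the MTF and STF subgroup gains. The sketch below is only a consistency check on the published summary values; the function name `pooled_mean` and the dictionary layout are illustrative assumptions, not part of the study's analysis.

```python
# Subgroup summaries copied from the learning table: condition -> (N, mean gain).
female = {"MTF": (18, 0.78), "STF": (21, 0.80)}
male = {"MTF": (23, 0.03), "STF": (19, 0.14)}


def pooled_mean(groups):
    """Sample-size-weighted mean of the subgroup means (hypothetical helper)."""
    total_n = sum(n for n, _ in groups.values())
    return sum(n * mean for n, mean in groups.values()) / total_n


female_gain = pooled_mean(female)
male_gain = pooled_mean(male)

# Rounded to two decimals, these agree with the "Total" rows for each sex.
print(f"female: {female_gain:.2f}, male: {male_gain:.2f}")
```

Because the subgroup means are themselves rounded, small discrepancies in the second decimal are possible for other rows when the same check is repeated.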

| IMI Construct | Sex | Condition | Pre-Test Mean | Pre-Test SD | Post-Test Mean | Post-Test SD | Gain Mean | Gain SD | N |
|---|---|---|---|---|---|---|---|---|---|
| Interest | Female | MTF | 4.64 | 0.48 | 4.69 | 0.73 | 0.05 | 0.75 | 18 |
| Interest | Female | STF | 4.70 | 0.56 | 4.85 | 0.38 | 0.15 | 0.69 | 21 |
| Interest | Female | Total | 4.67 | 0.52 | 4.77 | 0.56 | 0.10 | 0.71 | 39 |
| Interest | Male | MTF | 4.56 | 0.60 | 4.64 | 0.67 | 0.08 | 0.63 | 23 |
| Interest | Male | STF | 4.58 | 0.59 | 4.77 | 0.44 | 0.18 | 0.59 | 19 |
| Interest | Male | Total | 4.57 | 0.58 | 4.70 | 0.57 | 0.13 | 0.60 | 42 |
| Interest | Total | MTF | 4.59 | 0.54 | 4.66 | 0.69 | 0.07 | 0.68 | 41 |
| Interest | Total | STF | 4.65 | 0.57 | 4.81 | 0.40 | 0.17 | 0.63 | 40 |
| Interest | Total | Total | 4.62 | 0.55 | 4.74 | 0.57 | 0.12 | 0.65 | 81 |
| Competence | Female | MTF | 3.96 | 0.83 | 4.38 | 0.74 | 0.41 * | 0.82 | 18 |
| Competence | Female | STF | 4.29 | 0.75 | 4.29 | 0.92 | −0.02 | 0.72 | 21 |
| Competence | Female | Total | 4.14 | 0.80 | 4.33 | 0.83 | 0.18 | 0.79 | 39 |
| Competence | Male | MTF | 4.13 | 0.78 | 4.56 | 0.69 | 0.42 * | 0.82 | 23 |
| Competence | Male | STF | 3.83 | 0.86 | 4.75 | 0.46 | 0.94 *** | 0.90 | 19 |
| Competence | Male | Total | 4.00 | 0.82 | 4.65 | 0.60 | 0.65 *** | 0.88 | 42 |
| Competence | Total | MTF | 4.06 | 0.80 | 4.48 | 0.71 | 0.41 ** | 0.81 | 41 |
| Competence | Total | STF | 4.07 | 0.83 | 4.51 | 0.77 | 0.44 *** | 0.93 | 40 |
| Competence | Total | Total | 4.06 | 0.81 | 4.49 | 0.73 | 0.42 *** | 0.87 | 81 |
| Effort | Female | MTF | 4.56 | 0.75 | 4.19 | 0.89 | −0.36 | 0.74 | 18 |
| Effort | Female | STF | 4.52 | 0.84 | 4.02 | 0.99 | −0.50 * | 0.87 | 21 |
| Effort | Female | Total | 4.54 | 0.79 | 4.10 | 0.94 | −0.44 ** | 0.80 | 39 |
| Effort | Male | MTF | 4.50 | 0.78 | 3.85 | 0.98 | −0.65 ** | 0.99 | 23 |
| Effort | Male | STF | 4.55 | 0.71 | 4.29 | 0.79 | −0.26 | 1.07 | 19 |
| Effort | Male | Total | 4.52 | 0.74 | 4.05 | 0.92 | −0.48 ** | 1.04 | 42 |
| Effort | Total | MTF | 4.52 | 0.76 | 4.00 | 0.95 | −0.52 *** | 0.89 | 41 |
| Effort | Total | STF | 4.54 | 0.77 | 4.15 | 0.90 | −0.39 * | 0.96 | 40 |
| Effort | Total | Total | 4.53 | 0.76 | 4.07 | 0.92 | −0.46 *** | 0.93 | 81 |
| No Pressure | Female | MTF | 3.92 | 0.79 | 3.97 | 0.90 | 0.06 | 1.26 | 18 |
| No Pressure | Female | STF | 4.05 | 1.00 | 4.40 | 0.90 | 0.36 | 0.91 | 21 |
| No Pressure | Female | Total | 3.99 | 0.90 | 4.21 | 0.92 | 0.22 | 1.08 | 39 |
| No Pressure | Male | MTF | 3.67 | 0.91 | 3.83 | 1.24 | 0.15 | 1.21 | 23 |
| No Pressure | Male | STF | 3.71 | 0.73 | 4.13 | 1.10 | 0.42 † | 0.98 | 19 |
| No Pressure | Male | Total | 3.69 | 0.83 | 3.96 | 1.18 | 0.27 † | 1.11 | 42 |
| No Pressure | Total | MTF | 3.78 | 0.86 | 3.89 | 1.09 | 0.11 | 1.22 | 41 |
| No Pressure | Total | STF | 3.89 | 0.89 | 4.27 | 1.00 | 0.39 * | 0.93 | 40 |
| No Pressure | Total | Total | 3.83 | 0.87 | 4.08 | 1.06 | 0.25 * | 1.09 | 81 |
| Choice | Female | MTF | 3.96 | 0.90 | 3.99 | 0.66 | 0.02 | 0.92 | 18 |
| Choice | Female | STF | 3.70 | 1.10 | 4.10 | 0.75 | 0.39 † | 0.97 | 21 |
| Choice | Female | Total | 3.82 | 1.01 | 4.05 | 0.70 | 0.22 | 0.95 | 39 |
| Choice | Male | MTF | 3.34 | 1.16 | 4.12 | 0.75 | 0.77 *** | 0.95 | 23 |
| Choice | Male | STF | 3.44 | 0.89 | 3.99 | 0.95 | 0.55 * | 1.03 | 19 |
| Choice | Male | Total | 3.38 | 1.04 | 4.06 | 0.84 | 0.67 *** | 0.98 | 42 |
| Choice | Total | MTF | 3.61 | 1.09 | 4.07 | 0.70 | 0.44 ** | 1.00 | 41 |
| Choice | Total | STF | 3.57 | 1.00 | 4.05 | 0.84 | 0.46 ** | 0.99 | 40 |
| Choice | Total | Total | 3.59 | 1.04 | 4.06 | 0.77 | 0.45 *** | 0.98 | 81 |
| Value | Female | MTF | 4.52 | 0.62 | 4.42 | 0.88 | −0.11 | 0.69 | 18 |
| Value | Female | STF | 4.63 | 0.60 | 4.79 | 0.37 | 0.16 | 0.65 | 21 |
| Value | Female | Total | 4.58 | 0.60 | 4.62 | 0.67 | 0.04 | 0.67 | 39 |
| Value | Male | MTF | 4.46 | 0.52 | 4.74 | 0.47 | 0.28 † | 0.53 | 23 |
| Value | Male | STF | 4.34 | 0.50 | 4.50 | 0.94 | 0.16 | 1.04 | 19 |
| Value | Male | Total | 4.41 | 0.51 | 4.63 | 0.72 | 0.22 † | 0.80 | 42 |
| Value | Total | MTF | 4.49 | 0.56 | 4.60 | 0.69 | 0.11 | 0.63 | 41 |
| Value | Total | STF | 4.49 | 0.57 | 4.65 | 0.71 | 0.16 | 0.85 | 40 |
| Value | Total | Total | 4.49 | 0.56 | 4.62 | 0.70 | 0.13 | 0.74 | 81 |
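To give a sense of scale for the sex differences in the motivation table above, a standardized effect size can be computed directly from the published summary statistics. The sketch below applies Cohen's d with a pooled standard deviation to the Competence gain "Total" rows for each sex. It is an illustrative calculation under the assumption of two independent groups; it is not the authors' reported analysis, and the function name `cohens_d` is hypothetical.

```python
import math


def cohens_d(n1, mean1, sd1, n2, mean2, sd2):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    return (mean1 - mean2) / math.sqrt(pooled_var)


# Competence gain, "Total" rows by sex: (N, mean gain, SD), copied from the table.
male = (42, 0.65, 0.88)
female = (39, 0.18, 0.79)

# Positive d means the male group gained more than the female group.
d = cohens_d(*male, *female)
print(f"Cohen's d (male minus female competence gain): {d:.2f}")
```

The same helper can be pointed at any other pair of rows, but the result only describes the size of a difference; it says nothing about statistical significance, which depends on the tests reported in the article itself.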

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cubillos, C.; Roncagliolo, S.; Cabrera-Paniagua, D.; Vicari, R.M. A Digital Math Game and Multiple-Try Use with Primary Students: A Sex Analysis on Motivation and Learning. Behav. Sci. 2024, 14, 488. https://doi.org/10.3390/bs14060488
