Exploring Students Online Learning Behavioral Engagement in University: Factors, Academic Performance and Their Relationship

Abstract

1. Introduction

2. Literature Review

2.1. Online Learning Engagement

2.2. Online Learning Behavioral Engagement

3. Research Question

4. Methodology

4.1. Developing an Online Learning Behavior Engagement Evaluation Framework for College Students

4.1.1. Preliminary Evaluation Indicators

4.1.2. Revising the Evaluation Indicators

- 1.

- Expert composition and questionnaire design

- 2.

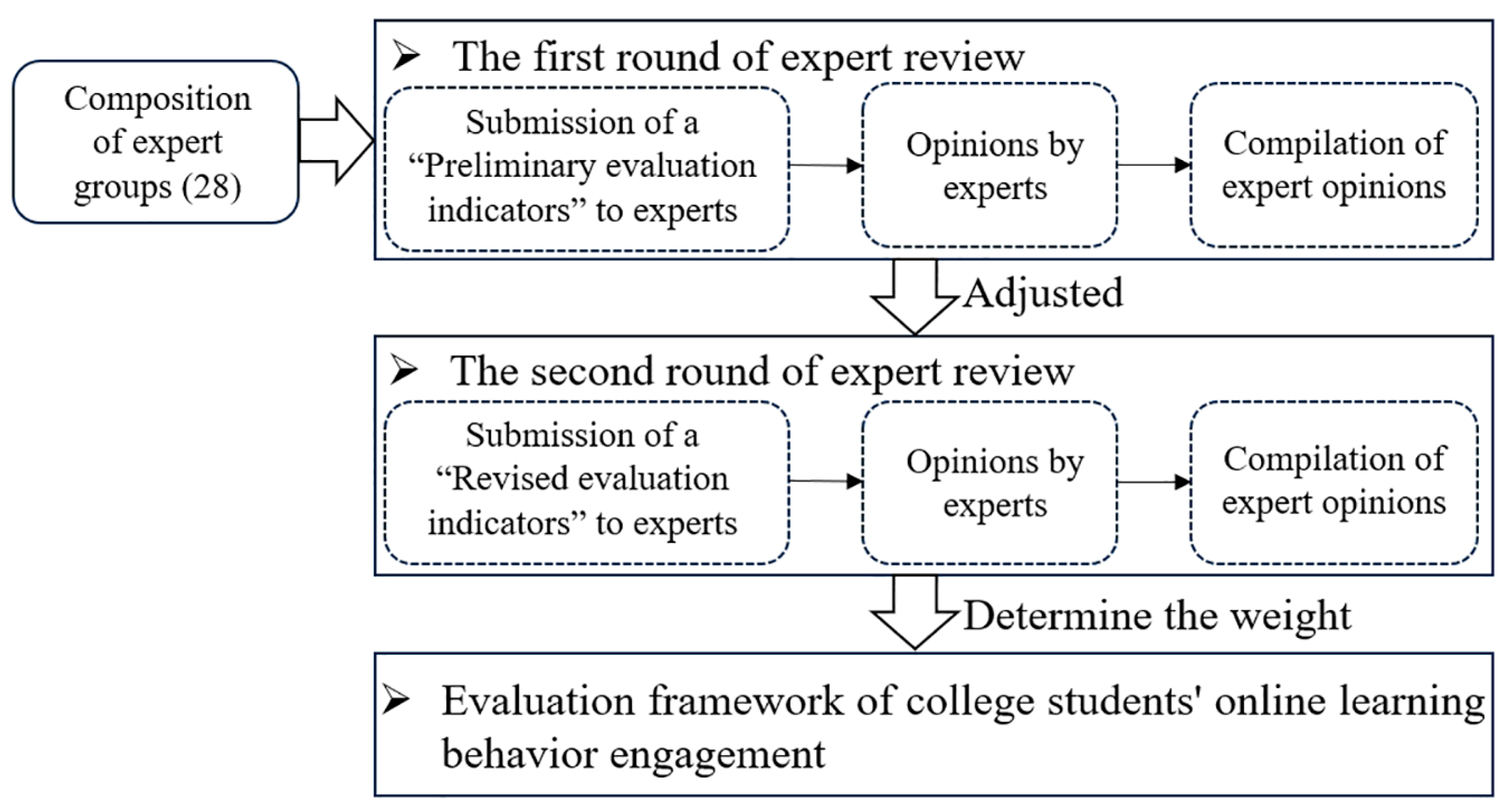

- Processes of expert consultation and evaluation

- (1)

- The first round of expert review

- (2)

- The second round of expert review

- 3.

- Determining the weights of evaluation indicators

- (1)

- Building a hierarchical model

- (2)

- Constructing judgment matrix

- (3)

- Consistency test and weight calculation

4.2. The Formation of Online Learning Behavioral Engagement Evaluation Framework for College Students

5. Data Collection and Procedures

5.1. Data Collection

5.2. Data Processing Methods

6. Data Analysis

7. Results

7.1. Overall Characterization of Online Learning Behaviors

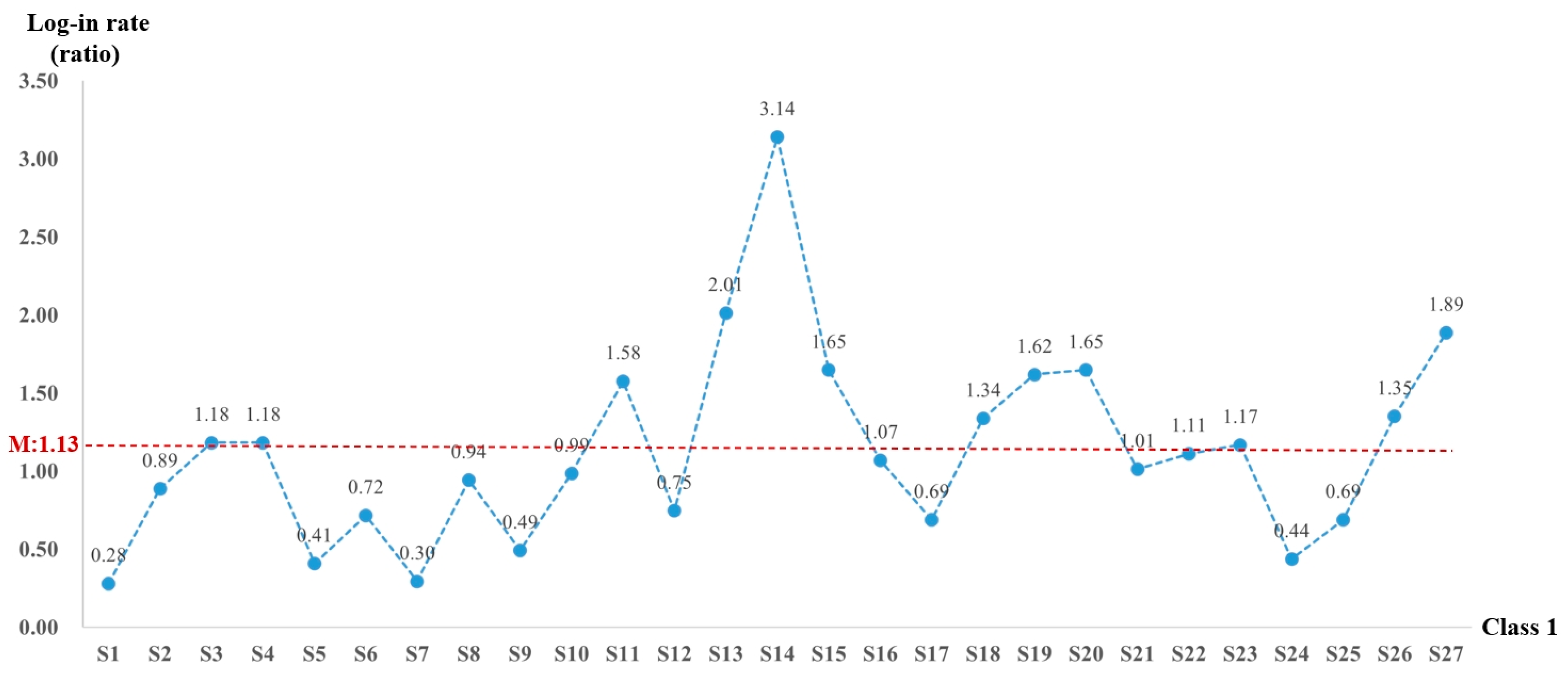

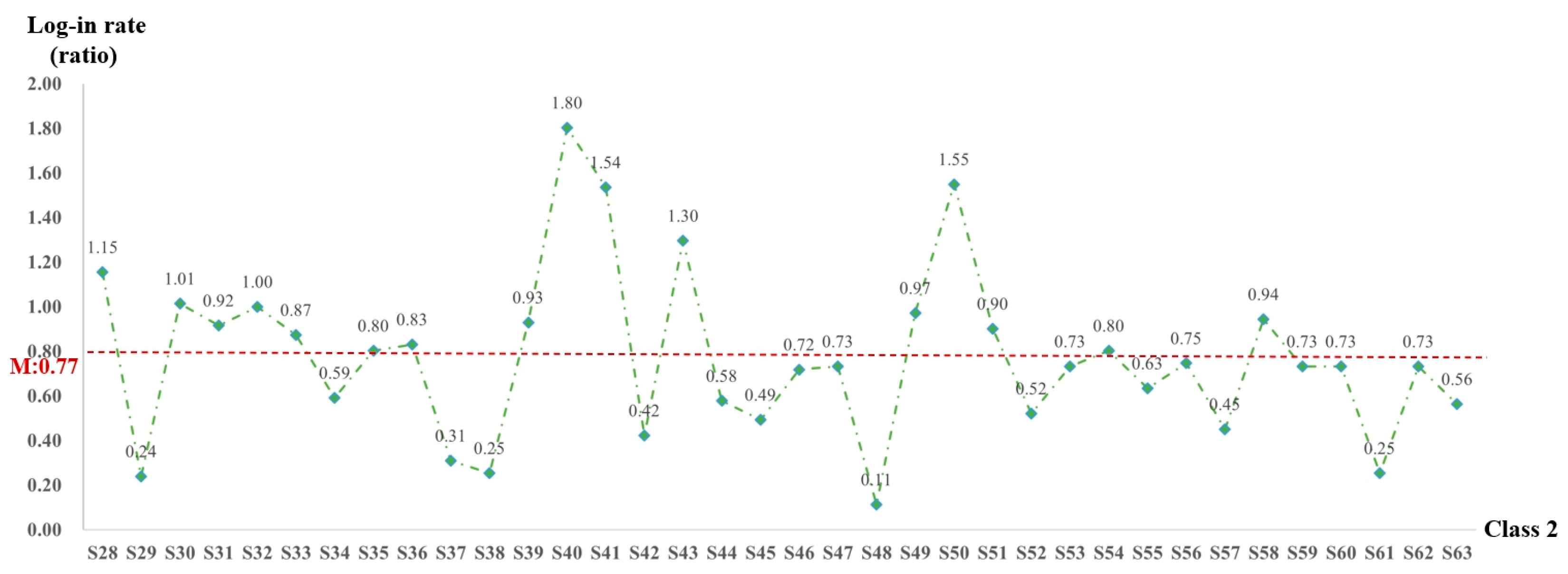

7.1.1. Log-In Learning Behavior Analysis

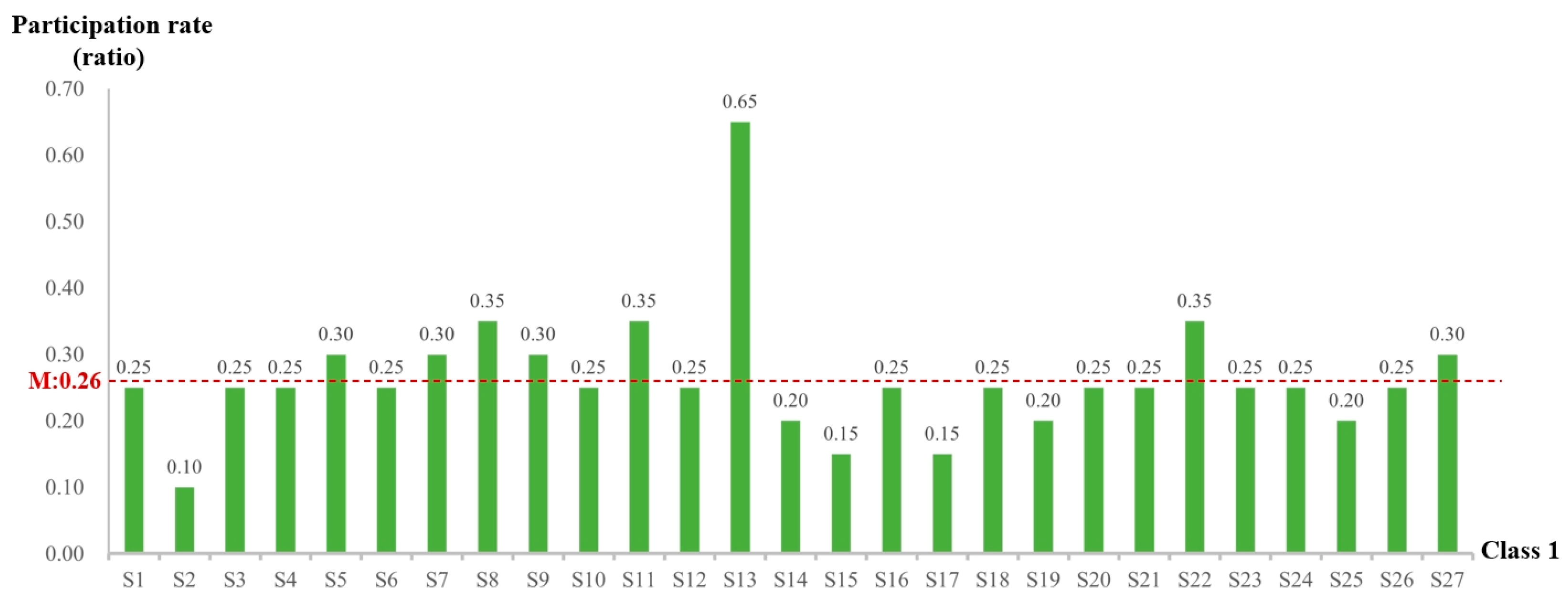

7.1.2. Participation Rate Analysis of Interactive Test Questions

7.2. Correlation Analysis of Assessment Indicators and Academic Performance

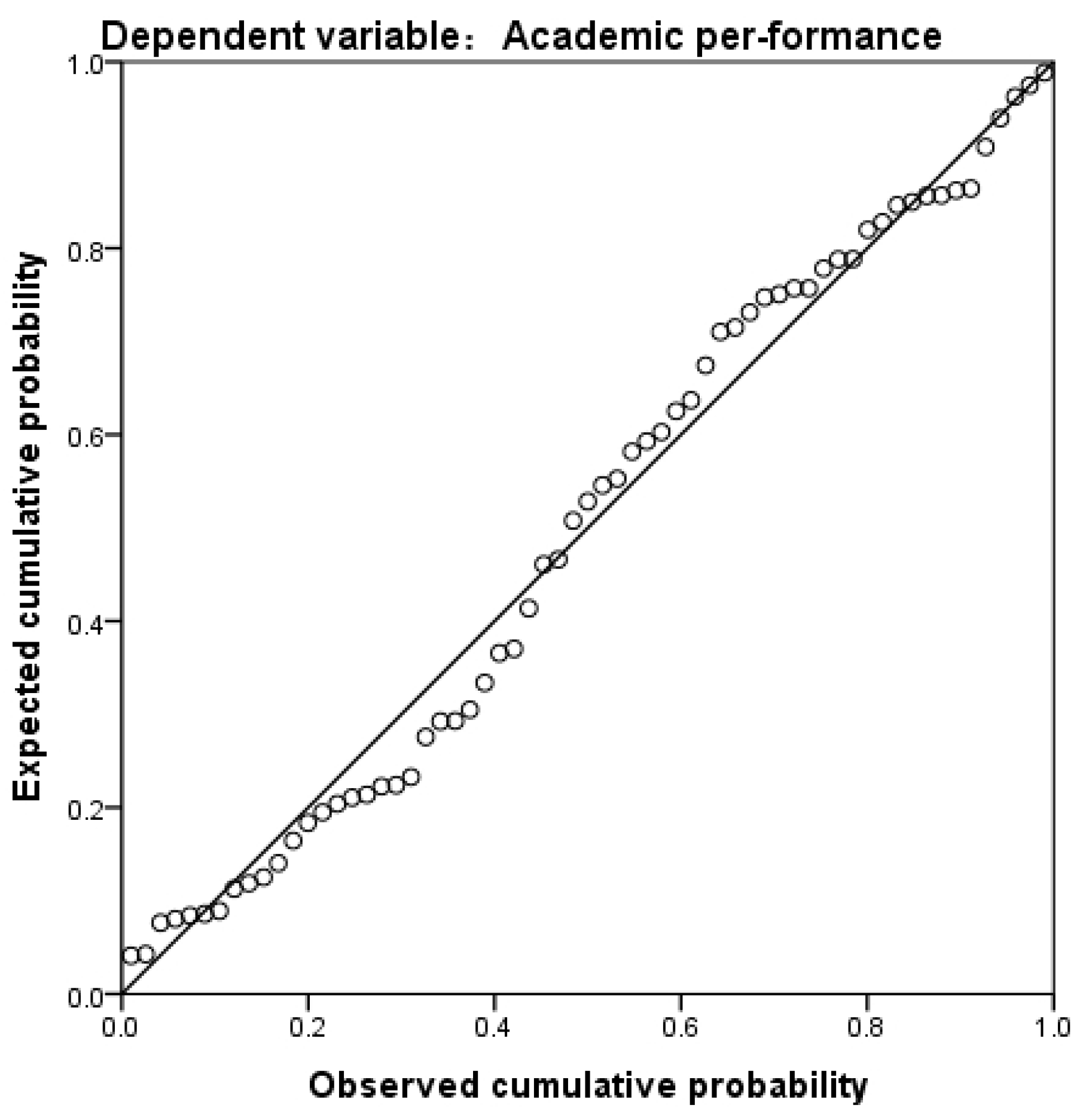

7.3. Predictive Analysis of Assessment Indicators and Academic Performance

8. Discussion

8.1. Framework for Evaluating Online Learning Behavior Engagement: Exploring Influencing Factors from the Perspective of Weights

8.2. Enhancing Engagement in Online Learning: Analysis of Key Behavioral Indicators

9. Limitations and Future Directions

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| A (Behavioral Engagement) | B1 (Participation) | B2 (Focus) | B3 (Interaction) | B4 (Challenge) | B5 (Self-monitoring) | Weight |
|---|---|---|---|---|---|---|
| B1 (Participation) | 1 | 0.3567 | 0.7920 | 1.9841 | 0.3637 | 0.1202 |
| B2 (Focus) | 2.8034 | 1 | 2.2204 | 5.5623 | 1.0195 | 0.3370 |
| B3 (Interaction) | 1.2626 | 0.4504 | 1 | 2.5051 | 0.4591 | 0.1518 |
| B4 (Challenge) | 0.5040 | 0.1798 | 0.3992 | 1 | 0.1833 | 0.0606 |
| B5 (Self-monitoring) | 2.7498 | 0.9809 | 2.1780 | 5.4561 | 1 | 0.3305 |
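The weights in the last column can be recovered from the judgment matrix itself. The sketch below assumes the row geometric-mean method and Saaty's random index (RI = 1.12 for a 5 × 5 matrix); the source names a consistency test and weight calculation but does not say which weighting method or RI table was used. Because the matrix is almost perfectly consistent (each entry is close to the ratio of the two weights, e.g. 0.3370 / 0.1202 ≈ 2.80 for B2 versus B1), the eigenvector method should give practically the same weights.

```python
import math

# Pairwise judgment matrix from the table above (rows and columns: B1..B5).
CRITERIA = ["Participation", "Focus", "Interaction", "Challenge", "Self-monitoring"]
A = [
    [1.0,    0.3567, 0.7920, 1.9841, 0.3637],
    [2.8034, 1.0,    2.2204, 5.5623, 1.0195],
    [1.2626, 0.4504, 1.0,    2.5051, 0.4591],
    [0.5040, 0.1798, 0.3992, 1.0,    0.1833],
    [2.7498, 0.9809, 2.1780, 5.4561, 1.0],
]

# Assumed: Saaty's random consistency index (RI) by matrix order n.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def ahp_weights(matrix):
    """Priority weights via the row geometric-mean method, normalized to sum to 1."""
    n = len(matrix)
    geo_means = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    return [g / total for g in geo_means]


def consistency(matrix, weights):
    """Return (lambda_max, CI, CR) for a pairwise comparison matrix and its weights."""
    n = len(matrix)
    weighted = [sum(a * w for a, w in zip(row, weights)) for row in matrix]
    lambda_max = sum(aw / w for aw, w in zip(weighted, weights)) / n
    ci = (lambda_max - n) / (n - 1)
    return lambda_max, ci, ci / RANDOM_INDEX[n]


if __name__ == "__main__":
    w = ahp_weights(A)
    for name, weight in zip(CRITERIA, w):
        print(f"{name:16s} {weight:.4f}")
    lam, ci, cr = consistency(A, w)
    print(f"lambda_max={lam:.4f}  CI={ci:.4f}  CR={cr:.4f}")
```

Run as written, this should reproduce the reported weights to within rounding and give a consistency ratio close to zero, well under the conventional 0.10 cut-off.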

| Key Factor | Factor Weight | Indicator | Indicator Weight | Indicator Description |
|---|---|---|---|---|
| Participation | 0.1202 | Log-in rate | 0.0112 | Frequency of log-ins to the online learning platform. |
| | | Online engagement duration | 0.0604 | Average duration of each log-in to the online learning platform. |
| | | Visits of online learning resources | 0.0486 | Frequency of browsing online learning resources. |
| Focus | 0.3370 | Submission rate of online homework | 0.0532 | Submission status of online homework. |
| | | Long-term online learning | 0.1326 | Number of log-in sessions in which the student’s online learning time exceeded the student’s own average per-session learning time over the semester. |
| | | Completion rate of online test questions | 0.1512 | Completion rate of test questions released online. |
| Interaction | 0.1518 | Active interaction | 0.0735 | Students’ own active participation in platform interactions. |
| | | Peer interaction | 0.0444 | Students’ participation in online peer interactions. |
| | | Teacher–student interaction | 0.0338 | Online interaction between students and lecturers. |
| Challenge | 0.0606 | Completion rate of online challenge tasks | 0.0260 | Students’ completion of tasks beyond the online learning requirements, for example, the total number of “rush to answer” attempts. |
| | | Use of online cognitive tools | 0.0246 | Students’ use of online information tools to access, save, and process online information. |
| | | Expanding resource usage online | 0.0100 | Overall downloading or browsing of online learning resources. |
| Self-monitoring | 0.3305 | Clear learning objectives | 0.0807 | Use of the learning plan creation module in the learning platform. |
| | | Self-evaluation and reflection | 0.1212 | Self-judgement and evaluation of how well online learning tasks were completed and of the problems and difficulties encountered. |
| | | Use of online management tools | 0.0384 | Students’ use of online management tools that enhance learning efficiency. |
| | | Completion of learning plan | 0.0903 | Overall completion of the various tasks and programs in online learning. |
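In this table each indicator weight is a global weight: the indicator weights within a key factor add up to that factor's weight (for example, 0.0112 + 0.0604 + 0.0486 = 0.1202 for Participation), and all sixteen sum to 1 within rounding. The sketch below encodes the framework, checks that property, and shows one hypothetical way to turn it into a composite engagement score by min-max normalizing each indicator across students and taking the weighted sum. The normalization and scoring rule are assumptions for illustration only; the source does not specify how indicator values are combined.

```python
# Global indicator weights from the framework table: factor -> (factor weight, {indicator: weight}).
FRAMEWORK = {
    "Participation": (0.1202, {
        "Log-in rate": 0.0112,
        "Online engagement duration": 0.0604,
        "Visits of online learning resources": 0.0486,
    }),
    "Focus": (0.3370, {
        "Submission rate of online homework": 0.0532,
        "Long-term online learning": 0.1326,
        "Completion rate of online test questions": 0.1512,
    }),
    "Interaction": (0.1518, {
        "Active interaction": 0.0735,
        "Peer interaction": 0.0444,
        "Teacher–student interaction": 0.0338,
    }),
    "Challenge": (0.0606, {
        "Completion rate of online challenge tasks": 0.0260,
        "Use of online cognitive tools": 0.0246,
        "Expanding resource usage online": 0.0100,
    }),
    "Self-monitoring": (0.3305, {
        "Clear learning objectives": 0.0807,
        "Self-evaluation and reflection": 0.1212,
        "Use of online management tools": 0.0384,
        "Completion of learning plan": 0.0903,
    }),
}


def inconsistent_factors(framework, tol=2e-4):
    """Factors whose indicator weights do not add up to the factor weight (within rounding)."""
    return [factor for factor, (factor_weight, indicators) in framework.items()
            if abs(sum(indicators.values()) - factor_weight) > tol]


def min_max(values):
    """Rescale raw values to [0, 1]; a constant column maps to 0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def composite_scores(raw, framework):
    """Hypothetical scoring rule: weighted sum of min-max normalized indicators.

    raw maps an indicator name to one raw value per student (same student order in every list);
    indicators absent from raw contribute nothing.
    """
    weights = {name: w for _, indicators in framework.values() for name, w in indicators.items()}
    n_students = len(next(iter(raw.values())))
    scores = [0.0] * n_students
    for name, values in raw.items():
        for i, x in enumerate(min_max(values)):
            scores[i] += weights[name] * x
    return scores


if __name__ == "__main__":
    print(inconsistent_factors(FRAMEWORK))  # []
    demo = {"Log-in rate": [12, 30, 21], "Long-term online learning": [4, 15, 9]}  # hypothetical data
    print([round(s, 4) for s in composite_scores(demo, FRAMEWORK)])
```

Min-max scaling is used here only because the indicators mix counts, durations and ratios; z-scores or fixed reference ranges would be equally defensible choices.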

| Data Sources | Data Processing Methods |
|---|---|
| Log-in rate | Counted the number of visits to the platform. |
| Online engagement duration | Total time spent logged in to the platform divided by the number of visits to the class. |
| Visits of online learning resources | The number of student interactions divided by the total number of teacher-organized interactions. |
| Submission rate of online homework | The number of assignments submitted by the student divided by the total number of assignments required. |
| Long-term online learning | Counted the number of sessions in which the student’s online learning time exceeded the student’s own average per-session learning time over the semester. |
| Completion rate of online test questions | The number of completed test questions divided by the total number of published test questions. |
| Expanding resource usage online | Counted the total number of times students downloaded learning resources. |
| Self-evaluation and reflection | Counted the total number of comments on announcements in the online learning platform. |
| Use of online management tools | Counted the total number of times students managed their “Personal Portfolio”. |
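Several of these processing rules are simple counts or ratios over a student's platform log. The sketch below computes five of them from a per-student record; the `StudentLog` class and its field names are hypothetical (the source does not describe the platform's export format), and "Long-term online learning" is read as the number of sessions longer than the student's own mean session length for the semester.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class StudentLog:
    """Hypothetical per-student platform export for one semester (field names are illustrative)."""
    session_minutes: list[float]  # duration of each log-in session
    assignments_submitted: int
    assignments_required: int
    questions_completed: int
    questions_published: int


def _ratio(numerator, denominator):
    """Division that returns 0.0 instead of raising when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def compute_indicators(log: StudentLog) -> dict[str, float]:
    visits = len(log.session_minutes)
    avg_session = mean(log.session_minutes) if visits else 0.0
    return {
        # Number of visits to the platform.
        "Log-in rate": visits,
        # Total logged-in time divided by the number of visits.
        "Online engagement duration": _ratio(sum(log.session_minutes), visits),
        # Submitted assignments divided by required assignments.
        "Submission rate of online homework": _ratio(log.assignments_submitted, log.assignments_required),
        # Assumed reading: sessions longer than this student's own mean session length.
        "Long-term online learning": sum(1 for m in log.session_minutes if m > avg_session),
        # Completed test questions divided by published test questions.
        "Completion rate of online test questions": _ratio(log.questions_completed, log.questions_published),
    }


if __name__ == "__main__":
    log = StudentLog(session_minutes=[30, 60, 90], assignments_submitted=4,
                     assignments_required=5, questions_completed=8, questions_published=10)
    print(compute_indicators(log))
```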

| Dependent Variable | Online Learning Behavioral Indicators | N | Correlation Coefficient (rₛ) | Coefficient of Determination (R²) | p-Value |
|---|---|---|---|---|---|
| Academic performance | Log-in rate | 63 | 0.315 * | 0.0992 | 0.012 |
| | Online engagement duration | 63 | 0.377 ** | 0.1421 | 0.002 |
| | Visits of online learning resources | 63 | 0.574 *** | 0.3295 | 0.000 |
| | Submission rate of online homework | 63 | 0.318 * | 0.1011 | 0.011 |
| | Long-term online learning | 63 | 0.771 *** | 0.5944 | 0.000 |
| | Completion rate of online test questions | 63 | 0.432 *** | 0.1866 | 0.000 |
| | Self-evaluation and reflection | 63 | 0.168 | 0.0282 | 0.188 |
| | Use of online management tools | 63 | 0.123 | 0.0151 | 0.335 |
| | Expanding resource usage online | 63 | 0.162 | 0.0262 | 0.204 |
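The rₛ notation suggests Spearman's rank correlation, and every R² entry in the table equals the square of the corresponding rₛ (for example, 0.771² ≈ 0.5944). Assuming Spearman was used, the sketch below computes rₛ as the Pearson correlation of average ranks, which also handles tied values. The five-student data set is hypothetical.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value equals the current one (a tie).
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared_rank
        start = end + 1
    return ranks


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    # Hypothetical indicator values and course scores for five students.
    long_term = [3, 8, 5, 12, 8]
    score = [62, 75, 70, 88, 79]
    rs = spearman(long_term, score)
    print(f"rs = {rs:.3f}, R^2 = rs^2 = {rs ** 2:.4f}")
```

For real analyses, `scipy.stats.spearmanr` computes the same coefficient and also returns a p-value.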

| R | R² | Adjusted R² | Std. Error of the Estimate | Durbin-Watson | F | p-Value |
|---|---|---|---|---|---|---|
| 0.822 | 0.675 | 0.658 | 4.0936 | 1.364 | 40.835 | 0.000 |
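The fit statistics in this model summary are internally consistent. Taking n = 63 (the N reported in the correlation table) and k = 3 predictors (the rows of the coefficient table), the sketch below recomputes R, adjusted R² and the overall F statistic from R² alone; n and k are inferred from the neighbouring tables rather than stated in this one.

```python
import math


def model_summary(r_squared, n, k):
    """Multiple R, adjusted R^2 and overall F statistic from R^2, sample size n and k predictors."""
    multiple_r = math.sqrt(r_squared)
    adjusted = 1 - (1 - r_squared) * (n - 1) / (n - k - 1)
    f_stat = (r_squared / k) / ((1 - r_squared) / (n - k - 1))
    return multiple_r, adjusted, f_stat


if __name__ == "__main__":
    # Assumed: n = 63 students (correlation table) and k = 3 predictors (coefficient table).
    r, adj, f = model_summary(0.675, n=63, k=3)
    print(f"R = {r:.3f}, adjusted R^2 = {adj:.3f}, F(3, 59) = {f:.2f}")
```

It prints R = 0.822, adjusted R² = 0.658 and F(3, 59) = 40.85; the small gap from the reported 40.835 is consistent with R² having been rounded to three decimals in the table.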

| Predictor | B (Unstandardized) | Standard Error | β (Standardized) | t | p-Value | Tolerance | VIF |
|---|---|---|---|---|---|---|---|
| (Constant) | 64.543 | 5.002 | | 12.904 | 0.000 | | |
| Visits of online learning resources | 0.030 | 0.010 | 0.260 | 3.088 | 0.003 | 0.776 | 1.289 |
| Long-term online learning | 0.513 | 0.073 | 0.603 | 6.984 | 0.000 | 0.738 | 1.355 |
| Completion rate of online test questions | 9.620 | 5.569 | 0.139 | 1.727 | 0.089 | 0.850 | 1.177 |
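Read as a fitted equation, the unstandardized coefficients give predicted academic performance = 64.543 + 0.030 × (visits of online learning resources) + 0.513 × (long-term online learning) + 9.620 × (completion rate of online test questions). The sketch below evaluates that equation and the VIF column, which is the reciprocal of tolerance. It assumes the predictors enter in the units of the data processing table (two counts and a completion rate expressed as a proportion between 0 and 1); the source does not confirm this scaling, and the example student is hypothetical.

```python
# Unstandardized coefficients (B) from the coefficient table.
INTERCEPT = 64.543
B_VISITS = 0.030      # Visits of online learning resources
B_LONG_TERM = 0.513   # Long-term online learning
B_COMPLETION = 9.620  # Completion rate of online test questions (assumed to be a 0-1 proportion)


def predicted_performance(visits, long_term_sessions, completion_rate):
    """Fitted value from the reported regression equation."""
    return (INTERCEPT + B_VISITS * visits + B_LONG_TERM * long_term_sessions
            + B_COMPLETION * completion_rate)


def vif(tolerance):
    """Variance inflation factor: the reciprocal of tolerance."""
    return 1.0 / tolerance


if __name__ == "__main__":
    # Hypothetical student: 100 resource visits, 10 long sessions, 90% of test questions completed.
    print(round(predicted_performance(100, 10, 0.9), 3))  # 81.331
    for name, tol in [("visits", 0.776), ("long-term", 0.738), ("completion", 0.850)]:
        print(name, round(vif(tol), 3))
```

The completion-rate coefficient is not significant at the 0.05 level (p = 0.089), so its contribution to any prediction is uncertain. The VIF values near 1 indicate little collinearity among the three predictors; 1/0.850 gives 1.176 rather than the printed 1.177, presumably because the tolerance in the table is itself rounded.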

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Y.; Zuo, M.; He, X.; Wang, Z. Exploring Students Online Learning Behavioral Engagement in University: Factors, Academic Performance and Their Relationship. Behav. Sci. 2025, 15, 78. https://doi.org/10.3390/bs15010078

Wang Y, Zuo M, He X, Wang Z. Exploring Students Online Learning Behavioral Engagement in University: Factors, Academic Performance and Their Relationship. Behavioral Sciences. 2025; 15(1):78. https://doi.org/10.3390/bs15010078

Chicago/Turabian Style: Wang, Yonghong, Mingzhang Zuo, Xiangchun He, and Zhifeng Wang. 2025. "Exploring Students Online Learning Behavioral Engagement in University: Factors, Academic Performance and Their Relationship" Behavioral Sciences 15, no. 1: 78. https://doi.org/10.3390/bs15010078

APA Style: Wang, Y., Zuo, M., He, X., & Wang, Z. (2025). Exploring Students Online Learning Behavioral Engagement in University: Factors, Academic Performance and Their Relationship. Behavioral Sciences, 15(1), 78. https://doi.org/10.3390/bs15010078