Public Perceptions of Judges’ Use of AI Tools in Courtroom Decision-Making: An Examination of Legitimacy, Fairness, Trust, and Procedural Justice

Abstract

1. Introduction

1.1. The Role of AI in the Justice System

1.2. Symbolic Interaction Theory

1.3. Procedural Justice

1.4. Legitimacy

1.5. Trust in AI and Judicial Decision-Making

1.6. Racial and Ethnic Disparities in Judicial Perceptions

2. Materials and Methods

- How does the public symbolically view a judge who uses artificial intelligence compared to one who relies on their expertise in bail and sentencing decisions, and how does this vary among Black, Hispanic, and White individuals?

- How does the application of AI in bail and sentencing decisions impact perceived legitimacy and procedural justice, and how do these perceptions vary across racial and ethnic groups?

- How does a judge’s perceived trust in AI influence public trust in AI, and how does this vary among racial and ethnic groups?

- What social psychological themes emerge in participants’ open-ended responses about judges using AI in decision-making?

2.1. Study: Bail (Phase 1) and Sentencing (Phase 2)

2.1.1. Participants

2.1.2. Phase 1: Bail

2.1.3. Phase 2: Sentencing

2.2. Design and Procedure

2.3. Measures

2.3.1. Symbolic Perceptions

2.3.2. Procedural Justice

2.3.3. Legitimacy

2.3.4. Trust

2.3.5. Open-Ended Question

2.4. Data Analysis and Ethics

3. Results

3.1. RQ1. How Does the Public Symbolically View a Judge Who Uses Artificial Intelligence Compared to One Who Relies on Their Expertise in Bail and Sentencing Decisions, and How Does This Vary Among Black, Hispanic, and White Individuals?

3.2. RQ2. How Does the Application of AI in Bail and Sentencing Decisions Impact Perceived Legitimacy and Procedural Justice, and How Does This Vary Among Black, Hispanic, and White Individuals?

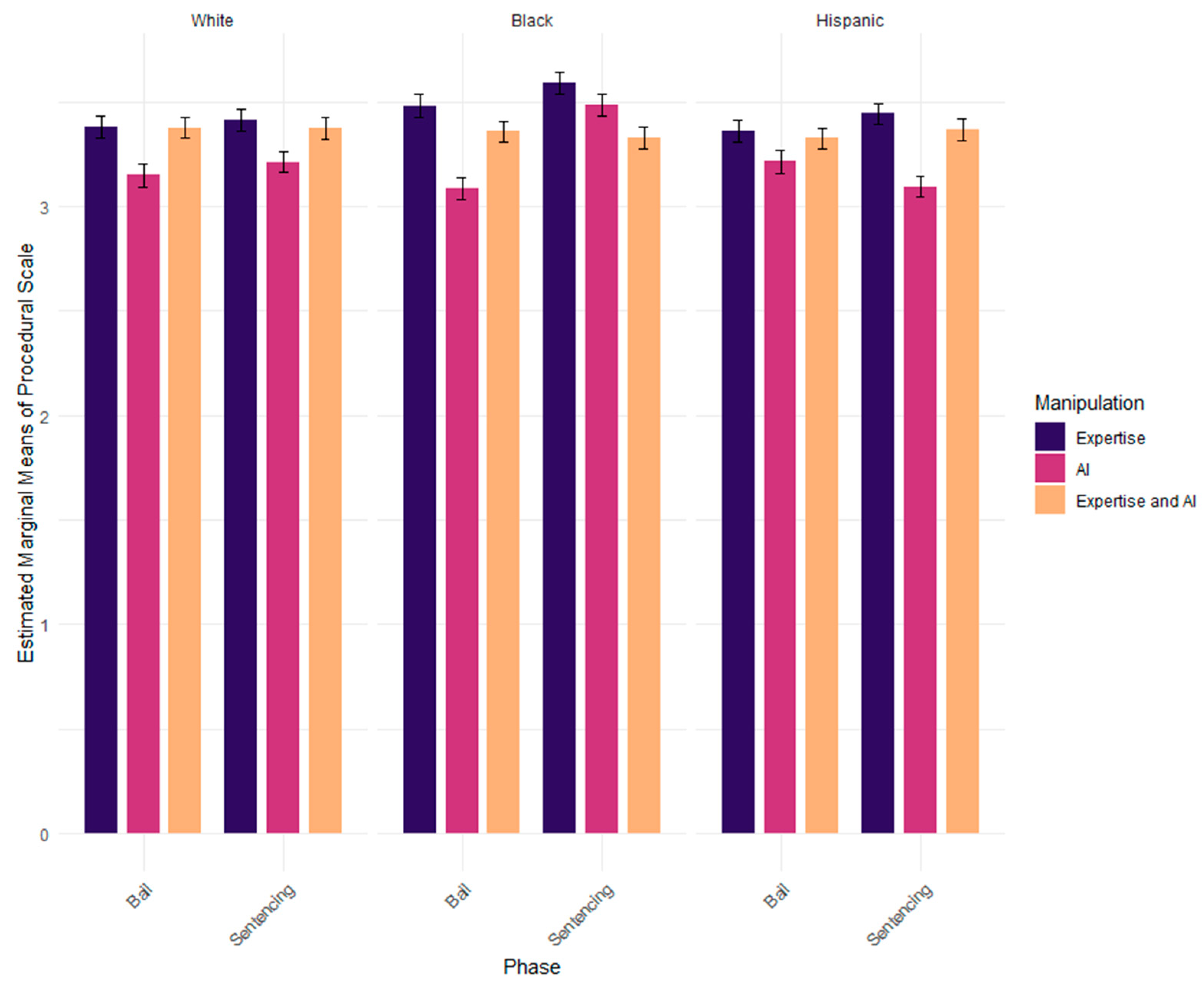

Procedural Scale

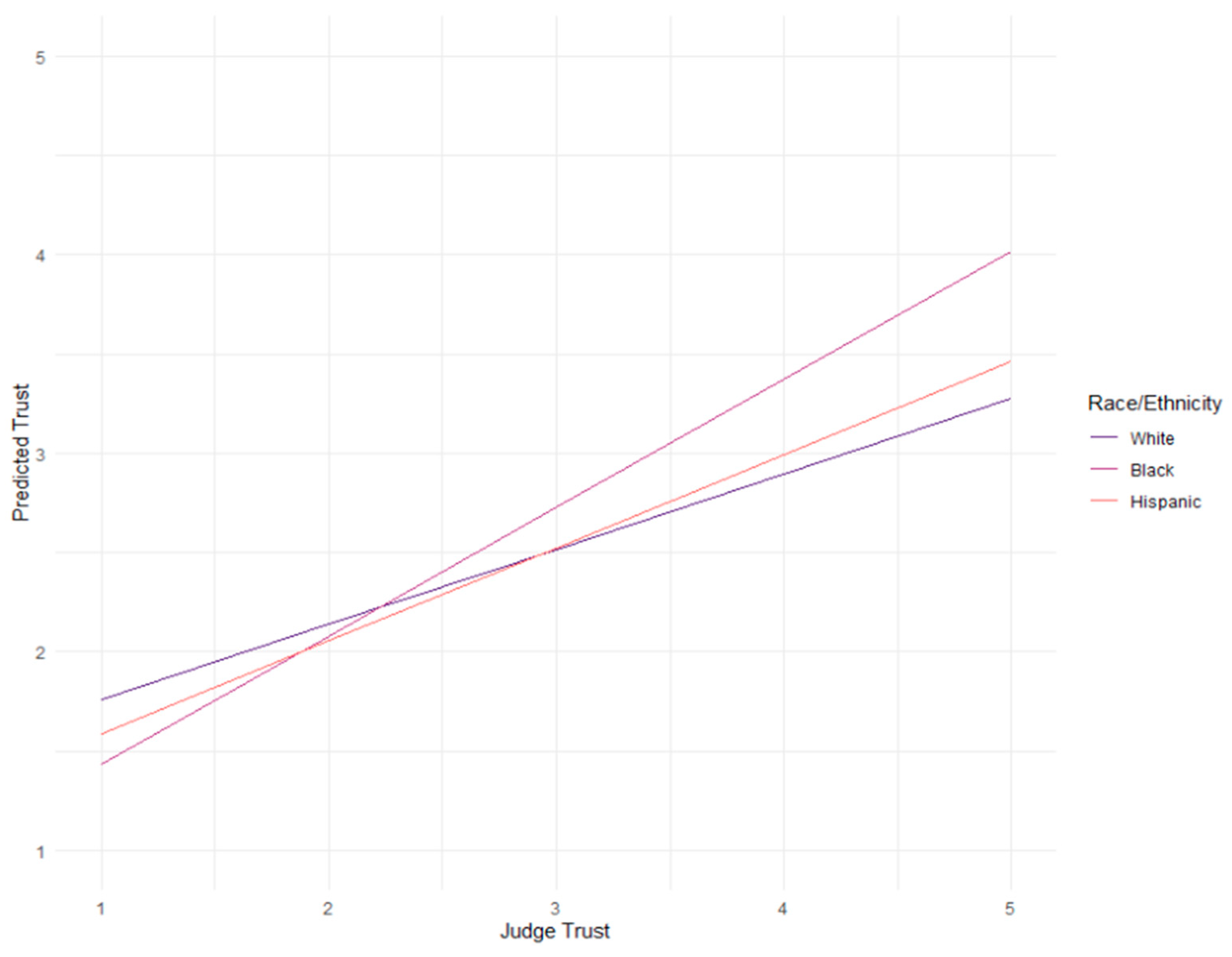

3.3. RQ3. How Does a Judge’s Perceived Trust in AI Influence Participants’ Trust in AI, and How Does This Vary Among Black, Hispanic, and White Individuals?

3.4. RQ4. What Themes Are Found Within the Open-Ended Responses?

4. Discussion

4.1. RQ1. Symbolic Perceptions

4.2. RQ2. Perceived Legitimacy and Procedural Justice

4.3. RQ3. Trust in AI

4.4. RQ4. Themes from Open-Ended Responses

4.5. Limitations

4.6. Implications and Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Vignettes for Phase 1 (Bail)

Appendix A.2. Vignettes for Phase 2 (Sentencing)


| Condition | Phase | M | SD |
|---|---|---|---|
| Expertise | Bail | 6.97 | 3.42 |
| Expertise | Sentencing | 7.41 | 2.90 |
| AI | Bail | 2.08 | 5.61 |
| AI | Sentencing | 0.81 | 5.90 |
| Expertise + AI | Bail | 5.81 | 4.46 |
| Expertise + AI | Sentencing | 5.34 | 5.12 |

| Condition | Ethnicity | M | SD |
|---|---|---|---|
| Expertise | Black | 3.48 | 0.47 |
| Expertise | Hispanic | 3.36 | 0.47 |
| Expertise | White | 3.38 | 0.38 |
| AI | Black | 3.09 | 0.61 |
| AI | Hispanic | 3.21 | 0.63 |
| AI | White | 3.15 | 0.55 |
| Expertise + AI | Black | 3.36 | 0.50 |
| Expertise + AI | Hispanic | 3.33 | 0.56 |
| Expertise + AI | White | 3.38 | 0.40 |

| Condition | Ethnicity | M | SD |
|---|---|---|---|
| Expertise | Black | 3.59 | 0.55 |
| Expertise | Hispanic | 3.44 | 0.53 |
| Expertise | White | 3.41 | 0.33 |
| AI | Black | 3.49 | 0.57 |
| AI | Hispanic | 3.09 | 0.59 |
| AI | White | 3.21 | 0.56 |
| Expertise + AI | Black | 3.32 | 0.56 |
| Expertise + AI | Hispanic | 3.37 | 0.47 |
| Expertise + AI | White | 3.37 | 0.37 |

| Predictor | Estimate | Std. Error | t-Value | p-Value |
|---|---|---|---|---|
| (Intercept) | 1.38 | 0.11 | 12.414 | <0.001 |
| Judge trust in AI | 0.38 | 0.04 | 8.787 | <0.001 |
| Black | −0.59 | 0.15 | −4.024 | <0.001 |
| Hispanic | −0.26 | 0.15 | −1.757 | 0.079 |
| Judge trust: Black | 0.27 | 0.06 | 4.848 | <0.001 |
| Judge trust: Hispanic | 0.09 | 0.06 | 1.567 | 0.117 |
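
To make the interaction terms in the coefficient table above easier to read, the following minimal Python sketch turns the reported estimates into group-specific slopes and predicted values. It rests on several assumptions that the table itself does not state: that the model is a linear regression with participants' trust in AI as the outcome, that White participants are the omitted reference group, and that rows such as "Judge trust: Black" are interaction terms. The function names and the input value of 3 are hypothetical and chosen only for illustration.

```python
# Estimates copied from the regression table above.
# ASSUMPTION: White is the reference group because it has no row of its own.
COEF = {
    "intercept": 1.38,
    "judge_trust": 0.38,
    "black": -0.59,
    "hispanic": -0.26,
    "judge_trust_x_black": 0.27,
    "judge_trust_x_hispanic": 0.09,  # reported p = 0.117, not significant
}

GROUPS = ("white", "black", "hispanic")


def simple_slope(group: str) -> float:
    """Change in predicted participant trust per one-unit change in
    perceived judge trust, for the given group."""
    if group not in GROUPS:
        raise ValueError(f"unknown group: {group}")
    slope = COEF["judge_trust"]
    if group != "white":
        # Add the group's interaction term to the reference-group slope.
        slope += COEF[f"judge_trust_x_{group}"]
    return slope


def predicted_trust(judge_trust: float, group: str) -> float:
    """Predicted participant trust in AI at a given level of perceived
    judge trust (hypothetical helper, point estimate only)."""
    intercept = COEF["intercept"]
    if group != "white":
        # Shift the intercept by the group's main effect.
        intercept += COEF[group]
    return intercept + simple_slope(group) * judge_trust


if __name__ == "__main__":
    for g in GROUPS:
        print(g, round(simple_slope(g), 2), round(predicted_trust(3.0, g), 2))
```

Under these assumptions the slope is 0.38 for White participants, 0.65 for Black participants, and 0.47 for Hispanic participants. The negative main effect for Black participants applies when perceived judge trust is zero; the larger slope means their predicted trust rises faster as perceived judge trust increases. These are point estimates only and ignore the standard errors in the table.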


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fine, A.; Berthelot, E.R.; Marsh, S. Public Perceptions of Judges’ Use of AI Tools in Courtroom Decision-Making: An Examination of Legitimacy, Fairness, Trust, and Procedural Justice. Behav. Sci. 2025, 15, 476. https://doi.org/10.3390/bs15040476
