Consumer Autonomy in Generative AI Services: The Role of Task Difficulty and AI Design Elements in Enhancing Trust, Satisfaction, and Usage Intention

Abstract

1. Introduction

2. Literature Review and Hypotheses

2.1. Perceived Consumer Autonomy in AI Services

2.2. Task Difficulty and Perceived Autonomy in AI Interactions

2.3. AI Design Elements Supporting Perceived Autonomy

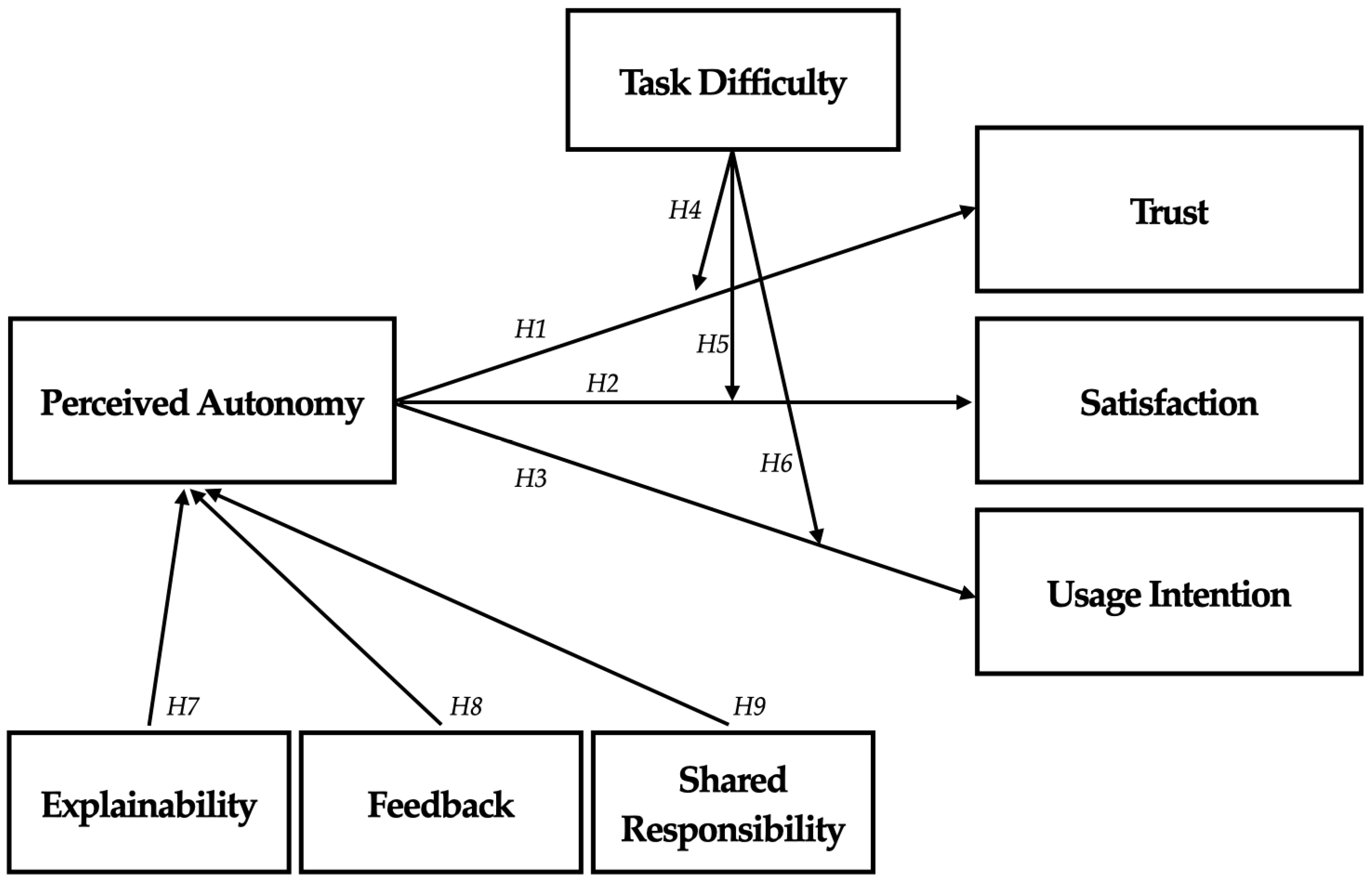

2.4. Research Model Overview

3. Empirical Studies

3.1. Study 1: Effects of Perceived Autonomy and Task Difficulty

3.1.1. Measurement and Manipulation Check

3.1.2. Descriptive and Preliminary Analyses

- Group 1: low task difficulty, perceived autonomy absent

- Group 2: low task difficulty, perceived autonomy present

- Group 3: high task difficulty, perceived autonomy absent

- Group 4: high task difficulty, perceived autonomy present

3.1.3. Hypothesis Testing

3.1.4. Group-Level Comparisons

3.1.5. Discussion

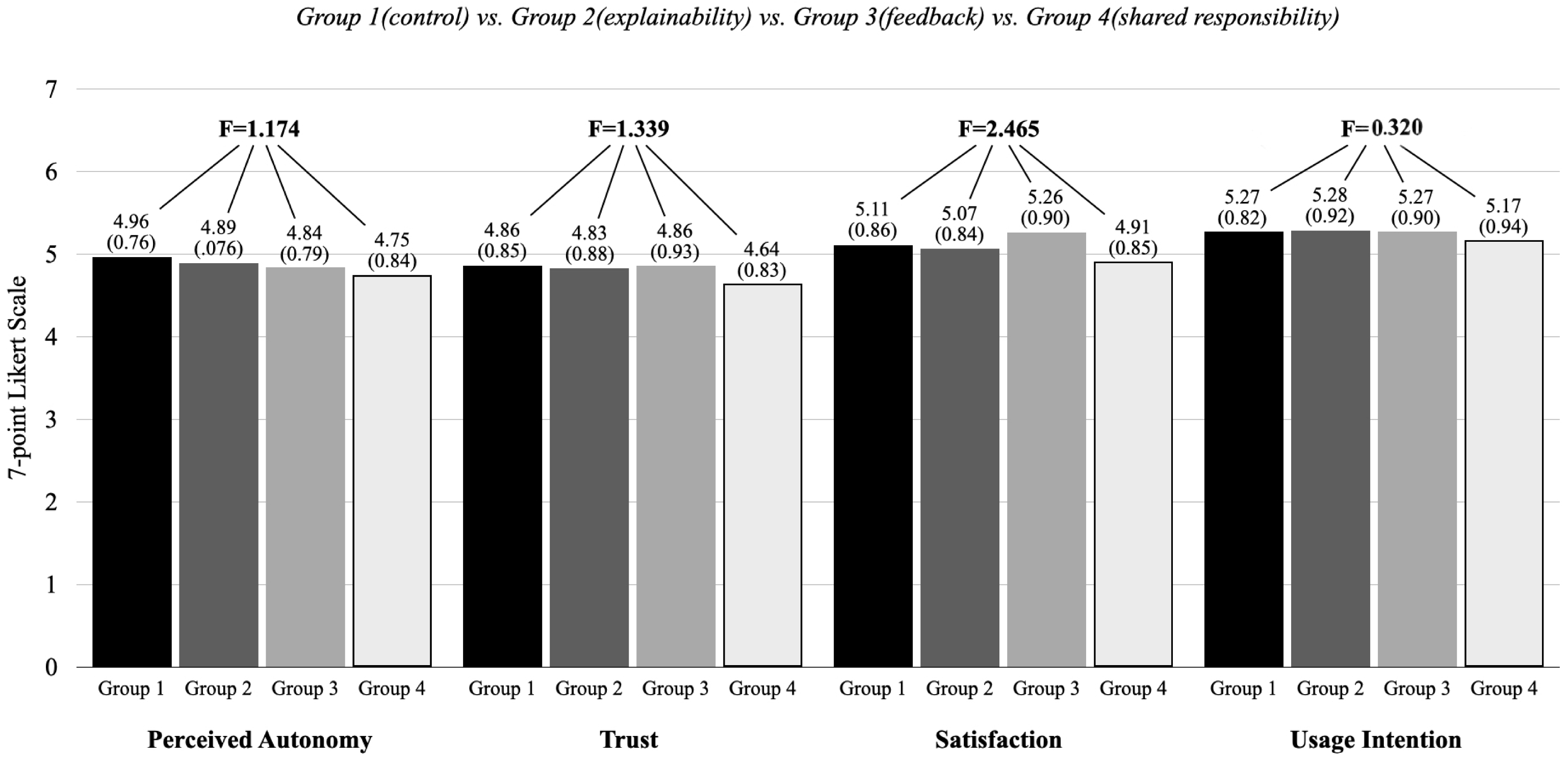

3.2. Study 2

3.2.1. Participants

3.2.2. Design and Procedure

- Control condition (Group 1): The AI service provided a standard response with no additional design interventions.

- Explainability condition (Group 2): The AI explained the reasoning behind its response.

- Feedback condition (Group 3): Participants were asked to justify their response selection, with the AI informing them that their feedback would be used to improve future AI outputs.

- Shared responsibility condition (Group 4): Participants were explicitly informed that the AI service’s response was for reference only and that they held the final responsibility for evaluating and applying the information.
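The four conditions differ only in what is wrapped around the AI's answer. The sketch below illustrates how such condition-specific wrappers could be generated. The `Condition` enum, the `render_response` helper, and all message wording are hypothetical paraphrases of the condition descriptions above, not the study's actual stimuli (which appear in Appendix B).

```python
from enum import Enum


class Condition(Enum):
    """The four Study 2 conditions (Groups 1-4)."""
    CONTROL = 1
    EXPLAINABILITY = 2
    FEEDBACK = 3
    SHARED_RESPONSIBILITY = 4


def render_response(condition: Condition, answer: str, rationale: str) -> str:
    """Wrap a base AI answer with the design element for one condition.

    Hypothetical wording: paraphrases the condition descriptions, not the
    study's stimulus text.
    """
    if condition is Condition.CONTROL:
        # Standard response with no additional design intervention.
        return answer
    if condition is Condition.EXPLAINABILITY:
        # Append the reasoning behind the response.
        return f"{answer}\n\nWhy I answered this way: {rationale}"
    if condition is Condition.FEEDBACK:
        # Ask the user to justify their choice; say feedback improves future outputs.
        return (
            f"{answer}\n\nPlease tell us why you chose this response. "
            "Your feedback will be used to improve future AI outputs."
        )
    # Shared responsibility: reference-only notice; the user holds final responsibility.
    return (
        f"{answer}\n\nThis response is for reference only. "
        "You hold final responsibility for evaluating and applying it."
    )


print(render_response(Condition.SHARED_RESPONSIBILITY, "Try option B.", "It is cheaper."))
```

Keeping the base answer identical across conditions, and varying only the appended element, mirrors the logic of the design: any group difference should then be attributable to the design element rather than to answer quality.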

3.2.3. Measures

3.2.4. Manipulation Check

3.2.5. Results

3.2.6. Discussion

4. General Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SDT | Self-Determination Theory |
| OLS | Ordinary Least Squares |
| M | Mean |
| SD | Standard Deviation |

Appendix A

| Questions |
|---|
| Study 1 |
| Subjective Competence of AI services |
| - I can understand the information provided by generative AI services. |
| - I know which information provided by generative AI services is trustworthy. |
| - I know how to request helpful information from generative AI services. |
| - I can judge whether information provided by generative AI services is reliable or not. |
| - I can determine if information obtained from generative AI services is applicable to my situation. |
| - I can collect or evaluate information that fits my purpose through generative AI services. |
| Objective Competence of AI services |
| - Generative AI creates new information based on data it has previously learned. |
| - When using generative AI, writing specific requests increases the chance of receiving more accurate answers. |
| - Generative AI always provides the same answer to the same question. |
| - Generative AI can correct biases in its learned data by itself. |
| - Conversation records with generative AI may be stored by service providers. |
| - Generative AI can improve some aspects of its performance through user feedback. |
| Task Difficulty |
| - The task I just requested from the generative AI service would be quite difficult if I did it myself. |
| - The task I just requested from the generative AI service would take a long time if I did it myself. |
| Perceived Autonomy |
| - The results from the generative AI service adequately reflected my opinions. |
| - The results from the generative AI service were provided according to my desired standards and preferences. |
| - I believe I played a leading role in the process of creating the results from the generative AI service. |
| Trust |
| - The results just presented by the generative AI service are safe and trustworthy. |
| - The information provided in the results just presented by the generative AI service is reliable. |
| - The results just presented by the generative AI service are generally trustworthy. |
| Satisfaction |
| - I am generally satisfied with the generative AI service experience just presented. |
| Usage Intention |
| - I will continue to use this type of generative AI service in the future. |
| - I will recommend this type of generative AI service to others. |
| - I plan to use this type of generative AI service long-term. |
| Study 2 |
| Explainability |
| - The above generative AI service clearly explained what focus its response was written with. |
| - The above generative AI service transparently explained why the answer was presented that way. |
| Feedback |
| - The above generative AI service requested feedback on its answer. |
| - I felt that the above generative AI service valued my feedback. |
| Shared Responsibility |
| - The above generative AI service clearly stated that it has no responsibility for the final use of its answer. |
| - The above generative AI service recommended that I should make the final decision. |
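Multi-item Likert scales such as those above are commonly scored as the mean of their items, with internal consistency summarized by Cronbach's alpha. The sketch below assumes that scoring; the paper's exact procedure is not stated here. It also assumes, hypothetically, that the six objective-competence statements are true/false knowledge items scored as a count of correct answers; the answer key `OBJECTIVE_KEY` is inferred from the item wording and is not taken from the paper.

```python
from statistics import mean, variance


def scale_score(item_responses: list[float]) -> float:
    """Score one respondent on a multi-item scale as the mean of the items."""
    return mean(item_responses)


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical key for the six objective-competence statements, in Appendix A
# order (True = statement is correct). An assumption, not the authors' key.
OBJECTIVE_KEY = [True, True, False, False, True, True]


def objective_competence(answers: list[bool]) -> int:
    """Number of correct true/false answers (0-6) under the assumed key."""
    return sum(a == k for a, k in zip(answers, OBJECTIVE_KEY))


# Toy data (not from the study): three respondents x three perceived-autonomy items.
toy = [[5, 6, 5], [3, 3, 4], [6, 7, 6]]
print(scale_score(toy[0]), cronbach_alpha(toy))
```

For the toy matrix, alpha works out to 20/21 (about 0.952); real reliability estimates would, of course, require the study's response data.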

Appendix B

Study 1-Group 1

Study 1-Group 2

Study 1-Group 3

Study 1-Group 4

Study 2-Group 1

Study 2-Group 2

Study 2-Group 3

Study 2-Group 4

References

- Ahmad Husairi, M. A., & Rossi, P. (2024). Delegation of purchasing tasks to AI: The role of perceived choice and decision autonomy. Decision Support Systems, 179, 114166.

- Aldausari, N., Sowmya, A., Marcus, N., & Mohammadi, G. (2023). Video generative adversarial networks: A review. ACM Computing Surveys, 55(2), 1–25.

- André, Q., Carmon, Z., Wertenbroch, K., Crum, A., Frank, D., Goldstein, W., Huber, J., van Boven, L. V., Weber, B., & Yang, H. (2018). Consumer choice and autonomy in the age of artificial intelligence and big data. Customer Needs and Solutions, 5(1–2), 28–37.

- Ashfaq, M., Yun, J., Yu, S., & Loureiro, S. M. C. (2020). I, chatbot: Modeling the determinants of users’ satisfaction and continuance intention of AI-powered service agents. Telematics and Informatics, 54, 101473.

- Bergkvist, L., & Rossiter, J. R. (2007). The predictive validity of multiple-item versus single-item measures of the same constructs. Journal of Marketing Research, 44(2), 175–184.

- Bhattacherjee, A. (2001). Understanding information systems continuance: An expectation-confirmation model. MIS Quarterly, 25(3), 351–370.

- Bjørlo, L., Moen, Ø., & Pasquine, M. (2021). The role of consumer autonomy in developing sustainable AI: A conceptual framework. Sustainability, 13(4), 2332.

- Cai, C. J., Reif, E., Hegde, N., Hipp, J., Kim, B., Smilkov, D., Wattenberg, M., Viegas, F., Corrado, G. S., Stumpe, M. C., & Terry, M. (2019). Human-centered tools for coping with imperfect algorithms during medical decision-making. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1–14). Association for Computing Machinery.

- Castelo, N., Bos, M. W., & Lehmann, D. R. (2019). Task-dependent algorithm aversion. Journal of Marketing Research, 56(5), 809–825.

- Choi, J. K., & Ji, Y. G. (2015). Investigating the importance of trust on adopting an autonomous vehicle. International Journal of Human-Computer Interaction, 31(10), 692–702.

- Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340.

- Fan, Y., & Liu, X. (2022). Exploring the role of AI algorithmic agents: The impact of algorithmic decision autonomy on consumer purchase decisions. Frontiers in Psychology, 13, 1009173.

- Felzmann, H., Villaronga, E. F., Lutz, C., & Tamò-Larrieux, A. (2019). Transparency you can trust: Transparency requirements for artificial intelligence between legal norms and contextual concerns. Big Data & Society, 6(1), 2053951719860542.

- Flavián, C., Pérez-Rueda, A., Belanche, D., & Casaló, L. V. (2022). Intention to use analytical artificial intelligence (AI) in services: The effect of technology readiness and awareness. Journal of Service Management, 33(2), 293–320.

- Formosa, P. (2021). Robot autonomy vs. human autonomy: Social robots, artificial intelligence (AI), and the nature of autonomy. Minds and Machines, 31(4), 595–616.

- Frank, D. A., & Otterbring, T. (2024). Autonomy, power and the special case of scarcity: Consumer adoption of highly autonomous artificial intelligence. British Journal of Management, 35(4), 1700–1723.

- Gaggioli, A., Riva, G., Peters, D., & Calvo, R. A. (2017). Positive technology, computing, and design: Shaping a future in which technology promotes psychological well-being. In Emotions and affect in human factors and human–computer interaction (pp. 477–502). Academic Press.

- Gefen, D., Karahanna, E., & Straub, D. W. (2003). Trust and TAM in online shopping: An integrated model. MIS Quarterly, 27(1), 51–90.

- Glikson, E., & Woolley, A. W. (2020). Human trust in artificial intelligence: Review of empirical research. Academy of Management Annals, 14(2), 627–660.

- Goddard, K., Roudsari, A., & Wyatt, J. C. (2012). Automation bias: A systematic review of frequency, effect mediators, and mitigators. Journal of the American Medical Informatics Association, 19(1), 121–127.

- Goodie, A. S., & Fortune, E. E. (2013). Measuring cognitive distortions in pathological gambling: Review and meta-analyses. Psychology of Addictive Behaviors: Journal of the Society of Psychologists in Addictive Behaviors, 27(3), 730–743.

- Grote, T., & Berens, P. (2020). On the ethics of algorithmic decision-making in healthcare. Journal of Medical Ethics, 46(3), 205–211.

- Hu, H. F., & Krishen, A. S. (2019). When is enough, enough? Investigating product reviews and information overload from a consumer empowerment perspective. Journal of Business Research, 100, 27–37.

- Hyman, M. R., Kostyk, A., & Trafimow, D. (2023). True consumer autonomy: A formalization and implications. Journal of Business Ethics, 183(3), 841–863.

- Kelly, S., Kaye, S. A., & Oviedo-Trespalacios, O. (2023). What factors contribute to the acceptance of artificial intelligence? A systematic review. Telematics and Informatics, 77, 101925.

- Kim, S. S., & Malhotra, N. (2005). A longitudinal model of continued IS use: An integrative view of four mechanisms underlying post-adoption phenomena. Management Science, 51(5), 741–755.

- Kirk, A. (2024). Consumer autonomy and the charter of fundamental rights. Journal of European Consumer and Market Law, 13(4), 170–178. Available online: https://kluwerlawonline.com/journalarticle/Journal+of+European+Consumer+and+Market+Law/13.4/EuCML2024023 (accessed on 2 January 2025).

- Lee, J. Y., & Kim, J. G. (2006). Comparing data qualities of on-line panel and off-line interview surveys: Reliability and validity. Korean Journal of Marketing, 21(4), 209–231.

- Logg, J. M., Minson, J. A., & Moore, D. A. (2019). Algorithm appreciation: People prefer algorithmic to human judgment. Organizational Behavior and Human Decision Processes, 151, 90–103.

- Mackenzie, C. (2014). Three dimensions of autonomy: A relational analysis. Oxford University Press.

- McDougall, R. J. (2019). Computer knows best? The need for value-flexibility in medical AI. Journal of Medical Ethics, 45(3), 156–160.

- Mollman, S. (2022). ChatGPT has gained 1 million followers in a single week. Here’s why the AI chatbot is primed to disrupt search as we know it. Yahoo Finance. Available online: https://finance.yahoo.com/news/chatgpt-gained-1-million-followers-224523258.html (accessed on 3 January 2025).

- Moore, S. M., & Ohtsuka, K. (1999). Beliefs about control over gambling among young people, and their relation to problem gambling. Psychology of Addictive Behaviors, 13(4), 339–347.

- Nizette, F., Hammedi, W., van Riel, A. C. R., & Steils, N. (2025). Why should I trust you? Influence of explanation design on consumer behavior in AI-based services. Journal of Service Management, 36(1), 50–74.

- Petit, N. (2017). Law and regulation of artificial intelligence and robots: Conceptual framework and normative implications. SSRN Electronic Journal.

- Pirhonen, J., Melkas, H., Laitinen, A., & Pekkarinen, S. (2020). Could robots strengthen the sense of autonomy of older people residing in assisted living facilities? A future-oriented study. Ethics and Information Technology, 22(2), 151–162.

- Prunkl, C. (2024). Human autonomy at risk? An analysis of the challenges from AI. Minds and Machines, 34(3), 26.

- Ryan, R. M., & Deci, E. L. (2000). Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. American Psychologist, 55(1), 68–78.

- Sankaran, S., Zhang, C., Aarts, H., & Markopoulos, P. (2021). Exploring peoples’ perception of autonomy and reactance in everyday AI interactions. Frontiers in Psychology, 12, 713074.

- Schmidt, P., Biessmann, F., & Teubner, T. (2020). Transparency and trust in artificial intelligence systems. Journal of Decision Systems, 29(4), 260–278.

- Schneider, S., Nebel, S., Beege, M., & Rey, G. D. (2018). The autonomy-enhancing effects of choice on cognitive load, motivation and learning with digital media. Learning and Instruction, 58, 161–172.

- Shin, D., & Park, Y. J. (2019). Role of fairness, accountability, and transparency in algorithmic affordance. Computers in Human Behavior, 98, 277–284.

- Singh, P., & Singh, V. (2024). The power of AI: Enhancing customer loyalty through satisfaction and efficiency. Cogent Business & Management, 11(1), 2326107.

- Sundar, S. S. (2008). The MAIN model: A heuristic approach to understanding technology effects on credibility. In M. J. Metzger, & A. J. Flanagin (Eds.), Digital media, youth, and credibility (The John D. and Catherine T. MacArthur Foundation Series on Digital Media and Learning). The MIT Press.

- Susser, D., Roessler, B., & Nissenbaum, H. (2018). Online manipulation: Hidden influences in a digital world. Georgetown Law Technology Review, 4(1), 1–45.

- Venkatesh, V., & Davis, F. D. (2000). A theoretical extension of the technology acceptance model: Four longitudinal field studies. Management Science, 46(2), 186–204. Available online: https://www.jstor.org/stable/2634758 (accessed on 1 January 2025).

- Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425–478.

- Wertenbroch, K., Schrift, R. Y., Alba, J. W., Barasch, A., Bhattacharjee, A., Giesler, M., Knobe, J., Lehmann, D. R., Matz, S., Nave, G., Parker, J. R., Puntoni, S., Zheng, Y., & Zwebner, Y. (2020). Autonomy in consumer choice. Marketing Letters, 31(4), 429–439.

- Xia, Q., Chiu, T. K. F., Lee, M., Sanusi, I. T., Dai, Y., & Chai, C. S. (2022). A Self-Determination Theory (SDT) design approach for inclusive and diverse Artificial Intelligence (AI) education. Computers and Education, 189, 104582.

- Xiao, B., & Benbasat, I. (2018). An empirical examination of the influence of biased personalized product recommendations on consumers’ decision making outcomes. Decision Support Systems, 110(6), 46–57.

- Xie, Y., Shuping, Z., Zhou, P., & Liang, C. (2023). Understanding continued use intention of AI assistants. Journal of Computer Information Systems, 63(6), 1424–1437.

- Yan, Y., Fan, W., Shao, B., & Lei, Y. (2022). The impact of perceived control and power on adolescents’ acceptance intention of intelligent online services. Frontiers in Psychology, 13, 1013436.

- Zhang, C., Sankaran, S., & Aarts, H. (2023). A functional analysis of personal autonomy: How restricting ‘what’, ‘when’, and ‘how’ affects experienced agency and goal motivation. European Journal of Social Psychology, 53(3), 567–584.

| | Total Sample (N = 332) | Group 1 (N = 90) | Group 2 (N = 68) | Group 3 (N = 87) | Group 4 (N = 87) | χ²/F |
|---|---|---|---|---|---|---|
| Gender (%) | | | | | | 0.749 |
| Male | 38.3 | 38.9 | 33.8 | 39.1 | 40.2 | |
| Female | 61.7 | 61.1 | 66.2 | 60.9 | 59.8 | |
| Age (M, SD) | 38.57 (11.03) | 38.82 (10.81) | 37.69 (11.87) | 38.61 (10.60) | 38.95 (11.17) | 0.194 |
| Education (%) | | | | | | 7.585 |
| High school graduate | 19.3 | 12.2 | 26.5 | 23.0 | 17.2 | |
| College graduate | 70.8 | 74.4 | 63.2 | 69.0 | 74.7 | |
| Postgraduate degree | 9.9 | 13.3 | 10.3 | 8.0 | 8.0 | |
| Household income (KRW 10k, M, SD) | 327.94 (202.11) | 339.40 (177.67) | 286.28 (178.74) | 334.34 (210.82) | 342.24 (231.20) | 1.237 |
| Freq. of generative AI usage (%) | | | | | | 14.331 |
| Several times a day | 25.9 | 22.2 | 26.5 | 27.6 | 27.6 | |
| Once a day (daily) | 10.2 | 11.1 | 8.8 | 8.0 | 12.6 | |
| 5–6 times a week | 7.5 | 5.6 | 5.9 | 6.9 | 11.5 | |
| 3–4 times a week | 15.1 | 22.2 | 11.8 | 14.9 | 10.3 | |
| 1–2 times a week | 20.5 | 18.9 | 17.6 | 23.0 | 21.8 | |
| 1–3 times a month | 15.4 | 15.6 | 22.1 | 12.6 | 12.6 | |
| Less than once a month | 5.4 | 4.4 | 7.4 | 6.9 | 3.4 | |
| Objective competence (generative AI, M, SD) | 4.27 (1.26) | 4.24 (1.32) | 4.15 (1.18) | 4.32 (1.31) | 4.36 (1.21) | 0.413 |
| Subjective competence (generative AI, M, SD) | 5.39 (0.78) | 5.42 (0.76) | 5.25 (0.80) | 5.39 (0.78) | 5.46 (0.80) | 0.931 |
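The χ² statistics in the Study 1 sample-characteristics table (N = 332) test whether categorical characteristics are balanced across the four groups. As a minimal sketch, assuming a Pearson χ² test on a groups × categories count table, the gender statistic can be approximately recovered by backing out counts from the rounded percentages; those counts are an assumption, since only percentages are printed.

```python
def pearson_chi_square(table: list[list[int]]) -> float:
    """Pearson chi-square statistic for an r x c table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    return chi2


# Group sizes and % male as printed in the Study 1 sample table (Groups 1-4).
group_sizes = [90, 68, 87, 87]
male_pct = [38.9, 33.8, 39.1, 40.2]

# Assumption: reconstruct counts from the rounded percentages.
males = [round(n * p / 100) for n, p in zip(group_sizes, male_pct)]
counts = [[m, n - m] for m, n in zip(males, group_sizes)]  # [male, female] per group

chi2 = pearson_chi_square(counts)
df = (len(counts) - 1) * (len(counts[0]) - 1)
print(f"chi2({df}) = {chi2:.3f}")  # prints chi2(3) = 0.749
```

The reconstructed counts reproduce the reported value of 0.749, which supports reading the gender row as a 4 × 2 Pearson test with 3 degrees of freedom.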

| | Task difficulty | Perceived autonomy | Trust | Satisfaction | Usage intention |
|---|---|---|---|---|---|
| Task difficulty | 1.000 | | | | |
| Perceived autonomy | 0.242 *** | 1.000 | | | |
| Trust | 0.156 ** | 0.520 *** | 1.000 | | |
| Satisfaction | 0.165 ** | 0.619 *** | 0.692 *** | 1.000 | |
| Usage intention | 0.313 *** | 0.554 *** | 0.550 *** | 0.687 *** | 1.000 |
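Significance markers on a correlation follow from the t statistic t = r√(n − 2)/√(1 − r²). The sketch below checks the Study 1 correlations with task difficulty (N = 332). It assumes the common convention *** p < 0.001, ** p < 0.01, * p < 0.05, which the surviving text does not state, and it approximates the t distribution by the standard normal, which is close at 330 degrees of freedom.

```python
import math


def correlation_t(r: float, n: int) -> float:
    """t statistic for H0: rho = 0 (df = n - 2)."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


def approx_stars(r: float, n: int) -> str:
    """Significance stars from an approximate two-sided p-value.

    The t distribution is approximated by the standard normal, which is
    close for large df. An exact p-value would use the t distribution
    instead (e.g., 2 * scipy.stats.t.sf(t, n - 2)).
    """
    t = abs(correlation_t(r, n))
    p = math.erfc(t / math.sqrt(2))  # two-sided standard-normal tail probability
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""


# Correlations of task difficulty with the other Study 1 variables (N = 332).
pairs = [("autonomy", 0.242), ("trust", 0.156), ("satisfaction", 0.165), ("usage intention", 0.313)]
for label, r in pairs:
    print(f"{label}: r = {r:.3f}, t = {correlation_t(r, 332):.2f} {approx_stars(r, 332)}")
```

Under these assumptions the markers match the table: r = 0.156 and 0.165 fall between the 0.01 and 0.001 thresholds, while 0.242 and 0.313 clear 0.001.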

| | Without Interaction Terms | | | | | | With Interaction Terms | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Dependent variable | Trust | | Satisfaction | | Usage intention | | Trust | | Satisfaction | | Usage intention | |
| | b | SE | b | SE | b | SE | b | SE | b | SE | b | SE |
| Intercept | 1.056 ** | 0.398 | 1.204 ** | 0.388 | 1.085 ** | 0.364 | 1.348 ** | 0.478 | 1.577 ** | 0.467 | 1.473 ** | 0.437 |
| Gender (male = 0) | −0.115 | 0.083 | −0.030 | 0.081 | −0.073 | 0.076 | −0.115 | 0.083 | −0.029 | 0.081 | −0.070 | 0.076 |
| Age | 0.013 ** | 0.004 | 0.002 | 0.004 | 0.006 | 0.004 | 0.014 ** | 0.004 | 0.002 | 0.004 | 0.006 | 0.004 |
| Monthly income (KRW 10k) | −0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | −0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| High school (university = 0) | −0.014 | 0.105 | −0.020 | 0.102 | 0.060 | 0.096 | −0.006 | 0.105 | −0.011 | 0.102 | 0.066 | 0.096 |
| Graduate (university = 0) | 0.053 | 0.128 | 0.073 | 0.125 | −0.136 | 0.117 | 0.039 | 0.128 | 0.061 | 0.125 | −0.141 | 0.117 |
| Daily usage (week = 0) | −0.061 | 0.086 | 0.034 | 0.084 | 0.308 *** | 0.079 | −0.059 | 0.086 | 0.035 | 0.084 | 0.308 *** | 0.079 |
| Monthly usage (week = 0) | 0.038 | 0.102 | −0.107 | 0.099 | −0.040 | 0.093 | 0.040 | 0.102 | −0.099 | 0.100 | −0.028 | 0.093 |
| Objective competence | −0.013 | 0.032 | −0.038 | 0.031 | 0.053 | 0.029 | −0.013 | 0.032 | −0.040 | 0.031 | 0.049 | 0.029 |
| Subjective competence | 0.211 *** | 0.053 | 0.193 *** | 0.052 | 0.272 *** | 0.049 | 0.215 *** | 0.054 | 0.195 *** | 0.052 | 0.272 *** | 0.049 |
| Perceived autonomy | 0.492 *** | 0.053 | 0.619 *** | 0.051 | 0.460 *** | 0.048 | 0.439 *** | 0.072 | 0.551 *** | 0.070 | 0.388 *** | 0.066 |
| Task difficulty (low = 0) | | | | | | | −0.573 | 0.503 | −0.716 | 0.490 | −0.729 | 0.459 |
| Difficulty × autonomy | | | | | | | 0.094 | 0.095 | 0.129 | 0.093 | 0.143 | 0.087 |
| F | 16.397 *** | | 23.150 *** | | 25.101 *** | | 13.862 *** | | 19.499 *** | | 21.193 *** | |
| Adj. R² | 0.318 | | 0.401 | | 0.421 | | 0.318 | | 0.401 | | 0.423 | |
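In the interaction models of the Study 1 regression table, task difficulty is a dummy (low = 0, high = 1), so the slope of perceived autonomy is b_autonomy under low difficulty and b_autonomy + b_interaction under high difficulty. The sketch below computes these simple slopes from the printed coefficients. It assumes the predictors entered the model uncentered, as the table's coding suggests; if autonomy was mean-centered, `autonomy` in `difficulty_effect` must be on the centered scale.

```python
# Interaction-model coefficients from the Study 1 regression table
# (task difficulty coded low = 0, high = 1).
MODELS = {
    "trust": {"autonomy": 0.439, "difficulty": -0.573, "interaction": 0.094},
    "satisfaction": {"autonomy": 0.551, "difficulty": -0.716, "interaction": 0.129},
    "usage_intention": {"autonomy": 0.388, "difficulty": -0.729, "interaction": 0.143},
}


def autonomy_slope(coefs: dict[str, float], difficulty: int) -> float:
    """Simple slope of perceived autonomy at a difficulty level (0 = low, 1 = high)."""
    return coefs["autonomy"] + coefs["interaction"] * difficulty


def difficulty_effect(coefs: dict[str, float], autonomy: float) -> float:
    """Conditional effect of high vs. low difficulty at a given autonomy score."""
    return coefs["difficulty"] + coefs["interaction"] * autonomy


for dv, coefs in MODELS.items():
    low, high = autonomy_slope(coefs, 0), autonomy_slope(coefs, 1)
    print(f"{dv}: autonomy slope {low:.3f} (low) vs {high:.3f} (high difficulty)")
```

The slopes are nominally steeper under high difficulty (for trust, 0.439 vs. 0.533), but because none of the interaction coefficients carries a significance marker, the difference between them should not be read as reliable.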

| | Total Sample (N = 376) | Group 1 (N = 95) | Group 2 (N = 96) | Group 3 (N = 94) | Group 4 (N = 91) | χ²/F |
|---|---|---|---|---|---|---|
| Gender (%) | | | | | | 2.971 |
| Male | 40.2 | 45.3 | 35.4 | 43.6 | 36.3 | |
| Female | 59.8 | 54.7 | 64.6 | 56.4 | 63.7 | |
| Age (M, SD) | 39.40 (10.77) | 39.61 (11.06) | 39.16 (10.74) | 39.52 (10.06) | 39.32 (11.37) | 0.034 |
| Education (%) | | | | | | 12.743 * |
| High school graduate | 12.5 | 10.5 | 17.7 | 9.6 | 12.1 | |
| College graduate | 73.9 | 72.6 | 70.8 | 70.2 | 82.4 | |
| Postgraduate degree | 13.6 | 16.8 | 11.5 | 20.2 | 5.5 | |
| Household income (KRW 10k, M, SD) | 337.16 (198.55) | 347.05 (218.17) | 316.13 (170.06) | 347.80 (186.25) | 338.05 (217.89) | 0.526 |
| Freq. of generative AI usage (%) | | | | | | 22.539 |
| Several times a day | 26.6 | 27.4 | 19.8 | 28.7 | 30.8 | |
| Once a day (daily) | 7.7 | 10.5 | 8.3 | 6.4 | 5.5 | |
| 5–6 times a week | 6.9 | 9.5 | 5.2 | 6.4 | 6.6 | |
| 3–4 times a week | 20.7 | 21.1 | 25.0 | 21.3 | 15.4 | |
| 1–2 times a week | 19.1 | 20.0 | 18.8 | 11.7 | 26.4 | |
| 1–3 times a month | 14.4 | 10.5 | 17.7 | 20.2 | 8.8 | |
| Less than once a month | 4.5 | 1.1 | 5.2 | 5.3 | 6.6 | |
| Objective competence (generative AI, M, SD) | 4.28 (1.25) | 4.52 (1.15) | 4.17 (1.32) | 4.12 (1.30) | 4.31 (1.19) | 1.960 |
| Subjective competence (generative AI, M, SD) | 5.25 (0.74) | 5.31 (0.68) | 5.17 (0.70) | 5.28 (0.80) | 5.25 (0.79) | 0.574 |
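The F values for continuous characteristics in the sample tables are consistent with a one-way ANOVA across the four groups. The sketch below recomputes F from the printed group sizes, means, and SDs. It assumes a standard one-way ANOVA; the helper `anova_f_from_summary` is illustrative, not the authors' code.

```python
def anova_f_from_summary(ns: list[int], means: list[float], sds: list[float]) -> float:
    """One-way ANOVA F statistic from group sizes, means, and SDs.

    SS_between = sum(n_g * (mean_g - grand_mean)**2), df = k - 1
    SS_within  = sum((n_g - 1) * sd_g**2),            df = N - k
    """
    k, total_n = len(ns), sum(ns)
    grand_mean = sum(n * m for n, m in zip(ns, means)) / total_n
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m in zip(ns, means))
    ss_within = sum((n - 1) * s**2 for n, s in zip(ns, sds))
    return (ss_between / (k - 1)) / (ss_within / (total_n - k))


# Age by group (Groups 1-4) as printed in the Study 2 sample table.
f_age_study2 = anova_f_from_summary(
    ns=[95, 96, 94, 91],
    means=[39.61, 39.16, 39.52, 39.32],
    sds=[11.06, 10.74, 10.06, 11.37],
)
# The same check for age in the Study 1 sample table.
f_age_study1 = anova_f_from_summary(
    ns=[90, 68, 87, 87],
    means=[38.82, 37.69, 38.61, 38.95],
    sds=[10.81, 11.87, 10.60, 11.17],
)
print(f"Study 2 age F = {f_age_study2:.3f}; Study 1 age F = {f_age_study1:.3f}")
```

The recomputed values (roughly 0.033 and 0.193) sit close to the reported 0.034 and 0.194. Small discrepancies are expected because the published means and SDs are rounded to two decimals.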

| | Explainability | Feedback | Shared responsibility |
|---|---|---|---|
| Group 1 (n = 95) | 5.11 a (0.91) | 4.38 a (1.41) | 4.05 b (1.53) |
| Group 2 (n = 96) | 5.24 b (0.81) | 4.42 a (1.31) | 4.13 b (1.35) |
| Group 3 (n = 94) | 4.93 a (1.01) | 4.78 b (1.25) | 3.63 a (1.58) |
| Group 4 (n = 91) | 4.86 a (0.99) | 4.02 a (1.48) | 5.29 c (1.21) |
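A manipulation is supported when the group that received a design element scores highest on the matching check, and the letters in the Study 2 manipulation-check table appear to mark subsets from a post hoc comparison (groups sharing a letter do not differ). The sketch below checks only the descriptive part of that pattern; it is not a substitute for the significance tests. The target-group mapping follows the Study 2 design.

```python
# Manipulation-check means from the Study 2 table (Groups 1-4, in order).
MEANS = {
    "explainability": [5.11, 5.24, 4.93, 4.86],
    "feedback": [4.38, 4.42, 4.78, 4.02],
    "shared_responsibility": [4.05, 4.13, 3.63, 5.29],
}
# Group that received each design element (1-based, per the Study 2 design).
TARGET_GROUP = {"explainability": 2, "feedback": 3, "shared_responsibility": 4}


def highest_group(means: list[float]) -> int:
    """Return the 1-based index of the group with the highest mean."""
    return max(range(len(means)), key=means.__getitem__) + 1


for scale, means in MEANS.items():
    top = highest_group(means)
    status = "matches" if top == TARGET_GROUP[scale] else "does not match"
    print(f"{scale}: highest in Group {top}, {status} target Group {TARGET_GROUP[scale]}")
```

Each target group has the highest mean on its own check, and in each column it is also the only group carrying its letter, which is consistent with a successful manipulation.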

| | Perceived consumer autonomy | Trust | Satisfaction | Continued usage intention |
|---|---|---|---|---|
| Perceived consumer autonomy | 1.000 | | | |
| Trust | 0.631 *** | 1.000 | | |
| Satisfaction | 0.600 *** | 0.670 *** | 1.000 | |
| Continued usage intention | 0.551 *** | 0.588 *** | 0.650 *** | 1.000 |

| | Group 1 (Control) | | | Group 2 (Explainability) | | | Group 3 (Feedback) | | | Group 4 (Shared Responsibility) | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Dependent variable | Trust | Satisfaction | Usage intention | Trust | Satisfaction | Usage intention | Trust | Satisfaction | Usage intention | Trust | Satisfaction | Usage intention |
| Intercept | 0.708 (0.816) | 0.745 (0.850) | 1.453 (0.839) | 0.174 (0.684) | 1.995 ** (0.732) | 1.787 (0.945) | −0.228 (0.662) | 1.147 * (0.530) | 1.165 * (0.537) | 1.363 (0.793) | 0.809 (0.692) | −0.003 (0.771) |
| Gender (male = 0) | −0.231 (0.145) | −0.151 (0.153) | 0.009 (0.151) | −0.423 ** (0.147) | 0.044 (0.165) | −0.050 (0.213) | 0.086 (0.160) | −0.185 (0.128) | −0.132 (0.130) | −0.198 (0.162) | 0.168 (0.140) | 0.282 (0.156) |
| Age | 0.013 (0.008) | 0.005 (0.008) | −0.008 (0.008) | 0.002 (0.007) | −0.012 (0.007) | −0.013 (0.009) | 0.017 (0.009) | −0.016 * (0.008) | −0.009 (0.008) | 0.020 * (0.008) | 0.000 (0.007) | 0.007 (0.008) |
| Monthly income (KRW 10k) | −0.001 (0.000) | −0.000 (0.000) | 0.000 (0.000) | −0.000 (0.000) | 0.000 (0.000) | 0.001 (0.001) | 0.001 (0.001) | 0.000 (0.000) | −0.000 (0.000) | −0.001 (0.000) | −0.001 (0.000) | 0.000 (0.000) |
| High school (university = 0) | −0.240 (0.257) | −0.396 (0.268) | −0.016 (0.265) | 0.092 (0.167) | 0.045 (0.179) | −0.082 (0.231) | −0.143 (0.258) | 0.096 (0.207) | −0.065 (0.210) | −0.121 (0.245) | −0.468 * (0.211) | −0.104 (0.235) |
| Graduate (university = 0) | −0.024 (0.188) | −0.262 (0.195) | −0.090 (0.192) | 0.269 (0.181) | −0.005 (0.197) | −0.163 (0.254) | −0.149 (0.189) | 0.283 (0.152) | 0.315 * (0.154) | 0.338 (0.345) | 0.294 (0.297) | 0.313 (0.331) |
| Week usage (daily = 0) | −0.259 (0.156) | 0.108 (0.164) | −0.035 (0.162) | 0.068 (0.147) | −0.069 (0.157) | −0.184 (0.203) | −0.040 (0.176) | −0.067 (0.141) | −0.184 (0.143) | 0.009 (0.170) | 0.008 (0.146) | −0.176 (0.163) |
| Monthly usage (daily = 0) | −0.068 (0.237) | 0.191 (0.246) | −0.212 (0.243) | 0.079 (0.172) | −0.251 (0.184) | −0.179 (0.238) | −0.064 (0.194) | −0.051 (0.155) | −0.393 * (0.157) | 0.008 (0.250) | 0.140 (0.214) | −0.340 (0.238) |
| Objective competence | 0.032 (0.063) | 0.115 (0.066) | 0.037 (0.065) | −0.045 (0.045) | 0.009 (0.048) | 0.130 * (0.062) | −0.021 (0.060) | 0.093 (0.048) | 0.161 ** (0.049) | −0.077 (0.072) | 0.022 (0.062) | −0.004 (0.069) |
| Subjective competence | 0.087 (0.113) | −0.002 (0.118) | 0.282 * (0.117) | 0.320 ** (0.103) | −0.027 (0.116) | 0.089 (0.150) | 0.259 * (0.110) | 0.042 (0.091) | 0.140 (0.092) | 0.262 * (0.113) | 0.029 (0.100) | 0.311 ** (0.112) |
| Perceived consumer autonomy | 0.713 *** (0.105) | 0.317 * (0.136) | 0.044 (0.134) | 0.675 *** (0.093) | 0.058 (0.127) | 0.273 (0.163) | 0.614 *** (0.107) | 0.444 *** (0.101) | 0.349 ** (0.103) | 0.372 *** (0.098) | 0.416 *** (0.091) | 0.315 ** (0.102) |
| Trust | - | 0.452 *** (0.113) | 0.457 *** (0.112) | - | 0.685 *** (0.116) | 0.343 * (0.150) | - | 0.411 *** (0.088) | 0.319 *** (0.089) | - | 0.426 *** (0.096) | 0.401 *** (0.107) |
| F | 8.157 *** | 6.514 *** | 5.516 *** | 16.369 *** | 10.322 *** | 5.494 *** | 8.618 *** | 15.289 *** | 14.417 *** | 4.616 *** | 9.316 *** | 9.020 *** |
| Adj. R² | 0.432 | 0.392 | 0.346 | 0.618 | 0.519 | 0.342 | 0.450 | 0.628 | 0.613 | 0.287 | 0.504 | 0.495 |
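Because each group's Study 2 table regresses trust on perceived consumer autonomy (path a) and then satisfaction on both autonomy and trust (path b), the coefficients can be read in product-of-coefficients terms: the indirect effect of autonomy on satisfaction through trust is a × b. The sketch below computes a × b and a Sobel z for each group from the printed coefficients and standard errors. This is an illustrative re-reading, not necessarily the authors' analysis; bootstrapped confidence intervals are generally preferred over the Sobel test, which assumes a normally distributed product.

```python
import math


def sobel(a: float, se_a: float, b: float, se_b: float) -> tuple[float, float]:
    """Product-of-coefficients indirect effect a*b and its Sobel z statistic."""
    indirect = a * b
    se = math.sqrt(b**2 * se_a**2 + a**2 * se_b**2)
    return indirect, indirect / se


# (a, SE_a, b, SE_b): a = autonomy -> trust; b = trust -> satisfaction,
# both taken from the Study 2 table for each group.
PATHS = {
    "Group 1 (Control)": (0.713, 0.105, 0.452, 0.113),
    "Group 2 (Explainability)": (0.675, 0.093, 0.685, 0.116),
    "Group 3 (Feedback)": (0.614, 0.107, 0.411, 0.088),
    "Group 4 (Shared Responsibility)": (0.372, 0.098, 0.426, 0.096),
}

for group, (a, se_a, b, se_b) in PATHS.items():
    ab, z = sobel(a, se_a, b, se_b)
    print(f"{group}: indirect = {ab:.3f}, Sobel z = {z:.2f}")
```

The same function applies to usage intention by swapping in the trust → usage-intention coefficients for b.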

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Han, J.; Ko, D. Consumer Autonomy in Generative AI Services: The Role of Task Difficulty and AI Design Elements in Enhancing Trust, Satisfaction, and Usage Intention. Behav. Sci. 2025, 15, 534. https://doi.org/10.3390/bs15040534

Han J, Ko D. Consumer Autonomy in Generative AI Services: The Role of Task Difficulty and AI Design Elements in Enhancing Trust, Satisfaction, and Usage Intention. Behavioral Sciences. 2025; 15(4):534. https://doi.org/10.3390/bs15040534

Chicago/Turabian style: Han, Jihyung, and Daekyun Ko. 2025. "Consumer Autonomy in Generative AI Services: The Role of Task Difficulty and AI Design Elements in Enhancing Trust, Satisfaction, and Usage Intention" Behavioral Sciences 15, no. 4: 534. https://doi.org/10.3390/bs15040534

APA style: Han, J., & Ko, D. (2025). Consumer Autonomy in Generative AI Services: The Role of Task Difficulty and AI Design Elements in Enhancing Trust, Satisfaction, and Usage Intention. Behavioral Sciences, 15(4), 534. https://doi.org/10.3390/bs15040534