EEG-Based BCI System to Detect Fingers Movements

Abstract

1. Introduction

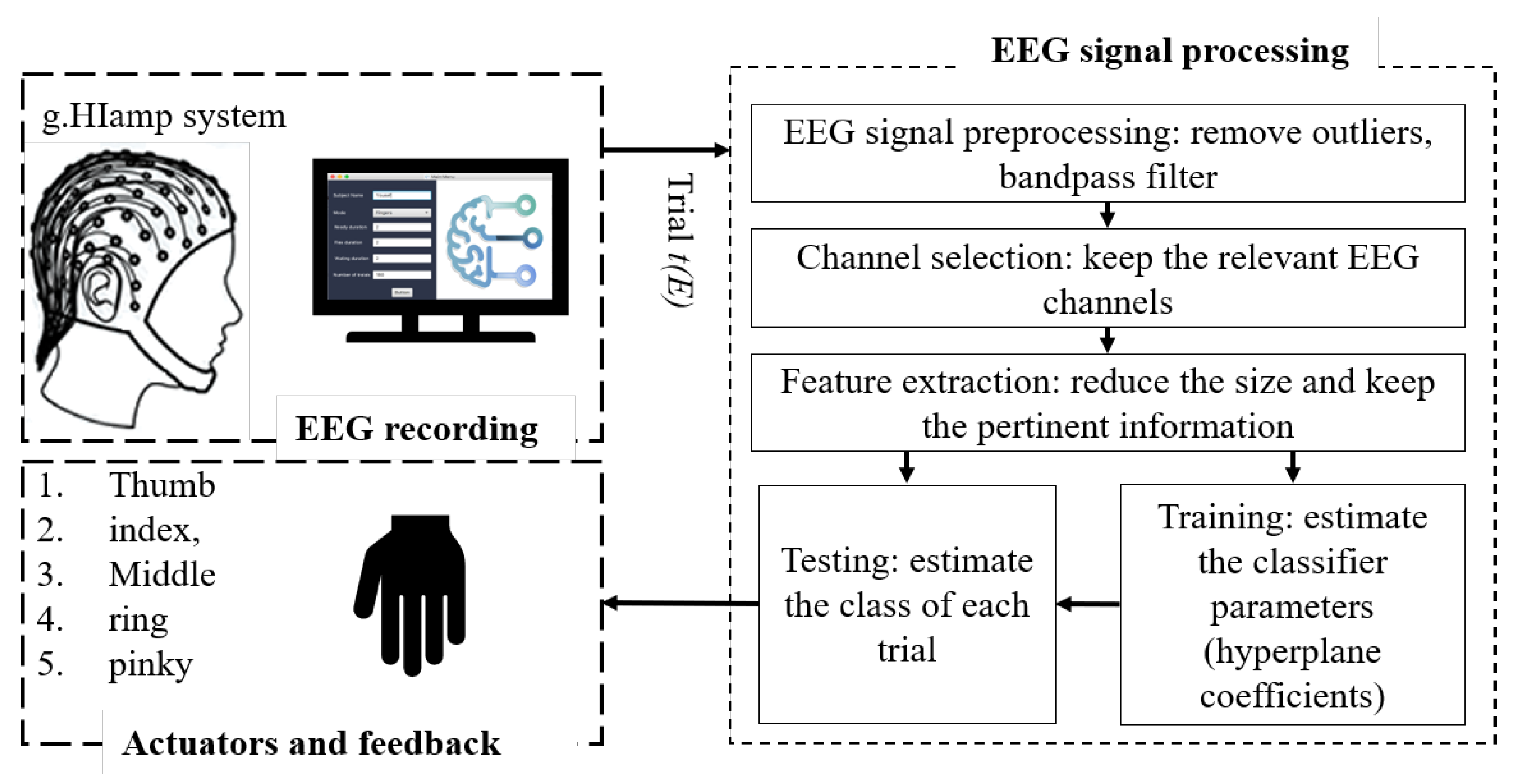

2. Materials and Methods

2.1. Data Acquisition

2.1.1. EEG Signal Acquisition

2.1.2. Experimental Paradigm

2.1.3. Monitoring the Recording Sessions

- Get ready phase: the scenario program randomly selected a finger/limb movement and displayed the corresponding animated picture (GIF file) on the screen.

- Action phase: during this phase, the subject moved the selected finger/limb.

- Rest phase: during this phase, the subject was in a resting state.
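The cue sequence above can be sketched as a randomized schedule. This is a hypothetical illustration, not the scenario program used in the study. The phase durations are caller-supplied assumptions with no values taken from the paper, and only the five fingers listed in Section 2.3.1 are used as movements because the limb movements are not enumerated.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Hypothetical movement set: the five fingers of Section 2.3.1 (limb movements omitted).
MOVEMENTS = ["thumb", "index", "middle", "ring", "pinky"]
PHASES = ("get_ready", "action", "rest")


@dataclass
class ScheduledTrial:
    movement: str                           # movement cued by the animated GIF
    phases: List[Tuple[str, float, float]]  # (phase, start s, end s) within the trial


def build_schedule(n_trials: int, durations: Dict[str, float],
                   seed: Optional[int] = None) -> List[ScheduledTrial]:
    """Draw a random movement for each trial and lay out its three phases.

    `durations` maps each phase name to a length in seconds; the values are
    caller-supplied assumptions, not the timings used in the study.
    """
    rng = random.Random(seed)
    schedule = []
    for _ in range(n_trials):
        movement = rng.choice(MOVEMENTS)  # get-ready phase: pick the cue to display
        start = 0.0
        phases = []
        for name in PHASES:
            end = start + durations[name]
            phases.append((name, start, end))
            start = end
        schedule.append(ScheduledTrial(movement, phases))
    return schedule


if __name__ == "__main__":
    # Hypothetical durations, for illustration only.
    for trial in build_schedule(3, {"get_ready": 2.0, "action": 3.0, "rest": 2.0}, seed=0):
        print(trial.movement, trial.phases)
```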

2.1.4. Labeling Signals

2.2. Artifacts Removal

2.3. Selection of Relevant Electrodes

2.3.1. Annotations

- An electrode (e) is an electrical conductor used to acquire brain signals.

- E is the set of electrodes (e) on a cap.

- A motor imagery (m), also called the motor imagery task, is a mental process by which an individual simulates a given movement action.

- The set of motor imagery tasks considered here consists of the imagined movements of the thumb, index, middle, ring, and pinky fingers.

- A trial (t) is a set of brain signals that are recorded with a set of electrodes E during a given motor imagery task m.

- The set of all recorded trials t.

- The set of subjects, from each of whom a set of trials was recorded.

- A rest is the set of brain signals, recorded with the electrodes in E, that corresponds to the mental state during a resting period. In this study, the resting period of a trial t is the portion of t recorded between 0 and 1 s.
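These notions can be captured in a small Python data structure. This is a minimal sketch, assuming each trial is stored as an electrodes × samples NumPy array with a known sampling rate; the class name, field names, and electrode labels are hypothetical. The `rest` method returns the 0–1 s portion of the trial, as defined above.

```python
from dataclasses import dataclass
from typing import Tuple

import numpy as np


@dataclass
class Trial:
    """One trial t: signals from every electrode in E during one motor imagery task m."""
    subject: str                  # subject the trial was recorded from
    task: str                     # motor imagery task m, e.g. "thumb"
    signals: np.ndarray           # shape (n_electrodes, n_samples)
    fs: float                     # sampling rate in Hz (assumed known)
    electrodes: Tuple[str, ...]   # electrode labels, one per row of `signals`

    def __post_init__(self) -> None:
        if self.signals.shape[0] != len(self.electrodes):
            raise ValueError("one electrode label is needed per signal row")

    def rest(self) -> np.ndarray:
        """Resting period of the trial: the samples recorded between 0 and 1 s."""
        n_rest = int(round(self.fs))  # number of samples in one second
        return self.signals[:, :n_rest]


# Usage with synthetic data and placeholder electrode labels.
trial = Trial("subject_1", "thumb", np.zeros((3, 500)), fs=250.0,
              electrodes=("e1", "e2", "e3"))
print(trial.rest().shape)  # (3, 250)
```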

2.3.2. Preliminaries

- For a given motor imagery task m, this function returns the subset of trials that were recorded during m, i.e., every trial t that was recorded while the subject performed m.

- For a given subject, this function returns the subset of trials recorded during that subject's sessions, i.e., every trial t that was recorded in a session of the subject.

- The power (also called the energy) of electrode e in trial t is computed from a spectral representation of t obtained with the fast Fourier transform (FFT). The FFT power spectrum of each trial is available online in the Supplementary Materials (Folder S1).

- The percentage of power increase or decrease of electrode e during trial t is measured relative to a reference power, i.e., 100 × (power of e in t − reference power) / reference power.
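The preliminary functions can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the function names are hypothetical, and because the paper's power expression is not reproduced here, `power` assumes the common definition of the sum of squared FFT magnitudes divided by N², which by Parseval's theorem equals the mean squared amplitude of the signal.

```python
from dataclasses import dataclass
from typing import List

import numpy as np


@dataclass
class Trial:
    subject: str
    task: str
    signals: np.ndarray  # shape (n_electrodes, n_samples)


def trials_for_task(trials: List[Trial], task: str) -> List[Trial]:
    """Subset of trials recorded during motor imagery task `task`."""
    return [t for t in trials if t.task == task]


def trials_for_subject(trials: List[Trial], subject: str) -> List[Trial]:
    """Subset of trials recorded during the sessions of `subject`."""
    return [t for t in trials if t.subject == subject]


def power(x: np.ndarray) -> np.ndarray:
    """Power of each electrode (row) from its FFT spectrum.

    Assumed definition: sum of |FFT|^2 divided by N^2, which by Parseval's
    theorem equals the mean squared amplitude of the signal.
    """
    n = x.shape[-1]
    return np.sum(np.abs(np.fft.fft(x, axis=-1)) ** 2, axis=-1) / n ** 2


def percent_change(p, ref):
    """Percentage power increase (> 0) or decrease (< 0) relative to `ref`."""
    return 100.0 * (p - ref) / ref


x = np.array([[1.0, -1.0, 1.0, -1.0], [2.0, 2.0, 2.0, 2.0]])
print(power(x))                  # [1. 4.]
print(percent_change(1.5, 1.0))  # 50.0
```

A positive percentage corresponds to a power increase (event-related synchronization) and a negative one to a power decrease (event-related desynchronization).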

2.3.3. The Selection Models

- This function returns the subset of trials recorded during the sessions of a given subject while the subject performed the motor imagery task m, i.e., the trials that belong to both subsets defined in Section 2.3.2.

- This function returns the subset of electrodes of E at which the subject's brain activity changes significantly during the motor imagery task m. Brain activity variations during a given motor imagery task are considered significant if they exceed the variation in power at a reference electrode during the same task.

- Here, ref_Power(·, e) is the average power of electrode e over the distinct rest periods of the considered set of trials, i.e., the sum of the rest-period powers of e divided by the number of trials in that set.

- For the reference electrode, one electrode was selected as the reference for all electrodes located over the left hemisphere, and another was selected as the reference for all electrodes located over the right hemisphere.

- The trial counts used in these averages are the total numbers of trials in the corresponding trial sets.
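The electrode selection model can be sketched for one subject and one task m at a time. This is a hedged sketch, not the authors' implementation, and it rests on several assumptions: power is computed as the sum of squared FFT magnitudes divided by N²; the task power of a trial is taken from the samples after the 0–1 s rest period; "exceeds" compares the magnitudes of the mean percentage changes; and the reference electrode for each hemisphere is supplied through a hypothetical `reference_of` mapping, since the electrode names are not given here.

```python
import numpy as np


def power(x):
    """Per-electrode power via the FFT: sum |X|^2 / N^2 (assumed definition)."""
    n = x.shape[-1]
    return np.sum(np.abs(np.fft.fft(x, axis=-1)) ** 2, axis=-1) / n ** 2


def select_electrodes(trials, fs, electrodes, reference_of):
    """Electrodes whose mean power change exceeds that of their hemisphere's reference.

    trials       : arrays of shape (n_electrodes, n_samples) for ONE subject and ONE task m
    fs           : sampling rate in Hz
    electrodes   : electrode labels, one per row
    reference_of : hypothetical mapping {electrode label: reference electrode label}
    """
    n_rest = int(round(fs))  # rest period = first second of each trial
    # ref_Power(., e): average rest-period power of each electrode over the trials
    ref_power = np.mean([power(t[:, :n_rest]) for t in trials], axis=0)
    # Assumption: the task power of a trial is taken from the samples after the rest period.
    task_power = np.array([power(t[:, n_rest:]) for t in trials])
    change = np.mean(100.0 * (task_power - ref_power) / ref_power, axis=0)
    row = {label: i for i, label in enumerate(electrodes)}
    selected = []
    for label in electrodes:
        ref = reference_of.get(label)
        if ref is None or ref == label:
            continue  # skip reference electrodes and electrodes without a reference
        # Assumption: "exceeds" compares magnitudes, so decreases and increases both count.
        if abs(change[row[label]]) > abs(change[row[ref]]):
            selected.append(label)
    return selected
```

Calling `select_electrodes` once per (subject, task) pair yields one electrode subset per motor imagery task, mirroring the selection function described above.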

2.4. Feature Extraction

2.5. Finger Movements Classification

2.6. Prosthesis Control

3. Results

Algorithm 1: Commented algorithm of the basic steps of the feature extraction and classification process.

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BCI | Brain–computer interface |
| SVM | Support vector machine |
| LDA | Linear discriminant analysis |
| RF | Random forest |
| kNN | k-nearest neighbors |
| HCI | Human–computer interaction |
| EEG | Electroencephalogram |
| MEG | Magnetoencephalography |
| ECoG | Electrocorticogram |
| fNIRS | Functional near-infrared spectroscopy |
| EMG | Electromyography |
| UN | United Nations |
| ERPs | Event-related potentials |
| ERD/ERS | Event-related desynchronization/synchronization |
| SMR | Sensorimotor rhythms |
| CWD | Choi–Williams distribution |
| 2LCF | Two-layer classification framework |
| CSP | Common spatial pattern |
| CAR | Common average reference |
| LSTM | Long short-term memory |
| CNN | Convolutional neural network |
| RCNN | Recurrent convolutional neural network |
| PCA | Principal component analysis |
| PSD | Power spectral density |
| SNR | Signal-to-noise ratio |
| SPI | Scenario program interface |

References

1. Gannouni, S.; Alangari, N.; Mathkour, H.; Aboalsamh, H.; Belwafi, K. BCWB: A P300 brain-controlled web browser. Int. J. Semant. Web Inf. Syst. 2017, 13, 55–73.

2. Mudgal, S.K.; Sharma, S.K.; Chaturvedi, J.; Sharma, A. Brain computer interface advancement in neurosciences: Applications and issues. Interdiscip. Neurosurg. 2020, 20, 100694.

3. Pulliam, C.L.; Stanslaski, S.R.; Denison, T.J. Chapter 25—Industrial perspectives on brain-computer interface technology. In Brain-Computer Interfaces; Handbook of Clinical Neurology; Ramsey, N.F., del R. Millán, J., Eds.; Elsevier: Amsterdam, The Netherlands, 2020; Volume 168, pp. 341–352.

4. Zhang, F.; Yu, H.; Jiang, J.; Wang, Z.; Qin, X. Brain–computer control interface design for virtual household appliances based on steady-state visually evoked potential recognition. Vis. Inform. 2020, 4, 1–7.

5. AL-Quraishi, M.; Elamvazuthi, I.; Daud, S.; Parasuraman, S.; Borboni, A. EEG-Based Control for Upper and Lower Limb Exoskeletons and Prostheses: A Systematic Review. Sensors 2018, 18, 3342.

6. Tayeb, Z.; Fedjaev, J.; Ghaboosi, N.; Richter, C.; Everding, L.; Qu, X.; Wu, Y.; Cheng, G.; Conradt, J. Validating Deep Neural Networks for Online Decoding of Motor Imagery Movements from EEG Signals. Sensors 2019, 19, 210.

7. Jia, T.; Liu, K.; Lu, Y.; Liu, Y.; Li, C.; Ji, L.; Qian, C. Small-Dimension Feature Matrix Construction Method for Decoding Repetitive Finger Movements From Electroencephalogram Signals. IEEE Access 2020, 8, 56060–56071.

8. Alazrai, R.; Alwanni, H.; Daoud, M.I. EEG-based BCI system for decoding finger movements within the same hand. Neurosci. Lett. 2019, 698, 113–120.

9. Anam, K.; Nuh, M.; Al-Jumaily, A. Comparison of EEG Pattern Recognition of Motor Imagery for Finger Movement Classification. In Proceedings of the 2019 6th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Bandung, Indonesia, 18–20 September 2019.

10. Liao, K.; Xiao, R.; Gonzalez, J.; Ding, L. Decoding Individual Finger Movements from One Hand Using Human EEG Signals. PLoS ONE 2014, 9, e85192.

11. Yong, X.; Li, Y.; Menon, C. The Use of an MEG/fMRI-Compatible Finger Motion Sensor in Detecting Different Finger Actions. Front. Bioeng. Biotechnol. 2016, 3.

12. Quandt, F.; Reichert, C.; Hinrichs, H.; Heinze, H.; Knight, R.; Rieger, J. Single trial discrimination of individual finger movements on one hand: A combined MEG and EEG study. NeuroImage 2012, 59, 3316–3324.

13. Xie, Z.; Schwartz, O.; Prasad, A. Decoding of finger trajectory from ECoG using deep learning. J. Neural Eng. 2018, 15, 036009.

14. Branco, M.P.; Freudenburg, Z.V.; Aarnoutse, E.J.; Bleichner, M.G.; Vansteensel, M.J.; Ramsey, N.F. Decoding hand gestures from primary somatosensory cortex using high-density ECoG. NeuroImage 2017, 147, 130–142.

15. Onaran, I.; Ince, N.F.; Cetin, A.E. Classification of multichannel ECoG related to individual finger movements with redundant spatial projections. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011.

16. Lee, S.H.; Jin, S.H.; Jang, G.; Lee, Y.J.; An, J.; Shik, H.K. Cortical activation pattern for finger movement: A feasibility study towards a fNIRS based BCI. In Proceedings of the 2015 10th Asian Control Conference (ASCC), Kota Kinabalu, Malaysia, 31 May–3 June 2015.

17. Soltanmoradi, M.A.; Azimirad, V.; Hajibabazadeh, M. Detecting finger movement through classification of electromyography signals for use in control of robots. In Proceedings of the 2014 Second RSI/ISM International Conference on Robotics and Mechatronics (ICRoM), Tehran, Iran, 15–17 October 2014.

18. Pfurtscheller, G.; da Silva, F.L. Event-related EEG/MEG synchronization and desynchronization: Basic principles. Clin. Neurophysiol. 1999, 110, 1842–1857.

19. Belwafi, K.; Romain, O.; Gannouni, S.; Ghaffari, F.; Djemal, R.; Ouni, B. An embedded implementation based on adaptive filter bank for brain-computer interface systems. J. Neurosci. Methods 2018, 305, 1–16.

20. Edelman, B.J.; Baxter, B.; He, B. EEG Source Imaging Enhances the Decoding of Complex Right-Hand Motor Imagery Tasks. IEEE Trans. Biomed. Eng. 2016, 63, 4–14.

21. Zapała, D.; Zabielska-Mendyk, E.; Augustynowicz, P.; Cudo, A.; Jaśkiewicz, M.; Szewczyk, M.; Kopiś, N.; Francuz, P. The effects of handedness on sensorimotor rhythm desynchronization and motor-imagery BCI control. Sci. Rep. 2020, 10.

22. Belwafi, K.; Djemal, R.; Ghaffari, F.; Romain, O. An adaptive EEG filtering approach to maximize the classification accuracy in motor imagery. In Proceedings of the 2014 IEEE Symposium on Computational Intelligence, Cognitive Algorithms, Mind, and Brain (CCMB), Orlando, FL, USA, 9–12 December 2014.

23. Mitra, S.K. Digital Signal Processing; Wcb/McGraw-Hill: Boston, MA, USA, 2010.

24. Lepage, K.Q.; Kramer, M.A.; Chu, C.J. A statistically robust EEG re-referencing procedure to mitigate reference effect. J. Neurosci. Methods 2014, 235, 101–116.

25. Lotte, F.; Guan, C. Regularizing Common Spatial Patterns to Improve BCI Designs: Unified Theory and New Algorithms. IEEE Trans. Biomed. Eng. 2011, 58, 355–362.

26. Pfurtscheller, G.; Neuper, C. Motor imagery and direct brain-computer communication. Proc. IEEE 2001, 89, 1123–1134.

(a) Results: raw accuracy and the accuracy, precision, recall, and F-measure of the proposed method (%)

| Finger | Raw accuracy | Accuracy | Precision | Recall | F-measure |
|---|---|---|---|---|---|
| Thumb | 50 | 80.35 | 85.71 | 83.33 | 84.50 |
| Index | 60.70 | 80.35 | 90.32 | 77.77 | 83.58 |
| Middle | 57.14 | 83.92 | 86.48 | 88.88 | 87.67 |
| Ring | 55.35 | 85.71 | 91.17 | 86.11 | 88.57 |
| Pinky | 57.14 | 87.50 | 93.93 | 86.11 | 89.85 |

(b) Results: raw accuracy and the accuracy, precision, recall, and F-measure of the proposed method (%)

| Finger | Raw accuracy | Accuracy | Precision | Recall | F-measure |
|---|---|---|---|---|---|
| Thumb | 66.07 | 73.21 | 81.81 | 75 | 78.26 |
| Index | 58.18 | 85.45 | 93.54 | 82.85 | 87.87 |
| Middle | 61.81 | 89.09 | 91.42 | 91.42 | 91.42 |
| Ring | 56.36 | 83.63 | 96.42 | 77.14 | 85.71 |
| Pinky | 60.71 | 73.21 | 86.20 | 69.44 | 76.92 |

(c) Results: raw accuracy and the accuracy, precision, recall, and F-measure of the proposed method (%)

| Finger | Raw accuracy | Accuracy | Precision | Recall | F-measure |
|---|---|---|---|---|---|
| Thumb | 58.62 | 75.86 | 78.57 | 86.84 | 82.50 |
| Index | 53.44 | 79.31 | 82.50 | 86.84 | 84.61 |
| Middle | 55.17 | 89.65 | 88.09 | 97.36 | 92.50 |
| Ring | 53.44 | 84.48 | 87.17 | 89.47 | 88.31 |
| Pinky | 42.1 | 85.96 | 91.42 | 86.48 | 88.88 |

(d) Results: raw accuracy and the accuracy, precision, recall, and F-measure of the proposed method (%)

| Finger | Raw accuracy | Accuracy | Precision | Recall | F-measure |
|---|---|---|---|---|---|
| Thumb | 75 | 69.64 | 74.35 | 80.55 | 77.33 |
| Index | 60.71 | 85.71 | 85 | 94.44 | 89.47 |
| Middle | 50 | 83.92 | 81.39 | 97.22 | 88.60 |
| Ring | 56.36 | 72.72 | 72.72 | 91.42 | 81.01 |
| Pinky | 56.36 | 78.18 | 78.04 | 91.42 | 84.21 |

(e) Results: raw accuracy and the accuracy, precision, recall, and F-measure of the proposed method (%)

| Finger | Raw accuracy | Accuracy | Precision | Recall | F-measure |
|---|---|---|---|---|---|
| Thumb | 51.78 | 76.78 | 81.08 | 83.33 | 82.19 |
| Index | 60.71 | 83.92 | 82.92 | 94.44 | 88.31 |
| Middle | 58.92 | 76.78 | 75.55 | 94.44 | 83.95 |
| Ring | 67.85 | 78.57 | 76.08 | 97.22 | 85.36 |
| Pinky | 60.71 | 76.78 | 76.74 | 91.66 | 83.54 |
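The F_Measure column agrees with the standard F1 score, the harmonic mean of precision and recall, F = 2PR/(P + R). Assuming that definition, any entry can be checked from the precision and recall columns; for example, for the thumb in table (a):

```python
def f_measure(precision: float, recall: float) -> float:
    """F1 score: harmonic mean of precision and recall (same units as the inputs)."""
    return 2.0 * precision * recall / (precision + recall)


print(round(f_measure(85.71, 83.33), 2))  # 84.5
```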

(f) Summary of the accuracy (%) of the proposed method by subject

| Finger | (a) | (b) | (c) | (d) | (e) |
|---|---|---|---|---|---|
| Thumb | 80.35 | 73.21 | 75.86 | 69.64 | 76.78 |
| Index | 80.35 | 85.45 | 79.31 | 85.71 | 83.92 |
| Middle | 83.92 | 89.09 | 89.65 | 83.92 | 76.78 |
| Ring | 85.71 | 83.63 | 84.48 | 72.72 | 78.57 |
| Pinky | 87.50 | 73.21 | 85.96 | 78.18 | 76.78 |
| Average | 83.56 | 80.91 | 83.05 | 78.03 | 78.56 |
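The Average row is the mean of the five per-finger accuracies in each subject column, and recomputing it is a quick consistency check. With the values above, subject (c) averages about 83.05% and the mean over the five subjects is about 80.83%, in line with the 81% reported for the proposed method in the comparison with other studies.

```python
# Per-finger accuracies (%) from the summary table, one list per subject column (a)-(e).
# Finger order: thumb, index, middle, ring, pinky.
accuracy = {
    "a": [80.35, 80.35, 83.92, 85.71, 87.50],
    "b": [73.21, 85.45, 89.09, 83.63, 73.21],
    "c": [75.86, 79.31, 89.65, 84.48, 85.96],
    "d": [69.64, 85.71, 83.92, 72.72, 78.18],
    "e": [76.78, 83.92, 76.78, 78.57, 76.78],
}

averages = {subject: sum(v) / len(v) for subject, v in accuracy.items()}
overall = sum(averages.values()) / len(averages)

for subject, avg in averages.items():
    print(f"({subject}) {avg:.2f}")
print(f"overall {overall:.2f}")
```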

| Study | No. of fingers | Signal processing chain | No. of subjects | Accuracy (%) |
|---|---|---|---|---|
| [7] | 2 | Band-pass filter & ERD/ERS & SVM | 10 | ≈62.5 |
| [8] | 4 | CWD & 2LCF | 18 | 43.5 |
| [9] | 5 | RF & LDA & SVM & kNN | 4 | 54 |
| [10] | 5 | PCA & PSD & SVM | 11 | 77 |
| Proposed method | 5 | Band-pass filter & CAR & CSP & LDA | 5 | 81 |
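The comparison lists the proposed processing chain as band-pass filter, CAR, CSP, and LDA. The sketch below is one possible NumPy realization of that chain for a single binary decision (for example, one finger against the others); it is not the authors' implementation. The FFT-mask band-pass filter, the trace-normalized covariances, the single CSP filter pair, and the one-vs-rest framing are all assumptions.

```python
import numpy as np


def bandpass_fft(x, fs, lo, hi):
    """Keep only FFT bins in [lo, hi] Hz (a simple stand-in for a band-pass filter)."""
    spec = np.fft.rfft(x, axis=-1)
    freqs = np.fft.rfftfreq(x.shape[-1], d=1.0 / fs)
    spec[..., (freqs < lo) | (freqs > hi)] = 0.0
    return np.fft.irfft(spec, n=x.shape[-1], axis=-1)


def car(x):
    """Common average reference: subtract the across-electrode mean at every sample."""
    return x - x.mean(axis=0, keepdims=True)


def _cov(x):
    c = x @ x.T
    return c / np.trace(c)  # trace-normalized spatial covariance


def fit_csp(trials_a, trials_b, n_pairs=1):
    """CSP spatial filters separating two classes of (n_electrodes, n_samples) trials."""
    ca = np.mean([_cov(t) for t in trials_a], axis=0)
    cb = np.mean([_cov(t) for t in trials_b], axis=0)
    evals, evecs = np.linalg.eigh(ca + cb)
    keep = evals > 1e-10 * evals.max()  # drop the null direction introduced by CAR
    whiten = (evecs[:, keep] / np.sqrt(evals[keep])).T
    _, b = np.linalg.eigh(whiten @ ca @ whiten.T)  # ascending share of class-a variance
    w = b.T @ whiten
    return np.vstack([w[:n_pairs], w[-n_pairs:]])


def csp_features(w, x):
    """Normalized log-variance of the spatially filtered trial."""
    var = np.var(w @ x, axis=1)
    return np.log(var / var.sum())


def fit_lda(fa, fb):
    """Two-class Fisher LDA; predict class a when f @ w > threshold."""
    mu_a, mu_b = fa.mean(axis=0), fb.mean(axis=0)
    sw = np.cov(fa, rowvar=False) + np.cov(fb, rowvar=False)
    sw += 1e-9 * np.eye(sw.shape[0])  # tiny ridge for numerical stability
    w = np.linalg.solve(sw, mu_a - mu_b)
    return w, float(w @ (mu_a + mu_b) / 2)
```

To use it, preprocess each trial with `car(bandpass_fft(x, fs, lo, hi))` for a chosen band (8–30 Hz is a common motor-rhythm choice, not a value from this paper), fit `fit_csp` on the two classes, compute `csp_features` per trial, and train `fit_lda` on the resulting feature arrays. CSP is inherently a two-class method, which is why a five-finger problem needs a decomposition such as one-vs-rest.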

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gannouni, S.; Belwafi, K.; Aboalsamh, H.; AlSamhan, Z.; Alebdi, B.; Almassad, Y.; Alobaedallah, H. EEG-Based BCI System to Detect Fingers Movements. Brain Sci. 2020, 10, 965. https://doi.org/10.3390/brainsci10120965
