Watershed Brain Regions for Characterizing Brand Equity-Related Mental Processes

Abstract

1. Introduction

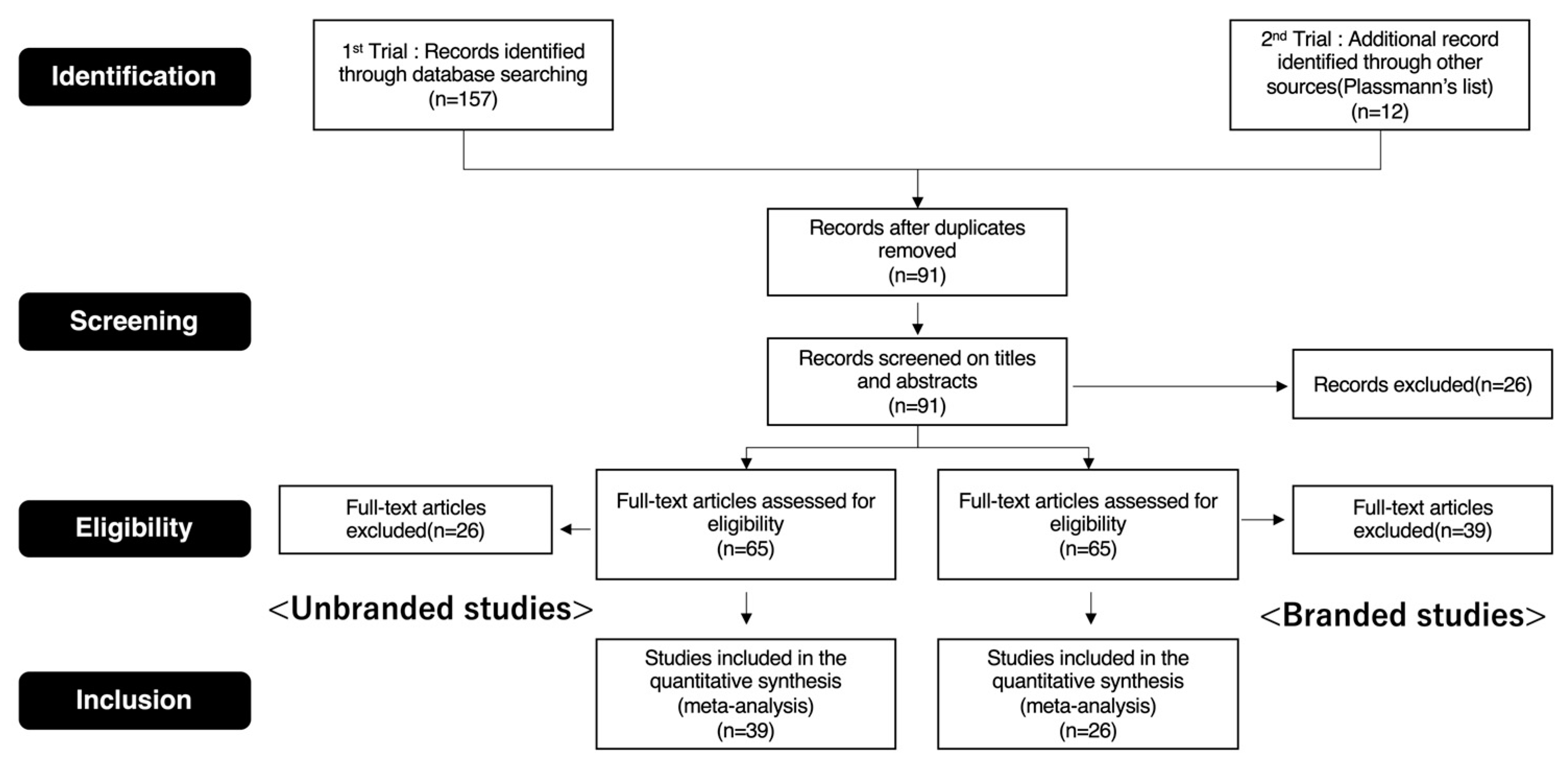

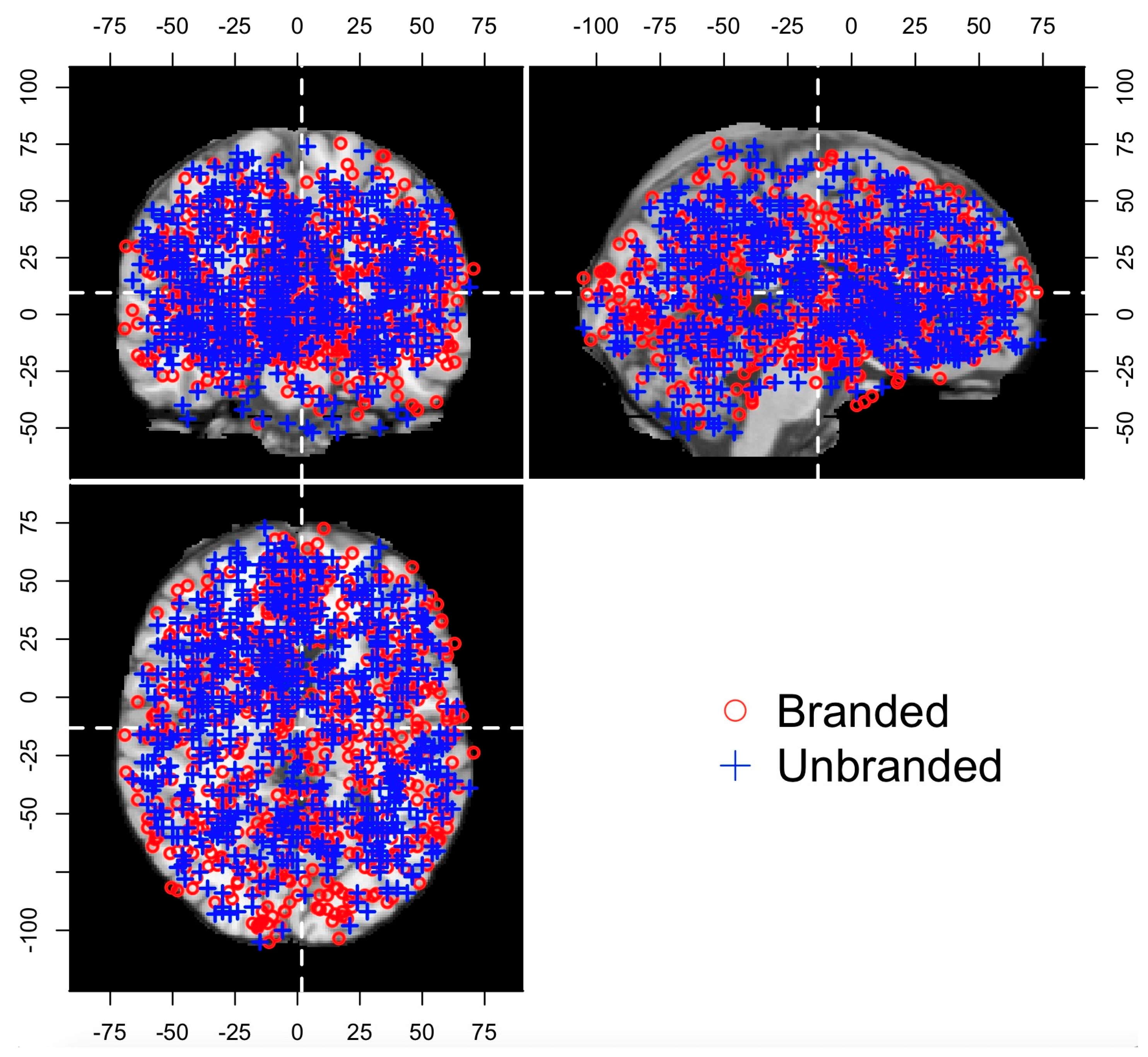

2. Materials and Methods

2.1. Procedures of the ALE Method

2.2. Procedures of the Chi-Square Test

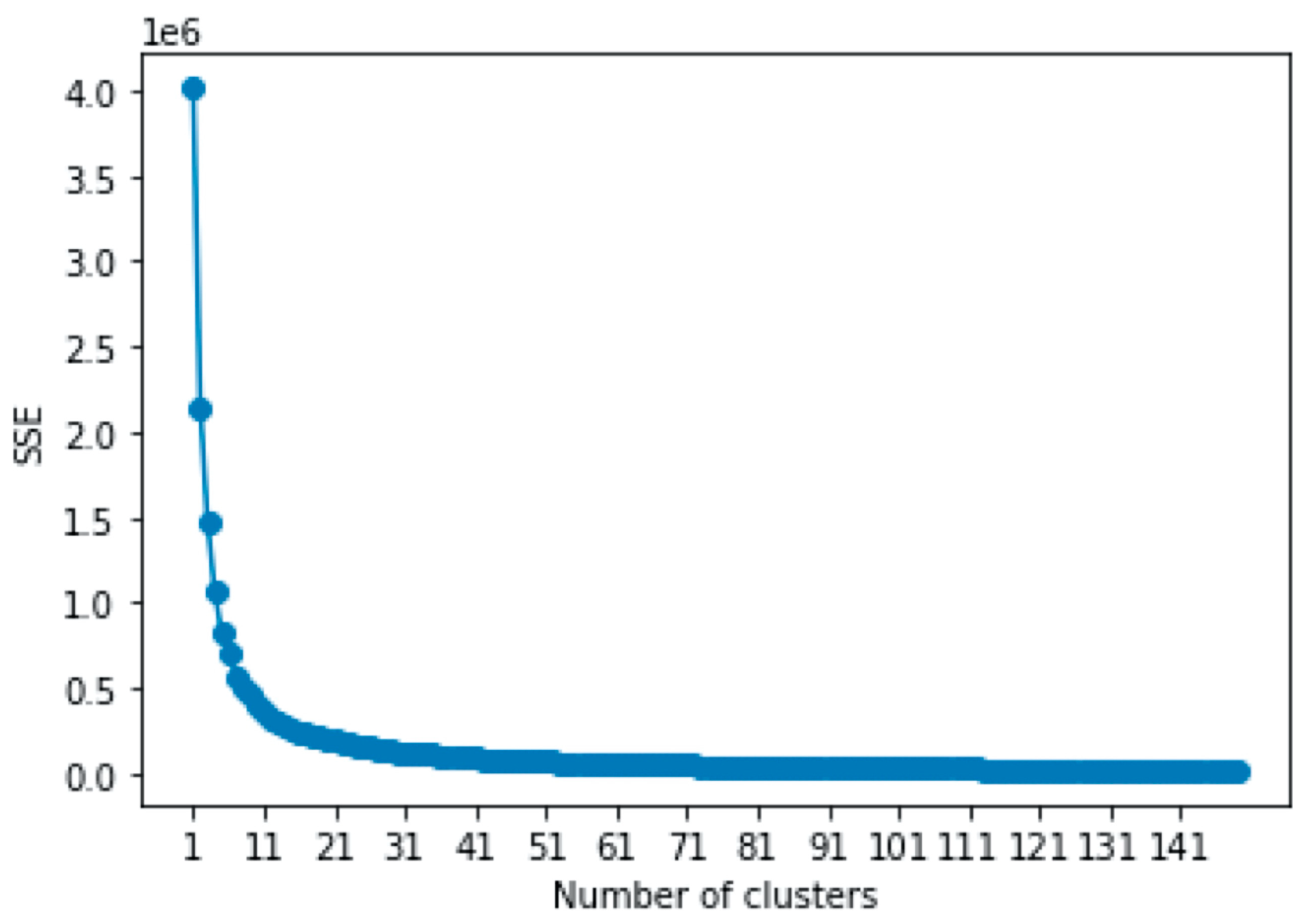

2.3. Procedures of Machine Learning

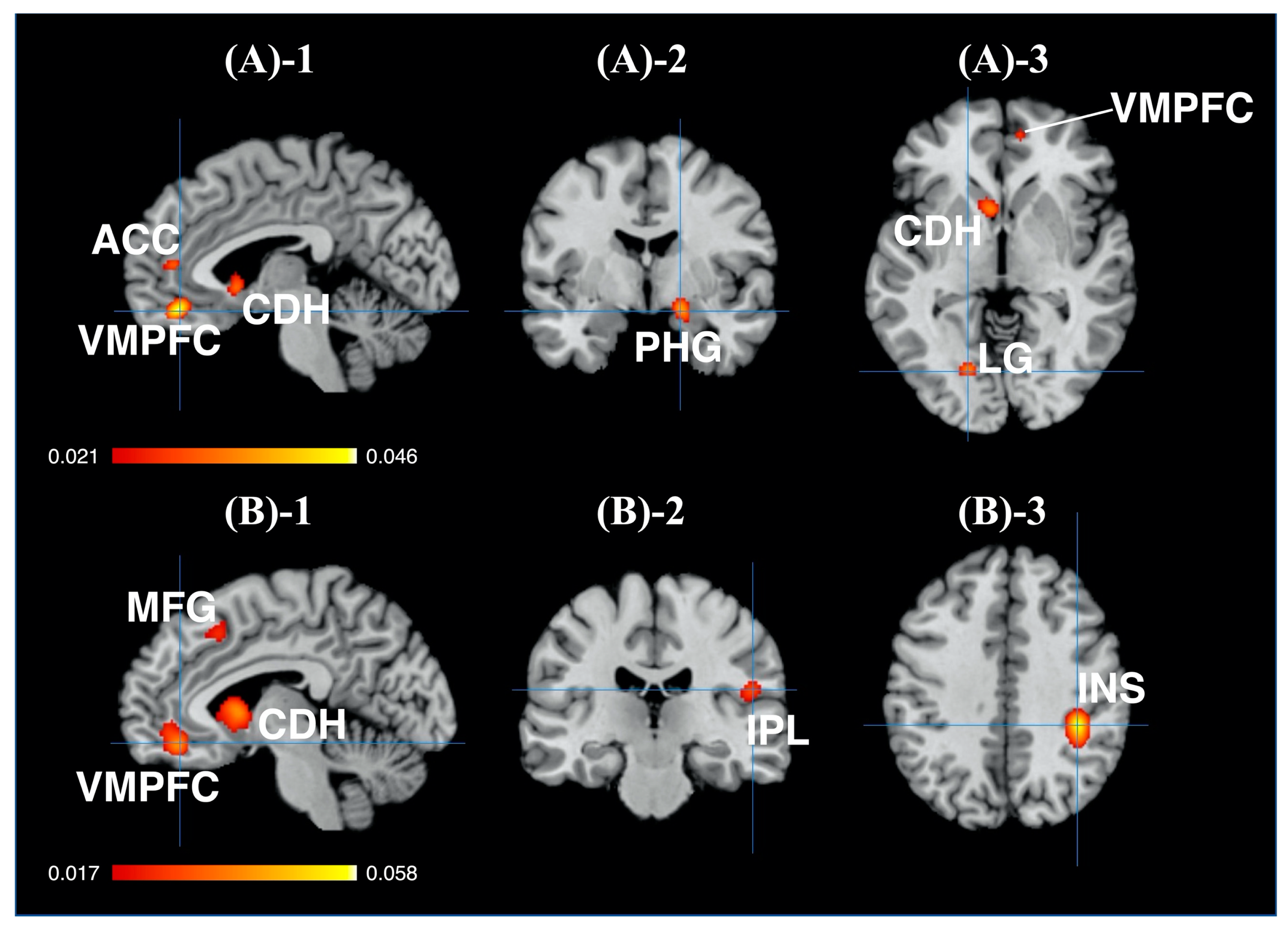

3. Results

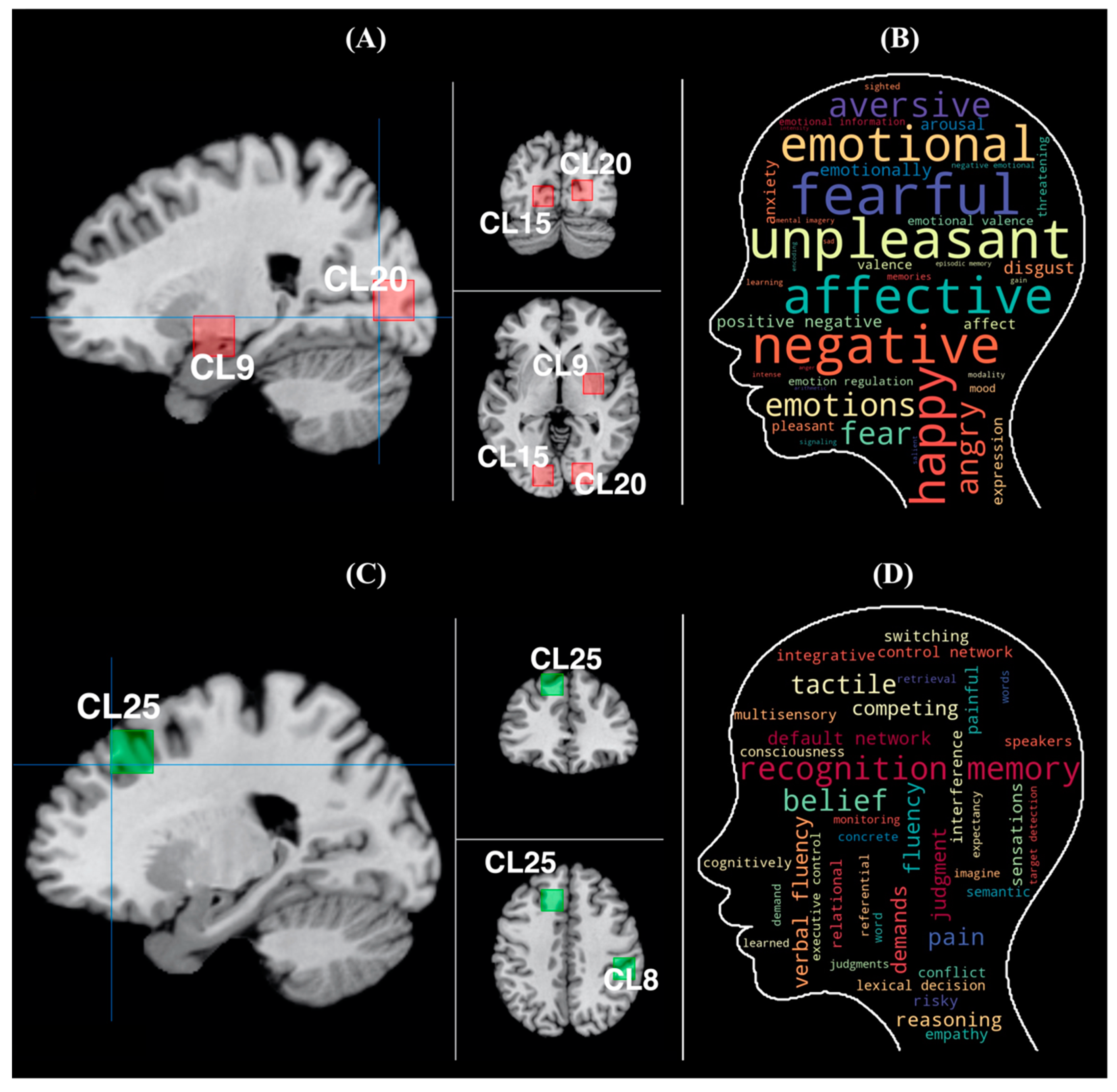

3.1. Results of the ALE

3.2. Results of the Chi-Square Test

3.3. Results of Machine Learning

4. Discussion

5. Conclusions

Supplementary Materials

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Database | Variables | Variable Type | N | Mean | SD | Median | Min | Max |
|---|---|---|---|---|---|---|---|---|
| ALL | x | Numerical | 1412 | 0.43 | 32.42 | −2 | −69.17 | 70.58 |
| ALL | y | Numerical | 1412 | −10.88 | 41.99 | −6 | −105.07 | 72.9 |
| ALL | z | Numerical | 1412 | 11.39 | 24.5 | 8 | −52 | 75.36 |
| ALL | Flag | Categorical | 1412 | - | - | - | - | - |
| Branded | x | Numerical | 679 | 1.74 | 32.04 | 0 | −69.17 | 70.58 |
| Branded | y | Numerical | 679 | −13.18 | 43.3 | −8 | −105.07 | 72.56 |
| Branded | z | Numerical | 679 | 9.57 | 23.7 | 6 | −48 | 75.36 |
| Branded | Flag | Categorical | 679 | - | - | - | - | - |
| Unbranded | x | Numerical | 733 | −0.78 | 32.74 | −3 | −66 | 69 |
| Unbranded | y | Numerical | 733 | −8.74 | 40.66 | −3 | −105 | 72.9 |
| Unbranded | z | Numerical | 733 | 13.08 | 25.12 | 11 | −52 | 74 |
| Unbranded | Flag | Categorical | 733 | - | - | - | - | - |

(a) Brand Equity-Related Brain Regions

| Cluster # | Side | Brain Region | BA | x (MNI) | y (MNI) | z (MNI) | ALE Value | Cluster Size (mm³) |
|---|---|---|---|---|---|---|---|---|
| 1 | L | ACC (rostral region/VMPFC) | 32 | −4 | 42 | −16 | 0.046 | 6368 |
| | L | ACC (MPFC) | 32 | −4 | 44 | 8 | 0.030 | |
| | R | ACC (rostral region/MPFC) | 32 | 10 | 50 | −6 | 0.027 | |
| | L | Medial Frontal Gyrus (MPFC) | 10 | −10 | 52 | 10 | 0.023 | |
| | L | Medial Frontal Gyrus (MPFC) | 10 | 0 | 58 | 6 | 0.021 | |
| 2 | R | PHG (entorhinal cortex) | 28 | 18 | −4 | −16 | 0.036 | 2216 |
| | R | PHG (hippocampus) | - | 30 | −18 | −18 | 0.020 | |
| 3 | L | Caudate Head (VS) | - | −6 | 12 | −4 | 0.034 | 1936 |
| 4 | R | PCC (retrosplenial region) | 30 | 6 | −52 | 16 | 0.026 | 1064 |
| | L | PCC (retrosplenial region) | 30 | −6 | −58 | 12 | 0.020 | |
| | L | PCC (retrosplenial region) | 29 | −4 | −50 | 14 | 0.019 | |
| 5 | L | Lingual Gyrus | 18 | −18 | −74 | −4 | 0.033 | 1032 |
| | L | Lingual Gyrus | 18 | −6 | −78 | −2 | 0.019 | |

(b) Unbranded Objects-Related Brain Regions

| Cluster # | Side | Brain Region | BA | x (MNI) | y (MNI) | z (MNI) | ALE Value | Cluster Size (mm³) |
|---|---|---|---|---|---|---|---|---|
| 1 | L | ACC (rostral region/VMPFC) | 32 | −6 | 40 | −14 | 0.0392 | 3912 |
| | L | ACC (MPFC) | 24 | −2 | 36 | 4 | 0.0299 | |
| | R | ACC (MPFC) | 32 | 4 | 44 | 2 | 0.0205 | |
| 2 | L | Caudate Head (VS) | - | −10 | 10 | 0 | 0.0448 | 3624 |
| 3 | R | Inferior Parietal Lobule | 40 | 38 | −36 | 38 | 0.0576 | 2560 |
| 4 | R | Caudate Head (VS) | - | 12 | 14 | −8 | 0.0300 | 1608 |
| | R | Caudate Body | - | 10 | 14 | 6 | 0.0254 | |
| 5 | R | Insula | 13 | 52 | −24 | 18 | 0.0286 | 1024 |
| 6 | L | Medial Frontal Gyrus | 6 | −4 | 20 | 44 | 0.0258 | 1000 |

| Cluster_ID | x (MNI) | y (MNI) | z (MNI) | L/R | Brain Regions |
|---|---|---|---|---|---|
| cl_0 | 15 | −59 | −36 | R | Pyramis |
| cl_1 | −1 | 44 | −10 | L | Medial Frontal Gyrus (VMPFC) |
| cl_2 | −40 | 9 | 35 | L | Middle Frontal Gyrus (BA6) |
| cl_3 | 0 | −54 | 11 | I | Posterior Cingulate |
| cl_4 | 48 | 8 | 24 | R | Inferior Frontal Gyrus |
| cl_5 | −2 | 10 | 3 | L | Lateral Ventricle |
| cl_6 | −38 | −57 | −12 | L | Fusiform Gyrus (BA37) |
| cl_7 | −47 | −4 | −4 | L | Superior Temporal Gyrus (BA22) |
| cl_8 | 48 | −32 | 32 | R | Inferior Parietal Lobule (BA40) |
| cl_9 | 30 | −5 | −11 | R | PHG |
| cl_10 | 24 | 37 | 36 | R | Superior Frontal Gyrus |
| cl_11 | −43 | −60 | 29 | L | Middle Temporal Gyrus |
| cl_12 | −39 | 31 | 2 | L | Inferior Frontal Gyrus |
| cl_13 | 41 | −48 | −8 | R | Sub-Gyral |
| cl_14 | −25 | 6 | −16 | L | Subcallosal Gyrus |
| cl_15 | −15 | −87 | 0 | L | Lingual Gyrus (BA17) |
| cl_16 | 4 | −57 | 47 | R | Precuneus (BA7) |
| cl_17 | 41 | 34 | 0 | R | Inferior Frontal Gyrus |
| cl_18 | −54 | −25 | 27 | L | Inferior Parietal Lobule |
| cl_19 | −12 | −23 | −9 | L | Midbrain |
| cl_20 | 20 | −85 | 5 | R | Lingual Gyrus (BA17) |
| cl_21 | 5 | −8 | 40 | R | Middle Cingulate Gyrus (BA24) |
| cl_22 | 40 | −64 | 30 | R | Angular Gyrus |
| cl_23 | −5 | 53 | 16 | L | Medial Frontal Gyrus (BA9) |
| cl_24 | −31 | −33 | 57 | L | Postcentral Gyrus |
| cl_25 | −13 | 26 | 46 | L | Superior Frontal Gyrus (BA8) |

| Cluster_ID | Contingency Table: Branded | Contingency Table: Unbranded | Adjusted Residual: Branded | Adjusted Residual: Unbranded | p-Value: Branded | p-Value: Unbranded |
|---|---|---|---|---|---|---|
| cl_0 | 17 | 25 | −1.0023 | 1.0023 | 0.3162 | 0.3162 |
| cl_1 | 37 | 46 | −0.6596 | 0.6596 | 0.5095 | 0.5095 |
| cl_2 | 19 | 25 | −0.6617 | 0.6617 | 0.5081 | 0.5081 |
| cl_3 | 29 | 23 | 1.1296 | −1.1296 | 0.2586 | 0.2586 |
| cl_4 | 29 | 22 | 1.2775 | −1.2775 | 0.2014 | 0.2014 |
| cl_5 | 36 | 52 | −1.3919 | 1.3919 | 0.164 | 0.164 |
| cl_6 | 29 | 31 | 0.0389 | −0.0389 | 0.969 | 0.969 |
| cl_7 | 23 | 26 | −0.1639 | 0.1639 | 0.8698 | 0.8698 |
| cl_8 | 29 | 50 | −2.0834 | 2.0834 | 0.0372 ** | 0.0372 ** |
| cl_9 | 54 | 29 | 3.1900 | −3.1900 | 0.0014 *** | 0.0014 *** |
| cl_10 | 20 | 25 | −0.4972 | 0.4972 | 0.6191 | 0.6191 |
| cl_11 | 21 | 22 | 0.0999 | −0.0999 | 0.9204 | 0.9204 |
| cl_12 | 26 | 37 | −1.1081 | 1.1081 | 0.2678 | 0.2678 |
| cl_13 | 28 | 25 | 0.7044 | −0.7044 | 0.4812 | 0.4812 |
| cl_14 | 19 | 23 | −0.3753 | 0.3753 | 0.7075 | 0.7075 |
| cl_15 | 34 | 15 | 3.0373 | −3.0373 | 0.0024 *** | 0.0024 *** |
| cl_16 | 19 | 30 | −1.3279 | 1.3279 | 0.1842 | 0.1842 |
| cl_17 | 28 | 39 | −1.0570 | 1.0570 | 0.2905 | 0.2905 |
| cl_18 | 14 | 24 | −1.4065 | 1.4065 | 0.1596 | 0.1596 |
| cl_19 | 24 | 16 | 1.5297 | −1.5297 | 0.1261 | 0.1261 |
| cl_20 | 29 | 7 | 3.9497 | −3.9497 | 0.0001 *** | 0.0001 *** |
| cl_21 | 21 | 27 | −0.6120 | 0.6120 | 0.5405 | 0.5405 |
| cl_22 | 12 | 24 | −1.7949 | 1.7949 | 0.0727 * | 0.0727 * |
| cl_23 | 43 | 34 | 1.4010 | −1.4010 | 0.1612 | 0.1612 |
| cl_24 | 10 | 18 | −1.3236 | 1.3236 | 0.1856 | 0.1856 |
| cl_25 | 29 | 38 | −0.8064 | 0.8064 | 0.42 | 0.42 |
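
The adjusted residuals and p-values in the table above can be approximately reproduced from the counts alone. The sketch below assumes the usual adjusted standardized residual for a contingency table and a two-sided standard normal p-value; the text here does not state the exact procedure, so this is an illustration and not the author's code. The function names are hypothetical, and the column totals (679 branded, 733 unbranded) are the sums of the two count columns.

```python
import math

# Column totals: sums of the Branded and Unbranded counts over all 26 clusters.
N_BRANDED = 679
N_UNBRANDED = 733


def adjusted_residual(branded, unbranded,
                      n_branded=N_BRANDED, n_unbranded=N_UNBRANDED):
    """Adjusted standardized residual of the 'branded' cell for one cluster.

    Assumed formula: (O - E) / sqrt(E * (1 - row/N) * (1 - col/N)).
    """
    n = n_branded + n_unbranded
    row_total = branded + unbranded
    expected = row_total * n_branded / n
    variance = expected * (1 - row_total / n) * (1 - n_branded / n)
    return (branded - expected) / math.sqrt(variance)


def two_sided_p(z):
    """Two-sided p-value under a standard normal reference distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


# A few rows copied from the table: cluster -> (branded, unbranded) counts.
counts = {"cl_9": (54, 29), "cl_15": (34, 15), "cl_20": (29, 7)}

for cluster, (b, u) in counts.items():
    z = adjusted_residual(b, u)
    print(cluster, round(z, 4), round(two_sided_p(z), 4))
```

In a two-column table the residual for the unbranded cell is the negative of the branded one, which is why the table lists mirrored values and identical p-values for both columns.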

| Database | Variables | Variable Type | N | Mean | SD | Median | Min | Max |
|---|---|---|---|---|---|---|---|---|
| ALL | x | Numerical | 945 | 6.85 | 30.89 | 4 | −68.67 | 70.58 |
| ALL | y | Numerical | 945 | −12.9 | 42.89 | −12 | −105.07 | 72.56 |
| ALL | z | Numerical | 945 | 13.28 | 24.15 | 12 | −52 | 75.36 |
| ALL | Flag | Categorical | 945 | - | - | - | - | - |
| Branded | x | Numerical | 462 | 7.89 | 29.7 | 6 | −68.67 | 70.58 |
| Branded | y | Numerical | 462 | −15.05 | 44.53 | −13 | −105.07 | 72.56 |
| Branded | z | Numerical | 462 | 10.84 | 22.96 | 8.26 | −48 | 75.36 |
| Branded | Flag | Categorical | 462 | - | - | - | - | - |
| Unbranded | x | Numerical | 483 | 5.85 | 31.99 | 2 | −66 | 69 |
| Unbranded | y | Numerical | 483 | −10.84 | 41.2 | −10 | −105 | 66 |
| Unbranded | z | Numerical | 483 | 15.61 | 25.04 | 15 | −52 | 74 |
| Unbranded | Flag | Categorical | 483 | - | - | - | - | - |

| Rank | Model_ID | AUC | Logloss | AUC-PR |
|---|---|---|---|---|
| 1 | XRT_1_AutoML_20210907_131623 | 0.5841 | 0.6804 | 0.5426 |
| 2 | XGBoost_1_AutoML_20210907_131623 | 0.5826 | 0.6794 | 0.5449 |
| 3 | GBM_2_AutoML_20210907_131623 | 0.5810 | 0.6813 | 0.5390 |
| 4 | XGBoost_3_AutoML_20210907_131623 | 0.5796 | 0.6833 | 0.5375 |
| 5 | GBM_4_AutoML_20210907_131623 | 0.5782 | 0.6814 | 0.5361 |
| 6 | DRF_1_AutoML_20210907_131623 | 0.5774 | 0.6827 | 0.5378 |
| 7 | XGBoost_grid__1_AutoML_20210907_131623_model_1 | 0.5770 | 0.6829 | 0.5383 |
| 8 | XGBoost_grid__1_AutoML_20210907_131623_model_6 | 0.5759 | 0.6810 | 0.5333 |
| 9 | GBM_grid__1_AutoML_20210907_131623_model_6 | 0.5757 | 0.6809 | 0.5359 |
| 10 | GBM_5_AutoML_20210907_131623 | 0.5757 | 0.6803 | 0.5343 |

| Feature Values | Cluster Centered Brain Regions | Relative_Importance | Scaled_Importance |
|---|---|---|---|
| Cluster_id.cl_9 | PHG | 29.023 | 1.000 |
| Cluster_id.cl_15 | Lingual Gyrus (BA17) | 20.785 | 0.716 |
| Cluster_id.cl_20 | Lingual Gyrus (BA17) | 19.454 | 0.670 |
| Cluster_id.cl_25 | Superior Frontal Gyrus (BA8) | 15.762 | 0.543 |
| Cluster_id.cl_16 | Precuneus (BA7) | 13.721 | 0.473 |
| Cluster_id.cl_19 | Midbrain | 12.747 | 0.439 |
| Cluster_id.cl_5 | Lateral Ventricle | 11.915 | 0.411 |
| Cluster_id.cl_17 | Inferior Frontal Gyrus | 11.477 | 0.396 |
| Cluster_id.cl_4 | Inferior Frontal Gyrus | 11.266 | 0.388 |
| Cluster_id.cl_3 | PCC | 10.938 | 0.377 |
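
The two importance columns above appear to be related by a simple rescaling. The sketch below assumes Scaled_Importance is Relative_Importance divided by the largest relative importance, so that the top feature scores 1.0; that relationship is an assumption checked only against the rounded values in the table. The helper name `scale_importance` is hypothetical.

```python
# Relative importances copied from the table (already rounded to 3 decimals).
relative_importance = {
    "Cluster_id.cl_9": 29.023,
    "Cluster_id.cl_15": 20.785,
    "Cluster_id.cl_20": 19.454,
    "Cluster_id.cl_25": 15.762,
    "Cluster_id.cl_16": 13.721,
    "Cluster_id.cl_19": 12.747,
    "Cluster_id.cl_5": 11.915,
    "Cluster_id.cl_17": 11.477,
    "Cluster_id.cl_4": 11.266,
    "Cluster_id.cl_3": 10.938,
}


def scale_importance(relative):
    """Divide every importance by the maximum so the top feature equals 1.0."""
    top = max(relative.values())
    return {name: value / top for name, value in relative.items()}


scaled = scale_importance(relative_importance)
for name, value in scaled.items():
    print(f"{name}: {value:.3f}")
```

Because the inputs are themselves rounded, a recomputed value can differ from the tabulated one in the last digit.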

| Cognitive Terms (Branded Objects) | Correlation | Cognitive Terms (Unbranded Objects) | Correlation |
|---|---|---|---|
| Fearful | 0.129 | Recognition memory | 0.079 |
| Unpleasant | 0.124 | Belief | 0.068 |
| Negative | 0.116 | Tactile | 0.051 |
| Affective | 0.109 | Pain | 0.043 |
| Emotional | 0.101 | Fluency | 0.036 |
| Happy | 0.098 | Demands | 0.035 |
| Aversive | 0.098 | Verbal fluency | 0.033 |
| Emotions | 0.093 | Competing | 0.03 |
| Angry | 0.09 | Judgment | 0.03 |
| Fear | 0.088 | Reasoning | 0.029 |
| Emotionally | 0.08 | Default network | 0.025 |
| Disgust | 0.078 | Sensations | 0.023 |
| Positive negative | 0.077 | Painful | 0.022 |
| Arousal | 0.071 | Interference | 0.02 |
| Anxiety | 0.068 | Switching | 0.02 |
| Expression | 0.065 | Integrative | 0.019 |
| Affect | 0.06 | Relational | 0.019 |
| Pleasant | 0.054 | Empathy | 0.019 |
| Valence | 0.052 | Risky | 0.019 |
| Threatening | 0.049 | Control network | 0.019 |
| Emotional valence | 0.049 | Conflict | 0.017 |
| Emotion regulation | 0.047 | Multisensory | 0.017 |
| Mood | 0.045 | Speakers | 0.017 |
| Emotional information | 0.041 | Consciousness | 0.016 |
| Memories | 0.038 | Lexical decision | 0.016 |
| Sighted | 0.036 | Semantic | 0.016 |
| Learning | 0.029 | Cognitively | 0.015 |
| Negative emotional | 0.029 | Executive control | 0.014 |
| Modality | 0.027 | Concrete | 0.013 |
| Intense | 0.024 | Referential | 0.013 |
| Signaling | 0.023 | Word | 0.013 |
| Salient | 0.022 | Demand | 0.012 |
| Mental imagery | 0.021 | Retrieval | 0.011 |
| Gain | 0.021 | Words | 0.011 |
| Sad | 0.019 | Imagine | 0.011 |
| Episodic memory | 0.018 | Learned | 0.011 |
| Encoding | 0.016 | Monitoring | 0.011 |
| Intensity | 0.013 | Expectancy | 0.011 |
| Arithmetic | 0.013 | Judgments | 0.011 |
| Anger | 0.012 | Target detection | 0.009 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Watanuki, S. Watershed Brain Regions for Characterizing Brand Equity-Related Mental Processes. Brain Sci. 2021, 11, 1619. https://doi.org/10.3390/brainsci11121619