The Neural Responses of Visual Complexity in the Oddball Paradigm: An ERP Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

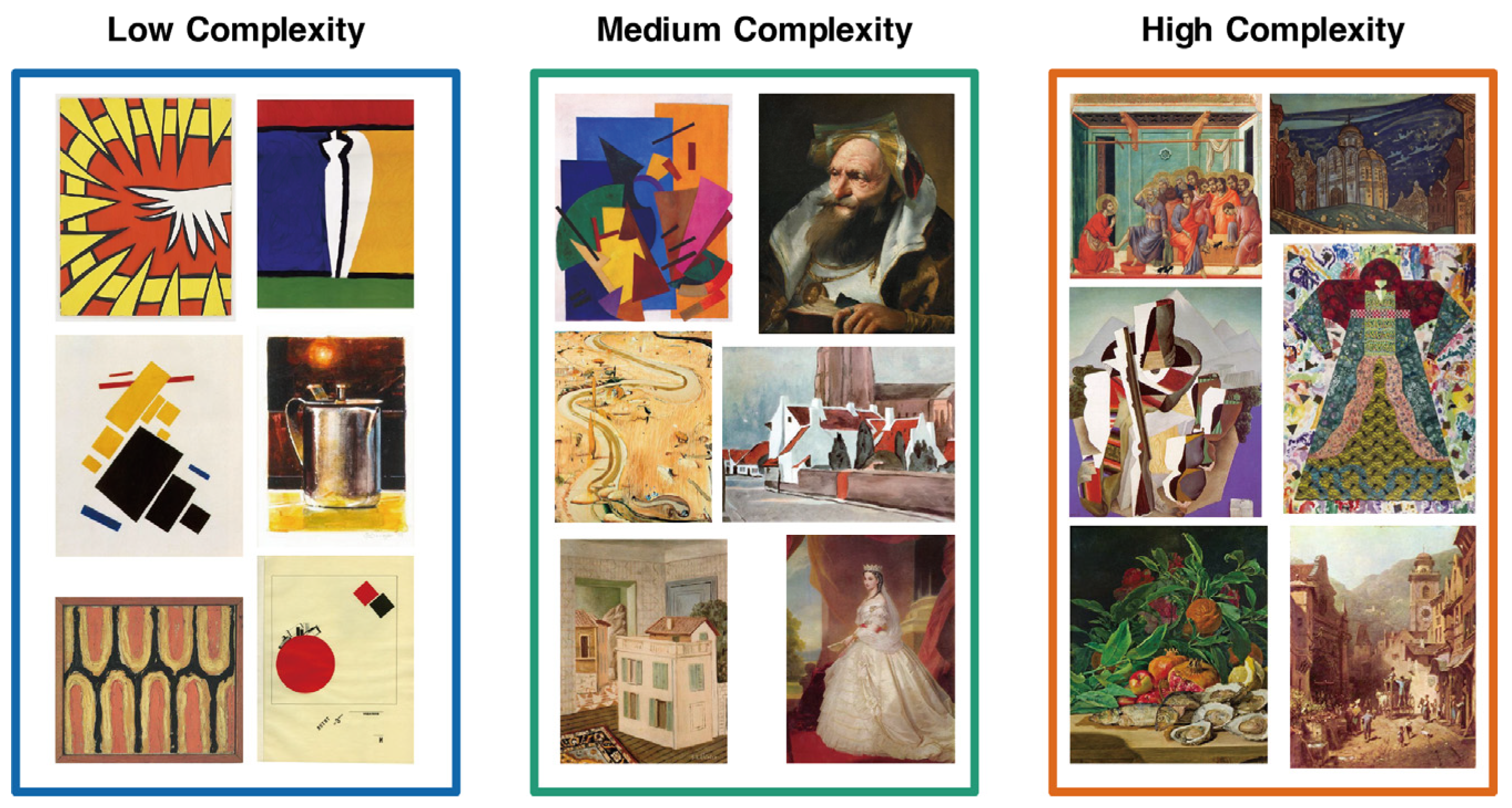

2.2. Materials

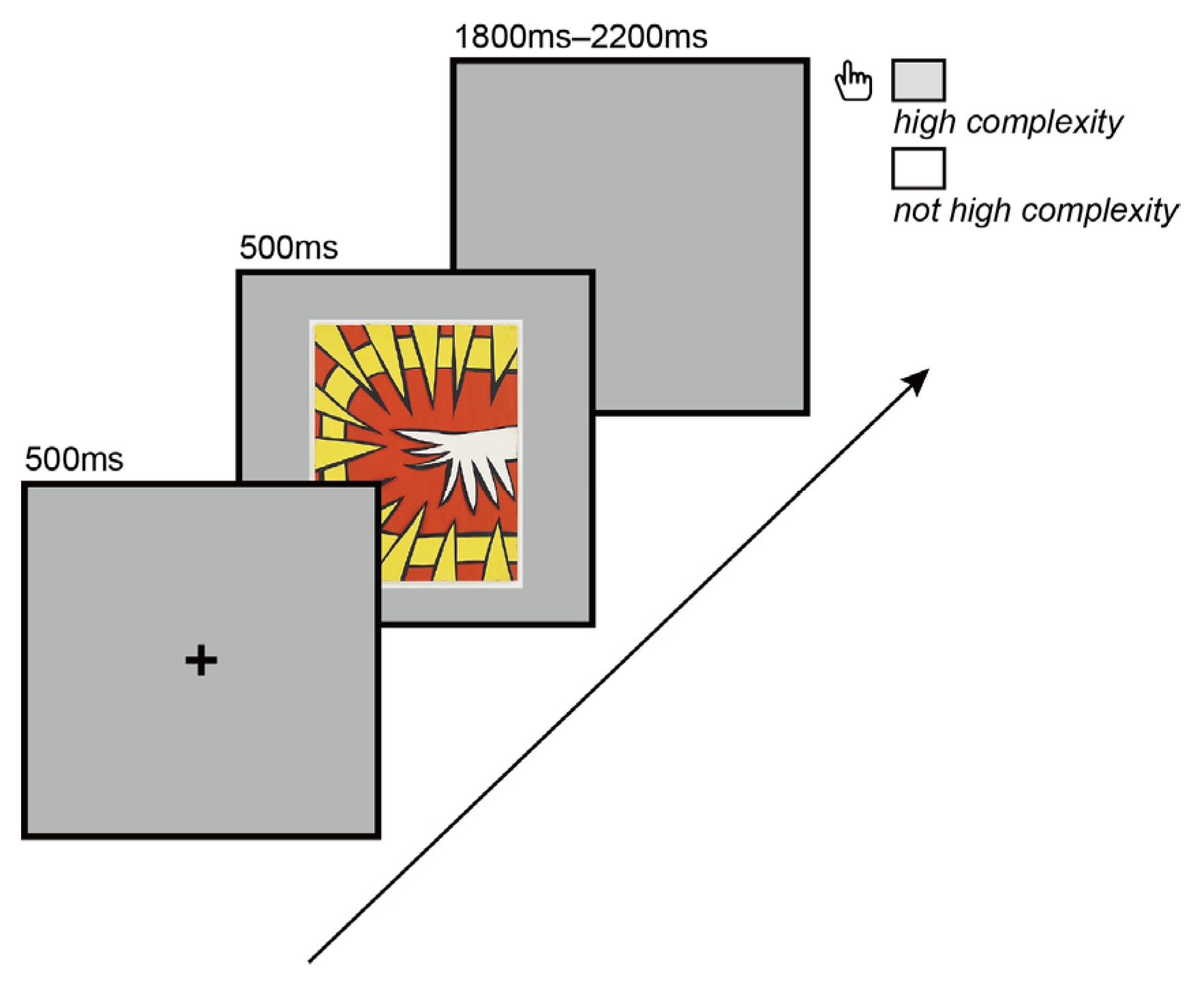

2.3. Procedure

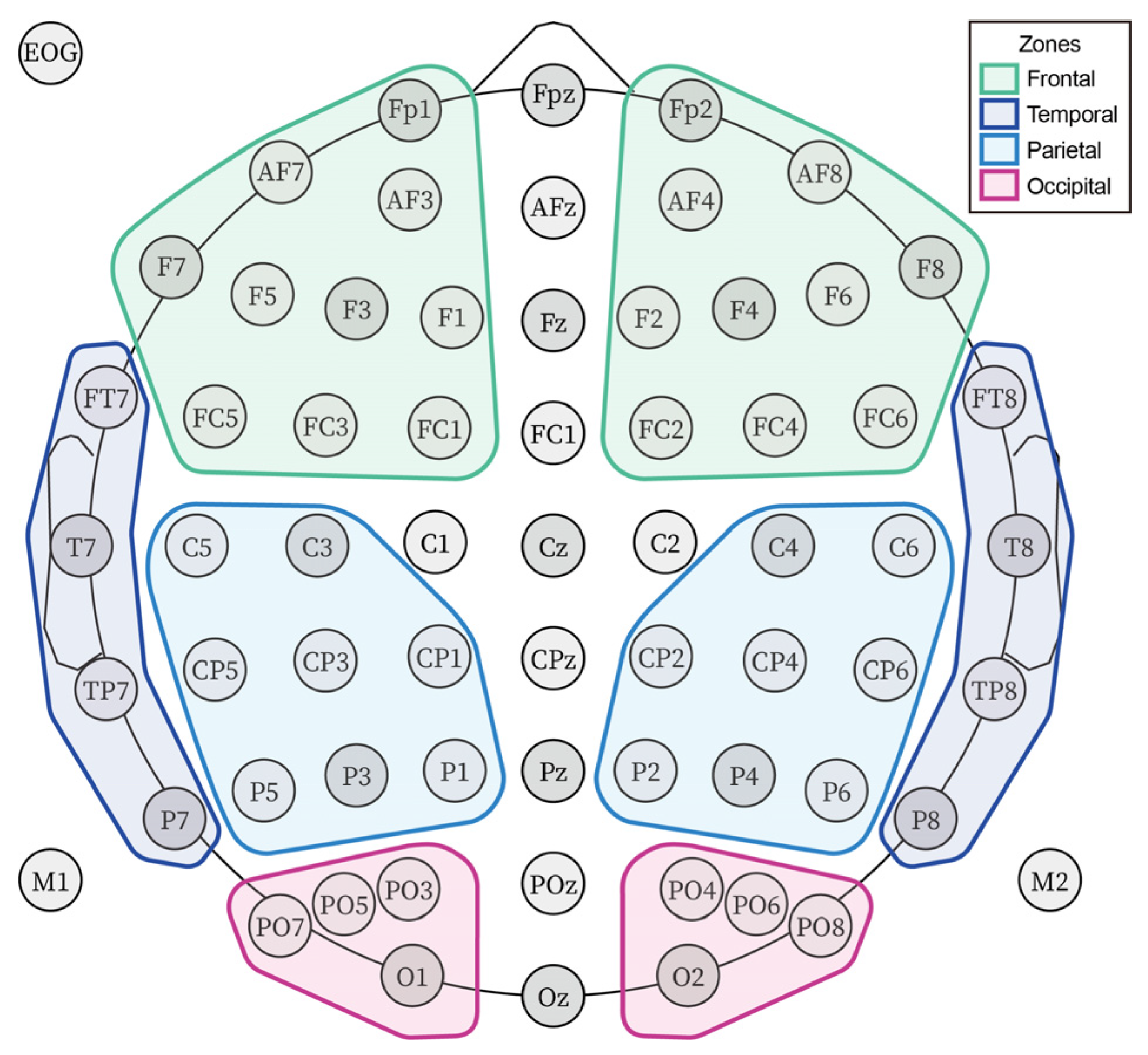

2.4. Data Recording and Analysis

3. Results

3.1. Behavioral Analysis

3.2. Event-Related Potentials

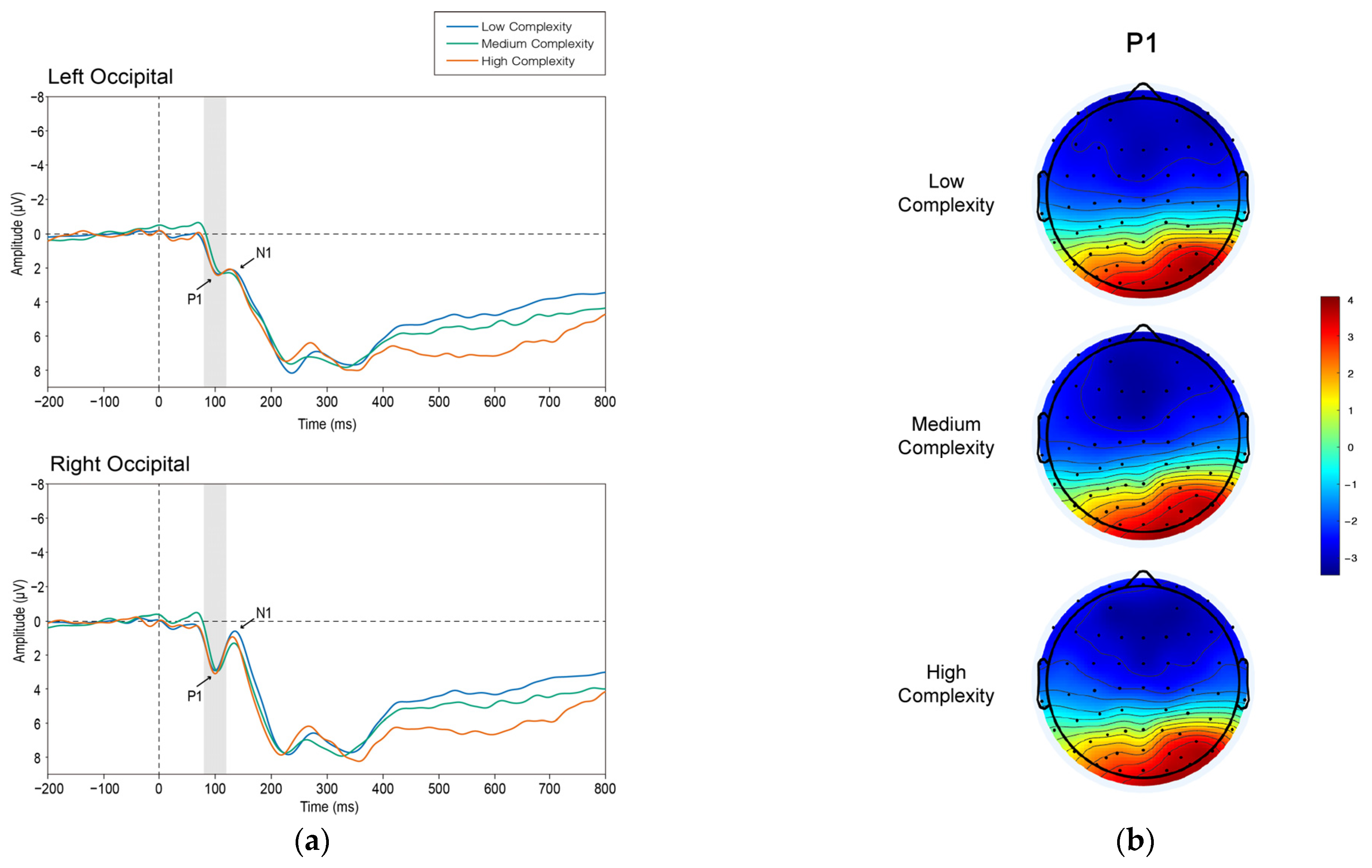

3.2.1. P1

3.2.2. N2

3.2.3. P3

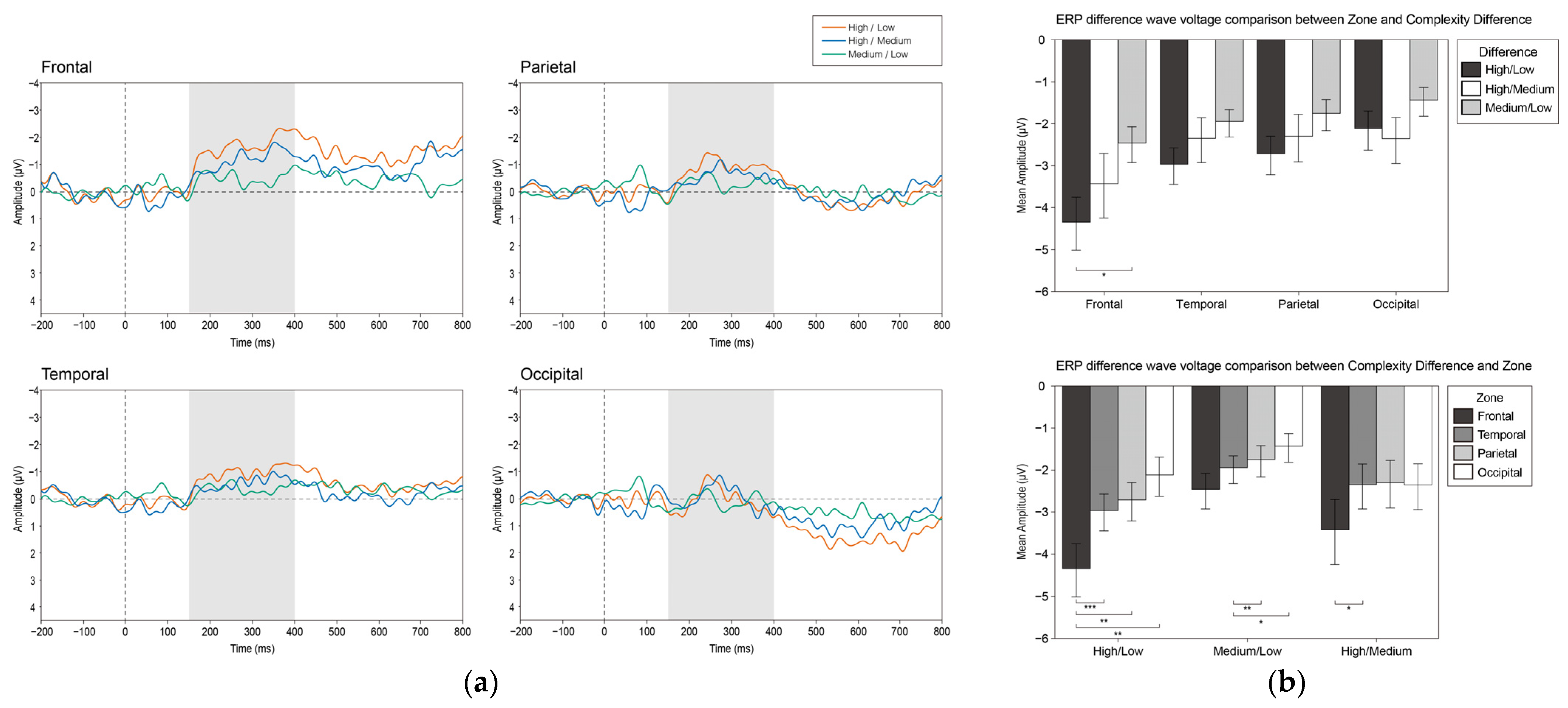

3.2.4. vMMN

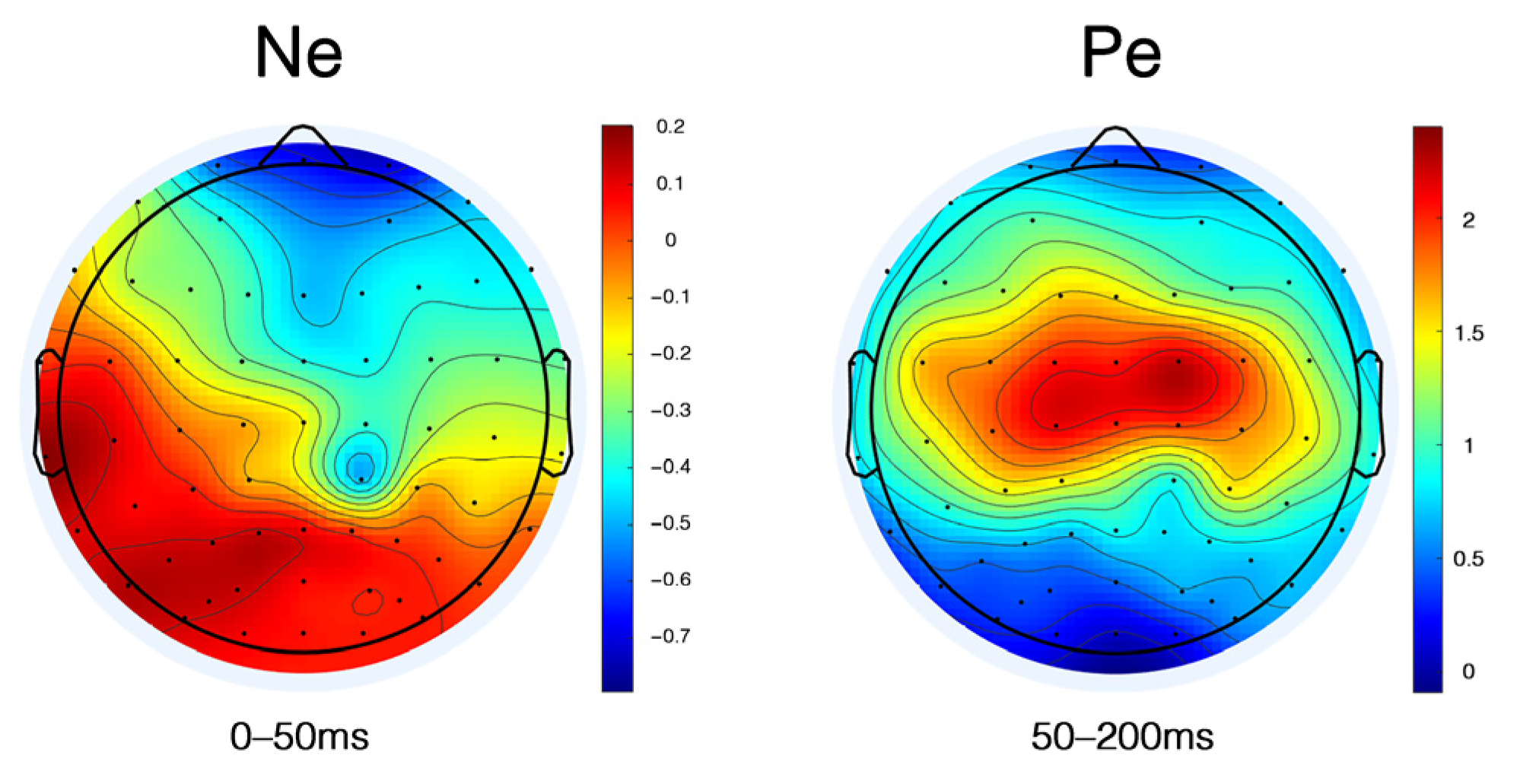

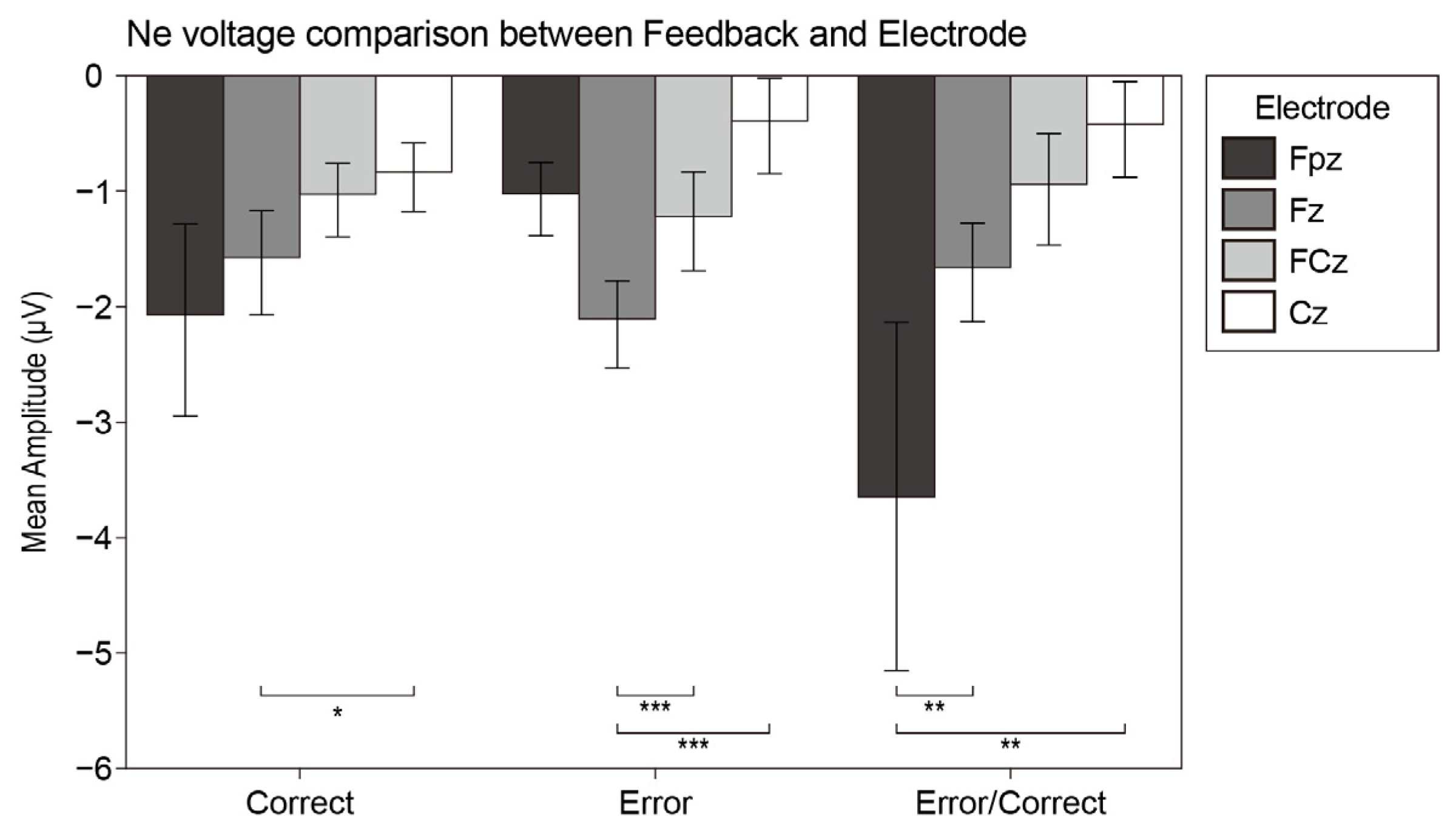

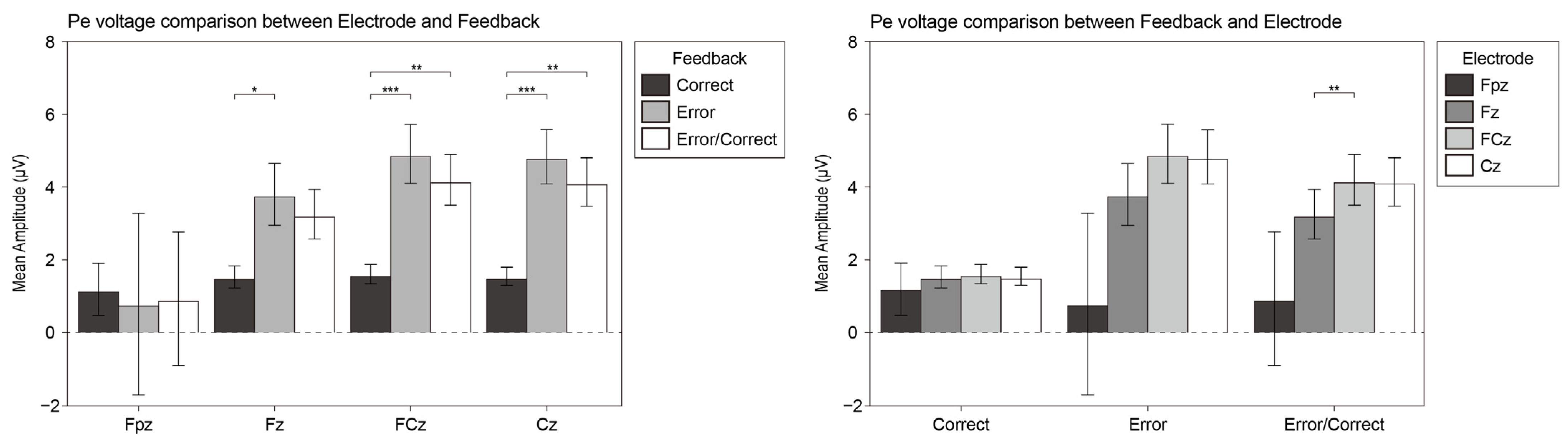

3.2.5. Ne and Pe

4. Discussion

4.1. P1 and N1

4.2. N2

4.3. P3

4.4. Visual MMN

4.5. Ne and Pe

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hu, R.; Zhang, L.; Meng, P.; Meng, X.; Weng, M. The Neural Responses of Visual Complexity in the Oddball Paradigm: An ERP Study. Brain Sci. 2022, 12, 447. https://doi.org/10.3390/brainsci12040447
