The Brain Dynamics of Syllable Duration and Semantic Predictability in Spanish

Abstract

1. Introduction

1.1. Duration Approaches

1.2. The Present Study

2. Method

2.1. Participants

2.2. Design and Material

2.3. Speech Signal

2.4. Procedure

2.5. ERP: Recording, Preprocessing and Data Analyses

3. Results

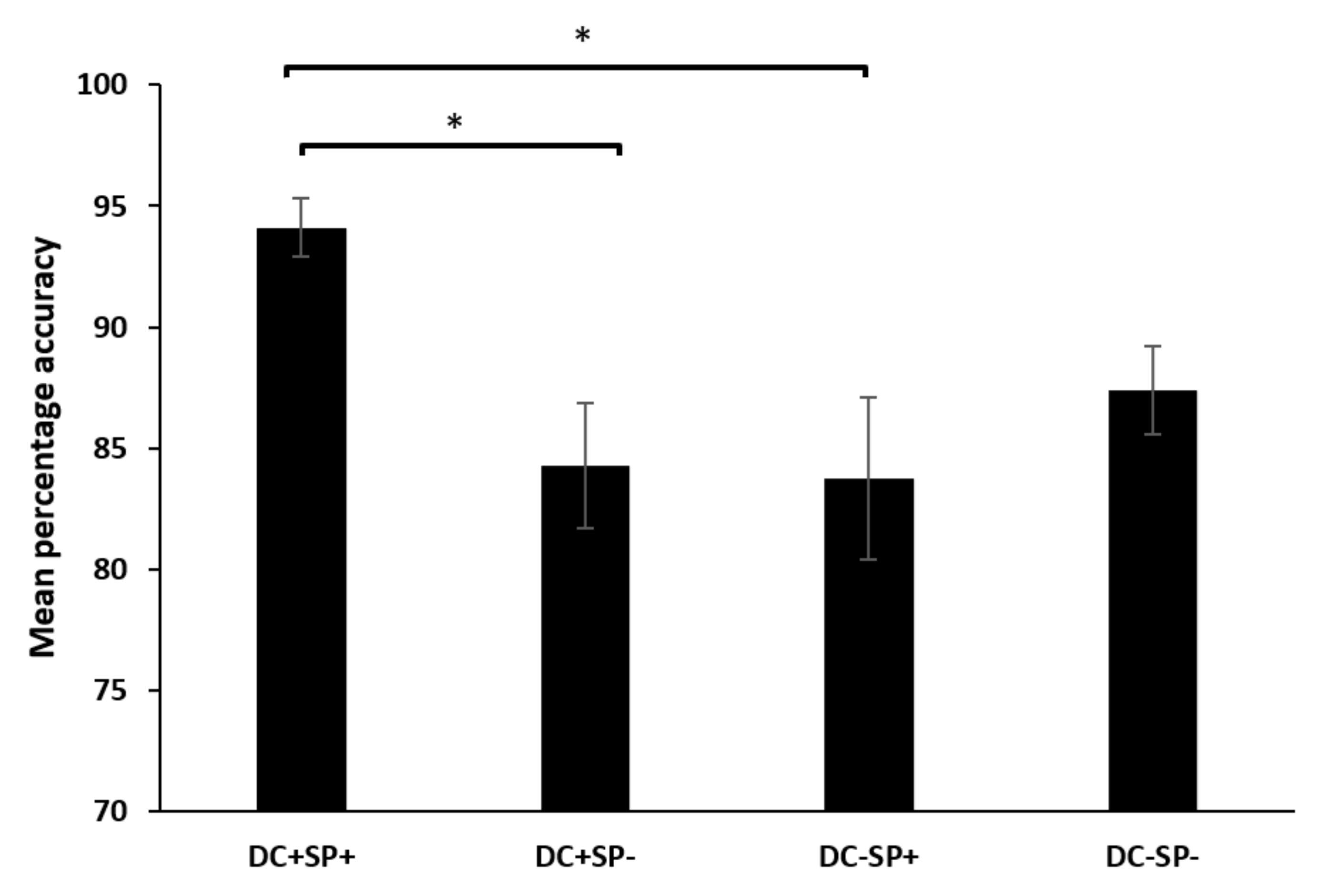

3.1. Behavioral Results

3.2. Electrophysiological Results

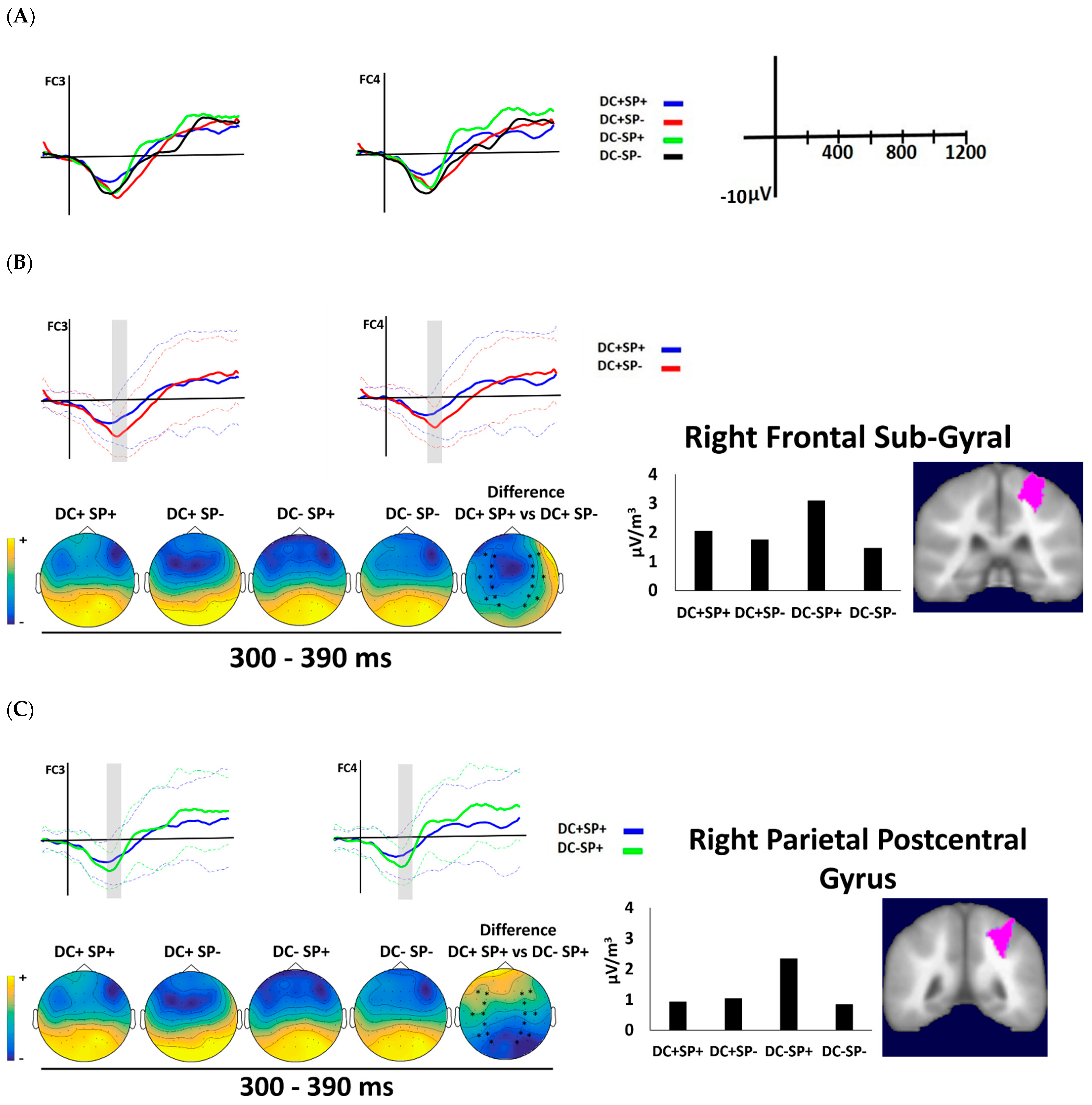

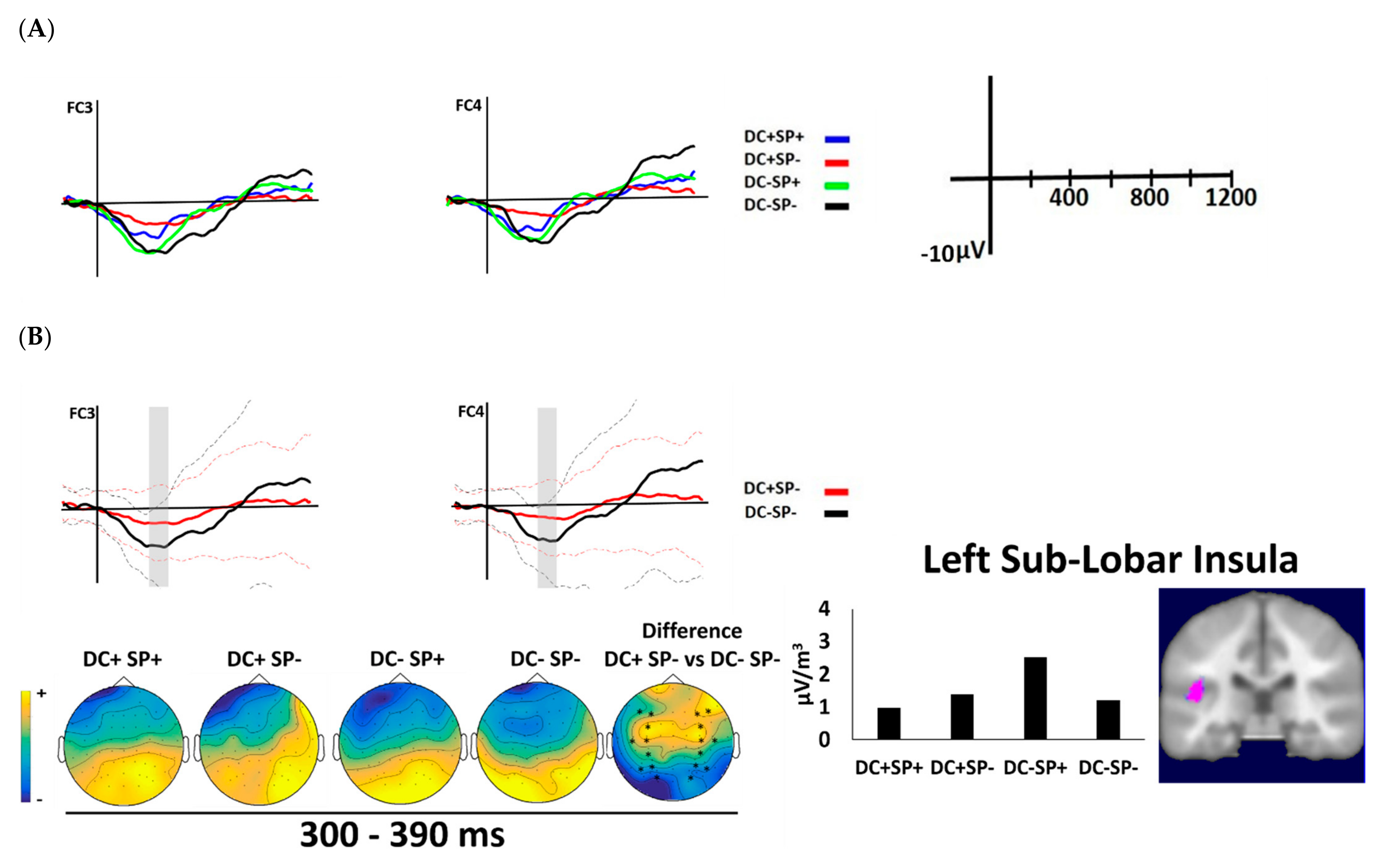

3.2.1. First Time Window (300–390 ms)

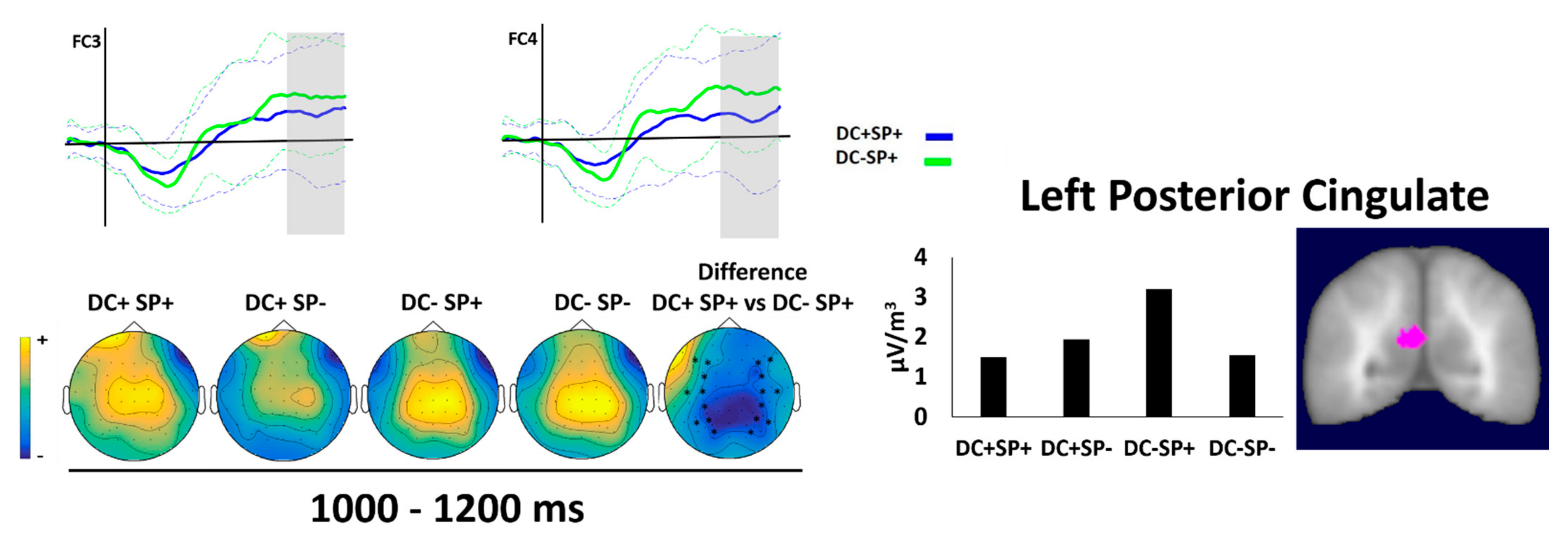

3.2.2. Second Time Window (1000–1200 ms)

4. Discussion

4.1. Behavioral

4.2. ERPs

4.3. Sources

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest


| Duration | Semantic: Predictable (SP+) | Semantic: Unpredictable (SP−) |
|---|---|---|
| Congruous (DC+) | El ciclista sufrió mucho en la subida (the cyclist suffered a lot on the climb) | El ciclista sufrió mucho en la visita (the cyclist suffered a lot on the visit) |
| Incongruous (DC−) | El ciclista sufrió mucho en la subida (the cyclist suffered a lot on the climb) | El ciclista sufrió mucho en la visita (the cyclist suffered a lot on the visit) |

The wording is the same in both duration conditions; the incongruous (DC−) sentences differ only in the lengthened first syllable of the final word, as the syllable durations in the next table show.
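The design above crosses two factors, duration congruency (DC+/DC−) and semantic predictability (SP+/SP−), and the result tables name each effect by a pairwise contrast between two cells. The sketch below is an illustrative Python encoding of that 2 × 2 design, not the authors' analysis code; the names `CONDITIONS` and `classify_contrast` are hypothetical, and the ASCII hyphen stands in for the minus sign "−". It labels a contrast "semantic" when only SP differs and "duration" when only DC differs.

```python
from itertools import product

# Factor levels from the design table: duration congruency (DC) x semantic predictability (SP).
DURATION = ("DC+", "DC-")
SEMANTIC = ("SP+", "SP-")

# The four cells of the 2 x 2 design, e.g. ("DC+", "SP+").
CONDITIONS = list(product(DURATION, SEMANTIC))


def classify_contrast(a, b):
    """Name a pairwise contrast by the single factor that differs between two cells.

    Returns "semantic" if only SP differs, "duration" if only DC differs,
    and raises ValueError if both factors or neither factor differ.
    """
    dc_differs = a[0] != b[0]
    sp_differs = a[1] != b[1]
    if sp_differs and not dc_differs:
        return "semantic"
    if dc_differs and not sp_differs:
        return "duration"
    raise ValueError(f"{a} vs {b} is not a single-factor contrast")


# The three contrasts that appear in the N400 and LPC tables.
for pair in [(("DC+", "SP+"), ("DC+", "SP-")),
             (("DC+", "SP+"), ("DC-", "SP+")),
             (("DC+", "SP-"), ("DC-", "SP-"))]:
    print(pair, classify_contrast(*pair))
```

Read this way, the semantic effect is always tested with duration held congruous, while the duration effect is tested within one level of predictability.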

| Duration | Statistic | SP+ syllable 1 (ms) | SP+ syllable 2 (ms) | SP+ syllable 3 (ms) | SP+ total word (ms) | SP− syllable 1 (ms) | SP− syllable 2 (ms) | SP− syllable 3 (ms) | SP− total word (ms) |
|---|---|---|---|---|---|---|---|---|---|
| Congruous (DC+) | Mean | 129.35 | 197.70 | 162.07 | 489.14 | 137.32 | 198.36 | 185.85 | 551.75 |
| Congruous (DC+) | SD | 22.18 | 27.61 | 57.73 | 63.91 | 18.52 | 26.03 | 59.65 | 63.31 |
| Incongruous (DC−) | Mean | 202.68 | 197.70 | 162.07 | 582.47 | 207.74 | 198.36 | 185.85 | 611.97 |
| Incongruous (DC−) | SD | 24.76 | 27.61 | 57.73 | 64.99 | 30.68 | 26.03 | 59.65 | 64.83 |
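In the duration table above, syllables 2 and 3 have identical means in the congruous and incongruous conditions, so the duration manipulation is carried by syllable 1 alone. The short Python sketch below, assuming the durations are in milliseconds, computes that lengthening (DC− minus DC+) in absolute and relative terms from the printed means; the dictionary and the `lengthening` function are illustrative names, not part of the study's materials.

```python
# Mean first-syllable durations (ms) of the final word, copied from the duration table.
FIRST_SYLLABLE_MEAN_MS = {
    ("DC+", "SP+"): 129.35,
    ("DC-", "SP+"): 202.68,
    ("DC+", "SP-"): 137.32,
    ("DC-", "SP-"): 207.74,
}


def lengthening(sp):
    """Return (absolute ms, percent) lengthening of syllable 1 in DC- relative to DC+."""
    congruous = FIRST_SYLLABLE_MEAN_MS[("DC+", sp)]
    incongruous = FIRST_SYLLABLE_MEAN_MS[("DC-", sp)]
    diff = incongruous - congruous
    return diff, 100.0 * diff / congruous


for sp in ("SP+", "SP-"):
    diff, pct = lengthening(sp)
    print(f"{sp}: +{diff:.2f} ms ({pct:.1f}% longer)")
```

With the printed means this gives about 73 ms (roughly 57% longer) for predictable words and about 70 ms (roughly 51% longer) for unpredictable words.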

| N400 | Metric Task | Semantic Task |
|---|---|---|
| Semantic effect | Contrast DC+ SP+ vs. DC+ SP−: F(1, 19) = 5.30, p < 0.033, ηp² = 0.22 | --- |
| Duration effect | Contrast DC+ SP+ vs. DC− SP+: F(1, 19) = 5.25, p < 0.034, ηp² = 0.22 | Contrast DC+ SP− vs. DC− SP−: F(1, 19) = 6.69, p < 0.018, ηp² = 0.26 |

| N400 source coordinates | Metric Task | Semantic Task |
|---|---|---|
| Semantic effect | Contrast DC+ SP+ vs. DC+ SP−: x = 11.82, y = −8.45, z = 28.71 | --- |
| Duration effect | Contrast DC+ SP+ vs. DC− SP+: x = 15.20, y = −15.20, z = 18.58 | Contrast DC+ SP− vs. DC− SP−: x = −21.96, y = −5.07, z = 8.45 |

| LPC | Metric Task | Semantic Task |
|---|---|---|
| Semantic effect | --- | --- |
| Duration effect | Contrast DC+ SP+ vs. DC− SP+: F(1, 19) = 5.34, p < 0.032, ηp² = 0.22 | --- |
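The N400 and LPC statistics tables above report each contrast as F(1, 19) together with partial eta squared. Because ηp² = SS_effect / (SS_effect + SS_error) and F = (SS_effect / df_effect) / (SS_error / df_error), the effect size can be recovered from the F value alone as ηp² = F·df_effect / (F·df_effect + df_error). The Python sketch below applies that standard identity as a consistency check on the reported values; the tables do not say how ηp² was computed, so this is a check rather than a description of the authors' pipeline, and `partial_eta_squared` is an illustrative name.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom.

    Follows from eta_p^2 = SS_effect / (SS_effect + SS_error) and
    F = (SS_effect / df_effect) / (SS_error / df_error).
    """
    return (f * df_effect) / (f * df_effect + df_error)


# (label, F, reported eta_p^2) for the F(1, 19) contrasts in the N400 and LPC tables.
REPORTED = [
    ("N400 semantic, metric task", 5.30, 0.22),
    ("N400 duration, metric task", 5.25, 0.22),
    ("N400 duration, semantic task", 6.69, 0.26),
    ("LPC duration, metric task", 5.34, 0.22),
]

for label, f, reported in REPORTED:
    recomputed = partial_eta_squared(f, 1, 19)
    print(f"{label}: recomputed {recomputed:.3f}, reported {reported:.2f}")
```

Each recomputed value (0.218, 0.216, 0.260 and 0.219) rounds to the two-decimal ηp² given in the tables.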

| LPC source coordinates | Metric Task | Semantic Task |
|---|---|---|
| Semantic effect | --- | --- |
| Duration effect | Contrast DC+ SP+ vs. DC− SP+: x = −1.69, y = −21.96, z = 11.82 | --- |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Muñetón-Ayala, M.; De Vega, M.; Ochoa-Gómez, J.F.; Beltrán, D. The Brain Dynamics of Syllable Duration and Semantic Predictability in Spanish. Brain Sci. 2022, 12, 458. https://doi.org/10.3390/brainsci12040458

Muñetón-Ayala M, De Vega M, Ochoa-Gómez JF, Beltrán D. The Brain Dynamics of Syllable Duration and Semantic Predictability in Spanish. Brain Sciences. 2022; 12(4):458. https://doi.org/10.3390/brainsci12040458

Chicago/Turabian Style: Muñetón-Ayala, Mercedes, Manuel De Vega, John Fredy Ochoa-Gómez, and David Beltrán. 2022. "The Brain Dynamics of Syllable Duration and Semantic Predictability in Spanish." Brain Sciences 12, no. 4: 458. https://doi.org/10.3390/brainsci12040458