Development and Validation of a Novel Robot-Based Assessment of Upper Limb Sensory Processing in Chronic Stroke

Abstract

1. Introduction

2. Materials and Methods

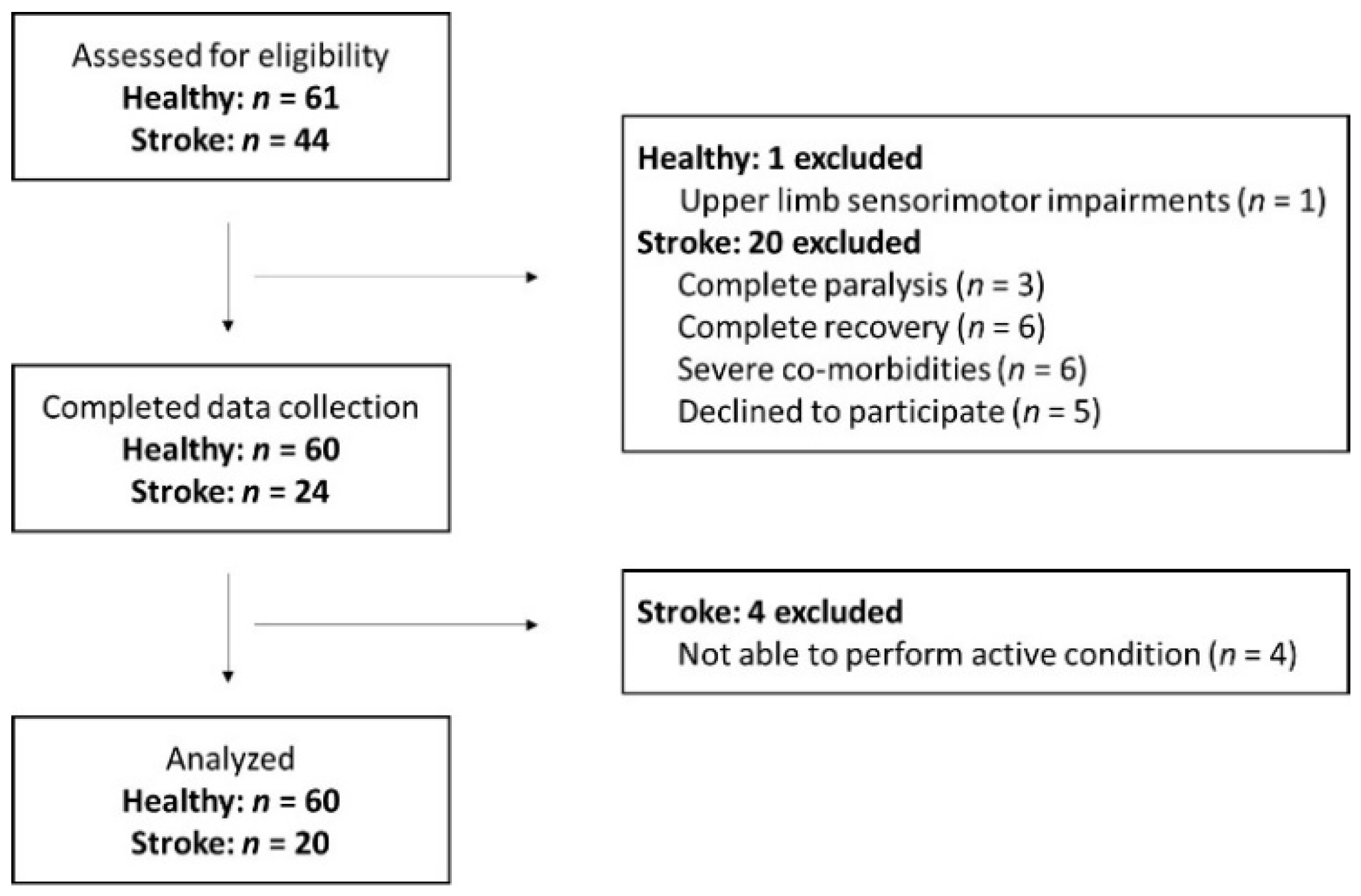

2.1. Participants

2.2. Experimental Set-Up

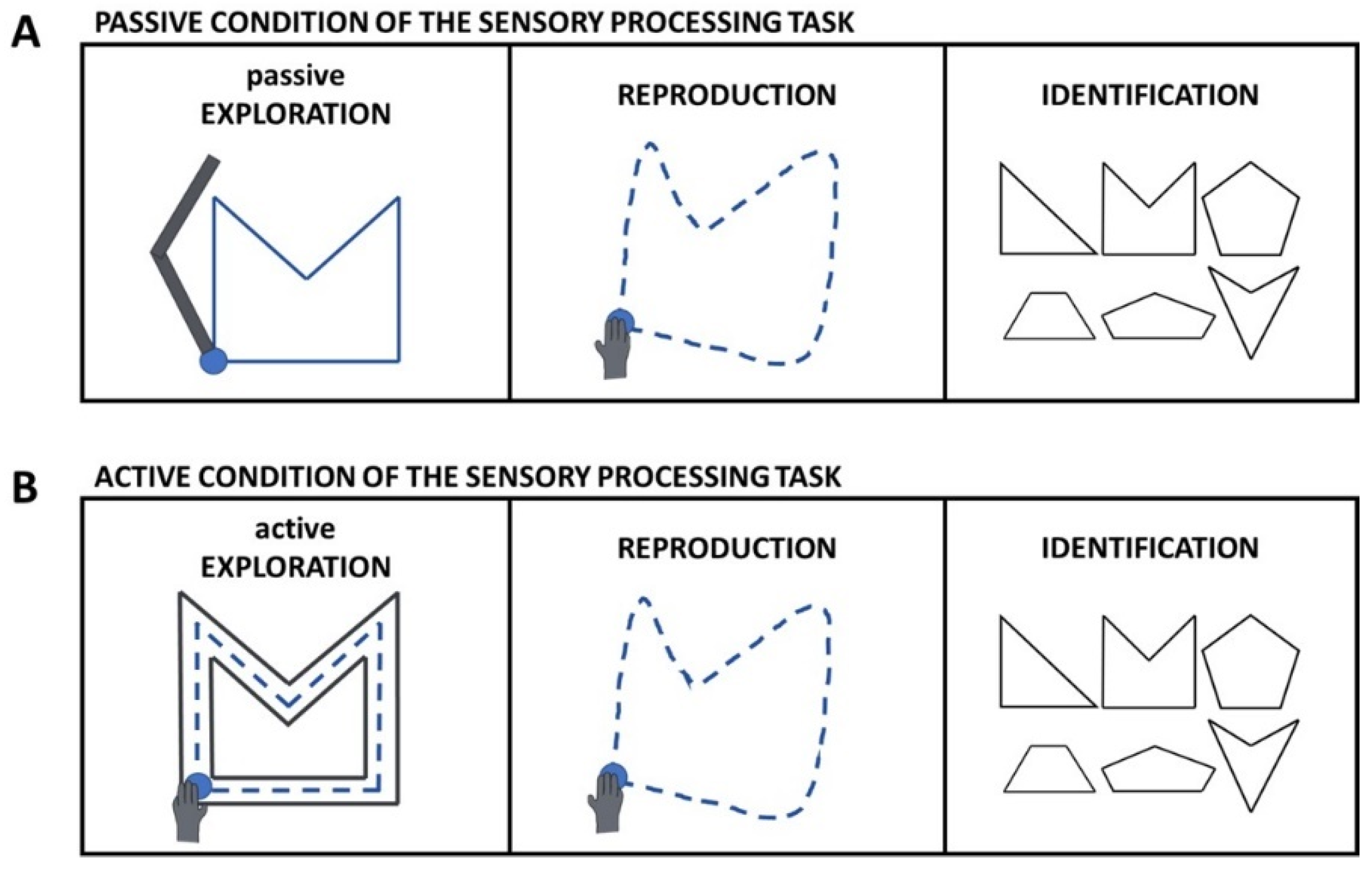

2.3. Experimental Task

2.4. Other Robot-Based Assessments

2.5. Clinical Assessments

2.6. Data Analysis

2.7. Statistical Analysis

3. Results

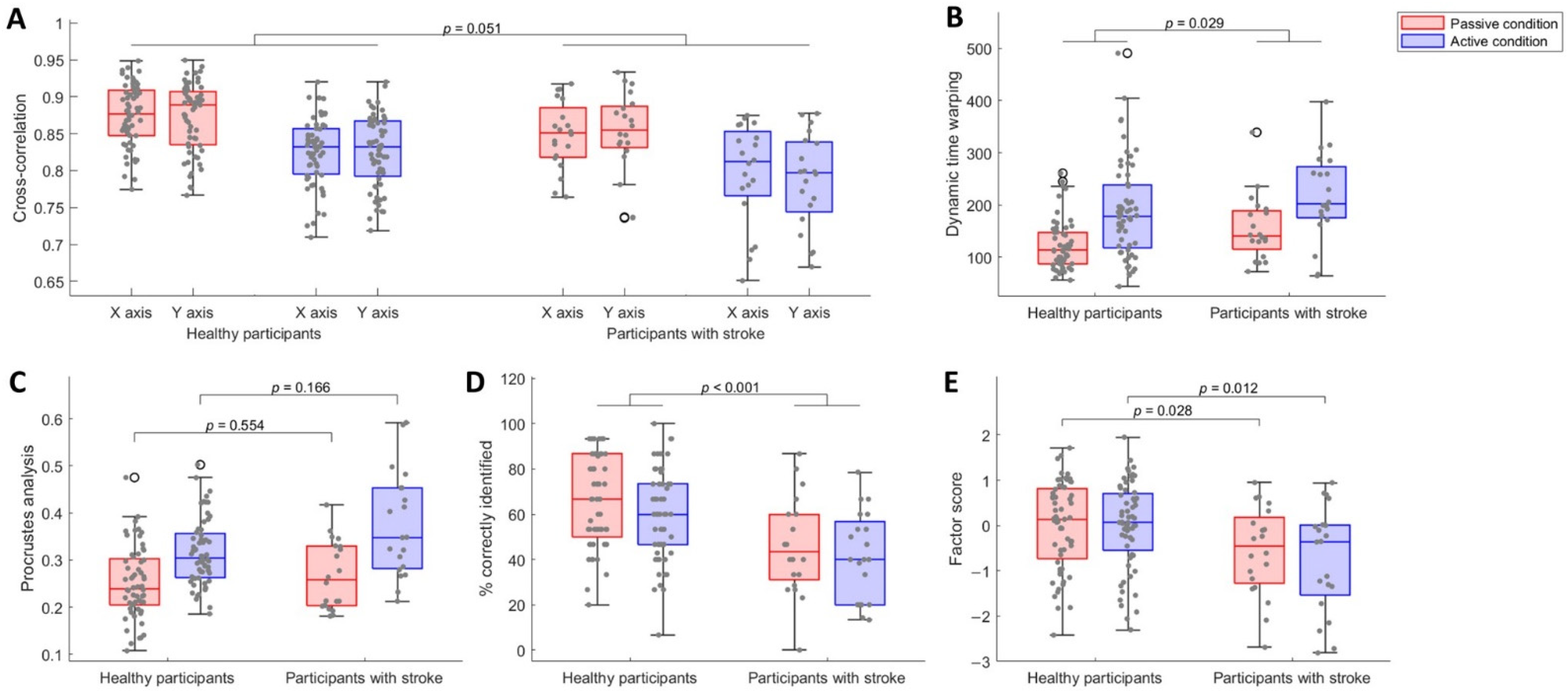

3.1. Participants with Stroke Were Less Accurate in Reproducing the Explored Shapes

3.2. Participants with Stroke Identified Fewer Shapes than Healthy Participants, despite Being Equally Certain about Their Answers

3.3. Participants with Stroke Showed Worse Sensory Processing Ability

3.4. Identification of Abnormal Performance in Participants with Stroke

3.5. The Robot-Based Sensory Processing Task Was Moderately to Highly Correlated with Sensitive Clinical Tests of Sensory Processing

4. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Meyer, S. Recovery after Stroke: Long Term Outcome and Upper Limb Somatosensory Function. Ph.D. Thesis, KU Leuven, Leuven, Belgium, 2015. Available online: https://limo.libis.be/permalink/f/t5q1v2/LIRIAS1687925 (accessed on 24 June 2022).

2. Campbell, W.W.; Barohn, R.J. DeJong’s The Neurologic Examination, 8th ed.; Wolters Kluwer: Philadelphia, PA, USA, 2020.

3. Boccuni, L.; Meyer, S.; Kessner, S.S.; De Bruyn, N.; Essers, B.; Cheng, B.; Thomalla, G.; Peeters, A.; Sunaert, S.; Duprez, T.; et al. Is There Full or Proportional Somatosensory Recovery in the Upper Limb After Stroke? Investigating Behavioral Outcome and Neural Correlates. Neurorehabilit. Neural Repair 2018, 32, 691–700.

4. Meyer, S.; de Bruyn, N.; Krumlinde-Sundholm, L.; Peeters, A.; Feys, H.; Thijs, V.; Verheyden, G. Associations Between Sensorimotor Impairments in the Upper Limb at 1 Week and 6 Months After Stroke. J. Neurol. Phys. Ther. 2016, 40, 186–195.

5. Rand, D. Proprioception Deficits in Chronic Stroke—Upper Extremity Function and Daily Living. PLoS ONE 2018, 13, e0195043.

6. Meyer, S.; Karttunen, A.H.; Thijs, V.; Feys, H.; Verheyden, G. How Do Somatosensory Deficits in the Arm and Hand Relate to Upper Limb Impairment, Activity, and Participation Problems After Stroke? A Systematic Review. Phys. Ther. 2014, 94, 1220–1231.

7. Au-Yeung, S.S.Y.; Hui-Chan, C.W.Y. Predicting Recovery of Dextrous Hand Function in Acute Stroke. Disabil. Rehabil. 2009, 31, 394–401.

8. Meyer, S.; de Bruyn, N.; Lafosse, C.; van Dijk, M.; Michielsen, M.; Thijs, L.; Truyens, V.; Oostra, K.; Krumlinde-Sundholm, L.; Peeters, A.; et al. Somatosensory Impairments in the Upper Limb Poststroke: Distribution and Association With Motor Function and Visuospatial Neglect. Neurorehabilit. Neural Repair 2016, 30, 731–742.

9. Carey, L.M.; Matyas, T.A.; Baum, C. Effects of Somatosensory Impairment on Participation After Stroke. Am. J. Occup. Ther. 2018, 72, 7203205100p1–7203205100p10.

10. Ingemanson, M.L.; Rowe, J.R.; Chan, V.; Wolbrecht, E.T.; Reinkensmeyer, D.J.; Cramer, S.C. Somatosensory System Integrity Explains Differences in Treatment Response after Stroke. Neurology 2019, 92, e1098–e1108.

11. Zandvliet, S.B.; Kwakkel, G.; Nijland, R.H.M.; van Wegen, E.E.H.; Meskers, C.G.M. Is Recovery of Somatosensory Impairment Conditional for Upper-Limb Motor Recovery Early After Stroke? Neurorehabilit. Neural Repair 2020, 34, 403–416.

12. Kessner, S.S.; Schlemm, E.; Cheng, B.; Bingel, U.; Fiehler, J.; Gerloff, C.; Thomalla, G. Somatosensory Deficits After Ischemic Stroke. Stroke 2019, 50, 1116–1123.

13. van Gils, A. Bimanual Performance and Life Balance One Year after Stroke: Assessment, Evolution and Prognosis. Doctoral thesis, KU Leuven, Leuven, Belgium, 2019. Available online: https://limo.libis.be/permalink/f/t5q1v2/LIRIAS2811582 (accessed on 24 June 2022).

14. Scott, S.H.; Dukelow, S.P. Potential of Robots as Next-Generation Technology for Clinical Assessment of Neurological Disorders and Upper-Limb Therapy. J. Rehabil. Res. Dev. 2011, 48, 335–354.

15. Kwakkel, G.; Lannin, N.A.; Borschmann, K.; English, C.; Ali, M.; Churilov, L.; Saposnik, G.; Winstein, C.; van Wegen, E.E.H.; Wolf, S.L.; et al. Standardized Measurement of Sensorimotor Recovery in Stroke Trials: Consensus-Based Core Recommendations from the Stroke Recovery and Rehabilitation Roundtable. Neurorehabilit. Neural Repair 2017, 31, 784–792.

16. Dukelow, S.P.; Herter, T.M.; Moore, K.D.; Demers, M.J.; Glasgow, J.I.; Bagg, S.D.; Norman, K.E.; Scott, S.H. Quantitative Assessment of Limb Position Sense Following Stroke. Neurorehabilit. Neural Repair 2010, 24, 178–187.

17. Semrau, J.A.; Herter, T.M.; Scott, S.H.; Dukelow, S.P. Robotic Identification of Kinesthetic Deficits After Stroke. Stroke 2013, 44, 3414–3421.

18. Mrotek, L.A.; Bengtson, M.; Stoeckmann, T.; Botzer, L.; Ghez, C.P.; McGuire, J.; Scheidt, R.A. The Arm Movement Detection (AMD) Test: A Fast Robotic Test of Proprioceptive Acuity in the Arm. J. NeuroEng. Rehabil. 2017, 14, 64.

19. Coderre, A.M.; Abou Zeid, A.; Dukelow, S.P.; Demmer, M.J.; Moore, K.D.; Demers, M.J.; Bretzke, H.; Herter, T.M.; Glasgow, J.I.; Norman, K.E.; et al. Assessment of Upper-Limb Sensorimotor Function of Subacute Stroke Patients Using Visually Guided Reaching. Neurorehabilit. Neural Repair 2010, 24, 528–541.

20. BKIN Technologies Ltd. Kinarm Standard Tests; BKIN Technologies Ltd.: Kingston, ON, Canada, 2018.

21. Otaka, E.; Otaka, Y.; Kasuga, S.; Nishimoto, A.; Yamazaki, K.; Kawakami, M.; Ushiba, J.; Liu, M. Clinical Usefulness and Validity of Robotic Measures of Reaching Movement in Hemiparetic Stroke Patients. J. NeuroEng. Rehabil. 2015, 12, 66.

22. World Health Organization. Towards a Common Language for Functioning, Disability and Health: ICF. Available online: https://www.who.int/classifications/icf/icfbeginnersguide.pdf (accessed on 11 October 2021).

23. Stolk-Hornsveld, F.; Crow, J.L.; Hendriks, E.P.; van der Baan, R.; Harmeling-van der Wel, B.C. The Erasmus MC Modifications to the (Revised) Nottingham Sensory Assessment: A Reliable Somatosensory Assessment Measure for Patients with Intracranial Disorders. Clin. Rehabil. 2006, 20, 160–172.

24. Lincoln, N.B.; Jackson, J.M.; Adams, S.A. Reliability and Revision of the Nottingham Sensory Assessment for Stroke Patients. Physiotherapy 1998, 84, 358–365.

25. Gaubert, C.S.; Mockett, S.P. Inter-Rater Reliability of the Nottingham Method of Stereognosis Assessment. Clin. Rehabil. 2000, 14, 153–159.

26. Eek, E.; Engardt, M. Assessment of the Perceptual Threshold of Touch (PTT) with High-Frequency Transcutaneous Electric Nerve Stimulation (Hf/TENS) in Elderly Patients with Stroke: A Reliability Study. Clin. Rehabil. 2003, 17, 825–834.

27. Eek, E.; Holmqvist, L.W.; Sommerfeld, D.K. Adult Norms of the Perceptual Threshold of Touch (PTT) in the Hands and Feet in Relation to Age, Gender, and Right and Left Side Using Transcutaneous Electrical Nerve Stimulation. Physiother. Theory Pract. 2012, 28, 373–383.

28. Carey, L.M.; Oke, L.E.; Matyas, T.A. Impaired Touch Discrimination After Stroke: A Quantitative Test. Neurorehabilit. Neural Repair 1997, 11, 219–232.

29. Carey, L.M.; Oke, L.E.; Matyas, T.A. Impaired Limb Position Sense after Stroke: A Quantitative Test for Clinical Use. Arch. Phys. Med. Rehabil. 1996, 77, 1271–1278.

30. Carey, L.M.; Mak-Yuen, Y.Y.K.; Matyas, T.A. The Functional Tactile Object Recognition Test: A Unidimensional Measure With Excellent Internal Consistency for Haptic Sensing of Real Objects After Stroke. Front. Neurosci. 2020, 14, 542590.

31. Carey, L.M.; Nankervis, J.; LeBlanc, S.; Harvey, L. A New Functional Tactual Object Recognition Test (FTORT) for Stroke Clients: Normative Standards and Discriminative Validity. In Proceedings of the 14th International Congress of the World Federation of Occupational Therapists, Sydney, Australia, 23 July 2006.

32. Fugl-Meyer, A.R.; Jääskö, L.; Leyman, I.; Olsson, S.; Steglind, S. The Post-Stroke Hemiplegic Patient. 1. A Method for Evaluation of Physical Performance. Scand. J. Rehabil. Med. 1975, 7, 13–31.

33. See, J.; Dodakian, L.; Chou, C.; Chan, V.; McKenzie, A.; Reinkensmeyer, D.J.; Cramer, S.C. A Standardized Approach to the Fugl-Meyer Assessment and Its Implications for Clinical Trials. Neurorehabilit. Neural Repair 2013, 27, 732–741.

34. Nasreddine, Z.S.; Phillips, N.A.; Bédirian, V.; Charbonneau, S.; Whitehead, V.; Collin, I.; Cummings, J.L.; Chertkow, H. The Montreal Cognitive Assessment, MoCA: A Brief Screening Tool For Mild Cognitive Impairment. J. Am. Geriatr. Soc. 2005, 53, 695–699.

35. Friedman, P.J. The Star Cancellation Test in Acute Stroke. Clin. Rehabil. 1992, 6, 23–30.

36. Manly, T.; Dove, A.; Blows, S.; George, M.; Noonan, M.P.; Teasdale, T.W.; Dodds, C.M.; Fish, J.; Warburton, E. Assessment of Unilateral Spatial Neglect: Scoring Star Cancellation Performance from Video Recordings—Method, Reliability, Benefits, and Normative Data. Neuropsychology 2009, 23, 519–528.

37. Yozbatiran, N.; Der-Yeghiaian, L.; Cramer, S.C. A Standardized Approach to Performing the Action Research Arm Test. Neurorehabilit. Neural Repair 2008, 22, 78–90.

38. Mahoney, F.I.; Barthel, D.W. Functional Evaluation: The Barthel Index. Md. State Med. J. 1965, 14, 61–65.

39. Duffy, L.; Gajree, S.; Langhorne, P.; Stott, D.J.; Quinn, T.J. Reliability (Inter-Rater Agreement) of the Barthel Index for Assessment of Stroke Survivors: Systematic Review and Meta-Analysis. Stroke 2013, 44, 462–468.

40. Hsueh, I.-P.; Lin, J.-H.; Jeng, J.-S.; Hsieh, C.-L. Comparison of the Psychometric Characteristics of the Functional Independence Measure, 5 Item Barthel Index, and 10 Item Barthel Index in Patients with Stroke. J. Neurol. Neurosurg. Psychiatry 2002, 73, 188.

41. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2020.

42. Osborne, J.W. Best Practices in Exploratory Factor Analysis; CreateSpace Independent Publishing Platform: Scotts Valley, CA, USA, 2014; ISBN 9781500594343.

43. Revelle, W.R. Psych: Procedures for Personality and Psychological Research; Northwestern University: Evanston, IL, USA, 2020.

44. Wilcox, R.R. Introduction to Robust Estimation and Hypothesis Testing, 4th ed.; Elsevier: Amsterdam, The Netherlands, 2017; ISBN 9780128047330.

45. Holm, S. A Simple Sequentially Rejective Multiple Test Procedure. Scand. J. Stat. 1979, 6, 65–70.

46. Bakeman, R. Recommended Effect Size Statistics for Repeated Measures Designs. Behav. Res. Methods 2005, 37, 379–384.

47. Lakens, D. Calculating and Reporting Effect Sizes to Facilitate Cumulative Science: A Practical Primer for t-Tests and ANOVAs. Front. Psychol. 2013, 4, 863.

48. Kassambara, A. Rstatix: Pipe-Friendly Framework for Basic Statistical Tests. Available online: https://rpkgs.datanovia.com/rstatix/ (accessed on 13 February 2021).

49. Torchiano, M. Effsize: Efficient Effect Size Computation. Available online: https://github.com/mtorchiano/effsize/ (accessed on 5 October 2020).

50. Hinkle, D.; Wiersma, W.; Jurs, S. Applied Statistics for the Behavioral Sciences, 4th ed.; Houghton Mifflin: Boston, MA, USA, 1998.

51. Fletcher, T.D. Psychometric: Applied Psychometric Theory. 2010. Available online: https://cran.r-project.org/package=psychometric (accessed on 8 August 2010).

52. Cohen, J.; Cohen, P.; West, S.G.; Aiken, L.S. Applied Multiple Regression/Correlation Analysis for the Behavioral Sciences, 3rd ed.; Taylor and Francis: London, UK, 2013.

53. Connell, L.; Lincoln, N.; Radford, K. Somatosensory Impairment after Stroke: Frequency of Different Deficits and Their Recovery. Clin. Rehabil. 2008, 22, 758–767.

54. Ballardini, G.; Carlini, G.; Giannoni, P.; Scheidt, R.A.; Nisky, I.; Casadio, M. Tactile-STAR: A Novel Tactile STimulator And Recorder System for Evaluating and Improving Tactile Perception. Front. Neurorobotics 2018, 12, 12.

55. Henriques, D.Y.P.; Flanders, M.; Soechting, J.F. Haptic Synthesis of Shapes and Sequences. J. Neurophysiol. 2004, 91, 1808–1821.

56. Semrau, J.A.; Herter, T.M.; Kenzie, J.M.; Findlater, S.E.; Scott, S.H.; Dukelow, S.P. Robotic Characterization of Ipsilesional Motor Function in Subacute Stroke. Neurorehabilit. Neural Repair 2017, 31, 571–582.

57. Lugtmeijer, S.; Lammers, N.A.; de Haan, E.H.F.; de Leeuw, F.-E.; Kessels, R.P.C. Post-Stroke Working Memory Dysfunction: A Meta-Analysis and Systematic Review. Neuropsychol. Rev. 2021, 31, 202–219.

58. Mokkink, L.B.; de Vet, H.C.W.; Prinsen, C.A.C.; Patrick, D.L.; Alonso, J.; Bouter, L.M.; Terwee, C.B. COSMIN Risk of Bias Checklist for Systematic Reviews of Patient-Reported Outcome Measures. Qual. Life Res. 2018, 27, 1171–1179.

| Characteristic | Healthy Participants (n = 60) | Participants with Stroke (n = 20) | p-Value |
|---|---|---|---|
| Median age in years (IQR) | 62.36 (57.30–66.74) | 59.47 (48.94–65.69) | 0.284 |
| Male, n (%) | 28 (47) | 13 (65) | 0.200 |
| Right-handed, n (%) | 56 (93) | 18 (90) | 0.637 |
| Ischemic stroke, n (%) | | 11 (55) | |
| Median time since stroke in years (IQR) | | 2.65 (1.79–3.36) | |
| Left hemiparesis, n (%) | | 13 (65) | |
| Median MoCA score 0–30 (IQR) | 28 (27–29) | 27 (26–28) | 0.017 * |
| Median FM-UE score 0–66 (IQR) | 66 (64–66) | 63 (48–64) | <0.001 * |
| Median ARAT score 0–57 (IQR) | 57 (57–57) | 57 (36–57) | <0.001 * |
| Median BI score 0–20 (IQR) | 20 (20–20) | 20 (19–20) | <0.001 * |
| Median EmNSA score 0–40 (IQR) | 40 (39–40) | 39 (37–40) | 0.004 * |
| Median st-NSA score 0–22 (IQR) | 21 (21–22) | 21 (20–22) | 0.116 |
| Median PTT average in mA (IQR) | 2.5 (2.2–3.2) | 3.2 (2.1–3.7) | 0.218 |
| Median TDT score 0–25 (IQR) | 18 (16–18) | 15 (11–17) | 0.002 * |
| Median TDT area under the curve (IQR) | 64.83 (52.75–75.17) | 37.41 (13.62–56.16) | <0.001 * |
| Median WPST average error in degrees (IQR) | 6.23 (5.43–8.51) | 9.20 (7.65–11.40) | 0.002 * |
| Median fTORT score 0–42 (IQR) | 41 (41–42) | 40 (38–41) | 0.002 * |
| Median VGR non-dominant/affected arm task score (IQR) | 0.98 (0.73–1.39) | 2.46 (1.62–4.03) | <0.001 * |
| Median VGR dominant/less affected arm task score (IQR) | 0.86 (0.60–1.35) | 1.45 (0.98–1.84) | 0.005 * |
| Median VGR inter-limb task score (IQR) | 0.73 (0.36–1.20) | 1.48 (1.18–3.59) | <0.001 * |
| Median APM non-dominant/affected arm task score (IQR) | 0.78 (0.34–1.27) | 0.96 (0.52–2.09) | 0.075 |

| Assessment | Modality Tested | Scoring: Range, Cut-Off and Interpretation | Reliability | Validity |
|---|---|---|---|---|
| Erasmus modified Nottingham sensory assessment [23] | Somatosensory function: exteroception, proprioception and sensory processing | Ordinal score: 0–40; higher scores = better | Good to excellent [23] | |
| Stereognosis subscale of original Nottingham sensory assessment [24] | Somatosensory function: sensory processing | Ordinal score: 0–22; higher scores = better | Moderate to good [25] | |
| Perceptual threshold of touch [26] | Somatosensory function: exteroception | Smallest detectable stimulus: 0–10 mA; lower scores = better; adult norms available [27] | Good [26] | |
| Tactile discrimination test [28] | Somatosensory function: sensory processing | Number of correct answers: 0–25; area under the curve: <60.25 indicates abnormal performance; higher scores = better | Good [28] | Discriminative validity [28] |
| Wrist position sense test [29] | Somatosensory function: proprioception | Average error: >10.37° indicates abnormal performance; lower scores = better | Good [29] | Discriminative validity [29] |
| Functional tactile object recognition test [30] | Somatosensory function: sensory processing | Ordinal score: 0–42; higher scores = better | | Discriminative validity [31] |
| Fugl-Meyer upper extremity assessment [32] | Motor function | Ordinal score: 0–66; higher scores = better | Excellent [33] | Good convergent validity [33] |
| Montreal cognitive assessment [34] | Cognitive function | Ordinal score: 0–30, <26 indicates mild cognitive deficits; higher scores = better | | Discriminative validity [34] |
| Star cancellation test [35] | Visuospatial neglect | Number of crossed out stars: 0–54, <44 indicates visuospatial neglect; higher scores = better | Good [36] | Moderate convergent validity [35]; discriminative validity [35,36] |
| Action research arm test [37] | Motor activity | Ordinal score: 0–57; higher scores = better | Excellent [37] | Excellent convergent validity [37] |
| Barthel index [38] | Activities of daily life | Ordinal score: 0–20; higher scores = better | Good to excellent [39,40] | Good convergent validity [40] |

| Outcome | Factor Loading: Passive Condition | Factor Loading: Active Condition |
|---|---|---|
| Cross-correlation on X-axis | 0.87 | 0.87 |
| Cross-correlation on Y-axis | 0.92 | 0.87 |
| Dynamic time warping | 0.45 | 0.46 |
| Procrustes analysis | 0.92 | 0.91 |
| % correctly identified | 0.68 | 0.64 |

| Condition | Outcome | Mean (95% CI) of Healthy Participants | Participants with Stroke Outside of 95% CI Indicating Worse Performance, N (%) |
|---|---|---|---|
| Passive condition | Cross-correlation on X-axis | 0.87 (0.86–0.89) | 14 (70) |
| Passive condition | Cross-correlation on Y-axis | 0.87 (0.86–0.88) | 10 (50) |
| Passive condition | Dynamic time warping | 122.67 (110.62–134.72) | 12 (60) |
| Passive condition | Procrustes analysis | 0.25 (0.23–0.27) | 9 (45) |
| Passive condition | % correctly identified | 66.16 (61.06–71.26) | 16 (80) |
| Passive condition | Factor score | 0.00 (−0.25–0.25) | 11 (55) |
| Active condition | Cross-correlation on X-axis | 0.83 (0.81–0.84) | 10 (50) |
| Active condition | Cross-correlation on Y-axis | 0.83 (0.81–0.84) | 13 (65) |
| Active condition | Dynamic time warping | 188.50 (165.11–211.89) | 9 (45) |
| Active condition | Procrustes analysis | 0.32 (0.30–0.33) | 11 (55) |
| Active condition | % correctly identified | 59.68 (54.58–64.79) | 15 (75) |
| Active condition | Factor score | 0.00 (−0.25–0.25) | 11 (55) |
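
The classification rule implied by the table above (a participant with stroke is counted as abnormal when the score falls outside the healthy 95% CI in the direction of worse performance) can be sketched as follows. This is a minimal illustration, not the authors' analysis code: the names `HealthyReference`, `is_worse_than_reference` and `count_abnormal` are hypothetical, the individual scores are invented for the example, and the direction of "worse" for each outcome is an assumption passed in explicitly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HealthyReference:
    """95% CI of the healthy group for one outcome (bounds taken from the table)."""
    lower: float
    upper: float
    higher_is_better: bool  # assumption: must be supplied per outcome


def is_worse_than_reference(value: float, ref: HealthyReference) -> bool:
    """True when the value lies outside the CI on the 'worse' side only."""
    if ref.higher_is_better:
        return value < ref.lower
    return value > ref.upper


def count_abnormal(values: list[float], ref: HealthyReference) -> tuple[int, float]:
    """Return the number and percentage of values flagged as worse."""
    n = sum(is_worse_than_reference(v, ref) for v in values)
    return n, 100.0 * n / len(values)


# Healthy 95% CI for "% correctly identified", passive condition (from the table).
percent_correct_passive = HealthyReference(61.06, 71.26, higher_is_better=True)

# Hypothetical individual scores, invented purely for illustration.
hypothetical_scores = [40.0, 55.0, 62.0, 70.0, 75.0]

n_abnormal, pct_abnormal = count_abnormal(hypothetical_scores, percent_correct_passive)
print(f"{n_abnormal} of {len(hypothetical_scores)} flagged ({pct_abnormal:.0f}%)")
```

Scores above the upper bound are deliberately not flagged, since only deviations in the direction of worse performance are counted.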

| Domain | Measure | Factor Score Passive Condition: rW | Factor Score Passive Condition: 95% CI | Factor Score Active Condition: rW | Factor Score Active Condition: 95% CI |
|---|---|---|---|---|---|
| Somatosensory function | EmNSA-SB total score | 0.38 | (−0.08, 0.70) | 0.40 | (−0.05, 0.72) |
| Somatosensory function | EmNSA total score | 0.40 | (−0.05, 0.72) | 0.49 | (0.06, 0.77) |
| Somatosensory function | st-NSA total score | 0.16 | (−0.31, 0.56) | 0.32 | (−0.14, 0.67) |
| Somatosensory function | PTT average | 0.25 | (−0.21, 0.63) | 0.14 | (−0.32, 0.55) |
| Somatosensory function | TDT total score | 0.52 | (0.10, 0.78) | 0.65 | (0.29, 0.85) |
| Somatosensory function | TDT area under the curve | 0.53 | (0.11, 0.79) | 0.71 | (0.38, 0.87) |
| Somatosensory function | WPST average error | −0.40 | (−0.72, 0.05) | −0.43 | (−0.73, 0.02) |
| Somatosensory function | fTORT total score | 0.37 | (−0.09, 0.70) | 0.47 | (0.03, 0.75) |
| Somatosensory function | APM affected arm task score | −0.20 | (−0.59, 0.27) | −0.37 | (−0.70, 0.09) |
| Motor function | FM-UE total score | 0.37 | (−0.08, 0.70) | 0.54 | (0.13, 0.79) |
| Motor function | VGR affected arm task score | −0.44 | (−0.74, 0.00) | −0.57 | (−0.81, −0.17) |
| Motor function | VGR less affected arm task score | −0.03 | (−0.47, 0.42) | −0.06 | (−0.49, 0.39) |
| Motor function | VGR inter-limb task score | −0.49 | (−0.77, −0.06) | −0.62 | (−0.84, −0.25) |
| Cognitive function | MoCA total score | −0.15 | (−0.56, 0.31) | −0.31 | (−0.66, 0.16) |
| Activities | ARAT total score | 0.30 | (−0.16, 0.66) | 0.46 | (0.03, 0.75) |
| Activities | BI total score | 0.24 | (−0.23, 0.61) | 0.34 | (−0.12, 0.68) |
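
To illustrate how confidence intervals of the kind reported above can be obtained for a correlation coefficient, the following is a minimal sketch using the Fisher z-transformation. Two assumptions are made here that this section does not confirm: that the intervals were computed with Fisher's method, and that n = 20 (the number of participants with stroke). The function name `fisher_ci` is hypothetical, and if rW denotes a robust correlation, Fisher's interval is only an approximation.

```python
import math


def fisher_ci(r: float, n: int, z_crit: float = 1.959964) -> tuple[float, float]:
    """Approximate 95% CI for a correlation via the Fisher z-transformation.

    r      : sample correlation coefficient (must satisfy -1 < r < 1)
    n      : sample size (must be > 3)
    z_crit : standard normal quantile for the desired coverage (1.96 for 95%)
    """
    z = math.atanh(r)               # transform r to the z scale
    se = 1.0 / math.sqrt(n - 3)     # standard error on the z scale
    lower = math.tanh(z - z_crit * se)
    upper = math.tanh(z + z_crit * se)
    return lower, upper


# Example: r = 0.38 with an assumed n = 20.
low, high = fisher_ci(0.38, 20)
print(f"r = 0.38, 95% CI ({low:.2f}, {high:.2f})")
```

Under these assumptions the result rounds to (−0.08, 0.70), which agrees with the first row of the table; with n = 20 the intervals are wide, so only correlations of roughly 0.45 or larger in absolute value exclude zero.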


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Saenen, L.; Orban de Xivry, J.-J.; Verheyden, G. Development and Validation of a Novel Robot-Based Assessment of Upper Limb Sensory Processing in Chronic Stroke. Brain Sci. 2022, 12, 1005. https://doi.org/10.3390/brainsci12081005
