The Role of Augmented Reality Neuronavigation in Transsphenoidal Surgery: A Systematic Review

Abstract

1. Introduction

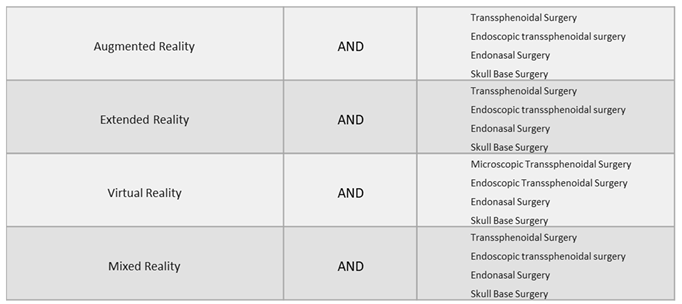

2. Materials and Methods

2.1. Eligibility Criteria

- Full article in English;

- Original peer-reviewed articles reporting the use of AR-assisted transsphenoidal surgery;

- Studies including patients (in vivo), cadaver specimens or experimental models (i.e., phantoms);

- Clinical articles: case reports, case series, prospective and retrospective cohort studies, original articles and technical notes.

2.2. Exclusion Criteria

- Articles not in English;

- Articles reporting surgical simulation only in virtual reality (VR).

2.3. Data Extraction

3. Results

3.1. Patients’ Characteristics and Demographics

3.2. Augmented Reality Techniques

3.3. Landmark Identification

3.4. Accuracy of the Results

3.5. AR Surgeons’ Perspectives

4. Discussion

4.1. Augmented Reality in Neurosurgery

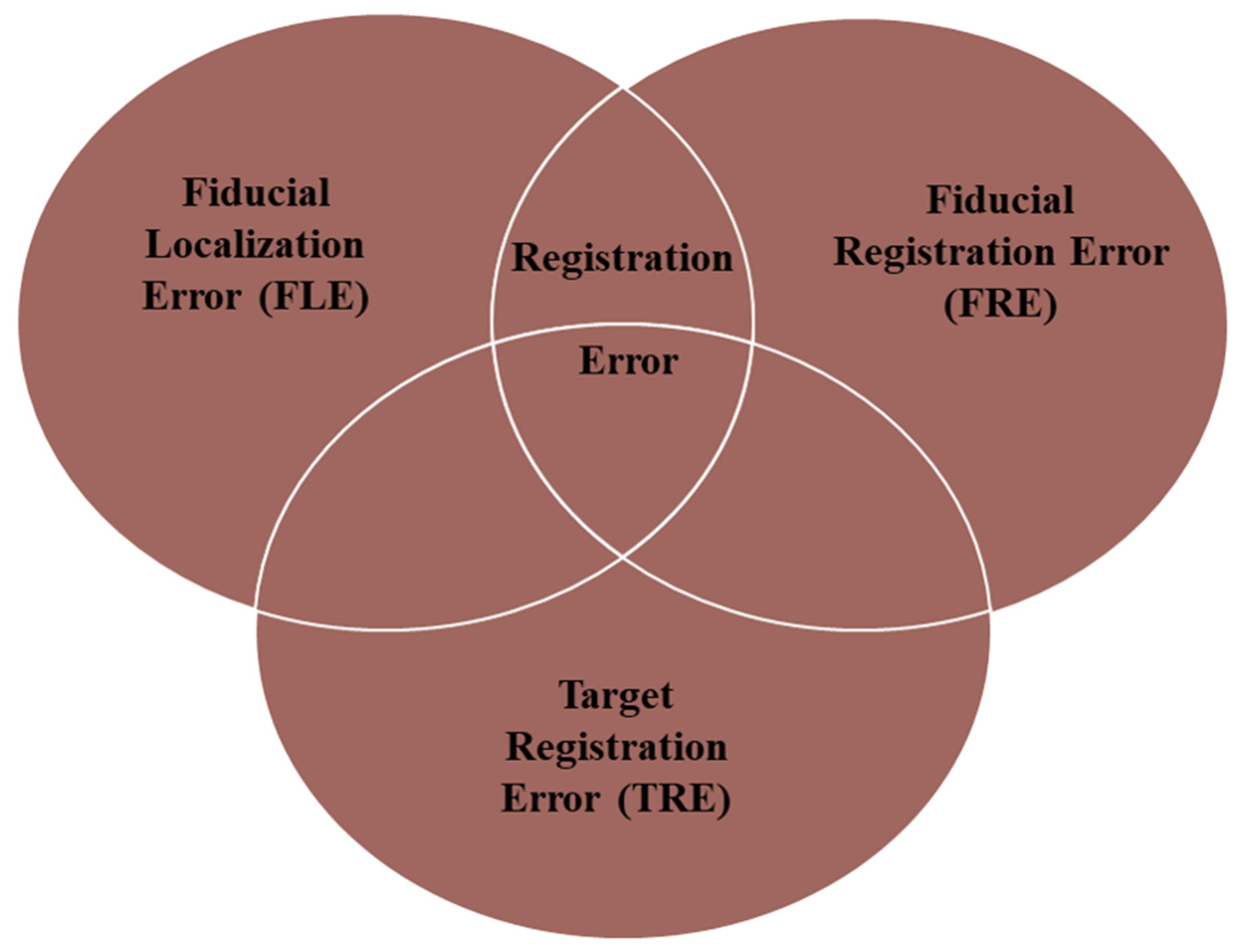

4.1.1. Can Augmented Reality Improve the Accuracy of Image-Guided Surgery?

4.1.2. Augmented Reality-Assisted Neuronavigation: Workflow

4.2. Augmented Reality-Assisted Neuronavigation in Transsphenoidal Surgery (TS)

4.2.1. Augmented Reality’s Application in Microscopic Transsphenoidal Surgery (MTS)

4.2.2. Augmented Reality’s Application in Endoscopic Transsphenoidal Surgery (ETS)

4.3. AR’s Application in ETS (Cadavers or Experimental Models)

4.4. AR’s Application in ETS (In Vivo)

5. Limitations and Future Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Lapeer, R.; Chen, M.S.; Gonzalez, G.; Linney, A.; Alusi, G. Image-enhanced surgical navigation for endoscopic sinus surgery: Evaluating calibration, registration and tracking. Int. J. Med. Robot. 2008, 4, 32–45, Erratum in Int. J. Med. Robot. 2008, 4, 286.

2. Brigham, T.J. Reality Check: Basics of Augmented, Virtual, and Mixed Reality. Med. Ref. Serv. Q. 2017, 36, 171–178.

3. Drake-Brockman, T.F.; Datta, A.; von Ungern-Sternberg, B.S. Patient monitoring with Google Glass: A pilot study of a novel monitoring technology. Paediatr. Anaesth. 2016, 26, 539–546.

4. Kelly, P.J.; Alker, G.J., Jr.; Goerss, S. Computer-assisted stereotactic microsurgery for the treatment of intracranial neoplasms. Neurosurgery 1982, 10, 324–331.

5. Roberts, D.W.; Strohbehn, J.W.; Hatch, J.F.; Murray, W.; Kettenberger, H. A frameless stereotaxic integration of computerized tomographic imaging and the operating microscope. J. Neurosurg. 1986, 65, 545–549.

6. Cavallo, L.M.; Frank, G.; Cappabianca, P.; Solari, D.; Mazzatenta, D.; Villa, A.; Zoli, M.; D’Enza, A.I.; Esposito, F.; Pasquini, E. The endoscopic endonasal approach for the management of craniopharyngiomas: A series of 103 patients. J. Neurosurg. 2014, 121, 100–113.

7. Cavallo, L.M.; Somma, T.; Solari, D.; Iannuzzo, G.; Frio, F.; Baiano, C.; Cappabianca, P. Endoscopic endonasal transsphenoidal surgery: History and evolution. World Neurosurg. 2019, 127, 686–694.

8. Chibbaro, S.; Cornelius, J.F.; Froelich, S.; Tigan, L.; Kehrli, P.; Debry, C.; Romano, A.; Herman, P.; George, B.; Bresson, D. Endoscopic endonasal approach in the management of skull base chordomas—Clinical experience on a large series, technique, outcome, and pitfalls. Neurosurg. Rev. 2014, 37, 217–224; discussion 224–225.

9. De Divitiis, E.; Cappabianca, P.; Cavallo, L.M.; Esposito, F.; de Divitiis, O.; Messina, A. Extended endoscopic transsphenoidal approach for extrasellar craniopharyngiomas. Neurosurgery 2007, 61 (Suppl. S2), 219–227; discussion 228.

10. Jho, H.-D.; Carrau, R.L. Endoscopic endonasal transsphenoidal surgery: Experience with 50 patients. J. Neurosurg. 1997, 87, 44–51.

11. Thavarajasingam, S.G.; Vardanyan, R.; Rad, A.A.; Thavarajasingam, A.; Khachikyan, A.; Mendoza, N.; Nair, R. The use of augmented reality in transsphenoidal surgery: A systematic review. Br. J. Neurosurg. 2022, 36, 457–471.

12. Batista, R.L.; Trarbach, E.B.; Marques, M.D.; Cescato, V.A.; da Silva, G.O.; Herkenhoff, C.G.B.; Cunha-Neto, M.B.; Musolino, N.R. Nonfunctioning pituitary adenoma recurrence and its relationship with sex, size, and hormonal immunohistochemical profile. World Neurosurg. 2018, 120, e241–e246.

13. Sasagawa, Y.; Tachibana, O.; Doai, M.; Hayashi, Y.; Tonami, H.; Iizuka, H.; Nakada, M. Carotid artery protrusion and dehiscence in patients with acromegaly. Pituitary 2016, 19, 482–487.

14. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

15. Kawamata, T.; Iseki, H.; Shibasaki, T.; Hori, T. Endoscopic augmented reality navigation system for endonasal transsphenoidal surgery to treat pituitary tumors: Technical note. Neurosurgery 2002, 50, 1393–1397.

16. Caversaccio, M.; Langlotz, F.; Nolte, L.P.; Häusler, R. Impact of a self-developed planning and self-constructed navigation system on skull base surgery: 10 years experience. Acta Otolaryngol. 2007, 127, 403–407.

17. Prisman, E.; Daly, M.J.; Chan, H.; Siewerdsen, J.H.; Vescan, A.; Irish, J.C. Real-time tracking and virtual endoscopy in cone-beam CT-guided surgery of the sinuses and skull base in a cadaver model. Int. Forum Allergy Rhinol. 2011, 1, 70–77.

18. Dixon, B.J.; Chan, H.; Daly, M.J.; Vescan, A.D.; Witterick, I.J.; Irish, J.C. The effect of augmented real-time image guidance on task workload during endoscopic sinus surgery. Int. Forum Allergy Rhinol. 2012, 2, 405–410.

19. Dixon, B.J.; Daly, M.J.; Chan, H.; Vescan, A.; Witterick, I.J.; Irish, J.C. Augmented real-time navigation with critical structure proximity alerts for endoscopic skull base surgery. Laryngoscope 2014, 124, 853–859.

20. Cabrilo, I.; Sarrafzadeh, A.; Bijlenga, P.; Landis, B.N.; Schaller, K. Augmented reality-assisted skull base surgery. Neurochirurgie 2014, 60, 304–306.

21. Li, L.; Yang, J.; Chu, Y.; Wu, W.; Xue, J.; Liang, P.; Chen, L. A Novel Augmented Reality Navigation System for Endoscopic Sinus and Skull Base Surgery: A Feasibility Study. PLoS ONE 2016, 11, e0146996.

22. Bong, J.H.; Song, H.J.; Oh, Y.; Park, N.; Kim, H.; Park, S. Endoscopic navigation system with extended field of view using augmented reality technology. Int. J. Med. Robot. 2018, 14, e1886.

23. Barber, S.R.; Wong, K.; Kanumuri, V.; Kiringoda, R.; Kempfle, J.; Remenschneider, A.K.; Kozin, E.D.; Lee, D.J. Augmented Reality, Surgical Navigation, and 3D Printing for Transcanal Endoscopic Approach to the Petrous Apex. OTO Open 2018, 2, 2473974X18804492.

24. Carl, B.; Bopp, M.; Voellger, B.; Saß, B.; Nimsky, C. Augmented Reality in Transsphenoidal Surgery. World Neurosurg. 2019, 125, e873–e883.

25. Lai, M.; Skyrman, S.; Shan, C.; Babic, D.; Homan, R.; Edström, E.; Persson, O.; Burström, G.; Elmi-Terander, A.; Hendriks, B.H.W.; et al. Fusion of augmented reality imaging with the endoscopic view for endonasal skull base surgery; a novel application for surgical navigation based on intraoperative cone beam computed tomography and optical tracking. PLoS ONE 2020, 15, e0227312, Erratum in PLoS ONE 2020, 15, e0229454.

26. Zeiger, J.; Costa, A.; Bederson, J.; Shrivastava, R.K.; Iloreta, A.M.C. Use of Mixed Reality Visualization in Endoscopic Endonasal Skull Base Surgery. Oper. Neurosurg. 2020, 19, 43–52.

27. Pennacchietti, V.; Stoelzel, K.; Tietze, A.; Lankes, E.; Schaumann, A.; Uecker, F.C.; Thomale, U.W. First experience with augmented reality neuronavigation in endoscopic assisted midline skull base pathologies in children. Child’s Nerv. Syst. 2021, 37, 1525–1534.

28. Bopp, M.H.A.; Saß, B.; Pojskić, M.; Corr, F.; Grimm, D.; Kemmling, A.; Nimsky, C. Use of Neuronavigation and Augmented Reality in Transsphenoidal Pituitary Adenoma Surgery. J. Clin. Med. 2022, 11, 5590.

29. Goto, Y.; Kawaguchi, A.; Inoue, Y.; Nakamura, Y.; Oyama, Y.; Tomioka, A.; Higuchi, F.; Uno, T.; Shojima, M.; Kin, T.; et al. Efficacy of a Novel Augmented Reality Navigation System Using 3D Computer Graphic Modeling in Endoscopic Transsphenoidal Surgery for Sellar and Parasellar Tumors. Cancers 2023, 15, 2148.

30. Cho, J.; Rahimpour, S.; Cutler, A.; Goodwin, C.R.; Lad, S.P.; Codd, P. Enhancing Reality: A Systematic Review of Augmented Reality in Neuronavigation and Education. World Neurosurg. 2020, 139, 186–195.

31. Kundu, M.; Ng, J.C.; Awuah, W.A.; Huang, H.; Yarlagadda, R.; Mehta, A.; Nansubuga, E.P.; Jiffry, R.; Abdul-Rahman, T.; Ou Yong, B.M.; et al. NeuroVerse: Neurosurgery in the era of Metaverse and other technological breakthroughs. Postgrad. Med. J. 2023, 99, 240–243.

32. Maurer, C.R., Jr.; Fitzpatrick, J.M.; Wang, M.Y.; Galloway, R.L., Jr.; Maciunas, R.J.; Allen, G.S. Registration of head volume images using implantable fiducial markers. IEEE Trans. Med. Imaging 1997, 16, 447–462.

33. Labadie, R.F.; Davis, B.M.; Fitzpatrick, J.M. Image-guided surgery: What is the accuracy? Curr. Opin. Otolaryngol. Head Neck Surg. 2005, 13, 27–31.

34. West, J.B.; Fitzpatrick, J.M.; Toms, S.A.; Maurer, C.R., Jr.; Maciunas, R.J. Fiducial point placement and the accuracy of point-based, rigid body registration. Neurosurgery 2001, 48, 810–816; discussion 816–817.

35. Citardi, M.J.; Batra, P.S. Intraoperative surgical navigation for endoscopic sinus surgery: Rationale and indications. Curr. Opin. Otolaryngol. Head Neck Surg. 2007, 15, 23–27.

36. Citardi, M.J.; Yao, W.; Luong, A. Next-Generation Surgical Navigation Systems in Sinus and Skull Base Surgery. Otolaryngol. Clin. North Am. 2017, 50, 617–632.

37. Snyderman, C.; Zimmer, L.A.; Kassam, A. Sources of registration error with image guidance systems during endoscopic anterior cranial base surgery. Otolaryngol. Head Neck Surg. 2004, 131, 145–149.

38. Cappabianca, P.; Solari, D. The endoscopic endonasal approach for the treatment of recurrent or residual pituitary adenomas: Widening what to see expands what to do? World Neurosurg. 2012, 77, 455–456.

39. Kiya, N.; Dureza, C.; Fukushima, T.; Maroon, J.C. Computer navigational microscope for minimally invasive neurosurgery. Minim Invasive Neurosurg. 1997, 40, 110–115.

40. Nakamura, M.; Tamaki, N.; Tamura, S.; Yamashita, H.; Hara, Y.; Ehara, K. Image-guided microsurgery with the Mehrkoordinaten Manipulator system for cerebral arteriovenous malformations. J. Clin. Neurosci. 2000, 7 (Suppl. S1), 10–13.

41. Brinker, T.; Arango, G.; Kaminsky, J.; Samii, A.; Thorns, U.; Vorkapic, P.; Samii, M. An experimental approach to image guided skull base surgery employing a microscope-based neuronavigation system. Acta Neurochir. 1998, 140, 883–889.

42. Eliashar, R.; Sichel, J.Y.; Gross, M.; Hocwald, E.; Dano, I.; Biron, A.; Ben-Yaacov, A.; Goldfarb, A.; Elidan, J. Image guided navigation system—A new technology for complex endoscopic endonasal surgery. Postgrad. Med. J. 2003, 79, 686–690.

43. Heermann, R.; Schwab, B.; Issing, P.R.; Haupt, C.; Hempel, C.; Lenarz, T. Image-guided surgery of the anterior skull base. Acta Otolaryngol. 2001, 121, 973–978.

| Authors | Study Design | No. of Patients and Sex | Age | Test Subjects | Pathology | Landmark Identification | AR Display Device(s) | AR Technique | Accuracy Results | Surgical Time | Reoperation or Anatomical Variants | Outcomes | AR Surgeons’ Perspective |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Kawamata et al. 2002 [15] | Technical note | 12 | N/S | In vivo | Pituitary adenomas (9), craniopharyngioma (1), Rathke’s cleft cyst (1), chordoma (1) | Internal carotid arteries, optic nerves, sphenoid sinuses, sphenopalatine foramen midline, vidian nerve, pituitary gland | Pentium III-based personal computer | Virtual 3D anatomical images overlaid on live endoscopic images | TRE was less than 2 mm | N/S | N/S | N/S | Surgeons can define the exact real-time surgical orientation |
| Caversaccio et al. 2007 [16] | Retrospective cohort study | 313 | N/S | In vivo | Recurrent polyposis nasi (Widal), chronic sinusitis (181), biopsy (29), frontal sinus recess revision (29), tumor (22), sphenoid sinus (fungi) (18), mucocele (11), choanal atresia (8), CSF leak (7), recurrent cystic fibrosis (6), embolization (1), crista galli cyst (1) | N/S | Color-coded AR images superimposed onto the endoscopic or microscopic view | Virtual 3D anatomical images overlaid on live endoscopic or microscopic images | 1.1–1.8 mm accuracy for position detection | Reduces surgery time by 10–25 min | Recurrent cystic fibrosis (6) | An improvement in the quality of patient outcomes was observed compared to the control group and the literature | N/S |
| Lapeer et al. 2008 [1] | Original article | N/S | N/S | Experimental model | N/S | N/S | AR images superimposed onto the endoscopic view | Virtual 3D anatomical images overlaid on live endoscopic images | The TRE near ROI was 2.3 ± 0.4 mm | N/S | N/S | N/S | N/S |
| Prisman et al. 2011 [17] | Original article | 3 | N/S | Cadaver dissection | N/S | Pituitary gland, turbinate, hiatus semilunaris, infundibulum, ethmoid bullae, agger nasi, frontal recess, fovea ethmoidalis, cribriform, lateral papyracea, sphenoethmoid recess, infraorbital nerve, sella | AR images superimposed onto the endoscopic view | Virtual 3D anatomical images overlaid on live endoscopic images | TRE ≤ 2 mm | N/S | N/S | N/S | AR was defined as a useful tool for all surgical tasks, especially in the spatial understanding of anatomical structures |
| Dixon et al. 2012 [18] | Original article | N/S | N/S | Cadaver dissection | N/S | Internal carotid arteries, optic nerves, pituitary gland, pterygopalatine fossa, periorbital fossa | AR images superimposed onto the endoscopic view | Virtual 3D anatomical images overlaid on live endoscopic images | TRE was 1–2 mm | The system was considered sufficiently accurate by most surgeons | N/S | N/S | Mental demand, time demand, effort and frustration were significantly reduced |
| Dixon et al. 2013 [19] | Randomized-controlled trial plus qualitative analysis | 14 | N/S | Cadaver dissection | N/S | Internal carotid arteries, optic nerves, pituitary gland, orbits | AR images superimposed onto the endoscopic view | Virtual 3D anatomical images overlaid on live endoscopic images | TRE was in line with current clinical practice (< 2 mm) | N/S | N/S | N/S | Mental demand, time demand, effort and frustration were significantly reduced |
| Cabrilo et al. 2014 [20] | Technical note | 1 M | 46 years | In vivo | Recurrent inferior clivus chordoma | Tumor, internal carotid arteries, vertebral arteries | AR images superimposed onto the microscope’s ocular | Virtual 3D anatomical images overlaid on live microscopic images | N/S | N/S | Fibrosis from previous surgery, making it difficult to assess the depth of the tumor | N/S | It reduces the surgeon’s need to mentally match neuronavigation data to the surgical field |
| Li et al. 2016 [21] | Research article | N/S | N/S | Cadaver dissection and experimental model | N/S | Piriform aperture vertex, bilateral supraorbital foramen, turbinate, anterior and posterior clinoid process | AR images superimposed onto the endoscopic view | Virtual 3D anatomical images overlaid on live endoscopic images | TRE was 1.28 ± 0.45 mm | 88.27 ± 20.45 min with the AR neuronavigation system vs. 104.93 ± 24.61 min with the conventional one | N/S | N/S | Mental and time demand, as well as effort and frustration, were significantly reduced |
| Bong et al. 2018 [22] | Original article | N/S | N/S | Experimental model | N/S | N/S | AR images superimposed onto the endoscopic view | Virtual 3D anatomical images overlaid on live endoscopic images | TRE was <1 mm | Total operation time was slightly longer with the AR system (18.26 ± 7.82 s) than in the control group (13.75 ± 6.30 s) | N/S | N/S | Surgeons performed the task with less mental effort |
| Barber et al. 2018 [23] | Short scientific communication | 1 F | 48 years | In vivo | Petrous apex cyst (1) | Internal carotid arteries, jugular bulb, facial nerve, petrous apex cyst, cochlea, semicircular canal, sigmoid sinus | AR app built for Android mobiles | 3D-printed CT scans were imported onto the Stealth3D workstation | The range of error between landmarks was 0.7 mm | N/S | N/S | Patient experienced symptomatic relief one year postoperatively | AR support allows surgeons to avoid critical structures |
| Carl et al. 2019 [24] | Original article | 47 (28 M, 19 F) | 55.25 (range 16–85 years) | In vivo | Recurrent inactive adenoma (14), recurrent Cushing disease (3), acromegaly, kissing carotid arteries (3), Rathke cyst, incomplete pneumatization (3), recurrent Rathke cyst (3), recurrent acromegaly (2), inactive macroadenoma (2), recurrent craniopharyngioma (1), cavernous sinus meningioma (1), Cushing disease, incomplete pneumatization (1), recurrent prolactinoma (1), clival ependymoma (1), recurrent pleomorphic sarcoma (1), clival fibrous tumor (1), craniopharyngioma (1), sphenoidal adenoid cystic carcinoma (1), sphenoidal aspergilloma and intracavernous aneurysm (1), fibrous dysplasia (1), CSF fistula after adenoma resection (1), clival lipoma (1), sphenoidal giant inactive adenoma (1), adenoma, Addison (1), sphenoidal giant inactive adenoma (1), sphenoidal prostate carcinoma metastasis (1) | Internal carotid arteries, skull base, optic nerve | Heads-up microscopic display | AR was established using the heads-up displays integrated into operating microscopes | The TRE in patients with fiducial-based registration was 2.33 ± 1.30 mm. The TRE in the iCT-based registration group was 0.83 ± 0.44 mm | N/S | 28 reoperations | AR was reported to increase intraoperative orientation markedly | AR support increases surgeon security and awareness |
| Lai et al. 2020 [25] | Research article | N/S | N/S | Experimental model | N/S | Two inserts simulating the internal carotid arteries, one insert simulating the optic nerve and one insert to simulate the pituitary gland | AR images superimposed onto the endoscopic view | Virtual 3D anatomical images overlaid on live endoscopic images | TRE was 0.55 | N/S | N/S | N/S | N/S |
| Zeiger et al. 2020 [26] | Retrospective cohort study | 134 (64 F, 70 M) | 56.4 ± 14.6 years | In vivo | Nonsecretory pituitary tumor (53), secretory pituitary tumor (15), meningioma (16), Rathke’s cyst (10), chronic rhinosinusitis (7), chordoma (3), CSF leak (3), epidermoid cyst (3), olfactory neuroblastoma (3), pituitary cyst (2), craniopharyngioma (2), mucocele (2), encephalocele (2), squamous cell carcinoma (2), inverted papilloma (1), juvenile nasopharyngeal angiofibroma (1), acute invasive fungal sinusitis (1), malignant mixed germ cell tumor (1), myxoid chondrosarcoma (1), nasopharyngeal carcinoma (1), plasmacytoma (1), sarcoid (1), pituitary abscess (1), ectopic pituitary tumor (1), sinonasal sarcoma (1) | Internal carotid arteries, vomer, sphenoid, optico-carotid recess | AR images superimposed onto the endoscopic view using EndoSNAP | Virtual 3D anatomical images overlaid on live endoscopic images using EndoSNAP | Surgeons reported that the system was accurate in almost all cases | Mean operative time was 3 h and 48 min ± 2 h and 3 min | N/S | Hospital re-admission (6.7%), hyponatraemia (6.5%), DI (6.5%) | AR support allows surgeons to identify the delicate structures in order to properly preserve them |
| Pennacchietti et al. 2021 [27] | Case series | 11 (6 M, 5 F) | 14.5 ± 2.4 years | In vivo | Craniopharyngioma (1), Rathke’s cysts (3), GH-secreting macroadenoma (1), myxoma (1), germinoma (1), aneurysmal bone cyst (1), CSF leak (1), benign fibrous lesion (1), osteochondromyxoma (1) | Internal carotid arteries, optic nerves, tuberculum sellae, optico-carotid recesses, dorsum sellae | AR images superimposed onto the endoscopic view | Virtual 3D anatomical images overlaid on live endoscopic images | N/S | Mean duration of surgery was 146.7 ± 52.6 min (range: 27–258 min) | Craniopharyngioma (4 surgeries), myxoma (3 surgeries) and 1 recurrent Rathke’s cleft cyst | Complete remissions (8), progressive craniopharyngioma (1), stable diseases (4), panhypopituitarism (3) | AR-assisted neuronavigation was found to be extremely helpful with its approach guidance |
| Bopp et al. 2022 [28] | Prospective cohort study | 84 (41 M, 43 F) | 55.95 ± 17.65 years | In vivo | PitNET (2), not specified (82) | Tumor, internal carotid arteries, chiasm, optic nerves | Heads-up microscopic display | Virtual 3D anatomical images overlaid on live microscopic images | The TRE was 0.76 ± 0.33 mm | The mean surgery time was 69.87 ± 24.71 min. The patient preparation time was 44.13 ± 13.67 min | N/S | Intraoperative CSF leakage (42.86%), major complications (0%), postoperative CSF fistula (3.57%) | AR significantly and reliably facilitated surgical orientation, increasing surgeons’ comfort and patient safety, especially for anatomical variants |
| Goto et al. 2023 [29] | Original article | 15 (8 M, 7 F) | 55.88 (range 16–82 years) | In vivo | Petroclival meningioma (2), NF-PitNET (5), PitNET (1), skull base chondrosarcoma (2), intraorbital cavernous malformation (1), craniopharyngioma (1), chordoma of the craniovertebral junction (1), meningioma of the tuberculum sellae (1), somatotroph-PitNET (1) | Tumor, internal carotid arteries, sphenoid sinus, clival recess, optic canals and carotid prominence, and the sellar floor | 3D images were superimposed onto the second monitor in preparation for the topographic adjustment | Virtual 3D anatomical images overlaid on live endoscopic images using EndoSNAP | N/S | N/S | N/S | N/S | AR support was superior to conventional systems in providing a direct 3D view of the tumor and surrounding structures |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Campisi, B.M.; Costanzo, R.; Gulino, V.; Avallone, C.; Noto, M.; Bonosi, L.; Brunasso, L.; Scalia, G.; Iacopino, D.G.; Maugeri, R. The Role of Augmented Reality Neuronavigation in Transsphenoidal Surgery: A Systematic Review. Brain Sci. 2023, 13, 1695. https://doi.org/10.3390/brainsci13121695