“When You’re Smiling”: How Posed Facial Expressions Affect Visual Recognition of Emotions

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Stimuli

2.3. Procedures

2.4. Behavioral Data Analyses

2.5. fMRI Data Acquisition and Analyses

3. Results

3.1. Behavioral Data

3.2. fMRI Data

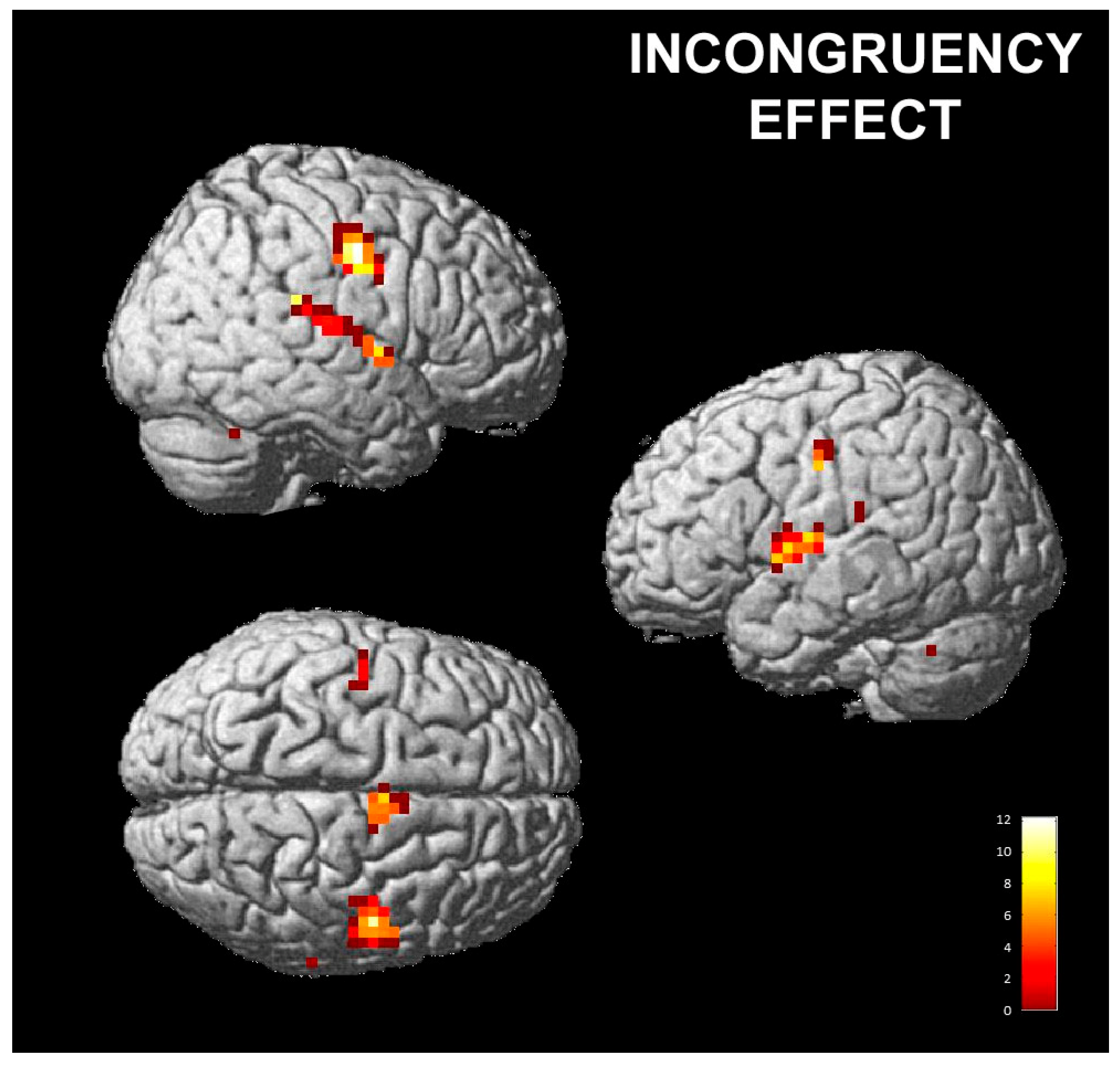

3.2.1. Effect of Posed Emotions on Perceived Emotions

3.2.2. Correlations with Empathy Subscales

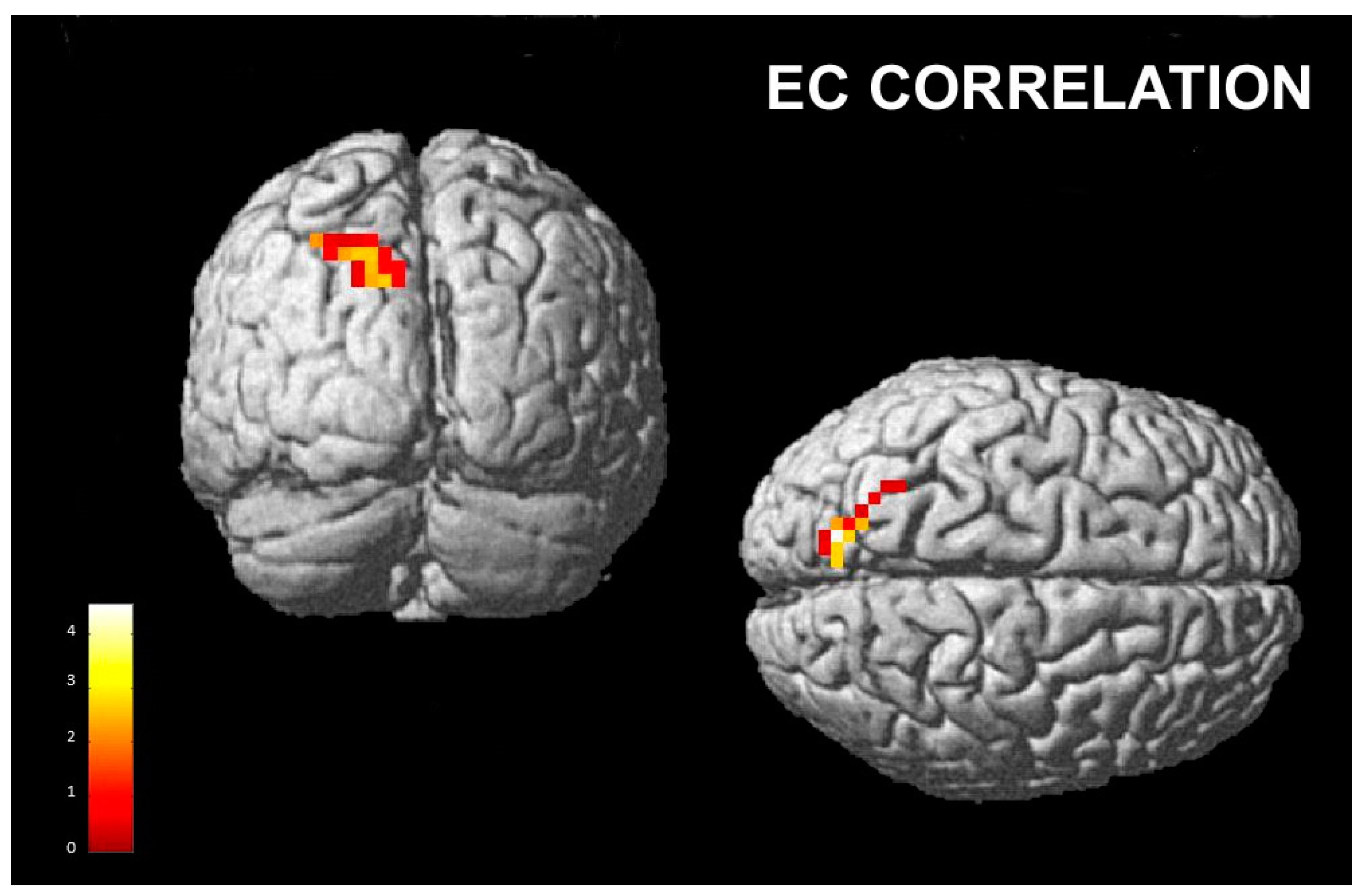

- Empathic Concern Subscale

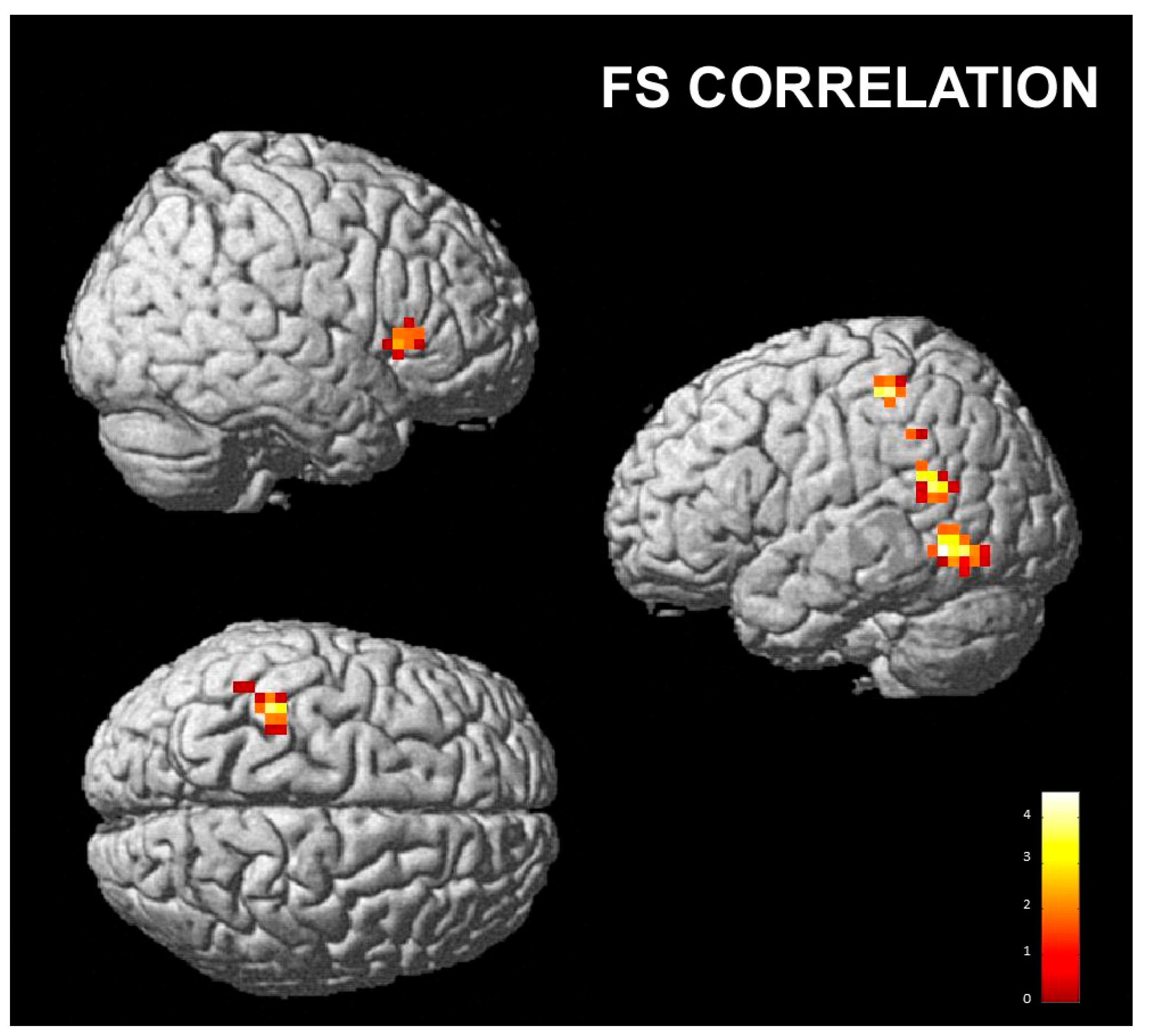

- Fantasy Subscale

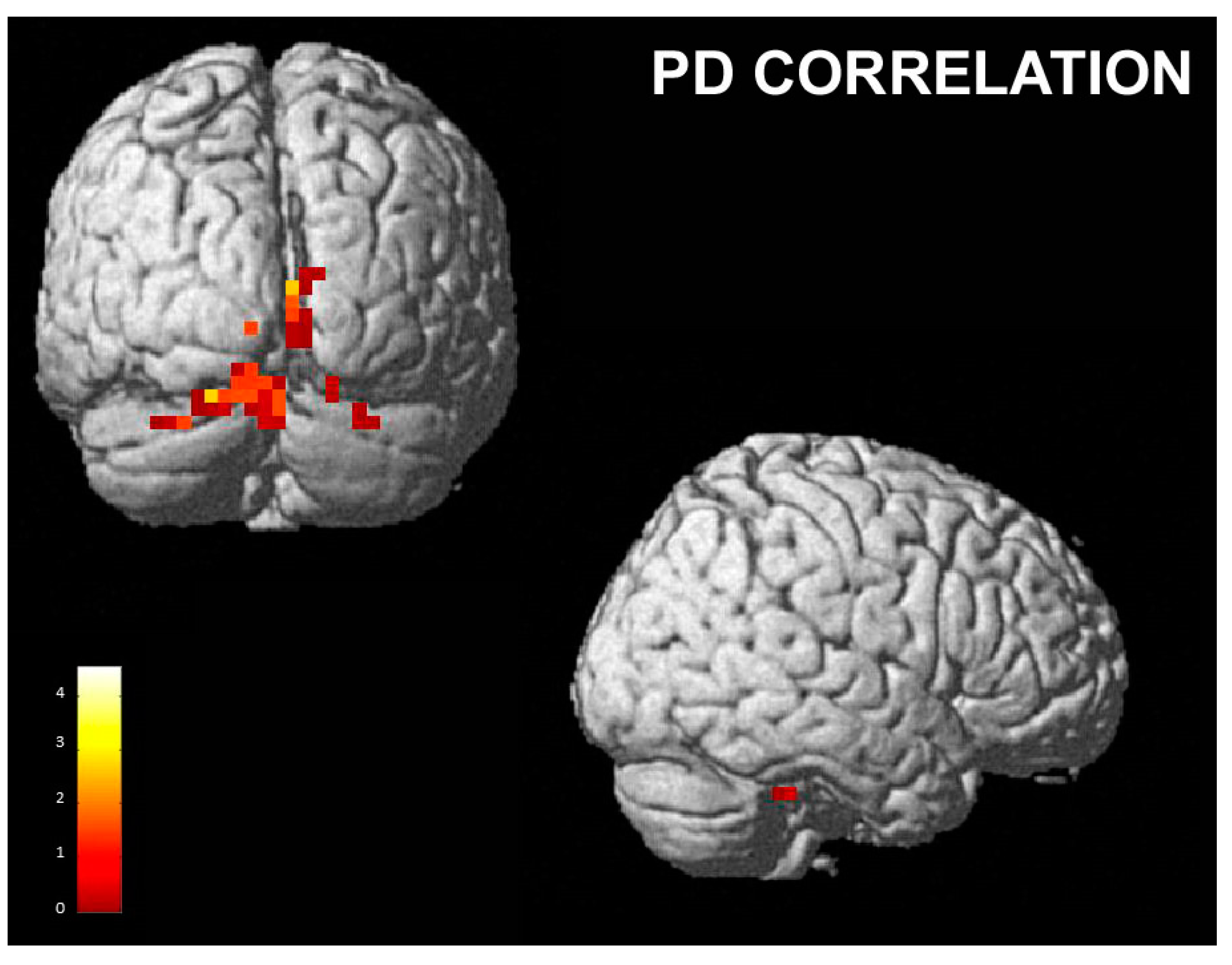

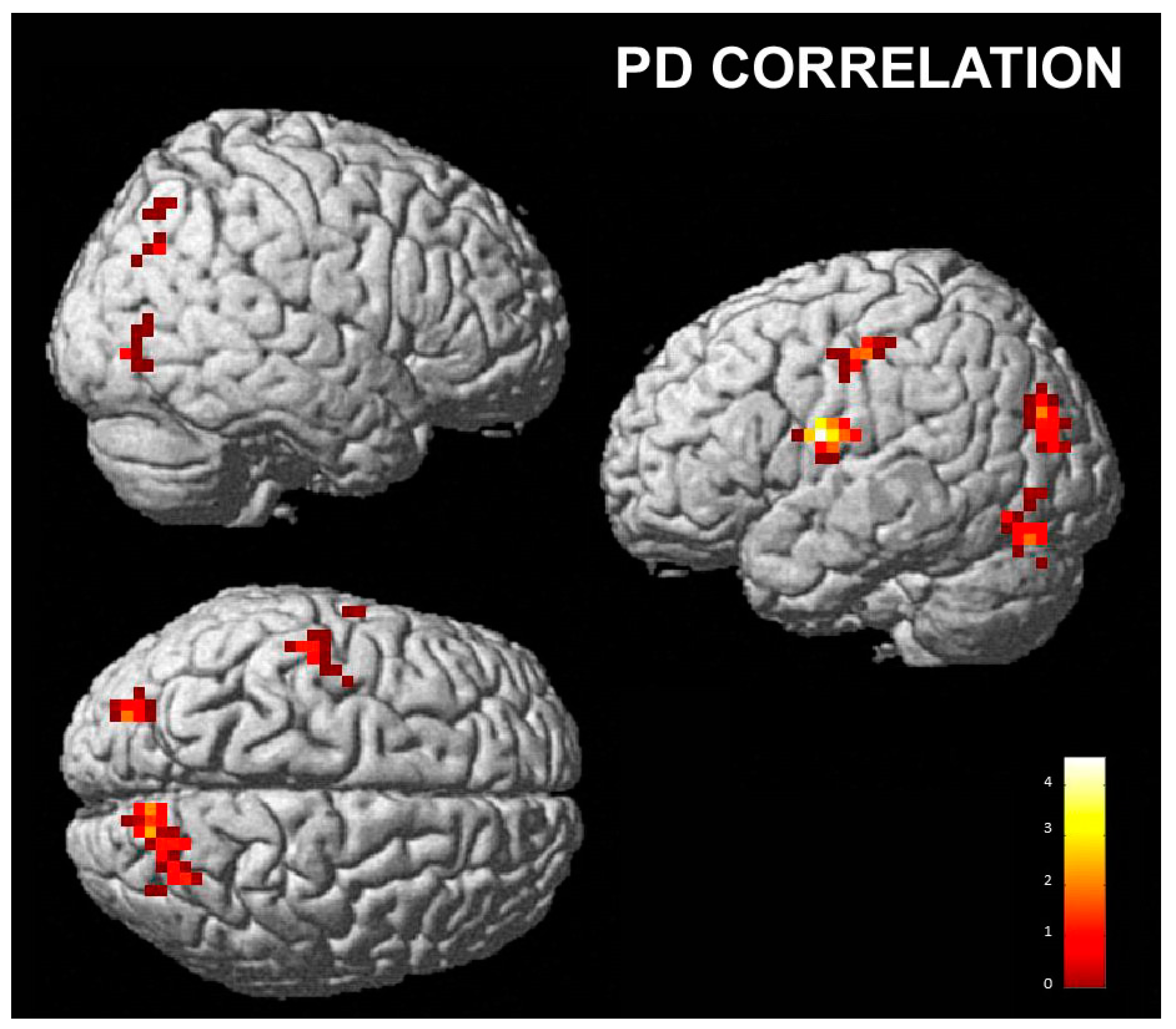

- Personal Distress Subscale

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| fMRI | Functional Magnetic Resonance Imaging |
| IRI | Interpersonal Reactivity Index |
| PT | Perspective Taking |
| FS | Fantasy |
| EC | Empathic Concern |
| PD | Personal Distress |
| EMG | Electromyography |
| Emoticons (posed emotions): | |
| H | Happy |
| D | Disgusted |
| N | Neutral |
| Perceived emotions: | |
| h | Happiness |
| d | Disgust |
| TR | Repetition Time |
| BNC | Bayonet Neill-Concelman |
| AAS | Average Artefact Subtraction |
| TE | Echo Time |
| SPM | Statistical Parametric Mapping |
| FWE | Family-Wise Error |
| AI | Anterior Insula |
| IFG | Inferior Frontal Gyrus |
| PCC | Posterior Cingulate Cortex |
| MNI | Montreal Neurological Institute |
| BA | Brodmann Area |
| l | Left |
| r | Right |
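The abbreviation list refers to average artefact subtraction (AAS), the approach introduced by Allen, Josephs and Turner (2000) for removing the periodic MRI gradient artefact from electrophysiological signals recorded in the scanner, here presumably the facial EMG. The sketch below illustrates only the core idea: each artefact epoch, time-locked to the start of a volume (one TR), is cleaned by subtracting an average template built from its neighbouring epochs. It is not the authors' pipeline. The function name, the input layout (a plain list of samples plus volume-onset indices) and the default 25-epoch window are assumptions for illustration, and practical implementations add steps such as sub-sample epoch alignment and residual filtering.

```python
from typing import List, Sequence


def average_artefact_subtraction(
    signal: Sequence[float],
    onsets: Sequence[int],
    epoch_len: int,
    window: int = 25,
) -> List[float]:
    """Simplified AAS sketch (hypothetical helper, not the study's code).

    signal    -- continuous EMG samples
    onsets    -- sample index at which each fMRI volume (one TR) starts
    epoch_len -- samples per artefact epoch (e.g. TR in s x sampling rate in Hz)
    window    -- number of neighbouring epochs averaged into each template (assumed)
    """
    cleaned = list(signal)
    # Keep only epochs that lie entirely inside the recording.
    valid = [o for o in onsets if o >= 0 and o + epoch_len <= len(signal)]
    epochs = [list(signal[o:o + epoch_len]) for o in valid]
    n = len(epochs)
    for i, onset in enumerate(valid):
        # Choose `window` epochs centred on epoch i, shifted inward at the edges.
        lo = max(0, i - window // 2)
        hi = min(n, lo + window)
        lo = max(0, hi - window)
        neighbours = epochs[lo:hi]
        # Sample-by-sample mean of the neighbouring epochs = artefact template.
        template = [sum(ep[k] for ep in neighbours) / len(neighbours)
                    for k in range(epoch_len)]
        # Subtract the template; samples outside any epoch stay unchanged.
        for k in range(epoch_len):
            cleaned[onset + k] = signal[onset + k] - template[k]
    return cleaned
```

With a purely periodic artefact and no underlying muscle activity (for example ten identical 4-sample epochs), every sample inside the epochs is reduced to zero, while samples that do not belong to a complete epoch are returned unchanged. A sliding window rather than a global average lets the template follow slow drifts in the artefact shape over the session.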


| Brain Areas | BA | Side | Cluster Size (K) | Voxel Level (T) | MNI x | MNI y | MNI z | Talairach x | Talairach y | Talairach z |
|---|---|---|---|---|---|---|---|---|---|---|
| Incongruent vs. Neutral | | | | | | | | | | |
| Precentral gyrus, postcentral gyrus | 4, 6 | r | 53 | 12.19 | 46 | −8 | 38 | 46 | −6 | 35 |
| Cerebellum | | l | 49 | 8.76 | −18 | −60 | −22 | −18 | −59 | −16 |
| | | | | 6.18 | −38 | −56 | −34 | −38 | −56 | −34 |
| Cerebellum | | r | 28 | 8.25 | 22 | −60 | 26 | 22 | −57 | 27 |
| | | | | 7.59 | 30 | −60 | −30 | 30 | −59 | −22 |
| Postcentral gyrus, inferior parietal lobule, superior temporal gyrus | 40, 41, 42 | r | 21 | 8.14 | 62 | −20 | 14 | 61 | −19 | 14 |
| | | | | 7.31 | 62 | −28 | 18 | 61 | −26 | 18 |
| | | | | 6.08 | 54 | −32 | 22 | 53 | −30 | 22 |
| Thalamus | | r | 6 | 7.62 | 14 | −8 | 2 | 14 | −8 | 2 |
| Operculum, precentral gyrus, superior temporal gyrus, insula | 6, 13, 22, 43, 44 | l | 39 | 7.4 | −50 | −12 | 10 | −50 | −11 | 10 |
| | | | | 7.16 | −50 | −4 | 6 | −50 | −4 | 6 |
| | | | | 6.69 | −58 | 4 | 6 | −57 | 4 | 5 |
| Superior temporal gyrus, operculum, insula, precentral gyrus | 6, 13, 22 | r | 27 | 7.3 | 62 | 0 | 2 | 61 | 0 | 2 |
| | | | | 6.88 | 46 | −8 | 10 | 46 | −7 | 10 |
| | | | | 6.15 | 50 | 4 | −2 | 50 | 4 | −2 |
| Cerebellum | | r | 28 | 7.29 | 6 | −4 | 62 | 6 | −1 | 57 |
| | | | | 6.3 | 2 | 4 | 66 | 2 | 7 | 60 |
| Precentral gyrus | 6 | l | 13 | 7.18 | −42 | −12 | 42 | −42 | −10 | 39 |
| Inferior parietal lobule | 40 | l | 2 | 6.16 | −58 | −28 | 22 | −57 | −26 | 22 |
| Cerebellum | | | 1 | 5.94 | 2 | −36 | −2 | 2 | −35 | 0 |

| Brain Areas | BA | Side | Cluster Size (K) | Voxel Level (T) | MNI x | MNI y | MNI z | Talairach x | Talairach y | Talairach z |
|---|---|---|---|---|---|---|---|---|---|---|
| Hh vs. Hd | | | | | | | | | | |
| Precuneus, superior parietal lobule | 7 | l | 34 | 4.53 | −10 | −76 | 38 | −10 | −72 | 39 |
| | | | | 4.38 | −14 | −72 | 50 | −14 | −67 | 49 |
| | | | | 4.14 | −22 | −64 | 38 | −22 | −60 | 38 |

| Brain Areas | BA | Side | Cluster Size (K) | Voxel Level (T) | MNI x | MNI y | MNI z | Talairach x | Talairach y | Talairach z |
|---|---|---|---|---|---|---|---|---|---|---|
| Dh vs. Hh | | | | | | | | | | |
| Anterior insula, inferior frontal gyrus | 45, 47 | r | 16 | 4.9 | 42 | 20 | 2 | 42 | 19 | 1 |
| | | | | 3.79 | 50 | 28 | 6 | 50 | 27 | 4 |
| Postcentral gyrus | 3 | l | 24 | 4.81 | −38 | −36 | 54 | −38 | −32 | 51 |
| Inferior temporal gyrus | 37 | l | 35 | 4.69 | −50 | −64 | −10 | −50 | −62 | −5 |
| Superior temporal gyrus | | l | 36 | 4.48 | −46 | −52 | 18 | −46 | −50 | 19 |
| | | | | 4.39 | −42 | −44 | 34 | −42 | −41 | 33 |
| | | | | 4.1 | −54 | −48 | 22 | −53 | −45 | 23 |
| Caudate nucleus | | r | 21 | 4.24 | 14 | 0 | 10 | 14 | 0 | 9 |
| | | | | 3.77 | 22 | −12 | 18 | 22 | −11 | 17 |

| Brain Areas | BA | Side | Cluster Size (K) | Voxel Level (T) | MNI x | MNI y | MNI z | Talairach x | Talairach y | Talairach z |
|---|---|---|---|---|---|---|---|---|---|---|
| h vs. d | | | | | | | | | | |
| Cerebellum | | r | 30 | 5.76 | 30 | −40 | −30 | 30 | −40 | −23 |
| Cuneus, superior parietal lobule, angular gyrus | 7, 39 | r | 104 | 5.44 | 10 | −76 | 38 | 10 | −72 | 39 |
| | | | | 4.81 | 18 | −68 | 46 | 18 | −64 | 46 |
| | | | | 4.81 | 26 | −64 | 46 | 26 | −60 | 45 |
| Pre- and post-central gyrus | | l | 36 | 4.9 | −50 | −20 | 50 | −50 | −17 | 47 |
| | | | | 4.26 | −34 | −12 | 42 | −34 | −10 | 39 |
| Cuneus, lingual gyrus, PCC, cerebellum | 18, 19 | r | 131 | 4.86 | 6 | −72 | 14 | 6 | −69 | 16 |
| | | | | 4.09 | 18 | −64 | −6 | 18 | −62 | −2 |
| | | | | 3.95 | 18 | −52 | −18 | 18 | −51 | −13 |
| Inferior frontal gyrus, anterior insula, postcentral gyrus | 43 | l | 69 | 4.82 | −38 | 8 | 14 | −38 | 8 | 12 |
| | | | | 4.78 | −54 | −4 | 18 | −53 | −3 | 17 |
| | | | | 4.52 | −46 | −16 | 18 | −46 | −15 | 17 |
| Middle and superior occipital cortex | | l | 39 | 4.6 | −26 | −80 | 22 | −26 | −76 | 24 |
| | | | | 4.34 | −30 | −88 | 14 | −30 | −85 | 17 |
| Middle occipital gyrus, fusiform gyrus | 19 | l | 33 | 4.14 | −46 | −76 | −2 | −46 | −74 | −2 |
| | | | | 4.12 | −38 | −72 | −18 | −38 | −71 | −12 |
| | | | | 4.12 | −30 | −80 | −14 | −30 | −78 | −8 |

| Brain Areas | BA | Side | Cluster Size (K) | Voxel Level (T) | MNI x | MNI y | MNI z | Talairach x | Talairach y | Talairach z |
|---|---|---|---|---|---|---|---|---|---|---|
| Nh vs. Nd | | | | | | | | | | |
| Lingual gyrus, cerebellum | 18 | r | 144 | 4.89 | 22 | −72 | −6 | 22 | −70 | −2 |
| | | | | 4.29 | 30 | −68 | −30 | 30 | −67 | −22 |
| | | | | 4.12 | 22 | −76 | −18 | 22 | −74 | −11 |
| Cerebellum | | r | 52 | 4.65 | 22 | −40 | −30 | 22 | −40 | −23 |
| | | | | 4.03 | 18 | −52 | −18 | 18 | −51 | −13 |
| | | | | 3.74 | 22 | −44 | −14 | 22 | −43 | −10 |
| Lingual gyrus, cerebellum | 18 | l | 56 | 4.31 | −2 | −76 | −22 | −2 | −75 | −15 |
| | | | | 4.06 | −14 | −76 | −26 | −14 | −75 | −18 |
| | | | | 3.87 | −30 | −72 | −30 | −30 | −71 | −22 |
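The Talairach columns in the tables above appear consistent with Matthew Brett's piecewise-linear `mni2tal` approximation: for example, MNI (46, −8, 38) maps to Talairach (46, −6, 35), as in the first row of the Incongruent vs. Neutral table, and MNI (−18, −60, −22) maps to (−18, −59, −16). The available sections of the paper do not state which transform was used, so the sketch below rests on that assumption and is not the authors' code; the helper names are hypothetical. Points at or above z = 0 and points below it use different matrices, each combining a 0.05 rad pitch rotation with zooms of (0.99, 0.97, 0.92) above and (0.99, 0.97, 0.84) below, so z is compressed more strongly in the inferior part of the brain.

```python
from typing import Tuple

Coord = Tuple[float, float, float]


def mni2tal(x: float, y: float, z: float) -> Coord:
    """Approximate MNI -> Talairach mapping (Brett's piecewise-linear transform)."""
    if z >= 0:
        # Upper transform: 0.05 rad pitch rotation with zooms (0.99, 0.97, 0.92).
        return (0.9900 * x,
                0.9688 * y + 0.0460 * z,
                -0.0485 * y + 0.9189 * z)
    # Lower transform: same rotation, stronger z compression (zoom 0.84).
    return (0.9900 * x,
            0.9688 * y + 0.0420 * z,
            -0.0485 * y + 0.8390 * z)


def to_table_units(p: Coord) -> Tuple[int, int, int]:
    """Round to whole millimetres, as reported in the tables (hypothetical helper)."""
    return tuple(int(round(v)) for v in p)


if __name__ == "__main__":
    print(to_table_units(mni2tal(46, -8, 38)))     # (46, -6, 35)
    print(to_table_units(mni2tal(-18, -60, -22)))  # (-18, -59, -16)
```

Because the mapping is only an affine approximation fitted separately above and below z = 0, converted coordinates should be read as approximate anatomical labels rather than exact correspondences between the two atlases.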

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Benuzzi, F.; Ballotta, D.; Casadio, C.; Zanelli, V.; Porro, C.A.; Nichelli, P.F.; Lui, F. “When You’re Smiling”: How Posed Facial Expressions Affect Visual Recognition of Emotions. Brain Sci. 2023, 13, 668. https://doi.org/10.3390/brainsci13040668
