Looming Angry Faces: Preliminary Evidence of Differential Electrophysiological Dynamics for Filtered Stimuli via Low and High Spatial Frequencies

Abstract

1. Introduction

2. Experiment 1

2.1. Method

2.1.1. Participants

2.1.2. Stimuli

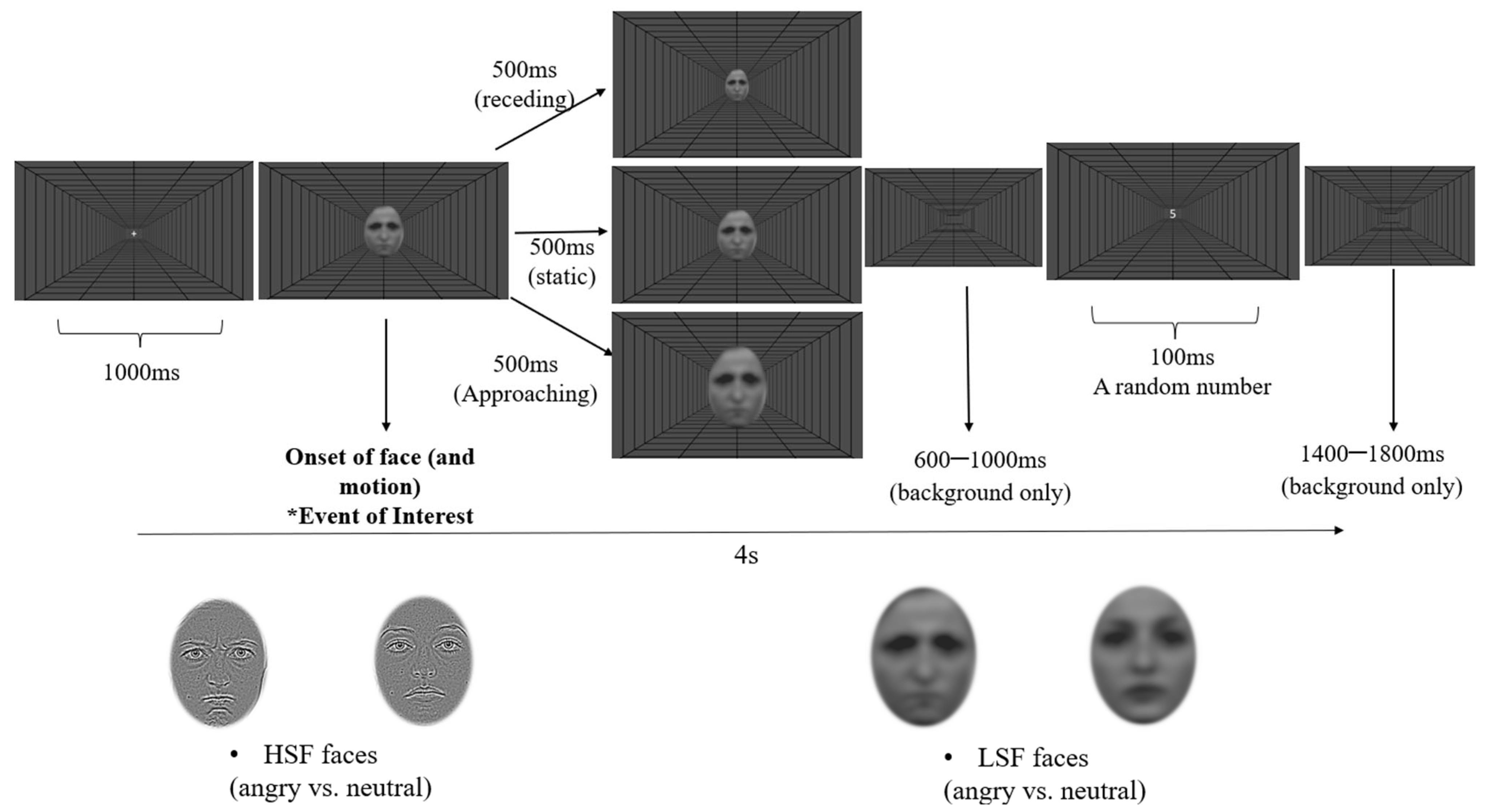

2.1.3. Design and Procedure

2.1.4. Apparatus

2.1.5. EEG Data Processing

2.2. Results

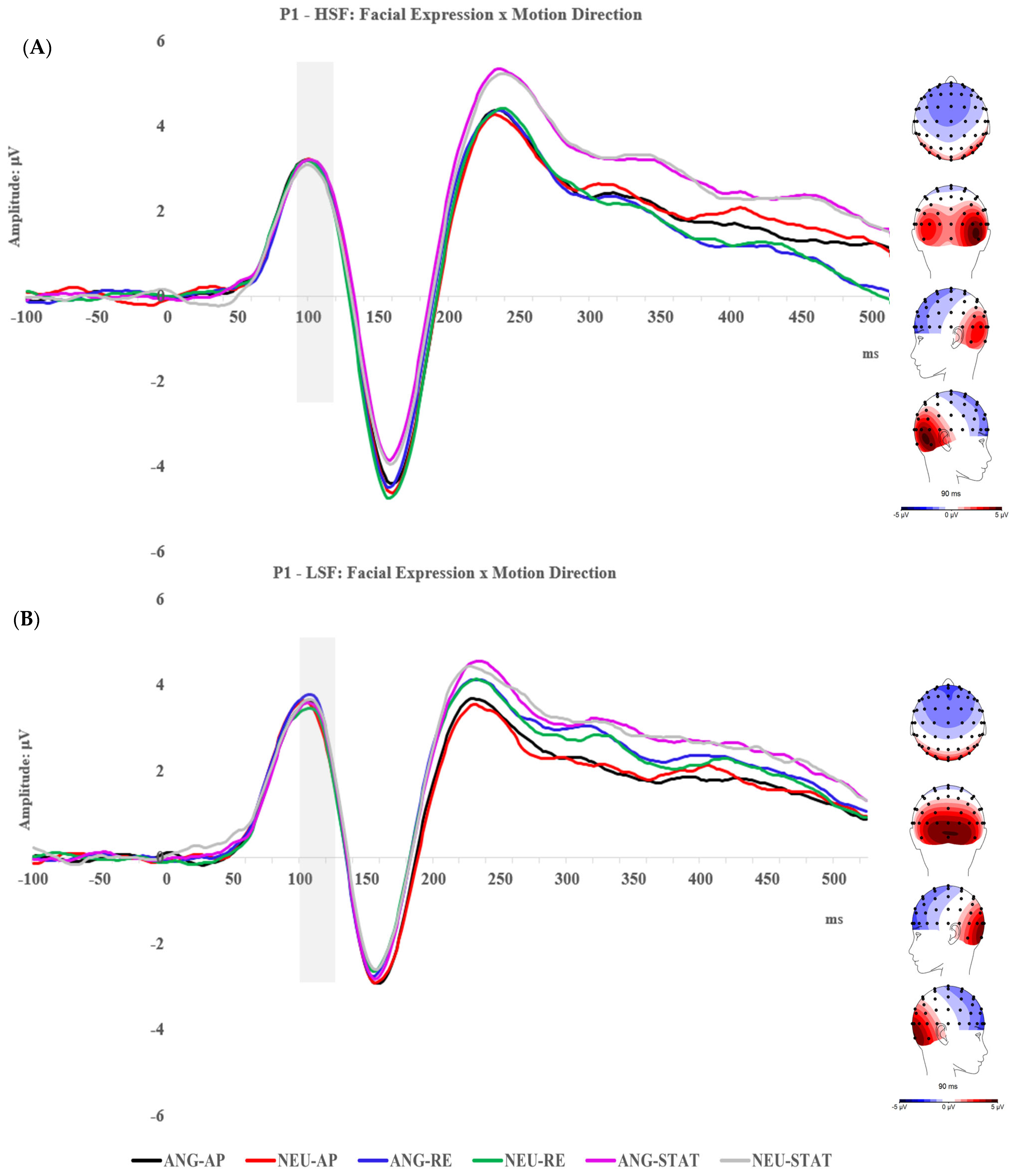

2.2.1. P1 Component

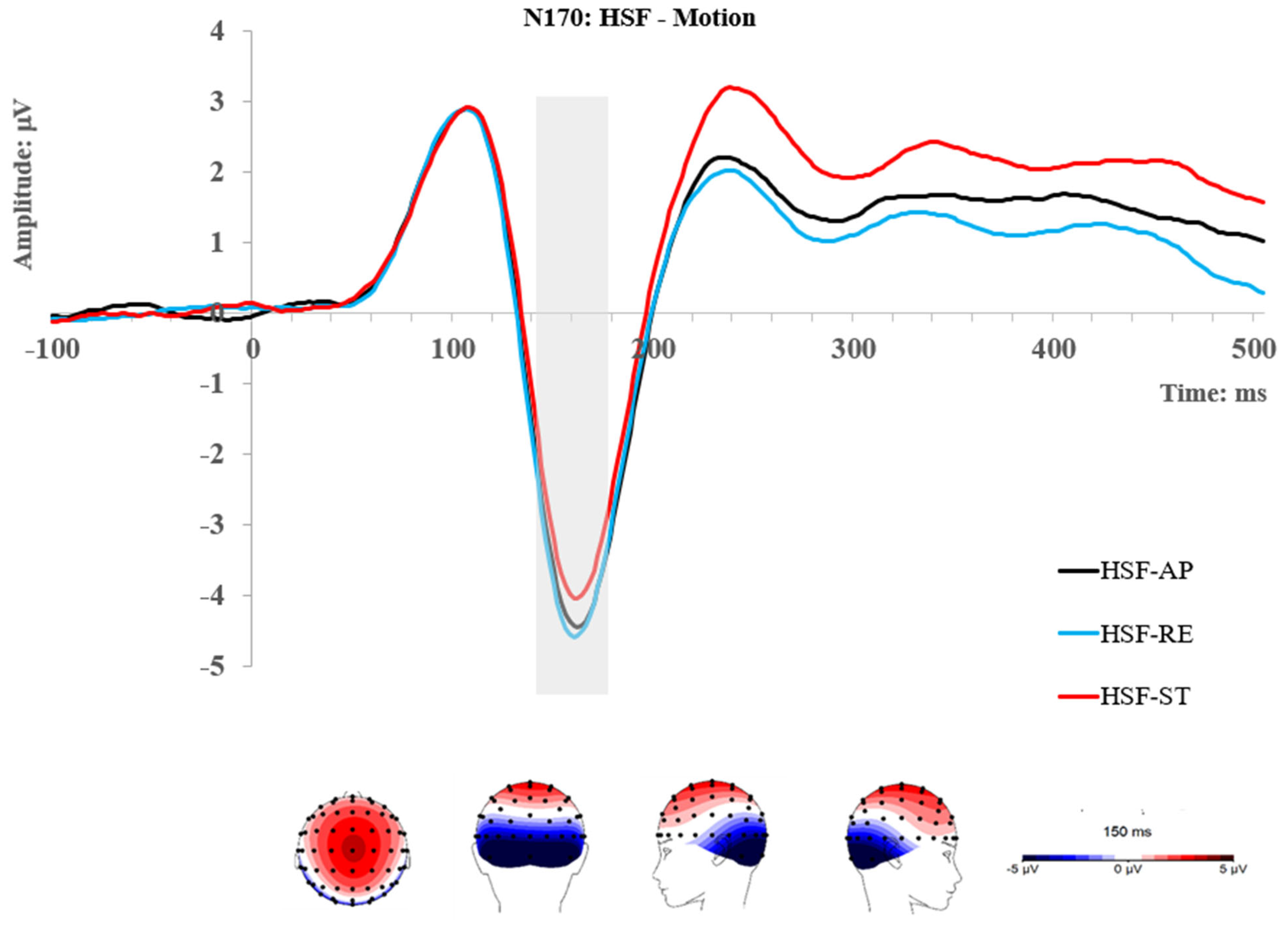

2.2.2. N170 Component

2.2.3. P2 Component

2.3. Experiment 1 Summary

3. Experiment 2

3.1. Method

3.1.1. Participants

3.1.2. Design and Procedure

3.1.3. Apparatus and EEG Data Processing

3.2. Results

3.2.1. P1 Component

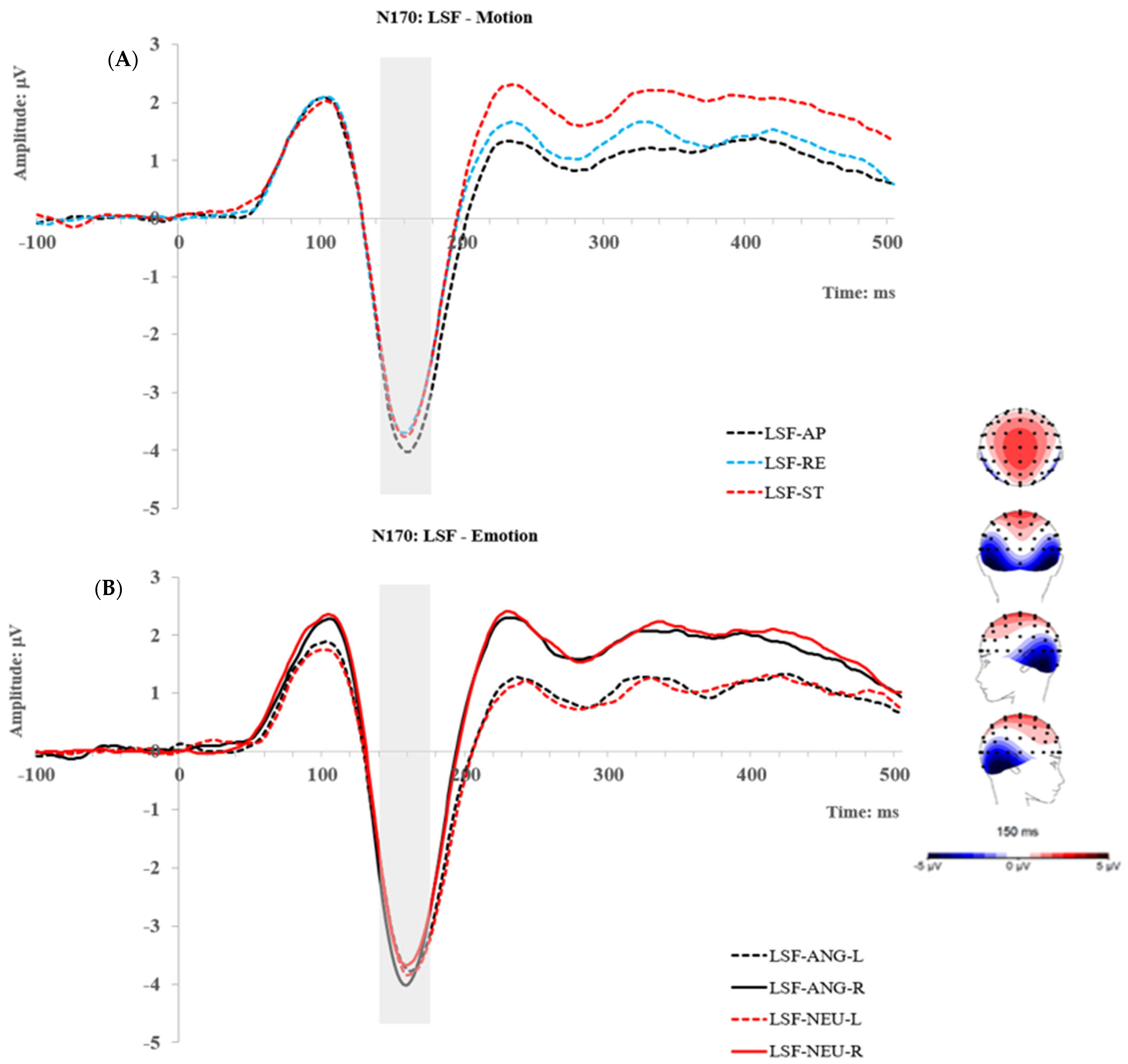

3.2.2. N170 Component

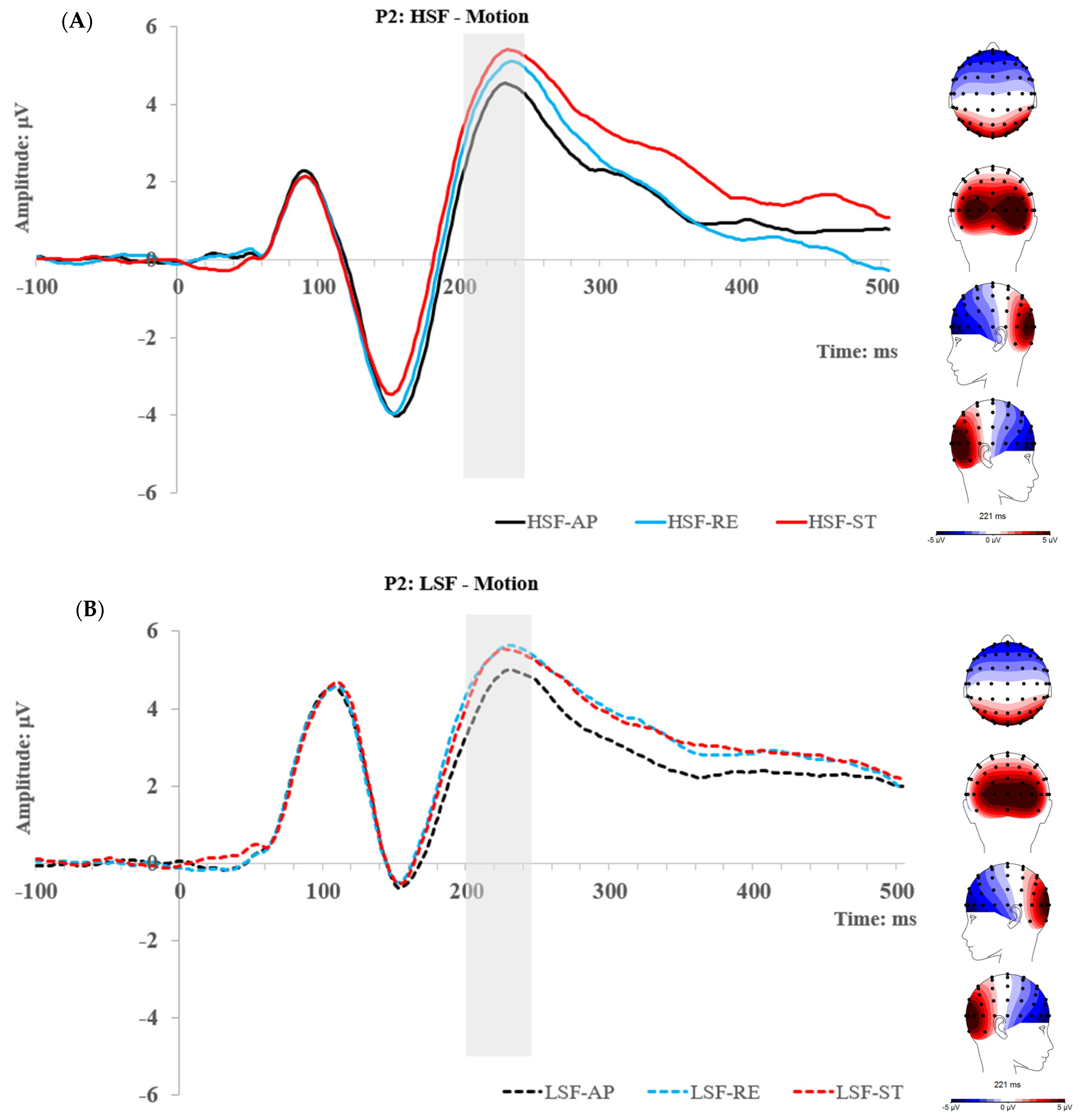

3.2.3. P2 Component

3.3. Experiment 2 Summary

4. Discussion

4.1. The P1 Component

4.2. The N170 Component

4.3. The P2 Component

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | BSF | LSF | HSF |
|---|---|---|---|
| Approaching | 3.809 | 4.246 | 0.466 |
| Receding | 4.236 | 5.105 | 1.230 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, Z.; Moses, E.; Kritikos, A.; Pegna, A.J. Looming Angry Faces: Preliminary Evidence of Differential Electrophysiological Dynamics for Filtered Stimuli via Low and High Spatial Frequencies. Brain Sci. 2024, 14, 98. https://doi.org/10.3390/brainsci14010098
