Abstract

Background: Visual perceptual learning plays a crucial role in shaping our understanding of how the human brain integrates visual cues to construct coherent perceptual experiences. The visual system is continually challenged to integrate a multitude of visual cues, including form and motion, to create a unified representation of the surrounding visual scene. This process involves both the processing of local signals and their integration into a coherent global percept. Over the past several decades, researchers have explored the mechanisms underlying this integration, focusing on concepts such as internal noise and sampling efficiency, which pertain to local and global processing, respectively. Objectives and Methods: In this study, we investigated the influence of visual perceptual learning on non-directional motion processing using dynamic Glass patterns (GPs) and modified Random-Dot Kinematograms (mRDKs). We also explored the mechanisms of learning transfer to different stimuli and tasks. Specifically, we aimed to assess whether visual perceptual learning based on illusory directional motion, triggered by form and motion cues (dynamic GPs), transfers to stimuli that elicit comparable illusory motion, such as mRDKs. Additionally, we examined whether training on form and motion coherence thresholds improves internal noise filtering and sampling efficiency. Results: Our results revealed significant learning effects on the trained task, enhancing the perception of dynamic GPs. Furthermore, there was a substantial learning transfer to the non-trained stimulus (mRDKs) and partial transfer to a different task. The data also showed differences in coherence thresholds between dynamic GPs and mRDKs, with GPs showing lower coherence thresholds than mRDKs. 
Finally, an interaction between visual stimulus type and session for sampling efficiency revealed that the effect of training session on participants’ performance varied depending on the type of visual stimulus, with dynamic GPs being influenced differently than mRDKs. Conclusion: These findings highlight the complexity of perceptual learning and suggest that the transfer of learning effects may be influenced by the specific characteristics of both the training stimuli and tasks, providing valuable insights for future research in visual processing.

1. Introduction

Visual perceptual learning is a behavioral method to study long-lasting perceptual improvements resulting from training [1,2,3,4,5]. Practice induces perceptual improvements across different visual domains, such as motion and form perception [3,6], contrast sensitivity [7], and more. The long-term effects of visual perceptual learning are linked to brain plasticity, which refers to the brain’s ability to modify its structure and function [8]. Visual perceptual learning often shows specific improvements tied to trained features such as location and orientation. This specificity has led to contrasting theories in the field: one suggesting localized changes in specific brain regions, and another proposing enhanced interpretation in higher-level areas [9,10]. These opposing views have shaped research for decades. Recent studies have expanded our understanding of these mechanisms. For instance, Ahissar and Hochstein [11,12] proposed a top-down learning process that begins in higher cortical levels and moves downstream to engage the neurons most relevant for stimulus encoding. Simpler tasks are processed at these higher levels, allowing for broader feature transfer, while more complex tasks rely on lower-level cortical areas where neurons are more finely tuned. Furthermore, Jeter et al. [13] found that task precision, rather than difficulty, influences the level of transfer in perceptual learning, suggesting that improvements are more specific to the conditions under which training occurs. The structure and length of training sessions also play a role in generalization, with fewer sessions increasing the likelihood of transfer [14,15]. McGovern et al. [16] contributed to this research topic by demonstrating that perceptual learning can facilitate transfer across different tasks. 
Their research showed a significant transfer among orientation discrimination, curvature discrimination, and global form tasks, indicating that perceptual learning improvements are not confined to a single task, but can extend across various perceptual domains and tasks. This understanding aligns with the idea that visual perceptual learning involves multiple brain networks [17,18], making it plausible for learning effects to transfer to visual stimuli with similar characteristics. Given this complexity, it is reasonable to suggest that the improvements gained through visual perceptual learning could extend to other stimuli that activate similar neural pathways.

Visual perceptual learning and learning transfer effects have also been used to study the mechanisms underlying form–motion integration and to compare it with directional motion [3]. This is exemplified by our previous study [3], where we conducted an online visual perceptual learning experiment to investigate whether non-directional motion evoked by dynamic Glass patterns (GPs) shares the same processing mechanisms as directional motion evoked by Random-Dot Kinematograms (RDKs). Dynamic GPs are visual stimuli formed by dot pairs called dipoles, and based on their orientation, they can create different global shapes such as translational (i.e., oriented), circular, radial, spiral, etc. [19]. GPs become dynamic when composed of multiple independent frames shown in rapid succession. The frames are independent because dipoles do not follow a specific trajectory throughout the frames (i.e., no dipole-to-dipole correspondence across frames) but maintain a constant orientation, creating an illusory directional motion [3,20,21,22,23,24,25,26,27]. This type of motion has been called non-directional motion [3,28]. Conversely, RDKs are composed of single dots that follow a specific trajectory throughout the frames. In our previous study [3], we conducted a ten-session experiment with two distinct groups of participants: one group trained using dynamic GPs, while the other group trained with RDKs. Participants performed a two-interval forced-choice (2IFC) task, where they had to detect which of the two stimuli presented to the screen was the oriented/directional pattern. The results indicated that visual perceptual learning is specific to the trained stimulus, suggesting that directional and non-directional motion may rely on different mechanisms [3]. 
Despite the growing evidence supporting an interconnected system for motion and form processing, a fundamental question remains: how do these neural networks integrate local form and motion cues to construct a global and coherent perceptual experience? A substantial body of psychophysical research has highlighted the potential of internal noise and sampling efficiency as key parameters in elucidating the interplay between local and global processing of form and motion information [24,29,30,31,32]. Pelli [33] was one of the pioneers of this concept in the field of psychology and psychophysics. Simplifying his model, the author suggested that when we perceive something, our brain adds a consistent amount of internal noise to the sensory input and then processes it to make a decision. By testing individuals’ ability to see against different levels of external noise, we can learn about their sensitivity to that specific visual property tested and how efficiently their brains process that visual information. Within the domain of form and motion perception, internal noise refers to the observer’s ability to detect the inherent variability (variance) in the orientation or motion direction of individual elements within a visual pattern in the absence of any external noise [24,34,35]. Conversely, sampling efficiency reflects the visual system’s capacity to integrate visual information from various spatial and temporal locations into a unified global percept [34]. Both internal noise and sampling efficiency can be estimated using equivalent noise analysis [32,34,35,36,37]. Another prevalent approach in psychophysical research to study global motion (and form) perception has been the use of coherence tasks introduced by Newsome and colleagues [38]. 
Coherence tasks involve measuring the number of coherently moving or oriented elements (i.e., same direction or orientation) that can be replaced by randomly moving or oriented elements while still allowing for reliable discrimination of the overall direction or orientation, for example, left/right or horizontal/vertical. High motion or orientation coherence thresholds reflect poor global pooling of motion or orientation signals across space [34].

Building on these considerations, while visual perceptual learning has shed light on the mechanisms behind long-lasting perceptual improvements, several critical questions remain unresolved. Our study addresses three key research questions: (i) Does improvement in global form–motion integration transfer to non-directional motion in mRDK? (ii) Does the improvement rely on more efficient noise filtering and/or more efficient sampling? (iii) Are mRDKs easier to discriminate than GPs, even when both stimuli convey non-directional motion? These questions form the foundation of our investigation and are explored in detail in the following sections.

1.1. Does Improvement in Global Form–Motion Integration Transfer to Non-Directional Motion from mRDK?

In the current study, we focused on visual perceptual learning in visual local and global processing through two classes of visual stimuli: dynamic translational GPs and a modified version of RDKs that we call modified Random-Dot Kinematograms (mRDKs). To recreate non-directional motion with RDKs, we developed the mRDK so that the individual dots in the coherent portion move either upwards or downwards, while the remaining ones move randomly. Each coherent dot is randomly assigned an upward or downward direction for each frame, resulting in a perceived motion of the dots along the vertical axis (see Video S1b in the Supplementary Material). However, it is not possible to discern an upward or downward trajectory (for further details, please see Section 2.3). Although we attempted to recreate non-directional motion with the mRDK, the main difference between dynamic GPs and the mRDK is that the former is composed of dipoles while the latter is made of single dots. This means that, unlike mRDKs, dynamic GPs involve both motion and form processing elicited by dipole orientation. Visual perceptual learning allowed us to monitor participants’ performance changes on a task involving dynamic GPs to evaluate form–motion global processing. This methodology enabled us to investigate transfer effects onto a structurally different yet perceptually similar visual stimulus (i.e., mRDK). We hypothesized that visual perceptual learning based on a coherence task with dynamic translational GP would cause a gradual decrease in participants’ coherence thresholds, implying that observers enhance their sensitivity to integrate form and motion cues at a global level [3]. We also hypothesized that dynamic translational GPs and mRDKs, being characterized by the same type of non-directional motion, would be processed similarly. Therefore, we expected a learning transfer to the non-trained stimulus.

1.2. Does the Improvement of Global Motion–Form Integration Rely upon More Efficient Noise Filtering and/or More Efficient Sampling?

We also aimed to address the following question: what aspects of visual processing are enhanced by learning based on a global coherence discrimination task? Dosher and Lu [39] investigated the mechanisms underlying visual perceptual learning with the aim of determining if distinct mechanisms enhance performance in noisy and clear displays. Two mechanisms—external noise filtering and stimulus amplification—are processes involved in how the human perceptual system adapts to different environments. Their existence has been identified and examined by the authors using a perceptual template model, with specific manipulations of external noise. These mechanisms serve distinct functions: external-noise filtering is crucial in noisy settings, while stimulus amplification is essential in clear environments. Visual perceptual learning associated with these mechanisms reflects improvements in the enhancement of stimulus information quality through external-noise filtering, and/or overcoming intrinsic processing limitations of the human observer through stimulus amplification. The visual stimuli the authors adopted were a simple Gabor and a Gabor with a random noise mask. Participants’ task was to discriminate two different orientations, ±8° from vertical. The results revealed an asymmetric transfer of learning. Training with clear displays improved performance in both clear and noisy environments, suggesting that learning increases the stimulus signal or noise filtering, while training with noisy displays did not benefit performance with clear displays, suggesting that, in this case, learning reduced only the impact of external noise (external noise exclusion) [40]. Using an equivalent noise approach, we tested whether visual perceptual learning could transfer to a task that requires noise filtering and/or sampling efficiency (integration of local signals). 
According to this approach, all the individual elements (dots or dipoles) can be defined as signals, as they contribute to the global motion/form. This is achieved by assigning the direction/orientation of dots/dipoles based on a Gaussian distribution around a given mean value. The variability in direction/orientation is introduced by varying the standard deviation of the Gaussian distribution [35,41,42,43]. By using an orientation/direction discrimination with variable standard deviation of the Gaussian distribution, we assessed whether visual perceptual learning of dynamic GPs produced changes related to noise filtering at a local level, or sampling efficiency at a global level. It is important to note that in the equivalent noise approach, internal noise is considered even when dealing with external noise, as the standard deviation of direction/orientation affects the internal representation of the stimulus.

1.3. Are RDKs Easier to Discriminate than GPs Even When Both Stimuli Convey Non-Directional Motion?

Finally, some studies have shown that RDKs are easier to discriminate than dynamic GPs [3,19,44]. Our final objective was to determine whether this difference is maintained when non-directional motion is introduced into RDKs.

2. Materials and Methods

2.1. Participants

A total of twelve naïve participants (mean age: 21 years; SD: 4.427; all female) took part in this experiment. However, two participants were excluded from the final analysis because they showed no perceptual learning. All participants had normal or corrected-to-normal vision, and binocular viewing was employed throughout the experiment. This study was conducted in accordance with the tenets of the World Medical Association Declaration of Helsinki [45]. Ethical approval for this study was obtained from the Department of Psychology Ethics Committee at the University of Padova (Protocol number: 4764). Written informed consent was obtained from all participants before the first session.

2.2. Apparatus

Visual stimuli were presented on a 23.8-inch HP Elite E240 monitor (Coimbra, Portugal), with a spatial resolution of 1920 × 1080 pixels and a refresh rate of 60 Hz. Each pixel subtended 1.65 arcminutes. Participants were seated in a dark room at a viewing distance of 57 cm from the screen. Stimulus presentation was controlled using MATLAB R2021b with Psychtoolbox-3 [46,47,48].
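As a quick check on the reported pixel size, the visual angle of one pixel can be recomputed from the monitor geometry. The sketch below is illustrative only: it assumes square pixels and that the nominal 23.8-inch diagonal equals the active display area, and the function name is hypothetical.

```python
import math

def pixel_size_arcmin(diagonal_in, res_x, res_y, distance_cm):
    """Visual angle subtended by one pixel, in arcminutes.

    Assumes square pixels and that the nominal diagonal is the active area.
    """
    diagonal_px = math.hypot(res_x, res_y)          # diagonal length in pixels
    pitch_cm = diagonal_in * 2.54 / diagonal_px     # physical size of one pixel
    angle_rad = 2.0 * math.atan(pitch_cm / (2.0 * distance_cm))
    return math.degrees(angle_rad) * 60.0

# Values taken from the Apparatus section.
px = pixel_size_arcmin(23.8, 1920, 1080, 57)
print(f"{px:.3f} arcmin per pixel")
```

Under these assumptions the result falls within about 0.01 arcminutes of the 1.65 arcminutes stated above.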

2.3. Stimuli

The visual stimuli consisted of dynamic Glass patterns (GPs) and modified Random-Dot Kinematograms (mRDKs). Dynamic GPs consisted of 250 white dipoles, while mRDKs consisted of 500 white dots, each 0.083 deg wide, presented on a gray background. Dipoles and dots were randomly positioned within a circular window with inner and outer radii of 0.2° and 5°, respectively. The distance between the centers of adjacent dots within each dipole was 0.18 degrees [26,49,50]. In the mRDKs, a proportion of dots moved vertically, either upward or downward, while the other dots moved in random directions. The step size for each dot in the mRDKs was 0.18 deg. Specifically, the signal dots were randomly reassigned positions throughout the frames at regular intervals, maintaining a constant vertical directional axis. This produced a flickering texture that created the perception of motion along the vertical axis, without following a specific trajectory. Similarly, in the dynamic GPs, the positions of the signal and noise dipoles (i.e., randomly oriented dipoles) continuously changed throughout the frames, while their orientation remained constant. The frames composing the dynamic GPs and mRDKs were sequentially presented at a rate of 10 Hz, with each frame lasting approximately 0.1 s (see Video S1a,b in the Supplementary Material).
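The construction of a dynamic GP can be sketched in a few lines. The experiment itself used MATLAB with Psychtoolbox-3; the Python below is only an illustration of the logic described above, and the function names are hypothetical. It assumes that dipole centers (rather than individual dots) are placed uniformly within the annulus and that signal dipoles are vertical.

```python
import math
import random

def random_point_in_annulus(r_in, r_out, rng):
    """Sample an (x, y) position (deg) uniformly over the annulus area."""
    r = math.sqrt(rng.uniform(r_in ** 2, r_out ** 2))
    theta = rng.uniform(0.0, 2.0 * math.pi)
    return r * math.cos(theta), r * math.sin(theta)

def glass_pattern_frame(coherence, n_dipoles=250, separation=0.18,
                        r_in=0.2, r_out=5.0, rng=None):
    """One frame of a vertical translational GP, as a list of dot pairs."""
    rng = rng or random.Random()
    n_signal = round(coherence * n_dipoles)
    frame = []
    for i in range(n_dipoles):
        cx, cy = random_point_in_annulus(r_in, r_out, rng)
        # Signal dipoles are vertical; noise dipoles get a random orientation.
        angle = math.pi / 2 if i < n_signal else rng.uniform(0.0, math.pi)
        dx = 0.5 * separation * math.cos(angle)
        dy = 0.5 * separation * math.sin(angle)
        frame.append(((cx - dx, cy - dy), (cx + dx, cy + dy)))
    return frame

def dynamic_glass_pattern(coherence, n_frames, seed=0):
    """Independent frames: positions are redrawn, orientation is preserved."""
    rng = random.Random(seed)
    return [glass_pattern_frame(coherence, rng=rng) for _ in range(n_frames)]
```

Because every frame redraws all positions, there is no dipole-to-dipole correspondence across frames, which is what makes the resulting motion non-directional.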

3. Procedure

This study comprised ten sessions, including a 2 h pre-test, eight training sessions of approximately 40 min each [3,51,52], and a 2 h post-test session. Participants completed one training session per day, with a maximum interval of three days between sessions. Both the pre-test and post-test involved four tasks, each consisting of 300 trials: a coherence task with dynamic GPs, a coherence task with mRDKs, an equivalent noise task with dynamic GPs, and an equivalent noise task with mRDKs. The order of tasks was counterbalanced, except for the post-test, which maintained the same task order as the pre-test. The initial coherence of the stimuli was set at 50%.

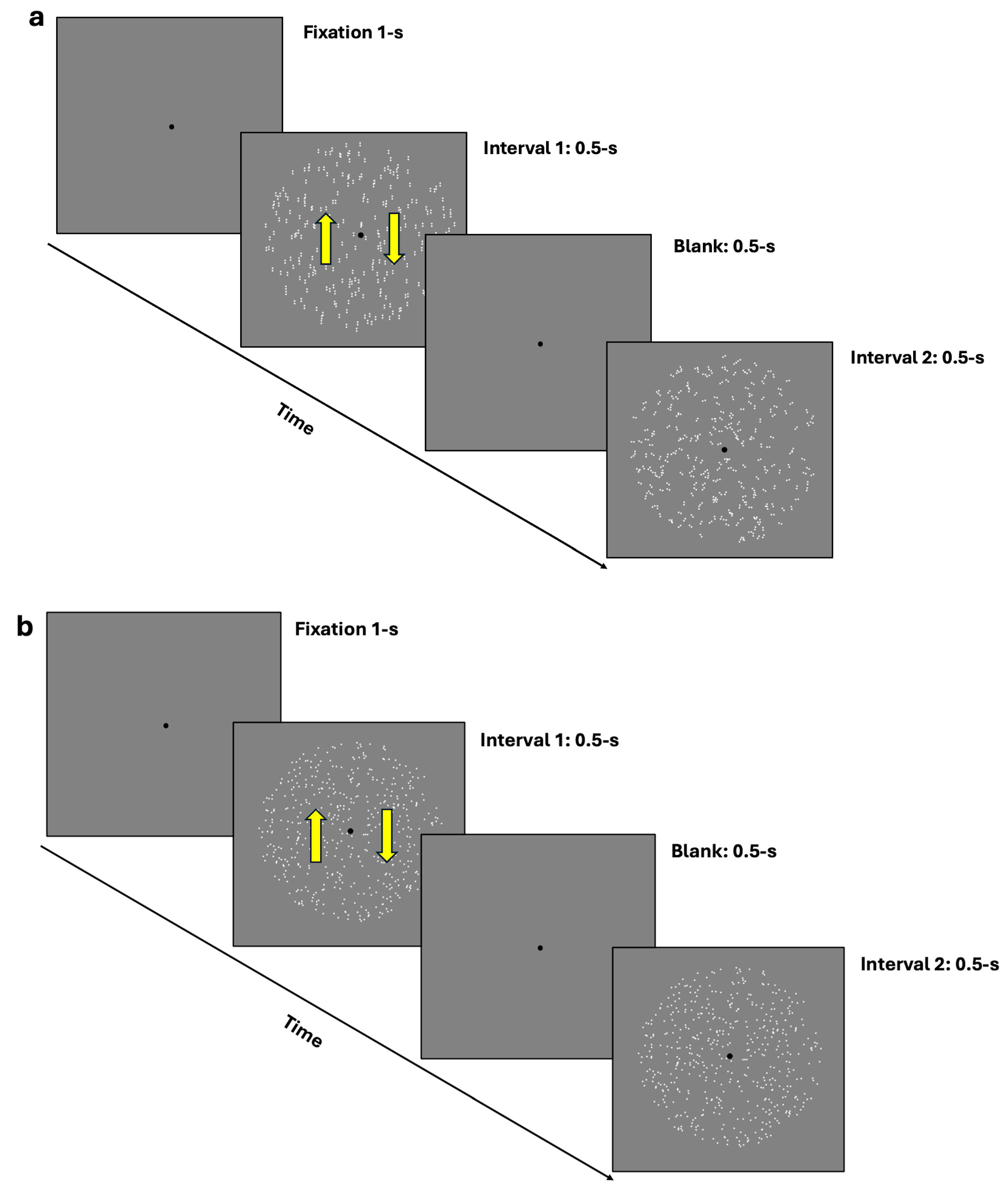

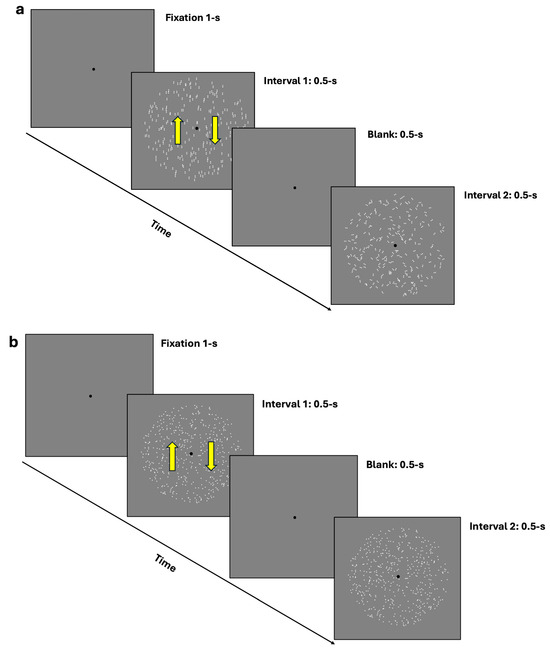

For the coherence task, participants were presented with two rapid intervals: one containing the coherent translational stimulus (either GPs or mRDKs) and the other containing the noisy stimulus (random dots/dipoles). Participants’ task was to identify which interval contained the coherent stimulus by pressing ‘1’ on the keyboard if they observed the coherent pattern in the first interval and ‘2’ if they observed it in the second interval (2IFC) (see Figure 1a,b). A 1-up/2-down Levitt staircase [53] was used to estimate the 70.7% coherence threshold, calculated as the mean of the last 14 reversals. The step sizes of the staircase were 25, 20, 15, 10, 5, 2, and 1. These values indicate the extent to which the stimulus coherence is adjusted. Under the 1-up/2-down rule, coherence is reduced after two consecutive correct responses and increased after each incorrect response, so the staircase progressively adapts to the participant’s performance level.
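The staircase logic can be sketched as follows. This is a minimal illustration, not the experimental code: it assumes that the step size advances through the listed values after each reversal and that coherence is clamped to the 0 to 100% range, neither of which is specified in the text. The `respond` callback stands in for the participant.

```python
STEP_SIZES = [25, 20, 15, 10, 5, 2, 1]  # coherence steps (%), from the text

def run_staircase(respond, start=50.0, n_trials=300, n_last=14):
    """1-up/2-down staircase; `respond(coherence)` returns True if correct.

    Returns (threshold, reversals), where threshold is the mean of the last
    `n_last` reversal values, or None if no reversal occurred.
    """
    coherence = start
    correct_streak = 0
    last_direction = 0          # -1: last change was down, +1: up, 0: none yet
    reversals = []
    for _ in range(n_trials):
        # Assumption: the step shrinks by one level after every reversal.
        step = STEP_SIZES[min(len(reversals), len(STEP_SIZES) - 1)]
        if respond(coherence):
            correct_streak += 1
            if correct_streak < 2:
                continue        # wait for a second consecutive correct response
            correct_streak = 0
            direction = -1      # two correct in a row: make the task harder
        else:
            correct_streak = 0
            direction = +1      # one error: make the task easier
        if last_direction != 0 and direction != last_direction:
            reversals.append(coherence)
        last_direction = direction
        coherence = min(100.0, max(0.0, coherence + direction * step))
    tail = reversals[-n_last:]
    threshold = sum(tail) / len(tail) if tail else None
    return threshold, reversals
```

For a deterministic observer who is correct whenever coherence is at least 30%, this procedure settles into an oscillation around that value, which is the behavior the reversal average is meant to capture.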

Figure 1.

(a,b) depict the 2IFC task procedure. (a) illustrates the procedure using dynamic GPs, where the first interval contains a vertically oriented GP, and the second interval contains a random/noisy GP. (b) depicts the procedure using mRDKs, with bidirectional movement along the vertical axis. In both figures, the arrows in the first interval indicate the bidirectional illusory motion along the vertical axis. The interval order shown in the figure is just an example; in the actual experiment, the coherent stimulus could randomly appear in either the first or the second temporal interval. Additionally, for illustrative purposes, the stimuli are shown at the maximum level (100%) of their coherence in this figure.

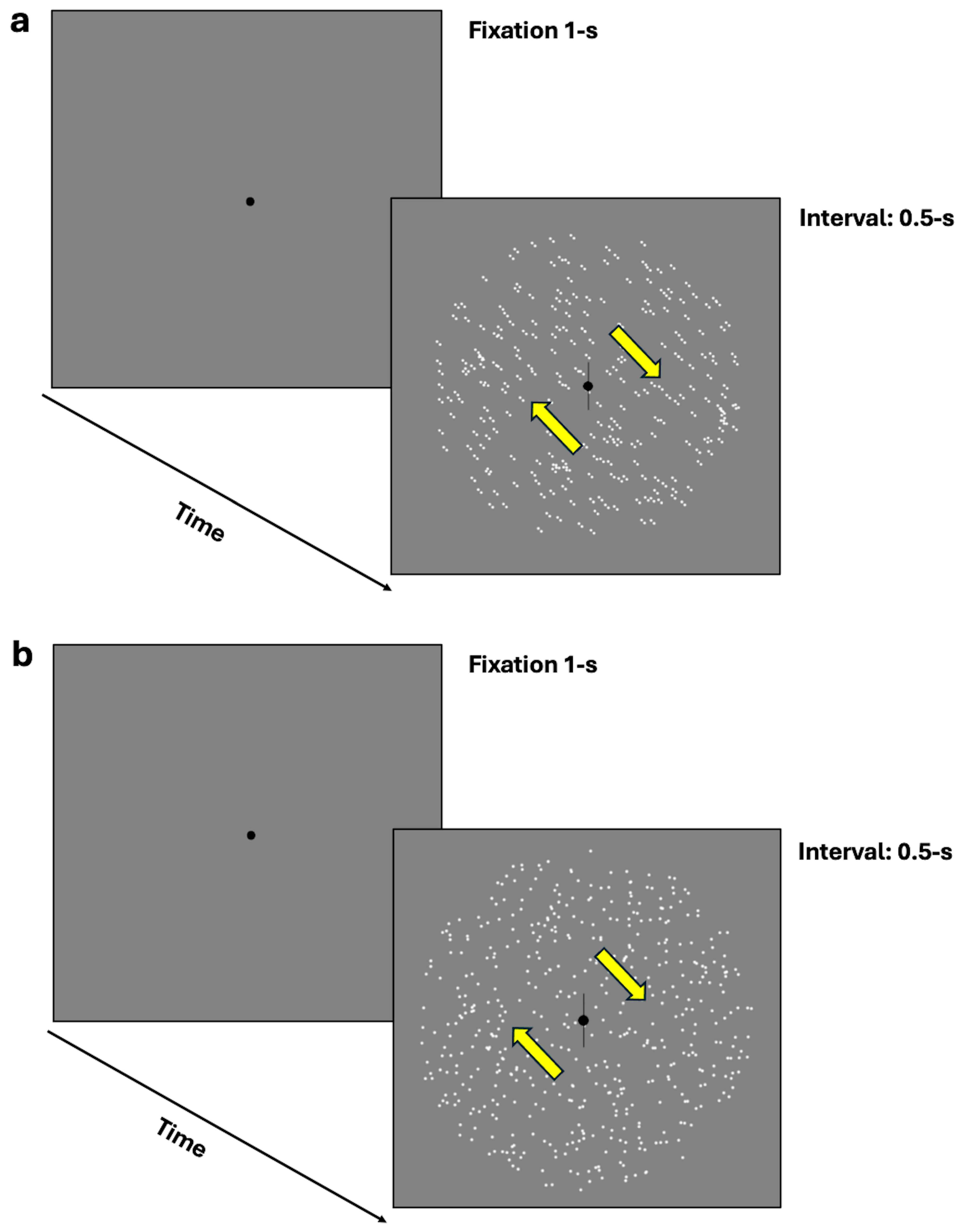

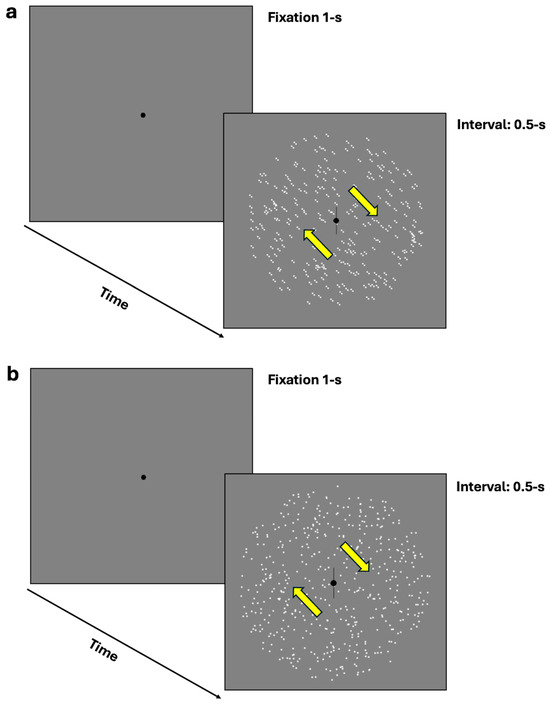

In the equivalent noise task, participants were required to discriminate the perceived orientation or the illusory direction of motion in a two-alternative forced-choice (2AFC) task. Specifically, they indicated whether the moving dots or oriented dipoles were tilted clockwise or counterclockwise from vertical by pressing the left arrow key for counterclockwise and the right arrow key for clockwise (see Figure 2a,b). The 70.7% discrimination thresholds were assessed using a 1-up/2-down Levitt staircase [53]. However, due to an error in the equivalent noise task staircase, additional computations were necessary, as outlined in Appendix A.

Figure 2.

(a,b) represent the equivalent noise task where participants were required to discriminate the perceived orientation or illusory direction of motion (either clockwise or counterclockwise from vertical) using a two-alternative forced-choice task (2AFC). (a) depicts the procedure with dynamic GPs, while (b) illustrates the mRDKs. The arrows represent the bidirectional illusory motion along the oblique axis and were not displayed during the experiment.

In coherence tasks, participants can readily discriminate between the signal and noise components. In contrast, within the equivalent noise paradigm, all dots/dipoles are considered as signals, contributing to the overall motion or form. This is achieved by determining the direction or orientation of dots or dipoles based on a Gaussian distribution centered around a specific mean value [35,42,43]. Before starting the pre-test, all participants underwent a familiarization phase with the tasks, performing a series of trials to ensure confidence with the visual stimuli and the experimental procedure. The training sessions focused exclusively on the coherence task with dynamic GPs, comprising two blocks of 300 trials each.
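To illustrate how external noise is introduced in the equivalent noise paradigm, the sketch below draws one orientation (or direction) per element from a Gaussian distribution. The function name and the use of degrees are assumptions made for illustration; the mean sets the clockwise or counterclockwise offset from vertical, and the standard deviation sets the external noise level.

```python
import random

def sample_element_angles(mean_offset_deg, sd_deg, n_elements, rng=None):
    """Draw one angle per element from a Gaussian around the mean offset.

    mean_offset_deg: signed tilt from vertical (positive = clockwise here,
                     a convention chosen only for this example).
    sd_deg:          external noise; 0 means every element shares the mean.
    """
    rng = rng or random.Random()
    return [rng.gauss(mean_offset_deg, sd_deg) for _ in range(n_elements)]

# Zero external noise: all 250 dipoles carry the same 3-degree tilt.
no_noise = sample_element_angles(3.0, 0.0, 250)

# High external noise: the same mean tilt, but widely scattered elements.
high_noise = sample_element_angles(3.0, 45.0, 250, rng=random.Random(1))
```

In the zero-noise case the threshold is the smallest mean offset that can be discriminated, whereas in the high-noise case the observer must pool across many scattered elements to recover the mean.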

4. Results

The analyses and visualizations were conducted using R (v4.4.0; Boston, MA, USA) [54,55].

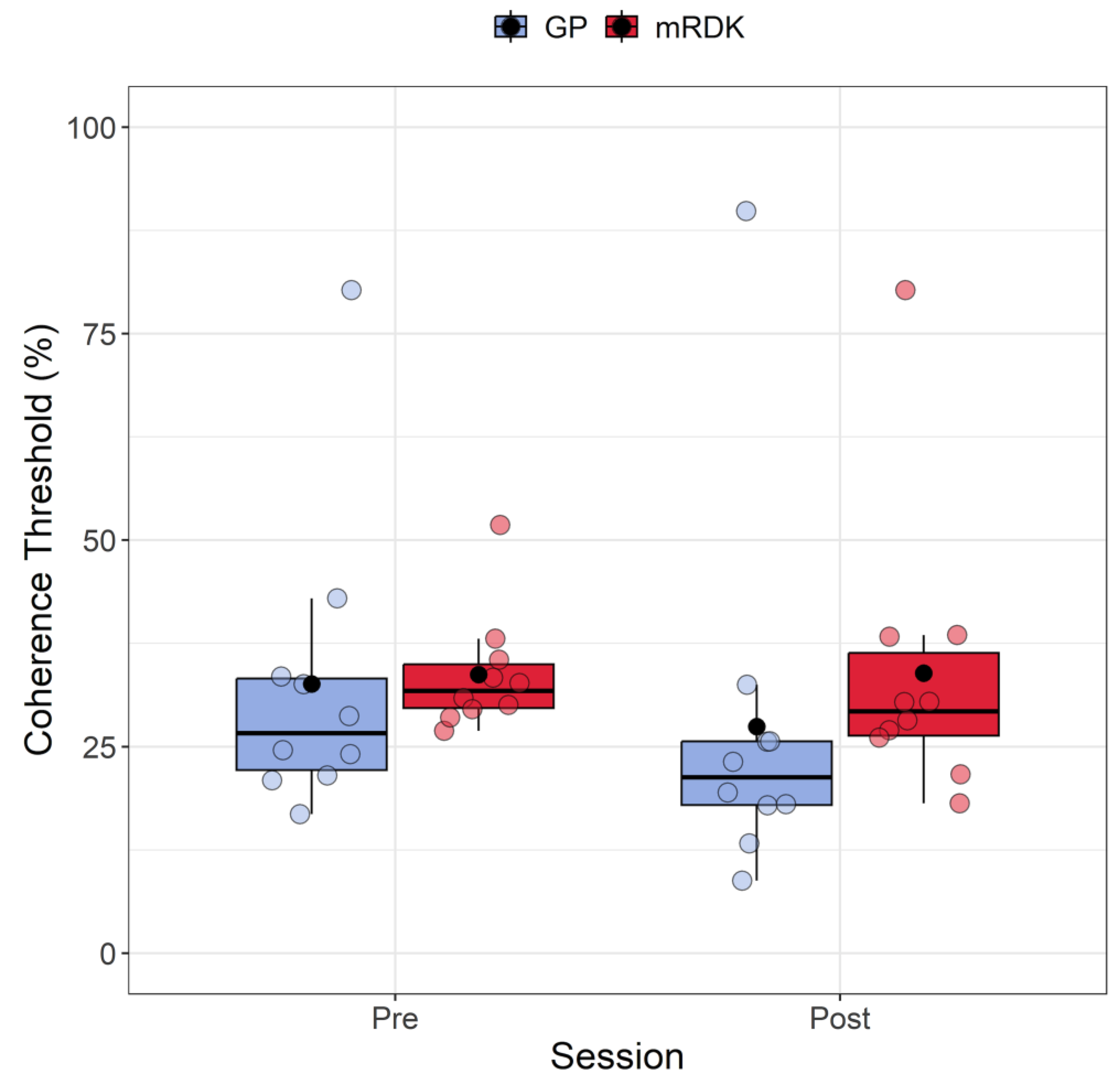

4.1. Coherence Thresholds: Analysis of Pre- and Post-Test

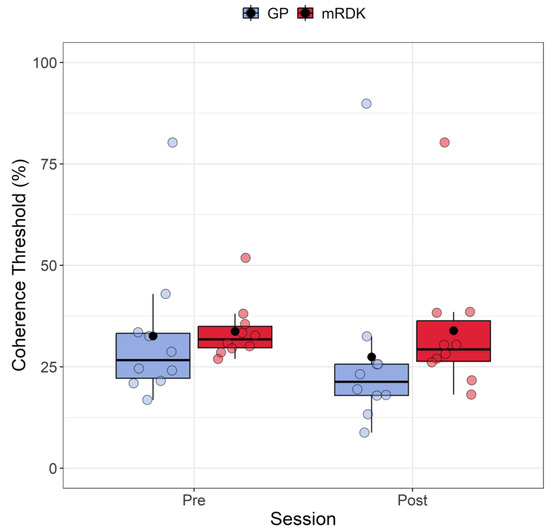

Figure 3 shows the coherence thresholds measured in both pre- and post-test for each type of visual stimulus. The Shapiro–Wilk test revealed that the residuals for coherence thresholds in every condition (i.e., a combination of stimulus and session) deviated from a normal distribution (W = 0.756, p < 0.001). Each condition’s data also displayed a high positive skewness (≥ 1.7). Additionally, we identified five outliers (80.28, 51.82, 8.77, 89.82, and 80.27) using the Double Median Absolute Deviation (MAD) [56], which were included in the analysis. Due to the non-normality of residuals, we decided to perform a non-parametric factorial ANOVA using the Aligned Rank Transform (ART) method from the R package ARTool [57,58]. ART is a non-parametric approach designed for factorial analyses when assumptions such as normality are violated. For each main effect or interaction, the procedure first aligns the responses by removing the estimated contributions of all other effects from each observation, so that the aligned values reflect only the effect of interest. The aligned values are then ranked from lowest to highest, with tied values receiving average ranks, and a traditional method such as ANOVA or a linear mixed model is applied to the ranks. Because a separate alignment is required for each effect, each test is interpreted only for the effect for which the data were aligned.
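The Double MAD rule is useful for skewed data because it measures spread separately on each side of the median. The sketch below is a generic illustration, not the analysis code: the cutoff of 3 and the consistency constant 1.4826 are common defaults assumed here, since the text does not report which values were used.

```python
import statistics

def double_mad_outliers(values, cutoff=3.0, scale=1.4826):
    """Flag values lying more than `cutoff` scaled MADs from the median,
    using a separate MAD for the lower and upper halves of the data."""
    med = statistics.median(values)
    left_mad = statistics.median([med - v for v in values if v <= med])
    right_mad = statistics.median([v - med for v in values if v >= med])
    flagged = []
    for v in values:
        mad = left_mad if v < med else right_mad
        if mad == 0:
            continue  # spread undefined on this side; skip instead of dividing by zero
        if abs(v - med) / (scale * mad) > cutoff:
            flagged.append(v)
    return flagged

# Toy data with one extreme value in the upper tail.
print(double_mad_outliers([1, 2, 3, 4, 5, 6, 100]))
```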

Figure 3.

Boxplots depicting coherence thresholds for pre- and post-test sessions, as well as for GPs and mRDKs. Each box in the plot represents the interquartile range (IQR) of the data, with the median indicated by the horizontal line inside the box. The whiskers extend to the minimum and maximum values within 1.5 times the IQR from the first and third quartiles, respectively. Additionally, the black point inside each box denotes the mean of that condition. The colored dots represent individual data points, with blue indicating dynamic GPs and red indicating mRDK.

Following the rank assignment, we fitted a linear mixed model with session (pre- and post-test) and stimulus (GPs and mRDKs) as within-subject factors and a random intercept for participants. Post hoc analysis was conducted using the art.con function [57,59]. Our analysis revealed significant main effects of session (F(1, 27) = 4.74, p = 0.038) and stimulus (F(1, 27) = 8.17, p = 0.008), indicating that both factors affected coherence thresholds. However, the interaction between session and stimulus did not reach statistical significance (F(1, 27) = 0.25, p = 0.621). Coherence thresholds were lower in the post-test than in the pre-test, and mRDKs showed significantly higher coherence thresholds than GPs.

4.2. Equivalent Noise: Analysis of Pre- and Post-Test

For each participant, discrimination thresholds were used to estimate internal noise (σint) and sampling efficiency (η). This process involves breaking down the total uncertainty in perceiving the stimulus (σobs) into two separate components, which are treated independently before being combined quadratically. We followed the approach outlined by Ghin et al. [37], employing the following equivalent noise formula for calculating σobs:

$$\sigma_{obs} = \sqrt{\frac{\sigma_{int}^{2} + \sigma_{ext}^{2}}{\eta}} \quad (1)$$

σext refers to the inherent noise in the stimulus, commonly known as external noise, while σint represents the intrinsic uncertainty in the observer, referred to as internal noise. The summation is adjusted by a factor η, which denotes the effective number of simultaneous samplings conducted by the observer on the stimulus, a process known as sampling. In this study, the equivalent noise parametrization was implemented using a two-point procedure [32]: one with zero external noise (σext fixed at 0) to determine the minimum detectable directional deviation from vertical in the absence of external noise, and another with high external noise to establish the maximum tolerable noise level in terms of orientation/direction deviation from the mean.

The values for zero external noise were obtained from the average of the last 14 reversals from each 1-up/2-down staircase procedure, the uncertainties being the standard deviations of the considered reversals. A different procedure, detailed in Appendix A, was followed for the high external noise. Here, σobs was set at 45° (equivalent to π/4 radians), while σext corresponded to the level of external noise at which an observer achieves 70.7% accuracy in discriminating motion direction. Simplifying the equivalent noise parametrization, at 0 external noise, Equation (1) becomes the following:

$$\sigma_{obs,0} = \frac{\sigma_{int}}{\sqrt{\eta}} \quad (2)$$

At high noise, instead, considering σext ≫ σint, it becomes the following:

$$\sigma_{obs,high} = \frac{\sigma_{ext}}{\sqrt{\eta}} \quad (3)$$

where σobs,0 and σobs,high denote σobs in the zero-noise and high-noise conditions, respectively. Combining Equations (2) and (3) yields the following:

$$\eta = \frac{\sigma_{ext}^{2}}{\sigma_{obs,high}^{2}}, \qquad \sigma_{int} = \sigma_{obs,0}\,\sqrt{\eta} = \frac{\sigma_{obs,0}\,\sigma_{ext}}{\sigma_{obs,high}} \quad (4)$$

The uncertainties associated with σint and η can be computed as follows:
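As a minimal sketch of the two-point computation, the function below assumes the standard equivalent noise model σobs² = (σint² + σext²)/η, a fixed σobs of π/4 in the high-noise condition, and first-order error propagation with independent uncertainties. The propagation formulas are this sketch's assumption rather than a reproduction of the original equations, and all names and input values are hypothetical.

```python
import math

def two_point_equivalent_noise(sigma_obs_zero, sigma_ext_high,
                               sigma_obs_high=math.pi / 4,
                               d_sigma_obs_zero=0.0, d_sigma_ext_high=0.0):
    """Estimate eta and sigma_int (radians) from two thresholds.

    sigma_obs_zero:  discrimination threshold with zero external noise.
    sigma_ext_high:  external noise tolerated at the fixed sigma_obs_high.
    d_*:             standard uncertainties of the two measured thresholds.
    Returns (eta, sigma_int, d_eta, d_sigma_int).
    """
    ratio = sigma_ext_high / sigma_obs_high
    eta = ratio ** 2                    # high-noise limit of the model
    sigma_int = sigma_obs_zero * ratio  # zero-noise limit, using sqrt(eta) = ratio
    # First-order propagation; sigma_obs_high is treated as an exact constant.
    d_eta = 2.0 * eta * d_sigma_ext_high / sigma_ext_high
    d_sigma_int = sigma_int * math.hypot(d_sigma_obs_zero / sigma_obs_zero,
                                         d_sigma_ext_high / sigma_ext_high)
    return eta, sigma_int, d_eta, d_sigma_int

# Hypothetical thresholds (radians), chosen only to exercise the function.
eta, sigma_int, d_eta, d_sigma_int = two_point_equivalent_noise(
    0.15, 1.2, d_sigma_obs_zero=0.015, d_sigma_ext_high=0.12)
print(f"eta = {eta:.2f} +/- {d_eta:.2f}, "
      f"sigma_int = {sigma_int:.3f} +/- {d_sigma_int:.3f}")
```

Because η depends only on the high-noise threshold, its relative uncertainty is twice that of σext, whereas σint inherits uncertainty from both measurements.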

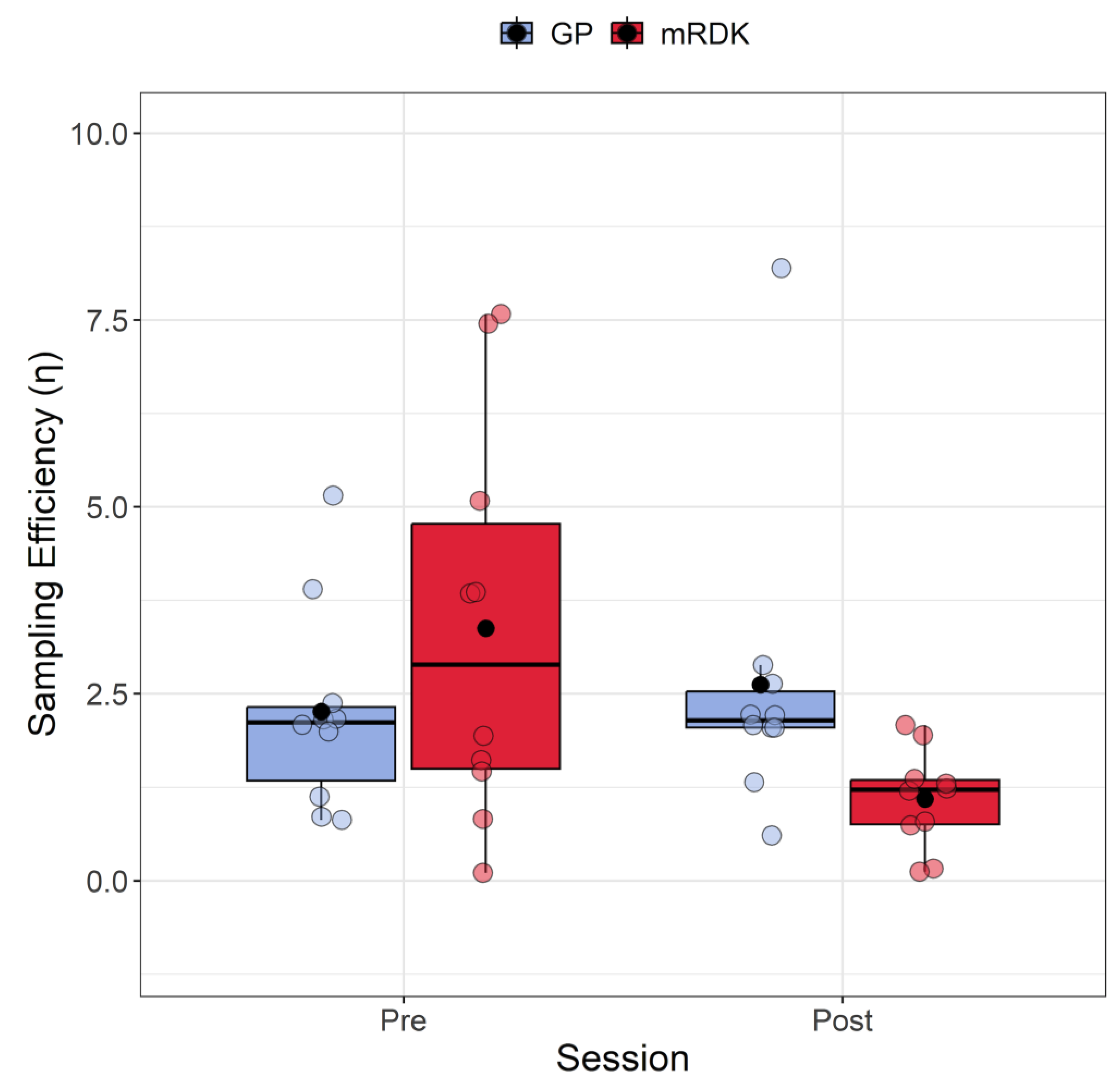

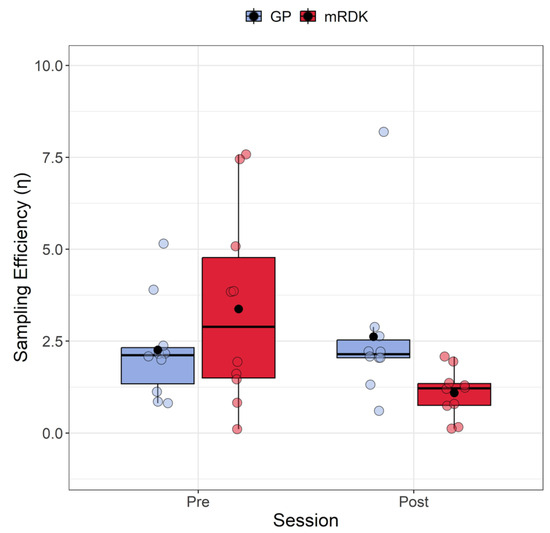

Based on the extracted values, we compared sampling efficiency and internal noise for GPs and mRDKs to assess the impact of learning transfer. Figure 4 shows the sampling efficiency measured in both pre- and post-test. The Shapiro–Wilk test indicated that the residuals deviated from a normal distribution in only one condition (W = 0.66, p = 0.0003), which also showed a high positive skewness of 2.17 (SE = 0.69) and a kurtosis of 3.74 (SE = 1.33). We also detected eight outliers (3.84, 7.58, 5.15, 3.86, 3.90, 7.45, 8.19, and 5.07). Due to the presence of outliers and non-normally distributed residuals in one condition, we again used the ARTool package. Following rank assignment, we fitted a linear mixed model with session (pre- and post-test) and stimulus (GPs vs. mRDKs) as within-subject factors and a random intercept for participants. Neither the main effect of session (F(1, 27) = 3.48, p = 0.07) nor the main effect of stimulus (F(1, 27) = 0.01, p = 0.91) was significant. However, a significant interaction between session and stimulus was observed (F(1, 27) = 4.85, p = 0.03). Holm-corrected post hoc comparisons (correction applied for six tests) were conducted to further investigate the interaction. A significant difference was found between GPs and mRDKs in the post-test session (padj = 0.027), as well as between pre- and post-test for mRDKs (padj = 0.029). These results indicate that visual perceptual learning affected sampling efficiency, which changed significantly only for the non-trained mRDK stimulus. Specifically, for GPs, there was no significant difference in terms of simultaneous samplings conducted by the observer on the stimulus (pre-test: M = 2.26, SEM = 0.14; post-test: M = 2.62, SEM = 0.21). 
In contrast, for the mRDKs, a significant reduction in simultaneous samplings was observed (pre-test: M = 3.38, SEM = 0.27; post-test: M = 1.1, SEM = 0.07).
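For readers unfamiliar with the Holm procedure applied to the six post hoc tests, the following sketch shows how adjusted p-values are obtained from a family of raw p-values. It is a generic implementation, and the p-values in the example are invented for illustration; they are not the values from this study.

```python
def holm_adjust(p_values):
    """Holm step-down adjustment; returns adjusted p-values in input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # ascending raw p
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value is multiplied by (m - k + 1), capped at 1.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity: an adjusted p never falls below an earlier one.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted

# Hypothetical raw p-values for a family of four comparisons.
print(holm_adjust([0.01, 0.04, 0.03, 0.005]))
```

A comparison is declared significant when its adjusted p-value falls below the chosen alpha level, which controls the family-wise error rate without the conservatism of a plain Bonferroni correction.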

Figure 4.

Boxplots of sampling efficiency (η) for pre- and post-test conditions. For each boxplot, the horizontal black line indicates the median, whereas the dot within each box represents the mean sampling efficiency for each condition. The colored dots represent individual data points, with blue indicating dynamic GPs and red indicating mRDK.

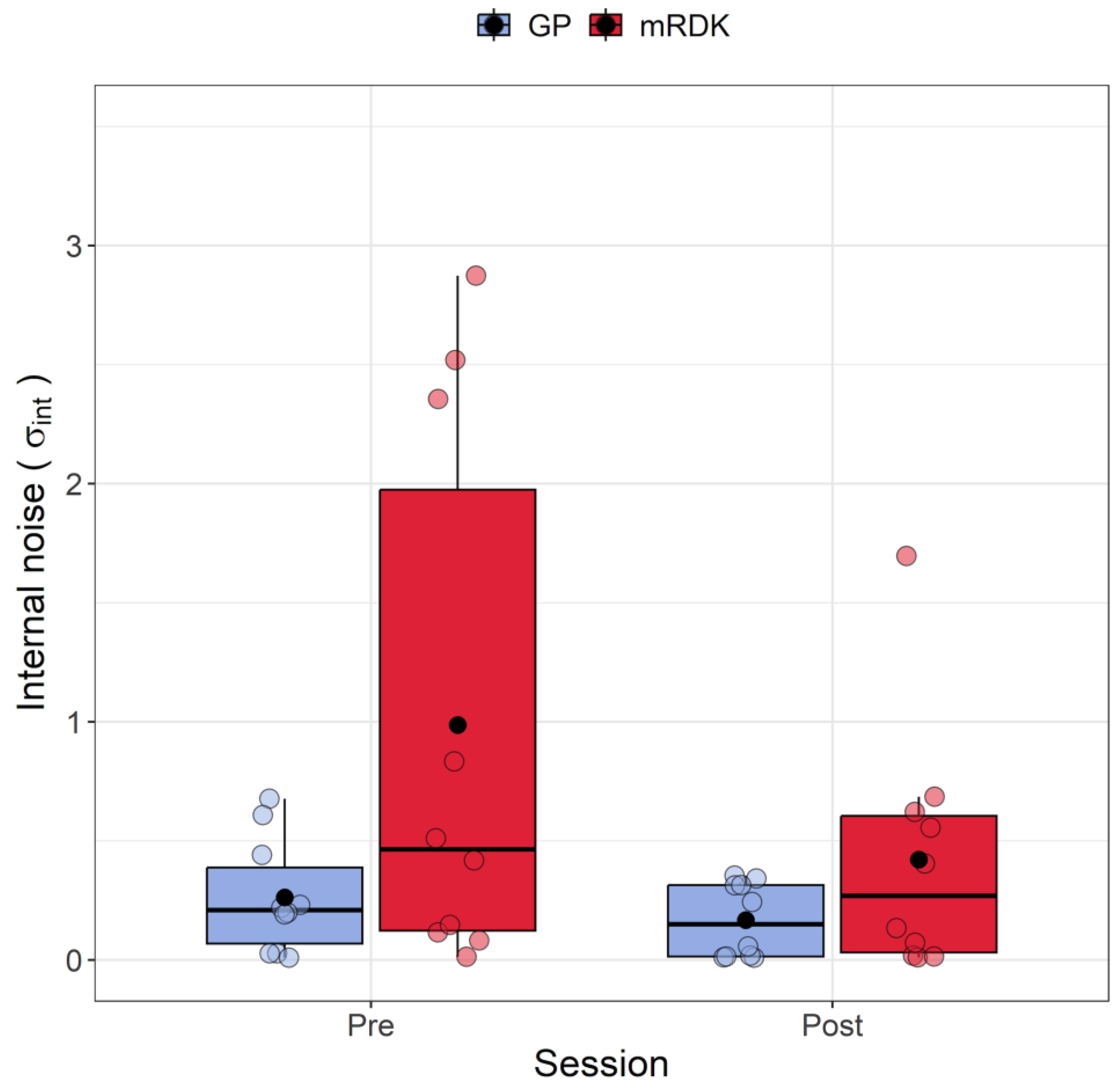

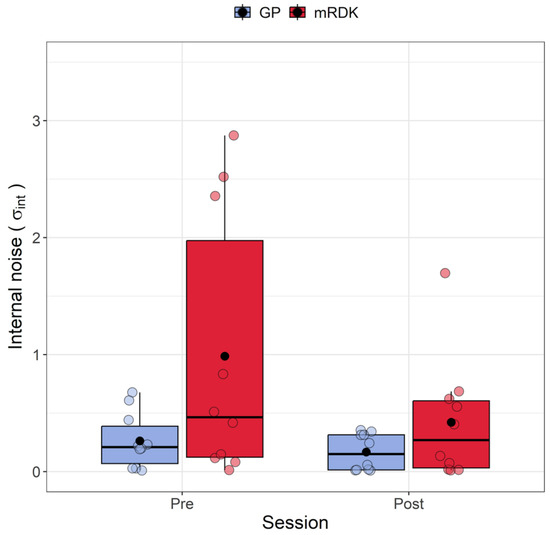

Figure 5 shows the variation in internal noise between pre- and post-tests for both GPs and mRDKs. The Shapiro–Wilk test revealed that the residuals were not normally distributed in three out of four conditions (all p < 0.01), showing a positive skewness of 2.35. Four outliers were identified with values of 2.52, 2.87, 1.69, and 2.35. To analyze the internal noise values, the ARTool method was employed. After rank assignment, a linear mixed model was performed, revealing significant effects for the session (F(1, 27) = 4.24, p = 0.049) and stimulus (F(1, 27) = 5.168, p = 0.031). However, the interaction effect between session and stimulus was not statistically significant (F(1, 27) = 1.535, p = 0.225). Examining the impact of visual perceptual learning, a significant decrease in internal noise was observed from the pre-test to the post-test (pre-test: mean = 0.62, SEM = 0.044; post-test: mean = 0.29, SEM = 0.02). Furthermore, higher levels of internal noise were found for mRDK compared to GPs (mRDK: mean = 0.7, SEM = 0.045; GP: mean = 0.22, SEM = 0.01).

Figure 5.

Boxplots of internal noise (σint) for pre- and post-test conditions. For each boxplot, the horizontal black line indicates the median, whereas the dot within each box represents the mean internal noise (in radians) for each condition. The colored dots represent individual data points, with blue indicating dynamic GPs and red indicating mRDK.

4.3. Learning Curves

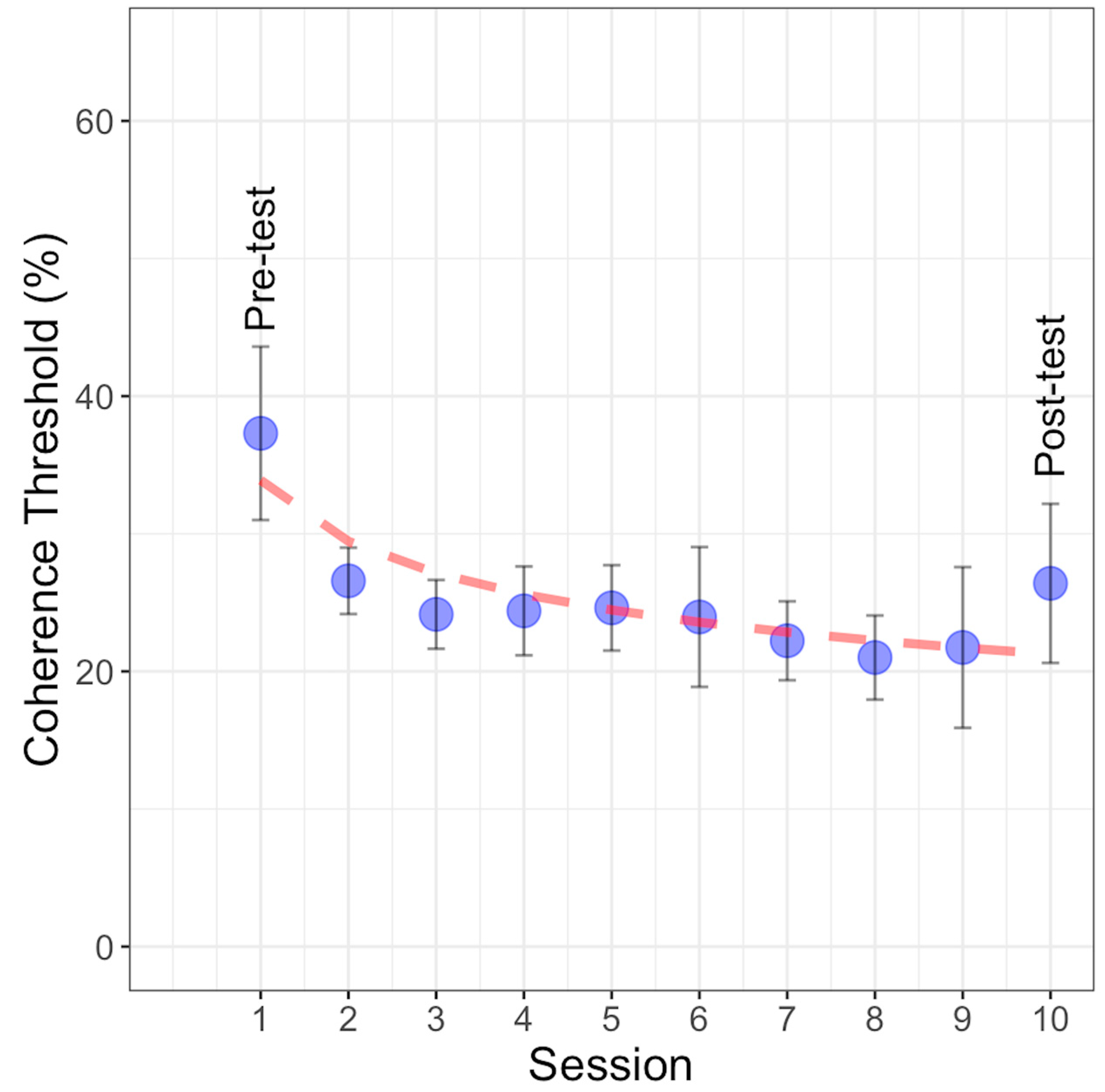

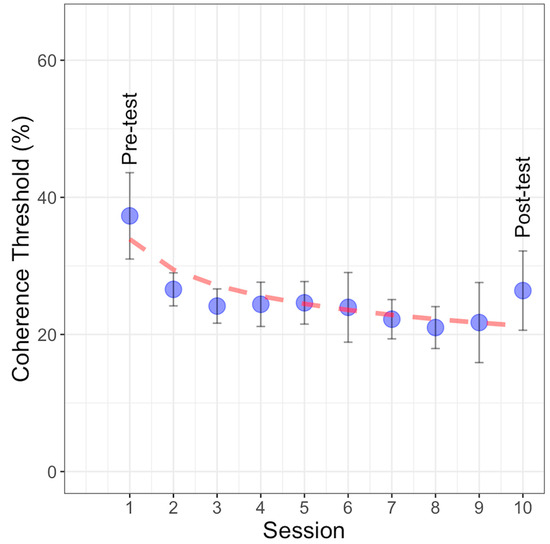

Figure 6 depicts the average coherence thresholds estimated across the eight learning sessions with GPs. The starting and ending points on the curve represent the coherence thresholds estimated before and after training. To analyze the learning curve and investigate the relationship between learning sessions and coherence threshold, three different models were examined: linear, power, and exponential. The models’ forms are described as follows.

Figure 6.

Coherence threshold percentages with GP stimuli observed across ten experimental sessions, including the pre-test, post-test, and eight training sessions. The blue points represent the mean coherence threshold for each session, with error bars indicating ±1 SEM. The dashed red line represents the power function fit to the data.

These functions were fitted to the learning curve, and the best-fitting model was determined to be the power function based on the lowest values of AIC, AICc, and BIC (refer to Table 1). In the power function, parameter ‘a’ represents the scale parameter, indicating the power function’s value at x = 1 (initial assessment), while ‘b’ represents the learning rate, with smaller values suggesting slower progress across sessions; ‘x’ denotes the extent of practice (learning sessions). In this study, the parameters were estimated as a = 33.89 and b = 0.20. The power fitting model indicates that the rate of improvement in learning performance does not follow a linear pattern with the number of practice sessions. This nonlinearity suggests that the learning rate is higher at the beginning of the training and decreases as the number of sessions increases. The linear and exponential models did not fit as well because the linear model assumes a constant rate of improvement over time, and the exponential model assumes rapid early gains that continue exponentially. Neither model captured the gradual slowing of the learning rate observed in our data. The power function, with its flexibility, better modeled this nonlinear learning process.
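The shape implied by the fitted parameters can be illustrated with a short sketch. It assumes the power function has the decreasing form y = a·x^(−b), which is consistent with ‘a’ being the value at x = 1 and with thresholds falling across sessions, but the exact parametrization is an assumption here. The log-log least-squares fit shown is a simple stand-in and may differ from the fitting routine actually used.

```python
import math

def power_law(x, a=33.89, b=0.20):
    """Assumed form y = a * x**(-b); a and b are the reported estimates."""
    return a * x ** (-b)

def fit_power_law(xs, ys):
    """Least-squares fit of log(y) = log(a) - b*log(x); returns (a, b)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mx, my = sum(lx) / n, sum(ly) / n
    slope = (sum((u - mx) * (v - my) for u, v in zip(lx, ly))
             / sum((u - mx) ** 2 for u in lx))
    return math.exp(my - slope * mx), -slope

# Predicted thresholds (%) for sessions 1..10 under the assumed form.
sessions = list(range(1, 11))
predicted = [power_law(x) for x in sessions]
# Session-to-session gains shrink, reflecting the slowing learning rate.
gains = [predicted[i] - predicted[i + 1] for i in range(len(predicted) - 1)]
```

Under this form, the drop between consecutive sessions becomes smaller at each step, matching the gradual slowing of improvement described above.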

Table 1.

Functions used to fit the coherence threshold values and associated estimators of prediction error.

5. Discussion

The primary objective of this study was to further investigate the impact of visual perceptual learning on visual stimuli containing form and motion cues that evoke non-directional motion. We aimed to examine the learning transfer effects to a different, yet similar, stimulus, as well as to a different task. To achieve this, we estimated coherence thresholds for dynamic Glass patterns (GPs) across ten sessions using a motion discrimination task in which participants identified which of the two presented intervals contained the coherent pattern. Additionally, to assess learning transfer at both the stimulus and task levels, we introduced tasks in the pre- and post-test to evaluate coherence thresholds for modified Random-Dot Kinematograms (mRDKs) and discrimination thresholds at both high- and zero-noise levels, from which we computed internal noise and sampling efficiency for both dynamic GPs and mRDKs. This study is the first to test a new class of visual stimuli (mRDKs) that simulates, in the absence of form cues, the illusory directional motion elicited by dynamic GPs. This approach was crucial for better understanding the dynamics underlying learning transfer to a different visual stimulus.

As reported in the introduction, our previous visual perceptual learning study [3] showed that eight days of training on dynamic translational GPs did not lead to learning transfer to directional RDKs, in which each dot follows a specific trajectory, suggesting that distinct mechanisms may underlie directional and non-directional motion processing. In contrast, the current study found not only significant learning effects on the trained stimulus (i.e., dynamic GPs) and task (i.e., coherence thresholds) but also substantial learning transfer to the non-trained stimulus (i.e., mRDKs). This suggests that the two forms of non-directional motion, one induced by dynamic GPs, which integrate both form and motion cues, and the other by mRDKs, which contain only ambiguous motion cues, rely on overlapping processing mechanisms.

We also observed partial learning transfer to a different task, evidenced by improved internal noise filtering after eight days of training on coherence thresholds. According to signal detection theory, internal responses to stimuli are probabilistic, meaning that a particular stimulus has only a certain probability of triggering a specific internal response [60,61]. In the context of perceptual learning, improvements in this internal response may arise from a decrease in internal noise, from enhanced processing efficiency (external noise exclusion), or from both. Our findings indicate that training on a global feature discrimination task can enhance local noise filtering. However, the improvement in the ability to integrate local information into a global percept did not reach statistical significance (p = 0.07). Nonetheless, the observed trend suggests that a larger sample size may help clarify this effect. Our results also show that the variance in sampling efficiency and internal noise decreased drastically between the pre- and post-training sessions. This may reflect an optimization of perceptual processes, such as the processing of local and global cues, leading to more accurate and reliable performance on visual discrimination tasks.

A slightly different outcome was observed in a study by Gold et al. [60], in which the authors investigated visual perceptual learning within the framework of signal detection theory. Their aim was to determine whether perceptual improvements resulted from increased internal signal strength or decreased internal noise. They employed external noise masking and a double-pass response consistency method (which measures how reliably participants provide the same answers when repeating the same task under identical conditions) to analyze how observers learned to detect unfamiliar visual stimuli. Specifically, the tasks involved discriminating between two types of unfamiliar patterns: human faces and abstract textures. Although participants’ performance improved with practice, internal noise filtering did not change. This suggested that learning enhanced internal signal strength without decreasing internal noise. Similarly, Kurki and Eckstein [62] used a classification image methodology to investigate which parts and features of the stimulus the visual system processes at different stages of learning. They found that while sampling efficiency increased with training, internal noise filtering was not affected. These differences between studies may arise from the distinct methodologies and models used, suggesting that future research should explore the variations between these models in the context of visual perceptual learning.

Furthermore, we observed a significant interaction between the visual stimulus and the session, as well as a generalization of learning to the non-trained stimulus (mRDK) for sampling efficiency. This indicates that the impact of the training session varied depending on the type of visual stimulus, revealing distinct effects in the post-test tasks. In other words, for sampling efficiency, the training had a different impact on participants’ performance depending on whether the stimulus was a dynamic GP or an mRDK. It is important to consider that the equivalent noise model is a mathematical framework, and we did not specifically train participants on any equivalent noise tasks. Since sampling efficiency for dynamic GPs was already at floor level in the pre-test, there was likely little room for improvement, suggesting that the task with dynamic GPs was relatively simple. For the mRDK, being a more challenging stimulus to discriminate, there might have been a small generalization effect. These different effects suggest that the type of visual stimulus plays a critical role in how training influences visual perceptual learning and the ability to integrate the orientations/directions of dynamic GPs and mRDKs.

Finally, we found a statistically significant difference between dynamic GPs and mRDKs, with mRDKs showing higher coherence thresholds than dynamic GPs. This indicates that participants could more easily discriminate the motion axis orientation in dynamic GPs than in mRDKs. This could be attributed to the increased difficulty in processing mRDKs: the random scrambling of the positions of individual elements within the pattern introduces visual noise and disrupts a coherent motion direction, thus requiring more complex computation for the motion axis to be perceived. By contrast, the well-defined directional motion in directional RDKs makes them easier to process than dynamic GPs, as shown by previous investigations [3,19,44].

6. Conclusions

This study examined the impact of visual perceptual learning on stimuli that integrate both form and motion cues, with a particular focus on non-directional motion. The primary aim was to evaluate the transferability of learning across different stimuli and tasks. The results showed that training on dynamic Glass patterns (GPs) not only improved performance on the trained task (coherence threshold) but also transferred to an untrained stimulus—modified Random-Dot Kinematograms (mRDKs). This suggests that both types of non-directional motion share common processing mechanisms. Additionally, partial transfer to a different task was observed, as reflected by enhanced internal noise filtering.

Participants also found dynamic GPs easier to process than mRDKs.

Overall, these findings deepen our understanding of visual perceptual learning and its transfer mechanisms in non-directional motion perception, highlighting the importance of stimulus characteristics and the potential for visual perceptual learning to enhance internal processing efficiency.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/brainsci14100997/s1, Video S1. (a) Illustrates the dynamic GPs, where dipoles appear to shift along the vertical axis, creating an illusion of directional motion despite the absence of coherent motion (i.e., no dipole-to-dipole correspondence between successive patterns). (b) Shows the mRDKs, depicting randomly distributed dots drifting along the vertical axis. In both videos, two temporal intervals are presented: the first interval always contains the coherent pattern (100% coherence), while the second interval always contains a random/noise pattern (GPs and mRDKs). However, in the actual experiment, the temporal intervals with the coherent non-directional motion and the noise pattern were presented in random order.

Author Contributions

R.D.: Conceptualization, Methodology, Data curation, Software, Writing—original draft, Writing—review & editing. A.C.: Conceptualization, Methodology, Data curation, Software, Writing—review and editing, Supervision; G.C.: Conceptualization, Methodology, Writing—review and editing, Supervision; M.R.: Methodology, Writing—review and editing; Ó.F.G.: Writing—review and editing, Supervision; A.P.: Conceptualization, Methodology, Data curation, Software, Writing—review and editing, Supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of the University of Padova, Department of General Psychology (protocol code: 4819; date of approval: 03/06/2022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study as well as the MATLAB scripts used for the experiment are openly available in Open Science Framework (OSF) at https://osf.io/whtpd/?view_only=4229c09c807d47beb88dbab6b78a7681 (Accessed on 27 September 2024).

Acknowledgments

This work was carried out within the scope of the project “Use-inspired basic research”, for which the Department of General Psychology of the University of Padova has been recognized as “Dipartimento di Eccellenza” by the Ministry of University and Research. MR and RD were supported by the University of Padova, Department of Psychology and by the Human Inspired Centre.

Conflicts of Interest

The authors declare no competing financial interests.

Appendix A

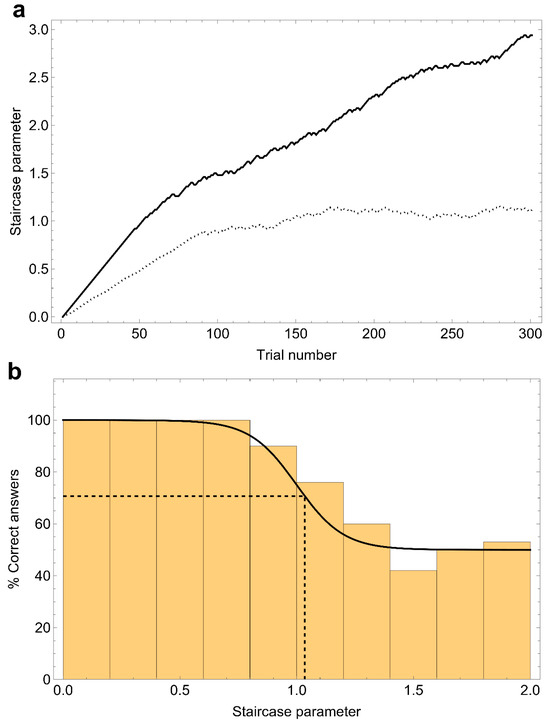

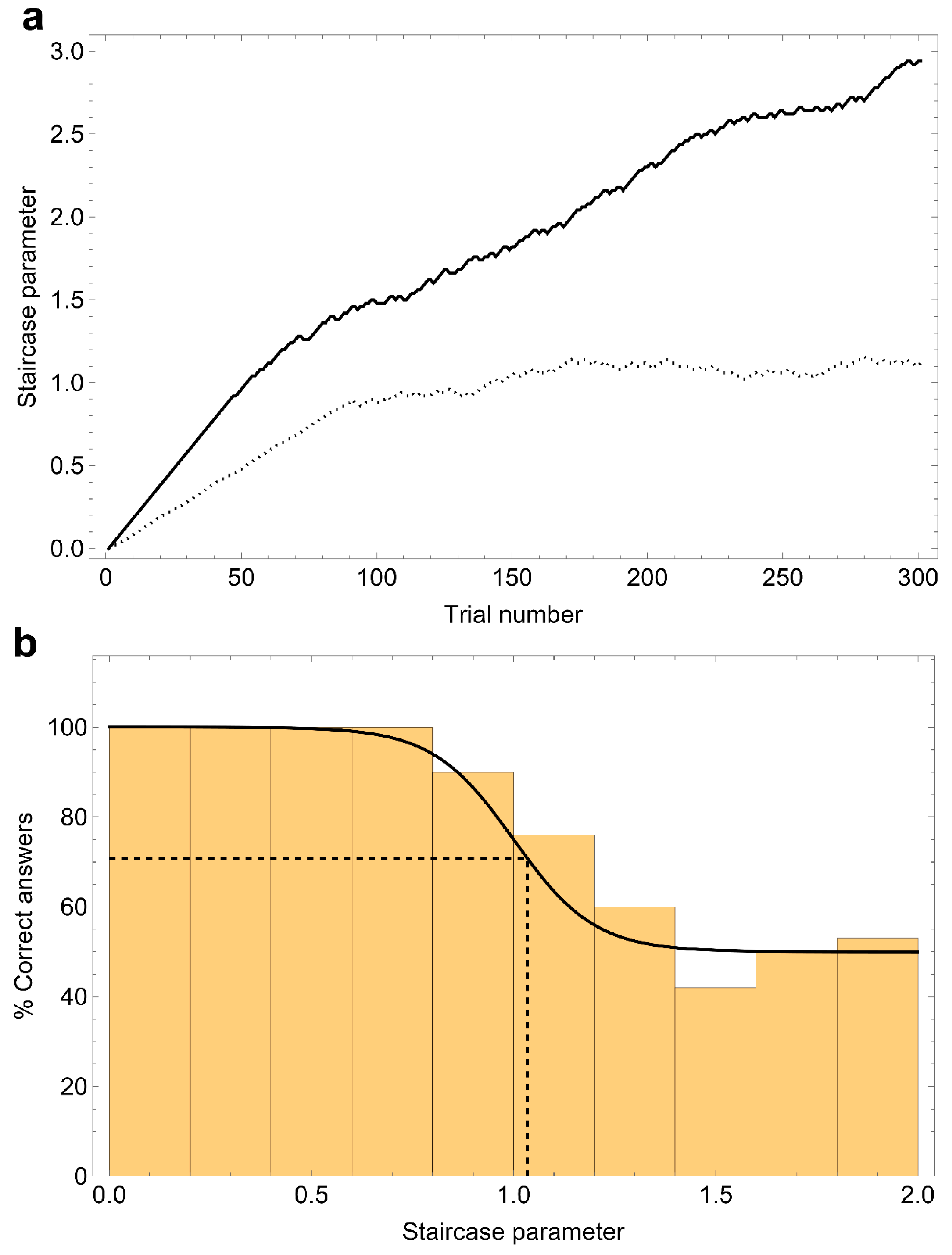

By design, a Levitt staircase autonomously converges to a value of the parameter which corresponds to a specific fraction of correct answers. Such a fraction depends on the implementation details of the staircase: for example, it is equal to 70.7% in the case of 1-up/2-down. Now, let us highlight a noteworthy difference between the zero-noise and the high-noise task. In the former case, where the staircase parameter is the deviation of the stimulus orientation from the vertical direction, the complexity of the task increases as the parameter approaches zero. Conversely, in the high-noise task, where the average orientation is fixed and the parameter is the spread of the stimulus around this average, complexity increases with the parameter. To ensure that both tasks converge to the same fraction of correct answers, “up” and “down” in the staircase should be interpreted as “easier” and “harder”, thus leading to an inversion of the up/down directions for the high-noise task.
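The "easier/harder" reading of the staircase rule can be sketched as follows. This is an illustrative sketch only, not the experiment's MATLAB code: the class name, the `harder_sign` argument, and the fixed step size are assumptions introduced here. The convergence target follows from the equilibrium condition of a 1-up/2-down rule, in which two consecutive correct answers must occur with probability 0.5, so that the fraction correct is √0.5 ≈ 0.707.

```python
import math


def two_down_one_up_target():
    """Fraction correct at equilibrium for a 1-up/2-down rule: p**2 = 0.5."""
    return math.sqrt(0.5)


class EasierHarderStaircase:
    """1-easier/2-harder staircase with an explicit difficulty direction.

    harder_sign = -1: lowering the parameter makes the task harder
                      (zero-noise task, smaller orientation offset).
    harder_sign = +1: raising the parameter makes the task harder
                      (high-noise task, larger orientation spread).
    """

    def __init__(self, start, step, harder_sign):
        if harder_sign not in (-1, 1):
            raise ValueError("harder_sign must be -1 or +1")
        self.value = start
        self.step = step
        self.harder_sign = harder_sign
        self._correct_streak = 0

    def update(self, correct):
        """Apply one trial outcome and return the next parameter value."""
        if correct:
            self._correct_streak += 1
            if self._correct_streak == 2:
                # Two correct answers in a row: make the task harder.
                self.value += self.harder_sign * self.step
                self._correct_streak = 0
        else:
            # One error: make the task easier.
            self.value -= self.harder_sign * self.step
            self._correct_streak = 0
        return self.value
```

Keeping the difficulty direction as an explicit argument, rather than hard-coding "up" and "down", is one way to make both tasks converge to the same fraction of correct answers with the same update logic.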

Unfortunately, an error in the coding of the staircase for the high-noise task caused it to function as a “2-easier/1-harder” staircase. As a result, instead of converging to the intended fraction of correct answers, these staircases inexorably drifted towards the parameter regime corresponding to pure noise. To estimate the 70.7% threshold, we sorted the trials of each staircase into bins based on external noise levels, calculated the fraction of correct answers for each bin, fitted the resulting distribution against a sigmoid curve, and determined the external noise level at which the curve crosses the threshold. The specific sigmoid function was a logistic curve:

$$\Sigma(p) = \frac{1}{2}\left(1 + \frac{1}{1 + e^{k(p - p_0)}}\right)$$

where p represents the staircase parameter, p0 is the center of the sigmoid, and k is the growth rate. This curve is constrained to interpolate between the low-noise regime (where all answers are correct) and the very high-noise regime (where responses are entirely random, resulting in a 50% correct rate). Figure A1 illustrates this procedure for clarity.
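The binning, fitting, and threshold-extraction steps can be sketched as below. This is a hedged illustration rather than the authors' procedure: the equal-width binning, the coarse grid search used in place of a proper curve-fitting routine, and all function names are assumptions. The threshold itself follows from inverting the logistic curve given in the text, which yields p = p0 + ln(1/(2t − 1) − 1)/k for a target fraction correct t.

```python
import math


def sigma(p, p0, k):
    """Logistic curve from the text: 1.0 at low noise, 0.5 at very high noise."""
    return 0.5 * (1.0 + 1.0 / (1.0 + math.exp(k * (p - p0))))


def threshold_level(p0, k, target=math.sqrt(0.5)):
    """Parameter value at which sigma(p) equals the target fraction correct."""
    if not 0.5 < target < 1.0:
        raise ValueError("target must lie strictly between 0.5 and 1.0")
    return p0 + math.log(1.0 / (2.0 * target - 1.0) - 1.0) / k


def bin_trials(levels, correct, n_bins):
    """Sort trials into equal-width bins; return (bin center, fraction correct)."""
    lo, hi = min(levels), max(levels)
    if hi == lo:
        raise ValueError("levels must span more than one value")
    width = (hi - lo) / n_bins
    hits = [0] * n_bins
    counts = [0] * n_bins
    for level, ok in zip(levels, correct):
        index = min(int((level - lo) / width), n_bins - 1)
        counts[index] += 1
        hits[index] += 1 if ok else 0
    return [(lo + (i + 0.5) * width, hits[i] / counts[i])
            for i in range(n_bins) if counts[i] > 0]


def fit_sigmoid(points, p0_grid, k_grid):
    """Least-squares grid search for (p0, k); a stand-in for a real optimizer."""
    best = min(
        (sum((frac - sigma(center, p0, k)) ** 2 for center, frac in points), p0, k)
        for p0 in p0_grid
        for k in k_grid
    )
    return best[1], best[2]
```

A possible usage, with hypothetical variable names: `points = bin_trials(noise_levels, responses, n_bins=8)`, then `p0, k = fit_sigmoid(points, p0_grid, k_grid)`, and finally `threshold_level(p0, k)` for the 70.7% level. With the default target the offset from p0 equals ln(√2)/k, so for positive k the threshold lies slightly above the center of the sigmoid.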

Figure A1.

Illustration of the procedure used to extract the threshold value from a divergent staircase. (a) The solid line represents the behavior of the divergent staircase, while the dotted line shows a standard staircase for reference. (b) Trials are sorted into bins with respect to the scale parameter, with each bin counting the percentage of correct answers in that group of trials. The distribution is then fitted against a sigmoid curve (solid line), and the parameter value corresponding to 70.7% is extrapolated (dashed lines).

References

1. Amitay, S.; Zhang, Y.X.; Moore, D.R. Asymmetric transfer of auditory perceptual learning. Front. Psychol. 2012, 3, 508.

2. Azulai, O.; Shalev, L.; Mevorach, C. Feature discrimination learning transfers to noisy displays in complex stimuli. Front. Cogn. 2024, 3, 1349505.

3. Donato, R.; Pavan, A.; Cavallin, G.; Ballan, L.; Betteto, L.; Nucci, M.; Campana, G. Mechanisms Underlying Directional Motion Processing and Form-Motion Integration Assessed with Visual Perceptual Learning. Vision 2022, 6, 29.

4. Gold, J.I.; Watanabe, T. Perceptual learning. Curr. Biol. 2010, 20, R46–R48.

5. Mishra, J.; Rolle, C.; Gazzaley, A. Neural plasticity underlying visual perceptual learning in aging. Brain Res. 2015, 1612, 140–151.

6. Zhang, R.; Tadin, D. Disentangling locus of perceptual learning in the visual hierarchy of motion processing. Sci. Rep. 2019, 9, 1557.

7. Barollo, M.; Contemori, G.; Battaglini, L.; Pavan, A.; Casco, C. Perceptual learning improves contrast sensitivity, visual acuity, and foveal crowding in amblyopia. Restor. Neurol. Neurosci. 2017, 35, 483–496.

8. Kang, D.W.; Kim, D.; Chang, L.H.; Kim, Y.H.; Takahashi, E.; Cain, M.S.; Watanabe, T.; Sasaki, Y. Structural and Functional Connectivity Changes Beyond Visual Cortex in a Later Phase of Visual Perceptual Learning. Sci. Rep. 2018, 8, 5186.

9. Maniglia, M.; Seitz, A.R. Towards a whole brain model of Perceptual Learning. Curr. Opin. Behav. Sci. 2018, 20, 47–55.

10. Zhang, J.Y.; Zhang, G.L.; Xiao, L.Q.; Klein, S.A.; Levi, D.M.; Yu, C. Rule-based learning explains visual perceptual learning and its specificity and transfer. J. Neurosci. 2010, 30, 12323–12328.

11. Ahissar, M.; Hochstein, S. Task difficulty and the specificity of perceptual learning. Nature 1997, 387, 401–406.

12. Ahissar, M.; Hochstein, S. The reverse hierarchy theory of visual perceptual learning. Trends Cogn. Sci. 2004, 8, 457–464.

13. Jeter, P.E.; Dosher, B.A.; Petrov, A.; Lu, Z.L. Task precision at transfer determines specificity of perceptual learning. J. Vis. 2009, 9, 1–13.

14. Jeter, P.E.; Dosher, B.A.; Liu, S.H.; Lu, Z.L. Specificity of perceptual learning increases with increased training. Vis. Res. 2010, 50, 1928–1940.

15. Aberg, K.C.; Tartaglia, E.M.; Herzog, M.H. Perceptual learning with Chevrons requires a minimal number of trials, transfers to untrained directions, but does not require sleep. Vis. Res. 2009, 49, 2087–2094.

16. McGovern, D.P.; Webb, B.S.; Peirce, J.W. Transfer of perceptual learning between different visual tasks. J. Vis. 2012, 12, 4.

17. Shibata, K.; Sasaki, Y.; Kawato, M.; Watanabe, T. Neuroimaging Evidence for 2 Types of Plasticity in Association with Visual Perceptual Learning. Cereb. Cortex 2016, 26, 3681–3689.

18. Watanabe, T.; Sasaki, Y. Perceptual learning: Toward a comprehensive theory. Annu. Rev. Psychol. 2015, 66, 197–221.

19. Donato, R.; Pavan, A.; Campana, G. Investigating the Interaction Between Form and Motion Processing: A Review of Basic Research and Clinical Evidence. Front. Psychol. 2020, 11, 566848.

20. Donato, R.; Pavan, A.; Nucci, M.; Campana, G. The neural mechanisms underlying directional and apparent circular motion assessed with repetitive transcranial magnetic stimulation (rTMS). Neuropsychologia 2020, 149, 107656.

21. Krekelberg, B.; Dannenberg, S.; Hoffmann, K.P.; Bremmer, F.; Ross, J. Neural correlates of implied motion. Nature 2003, 424, 674–677.

22. Krekelberg, B.; Vatakis, A.; Kourtzi, Z. Implied motion from form in the human visual cortex. J. Neurophysiol. 2005, 94, 4373–4386.

23. Joshi, M.R.; Simmers, A.J.; Jeon, S.T. Implied Motion From Form Shows Motion Aids the Perception of Global Form in Amblyopia. Investig. Ophthalmol. Vis. Sci. 2020, 61, 58.

24. Joshi, M.R.; Simmers, A.J.; Jeon, S.T. The interaction of global motion and global form processing on the perception of implied motion: An equivalent noise approach. Vis. Res. 2021, 186, 34–40.

25. Pavan, A.; Bimson, L.M.; Gall, M.G.; Ghin, F.; Mather, G. The interaction between orientation and motion signals in moving oriented Glass patterns. Vis. Neurosci. 2017, 34, E010.

26. Pavan, A.; Ghin, F.; Donato, R.; Campana, G.; Mather, G. The neural basis of form and form-motion integration from static and dynamic translational Glass patterns: A rTMS investigation. NeuroImage 2017, 157, 555–560.

27. Pavan, A.; Contillo, A.; Ghin, F.; Donato, R.; Foxwell, M.J.; Atkins, D.W.; Mather, G.; Campana, G. Spatial and Temporal Selectivity of Translational Glass Patterns Assessed With the Tilt After-Effect. i-Perception 2021, 12, 20416695211017924.

28. Roccato, M.; Campana, G.; Vicovaro, M.; Donato, R.; Pavan, A. Perception of complex Glass patterns through spatial summation across unique frames. Vis. Res. 2024, 216, 108364.

29. Bogfjellmo, L.G.; Bex, P.J.; Falkenberg, H.K. Reduction in direction discrimination with age and slow speed is due to both increased internal noise and reduced sampling efficiency. Investig. Ophthalmol. Vis. Sci. 2013, 54, 5204–5210.

30. Falkenberg, H.K.; Simpson, W.A.; Dutton, G.N. Development of sampling efficiency and internal noise in motion detection and discrimination in school-aged children. Vis. Res. 2014, 100, 8–17.

31. Simpson, W.A.; Falkenberg, H.K.; Manahilov, V. Sampling efficiency and internal noise for motion detection, discrimination, and summation. Vis. Res. 2003, 43, 2125–2132.

32. Tibber, M.S.; Kelly, M.G.; Jansari, A.; Dakin, S.C.; Shepherd, A.J. An inability to exclude visual noise in migraine. Investig. Ophthalmol. Vis. Sci. 2014, 55, 2539–2546.

33. Pelli, D.G. Effects of Visual Noise. Ph.D. Thesis, University of Cambridge, Cambridge, UK, 1981.

34. Dakin, S.C.; Mareschal, I.; Bex, P.J. Local and global limitations on direction integration assessed using equivalent noise analysis. Vis. Res. 2005, 45, 3027–3049.

35. Pavan, A.; Contillo, A.; Yilmaz, S.K.; Kafaligonul, H.; Donato, R.; O’Hare, L. A comparison of equivalent noise methods in investigating local and global form and motion integration. Atten. Percept. Psychophys. 2023, 85, 152–165.

36. Baldwin, A.S.; Baker, D.H.; Hess, R.F. What Do Contrast Threshold Equivalent Noise Studies Actually Measure? Noise vs. Nonlinearity in Different Masking Paradigms. PLoS ONE 2016, 11, e0150942.

37. Ghin, F.; Pavan, A.; Contillo, A.; Mather, G. The effects of high-frequency transcranial random noise stimulation (hf-tRNS) on global motion processing: An equivalent noise approach. Brain Stimul. 2018, 11, 1263–1275.

38. Newsome, W.T.; Britten, K.H.; Movshon, J.A. Neuronal correlates of a perceptual decision. Nature 1989, 341, 52–54.

39. Dosher, B.A.; Lu, Z.L. Perceptual learning in clear displays optimizes perceptual expertise: Learning the limiting process. Proc. Natl. Acad. Sci. USA 2005, 102, 5286–5290.

40. Dosher, B.A.; Lu, Z.L. Perceptual learning reflects external noise filtering and internal noise reduction through channel reweighting. Proc. Natl. Acad. Sci. USA 1998, 95, 13988–13993.

41. Manning, C.; Hulks, V.; Tibber, M.S.; Dakin, S.C. Integration of visual motion and orientation signals in dyslexic children: An equivalent noise approach. R. Soc. Open Sci. 2022, 9, 200414.

42. Watamaniuk, S.N.; Sekuler, R.; Williams, D.W. Direction perception in complex dynamic displays: The integration of direction information. Vis. Res. 1989, 29, 47–59.

43. Watamaniuk, S.N. Ideal observer for discrimination of the global direction of dynamic random-dot stimuli. J. Opt. Soc. Am. A Opt. Image Sci. 1993, 10, 16–28.

44. Nankoo, J.F.; Madan, C.R.; Spetch, M.L.; Wylie, D.R. Perception of dynamic glass patterns. Vis. Res. 2012, 72, 55–62.

45. World Medical Association. World Medical Association Declaration of Helsinki: Ethical principles for medical research involving human subjects. JAMA 2013, 310, 2191–2194.

46. Brainard, D.H. The Psychophysics Toolbox. Spat. Vis. 1997, 10, 433–436.

47. Pelli, D.G. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spat. Vis. 1997, 10, 437–442.

48. Kleiner, M.; Brainard, D.H.; Pelli, D.G.; Broussard, C.; Wolf, T.; Niehorster, D. What’s new in Psychtoolbox-3? Perception 2007, 36, 1–16.

49. Clifford, C.W.; Weston, E. Aftereffect of adaptation to Glass patterns. Vis. Res. 2005, 45, 1355–1363.

50. Pavan, A.; Contillo, A.; Ghin, F.; Foxwell, M.J.; Mather, G. Limited attention diminishes spatial suppression from large field Glass patterns. Perception 2019, 48, 286–315.

51. Dosher, B.A.; Lu, Z.L. Mechanisms of perceptual learning. Vis. Res. 1999, 39, 3197–3221.

52. Song, Y.; Chen, N.; Fang, F. Effects of daily training amount on visual motion perceptual learning. J. Vis. 2021, 21, 6.

53. Levitt, H. Transformed up-down methods in psychoacoustics. J. Acoust. Soc. Am. 1971, 49, 467–477.

54. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021; Available online: http://www.R-project.org (accessed on 7 September 2024).

55. RStudio Team. RStudio: Integrated Development for R; RStudio, Inc.: Boston, MA, USA, 2021.

56. Leys, C.; Ley, C.; Klein, O.; Bernard, P.; Licata, L. Detecting outliers: Do not use standard deviation around the mean, use absolute deviation around the median. J. Exp. Soc. Psychol. 2013, 49, 764–766.

57. Elkin, L.A.; Kay, M.; Higgins, J.J.; Wobbrock, J.O. An aligned rank transform procedure for multifactor contrast tests. In Proceedings of the 34th Annual ACM Symposium on User Interface Software and Technology, Virtual Event, 10–14 October 2021; pp. 754–768.

58. Wobbrock, J.O.; Findlater, L.; Gergle, D.; Higgins, J.J. The aligned rank transform for nonparametric factorial analyses using only anova procedures. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011; pp. 143–146.

59. Kay, M.; Elkin, L.A.; Higgins, J.J.; Wobbrock, J.O. ARTool: Aligned Rank Transform for Nonparametric Factorial ANOVAs. R Package Version 0.11.1. 2021. Available online: https://github.com/mjskay/ARTool (accessed on 7 September 2024).

60. Gold, J.M.; Sekuler, A.B.; Bennett, P.J. Characterizing perceptual learning with external noise. Cogn. Sci. 2004, 28, 167–207.

61. Green, D.M.; Swets, J.A. Signal Detection Theory and Psychophysics; Wiley: New York, NY, USA, 1966; Volume 1, pp. 1969–2012.

62. Kurki, I.; Eckstein, M.P. Template changes with perceptual learning are driven by feature informativeness. J. Vis. 2014, 14, 6.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).