Musicianship Modulates Cortical Effects of Attention on Processing Musical Triads

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

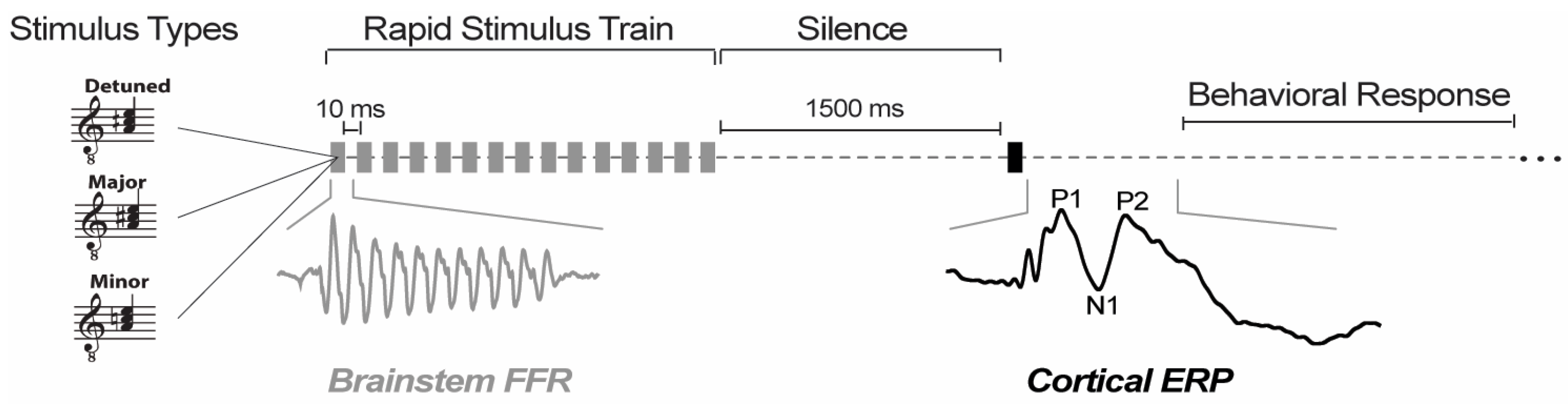

2.2. Stimuli and Task

2.3. EEG Recording Procedures

2.4. Brainstem FFR Analysis

2.5. Cortical ERP Analysis

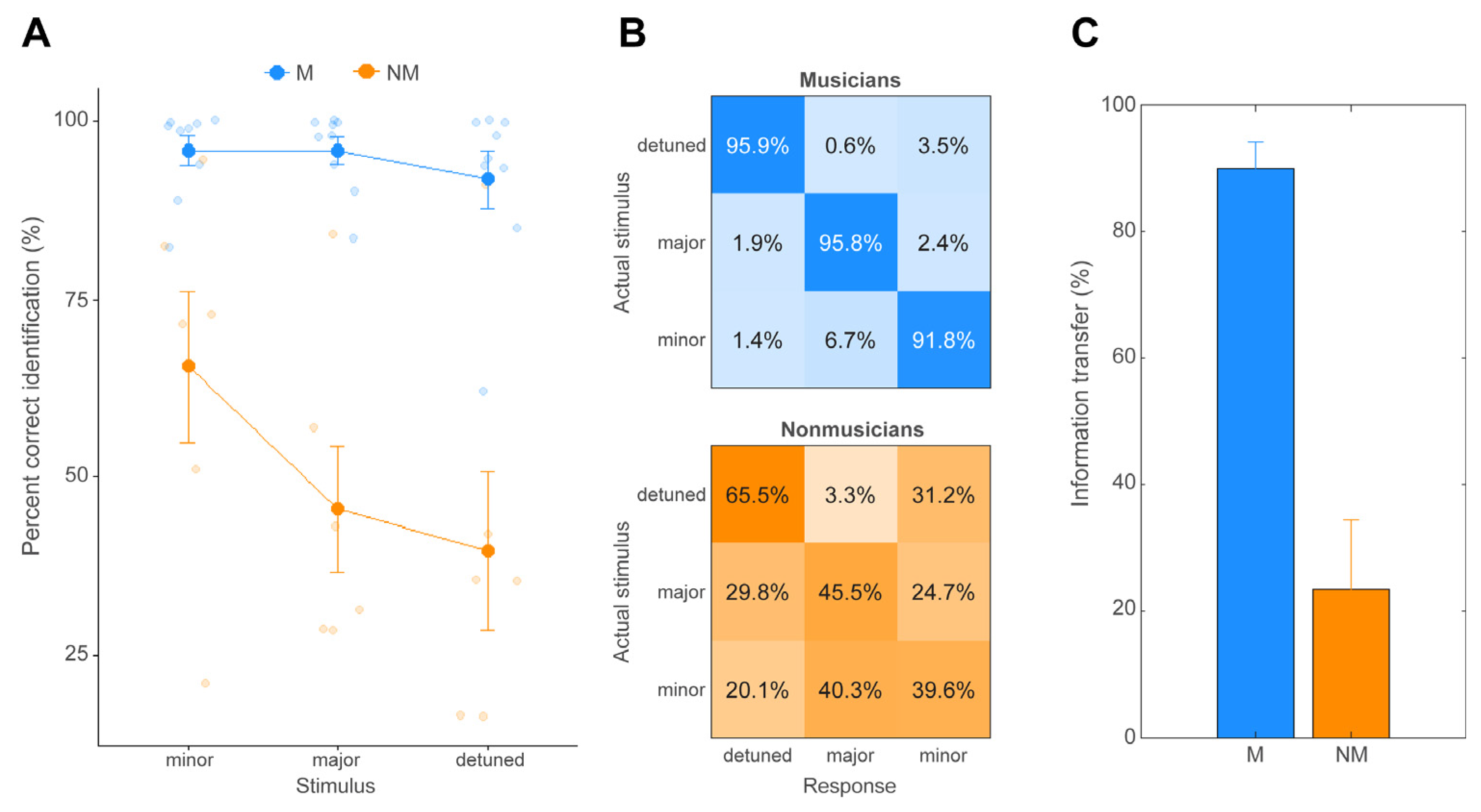

2.6. Confusion Matrices and Information Transfer (IT) Analysis

2.7. Neural Differentiation of Musical Chords

2.8. Statistical Analysis

3. Results

3.1. Behavioral Chord Identification

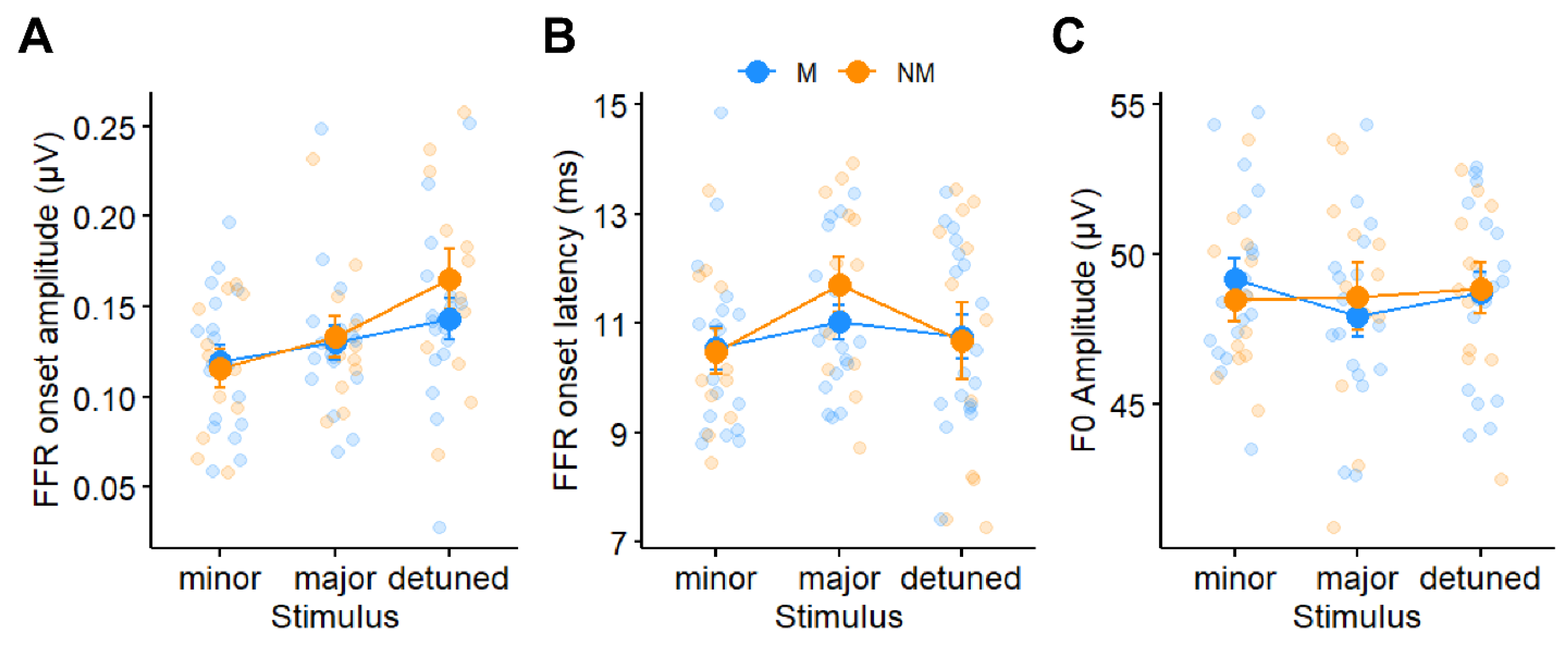

3.2. Brainstem FFRs

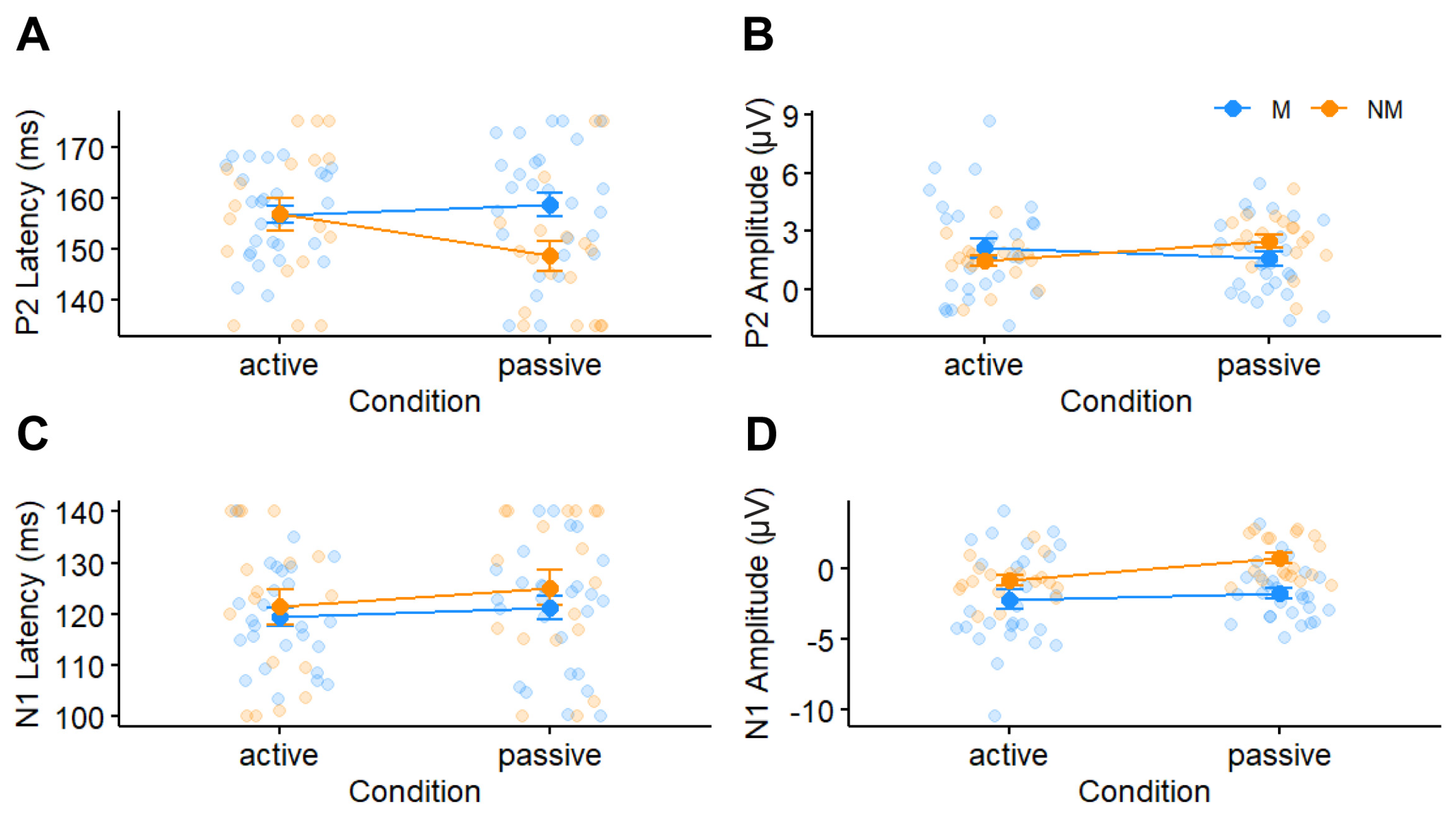

3.3. Cortical ERP Responses

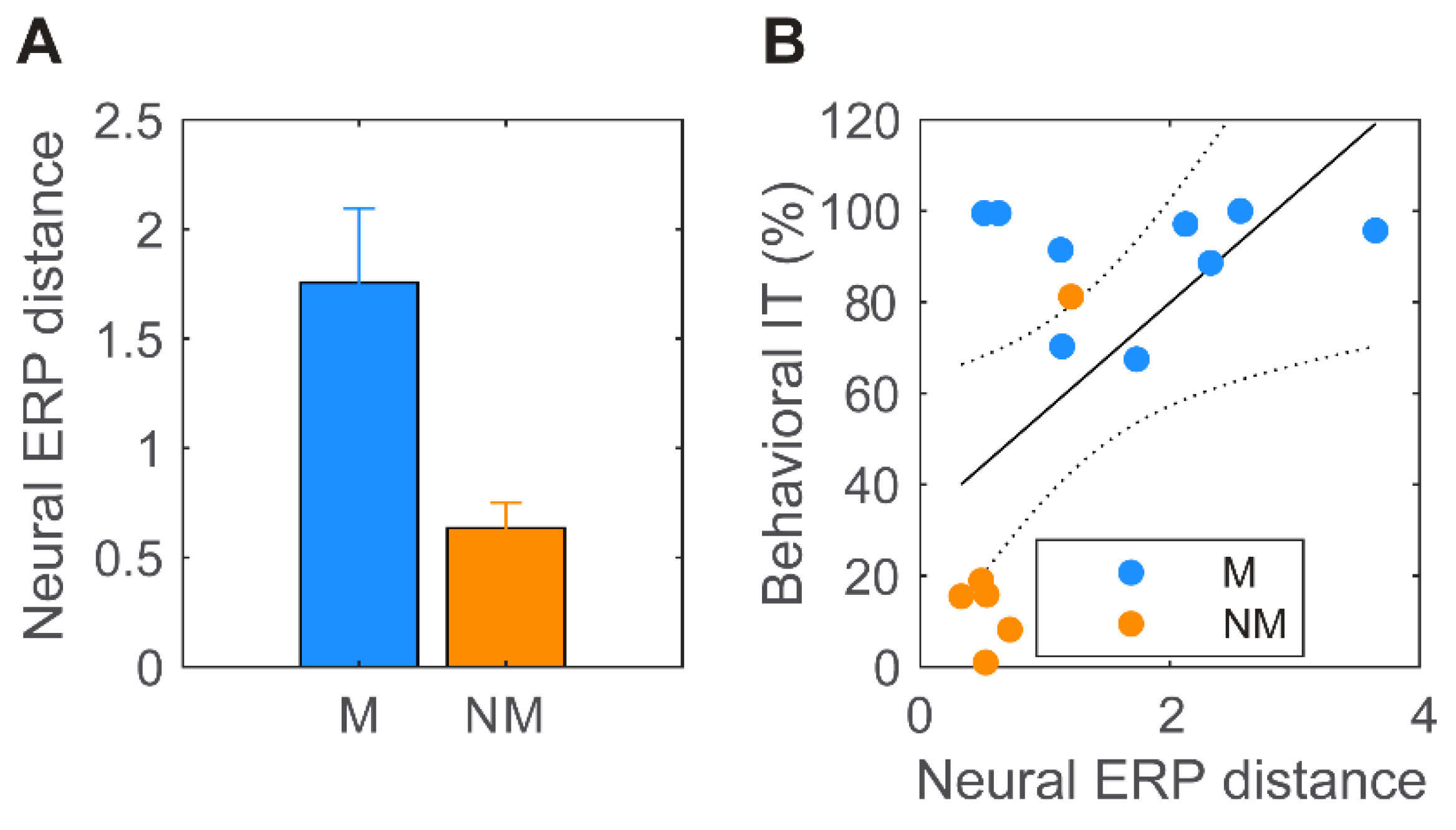

3.4. Brain–Behavior Relationships

4. Discussion

4.1. (High-Frequency) Subcortical Responses Are Largely Invariant to Musicianship and Attention

4.2. Cortical Responses Vary with Attention and Musicianship

4.3. Cortical Stimulus Differentiation Mirrored Behavioral Discrimination

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bidelman, G.M.; Krishnan, A.; Gandour, J.T. Enhanced brainstem encoding predicts musicians’ perceptual advantages with pitch. Eur. J. Neurosci. 2011, 33, 530–538.

- Musacchia, G.; Sams, M.; Skoe, E.; Kraus, N. Musicians have enhanced subcortical auditory and audiovisual processing of speech and music. Proc. Natl. Acad. Sci. USA 2007, 104, 15894–15898.

- Koelsch, S.; Schroger, E.; Tervaniemi, M. Superior pre-attentive auditory processing in musicians. Neuroreport 1999, 10, 1309–1313.

- Tervaniemi, M.; Just, V.; Koelsch, S.; Widmann, A.; Schroger, E. Pitch discrimination accuracy in musicians vs nonmusicians: An event-related potential and behavioral study. Exp. Brain Res. 2005, 161, 1–10.

- Bidelman, G.M.; Gandour, J.T.; Krishnan, A. Musicians demonstrate experience-dependent brainstem enhancement of musical scale features within continuously gliding pitch. Neurosci. Lett. 2011, 503, 203–207.

- Micheyl, C.; Delhommeau, K.; Perrot, X.; Oxenham, A.J. Influence of musical and psychoacoustical training on pitch discrimination. Hear. Res. 2006, 219, 36–47.

- Bidelman, G.M.; Gandour, J.T.; Krishnan, A. Cross-domain effects of music and language experience on the representation of pitch in the human auditory brainstem. J. Cogn. Neurosci. 2011, 23, 425–434.

- Kishon-Rabin, L.; Amir, O.; Vexler, Y.; Zaltz, Y. Pitch discrimination: Are professional musicians better than non-musicians? J. Basic Clin. Physiol. Pharmacol. 2001, 12, 125–143.

- Pitt, M.A. Perception of pitch and timbre by musically trained and untrained listeners. J. Exp. Psychol. Hum. Percept. Perform. 1994, 20, 976–986.

- Yoo, J.; Bidelman, G.M. Linguistic, perceptual, and cognitive factors underlying musicians’ benefits in noise-degraded speech perception. Hear. Res. 2019, 377, 189–195.

- Kraus, N.; Chandrasekaran, B. Music training for the development of auditory skills. Nat. Rev. Neurosci. 2010, 11, 599–605.

- Alain, C.; Zendel, B.R.; Hutka, S.; Bidelman, G.M. Turning down the noise: The benefit of musical training on the aging auditory brain. Hear. Res. 2014, 308, 162–173.

- Mankel, K.; Bidelman, G.M. Inherent auditory skills rather than formal music training shape the neural encoding of speech. Proc. Natl. Acad. Sci. USA 2018, 115, 13129–13134.

- Bidelman, G.M.; Yoo, J. Musicians show improved speech segregation in competitive, multi-talker cocktail party scenarios. Front. Psychol. 2020, 11, 1927.

- Brattico, E.; Jacobsen, T. Subjective appraisal of music. Ann. N. Y. Acad. Sci. 2009, 1169, 308–317.

- Shahin, A.; Bosnyak, D.J.; Trainor, L.J.; Roberts, L.E. Enhancement of neuroplastic P2 and N1c auditory evoked potentials in musicians. J. Neurosci. 2003, 23, 5545–5552.

- Schneider, P.; Sluming, V.; Roberts, N.; Scherg, M.; Goebel, R.; Specht, H.J.; Dosch, H.G.; Bleeck, S.; Stippich, C.; Rupp, A. Structural and functional asymmetry of lateral Heschl’s gyrus reflects pitch perception preference. Nat. Neurosci. 2005, 8, 1241–1247.

- Virtala, P.; Huotilainen, M.; Partanen, E.; Tervaniemi, M. Musicianship facilitates the processing of Western music chords—An ERP and behavioral study. Neuropsychologia 2014, 61, 247–258.

- Bidelman, G.M.; Weiss, M.W.; Moreno, S.; Alain, C. Coordinated plasticity in brainstem and auditory cortex contributes to enhanced categorical speech perception in musicians. Eur. J. Neurosci. 2014, 40, 2662–2673.

- Bidelman, G.M.; Alain, C. Musical training orchestrates coordinated neuroplasticity in auditory brainstem and cortex to counteract age-related declines in categorical vowel perception. J. Neurosci. 2015, 35, 1240–1249.

- MacLean, J.; Stirn, J.; Sisson, A.; Bidelman, G.M. Short- and long-term neuroplasticity interact during the perceptual learning of concurrent speech. Cereb. Cortex 2024, 34, bhad543.

- Lai, J.; Price, C.N.; Bidelman, G.M. Brainstem speech encoding is dynamically shaped online by fluctuations in cortical α state. NeuroImage 2022, 263, 119627.

- Carter, J.A.; Bidelman, G.M. Perceptual warping exposes categorical representations for speech in human brainstem responses. NeuroImage 2023, 269, 119899.

- Picton, T.W.; Hillyard, S.A. Human auditory evoked potentials. II. Effects of attention. Electroencephalogr. Clin. Neurophysiol. 1974, 36, 191–199.

- Brown, J.A.; Bidelman, G.M. Attention, musicality, and familiarity shape cortical speech tracking at the musical cocktail party. bioRxiv 2023.

- Strait, D.L.; Kraus, N.; Parbery-Clark, A.; Ashley, R. Musical experience shapes top-down auditory mechanisms: Evidence from masking and auditory attention performance. Hear. Res. 2010, 261, 22–29.

- Bidelman, G.M. Towards an optimal paradigm for simultaneously recording cortical and brainstem auditory evoked potentials. J. Neurosci. Methods 2015, 241, 94–100.

- Rizzi, R.; Bidelman, G.M. Duplex perception reveals brainstem auditory representations are modulated by listeners’ ongoing percept for speech. Cereb. Cortex 2023, 33, 10076–10086.

- Zhang, J.D.; Susino, M.; McPherson, G.E.; Schubert, E. The definition of a musician in music psychology: A literature review and the six-year rule. Psychol. Music 2020, 48, 389–409.

- Brattico, E.; Pallesen, K.J.; Varyagina, O.; Bailey, C.; Anourova, I.; Jarvenpaa, M.; Eerola, T.; Tervaniemi, M. Neural discrimination of nonprototypical chords in music experts and laymen: An MEG study. J. Cogn. Neurosci. 2009, 21, 2230–2244.

- Bidelman, G.M.; Walker, B. Attentional modulation and domain specificity underlying the neural organization of auditory categorical perception. Eur. J. Neurosci. 2017, 45, 690–699.

- Moore, B.C.J. Introduction to the Psychology of Hearing, 5th ed.; Academic Press: San Diego, CA, USA, 2003.

- Joris, P.X.; Schreiner, C.E.; Rees, A. Neural processing of amplitude-modulated sounds. Physiol. Rev. 2004, 84, 541–577.

- Brugge, J.F.; Nourski, K.V.; Oya, H.; Reale, R.A.; Kawasaki, H.; Steinschneider, M.; Howard, M.A., III. Coding of repetitive transients by auditory cortex on Heschl’s gyrus. J. Neurophysiol. 2009, 102, 2358–2374.

- Bidelman, G.M. Subcortical sources dominate the neuroelectric auditory frequency-following response to speech. NeuroImage 2018, 175, 56–69.

- Campbell, T.; Kerlin, J.R.; Bishop, C.W.; Miller, L.M. Methods to eliminate stimulus transduction artifact from insert earphones during electroencephalography. Ear Hear. 2012, 33, 144–150.

- Price, C.N.; Bidelman, G.M. Attention reinforces human corticofugal system to aid speech perception in noise. NeuroImage 2021, 235, 118014.

- Bidelman, G.M. Multichannel recordings of the human brainstem frequency-following response: Scalp topography, source generators, and distinctions from the transient ABR. Hear. Res. 2015, 323, 68–80.

- Musacchia, G.; Strait, D.; Kraus, N. Relationships between behavior, brainstem and cortical encoding of seen and heard speech in musicians and non-musicians. Hear. Res. 2008, 241, 34–42.

- Bidelman, G.M.; Moreno, S.; Alain, C. Tracing the emergence of categorical speech perception in the human auditory system. NeuroImage 2013, 79, 201–212.

- Saiz-Alía, M.; Forte, A.E.; Reichenbach, T. Individual differences in the attentional modulation of the human auditory brainstem response to speech inform on speech-in-noise deficits. Sci. Rep. 2019, 9, 14131.

- Galbraith, G.C.; Brown, W.S. Cross-correlation and latency compensation analysis of click-evoked and frequency-following brain-stem responses in man. Electroencephalogr. Clin. Neurophysiol. 1990, 77, 295–308.

- Bidelman, G.M.; Momtaz, S. Subcortical rather than cortical sources of the frequency-following response (FFR) relate to speech-in-noise perception in normal-hearing listeners. Neurosci. Lett. 2021, 746, 135664.

- Hillyard, S.A.; Hink, R.F.; Schwent, V.L.; Picton, T.W. Electrical signs of selective attention in the human brain. Science 1973, 182, 177–180.

- Schwent, V.L.; Hillyard, S.A. Evoked potential correlates of selective attention with multi-channel auditory inputs. Electroencephalogr. Clin. Neurophysiol. 1975, 38, 131–138.

- Woldorff, M.G.; Gallen, C.C.; Hampson, S.A.; Hillyard, S.A.; Pantev, C.; Sobel, D.; Bloom, F.E. Modulation of early sensory processing in human auditory cortex during auditory selective attention. Proc. Natl. Acad. Sci. USA 1993, 90, 8722–8726.

- Neelon, M.F.; Williams, J.; Garell, P.C. The effects of auditory attention measured from human electrocorticograms. Clin. Neurophysiol. 2006, 117, 504–521.

- Ding, N.; Simon, J.Z. Emergence of neural encoding of auditory objects while listening to competing speakers. Proc. Natl. Acad. Sci. USA 2012, 109, 11854–11859.

- Kaganovich, N.; Kim, J.; Herring, C.; Schumaker, J.; Macpherson, M.; Weber-Fox, C. Musicians show general enhancement of complex sound encoding and better inhibition of irrelevant auditory change in music: An ERP study. Eur. J. Neurosci. 2013, 37, 1295–1307.

- Brown, J.A.; Bidelman, G.M. Familiarity of background music modulates the cortical tracking of target speech at the “cocktail party”. Brain Sci. 2022, 12, 1320.

- Lee, S.; Bidelman, G.M. Objective identification of simulated cochlear implant settings in normal-hearing listeners via auditory cortical evoked potentials. Ear Hear. 2017, 38, e215–e226.

- Miller, G.A.; Nicely, P.E. An analysis of perceptual confusions among some English consonants. J. Acoust. Soc. Am. 1955, 27, 338–352.

- Bidelman, G.M.; Yellamsetty, A. Noise and pitch interact during the cortical segregation of concurrent speech. Hear. Res. 2017, 351, 34–44.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2020; Available online: https://www.R-project.org/ (accessed on 1 August 2024).

- Bates, D.; Mächler, M.; Bolker, B.; Walker, S. Fitting linear mixed-effects models using lme4. J. Stat. Softw. 2015, 67, 1–48.

- Bidelman, G.M.; Krishnan, A. Neural correlates of consonance, dissonance, and the hierarchy of musical pitch in the human brainstem. J. Neurosci. 2009, 29, 13165–13171.

- Lee, K.M.; Skoe, E.; Kraus, N.; Ashley, R. Selective subcortical enhancement of musical intervals in musicians. J. Neurosci. 2009, 29, 5832–5840.

- Skoe, E.; Kraus, N. Hearing it again and again: On-line subcortical plasticity in humans. PLoS ONE 2010, 5, e13645.

- Bones, O.; Hopkins, K.; Krishnan, A.; Plack, C.J. Phase locked neural activity in the human brainstem predicts preference for musical consonance. Neuropsychologia 2014, 58, 23–32.

- Losorelli, S.; Kaneshiro, B.; Musacchia, G.A.; Blevins, N.H.; Fitzgerald, M.B. Factors influencing classification of frequency following responses to speech and music stimuli. Hear. Res. 2020, 398, 108101.

- Galbraith, G.; Olfman, D.M.; Huffman, T.M. Selective attention affects human brain stem frequency-following response. Neuroreport 2003, 14, 735–738.

- Lehmann, A.; Schonwiesner, M. Selective attention modulates human auditory brainstem responses: Relative contributions of frequency and spatial cues. PLoS ONE 2014, 9, e85442.

- Forte, A.E.; Etard, O.; Reichenbach, T. The human auditory brainstem response to running speech reveals a subcortical mechanism for selective attention. eLife 2017, 6, e27203.

- Tichko, P.; Skoe, E. Frequency-dependent fine structure in the frequency-following response: The byproduct of multiple generators. Hear. Res. 2017, 348, 1–15.

- Coffey, E.B.J.; Nicol, T.; White-Schwoch, T.; Chandrasekaran, B.; Krizman, J.; Skoe, E.; Zatorre, R.J.; Kraus, N. Evolving perspectives on the sources of the frequency-following response. Nat. Commun. 2019, 10, 5036.

- Gorina-Careta, N.; Kurkela, J.L.O.; Hämäläinen, J.; Astikainen, P.; Escera, C. Neural generators of the frequency-following response elicited to stimuli of low and high frequency: A magnetoencephalographic (MEG) study. NeuroImage 2021, 231, 117866.

- Hartmann, T.; Weisz, N. Auditory cortical generators of the frequency following response are modulated by intermodal attention. NeuroImage 2019, 203, 116185.

- Schüller, A.; Schilling, A.; Krauss, P.; Rampp, S.; Reichenbach, T. Attentional modulation of the cortical contribution to the frequency-following response evoked by continuous speech. J. Neurosci. 2023, 43, 7429–7440.

- Lai, J.; Alain, C.; Bidelman, G.M. Cortical-brainstem interplay during speech perception in older adults with and without hearing loss. Front. Neurosci. 2023, 17, 1075368.

- Bidelman, G.M.; Krishnan, A. Brainstem correlates of behavioral and compositional preferences of musical harmony. Neuroreport 2011, 22, 212–216.

- Parbery-Clark, A.; Strait, D.L.; Kraus, N. Context-dependent encoding in the auditory brainstem subserves enhanced speech-in-noise perception in musicians. Neuropsychologia 2011, 49, 3338–3345.

- Strait, D.L.; Kraus, N.; Skoe, E.; Ashley, R. Musical experience and neural efficiency: Effects of training on subcortical processing of vocal expressions of emotion. Eur. J. Neurosci. 2009, 29, 661–668.

- Wong, P.C.; Skoe, E.; Russo, N.M.; Dees, T.; Kraus, N. Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nat. Neurosci. 2007, 10, 420–422.

- Parbery-Clark, A.; Skoe, E.; Lam, C.; Kraus, N. Musician enhancement for speech-in-noise. Ear Hear. 2009, 30, 653–661.

- Coffey, E.B.; Herholz, S.C.; Chepesiuk, A.M.; Baillet, S.; Zatorre, R.J. Cortical contributions to the auditory frequency-following response revealed by MEG. Nat. Commun. 2016, 7, 11070.

- Hansen, J.C.; Hillyard, S.A. Endogenous brain potentials associated with selective auditory attention. Electroencephalogr. Clin. Neurophysiol. 1980, 49, 277–290.

- Ross, B.; Jamali, S.; Tremblay, K.L. Plasticity in neuromagnetic cortical responses suggests enhanced auditory object representation. BMC Neurosci. 2013, 14, 151.

- Crowley, K.E.; Colrain, I.M. A review of the evidence for P2 being an independent component process: Age, sleep and modality. Clin. Neurophysiol. 2004, 115, 732–744.

- Alain, C.; Campeanu, S.; Tremblay, K.L. Changes in sensory evoked responses coincide with rapid improvement in speech identification performance. J. Cogn. Neurosci. 2010, 22, 392–403.

- Mankel, K.; Shrestha, U.; Tipirneni-Sajja, A.; Bidelman, G.M. Functional plasticity coupled with structural predispositions in auditory cortex shape successful music category learning. Front. Neurosci. 2022, 16, 897239.

- Tervaniemi, M. Musicians—Same or different? Ann. N. Y. Acad. Sci. 2009, 1169, 151–156.

- Putkinen, V.; Tervaniemi, M.; Saarikivi, K.; de Vent, N.; Huotilainen, M. Investigating the effects of musical training on functional brain development with a novel melodic MMN paradigm. Neurobiol. Learn. Mem. 2014, 110, 8–15.

- Hutka, S.; Bidelman, G.M.; Moreno, S. Pitch expertise is not created equal: Cross-domain effects of musicianship and tone language experience on neural and behavioural discrimination of speech and music. Neuropsychologia 2015, 71, 52–63.

- Jo, H.S.; Hsieh, T.H.; Chien, W.C.; Shaw, F.Z.; Liang, S.F.; Kung, C.C. Probing the neural dynamics of musicians and non-musicians’ consonant/dissonant perception: Joint analyses of electrical encephalogram (EEG) and functional magnetic resonance imaging (fMRI). NeuroImage 2024, 298, 120784.

- Panda, S.; Shivakumar, D.; Majumder, Y.; Gupta, C.N.; Hazra, B. Real-time dynamic analysis of EEG response for live Indian classical vocal stimulus with therapeutic indications. Smart Health 2024, 32, 100461.

- Jiang, Y.; Zheng, M. EEG microstates are associated with music training experience. Front. Hum. Neurosci. 2024, 18, 1434110.

- Holmes, E.; Purcell, D.W.; Carlyon, R.P.; Gockel, H.E.; Johnsrude, I.S. Attentional modulation of envelope-following responses at lower (93–109 Hz) but not higher (217–233 Hz) modulation rates. J. Assoc. Res. Otolaryngol. 2018, 19, 83–97.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


MacLean, J.; Drobny, E.; Rizzi, R.; Bidelman, G.M. Musicianship Modulates Cortical Effects of Attention on Processing Musical Triads. Brain Sci. 2024, 14, 1079. https://doi.org/10.3390/brainsci14111079

MacLean J, Drobny E, Rizzi R, Bidelman GM. Musicianship Modulates Cortical Effects of Attention on Processing Musical Triads. Brain Sciences. 2024; 14(11):1079. https://doi.org/10.3390/brainsci14111079

Chicago/Turabian Style: MacLean, Jessica, Elizabeth Drobny, Rose Rizzi, and Gavin M. Bidelman. 2024. "Musicianship Modulates Cortical Effects of Attention on Processing Musical Triads" Brain Sciences 14, no. 11: 1079. https://doi.org/10.3390/brainsci14111079

APA Style: MacLean, J., Drobny, E., Rizzi, R., & Bidelman, G. M. (2024). Musicianship Modulates Cortical Effects of Attention on Processing Musical Triads. Brain Sciences, 14(11), 1079. https://doi.org/10.3390/brainsci14111079