A Methodological Approach to Quantifying Silent Pauses, Speech Rate, and Articulation Rate across Distinct Narrative Tasks: Introducing the Connected Speech Analysis Protocol (CSAP)

Abstract

1. Introduction

1.1. Silent Pauses’ Measures

1.2. Speech Fluency Measures

1.3. The Importance of Assessing Different Speech Genres

1.4. Aim of the Current Study

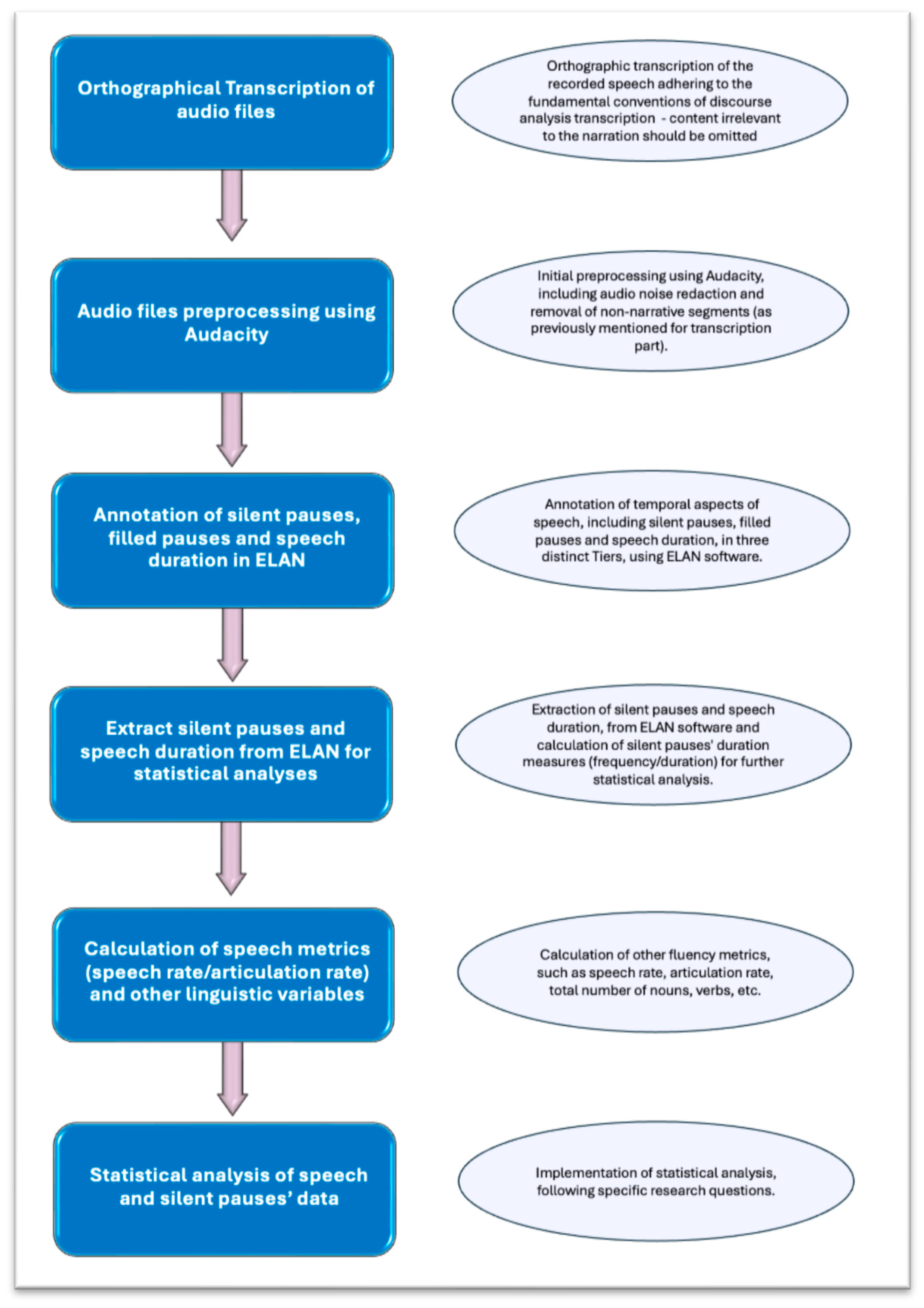

2. Suggested Methodological Pipeline for the Connected Speech Analysis Protocol (CSAP)

2.1. Orthographical Transcription

2.2. Audio File Preprocessing

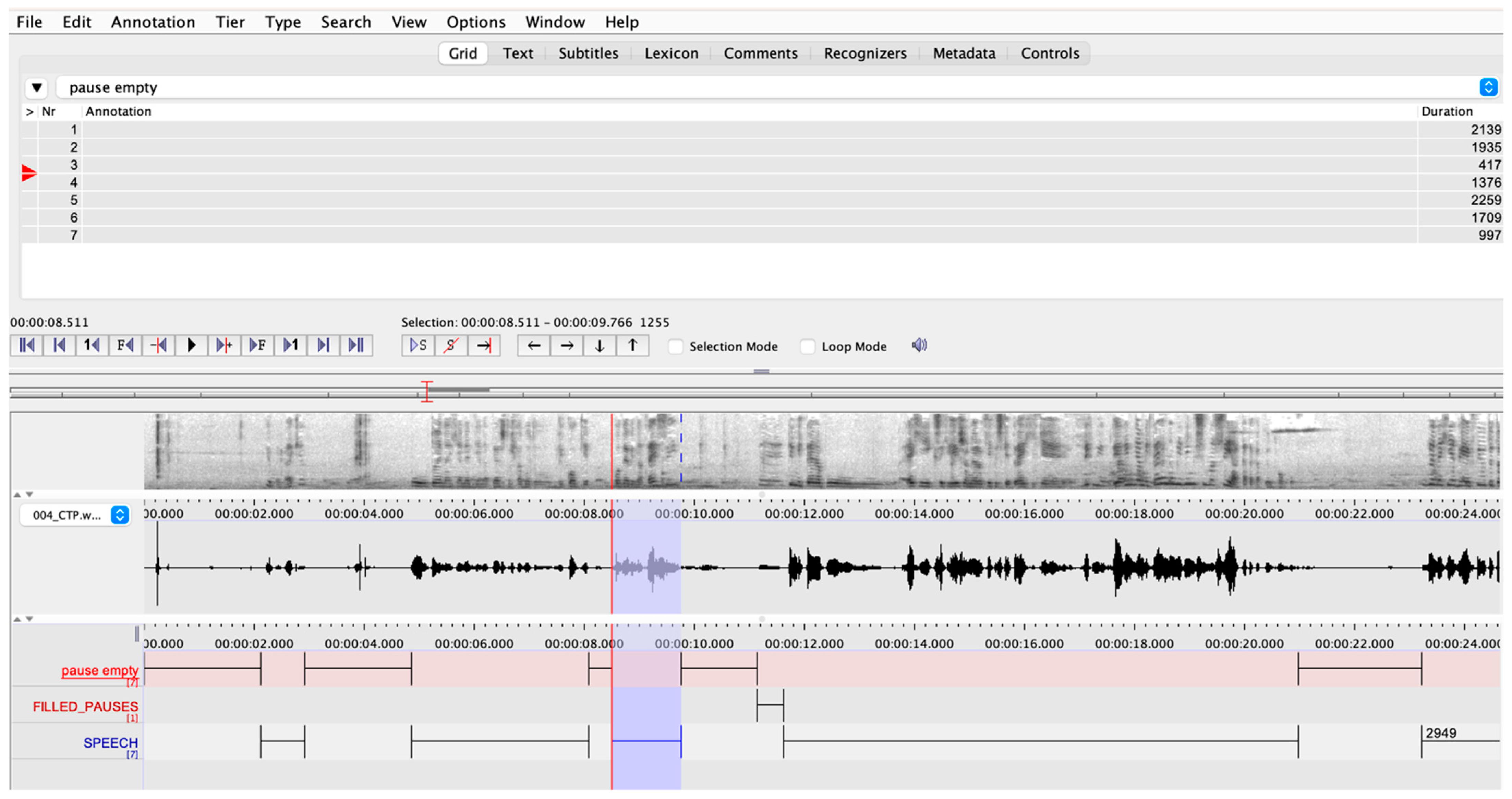

2.3. Silent Pauses and Speech Annotation

2.4. Further Linguistic Variables Annotation

3. An Example of the Implementation of CSAP

3.1. Participants

3.2. Speech Samples

3.3. Statistical Analysis

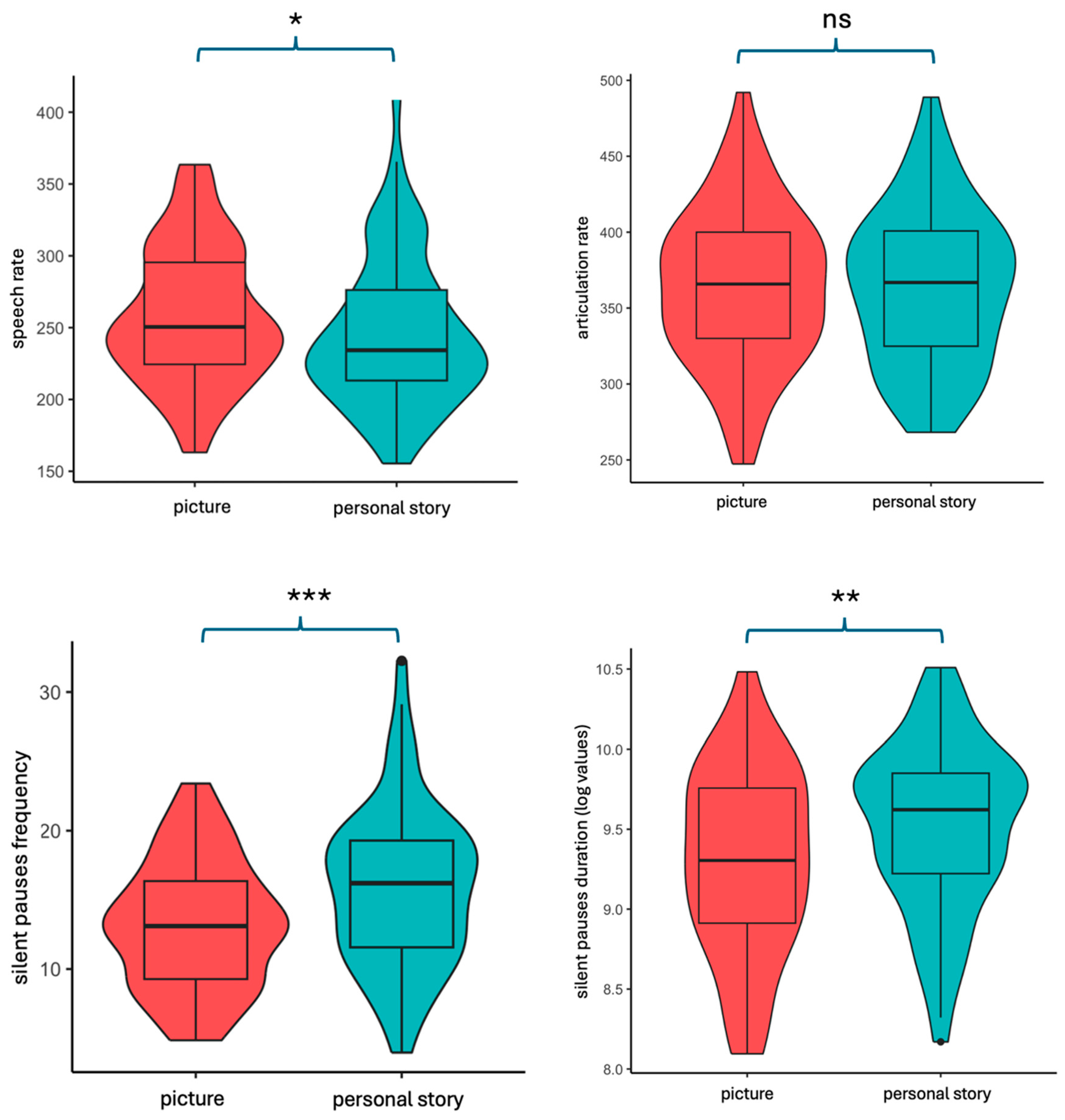

3.4. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Boschi, V.; Catricala, E.; Consonni, M.; Chesi, C.; Moro, A.; Cappa, S.F. Connected speech in neurodegenerative language disorders: A review. Front. Psychol. 2017, 8, 269.

2. Armstrong, E. Aphasic discourse analysis: The story so far. Aphasiology 2000, 14, 875–892.

3. Angelopoulou, G.; Meier, E.L.; Kasselimis, D.; Pan, Y.; Tsolakopoulos, D.; Velonakis, G.; Karavasilis, E.; Kelekis, N.L.; Goutsos, D.; Potagas, C.; et al. Investigating gray and white matter structural substrates of sex differences in the narrative abilities of healthy adults. Front. Neurosci. 2020, 13, 1424.

4. Ardila, A.; Rosselli, M. Spontaneous language production and aging: Sex and educational effects. Int. J. Neurosci. 1996, 87, 71–78.

5. Capilouto, G.J.; Wright, H.H.; Maddy, K.M. Microlinguistic processes that contribute to the ability to relay main events: Influence of age. Aging Neuropsychol. Cogn. 2016, 23, 445–463.

6. Andreetta, S.; Cantagallo, A.; Marini, A. Narrative discourse in anomic aphasia. Neuropsychologia 2012, 50, 1787–1793.

7. Angelopoulou, G.; Kasselimis, D.; Varkanitsa, M.; Tsolakopoulos, D.; Papageorgiou, G.; Velonakis, G.; Meier, E.; Karavasilis, E.; Pantolewn, V.; Laskaris, N.; et al. Investigating silent pauses in connected speech: Integrating linguistic, neuropsychological, and neuroanatomical perspectives across narrative tasks in post-stroke aphasia. Front. Neurol. 2024, 15, 1347514.

8. DeDe, G.; Salis, C. Temporal and episodic analyses of the story of Cinderella in latent aphasia. Am. J. Speech-Lang. Pathol. 2020, 29, 449–462.

9. Efthymiopoulou, E.; Kasselimis, D.S.; Ghika, A.; Kyrozis, A.; Peppas, C.; Evdokimidis, I.; Petrides, M.; Potagas, C. The effect of cortical and subcortical lesions on spontaneous expression of memory-encoded and emotionally infused information: Evidence for a role of the ventral stream. Neuropsychologia 2017, 101, 115–120.

10. Fromm, D.; Forbes, M.; Holland, A.; Dalton, S.G.; Richardson, J.; MacWhinney, B. Discourse characteristics in aphasia beyond the Western Aphasia Battery cutoff. Am. J. Speech-Lang. Pathol. 2017, 26, 762–768.

11. Gordon, J.K.; Clough, S. How fluent? Part B. Underlying contributors to continuous measures of fluency in aphasia. Aphasiology 2020, 34, 643–663.

12. Cordella, C.; Dickerson, B.C.; Quimby, M.; Yunusova, Y.; Green, J.R. Slowed articulation rate is a sensitive diagnostic marker for identifying non-fluent primary progressive aphasia. Aphasiology 2017, 31, 241–260.

13. Nevler, N.; Ash, S.; Irwin, D.J.; Liberman, M.; Grossman, M. Validated automatic speech biomarkers in primary progressive aphasia. Ann. Clin. Transl. Neurol. 2019, 6, 4–14.

14. Potagas, C.; Nikitopoulou, Z.; Angelopoulou, G.; Kasselimis, D.; Laskaris, N.; Kourtidou, E.; Constantinides, V.C.; Bougea, A.; Paraskevas, G.P.; Papageorgiou, G.; et al. Silent pauses and speech indices as biomarkers for primary progressive aphasia. Medicina 2022, 58, 1352.

15. Lofgren, M.; Hinzen, W. Breaking the flow of thought: Increase of empty pauses in the connected speech of people with mild and moderate Alzheimer’s disease. J. Commun. Disord. 2022, 97, 106214.

16. Pistono, A.; Pariente, J.; Bézy, C.; Lemesle, B.; Le Men, J.; Jucla, M. What happens when nothing happens? An investigation of pauses as a compensatory mechanism in early Alzheimer’s disease. Neuropsychologia 2019, 124, 133–143.

17. Pistono, A.; Jucla, M.; Barbeau, E.J.; Saint-Aubert, L.; Lemesle, B.; Calvet, B.; Köpke, B.; Puel, M.; Pariente, J. Pauses during autobiographical discourse reflect episodic memory processes in early Alzheimer’s disease. J. Alzheimer’s Dis. 2016, 50, 687–698.

18. Themistocleous, C.; Eckerström, M.; Kokkinakis, D. Voice quality and speech fluency distinguish individuals with mild cognitive impairment from healthy controls. PLoS ONE 2020, 15, e0236009.

19. Cohen, A.S.; McGovern, J.E.; Dinzeo, T.J.; Covington, M.A. Speech deficits in serious mental illness: A cognitive resource issue? Schizophr. Res. 2014, 160, 173–179.

20. Robb, M.P.; Maclagan, M.A.; Chen, Y. Speaking rates of American and New Zealand varieties of English. Clin. Linguist. Phon. 2004, 18, 1–15.

21. Hampsey, E.; Meszaros, M.; Skirrow, C.; Strawbridge, R.; Taylor, R.H.; Chok, L.; Aarsland, D.; Al-Chalabi, A.; Chaudhuri, R.; Weston, J.; et al. Protocol for rhapsody: A longitudinal observational study examining the feasibility of speech phenotyping for remote assessment of neurodegenerative and psychiatric disorders. BMJ Open 2022, 12, e061193.

22. Angelopoulou, G.; Kasselimis, D.; Makrydakis, G.; Varkanitsa, M.; Roussos, P.; Goutsos, D.; Evdokimidis, I.; Potagas, C. Silent pauses in aphasia. Neuropsychologia 2018, 114, 41–49.

23. Baqué, L.; Machuca, M.J. Hesitations in primary progressive aphasia. Languages 2023, 8, 45.

24. Mack, J.E.; Chandler, S.D.; Meltzer-Asscher, A.; Rogalski, E.; Weintraub, S.; Mesulam, M.M.; Thompson, C.K. What do pauses in narrative production reveal about the nature of word retrieval deficits in PPA? Neuropsychologia 2015, 77, 211–222.

25. Çokal, D.; Zimmerer, V.; Turkington, D.; Ferrier, N.; Varley, R.; Watson, S.; Hinzen, W. Disturbing the rhythm of thought: Speech pausing patterns in schizophrenia, with and without formal thought disorder. PLoS ONE 2019, 14, e0217404.

26. Rapcan, V.; D’Arcy, S.; Yeap, S.; Afzal, N.; Thakore, J.; Reilly, R.B. Acoustic and temporal analysis of speech: A potential biomarker for schizophrenia. Med. Eng. Phys. 2010, 32, 1074–1079.

27. Salis, C.; DeDe, G. Sentence production in a discourse context in latent aphasia: A real-time study. Am. J. Speech-Lang. Pathol. 2022, 31, 1284–1296.

28. Pastoriza-Dominguez, P.; Torre, I.G.; Dieguez-Vide, F.; Gómez-Ruiz, I.; Geladó, S.; Bello-López, J.; Ávila-Rivera, A.; Matias-Guiu, J.A.; Pytel, V.; Hernández-Fernández, A. Speech pause distribution as an early marker for Alzheimer’s disease. Speech Commun. 2022, 136, 107–117.

29. Goldman-Eisler, F. Psycholinguistics: Experiments in Spontaneous Speech; Academic Press: London, UK, 1968.

30. Grunwell, P. Assessment of articulation and phonology. In Assessment in Speech and Language Therapy; Routledge: Abingdon, UK, 2018; pp. 49–67.

31. Tremblay, P.; Deschamps, I.; Gracco, V.L. Neurobiology of speech production: A motor control perspective. In Neurobiology of Language; Academic Press: Cambridge, MA, USA, 2016; pp. 741–750.

32. Campione, E.; Véronis, J. A large-scale multilingual study of silent pause duration. In Proceedings of the Speech Prosody 2002 International Conference, Aix-en-Provence, France, 11–13 April 2002.

33. Kirsner, K.; Dunn, J.; Hird, K. Language production: A complex dynamic system with a chronometric footprint. In Proceedings of the 2005 International Conference on Computational Science, Atlanta, GA, USA, 22–25 May 2005.

34. Rosen, K.; Murdoch, B.; Folker, J.; Vogel, A.; Cahill, L.; Delatycki, M.; Corben, L. Automatic method of pause measurement for normal and dysarthric speech. Clin. Linguist. Phon. 2010, 24, 141–154.

35. Ash, S.; McMillan, C.; Gross, R.G.; Cook, P.; Gunawardena, D.; Morgan, B.; Boller, A.; Siderowf, A.; Grossman, M. Impairments of speech fluency in Lewy body spectrum disorder. Brain Lang. 2012, 120, 290–302.

36. Wardle, M.; Cederbaum, K.; de Wit, H. Quantifying talk: Developing reliable measures of verbal productivity. Behav. Res. Methods 2011, 43, 168–178.

37. Nip, I.S.; Green, J.R. Increases in cognitive and linguistic processing primarily account for increases in speaking rate with age. Child Dev. 2013, 84, 1324–1337.

38. Wilson, S.M.; Henry, M.L.; Besbris, M.; Ogar, J.M.; Dronkers, N.F.; Jarrold, W.; Miller, B.L.; Gorno-Tempini, M.L. Connected speech production in three variants of primary progressive aphasia. Brain 2010, 133, 2069–2088.

39. Filiou, R.P.; Bier, N.; Slegers, A.; Houze, B.; Belchior, P.; Brambati, S.M. Connected speech assessment in the early detection of Alzheimer’s disease and mild cognitive impairment: A scoping review. Aphasiology 2020, 34, 723–755.

40. Goldman-Eisler, F. The determinants of the rate of speech output and their mutual relations. J. Psychosom. Res. 1956, 1, 137–143.

41. Fergadiotis, G.; Wright, H.H.; Capilouto, G.J. Productive vocabulary across discourse types. Aphasiology 2011, 25, 1261–1278.

42. Ulatowska, H.K.; North, A.J.; Macaluso-Haynes, S. Production of narrative and procedural discourse in aphasia. Brain Lang. 1981, 13, 345–371.

43. Bliss, L.S.; McCabe, A. Comparison of discourse genres: Clinical implications. Contemp. Issues Commun. Sci. Disord. 2006, 33, 126–167.

44. Armstrong, E.; Ciccone, N.; Godecke, E.; Kok, B. Monologues and dialogues in aphasia: Some initial comparisons. Aphasiology 2011, 25, 1347–1371.

45. Fergadiotis, G.; Wright, H.H. Lexical diversity for adults with and without aphasia across discourse elicitation tasks. Aphasiology 2011, 25, 1414–1430.

46. Glosser, G.; Wiener, M.; Kaplan, E. Variations in aphasic language behaviors. J. Speech Hear. Disord. 1988, 53, 115–124.

47. Richardson, J.D.; Dalton, S.G. Main concepts for three different discourse tasks in a large non-clinical sample. Aphasiology 2016, 30, 45–73.

48. Petrides, M.; Pandya, D.N. Distinct parietal and temporal pathways to the homologues of Broca’s area in the monkey. PLoS Biol. 2009, 7, e1000170.

49. Saur, D.; Kreher, B.W.; Schnell, S.; Kümmerer, D.; Kellmeyer, P.; Vry, M.S.; Umarova, R.; Musso, M.; Glauche, V.; Abel, S.; et al. Ventral and dorsal pathways for language. Proc. Natl. Acad. Sci. USA 2008, 105, 18035–18040.

50. Georgakopoulou, A.; Goutsos, D. Text and Communication; Patakis: Athens, Greece, 2011. (In Greek)

51. Kasselimis, D.; Varkanitsa, M.; Angelopoulou, G.; Evdokimidis, I.; Goutsos, D.; Potagas, C. Word error analysis in aphasia: Introducing the Greek aphasia error corpus (GRAEC). Front. Psychol. 2020, 11, 1577.

52. Saffran, E.M.; Berndt, R.S.; Schwartz, M.F. The quantitative analysis of agrammatic production: Procedure and data. Brain Lang. 1989, 37, 440–479.

53. Rochon, E.; Saffran, E.M.; Berndt, R.S.; Schwartz, M.F. Quantitative analysis of aphasic sentence production: Further development and new data. Brain Lang. 2000, 72, 193–218.

54. Varkanitsa, M. Quantitative and error analysis of connected speech: Evidence from Greek-speaking patients with aphasia and normal speakers. In Current Trends in Greek Linguistics; Fragaki, G., Georgakopoulos, A., Themistocleous, C., Eds.; Cambridge Scholars Publishing: Cambridge, UK, 2012; pp. 313–338.

55. Wittenburg, P.; Brugman, H.; Russel, A.; Klassmann, A.; Sloetjes, H. ELAN: A professional framework for multimodality research. In Proceedings of the Fifth International Conference on Language Resources and Evaluation (LREC), Genoa, Italy, 22–28 May 2006.

56. Brugman, H.; Russel, A. Annotating multi-media/multi-modal resources with ELAN. In Proceedings of the LREC, Lisbon, Portugal, 26–28 May 2004; pp. 2065–2068.

57. Zellner, B. Pauses and the temporal structure of speech. In Fundamentals of Speech Synthesis and Speech Recognition; Keller, E., Ed.; John Wiley: Chichester, UK, 1994; pp. 41–62.

58. Grande, M.; Hussmann, K.; Bay, E.; Christoph, S.; Piefke, M.; Willmes, K.; Huber, W. Basic parameters of spontaneous speech as a sensitive method for measuring change during the course of aphasia. Int. J. Lang. Commun. Disord. 2008, 43, 408–426.

59. Grande, M.; Meffert, E.; Schoenberger, E.; Jung, S.; Frauenrath, T.; Huber, W.; Hussmann, K.; Moormann, M.; Heim, S. From a concept to a word in a syntactically complete sentence: An fMRI study on spontaneous language production in an overt picture description task. NeuroImage 2012, 61, 702–714.

60. Hawkins, P.R. The syntactic location of hesitation pauses. Lang. Speech 1971, 14, 277–288.

61. Goodglass, H.; Kaplan, E. The Assessment of Aphasia and Related Disorders; Lea & Febiger: Philadelphia, PA, USA, 1983.

62. Tsapkini, K.; Vlahou, C.H.; Potagas, C. Adaptation and validation of standardized aphasia tests in different languages: Lessons from the Boston Diagnostic Aphasia Examination—Short Form in Greek. Behav. Neurol. 2010, 22, 111–119.

63. Bates, D.; Mächler, M.; Bolker, B.; Walker, S. lme4: Linear Mixed-Effects Models Using Eigen and S4; R Package Version; 2016; p. 1. Available online: http://CRAN.R-project.org/package=lme4.

64. Satterthwaite, F.E. Synthesis of variance. Psychometrika 1941, 6, 309–316.

65. Luke, S.G. Evaluating significance in linear mixed-effects models in R. Behav. Res. Methods 2017, 49, 1494–1502.

66. Wickham, H.; Chang, W.; Wickham, M.H. Package ‘ggplot2’. In Create Elegant Data Visualisations Using the Grammar of Graphics; Version 1; 2016; pp. 1–189. Available online: https://ggplot2.tidyverse.org/reference/ggplot2-package.html.

67. Levelt, W.J. Monitoring and self-repair in speech. Cognition 1983, 14, 41–104.

68. Levelt, W.J. Speaking: From Intention to Articulation; MIT Press: Cambridge, MA, USA, 1993.

69. Goldman-Eisler, F. The significance of changes in the rate of articulation. Lang. Speech 1961, 4, 171–174.

70. Mueller, K.D.; Hermann, B.; Mecollari, J.; Turkstra, L.S. Connected speech and language in mild cognitive impairment and Alzheimer’s disease: A review of picture description tasks. J. Clin. Exp. Neuropsychol. 2018, 40, 917–939.

71. Mar, R.A. The neuropsychology of narrative: Story comprehension, story production and their interrelation. Neuropsychologia 2004, 42, 1414–1434.

72. Gola, K.A.; Thorne, A.; Veldhuisen, L.D.; Felix, C.M.; Hankinson, S.; Pham, J.; Shany-Ur, T.; Schauer, G.P.; Stanley, C.M.; Glenn, S.; et al. Neural substrates of spontaneous narrative production in focal neurodegenerative disease. Neuropsychologia 2015, 79, 158–171.

Microstructure Indices

| Speech Metric | Description | References |
|---|---|---|
| Speech rate | Words (or syllables) per minute | Healthy speakers: Angelopoulou et al., 2020 [3]; Ardila and Rosselli, 1996 [4] (referred to as total number of words); Capilouto et al., 2016 [5] (referred to as total number of words) |
| | | Patients with post-stroke aphasia: Andreetta et al., 2012 [6]; Angelopoulou et al., 2024 [7]; DeDe and Salis, 2020 [8]; Efthymiopoulou et al., 2017 [9]; Fromm et al., 2017 [10]; Gordon and Clough, 2020 [11] |
| | | Patients with primary progressive aphasia: Cordella et al., 2017 [12]; Nevler et al., 2019 [13]; Potagas et al., 2022 [14] |
| | | Patients with MCI/AD: Lofgren and Hinzen, 2022 [15]; Pistono et al., 2016 [17]; Pistono et al., 2019 [16]; Themistocleous et al., 2020 [18] |
| | | Patients with psychiatric disorders: Cohen et al., 2014 [19] (referred to as total number of words) |
| Articulation rate | Words (or syllables) per minute, referring only to phonation time | Healthy speakers: Robb et al., 2004 [20] |
| | | Patients with post-stroke aphasia: DeDe and Salis, 2020 [8] |
| | | Patients with primary progressive aphasia: Cordella et al., 2017 [12]; Potagas et al., 2022 [14] |
| | | Patients with MCI/AD: Themistocleous et al., 2020 [18] |
| | | Patients with psychiatric disorders: Hampsey et al., 2022 [21] (proposed study) |
| Silent pauses’ frequency | | Patients with post-stroke aphasia: Angelopoulou et al., 2018 [22]; Angelopoulou et al., 2024 [7] |
| | | Patients with primary progressive aphasia: Baqué and Machuca, 2023 [23]; Cordella et al., 2017 [12]; Mack et al., 2015 [24] (restricted to nouns and verbs); Nevler et al., 2019 [13]; Potagas et al., 2022 [14] |
| | | Patients with MCI/AD: Lofgren and Hinzen, 2022 [15]; Pistono et al., 2016 [17]; Pistono et al., 2019 [16] |
| | | Patients with psychiatric disorders: Cohen et al., 2014 [19]; Çokal et al., 2019 [25]; Rapcan et al., 2010 [26] |
| Silent pauses’ duration | | Patients with post-stroke aphasia: Angelopoulou et al., 2018 [22]; Angelopoulou et al., 2024 [7]; DeDe and Salis, 2020 [8]; Salis and DeDe, 2022 [27] |
| | | Patients with primary progressive aphasia: Baqué and Machuca, 2023 [23]; Cordella et al., 2017 [12]; Nevler et al., 2019 [13]; Potagas et al., 2022 [14] |
| | | Patients with MCI/AD: Lofgren and Hinzen, 2022 [15]; Pastoriza-Dominguez et al., 2022 [28]; Pistono et al., 2016 [17]; Pistono et al., 2019 [16] |
| | | Patients with psychiatric disorders: Cohen et al., 2014 [19]; Rapcan et al., 2010 [26] |
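The Microstructure Indices table separates speech rate, computed over the whole sample, from articulation rate, computed over phonation time only. The Python sketch below illustrates that difference; it is not the CSAP implementation. It assumes a hypothetical export format in which each annotated interval is a start time and an end time in milliseconds plus a label of `speech`, `silent_pause` or `filled_pause`, and it takes the word or syllable count as already known. `Interval` and `rates_per_minute` are illustrative names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Interval:
    """One annotated stretch of a recording (hypothetical export format)."""
    start_ms: float
    end_ms: float
    label: str  # assumed labels: "speech", "silent_pause", "filled_pause"

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def rates_per_minute(intervals: list[Interval], n_units: int) -> dict[str, float]:
    """Speech rate and articulation rate in units (words or syllables) per minute.

    Assumes the intervals tile the sample without gaps or overlaps, so their
    summed duration equals the total duration of speech.
    """
    total_ms = sum(iv.duration_ms for iv in intervals)
    pause_ms = sum(
        iv.duration_ms
        for iv in intervals
        if iv.label in ("silent_pause", "filled_pause")
    )
    # Phonation time excludes both silent and filled pauses.
    phonation_ms = total_ms - pause_ms
    if phonation_ms <= 0:
        raise ValueError("sample contains no phonation time")
    # (units x 60) / duration gives a per-minute rate only when the duration
    # is in seconds, so convert from milliseconds first.
    return {
        "speech_rate": n_units * 60 / (total_ms / 1000),
        "articulation_rate": n_units * 60 / (phonation_ms / 1000),
    }
```

For example, 42 syllables spread over 12 s of intervals that include 1 s of silent pause and 0.5 s of filled pause give a speech rate of 210 and an articulation rate of 240 syllables per minute. Because phonation time can never exceed the total duration, articulation rate is always at least as high as speech rate for the same sample.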

Speech Variables

| No. | Variable | Description |
|---|---|---|
| 1. | Total duration of speech | counted in milliseconds (msec) |
| 2. | Total duration of phonation | duration of speech minus duration of disfluencies (silent and filled pauses), counted in milliseconds (msec) |
| 3. | Total duration of silent pauses | duration of speech minus durations of phonation and filled pauses, counted in milliseconds (msec) |
| 4. | Mean duration of silent pauses | calculated over all individual silent pauses produced by each participant |
| 5. | Median duration of silent pauses | calculated over all individual silent pauses produced by each participant |
| 6. | Total number of syllables | |
| 7. | Total number of words | |
| 8. | Speech rate | ratio of total number of syllables to total duration of speech [(total number of syllables × 60)/total duration of audio file in seconds] |
| 9. | Articulation rate | ratio of total number of syllables to total duration of phonation [(total number of syllables × 60)/duration of phonation in seconds] |
| 10. | Pause frequency | total number of silent pauses relative to total number of words [(total number of silent pauses × 100)/total number of words] |
| 11. | Pause frequency between sentences | total number of silent pauses between sentences relative to total number of words [(total number of silent pauses between sentences × 100)/total number of words] |
| 12. | Pause frequency within sentences | total number of silent pauses within sentences relative to total number of words [(total number of silent pauses within sentences × 100)/total number of words] |
| 13. | Open class word frequency | total number of open class words relative to total number of words [(total number of open class words × 100)/total number of words] |
| 14. | Pause frequency before open class words | total number of silent pauses before open class words relative to total number of open class words [(total number of silent pauses before open class words × 100)/total number of open class words] |
| 15. | Noun frequency | total number of nouns relative to total number of words [(total number of nouns × 100)/total number of words] |
| 16. | Pause frequency before nouns | total number of silent pauses before nouns relative to total number of nouns [(total number of silent pauses before nouns × 100)/total number of nouns] |
| 17. | Verb frequency | total number of verbs relative to total number of words [(total number of verbs × 100)/total number of words] |
| 18. | Pause frequency before verbs | total number of silent pauses before verbs relative to total number of verbs [(total number of silent pauses before verbs × 100)/total number of verbs] |
| 19. | Number of clause-like units (CLU) | a syntactically and/or prosodically marked segment of speech containing one verb |
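The pause measures in items 4, 5 and 10–18 of the Speech Variables table can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the protocol's own tooling: each word of a hypothetical tokenized transcript carries a coarse part-of-speech tag, the duration of any silent pause immediately preceding it, and a flag marking sentence-initial words. A pause is treated as falling between sentences when it precedes a sentence-initial word and within a sentence otherwise; this operationalization, the open-class set (nouns, verbs, adjectives, adverbs), and the names `Token`, `pause_metrics` and the tag strings are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean, median
from typing import Optional

# Assumed open-class tags; the exact word-class inventory is an assumption.
OPEN_CLASS = {"noun", "verb", "adjective", "adverb"}


@dataclass
class Token:
    word: str
    pos: str                                  # hypothetical coarse tag, e.g. "noun", "det"
    pause_before_ms: Optional[float] = None   # silent pause right before this word, if any
    sentence_initial: bool = False            # word opens a new sentence


def per_100(count: int, base: int) -> float:
    """(count x 100) / base, returning NaN when the base is empty."""
    return count * 100 / base if base else float("nan")


def pause_metrics(tokens: list[Token]) -> dict[str, float]:
    """Pause durations and per-100 pause/word-class frequencies for one sample."""
    paused = [t for t in tokens if t.pause_before_ms is not None]
    durations = [t.pause_before_ms for t in paused]
    n_words = len(tokens)
    open_words = [t for t in tokens if t.pos in OPEN_CLASS]
    nouns = [t for t in tokens if t.pos == "noun"]
    verbs = [t for t in tokens if t.pos == "verb"]

    def n_paused(group: list[Token]) -> int:
        # Number of words in the group that are preceded by a silent pause.
        return sum(1 for t in group if t.pause_before_ms is not None)

    return {
        "mean_pause_ms": mean(durations) if durations else float("nan"),
        "median_pause_ms": median(durations) if durations else float("nan"),
        "pause_frequency": per_100(len(paused), n_words),
        "pause_freq_between_sentences": per_100(
            sum(t.sentence_initial for t in paused), n_words),
        "pause_freq_within_sentences": per_100(
            sum(not t.sentence_initial for t in paused), n_words),
        "open_class_frequency": per_100(len(open_words), n_words),
        "pause_freq_before_open_class": per_100(n_paused(open_words), len(open_words)),
        "noun_frequency": per_100(len(nouns), n_words),
        "pause_freq_before_nouns": per_100(n_paused(nouns), len(nouns)),
        "verb_frequency": per_100(len(verbs), n_words),
        "pause_freq_before_verbs": per_100(n_paused(verbs), len(verbs)),
    }
```

Ratios with an empty denominator (for instance, a sample with no verbs) are returned as NaN rather than 0, so that undefined values are not averaged in as genuine zeros when results are pooled across participants or tasks.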

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Angelopoulou, G.; Kasselimis, D.; Goutsos, D.; Potagas, C. A Methodological Approach to Quantifying Silent Pauses, Speech Rate, and Articulation Rate across Distinct Narrative Tasks: Introducing the Connected Speech Analysis Protocol (CSAP). Brain Sci. 2024, 14, 466. https://doi.org/10.3390/brainsci14050466


Chicago/Turabian style: Angelopoulou, Georgia, Dimitrios Kasselimis, Dionysios Goutsos, and Constantin Potagas. 2024. "A Methodological Approach to Quantifying Silent Pauses, Speech Rate, and Articulation Rate across Distinct Narrative Tasks: Introducing the Connected Speech Analysis Protocol (CSAP)" Brain Sciences 14, no. 5: 466. https://doi.org/10.3390/brainsci14050466
