EEG-Based Seizure Prediction Using Hybrid DenseNet–ViT Network with Attention Fusion

Abstract

1. Introduction

- Multi-model architecture with DenseNet and ViT: A hybrid model is proposed that combines the strengths of the DenseNet and ViT architectures. The DenseNet component captures local features, while the ViT component models global patterns. Learning both kinds of structure from the input improves seizure prediction performance;

- Attention-based feature fusion layer: A novel attention-based feature fusion layer dynamically weights the features extracted by DenseNet and ViT according to their relevance to the prediction task, yielding a more robust and effective representation for seizure prediction;

- Pre-trained model transfer and optimized training strategy: Pre-trained DenseNet and ViT models are transferred to patient-specific models, so that training starts from their learned representations, which can improve initial performance. The optimized training strategy combines hyperparameter optimization with Optuna and early stopping; together, these techniques improve the performance of the network.
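The fusion idea in the second contribution can be illustrated with a minimal NumPy sketch. It is not the authors' layer. It assumes that both branch outputs have already been projected to a shared dimension `d`, scores each branch with a hypothetical learnable vector `w_score` passed through `tanh`, and normalizes the two scores with a softmax so that the per-sample branch weights are positive and sum to 1.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def attention_fusion(f_dense, f_vit, w_score, b_score=0.0):
    """Fuse DenseNet and ViT features with per-sample attention weights.

    Illustrative assumptions (not taken from the paper):
      f_dense, f_vit: arrays of shape (batch, d), already projected to a common d
      w_score: hypothetical learnable scoring vector of shape (d,)
      b_score: hypothetical scalar bias
    Returns the fused features (batch, d) and the branch weights (batch, 2).
    """
    stacked = np.stack([f_dense, f_vit], axis=1)      # (batch, 2, d)
    scores = np.tanh(stacked) @ w_score + b_score     # (batch, 2): one relevance score per branch
    alpha = softmax(scores, axis=1)                   # positive weights summing to 1 per sample
    fused = (alpha[..., None] * stacked).sum(axis=1)  # weighted sum of the two feature vectors
    return fused, alpha


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    f_dense = rng.normal(size=(4, 8))  # toy stand-in for DenseNet features
    f_vit = rng.normal(size=(4, 8))    # toy stand-in for ViT features
    w_score = rng.normal(size=8)
    fused, alpha = attention_fusion(f_dense, f_vit, w_score)
    print(fused.shape, alpha.shape)    # (4, 8) (4, 2)
```

In a trained network, `w_score` (or a small MLP in its place) would be learned jointly with both branches. Because the weights are computed per sample, such a layer can rely more on local DenseNet features for some EEG segments and more on global ViT context for others.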

2. Materials and Methodology

2.1. EEG Dataset

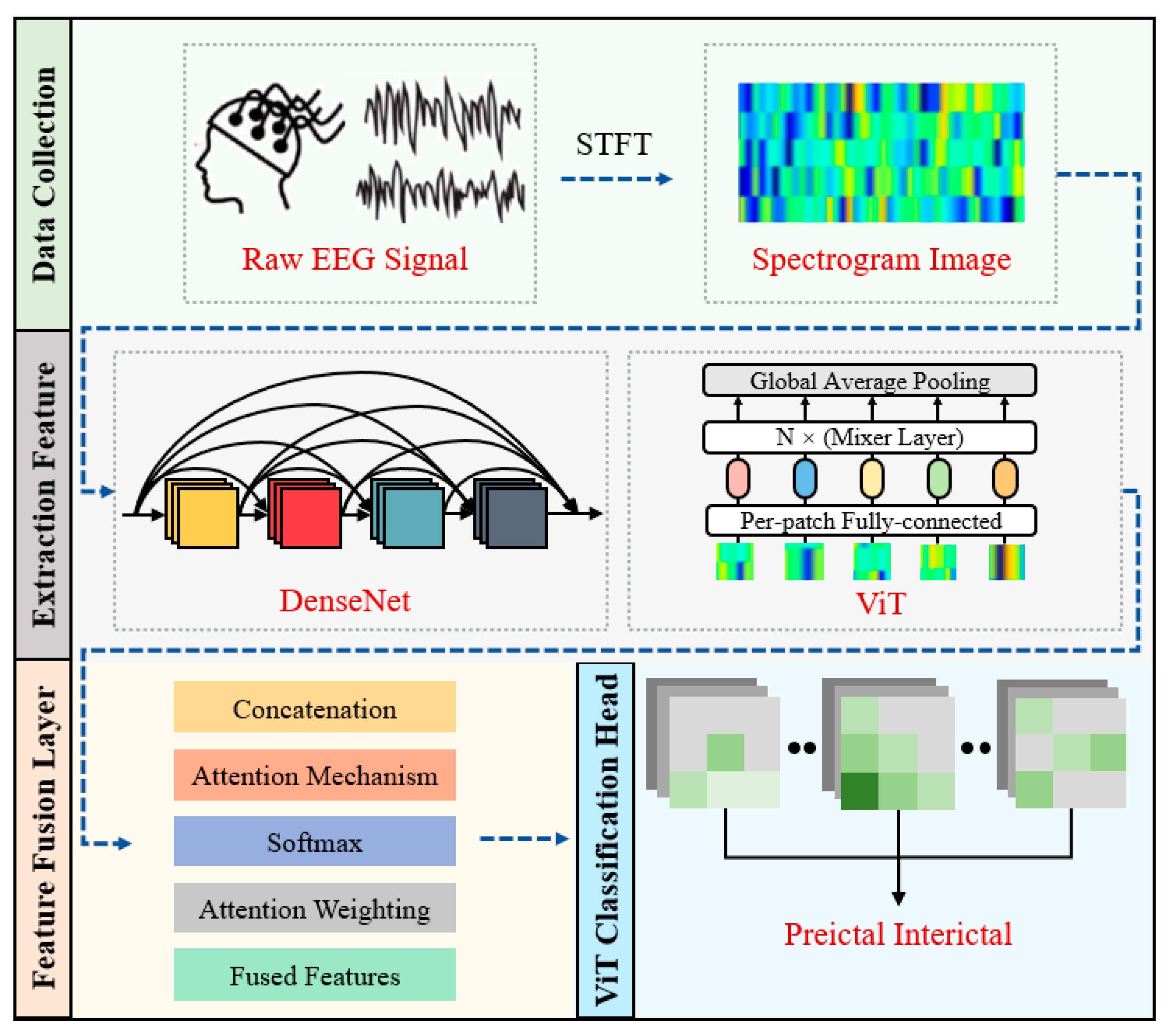

2.2. Algorithm Framework

2.3. Preprocessing

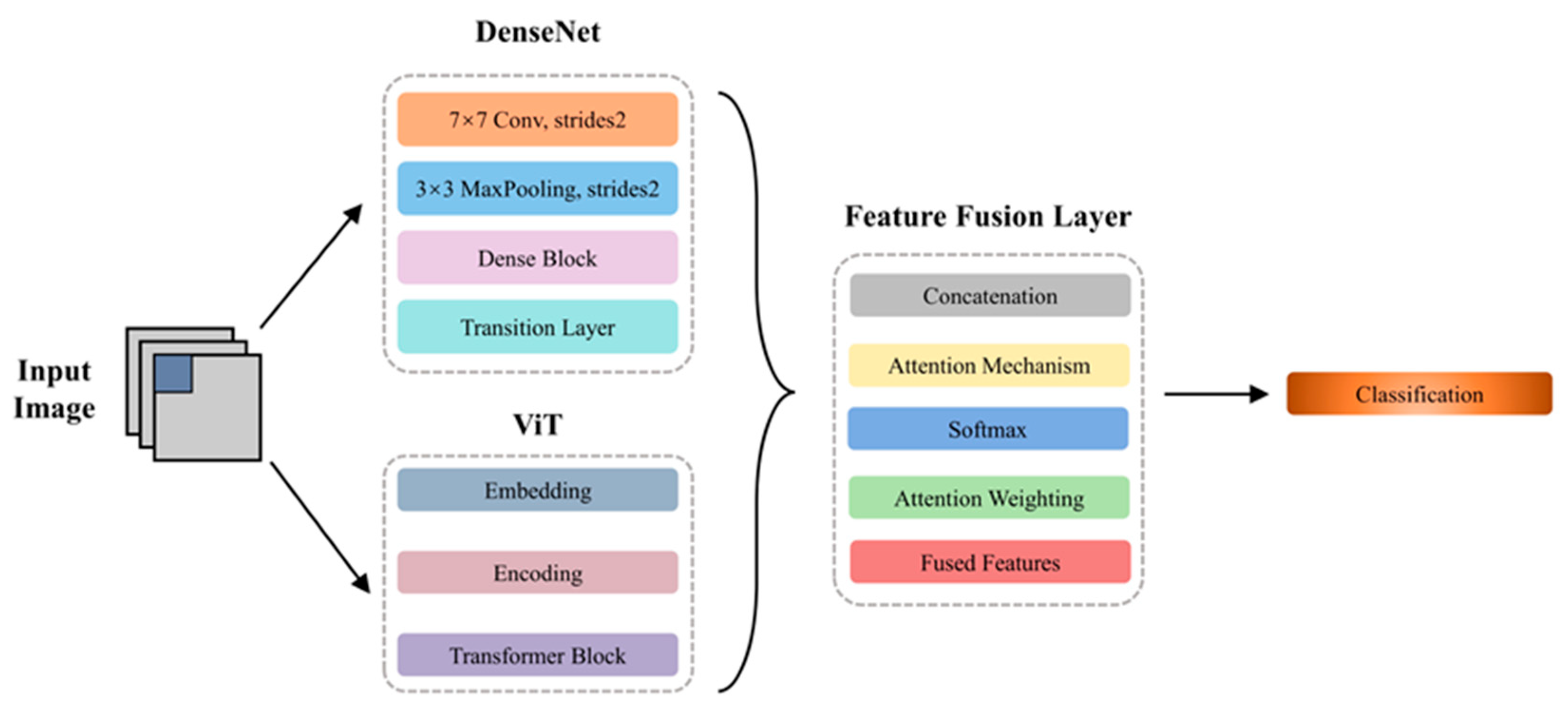

2.4. Hybrid Model

2.4.1. DenseNet

2.4.2. Vision Transformer

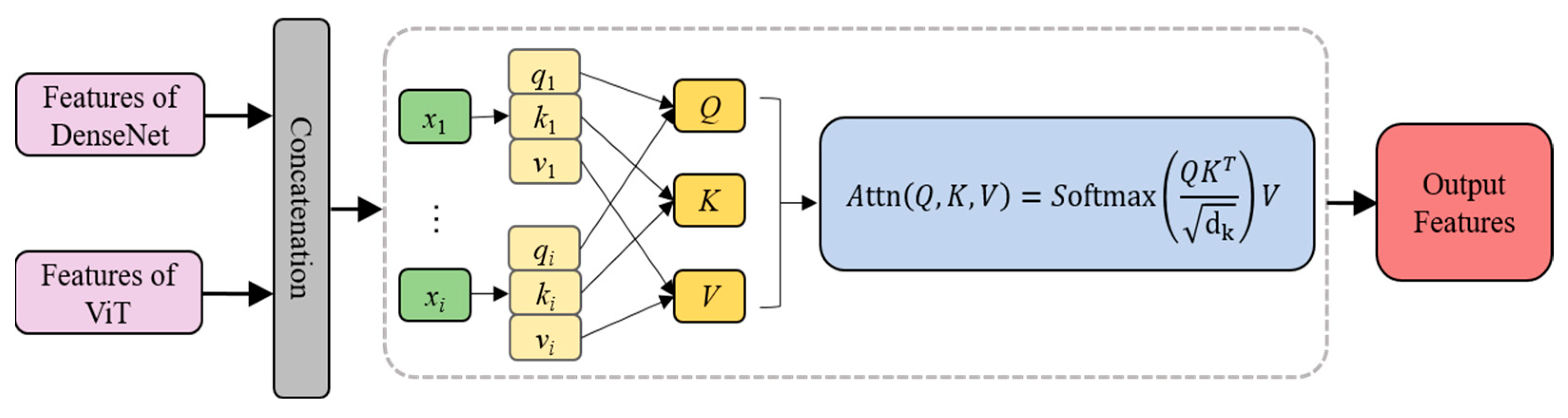

2.4.3. Feature Fusion Layer

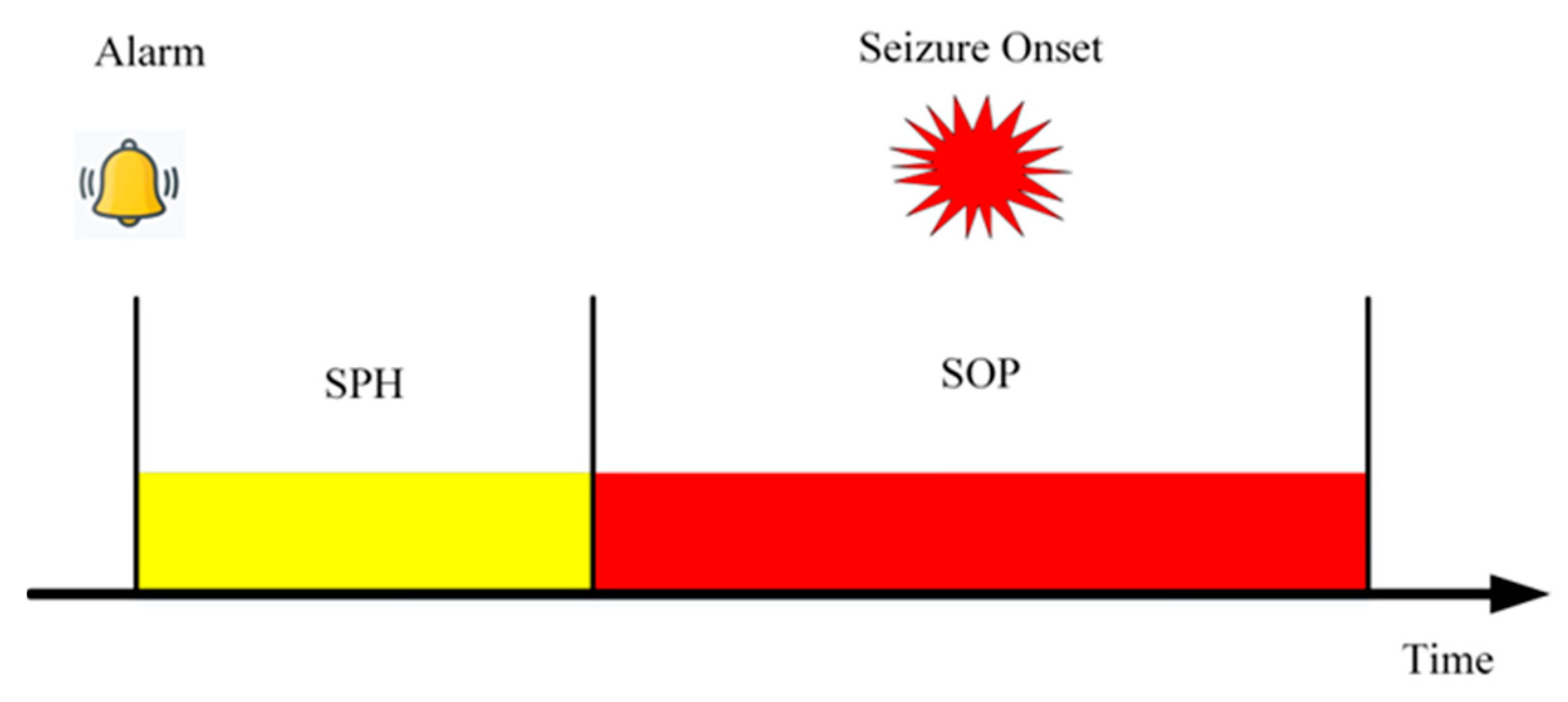

2.5. Postprocessing

3. Experimental Section

3.1. Experimental Details

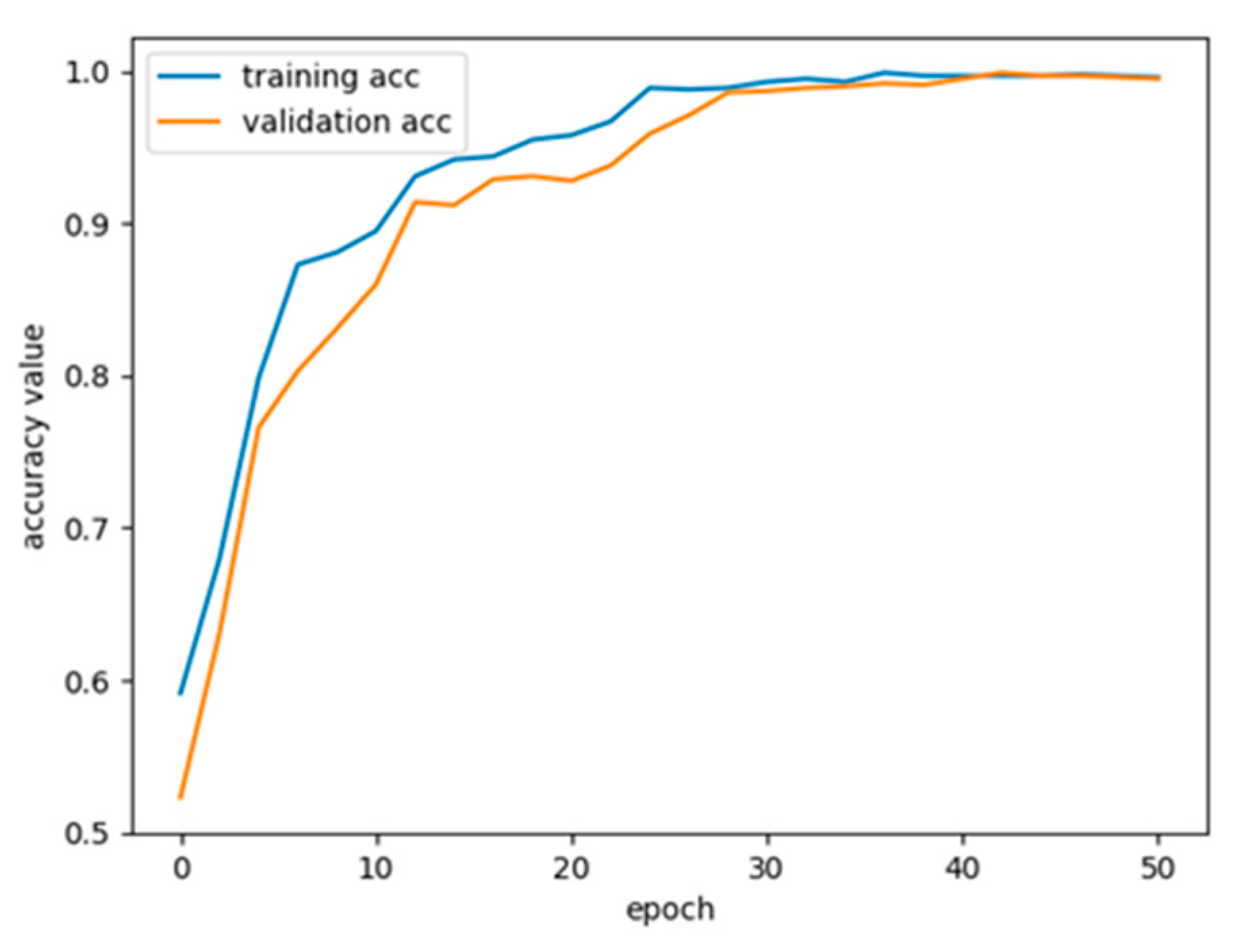

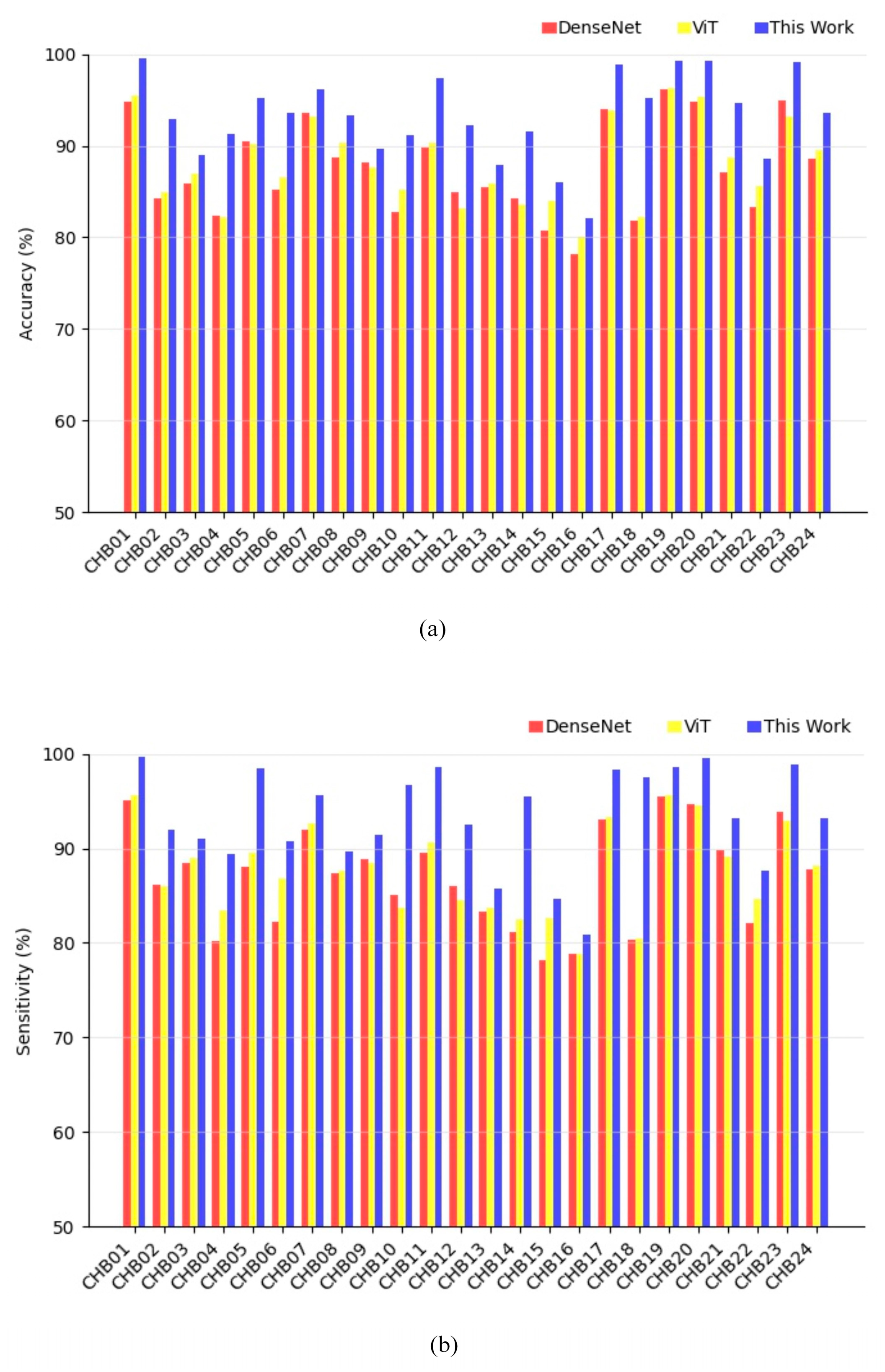

3.2. Evaluation Approach and Results

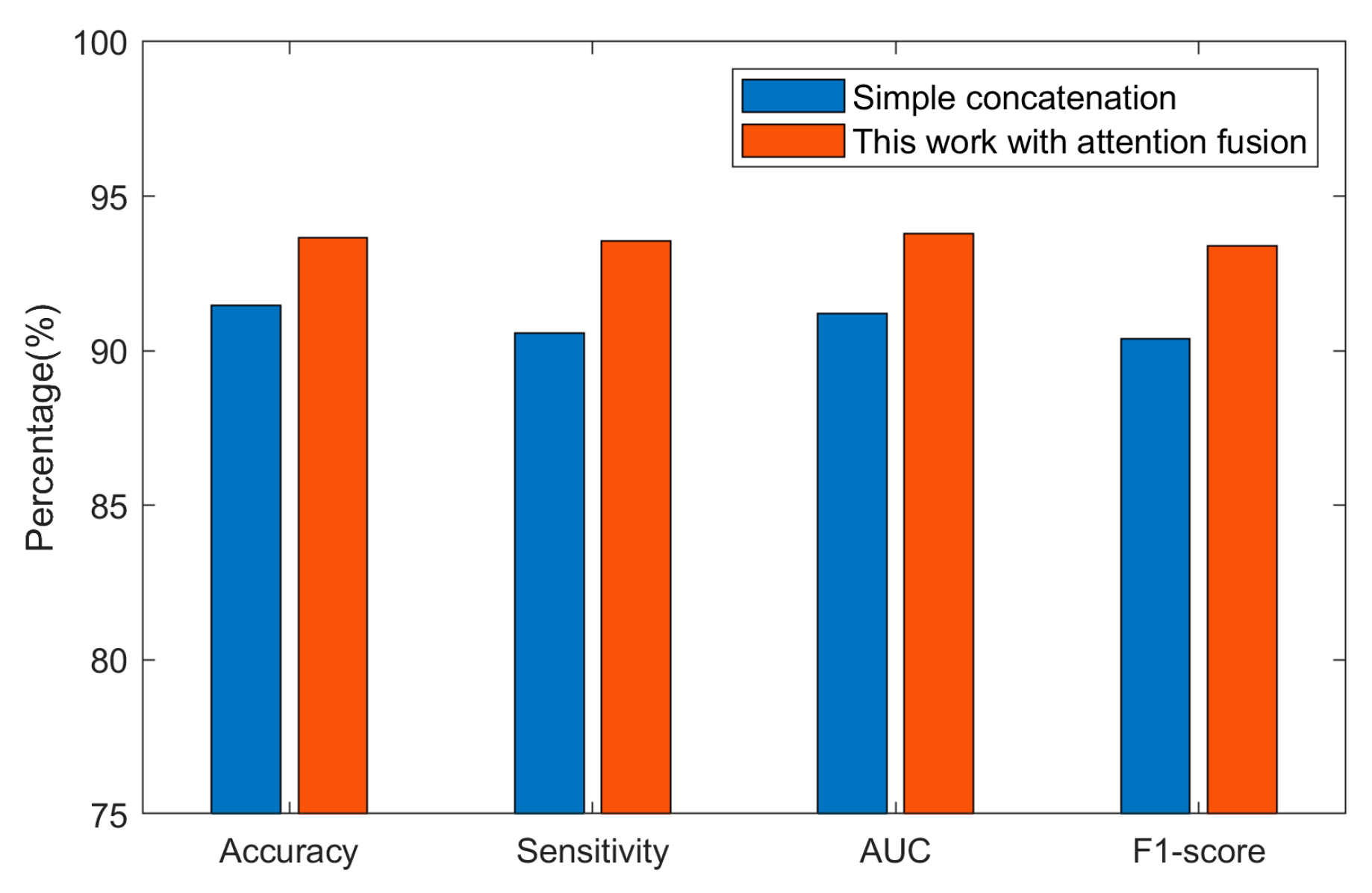

3.3. Ablation Experiment

- (1) Standalone DenseNet model: A standalone DenseNet model was trained and evaluated on the preprocessed EEG dataset. This experiment enabled us to assess DenseNet’s effectiveness in extracting spatial features and its contribution to the hybrid model.

- (2) Standalone ViT model: Similarly, a standalone ViT model was trained and evaluated on the same dataset. This experiment assessed how effectively ViT captures long-range dependencies and global contextual information.

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Acharya, U.R.; Sree, S.V.; Swapna, G.; Martis, R.J.; Suri, J.S. Automated EEG Analysis of Epilepsy: A Review. Knowl.-Based Syst. 2013, 45, 147–165.

- Ehrens, D.; Cervenka, M.C.; Bergey, G.K.; Jouny, C.C. Dynamic training of a novelty classifier algorithm for real-time detection of early seizure onset. Clin. Neurophysiol. 2022, 135, 85–95.

- Savadkoohi, M.; Oladunni, T.; Thompson, L. A machine learning approach to epileptic seizure prediction using Electroencephalogram (EEG) Signal. Biocybern. Biomed. Eng. 2020, 40, 1328–1341.

- Dong, X.; He, L.; Li, H.; Liu, Z.; Shang, W.; Zhou, W. Deep learning based automatic seizure prediction with EEG time-frequency representation. Biomed. Signal Process. Control. 2024, 95, 106447.

- Shiao, H.-T.; Cherkassky, V.; Lee, J.; Veber, B.; Patterson, E.E.; Brinkmann, B.H.; Worrell, G.A. SVM-based system for prediction of epileptic seizures from iEEG signal. IEEE Trans. Biomed. Eng. 2016, 64, 1011–1022.

- Siddiqui, M.K.; Morales-Menendez, R.; Huang, X.; Hussain, N. A Review of Epileptic Seizure Detection Using Machine Learning Classifiers. Brain Inform. 2020, 7, 5.

- Ghaderyan, P.; Abbasi, A.; Sedaaghi, M.H. An Efficient Seizure Prediction Method Using KNN-Based Undersampling and Linear Frequency Measures. J. Neurosci. Methods 2014, 232, 134–142.

- Behnam, M.; Pourghassem, H. Real-time seizure prediction using RLS filtering and interpolated histogram feature based on hybrid optimization algorithm of Bayesian classifier and Hunting search. Comput. Methods Programs Biomed. 2016, 132, 115–136.

- Williamson, J.R.; Bliss, D.W.; Browne, D.W.; Narayanan, J.T. Seizure Prediction Using EEG Spatiotemporal Correlation Structure. Epilepsy Behav. 2012, 25, 230–238.

- Zhang, Z.; Parhi, K.K. Low-Complexity Seizure Prediction from iEEG/sEEG Using Spectral Power and Ratios of Spectral Power. IEEE Trans. Biomed. Circuits Syst. 2015, 10, 693–706.

- Elgohary, S.; Eldawlatly, S.; Khalil, M.I. Epileptic Seizure Prediction Using Zero-Crossings Analysis of EEG Wavelet Detail Coefficients. In Proceedings of the 2016 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), Chiang Mai, Thailand, 5–7 October 2016; pp. 1–6.

- Kulaklı, G.; Akinci, T.C. PSD and Wavelet Analysis of Signals from a Healthy and Epileptic Patient. J. Cogn. Syst. 2018, 3, 12–14.

- Zhang, Z.; Chen, Z.; Zhou, Y.; Du, S.; Zhang, Y.; Mei, T.; Tian, X. Construction of Rules for Seizure Prediction Based on Approximate Entropy. Clin. Neurophysiol. 2014, 125, 1959–1966.

- Jana, R.; Mukherjee, I. Deep Learning Based Efficient Epileptic Seizure Prediction with EEG Channel Optimization. Biomed. Signal Process. Control. 2021, 68, 102767.

- Lekshmy, H.; Panickar, D.; Harikumar, S. Comparative Analysis of Multiple Machine Learning Algorithms for Epileptic Seizure Prediction. J. Phys. Conf. Ser. 2022, 2161, 012055.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

- Truong, N.D.; Nguyen, A.D.; Kuhlmann, L.; Bonyadi, M.R.; Yang, J.; Ippolito, S.; Kavehei, O. Convolutional Neural Networks for Seizure Prediction Using Intracranial and Scalp Electroencephalogram. Neural Netw. 2018, 105, 104–111.

- Tsiouris, K.M.; Pezoulas, V.C.; Zervakis, M.; Konitsiotis, S.; Koutsouris, D.D.; Fotiadis, D.I. A Long Short-Term Memory Deep Learning Network for the Prediction of Epileptic Seizures Using EEG Signals. Comput. Biol. Med. 2018, 99, 24–37.

- Janiesch, C.; Zschech, P.; Heinrich, K. Machine Learning and Deep Learning. Electron. Mark. 2021, 31, 685–695.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Jana, R.; Bhattacharyya, S.; Das, S. Epileptic seizure prediction from EEG signals using DenseNet. In Proceedings of the 2019 IEEE Symposium Series on Computational Intelligence (SSCI), Xiamen, China, 6–9 December 2019; pp. 604–609.

- Jibon, F.A.; Miraz, M.H.; Khandaker, M.U.; Rashdan, M.; Salman, M.; Tasbir, A.; Nishar, N.H.; Siddiqui, F.H. Epileptic seizure detection from electroencephalogram (EEG) signals using linear graph convolutional network and DenseNet based hybrid framework. J. Radiat. Res. Appl. Sci. 2023, 16, 100607.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Bhattacharya, A.; Baweja, T.; Karri, S. Epileptic Seizure Prediction Using Deep Transformer Model. Int. J. Neural Syst. 2022, 32, 2150058.

- Zhang, X.; Li, H. Patient-Specific Seizure Prediction from Scalp EEG Using Vision Transformer. In Proceedings of the 2022 IEEE 6th Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 4–6 March 2022; Volume 6, pp. 1663–1667.

- Hussein, R.; Lee, S.; Ward, R. Multi-channel vision transformer for epileptic seizure prediction. Biomedicines 2022, 10, 1551.

- Deng, Z.; Li, C.; Song, R.; Liu, X.; Qian, R.; Chen, X. EEG-based seizure prediction via hybrid vision transformer and data uncertainty learning. Eng. Appl. Artif. Intell. 2023, 123, 106401.

- Bengio, Y. Deep Learning of Representations for Unsupervised and Transfer Learning. In Proceedings of the ICML Workshop on Unsupervised and Transfer Learning, JMLR Workshop and Conference Proceedings, Washington, DC, USA, 2 July 2011; pp. 17–36.

- Colón-Ruiz, C.; Segura-Bedmar, I. Comparing Deep Learning Architectures for Sentiment Analysis on Drug Reviews. J. Biomed. Inform. 2020, 110, 103539.

- Wang, Z.; Wang, Y.; Hu, C.; Yin, Z.; Song, Y. Transformers for EEG-Based Emotion Recognition: A Hierarchical Spatial Information Learning Model. IEEE Sens. J. 2022, 22, 4359–4368.

- Shoeb, A.H. Application of Machine Learning to Epileptic Seizure Onset Detection and Treatment; Massachusetts Institute of Technology: Cambridge, MA, USA, 2009.

- Ryu, S.; Joe, I. A Hybrid DenseNet-LSTM Model for Epileptic Seizure Prediction. Appl. Sci. 2021, 11, 7661.

- Pusarla, N.; Singh, A.; Tripathi, S. Learning DenseNet Features from EEG Based Spectrograms for Subject Independent Emotion Recognition. Biomed. Signal Process. Control. 2022, 74, 103485.

- Ullah, H.; Bin Heyat, B.; Akhtar, F.; Sumbul; Muaad, A.Y.; Islam, S.; Abbas, Z.; Pan, T.; Gao, M.; Lin, Y.; et al. An End-to-End Cardiac Arrhythmia Recognition Method with an Effective DenseNet Model on Imbalanced Datasets Using ECG Signal. Comput. Intell. Neurosci. 2022, 2022, 9475162.

- Zuo, S.; Xiao, Y.; Chang, X.; Wang, X. Vision Transformers for Dense Prediction: A Survey. Knowl.-Based Syst. 2022, 253, 109552.

- Maiwald, T.; Winterhalder, M.; Aschenbrenner-Scheibe, R.; Voss, H.U.; Schulze-Bonhage, A.; Timmer, J. Comparison of Three Nonlinear Seizure Prediction Methods by Means of the Seizure Prediction Characteristic. Phys. D Nonlinear Phenom. 2004, 194, 357–368.

- Kohavi, R. A Study of Cross-Validation and Bootstrap for Accuracy Estimation and Model Selection. In Proceedings of the Fourteenth International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 20–25 August 1995; Volume 14, pp. 1137–1145.

- Khan, H.; Marcuse, L.; Fields, M.; Swann, K.; Yener, B. Focal Onset Seizure Prediction Using Convolutional Networks. IEEE Trans. Biomed. Eng. 2017, 65, 2109–2118.

- Zhang, Y.; Guo, Y.; Yang, P.; Chen, W.; Lo, B. Epilepsy Seizure Prediction on EEG Using Common Spatial Pattern and Convolutional Neural Network. IEEE J. Biomed. Health Inform. 2019, 24, 465–474.

- Ozcan, A.R.; Erturk, S. Seizure Prediction in Scalp EEG Using 3D Convolutional Neural Networks with an Image-Based Approach. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 2284–2293.

- Godoy, R.V.; Reis, T.J.; Polegato, P.H.; Lahr, G.J.; Saute, R.L.; Nakano, F.N.; Machado, H.R.; Sakamoto, A.C.; Becker, M.; Caurin, G.A. EEG-Based Epileptic Seizure Prediction Using Temporal Multi-Channel Transformers. arXiv 2022, arXiv:2209.11172.

| Case | Gender | Age (years) | Record Time (h) | Duration of Seizures (s) | Number of Seizures (No Merge) | Number of Seizures (Merge) |
|---|---|---|---|---|---|---|
| CHB01 | Female | 11 | 40.6 | 442 | 7 | 7 |
| CHB02 | Male | 11 | 35.3 | 172 | 3 | 3 |
| CHB03 | Female | 14 | 38.0 | 402 | 7 | 7 |
| CHB04 | Male | 22 | 155.9 | 382 | 4 | 4 |
| CHB05 | Female | 7 | 39.0 | 558 | 5 | 5 |
| CHB06 | Female | 1.5 | 66.7 | 147 | 10 | 10 |
| CHB07 | Female | 14.5 | 67.0 | 328 | 3 | 3 |
| CHB08 | Male | 3.5 | 20.0 | 919 | 5 | 5 |
| CHB09 | Female | 10 | 67.8 | 276 | 4 | 4 |
| CHB10 | Male | 3 | 50.0 | 454 | 7 | 7 |
| CHB11 | Female | 12 | 34.8 | 806 | 3 | 2 |
| CHB12 | Female | 2 | 20.6 | 1487 | 40 | 21 |
| CHB13 | Female | 3 | 33.0 | 547 | 12 | 10 |
| CHB14 | Female | 9 | 26.0 | 169 | 8 | 8 |
| CHB15 | Male | 16 | 40.0 | 1992 | 20 | 17 |
| CHB16 | Female | 7 | 19.0 | 84 | 10 | 9 |
| CHB17 | Female | 12 | 21.0 | 293 | 3 | 3 |
| CHB18 | Female | 18 | 35.6 | 317 | 6 | 6 |
| CHB19 | Female | 19 | 30.0 | 236 | 3 | 3 |
| CHB20 | Female | 6 | 27.5 | 294 | 8 | 8 |
| CHB21 | Female | 13 | 33.0 | 199 | 4 | 4 |
| CHB22 | Female | 9 | 31.0 | 204 | 3 | 3 |
| CHB23 | Female | 6 | 26.5 | 424 | 7 | 7 |
| CHB24 | - | - | 21.3 | 511 | 16 | 14 |
| Total | - | - | 979.6 | 11,646 | 198 | 170 |

| Case | Accuracy (%) | Sensitivity (%) | FPR (/h) | AUC | F1-Score |
|---|---|---|---|---|---|
| CHB01 | 99.51 | 99.67 | 0.002 | 0.99 | 0.99 |
| CHB02 | 92.88 | 91.96 | 0.119 | 0.92 | 0.91 |
| CHB03 | 90.13 | 90.98 | 0.151 | 0.92 | 0.92 |
| CHB04 | 91.27 | 89.42 | 0.132 | 0.93 | 0.92 |
| CHB05 | 95.20 | 95.51 | 0.073 | 0.96 | 0.95 |
| CHB06 | 93.62 | 90.77 | 0.041 | 0.95 | 0.92 |
| CHB07 | 96.17 | 95.66 | 0.031 | 0.96 | 0.94 |
| CHB08 | 93.32 | 89.61 | 0.062 | 0.93 | 0.92 |
| CHB09 | 90.67 | 91.39 | 0.139 | 0.91 | 0.91 |
| CHB10 | 91.18 | 93.65 | 0.143 | 0.90 | 0.92 |
| CHB11 | 97.31 | 98.61 | 0.011 | 0.96 | 0.95 |
| CHB12 | 92.17 | 92.56 | 0.130 | 0.91 | 0.93 |
| CHB13 | 87.88 | 85.71 | 0.147 | 0.90 | 0.89 |
| CHB14 | 91.62 | 95.50 | 0.138 | 0.93 | 0.95 |
| CHB15 | 90.97 | 89.64 | 0.152 | 0.92 | 0.90 |
| CHB16 | 85.11 | 87.81 | 0.201 | 0.89 | 0.88 |
| CHB17 | 98.90 | 98.31 | 0.006 | 0.97 | 0.98 |
| CHB18 | 95.16 | 97.52 | 0.017 | 0.95 | 0.97 |
| CHB19 | 99.28 | 98.66 | 0.003 | 0.99 | 0.99 |
| CHB20 | 99.33 | 99.56 | 0.003 | 0.99 | 0.99 |
| CHB21 | 94.66 | 93.18 | 0.108 | 0.94 | 0.93 |
| CHB22 | 88.56 | 87.69 | 0.120 | 0.89 | 0.88 |
| CHB23 | 99.13 | 98.92 | 0.012 | 0.99 | 0.97 |
| CHB24 | 93.58 | 93.16 | 0.047 | 0.92 | 0.92 |
| Average | 93.65 | 93.56 | 0.084 | 0.938 | 0.934 |
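The Average row appears to be the unweighted (macro) mean over the 24 cases. Recomputing it from the per-case values reproduces the reported accuracy, sensitivity and AUC exactly. The short standard-library sketch below performs this check. For FPR, the mean of the rounded per-case values comes to about 0.083/h, which is the figure quoted for this work in the comparison table. The Average row lists 0.084/h, and the gap may simply come from rounding of the per-case entries.

```python
from statistics import mean

# Per-case results transcribed from the table above:
# (case, accuracy %, sensitivity %, FPR per hour, AUC)
RESULTS = [
    ("CHB01", 99.51, 99.67, 0.002, 0.99),
    ("CHB02", 92.88, 91.96, 0.119, 0.92),
    ("CHB03", 90.13, 90.98, 0.151, 0.92),
    ("CHB04", 91.27, 89.42, 0.132, 0.93),
    ("CHB05", 95.20, 95.51, 0.073, 0.96),
    ("CHB06", 93.62, 90.77, 0.041, 0.95),
    ("CHB07", 96.17, 95.66, 0.031, 0.96),
    ("CHB08", 93.32, 89.61, 0.062, 0.93),
    ("CHB09", 90.67, 91.39, 0.139, 0.91),
    ("CHB10", 91.18, 93.65, 0.143, 0.90),
    ("CHB11", 97.31, 98.61, 0.011, 0.96),
    ("CHB12", 92.17, 92.56, 0.130, 0.91),
    ("CHB13", 87.88, 85.71, 0.147, 0.90),
    ("CHB14", 91.62, 95.50, 0.138, 0.93),
    ("CHB15", 90.97, 89.64, 0.152, 0.92),
    ("CHB16", 85.11, 87.81, 0.201, 0.89),
    ("CHB17", 98.90, 98.31, 0.006, 0.97),
    ("CHB18", 95.16, 97.52, 0.017, 0.95),
    ("CHB19", 99.28, 98.66, 0.003, 0.99),
    ("CHB20", 99.33, 99.56, 0.003, 0.99),
    ("CHB21", 94.66, 93.18, 0.108, 0.94),
    ("CHB22", 88.56, 87.69, 0.120, 0.89),
    ("CHB23", 99.13, 98.92, 0.012, 0.99),
    ("CHB24", 93.58, 93.16, 0.047, 0.92),
]


def macro_average(rows):
    """Unweighted mean over patients: every case counts equally, regardless of recording length."""
    return {
        "accuracy": round(mean(r[1] for r in rows), 2),
        "sensitivity": round(mean(r[2] for r in rows), 2),
        "fpr_per_h": round(mean(r[3] for r in rows), 3),
        "auc": round(mean(r[4] for r in rows), 3),
    }


print(macro_average(RESULTS))
# {'accuracy': 93.65, 'sensitivity': 93.56, 'fpr_per_h': 0.083, 'auc': 0.938}
```

A macro average gives a short recording such as CHB16 (19.0 h) the same weight as CHB04 (155.9 h). A pooled FPR, defined as total false alarms divided by total interictal hours, would need the raw alarm counts, which are not listed here.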

| Authors | Year | Number of Patients | Method | Acc (%) | Sen (%) | FPR (/h) | AUC | F1-Score |
|---|---|---|---|---|---|---|---|---|
| Khan et al. | 2017 | 13 | CWT + CNN | - | 87.8 | 0.147 | - | - |
| Truong et al. | 2018 | 13 | STFT + CNN | - | 81.2 | 0.16 | - | - |
| Tsiouris et al. | 2018 | 24 | LSTM | - | 99.2 | 0.11 | - | - |
| Zhang et al. | 2019 | 23 | wavelet packet + CNN | 90.0 | 92.0 | 0.12 | 0.90 | 0.91 |
| Ozcan et al. | 2019 | 16 | 3D CNN | - | 85.71 | 0.096 | - | - |
| Bhattacharya et al. | 2021 | 21 | STFT + Transformer | - | 98.46 | 0.124 | - | - |
| Zhang et al. | 2022 | 14 | STFT + ViT | 81.19 | 75.58 | - | 0.85 | - |
| Godoy et al. | 2022 | 22 | TMC-ViT | 82.0 | 80.0 | - | 0.89 | - |
| Deng et al. | 2023 | 18 | HViT-DUL | - | 87.9 | 0.056 | 0.937 | - |
| This work | 2024 | 24 | STFT + DenseNet–ViT | 93.65 | 93.56 | 0.083 | 0.938 | 0.934 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yuan, S.; Yan, K.; Wang, S.; Liu, J.-X.; Wang, J. EEG-Based Seizure Prediction Using Hybrid DenseNet–ViT Network with Attention Fusion. Brain Sci. 2024, 14, 839. https://doi.org/10.3390/brainsci14080839
